Abstract

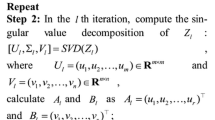

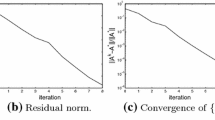

In the past decade, robust principal component analysis (RPCA) and low-rank matrix completion (LRMC) have been successfully applied in image denoising, video processing, web search, bioinformatics, and other areas. These are two important optimization problems that aim to recover a low-rank matrix either from observations with sparse, large-magnitude corruptions or from a subset of its entries. This paper proposes an efficient and effective algorithm, the alternating direction and step size minimization (ADSM) algorithm. ADSM applies the idea of alternating direction minimization to a general relaxed model that can also describe small noise (e.g., Gaussian noise). The coupling of sparse noise and small noise makes low-rank matrix recovery more challenging than in standard RPCA. Within the alternating direction minimization framework, we use Taylor expansion, singular value decomposition, and the shrinkage operator to derive the iterative direction matrices. A continuation technique is incorporated into ADSM to accelerate convergence. The Taylor expansion and step size minimization (TESM) algorithm for LRMC is designed in the same way. The only difference is that the alternating direction step is dropped, because the LRMC model contains no sparse matrix. Theoretically, both algorithms are proved to converge globally to their respective optimal points under certain conditions. Numerical results show that ADSM and TESM are efficient and effective for large-scale low-rank matrix recovery problems in many cases.
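The abstract gives no formulas, so the sketch below assumes the common relaxed models. For RPCA it uses min ||L||_* + lam*||S||_1 + ||M - L - S||_F^2/(2*mu). For LRMC it uses min ||X||_* + ||P_Omega(X - M)||_F^2/(2*mu), where `mask` marks the observed entries.

The sketch illustrates the building blocks the abstract names: SVD with shrinkage (singular value thresholding), entrywise shrinkage, a first-order Taylor linearization of the smooth term, and continuation on `mu`. It is not the authors' ADSM or TESM. It uses exact block minimization and a fixed step size instead of the step-size minimization both algorithms rely on. All function names, parameters (`lam`, `mu_min`, `eta`, `inner_iters`), and defaults are hypothetical. The default `lam = 1/sqrt(max(m, n))` is the usual principal component pursuit choice.

```python
import numpy as np

# Hypothetical helper names and defaults; a generic sketch, not the authors' ADSM/TESM.


def soft_threshold(X, tau):
    """Entrywise shrinkage operator: sign(x) * max(|x| - tau, 0)."""
    return np.sign(X) * np.maximum(np.abs(X) - tau, 0.0)


def svt(X, tau):
    """Singular value thresholding: shrink the singular values of X by tau."""
    U, s, Vt = np.linalg.svd(X, full_matrices=False)
    return (U * np.maximum(s - tau, 0.0)) @ Vt


def rpca_objective(M, L, S, lam, mu):
    """Assumed relaxed RPCA objective: ||L||_* + lam*||S||_1 + ||M - L - S||_F^2 / (2*mu)."""
    nuclear = np.linalg.svd(L, compute_uv=False).sum()
    fit = np.linalg.norm(M - L - S, "fro") ** 2 / (2.0 * mu)
    return nuclear + lam * np.abs(S).sum() + fit


def relaxed_rpca(M, lam=None, mu_min=1e-6, eta=0.5, inner_iters=20):
    """Alternating minimization over (L, S) with continuation on mu."""
    m, n = M.shape
    lam = 1.0 / np.sqrt(max(m, n)) if lam is None else lam
    mu = max(0.99 * np.linalg.norm(M, 2), mu_min)  # start with a large mu
    L, S = np.zeros_like(M), np.zeros_like(M)
    while True:
        for _ in range(inner_iters):
            L = svt(M - S, mu)                   # exact minimization over L (SVD + shrinkage)
            S = soft_threshold(M - L, lam * mu)  # exact minimization over S (entrywise shrinkage)
        if mu <= mu_min:
            return L, S
        mu = max(eta * mu, mu_min)               # continuation: tighten the data-fit term


def lrmc_objective(X, M, mask, mu):
    """Assumed relaxed LRMC objective: ||X||_* + ||P_Omega(X - M)||_F^2 / (2*mu)."""
    nuclear = np.linalg.svd(X, compute_uv=False).sum()
    return nuclear + np.linalg.norm(mask * (X - M), "fro") ** 2 / (2.0 * mu)


def relaxed_lrmc(M, mask, mu_min=1e-6, eta=0.5, inner_iters=50):
    """Linearized (first-order Taylor) proximal-gradient steps with continuation."""
    mu = max(0.99 * np.linalg.norm(mask * M, 2), mu_min)
    X = np.zeros_like(M)
    while True:
        for _ in range(inner_iters):
            # The smooth term has gradient mask*(X - M)/mu and Lipschitz constant 1/mu,
            # so minimizing its Taylor model plus a proximal term with step mu gives:
            X = svt(X - mask * (X - M), mu)
        if mu <= mu_min:
            return X
        mu = max(eta * mu, mu_min)
```

For example, `L, S = relaxed_rpca(M)` returns low-rank and sparse estimates of `M`. For completion, `X = relaxed_lrmc(M, mask)` takes a boolean `mask` of observed entries. Starting with a large `mu` keeps early iterates heavily shrunk and cheap. Decreasing it geometrically then tightens the data fit, which is the general role a continuation technique plays in accelerating convergence.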

Acknowledgements

The authors thank the reviewers for their valuable suggestions.

Funding

This research is supported in part by the Natural Science Foundation of China (Grant NSFC-11301021).

About this article

Cite this article

Zhao, J., Feng, Q. & Zhao, L. Alternating direction and Taylor expansion minimization algorithms for unconstrained nuclear norm optimization. Numer Algor 82, 371–396 (2019). https://doi.org/10.1007/s11075-018-0630-z

DOI: https://doi.org/10.1007/s11075-018-0630-z

Keywords

- Robust principal component analysis

- Alternating direction minimization

- Taylor expansion

- Low-rank matrix completion