Abstract

Image auto-annotation which annotates images according to their semantic contents has become a research focus in computer vision, as it helps people to edit, retrieve and understand large image collections. In the last decades, researchers have proposed many approaches to solve this task and achieved remarkable performance on several standard image datasets. In this paper, we train neural networks using visual and semantic ranking loss to learn visual-semantic embedding. This embedding can be easily applied to nearest-neighbor based models to boost their performance on image auto-annotation. We test our method on four challenging image datasets, reporting comparison results with existing works. Experimental results show that our method can be applied to several state-of-the-art nearest-neighbor based models including TagProp and 2PKNN, and significantly improves their performance.

Similar content being viewed by others

1 Introduction

The semantic contents of media can be understood better, aided by annotations which are also the basis of many text based image processing techniques, including image retrieval based on key words [28], text aided image classification [43] etc. However, annotating images manually requires time and effort, and it is difficult for people to annotate all relevant words for each image. Thus image auto-annotation emerged. Given an input image, the goal of image auto-annotation is to predict a few relevant words to the image which can mainly reflect its semantic contents. Assigning richer, more relevant words to images can help web image search engines improve their fast indexing and retrieval ability.

Image auto-annotation is a multi-label classification task while most existing works focus on single-label classification where each image is classified into only one category [5, 26, 33, 47]. From this view, image auto-annotation is a much more challenging problem and has become a hot research topic. In last decades, a number of techniques [2, 3, 6, 13, 14, 18, 25, 30, 39, 45, 46, 48] have been proposed and evaluated on standard datasets. In early works in this field, image auto-annotation was considered as special machine translation problem, which tried to establish a relationship between images and annotations. Cross Media Relevance Models (CMRM) [18], Continuous Relevance Model (CRM) [25], and Multiple Bernoulli Relevance Model (MBRM) [6] assume different, non-parametric density representations of the joint word-image space. They approximate the probability of observing a set of blobs and annotations in a given image. Recently, non-parametric nearest neighbor like models [14, 27, 30, 41] have illustrated marked success mainly because patterns in the data can be adapted by the high capacity of these models. These models have two bases. The first is how to design visual features to represent images. These features should be able to reflect the semantic content of the images. Thus low-level features including color, texture etc. and mid-level features, such as GIST [35], SIFT [29], which show their power in many computer vision problems, have been tried. The second basis is finding the visual neighbors which are also semantic neighbors, for testing images from training set. Furthermore, these models can be enhanced by using metric learning to find a more reasonable distance metric for the image features [14, 41, 46]. However, designing and selecting hand-crafted features are usually difficult. These models suffer from semantic gap mainly because visual features can not completely abstract the semantic content of the images.

Several recent embedding methods [11, 12] based on Canonical Correlation Analysis (CCA) [15] have been proposed to bridge the semantic gap by finding linear projections to maximize the correlation between visual features and textual features. Kernel CCA [15], as an extension of CCA, has also been tried, in which visual features and textual features are nonlinearly projected into embedding space [1, 40]. However, CCA or KCCA based embedding methods are hard to scale to large datasets because of the high computational cost to compute the eigenvalues. As an alternative to CCA, other methods learn linear transformations of visual and textual features using stochastic gradient descent (SGD) with rank loss. For example, WSABIE [46] trains an embedding model with the WARP loss and it yields low memory usage and fast computation time. However, to date, these SGD trained models are frustratingly difficult to beat CCA based models [22].

Very recently, researchers adopt deep learning algorithms, which have shown its great power in single-label image classification [24] to automatically learning image features extraction and shown significant performance gain in large image datasets [10, 20, 21, 24]. And deep learning has also been adopted to solve image auto-annotation task. Kiros et al. [21] use convolutional neural network (CNN) [24] to build a hierarchical model for learning image representations from the pixel level and then added an autoencoder which has only one hidden layer to learn binary codes for annotations. Gong et al. [10] respectively train convolutional neural networks using three loss functions—Softmax [14], Pairwise Ranking [19], and WARP [46]. The trained CNNs then directly output the tags of testing images. Training a deep CNN needs not only a large labeled image dataset, but also expensive computational time. Literature [40] proposes a method which directly used a pre-trained network to extract visual features and experimental results show the CCA based model gained remarkable benefit from these deep learning features. Furthermore, literature [44] proposes a CNN-RNN framework to learn a joint image-label embedding to exploit the label dependency and image-label relevance. However, this model still can not achieve even comparable results with the models combing CCA or KCCA embedding and nearest-neighbor based label propagation.

In this paper, we design a simple but effective approach to solve image auto-annotation task. Our method learns visual-semantic embedding by training a neural network using our proposed visual and semantic neighbors ranking loss function. We argue that images can find more semantic neighbors in this embedding space. We apply this embedding method to several state-of-the-art nearest-neighbor like models and conduct several experiments on four image datasets, including Corel 5k, IAPR TC12, ESP Game and NUS-WIDE. Being different from CCA or KCCA based embedding methods, our neural network based embedding method easily scales to large amounts of data with stochastic optimization in training. We will also detail how to train such a neural network. Our main contributions are as follows.

-

We proposed a visual and semantic neighbors ranking loss function which can minimize the divergence between visual and semantic neighbors ranking.

-

We trained neural networks to learn visual-semantic embedding. Images can find more neighbors who have similar semantic contents in this visual-semantic space.

-

We applied our visual-semantic embedding method to several nearest-neighbor based models to predict image’s annotations on four benchmark datasets.

The rest of this paper is organized as follows: In Sect. 2, we first briefly introduce our method. Then we present our proposed visual and semantic neighbors ranking loss function and detailed the network architecture and implementation details followed by demonstrating the nearest-neighbor based label propagation models. The experimental setup and results will be given in Sect. 3. And we conclude our work in the end.

2 Model

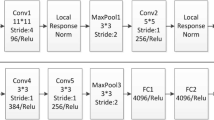

In this section, we detail the proposed image auto-annotation approach. Figure 1 illustrates the framework of our method. The key idea of our method is to learn a visual-semantic embedding network to bridge the semantic gap. This embedding network nonlinearly projects visual features drawn from CNN [24] into a semantic space in which images find more semantic neighbors. Comparing to the CCA based embedding methods, we train a simple but effective neural network with minimizing our proposed visual and semantic neighbors ranking loss, to achieve visual-semantic embedding. The visual and semantic neighbors ranking loss function can minimize the divergence between visual and semantic neighbors ranking. When we train our model, training images are firstly go through a pre-trained CNN to get bare visual features. Their associated annotations are converted into real-valued vectors by word embedding (Word2Vec) [32] and then word2vec embedding of each tag will be pooled using Fisher vector [23, 37] which provides state-of-the-art results on many computer vision tasks [4, 36]. We use the Euclidean distance of fisher vectors to get the semantic neighbors ranking of the training image. The visual-semantic embedding network is trained to make the visual neighbors ranking, which is obtained by measuring the similarities of the output vectors from this embedding network, has minimum divergence respect to the semantic neighbors ranking. At testing stage, the visual feature of the testing image is drawn from CNN and then nonlinearly projected into the semantic space by our visual-semantic embedding network. Then the testing image will find its neighbors in this semantic space. Finally, nearest-neighbor based models such as TagProp [14], 2PKNN [41], et al. are used to annotate the testing image by transfer tags from the neighbors.

2.1 Visual and Semantic Neighbors Ranking

In the task of image auto-annotation, each training image has several annotations to describe the semantic of the image. Thus there are two kinds of image neighbors. The first kind is obtained by comparing images’ annotations (we call them semantic neighbors), and the another is acquired by measuring similarity of images’ visual features (we call them visual neighbors). When we predict the annotations of a testing image, we could only get the testing image’s visual neighbors. Therefore, image’s visual features should reflect the image’s semantic contents as far as possible. Unfortunately visual features especially hand-crafted features are not power enough to completely abstract the semantic of the images. Thus traditional annotation models who find neighbors by visual features suffer from semantic gap. In this paper we extract visual features using pre-trained CNN and use visual-semantic embedding network to project visual features into semantic space with minimizing the visual and semantic neighbors ranking loss. In other words, the ranking of visual neighbors, obtained by measuring images’ visual features’s distances, will be similar as far as possible to ranking of semantic neighbors. In this section we introduce how to rank semantic and visual neighbors, and the visual and semantic neighbors ranking loss function is also proposed.

For a pair of images \(x_i\) and \(x_j\) in a training mini-batch \(\mathcal {B}\) who has m images in total, their semantic distance \(d_{i,j}^S\) can be calculated using the Euclidean distance between fisher vectors of their annotations:

where \(FV_i\) and \(FV_j\) is the fisher vector representation of annotations of image \(x_i\) and \(x_j\) respectively, which will be detailed in Sect. 2.2. Two images having small semantic distance have similar semantics. Then the semantic rank of image \(x_j\in \mathcal {B}\), \(j\ne i\) with respect to image \(x_i\) can be computed using the following equation.

where \(I(\cdot )\) is an indicator function (I(true) \(=\) 1, I(false) \(=\) 0).

Similarly, visual rank of image \(x_j\in \mathcal {B}\), \(j\ne i\) with respect to image \(x_i\) can be obtained using the following equation.

where \(d_{i,j}^V\) means the visual distance between \(x_i\) and \(x_j\). In this paper, we use Euclidean distance to compute this distance.

\(\phi (\cdot )\) is the pre-trained CNN [38] without any modification. \(\psi _\omega (\cdot )\) is the visual-semantic embedding network with parameter \(\omega \). It is worthy to note that the visual neighbors ranking is based on the projected visual features from visual-semantic embedding network, not the raw visual features from CNN. In our method, we aim to learn this visual-semantic embedding to nonlinearly project the output of CNN into a semantic space, by minimizing difference between semantic and visual neighbors ranking. Therefore, we propose the visual and semantic neighbors ranking loss function to train network.

Unfortunately, the Eq. 5 is a non-differentiable loss function. In this paper, we adopt the solution proposed by [49] in which logistic function \(\delta (x)=\log _2(1+2^{-x})\) is used to replace indicator function, and the loss function can be rewrote as follows:

2.2 Network Structure and Training Method

As shown in Fig. 1, our visual-semantic embedding is achieved by training a simple deep neural network. This neural network is composed of several fully connected layers with weight matrices \(W_l,l=1,\ldots ,N\). The output of the last fully connected layer go through batch normalization [17] layer and L2 normalization layer. Specially, sign expansion root (SER) [7, 8] layers, rather than Rectified Linear Unit (ReLU), are add to separate these successive fully connected layers.Footnote 1 Typical neural activation ReLU used in CNN denoted as \(\varphi (\mathbf{x})=max\{\mathbf{0},\mathbf{x}\}\) only keeps the positive activations and drops the negative activations. Experience in action recognition shows that negative activations should be considered for they contain some useful information when CNN features are used [7, 8]. Therefore, we adopt SER to nonlinearly project the activation of each fully connected layer. Suppose the activation is \(\mathbf{x}=[x_1,x_2,\ldots ,x_n]\), then SER is denoted as

where \({\mathbf{x}^+=[x_1^+, x_2^+,\ldots , x_n^+]}\) is the positive part of feature \(\mathbf{x}\), namely \(x_i^+=max\{0,x_i\}\), while \({\mathbf{x}^-=[x_1^-, x_2^-,\ldots , x_n^-]}\) is the negative part of feature \(\mathbf{x}\), namely \(x_i^-=max\{0,-x_i\}\). This operation doubles the dimensionality of feature \(\mathbf{x}\) allowing us to capture important nonlinear information from both positive and negative activations. We also use dropout layer after SER.

For each tag of a training image, we use Word2Vec [32] to encode it as a 300 dimensional real valued vector. Then we use Fisher vector representation of Hybrid Gaussian–Laplacian mixture model (HGLMM) proposed by Klein et al. [22] to encode the Word2Vec vectors of a training image’s all tags into one vector. Following literature [22], Independent Component Analysis (ICA) is applied to construct a codebook with 50 centers using first and second order information. Then HGLMM and Fisher vector are used to produce a \(300\times 50\times 2 = 30{,}000\) dimensional vector to represent one training image’s tags. Furthermore, we apply Principal Component Analysis (PCA) on these 30,000 dimensional vectors to reduce them to 10,000 dimensions. This operation help us save memory and training time. Finally, semantic distance between training images can be calculated based on these 10,000 dimensional fisher vectors using Eq. 1.

To train this visual-semantic embedding network, we are given a training set of images and associated tags, denoted as \(\mathbb {T}\). We use Stochastic Gradient Descent (SGD) to update the network’s parameters. For one training epoch, we randomly partition the training set into N mini-batches, each having m annotated images. For each image in a mini-batch, we resize it with keeping its aspect ratio, making the minimal value of width and height to be 224. Then each image is horizontal flipped. We then crop 224 \(\times \) 224 patches from the center of the original and flipped images. The mean intensity is then subtracted. And each patch inherits all the annotations of the original image. These patches are then forward propagated through a pre-trained CNN to get CNN feature \(\phi (I)\). Our visual-semantic network nonlinearly projects \(\phi (I)\) into \(\psi _\omega (\phi (I))\). Semantic and visual distances then can be obtained to rank neighbors. Explicitly, in our experiment, for fairly comparison with existing work [40], we use VGG-16 [38] trained on ILSVRC-2012 dataset as the CNN feature extractor and the activations of the first fully connected layer are extracted as the CNN feature \(\phi (I)\). Although our visual-semantic embedding network can have many layers in general, We set it having two fully connected layers because this configuration leads to satisfactory results. Since the SER layer will double the dimensionality of this CNN feature, \(W_1\) and \(W_2\) (see Fig. 1) in our visual-semantic embedding network are randomly initialize to be 8192 \(\times \) 2048 matrix and 4096 \(\times \) 1024 matrix respectively. In our work, SGD with momentum 0.9 and weight decay 0.0003 is used to update the parameters of visual-semantic network. The learning rate is initialized to be 0.1 and decayed after each 5 epochs. Batch normalization layer, introduced by [17], is applied right after the last fully connected layer. We also use a dropout layer after SER with probability = 0.5. Algorithm 1 gives the pseudo-code of our visual-semantic embedding network training. In our experiments, we first train the network on the large-scale dataset NUS-WIDE, and annotate the testing images in this dataset. When we predict labels for testing images in the other smaller datasets, we fine-tune this network using their training images, after initializing the network with the weights learned from NUS-WIDE.

Top 5 nearest neighbors found with raw CNN features (top row) and with features after visual-semantic embedding processed(bottom row) for a image (in purple box) with tags “water”, “building”, “monument”, “dock”. The images in red box have at least one of these four tags in ground truth. (Color figure online)

2.3 Label Propagation

With our trained visual-semantic embedding neural network, the testing image’s CNN features can be projected into a semantic space in which it is able to find its semantic neighbors. Figure 2 gives an example to vividly show this property. Given a testing image, we find its top 5 nearest neighbors using CNN features directly (top row) and features after visual-semantic embedding processed (bottom row). The query image in the purple box has tags “water”, “buildin”, “monument”, “dock” in ground truth. Only two of the retrieved 5 images using CNN features have one or more same tags as the query image. Using the features projected by visual-semantic embedding, we find three images having at least ont of the query image’s tags. This property is one of the basis of many nearest-neighbor based models and can effectively improve their performance. Following this key idea, we apply our visual-semantic embedding network to several popular nearest-neighbor based image auto-annotation models including Tagprop [14], 2PKNN [41], as introduced in the following.

2.3.1 TagProp

TagPropFootnote 2 is proposed by Guillaumin [14] which learns weighted nearest neighbor model or word-specific logistic discriminant model by maximizing the log-likelihood of the tag predictions in the training set. When using weighted nearest neighbor model, the probability of tag t appearing in the annotations of testing image I is:

where \(\mathcal {I}(J,t)\) is an indicator function who equals one if image J has label t, zero otherwise. \(\pi _{IJ}\) is a learned weight of image J for propagating tags to image I. This weight can be learned from image rank, referred to as the TagProp-RK, or image distance, referred to as the TagProp-SD for single distance and TagProp-ML for multiple distances combined by metric learning. When using word-specific logistic discriminant model, tag t annotates image I with probability:

where \(\sigma (\cdot )\) is sigmoid function and \(\{\alpha _t,\beta _t\}\) can be trained per tag. The resulting variants are referred to as TagProp-\(\sigma \)RK, TagProp-\(\sigma \)SD, TagProp-\(\sigma \)ML, respectively.

2.3.2 2PKNN

Verma and Jawahar [41] proposed 2PKNNFootnote 3 model to address image auto-annotation task. 2PKNN is composed of two phases. Given a testing image I, the first phase is to identify its neighbors \(\mathcal {N}(I)\) from each semantic group which is a subset of training images with one common label. The second phase of 2PKNN is to predict labels for testing image by weighted summing over the reference images in \(\mathcal {N}(I)\):

where \(d_{I,J}^i\) is the distance between the ith feature of image I and J. The default value of weight \(\omega _i\) is 1 / n. Furthermore, this distance can be improved by metric learning which learns the weight \(\omega _i\), leading to 2PKNN-ML model.

3 Experiments

3.1 Datasets

We conduct a series of experiments to examine the performance of our proposed image auto-annotation method on four widely used standard image datasets. Statistics of these four datasets are shown in Table 1.

Corel 5k This image set is the first and has become a basic dataset for image auto-annotation [3, 6, 14, 25, 30, 41]. It contains 5000 images, falling into 50 categories and each category has 100 images on the same topic. 1 to 5 tags are manually annotated for each image and there are 260 tags in total in the dataset. We extract 4500 images for training and others for testing.

ESP Game It is collected from a computer game, which need two players to predict same tags for a given image to gain points. This dataset is very challenging for it’s wide variety of image contents. We follow [14] and split this image set into training set with 18,689 images and testing set with 2081 images.

IAPR TC12 It is originally used for cross-lingual retrieval. Researchers extract common nouns using natural language processing techniques to generate tags for each image which has been used in [30]. And now it is also a benchmark for image auto-annotation task [6, 30]. This dataset has 17,665 images for training and 1962 images for testing. Each image is annotated with an average of 5.7 labels.

NUS-WIDE This dataset has 269,648 images collected from Flickr. Most of these images are manually annotated with several labels, leading to 81 labels in total for the whole dataset. Following [10], we have 209,347 images after discarding images with no labels and we select a subset of 150k images for training and the others are left for testing.

3.2 Baselines and Evaluation Protocols

In this paper, we propose a visual-semantic embedding network and emphasize that this network can project bare CNN feature into a semantic space, which can improve the performance of nearest-neighbor based models. Thus we consider label propagation (including TagProp-\(\sigma \)ML and 2PKNN-ML) based on bare CNN feature as our baselines. For fair comparison, we follow previous works [1, 14, 21, 41], using the popular protocols to evaluate our method, including mean word recall (R@n), mean word precision (P@n), F1 score which is the trade-off between P and R, denoted as \(F1=2\times P\times R/(P+R)\), and number of tags with non-zero recall value (\(N+\)). Here n is the number of predicted labels for each image. For Corel5k, ESP-Game, and IAPR TC12, we predict 5 labels for each testing image. For NUS-WIDE, we set \(n=3\), since images in this dataset only have averagely 2.4 labels (see Table 1).

3.3 Overall Comparison of Image Annotation Models

As our first experiment, we analyse the performance of our methods and compare with some state-of-the-art image auto-annotation models. We apply our visual-semantic embedding to TagProp-\(\sigma \)ML and 2PKNN-ML respectively (we call them VSE+TagProp-\(\sigma \)ML and VSE+2PKNN-ML). For each method, we both test their performance using single VGG16 activations and VGG16 activations combined with 15 hand-crafted features,Footnote 4 which are also used in models listed in Table 2. Here are three points need to be explained: (1) When we use single VGG16 activations, TagProp-\(\sigma \)ML and 2PKNN-ML can just be considered as TagProp-\(\sigma \)SD and 2PKNN, because only one base distance can be obtained. (2) When we use VGG16 activations and hand-crafted features, we first project the VGG16 activations using our visual-semantic embedding network, and then combine the network output with hand-crafted features. (3) We randomly partition the imagesets into training, validation, and test sets with the predefined size (see Sect. 3.1) and generate 5 such splits of the data and run all experiments on all splits and report the mean value of each performance metric. Table 2 shows the annotation performance of our proposed methods on Corel5k, ESP Game, and IAPR TC-12 datasets, against with four baselines and some state-of-the-art methods proposed in recent years. These methods span the wide range of model types discussed in Sect. 1. The results of our experiments demonstrate that our best method VSE+2PKNN-ML using VGG16 activations combined with hand-crafted features outperforms all these state-of-the-art methods we have reviewed while our other three methods also achieve comparable performance with a number of recently proposed methods. Our method VSE+2PKNN-ML (using VGG16 activations and hand-crafted features) achieves P, R, F1 and N+ scores of 41, 52, 46 and 205 on the Corel 5K dataset, 50, 36, 42 and 262 on the ESP Game dataset, 58, 43, 49 and 281 on the IAPR TC-12 dataset. Compared with the best state-of-the-art method CCA-KNN, our model obtains remarkable improvements on all the three datasets, probably because of the effectiveness of our visual-semantic embedding and combination of CNN features and hand-crafted features. Comparing the results of our methods and the baselines, we also find that our proposed visual-semantic embedding network supervised by textual feature can significantly boost the annotation performance.

A selection of example testing images from the Corel 5K, ESP Game, IAPR TC-12 and NUS-WIDE datasets. We compare the ground-truth tags and the tags predicted by our method VSE+2PKNN-ML. The tags in blue are those that match with ground-truth, while the red tags are missing in their ground-truth annotations, but predicted by our method. (Color figure online)

It is worthy to note that our method VSE+2PKNN-ML (using VGG16 activations) is the one most similar to the best existing method CCA-KNN. The most import differences between our model and CCA-KNN include: (1) We train a network using our proposed loss function to achieve visual-semantic embedding, while CCA-KNN model projects bare CNN feature by learning CCA or KCCA. (2) We use Fisher vector representation of HGLMM to encode the Word2Vec vectors of tags into one vector. It is much more complicated than the method in CCA-KNN who just takes average of all the Word2Vec vectors as textual feature. Experiments show that our model achieves comparable results with CCA-KNN model. Hence, training neural network using our proposed visual and semantic neighbors ranking loss function is an effective approach to achieve visual-semantic embedding. Furthermore, our method scales to large amounts of data more easily than CCA-KNN who needs expensive computational cost to find projection vectors when training.

Table 3 shows the comparison of our methods and the state-of-the-art methods on the large-scale imageset NUS-WIDE. CNN+Softmax and CNN+WARP models train convolutional neural networks from scratch using softmax and WARP loss to achieve end-to-end image annotation, obtaining an inferior performance with respect to the models, including CNN+KNN and CNN+Logistic, using pre-trained CNN features. Wang et al. attempted to learn joint image-label embedding using CNN-RNN framework [44], getting unsatisfactory performance. The best performance is observed in our model VSE+2PKNN-ML, improving P, R, F1, and \(N+\) to 44, 47, 45 and 80. This suggests that nearest-neighbor based model aided by our embedding network is effective to address image annotation task. We also try use ResNet152 [16] which is another new popular deep convolutional network, to extract image visual features and report the performance of our models in Table 4. By comparing Tables 4 with 2 and 3, we can see ResNet slightly benefits our models.

We also show a selection of example testing images from the Corel 5K, ESP Game, IAPR TC-12 and NUS-WIDE datasets. We compare the ground-truth tags and the tags predicted by our method VSE+2PKNN-ML. As shown in Fig. 3, the tags in blue are those matching with ground-truth, while the red tags are missing in their ground-truth annotations, but predicted by our method. We can see that our method predicts most of the ground-truth tags for all these images. Moreover, our model predicts tags which reflect the semantic content of the images but missing in their ground-truth annotation. However, our method may miss rare tags, that have low frequency in the training set. The testing image in the dashed red box gives an example. The ground-truth annotations of this image include gravel, lookout, mountain, road, slope, and tourist, while our method misses gravel and lookout. The reason is that gravel and lookout only appear in a small number of training images’ annotations. Thus, training images, having tags gravel and lookout, have low probability to become neighbors of the the testing image and our method loses these tags.

3.4 Impact of Neighborhood Size

The only parameter need to be set manually in our methods is K which is the size of the neighborhood \(\mathcal {N}\) (see Eqs. 9, 10). To evaluate the impact of K on annotation performance of our methods, we also conduct a series of experiments. For Corel 5K, ESP Game and IAPR TC-12 datasets, We set the parameter K to be 50, 70, 100, 200, 300, 500, 700, 900, 1000 respectively and record the performance of our methods, while K is set to be 100, 200, 300, 500, 600, 700, 800, 900, 1000 for NUS-WIDE dataset. Figure 4 shows how the parameter K affects the performance of our methods and baselines on these four datasets. It is obviously that small value of K leads to low annotation performances for all the methods on all these datasets. It is because we get a small number of neighbors and some important information may be lost when we propagate tags from weighted neighbors to testing image using small K. Furthermore, we see that annotation performance will remain almost the same or rise slowly with increasing the value of K after some point (200 for ESP Game and IAPR TC-12, 500 for NUS-WIDE). Specially, annotation performance will deteriorate slightly with setting K greater than about 500 for Corel5K dataset, probably because selecting too many neighbors from Corel5K’s small training set brings in irrelevant images to the testing image. Big neighborhood size K will increase the computational cost. Hence, we set K to be 200 for Corel 5K, ESP Game, IAPR TC-12 datasets and 500 for NUS-WIDE dataset, obtaining the results in Tables 2 and 3. Furthermore, as expected, we observe that annotation performance of each of our methods is higher than the corresponding baseline. This again confirms that our visual-semantic embedding network can project features into a space where images can find more semantically similar images.

4 Conclusion

In this paper, we present a simple but effective visual-semantic embedding network and apply it to nearest-neighbor based models to address image auto-annotation task. Our embedding is achieved by training a neural network using our proposed visual and semantic neighbors ranking loss. This network can nonlinearly project bare CNN activations into a joint space where images could identify more semantic neighbors. This property guarantees the success of nearest-neighbor based models lying on our embedding network. Our experimental results on four standard datasets including Corel 5K, ESP Game, IAPR TC-12, and large-scale NUS-WIDE demonstrate that our methods make remarkable improvements on all of these datasets, compared with existing state-of-the-art methods.

Notes

We also tried to use ReLU which perform slightly inferior than using SER (F1 values decrease 3–5% in our experiments on four datasets).

The source code of TagProp is available at: http://lear.inrialpes.fr/people/guillaumin/code.php#tagprop.

The source code of 2PKNN is available at: http://researchweb.iiit.ac.in/~yashaswi.verma/eccv12/2pknn.zip.

These features are available at: http://lear.inrialpes.fr/people/guillaumin/data.php.

References

Ballan L, Uricchio T, Seidenari L, Bimbo AD (2014) A cross-media model for automatic image annotation. In: ACM ICMR, pp 73–80

Blei D, Jordan M (2003) Modeling annotated data. In: ACM SIGIR, pp 127–134

Carneiro G, Chan A, Moreno P, Vasconcelos N (2007) Supervised learning of semantic classes for image annotation and retrieval. IEEE Trans Pattern Anal Mach Intell 29(3):394–410

Chatfield K, Lempitsky V, Vedaldi A, Zisserman A (2011) The devil is in the details: an evaluation of recent feature encoding methods. In: BMVC, pp 1–12

Deng J, Dong W, Socher R, Li L, Li K, Fei-Fei L (2009) Imagenet: a large-scale hierarchical image database. In: CVPR, pp 248–255

Fenga S, Manmatha R, Lavrenko V (2004) Multiple Bernoulli relevance models for image and video annotation. In: CVPR, pp 1002–1009

Fernando B, Anderson P, Hutter M, Gould S (2016) Discriminative hierarchical rank pooling for activity recognition. In: CVPR, pp 1924–1932

Fernando B, Gawes E, Oramas J, Ghodrati J, Tuytelaars T (2017) Rank pooling for action recognition. IEEE Trans Pattern Anal Mach Intell 99:773–787

Fu H, Zhang Q, Qiu G (2012) Random forest for image annotation. In: ECCV, pp 86–99

Gong Y, Jia Y, Leung T, Toshev A, Ioffe S (2014) Deep convolutional ranking for multilabel image annotation. In: CoRR, arXiv:1312.4894

Gong Y, Ke Q, Isard M, Lazebnik S (2014) A multi-view embedding space for modeling internet images, tags, and their semantics. Int J Comput Vis 106(2):210–233

Gong Y, Wang L, Hodosh M, Hockenmaier J, Lazebnik S (2014) Improving image-sentence embeddings using large weakly annotated photo collections. In: ECCV, pp 529–545

Gu Y, Xue H, Yang J (2016) Cross-modal saliency correlation for image annotation. Neural Process Lett 45(3):777–789

Guillaumin M, Mensink T, Verbeek J, Schmid C (2009) Tagprop: discriminative metric learning in nearest neighbor models for image auto-annotation. In: ICCV, pp 309–316

Hardoon D, Szedmak S, Shawe-Taylor J (2004) Cannonical correlation analysis: an overview with application to learning methods. Neural Comput 16(12):2639–2664

He K, Zhang X, Ren S, Sun J (2016) Deep residual learning for image recognition. In: CVPR, pp 770–778

Ioffe S, Szegedy C (2015) Batch normalization: accelerating deep network training by reducing internal covariate shift. In: ICML, pp 448–456

Jeon J, Lavreko V, Manmatha R (2003) Automatic image annotation and retrieval using cross-media relevance models. In: ACM SIGIR, pp 119–126

Joachims T (2002) Optimizing search engines using clickthrough data. In: ACM SIGKDD, pp 133–142

Johnson J, Ballan L, Fei-Fei L (2015) Love thy neighbors: image annotation by exploiting image metadata. In: ICCV, pp 4624–4632

Kiros R, Szepesvari C (2015) Deep representations and codes for image auto-annotation. In: NIPS, pp 917–925

Klein B, Lev G, Sadeh G, Wolf L (2015) Fisher vectors derived from hybrid Gaussian–Laplacian mixture models for image annotation. In: CoRR, arXiv:1411.7399

Klein B, Lev G, Sadeh G, Wolf L (2015) Fisher vectors derived from hybrid Gaussian–Laplacian mixture models for image annotation. In: CVPR

Krizhevsky A, Sutskever I, Hinton G (2012) Imagenet classification with deep convolutional neural networks. In: NIPS, pp 1106–1114

Lavrenko V, Manmatha R, Jeon J (2004) A model for learning the semantics of pictures. In: NIPS, pp 553–560

Lazebnik S, Schmid C, Ponce J (2006) Beyond bags of features: spatial pyramid matching for recognizing natural scene categories. In: CVPR, pp 2169–2178

Li X, Snoek C, Worring M (2007) Learning social tag relevance by neighbor voting. IEEE TMM 11(7):1310–1322

Liu Y, Xu D, Tsang I, Luo J (2007) Using large-scale web data to facilitate texual query based retrieval of consumer photos. In: ACM MM, pp 1277–1283

Lowe D (2004) Distinctive image features from scale-invariant keypoints. IJCV 60(2):91–110

Makadia A, Pavlovic V, Kumar S (2008) A new baseline for image annotation. In: ECCV, pp 316–329

Makadia A, Pavlovic V, Kumar S (2010) Baselines for image annotation. Int J Comput Vis 90(1):88–105

Mikolov T, Chen K, Corrado G, Dean J (2013) Efficient estimation of word representations in vector space. arXiv:1301.3781

Montazer G, Giveki D (2017) Scene classification using multi-resolution WAHOLB features and neural network classifier. Neural Process Lett 46(2):681–704

Moran S, Lanvrenko V (2014) Sparse kernel learning for image annotation. In: ACM ICMR, p 113

Oliva A, Torralba A (2001) Modeling the shape of the scene: a holistic representation of the spatial envelope. IJCV 42(3):145–175

Peng X, Zou C, Qiao Y, Peng Q (2010) Action recognition with stacked fisher vectors. In: ECCV, pp 581–595

Perronnin F, Sanchez J, Mensink T (2010) Improving the fisher kernel for large scale image classification. In: ECCV, pp 143–156

Simonyan K, Zisserman A (2015) Very deep convolutional networks for large scale image recognition. In: ICLR

Song Y, Zhuang Z, Li H, Zhao Q, Li J, Lee W, Giles CL (2008) Real-time automatic tag recommendation. In: ACM SIGIR, pp 515–522

Venkatesh N, Subhransu M, Manmatha R (2015) Automatic image annotation using deep learning representations. In: ACM ICMR, pp 603–606

Verma Y, Jawahar C (2012) Image annotation using metric learning in semantic neighbourhoods. In: ECCV, pp 836–849

Verma Y, Jawahar C (2013) Exploring SVM for image annotation in presence of confusing labels. In: British machine vision conference, pp 1–11

Wang G, Hoiem D, Forsyth D (2009) Building text features for object image classification. In: CVPR, pp 1367–1374

Wang J, Yang Y, Mao J, Huang Z, Huang C, Xu W (2016) Cnn-rnn: a unified framework for multi-label image classification. In: CVPR, pp 2285–2294

Wang L, Liu L, Khan L (2004) Automatic image annotation and retrieval using subspace clustering algorithm. In: ACM international workshop multimedia databases, pp 100–108

Weston J, Bengio S, Usunier N (2011) Wsabie: scaling up to large vocabulary image annotation. In: IJCAI, pp 2764–2770

Wu F, Jing X, Yue D (2017) Multi-view discriminant dictionary learning via learning view-specific and shared structured dictionaries for image classification. Neural Process Lett 45:649–666

Yang C, Dong M, Hua J (2007) Region-based image annotation using asymmetrical support vector machine-based multiple-instance learning. In: CVPR, pp 2057–2063

Yun H, Raman P, Vishwanathan S (2014) Ranking via robust binary classification. In: NIPS, pp 2582–2590

Zhang S, Huang J, Huang Y (2010) Automatic image annotation using group sparsity. In: CVPR, pp 3312–3319

Acknowledgements

This work is supported by the Natural Science Foundation of China (No. 61572162) and the Zhejiang Provincial Key Science and Technology Project Foundation (No. 2017C01010).

Author information

Authors and Affiliations

Corresponding authors

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Ethical Approval

This article does not contain any studies with human participants or animals performed by any of the authors.

Rights and permissions

About this article

Cite this article

Zhang, W., Hu, H. & Hu, H. Training Visual-Semantic Embedding Network for Boosting Automatic Image Annotation. Neural Process Lett 48, 1503–1519 (2018). https://doi.org/10.1007/s11063-017-9753-9

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11063-017-9753-9