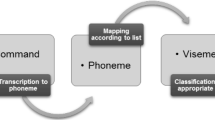

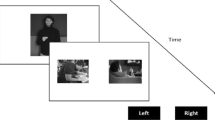

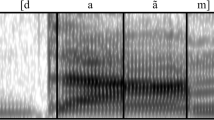

The formation of visual-auditory associations during recognition of speech sounds (phonemes) presented simultaneously with visual stimuli was studied. Two categories of visual stimuli were used: images of gestures and geometrical figures. The formation of visual-auditory associations was assessed in terms of the degradation of response parameters in control experiments run after completion of training. The protocol for presenting combined stimulus pairs included new pairings that differed from those used during training of visual-auditory interactions. Reaction times and the proportions of correct responses in phoneme recognition were measured. The experiments showed that using images of gestures as visual stimuli produced marked visual-auditory associations, in contrast to combinations of sounds with images of geometrical figures. It is suggested that these differences can be explained by the involvement, in the formation of visual-auditory associations, of the neuron system that operates in gesture perception.

Additional information

Translated from Rossiiskii Fiziologicheskii Zhurnal imeni I. M. Sechenova, Vol. 95, No. 8, pp. 850–856, August, 2009.

About this article

Cite this article

Aleksandrov, A.A., Dmitrieva, E.S. & Stankevich, L.N. Experimental Formation of Visual-Auditory Associations on Phoneme Recognition. Neurosci Behav Physi 40, 998–1002 (2010). https://doi.org/10.1007/s11055-010-9359-4

DOI: https://doi.org/10.1007/s11055-010-9359-4