Abstract

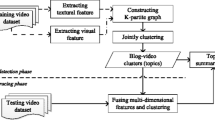

Summarizing social media effectively and efficiently is crucial to social media analysis, and it is non-trivial. On social streams, events, which capture the main concept shared by semantically similar social messages, often give us a firsthand account of daily news. However, with traditional methods it is almost impossible to plough through millions of multi-modal messages one by one to identify the valuable news. It is therefore urgent to summarize the events on the streams with a few representative data samples. In this paper, we propose a vivid textual-visual media summarization approach for microblog streams that exploits the incremental latent semantic analysis (LSA) of detected events. First, with a novel weighting scheme for keyword relationships, we detect and track daily sub-events effectively on a keyword relation graph (WordGraph) of microblog streams. Then, to summarize the stream with representative texts and images, we use cross-modal fusion to analyze the semantics of microblog texts and images incrementally and separately, with a novel incremental cross-modal LSA algorithm. Experimental results on a real microblog dataset show that our method is at least 1.31% better and 23.67% faster than existing state-of-the-art methods, and that cross-modal fusion improves summarization performance by 4.16% on average.


Notes

Tencent: t.qq.com

Sina: news.sina.com.cn, People: news.people.com.cn, Ifeng: news.ifeng.com, NetEase: news.163.com, Baidu: news.baidu.com

References

Abdelhaq H, Sengstock C, Gertz M (2013) Eventweet: online localized event detection from twitter. Proc VLDB Endow 6(12):1326–1329

Aiello LM, Petkos G, Martin C, Corney D, Papadopoulos S, Skraba R, Goker A, Kompatsiaris I, Jaimes A (2013) Sensing trending topics in twitter. TMM 15(6):1268–1282

Alqadah F, Bhatnagar R (2011) A game theoretic framework for heterogenous information network clustering. In: Proc. of 17th KDD. ACM, pp 795–804

Atefeh F, Khreich W (2015) A survey of techniques for event detection in twitter. Comput Intell 31(1):132–164

Bian J, Yang Y, Zhang H, Chua TS (2015) Multimedia summarization for social events in microblog stream. TMM 17(2):216–228

Cai H, Yang Y, Li X, Huang Z (2015) What are popular: exploring twitter features for event detection, tracking and visualization. In: Proc. of 23rd MM. ACM, pp 89–98

Cai H, Huang Z, Srivastava D, Zhang Q (2016) Indexing evolving events from tweet streams. In: Proc. of 32nd ICDE. IEEE, pp 1538–1539

Chang Y, Wang X, Mei Q, Liu Y (2013) Towards twitter context summarization with user influence models. In: Proc. of 6th WSDM. ACM, pp 527–536

Chen X, Candan KS (2014) Lwi-svd: low-rank, windowed, incremental singular value decompositions on time-evolving data sets. In: Proc. of 20th KDD. ACM, pp 987–996

Eitel A, Scheiter K, Schüler A, Nyström M, Holmqvist K (2013) How a picture facilitates the process of learning from text: evidence for scaffolding. Learn Instr 16:48–63

Gao X, Cao J, Jin Z, Li X, Li J (2013) Gesodeck: a geo-social event detection and tracking system. In: Proc. of 21st MM. ACM, pp 471–472

Inouye D, Kalita JK (2011) Comparing twitter summarization algorithms for multiple post summaries. In: Proc. of 3rd PASSAT/SocialCom. IEEE Computer Society, pp 298–306

Jiang J, Tao X, Li K (2018) Dfc: density fragment clustering without peaks. J Intell Fuzzy Syst 34(1):525–536

Kumar S, Udupa R (2011) Learning hash functions for cross-view similarity search. In: Proc. of 22nd IJCAI, pp 1360–1365

Long R, Wang H, Chen Y, Jin O, Yu Y (2011) Towards effective event detection, tracking and summarization on microblog data. In: Proc. of 12th WAIM. Springer, pp 652–663

Lu T, Jin Y, Su F, Shivakumara P, Tan CL (2015) Content-oriented multimedia document understanding through cross-media correlation. Multimed Tools Appl 74:8105–8135

Nguyen DT, Jung JE (2017) Real-time event detection for online behavioral analysis of big social data. Future Gener Comput Syst 66:137–145

Nichols J, Mahmud J, Drews C (2012) Summarizing sporting events using twitter. In: Proc. of 17th IUI. ACM, pp 189–198

Petrović S, Osborne M, Lavrenko V (2010) Streaming first story detection with application to twitter. In: Proc. of NAACL HLT’10. ACL, pp 181–189

Popescu AM, Pennacchiotti M, Paranjpe D (2011) Extracting events and event descriptions from twitter. In: Proc. of 20th WWW. ACM, pp 105–106

Qian S, Zhang T, Xu C, Shao J (2016) Multi-modal event topic model for social event analysis. TMM 18(2):233–246

Rafailidis D, Manolopoulou S, Daras P (2013) A unified framework for multimodal retrieval. Pattern Recognit 46(12):3358–3370

Ramos AMS, Woloszyn V, Wives LK (2017) An experimental analysis of feature selection and similarity assessment for textual summarization. In: Proc. of 12th CCC. Springer, pp 146–155

Sakaki T, Okazaki M, Matsuo Y (2010) Earthquake shakes twitter users: real-time event detection by social sensors. In: Proc. of 19th WWW. ACM, pp 851–860

Sayyadi H, Raschid L (2013) A graph analytical approach for topic detection. ACM Trans Internet Technol 13(2):Article 4

Shah RR, Yu Y, Verma A, Tang S, Shaikh AD, Zimmermann R (2016) Leveraging multimodal information for event summarization and concept-level sentiment analysis. Knowl-Based Syst 108:102–109

Sharifi B, Hutton MA, Kalita JK (2010) Experiments in microblog summarization. In: Proc. of 2nd PASSAT/SocialCom. IEEE Computer Society, pp 685–688

Sorden SD (2013) The cognitive theory of multimedia learning. In: Irby BJ, Brown G, Lara-Alecio R, Jackson S (eds) Handbook of educational theories. chap. 8. Information Age Pub, pp 155–165

Sun Y, Aggarwal CC, Han J (2012) Relation strength-aware clustering of heterogeneous information networks with incomplete attributes. Proc VLDB Endow 5(5):394–405

Wang J, Korayem M, Blanco S, Crandall DJ (2016) Tracking natural events through social media and computer vision. In: Proc. of 24th MM. ACM, pp 1097–1101

Wang W, Ooi BC, Yang X, Zhang D, Zhuang Y (2014) Effective multi-modal retrieval based on stacked auto-encoders. Proc VLDB Endow 7(8):649–660

Wang Z, Shou L, Chen K, Chen G, Mehrotra S (2015) On summarization and timeline generation for evolutionary tweet streams. TKDE 27(5):1301–1315

Wei S, Zhao Y, Yang T, Zhou Z, Ge S (2018) Enhancing heterogeneous similarity estimation via neighborhood reversibility. Multimed Tools Appl 77:1437–1452

Wu G, Zhang L (2016) A new method for computing \(\varphi \)-functions and their condition numbers of large sparse matrices. ArXiv e-prints, pp 1–21

Wu F, Yu Z, Yang Y, Tang S, Zhang Y, Zhuang Y (2014) Sparse multi-modal hashing. TMM 16(2):427–439

Xiong Y, Wang D, Zhang Y, Feng S, Wang G (2014) Multimodal data fusion in text-image heterogeneous graph for social media recommendation. In: Proc. of 15th WAIM. Springer, LNCS 8485, pp 96–99

Xiong Y, Zhang Y, Wang D, Feng S (2017) Picture or it didn’t happen: catch the truth for events. Multimed Tools Appl 76(14):15681–15706

Yan R, Wan X, Otterbacher J, Kong L, Li X, Zhang Y (2011) Evolutionary timeline summarization: a balanced optimization framework via iterative substitution. In: Proc. of 34th SIGIR. ACM, pp 745–754

Yang Y, Zha ZJ, Gao Y, Zhu X, Chua TS (2014) Exploiting web images for semantic video indexing via robust sample-specific loss. TMM 16(6):1677–1689

Yang Z, Li Q, Liu W, Ma Y, Cheng M (2017) Dual graph regularized nmf model for social event detection from flickr data. WWW 20(5):995–1015

Yang Z, Li Q, Lu Z, Ma Y, Gong Z, Liu W (2017) Dual structure constrained multimodal feature coding for social event detection from flickr data. TOIT 17(2):Article No 19

Yue G, Zhao S, Yang Y, Chua TS (2015) Multimedia social event detection in microblog. In: Proc. of 21st MMM. Springer, pp 269–281

Zhang W, Chen J, Shen J, Yu Y (2014) Location-based hierarchical event summary for social media photos. In: Proc. of 15th PCM. Springer, LNCS 8879, pp 254–257

Zhou X, Chen L (2014) Event detection over twitter social media streams. VLDB J 23(3):381–400

Zhou X, Chen L, Zhang Y, Cao L, Huang G, Wang C (2015) Online video recommendation in sharing community. In: Proc. of SIGMOD’15. ACM, pp 1645–1656

Acknowledgements

The project is supported by National Natural Science Foundation of China (61772122, 61402091).


Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix: The derivation of incremental LSA


In Section 3.2.2, the incremental summarization of evolving events is based on the incremental LSA of \(\mathbf {B}^{t + 1}\), which means it should be specified by the LSA of \(\mathbf {B}^{t}\) (\(\mathbf {U}^{t} \mathbf {{\Sigma }}^{t} {\mathbf {V}^{t}}^{T}\)) and the feature matrix \(\mathbf {{\Delta }}\) of the new data on day \(t + 1\). The solution of (11) is based on the algorithm in [9], which uses the quasi-Gram-Schmidt algorithm [34] to perform a quick SVD on a large matrix. The entire process consists of two parts: the reconstruction of \(\mathbf {B}^{t + 1}\) based on QR factorization, and the fast SVD of \(\mathbf {B}^{t + 1}\) based on quasi-Gram-Schmidt.

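First, the \(\mathbf {B}^{t + 1}\) of (10) should be rewritten in product form, since its SVD cannot be represented by the sum of the SVDs of several sub-matrices. By substituting \(\mathbf {B}^{t}\) with its LSA, \(\mathbf {B}^{t + 1}\) can be decomposed as:

\[ \mathbf {B}^{t + 1} = \mathbf {U}^{t} \mathbf {{\Sigma }}^{t} {\mathbf {V}^{t}}^{T} + \mathbf {I} \mathbf {{\Delta }} = \left [ \begin {array}{ll} \mathbf {U}^{t} & \mathbf {I} \end {array} \right ] \left [ \begin {array}{ll} \mathbf {{\Sigma }}^{t} & \mathbf {0} \\ \mathbf {0} & \mathbf {I} \end {array} \right ] \left [ \begin {array}{ll} \mathbf {V}^{t} & \mathbf {{\Delta }}^{T} \end {array} \right ]^{T} \tag{19} \]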

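To decompose \(\mathbf {B}^{t + 1}\) further, QR factorization is performed on the \([\mathbf {U}^{t} \quad \mathbf {I}]\) and \([\mathbf {V}^{t} \quad \mathbf {{\Delta }}^{T}]\) of (19). Supposing \(\mathbf {Q}_{\mathrm {I}} \mathbf {R}_{\mathrm {I}} = (\mathbf {I} - \mathbf {U}^{t} {\mathbf {U}^{t}}^{T}) \mathbf {I}\) and \(\mathbf {Q}_{\mathrm {{\Delta }}} \mathbf {R}_{\mathrm {{\Delta }}} = (\mathbf {I} - \mathbf {V}^{t} {\mathbf {V}^{t}}^{T}) \mathbf {{\Delta }}^{T}\) are two QR factorizations, the matrix \(\mathbf {I}\) can be expressed as \(\mathbf {U}^{t} {\mathbf {U}^{t}}^{T} \mathbf {I} + \mathbf {Q}_{\mathrm {I}} \mathbf {R}_{\mathrm {I}}\) and \(\mathbf {{\Delta }}^{T}\) as \(\mathbf {V}^{t} {\mathbf {V}^{t}}^{T} \mathbf {{\Delta }}^{T} + \mathbf {Q}_{\mathrm {{\Delta }}} \mathbf {R}_{\mathrm {{\Delta }}}\). Consequently, the \(\mathbf {B}^{t + 1}\) of (19) is rewritten as:

\[ \mathbf {B}^{t + 1} = \left [ \begin {array}{ll} \mathbf {U}^{t} & \mathbf {Q}_{\mathrm {I}} \end {array} \right ] \left ( \left [ \begin {array}{ll} \mathbf {{\Sigma }}^{t} & \mathbf {0} \\ \mathbf {0} & \mathbf {0} \end {array} \right ] + \left [ \begin {array}{l} {\mathbf {U}^{t}}^{T} \mathbf {I} \\ \mathbf {R}_{\mathrm {I}} \end {array} \right ] \left [ \begin {array}{l} {\mathbf {V}^{t}}^{T} \mathbf {{\Delta }}^{T} \\ \mathbf {R}_{\mathrm {{\Delta }}} \end {array} \right ]^{T} \right ) \left [ \begin {array}{ll} \mathbf {V}^{t} & \mathbf {Q}_{\mathrm {{\Delta }}} \end {array} \right ]^{T} \tag{20} \]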

Let \(\mathbf {{\Omega }}\) be the middle matrix \( \left [ \begin {array}{ll} \mathbf {{\Sigma }}^{t} & \mathbf {0} \\ \mathbf {0} & \mathbf {0} \end {array} \right ] + \left [ \begin {array}{l} {\mathbf {U}^{t}}^{T} \mathbf {I} \\ \mathbf {R}_{\mathrm {I}} \end {array} \right ] \left [ \begin {array}{l} {\mathbf {V}^{t}}^{T} \mathbf {{\Delta }}^{T} \\ \mathbf {R}_{\mathrm {{\Delta }}} \end {array} \right ]^{T} \) of (20). To get the incremental SVD of \(\mathbf {B}^{t + 1}\), we only need to decompose \(\mathbf {{\Omega }}\) efficiently. Since \(\mathbf {U}^{t}\) comes from the LSA of \(\mathbf {B}^{t}\), \(\mathbf {U}^{t} {\mathbf {U}^{t}}^{T}\) is \( \left [ \begin {array}{ll} \mathbf {I}_{m} & \mathbf {0} \\ \mathbf {0} & \mathbf {0} \end {array} \right ]_{m^{\prime }} \). Thus, \(\mathbf {Q}_{\mathrm {I}}\) is \( \left [ \begin {array}{l} \mathbf {0} \\ \mathbf {I}_{m^{\prime }-m} \end {array} \right ] \) and \(\mathbf {R}_{\mathrm {I}}\) is \([ \mathbf {0} \quad \mathbf {I}_{m^{\prime }-m} ]\). Similarly, since the \(\mathbf {V}^{t}_{n^{\prime } \times n}\) of (20) is \([ \mathbf {V}^{t}_{n \times n} \quad \mathbf {0}_{(n^{\prime }-n) \times n} ]\), \({\mathbf {V}^{t}}^{T} \mathbf {{\Delta }}^{T}\) can be decomposed as \([ \mathbf {0} \quad {\mathbf {V}^{t}}^{T} \mathbf {{\Delta }}^{T}_{2} ]\). Hence, \(\mathbf {{\Omega }}\) can be rewritten as:

where \(\mathbf {R}_{\mathrm {{\Delta }}1} \in \mathbb {R}^{(n^{\prime }-n) \times m}\) and \(\mathbf {R}_{\mathrm {{\Delta }}2} \in \mathbb {R}^{(n^{\prime }-n) \times (m^{\prime }-m)}\). What remains is to compute the SVD of \(\mathbf {{\Omega }}\) efficiently.
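The product form used in this first part can be checked numerically. The following is a minimal NumPy sketch, not the authors' implementation: it assumes that \(\mathbf {B}^{t}\) and \(\mathbf {{\Delta }}\) already have the same shape (the zero padding described above is skipped), that a thin rank-\(r\) SVD of \(\mathbf {B}^{t}\) is available, and the helper name `product_form` is hypothetical.

```python
import numpy as np

def product_form(U, s, V, Delta):
    """Return (left, Omega, right) such that
    left @ Omega @ right.T == U @ diag(s) @ V.T + Delta.
    Hypothetical helper; U is m x r, V is n x r, Delta is m x n."""
    m, r = U.shape
    n = V.shape[0]
    I = np.eye(m)
    # QR factorizations of the parts of I and Delta^T outside span(U) and span(V)
    Q_I, R_I = np.linalg.qr((I - U @ U.T) @ I)
    Q_D, R_D = np.linalg.qr((np.eye(n) - V @ V.T) @ Delta.T)
    left = np.hstack([U, Q_I])             # [U  Q_I]
    right = np.hstack([V, Q_D])            # [V  Q_Delta]
    a = np.vstack([U.T @ I, R_I])          # stacked [U^T I; R_I]
    b = np.vstack([V.T @ Delta.T, R_D])    # stacked [V^T Delta^T; R_Delta]
    Omega = a @ b.T
    Omega[:r, :r] += np.diag(s)            # add the padded Sigma block
    return left, Omega, right

# Toy data (assumed for illustration only): an exactly rank-2 matrix plus an update.
rng = np.random.default_rng(0)
m, n, r = 6, 5, 2
B = rng.standard_normal((m, r)) @ rng.standard_normal((r, n))
U_full, s_full, Vt_full = np.linalg.svd(B, full_matrices=False)
U, s, V = U_full[:, :r], s_full[:r], Vt_full[:r].T
Delta = rng.standard_normal((m, n))

left, Omega, right = product_form(U, s, V, Delta)
reconstruction_ok = np.allclose(left @ Omega @ right.T, B + Delta)
```

In this sketch the Q factors are not trimmed to the orthogonal complements, so `left` and `right` are not guaranteed to have orthonormal columns; the check covers only the reconstruction identity, not a complete SVD update.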

Second, to perform a fast SVD of \(\mathbf {{\Omega }}\), we follow the principle of quasi-Gram-Schmidt [34] and approximate \(\mathbf {{\Omega }}\) with a reduced matrix \(\mathbf {\widehat {{\Omega }}} = \mathbf {C} \mathbf {H} \mathbf {R}^{T}\), where \(\mathbf {C}\) and \(\mathbf {R}\) are sampled from the columns and rows of \(\mathbf {{\Omega }}\), respectively. Generally, the newly appended rows or columns and those related to large singular values are sampled. The difference between \(\mathbf {{\Omega }}\) and \(\mathbf {\widehat {{\Omega }}}\) is minimized if \(\mathbf {H}\) is \((\mathbf {R}^{-1}_{c} \mathbf {R}^{-T}_{c} ) (\mathbf {C}^{T} \mathbf {{\Omega }} \mathbf {R} ) (\mathbf {R}^{-1}_{r} \mathbf {R}^{-T}_{r} )\), where \(\mathbf {R}_{c}\) and \(\mathbf {R}_{r}\) are the upper triangular matrices of the QR factorizations of \(\mathbf {C}\) and \(\mathbf {R}\), respectively. Let \(\mathbf {{\Psi }}\) be the matrix \(\mathbf {R}^{-T}_{c} \mathbf {C}^{T} \mathbf {{\Omega }} \mathbf {R} \mathbf {R}^{-1}_{r}\) and let its SVD be \(\mathbf {U}_{\mathrm {{\Psi }}} \mathbf {{\Sigma }}_{\mathrm {{\Psi }}} \mathbf {V}^{T}_{\mathrm {{\Psi }}}\). Therefore, the SVD of \(\mathbf {\widehat {{\Omega }}}\) can be formulated as:

\[ \mathbf {\widehat {{\Omega }}} = \mathbf {C} \mathbf {R}^{-1}_{c} \mathbf {{\Psi }} \mathbf {R}^{-T}_{r} \mathbf {R}^{T} = (\mathbf {C} \mathbf {R}^{-1}_{c} \mathbf {U}_{\mathrm {{\Psi }}}) \mathbf {{\Sigma }}_{\mathrm {{\Psi }}} (\mathbf {R} \mathbf {R}^{-1}_{r} \mathbf {V}_{\mathrm {{\Psi }}})^{T} \]

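Since the dimension of \(\mathbf {{\Psi }}\) is much smaller than that of \(\mathbf {\widehat {{\Omega }}}\), its SVD is very fast to compute. With the fast SVD of \(\mathbf {\widehat {{\Omega }}}\), the incremental LSA of \(\mathbf {B}^{t + 1}\) can finally be approximated by:

\[ \mathbf {B}^{t + 1} \approx \left ( \left [ \begin {array}{ll} \mathbf {U}^{t} & \mathbf {Q}_{\mathrm {I}} \end {array} \right ] \mathbf {C} \mathbf {R}^{-1}_{c} \mathbf {U}_{\mathrm {{\Psi }}} \right ) \mathbf {{\Sigma }}_{\mathrm {{\Psi }}} \left ( \left [ \begin {array}{ll} \mathbf {V}^{t} & \mathbf {Q}_{\mathrm {{\Delta }}} \end {array} \right ] \mathbf {R} \mathbf {R}^{-1}_{r} \mathbf {V}_{\mathrm {{\Psi }}} \right )^{T} = \mathbf {U}^{t + 1} \mathbf {{\Sigma }}^{t + 1} {\mathbf {V}^{t + 1}}^{T} \]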

where \(\mathbf {U}^{t + 1} \mathbf {{\Sigma }}^{t + 1} {\mathbf {V}^{t + 1}}^{T}\) is the LSA of \(\mathbf {B}^{t + 1}\). Consequently, the LSA of \(\mathbf {B}^{t + 1}\) is specified by that of \(\mathbf {B}^{t}\) (\(\mathbf {U}^{t} \mathbf {{\Sigma }}^{t} {\mathbf {V}^{t}}^{T}\)), together with other matrices derived from \(\mathbf {U}^{t}\), \(\mathbf {{\Sigma }}^{t}\), \(\mathbf {V}^{t}\), and \(\mathbf {{\Delta }}\). With the LSA of \(\mathbf {B}^{t + 1}\), the evolving events can be summarized as described in Section 3.2.2. For more information about the derivation, please refer to [9] and [34].
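The second part of the derivation, the fast SVD of \(\mathbf {\widehat {{\Omega }}} = \mathbf {C} \mathbf {H} \mathbf {R}^{T}\) through the small matrix \(\mathbf {{\Psi }}\), can be illustrated with a short NumPy sketch. It is an illustration under stated assumptions rather than the algorithm of [9] or [34]: the function name `chr_svd` is hypothetical, the sampled column and row indices are supplied by the caller instead of being chosen by the sampling rule described above, and the sampled \(\mathbf {C}\) and \(\mathbf {R}\) are assumed to have full column rank.

```python
import numpy as np

def chr_svd(Omega, col_idx, row_idx):
    """Approximate SVD of Omega via Omega_hat = C H R^T (hypothetical helper)."""
    C = Omega[:, col_idx]        # sampled columns of Omega
    R = Omega[row_idx, :].T      # sampled rows of Omega, stored as columns
    _, R_c = np.linalg.qr(C)     # upper triangular factor of C
    _, R_r = np.linalg.qr(R)     # upper triangular factor of R
    M = C.T @ Omega @ R
    # Psi = R_c^{-T} (C^T Omega R) R_r^{-1}, using solves instead of explicit inverses
    Psi = np.linalg.solve(R_c.T, M)
    Psi = np.linalg.solve(R_r.T, Psi.T).T
    U_psi, s_psi, Vt_psi = np.linalg.svd(Psi, full_matrices=False)
    U_hat = C @ np.linalg.solve(R_c, U_psi)      # C R_c^{-1} U_Psi
    V_hat = R @ np.linalg.solve(R_r, Vt_psi.T)   # R R_r^{-1} V_Psi
    return U_hat, s_psi, V_hat

# Toy data (assumed for illustration only): an exactly rank-3 matrix,
# so three generic columns and rows already span its column and row spaces.
rng = np.random.default_rng(1)
Omega = rng.standard_normal((8, 3)) @ rng.standard_normal((3, 6))
U_hat, s_hat, V_hat = chr_svd(Omega, col_idx=[0, 2, 5], row_idx=[1, 4, 7])
Omega_hat = U_hat @ np.diag(s_hat) @ V_hat.T
```

Only the small matrix `Psi` (here 3 by 3) goes through a full SVD, which is where the speed-up described in the text comes from. For a matrix that is not exactly low rank, `Omega_hat` is only an approximation whose quality depends on which rows and columns are sampled.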


Cite this article

Xiong, Y., Zhou, X., Zhang, Y. et al. Cross the data desert: generating textual-visual summary on the evolutionary microblog stream. Multimed Tools Appl 78, 6409–6440 (2019). https://doi.org/10.1007/s11042-018-6297-6


DOI: https://doi.org/10.1007/s11042-018-6297-6