Abstract

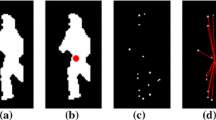

This paper addresses the problem of human action retrieval when the human body is represented as a 3D mesh. The proposed 3D mesh sequence descriptor is based on a set of trajectories of salient points of the human body: its centroid and its five protrusion ends. The descriptor extracted from these trajectories incorporates a set of significant features of human motion, such as velocity, total displacement from the initial position, and direction. As a distance measure, a variation of the Dynamic Time Warping (DTW) algorithm is applied, combined with a k-means-based method for fusing multiple distance matrices. The proposed method is fully unsupervised. Experimental evaluation was performed on two artificial datasets, one of which is being made publicly available by the authors. Experiments on these datasets show that the proposed scheme achieves retrieval performance beyond the state of the art.
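To make the ingredients named in the abstract concrete, the following is a minimal sketch and not the authors' implementation. It assumes a trajectory is a list of 3D positions, one per frame, and computes per-frame speed, displacement from the initial position, and unit direction. It then compares two feature sequences with the textbook DTW recurrence, not the paper's variation. The function names `trajectory_features` and `dtw_distance` are hypothetical, and the k-means-based fusion step is not covered.

```python
import math


def trajectory_features(points):
    """Per-frame features of one 3D trajectory (illustrative assumption).

    Returns, for every frame after the first, a vector
    [speed, displacement_from_start, dir_x, dir_y, dir_z].
    """
    start = points[0]
    feats = []
    for prev, cur in zip(points, points[1:]):
        step = [c - p for c, p in zip(cur, prev)]
        speed = math.sqrt(sum(s * s for s in step))  # distance moved per frame
        disp = math.dist(cur, start)                 # distance from initial position
        # Unit direction of motion; zero vector when the point did not move.
        direction = [s / speed for s in step] if speed > 0 else [0.0, 0.0, 0.0]
        feats.append([speed, disp, *direction])
    return feats


def dtw_distance(a, b):
    """Classic DTW between two sequences of equal-length feature vectors."""
    n, m = len(a), len(b)
    inf = float("inf")
    # cost[i][j] = minimal accumulated cost of aligning a[:i] with b[:j]
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            local = math.dist(a[i - 1], b[j - 1])  # Euclidean local cost
            cost[i][j] = local + min(
                cost[i - 1][j],      # advance in a only
                cost[i][j - 1],      # advance in b only
                cost[i - 1][j - 1],  # advance in both
            )
    return cost[n][m]


# Two hypothetical centroid trajectories: the second pauses for one frame.
walk = [(0, 0, 0), (1, 0, 0), (2, 0, 0), (3, 0, 0)]
paused = [(0, 0, 0), (1, 0, 0), (1, 0, 0), (2, 0, 0), (3, 0, 0)]
same = dtw_distance(trajectory_features(walk), trajectory_features(walk))
diff = dtw_distance(trajectory_features(walk), trajectory_features(paused))
```

In a full pipeline along these lines, one such distance would be computed per salient point and per feature, producing several distance matrices that then need to be fused; how the paper performs that fusion is not specified in the abstract beyond being k-means-based.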

Cite this article

Veinidis, C., Pratikakis, I. & Theoharis, T. Unsupervised human action retrieval using salient points in 3D mesh sequences. Multimed Tools Appl 78, 2789–2814 (2019). https://doi.org/10.1007/s11042-018-5855-2

DOI: https://doi.org/10.1007/s11042-018-5855-2