Abstract

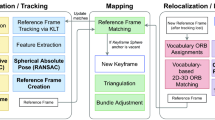

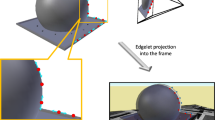

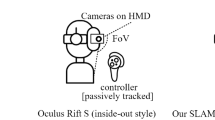

This paper addresses the challenging problem of markerless tracking for Augmented Reality. It proposes a real-time camera localization method for a partially known environment, i.e. one for which a geometric 3D model of a single static object in the scene is available. We propose to exploit this geometric model to improve the localization of keyframe-based SLAM by constraining its local bundle adjustment process with this additional information. We demonstrate the advantages of this solution, called constrained SLAM, on both synthetic and real data, and present convincing real-time augmentations of 3D objects. Using this tracker, we also propose an interactive augmented reality system for training applications. This system, based on an Optical See-Through Head Mounted Display, augments the user's field of view with virtual information accurately co-registered with the real world. To take full advantage of this hands-free device, the system combines the tracker module with a simple vision-based user-interaction module that provides overlaid information in response to user requests.
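The idea of constraining bundle adjustment with a known geometric model can be illustrated by a cost that adds a model term to the usual reprojection error. The sketch below is an illustrative assumption, not the formulation used in this paper: it takes a single planar face (n, d) of the known object as the model, a unit-focal pinhole camera, and a hypothetical trade-off factor `weight`; all names are hypothetical.

```python
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = Tuple[Vec3, Vec3, Vec3]
Camera = Tuple[Mat3, Vec3]  # rotation R and translation t, with x_cam = R X + t
Observation = Tuple[int, int, Tuple[float, float]]  # (camera index, point index, (u, v))


def project(R: Mat3, t: Vec3, X: Vec3) -> Tuple[float, float]:
    """Project world point X with a unit-focal pinhole camera (assumed intrinsics)."""
    xc = [sum(R[i][j] * X[j] for j in range(3)) + t[i] for i in range(3)]
    return (xc[0] / xc[2], xc[1] / xc[2])


def point_to_plane(X: Vec3, n: Vec3, d: float) -> float:
    """Signed distance from X to the plane n.X + d = 0; n is assumed to be unit length."""
    return sum(n[i] * X[i] for i in range(3)) + d


def constrained_cost(cameras: Sequence[Camera], points: Sequence[Vec3],
                     observations: Sequence[Observation], model_points: Sequence[int],
                     plane: Tuple[Vec3, float], weight: float) -> float:
    """Squared reprojection error of all observations plus a weighted penalty
    pulling the points associated with the known object onto its planar face."""
    reprojection = 0.0
    for ci, pi, (u, v) in observations:
        R, t = cameras[ci]
        pu, pv = project(R, t, points[pi])
        reprojection += (pu - u) ** 2 + (pv - v) ** 2
    n, d = plane
    model_term = sum(point_to_plane(points[i], n, d) ** 2 for i in model_points)
    return reprojection + weight * model_term
```

A local bundle adjustment would then minimize such a cost over the poses and points of the current window, for instance with Levenberg-Marquardt; the weighted-penalty form is only one way to inject model knowledge.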

Notes

In [17], a global bundle adjustment is also performed in a dedicated thread.

T = 3 in [33]
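Assuming T denotes the number of most recent keyframes whose poses are refined by the local bundle adjustment (the text defining T is not part of this excerpt), the windowing can be sketched as follows. The second parameter N, the total number of recent keyframes whose observations enter the cost, and the function name are hypothetical.

```python
from typing import List, Sequence, Tuple


def local_ba_window(keyframe_ids: Sequence[int], T: int = 3,
                    N: int = 10) -> Tuple[List[int], List[int]]:
    """Split keyframes into those whose poses are optimized and those kept fixed.

    T: number of most recent keyframes refined (T = 3 as in the note).
    N: hypothetical total number of recent keyframes whose observations are used.
    """
    if T < 1 or N < T:
        raise ValueError("expected 1 <= T <= N")
    recent = list(keyframe_ids)[-N:]
    optimized = recent[-T:]
    fixed = recent[:-T]  # contribute reprojection errors, but their poses stay constant
    return optimized, fixed
```

With twelve keyframes numbered 0 to 11, the defaults optimize keyframes 9, 10 and 11 and keep 2 to 8 fixed. The 3D points observed in the optimized keyframes would usually be refined as well; that part is omitted here.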

Note that, owing to a parallel implementation with separate mapping and tracking threads, a global bundle adjustment is also performed in [17] while keeping real-time performance.

For the model constraints, i.e. those on the known part of the environment, LC stands for Line Constraints and PC for Planar Constraints; E means that the multi-view relationships of the unknown part of the environment are taken into account.
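Line and planar model constraints boil down to distances between reconstructed 3D points and known model primitives. A minimal sketch, assuming a model plane is given as (n, d) with n·X + d = 0 and a model line by two 3D endpoints; the helper names are hypothetical, and this is not necessarily how the paper parameterizes its LC and PC constraints.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def _norm(a: Vec3) -> float:
    return math.sqrt(_dot(a, a))


def plane_residual(X: Vec3, n: Vec3, d: float) -> float:
    """PC-style residual: signed distance from X to the model plane n.X + d = 0."""
    return (_dot(n, X) + d) / _norm(n)


def line_residual(X: Vec3, A: Vec3, B: Vec3) -> float:
    """LC-style residual: distance from X to the model line through endpoints A and B."""
    AB = _sub(B, A)
    return _norm(_cross(AB, _sub(X, A))) / _norm(AB)
```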

The plane equation z = 0 can be refined by selecting the surrounding point cloud and using a plane fitting algorithm.
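The note does not specify which plane-fitting algorithm is used. As one possible choice, the sketch below fits z = a·x + b·y + c by ordinary least squares over the selected points, solving the 3×3 normal equations with Cramer's rule. It assumes the plane is far from vertical, which holds when refining z = 0; a RANSAC or SVD-based total least-squares fit would be more robust to outliers. The function name is hypothetical.

```python
from typing import Iterable, List, Tuple

Vec3 = Tuple[float, float, float]


def _det3(m: List[List[float]]) -> float:
    """Determinant of a 3x3 matrix by cofactor expansion along the first row."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def fit_plane_z(points: Iterable[Vec3]) -> Tuple[float, float, float]:
    """Ordinary least-squares fit of z = a*x + b*y + c; returns (a, b, c)."""
    sxx = sxy = sx = syy = sy = sxz = syz = sz = 0.0
    count = 0
    for x, y, z in points:
        sxx += x * x
        sxy += x * y
        sx += x
        syy += y * y
        sy += y
        sxz += x * z
        syz += y * z
        sz += z
        count += 1
    # Normal equations A [a, b, c]^T = rhs of the least-squares problem.
    A = [[sxx, sxy, sx], [sxy, syy, sy], [sx, sy, float(count)]]
    rhs = [sxz, syz, sz]
    D = _det3(A)
    if abs(D) < 1e-12:
        raise ValueError("degenerate point set (fewer than 3 points or collinear in x, y)")
    solution = []
    for k in range(3):
        # Cramer's rule: replace column k by the right-hand side.
        Ak = [row[:] for row in A]
        for i in range(3):
            Ak[i][k] = rhs[i]
        solution.append(_det3(Ak) / D)
    return solution[0], solution[1], solution[2]
```

The refined plane is then a·x + b·y - z + c = 0, whose normal (a, b, -1) can be normalized to replace the initial z = 0 assumption.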

References

Bartoli A, Sturm P (2003) Constrained structure and motion from multiple uncalibrated views of a piecewise planar scene. Int J Comput Vis 52(1):45–64

Benhimane S, Malis E (2006) Integration of euclidean constraints in template based visual tracking of piecewise-planar scenes. In: International Conference on Intelligent Robots and Systems, IROS

Bleser G, Wuest H, Stricker D (2006) Online camera pose estimation in partially known and dynamic scenes. In: International Symposium on Mixed and Augmented Reality, ISMAR

Caron G, Dame A, Marchand E (2014) Direct model-based visual tracking and pose estimation using mutual information. Image Vis Comput 32(1):54–63

Chun WH, Höllerer T (2013) Real-time hand interaction for augmented reality on mobile phones. In: Proceedings of the 2013 International Conference on Intelligent User Interfaces, IUI ’13, NY, USA, pp 307–314

Comport AI, Marchand E, Chaumette F (2003) A real-time tracker for markerless augmented reality. In: International Symposium on Mixed and Augmented Reality, ISMAR

Davison AJ, Reid ID, Molton ND, Stasse O (2007) MonoSLAM: Real-time single camera SLAM. Trans Pattern Anal Mach Intell 29(6):1052–1067

Drummond T, Cipolla R (2002) Real-time visual tracking of complex structures. Trans Pattern Anal Mach Intell 24(7):932–946

Engels C, Stewénius H, Nistér D (2006) Bundle adjustment rules. In: Photogrammetric Computer Vision (PCV), ISPRS

Farenzena M, Bartoli A, Mezouar Y (2008) Efficient camera smoothing in sequential structure-from-motion using approximate cross-validation. In: European Conference on Computer Vision, ECCV

Gay-Bellile V, Lothe P, Bourgeois S, Royer E, Naudet-Collette S (2010) Augmented reality in large environments: Application to aided navigation in urban context. In: International Symposium on Mixed and Augmented Reality, ISMAR

Gay-Bellile V, Tamaazousti M, Dupont R, Naudet-Collette S (2010) A vision-based hybrid system for real-time accurate localization in an indoor environment. In: International Conference on Computer Vision Theory and Applications, VISAPP

Harris C (1992) Tracking with rigid models. In: Active Vision, MIT Press

Harrison C, Benko H, Wilson AD (2011) OmniTouch: Wearable multitouch interaction everywhere. In: Proceedings of the 24th Annual ACM Symposium on User Interface Software and Technology, UIST ’11, pp 441–450

Hürst W, van Wezel C (2013) Gesture-based interaction via finger tracking for mobile augmented reality. Multimed Tools Appl 62(1):233–258

Kempter T, Wendel A, Bischof H (2012) Online model-based multi-scale pose estimation. In: Computer Vision Winter Workshop, CVWW

Klein G, Murray D (2007) Parallel tracking and mapping for small AR workspaces. In: International Symposium on Mixed and Augmented Reality, ISMAR

Klein G, Murray D (2008) Improving the agility of keyframe-based slam. In: European Conference on Computer Vision, ECCV

Kurz D (2014) Thermal touch: Thermography-enabled everywhere touch interfaces for mobile augmented reality applications. In: International Symposium on Mixed and Augmented Reality, ISMAR, pp 9–16

Kurz D, Hantsch F, Grobe M, Schiewe A, Bimber O (2007) Laser pointer tracking in projector-augmented architectural environments. ISMAR, pp 1–8

Kyrki V, Kragic D (2011) Tracking rigid objects using integration of model-based and model-free cues. Mach Vis Appl 22(2):323–335

Ladikos A, Benhimane S, Navab N (2007) A real-time tracking system combining template-based and feature-based approaches. In: International Conference on Computer Vision Theory and Applications, VISAPP

Lepetit V, Fua P (2005) Monocular model-based 3d tracking of rigid objects: A survey. Found Trends Comput Graph Vis 1(1):1–89

Lepetit V, Fua P (2006) Keypoint recognition using randomized trees. Trans Pattern Anal Mach Intell 28(9):1465–1479

Lothe P, Bourgeois S, Dekeyser F, Royer E, Dhome M (2009) Towards geographical referencing of monocular slam reconstruction using 3d city models: Application to real-time accurate vision-based localization. In: Computer Vision and Pattern Recognition, CVPR

Lothe P, Bourgeois S, Royer E, Dhome M, Naudet-Collette S (2010) Real-time vehicle global localisation with a single camera in dense urban areas: Exploitation of coarse 3d city models. In: Computer Vision and Pattern Recognition, CVPR

Lourakis MIA, Argyros AA (2005) Is Levenberg-Marquardt the most efficient optimization algorithm for implementing bundle adjustment? In: International Conference on Computer Vision, ICCV

Lowe DG (1987) Three-dimensional object recognition from single two-dimensional images. Artif Intell 31(3):355–395

Lowe DG (2004) Distinctive image features from scale-invariant keypoints. Int J Comput Vis 60(2):91–110

Marchand E, Bouthemy P, Chaumette F, Moreau V (1999) Robust real-time visual tracking using a 2d-3d model-based approach. In: International Conference on Computer Vision, ICCV

Marchand E, Chaumette F (2002) Virtual visual servoing: a framework for real-time augmented reality. Comput Graph Forum 21(3):289–298

Marquardt D (1963) An algorithm for least-squares estimation of nonlinear parameters. J Soc Ind Appl Math 11(1):431–444

Mouragnon E, Lhuillier M, Dhome M, Dekeyser F, Sayd P (2006) Real time localization and 3d reconstruction. In: Conference on Computer Vision and Pattern Recognition, CVPR

Newcombe RA, Davison AJ, Izadi S, Kohli P, Hilliges O, Shotton J, Molyneaux D, Hodges S, Kim D, Fitzgibbon A (2011) KinectFusion: Real-time dense surface mapping and tracking. In: IEEE International Symposium on Mixed and Augmented Reality, ISMAR

Newcombe RA, Lovegrove S, Davison AJ (2011) DTAM: Dense tracking and mapping in real-time. In: International Conference on Computer Vision, ICCV

Nistér D, Naroditsky O, Bergen J (2004) Visual odometry. In: Computer Vision and Pattern Recognition, CVPR

Petit A, Marchand E, Kanani K (2012) Tracking complex targets for space rendezvous and debris removal applications. In: International Conference on Intelligent Robots and Systems, IROS

Platonov J, Heibel H, Meier P, Grollmann B (2006) A mobile markerless ar system for maintenance and repair. In: International Symposium on Mixed and Augmented Reality, ISMAR

Rothganger F, Lazebnik S, Schmid C, Ponce J (2006) 3d object modeling and recognition using local affine-invariant image descriptors and multi-view spatial constraints. Int J Comput Vis 66(3):231–259

Royer E, Lhuillier M, Dhome M, Chateau T (2005) Localization in urban environments: Monocular vision compared to a differential gps sensor. In: IEEE Conference on Computer Vision and Pattern Recognition, CVPR

Simon G (2011) Tracking-by-synthesis using point features and pyramidal blurring. In: International Symposium on Mixed and Augmented Reality, ISMAR

Simon G, Berger M-O (2002) Pose estimation for planar structures. Comput Graph Appl 22(6):46–53

Snavely N, Seitz SM, Szeliski R (2008) Modeling the world from internet photo collections. Int J Comput Vis 80(2):189–210

Sourimant G, Morin L, Bouatouch K (2007) Gps, gis and video fusion for urban modeling. In: Computer Graphics International Conference, CGI

Strasdat H, Montiel JMM, Davison AJ (2010) Real-time monocular SLAM: Why filter? In: International Conference on Robotics and Automation, ICRA

Sturm P (2000) Algorithms for plane-based pose estimation. In: Computer Vision and Pattern Recognition, CVPR, pp 1706–1711

Szeliski R (2006) Image alignment and stitching: a tutorial. Found Trends Comput Graph Vis 2(1):1–104

Tamaazousti M, Gay-Bellile V, Naudet-Collette S, Bourgeois S, Dhome M (2011) Nonlinear refinement of structure from motion reconstruction by taking advantage of a partial knowledge of the environment. In: Computer Vision and Pattern Recognition, CVPR

Tamaazousti M, Gay-Bellile V, Naudet-Collette S, Bourgeois S, Dhome M (2011) Real-time accurate localization in a partially known environment: Application to augmented reality on 3d objects. In: International workshop on AR/MR registration, tracking and benchmarking, ISMAR Workshop, TrakMark

Triggs B, McLauchlan PF, Hartley RI, Fitzgibbon AW (2000) Bundle adjustment - a modern synthesis. In: International Workshop on Vision Algorithms Theory and Practice, ICCVW

Vacchetti L, Lepetit V, Fua P (2004) Stable real-time 3d tracking using online and offline information. Trans Pattern Anal Mach Intell 26(10):1385–1392

Vacchetti L, Lepetit V, Fua P (2004) Combining edge and texture information for real-time accurate 3d camera tracking. In: International Symposium on Mixed and Augmented Reality, ISMAR

Wagner D, Reitmayr G, Mulloni A, Drummond T, Schmalstieg D (2010) Real-time detection and tracking for augmented reality on mobile phones. Trans Vis Comput Graph 16(3):355–368

Whelan T, Johannsson H, Kaess M, Leonard JJ, McDonald JB (2013) Robust real-time visual odometry for dense RGB-D mapping. In: International Conference on Robotics and Automation, ICRA

Whelan T, Kaess M, Fallon MF, Johannsson H, Leonard JJ, McDonald JB (2012) Kintinuous: Spatially extended KinectFusion. In: RSS Workshop on RGB-D: Advanced Reasoning with Depth Cameras

Wuest H, Stricker D, Herder J (2007) Tracking of industrial objects by using cad models. J Virtual Real Broadcast 4(1)

Wuest H, Vial F, Stricker D (2005) Adaptive line tracking with multiple hypotheses for augmented reality. In: International Symposium on Mixed and Augmented Reality, ISMAR

Wuest H, Wientapper F, Stricker D (2007) Adaptable model-based tracking using analysis-by-synthesis techniques. In: Computer Analysis of Images and Patterns, CAIP

Acknowledgments

We thank Laster Technologies for providing the glasses prototype.

Cite this article

Tamaazousti, M., Naudet-Collette, S., Gay-Bellile, V. et al. The constrained SLAM framework for non-instrumented augmented reality. Multimed Tools Appl 75, 9511–9547 (2016). https://doi.org/10.1007/s11042-015-2968-8

DOI: https://doi.org/10.1007/s11042-015-2968-8