Abstract

Automatic indexing of images and videos is an important research area in multimedia information retrieval, and the difficulty of the task is well established. In the past, most research efforts focused on detecting single concepts in images and videos, which is already a hard task. As information retrieval systems evolve, users’ needs become more abstract and their queries contain more words. To help retrieval systems answer such complex queries, multimedia documents should be indexed with more than individual concepts. Few studies have specifically addressed the detection of multiple concepts (multi-concept) in images and videos, and most of them concern concept pairs. These studies showed that this challenge is even greater than single-concept detection. In this work, we address multi-concept detection in images and videos through a detailed comparative study. Three types of approaches are considered: 1) building detectors for multi-concepts directly, 2) fusing single-concept detectors, and 3) exploiting the detectors of a set of single concepts in a stacking scheme. We conducted our evaluations on the PASCAL VOC’12 collection for the detection of pairs and triplets of concepts, and extended the evaluation to the TRECVid 2013 dataset for the detection of infrequent concept pairs. Our results show that the three types of approaches give globally comparable results for images, but differ for specific kinds of pairs and triplets. For videos, late fusion of detectors seems to be more effective and efficient when the single-concept detectors perform well. Otherwise, directly building bi-concept detectors remains the best alternative, especially if a well-annotated dataset is available. The third approach did not bring any additional gain or efficiency.
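To make the second type of approach concrete, the following is a minimal sketch of late fusion of single-concept detector scores into a multi-concept score. The abstract does not state which fusion operators were compared, so the three operators here (product, minimum, arithmetic mean), the function names `fuse_scores` and `rank_for_concepts`, and the toy scores are all illustrative assumptions rather than the authors' method.

```python
import math


def fuse_scores(scores, method="product"):
    """Combine per-concept detection scores (each assumed to lie in [0, 1])
    into a single multi-concept score. The operators are illustrative choices."""
    if not scores:
        raise ValueError("need at least one score")
    if method == "product":
        return math.prod(scores)          # high only if every concept scores high
    if method == "min":
        return min(scores)                # limited by the weakest concept
    if method == "mean":
        return sum(scores) / len(scores)  # tolerant of one weak concept
    raise ValueError(f"unknown fusion method: {method}")


def rank_for_concepts(detections, concepts, method="product"):
    """Rank document ids by the fused score of the requested concepts.

    `detections` maps a document id to a dict of single-concept scores.
    """
    fused = {
        doc_id: fuse_scores([scores[c] for c in concepts], method)
        for doc_id, scores in detections.items()
    }
    return sorted(fused, key=fused.get, reverse=True)


# Hypothetical single-concept detector outputs for three images.
detections = {
    "img1": {"dog": 0.9, "car": 0.2},
    "img2": {"dog": 0.6, "car": 0.7},
    "img3": {"dog": 0.1, "car": 0.95},
}

ranking = rank_for_concepts(detections, ["dog", "car"], method="product")
print(ranking)
```

With these toy scores, the image where both concepts score moderately well ranks above the images where only one concept scores highly, which is the behavior expected when searching for a concept pair. The same function handles triplets by passing three concept names.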

References

Aly R, Hiemstra D, de Vries A, de Jong F (2008) A probabilistic ranking framework using unobservable binary events for video search. In: 7th ACM international conference on content-based image and video retrieval, CIVR 2008, pp 349–358. ACM, New York, NY, USA

Ayache S, Quénot G (2008) Video corpus annotation using active learning. In: Proceedings of the IR research, ECIR’08, pp 187–198. Springer-Verlag, Berlin, Heidelberg

Ballas N, Labbé B, Shabou A, Le Borgne H, Gosselin P, Redi M, Merialdo B, Jégou H, Delhumeau J, Vieux R, Mansencal B, Benois-Pineau J, Ayache S, Hamadi A, Safadi B, Thollard F, Derbas N, Quénot G, Bredin H, Cord M, Gao B, Zhu C, tang Y, Dellandrea E, Bichot CE, Chen L, Benot A, Lambert P, Strat T, Razik J, Paris S, Glotin H, Ngoc Trung T, Petrovska Delacrétaz D, Chollet G, Stoian A, Crucianu M (2012) IRIM at TRECVID 2012: semantic indexing and instance search. In: Proc. TRECVID Workshop. Gaithersburg, MD, USA

Brown L, Cao L, Chang SF, Cheng Y, Choudhary A, Codella N, Cotton C, Ellis D, Fan Q, Feris R, Gong L, Hill M, Hua G, Kender J, Merler M, Mu Y, Pankanti S, Smith JR, Yu FX (2013) Ibm research and columbia university trecvid-2013 multimedia event detection (med), multimedia event recounting (mer), surveillance event detection (sed), and semantic indexing (sin) systems. In: Proc. TRECVID Workshop. Gaithersburg, MD, USA

Chang SF, Hsu W, Jiang W, Kennedy L, Xu D, Yanagawa A, Zavesky E (2006) Columbia university trecvid-2006 video search and high-level feature extraction. in proc. trecvid workshop. In: Proc. TRECVID Workshop

Chen SC, Shyu ML, Chen M (2008) An effective multi-concept classifier for video streams. In: 2008 IEEE international conference on semantic computing, pp 80–87, doi:10.1109/ICSC.2008.72, (to appear in print)

Hamadi A, Mulhem P, Quenot G (2013) Conceptual feedback for semantic multimedia indexing. In: 2013 11th international workshop on content-based multimedia indexing (CBMI), pp 53–58, doi:10.1109/CBMI.2013.6576552, (to appear in print)

Hamadi A, Mulhem P, Qunot G (2014) Extended conceptual feedback for semantic multimedia indexing. Multimedia Tools and Applications pp 1–24

Hamadi A, Safadi B, Vuong TTT, Han D, Derbas N, Mulhem P, Qunot G. (2013) Quaero at TRECVID 2013: Semantic Indexing and Instance Search. In: Proc. TRECVID Workshop. Gaithersburg, MD, USA

Ishikawa S, Koskela M, Sjoberg M, Laaksonen J, Oja E, Amid E, Palomaki K, Mesaros A, Kurimo M (2013) Picsom experiments in trecvid 2013. In: Proc. TRECVID Workshop. Gaithersburg, MD, USA

Jiang W (2010) Advanced techniques for semantic concept detection in general videos. Ph.D. thesis, Columbia University

Li X, Snoek CGM, Worring M, Smeulders A (2012) Harvesting social images for bi-concept search. IEEE Trans Multimedia 14(4):1091–1104

Li X, Wang D, Li J, Zhang B (2007) Video search in concept subspace: A text-like paradigm. In: Proc. of CIVR

Platt J (2000) Probabilistic outputs for support vector machines and comparison to regularize likelihood methods. In: Advances in Large Margin Classifiers, pp 61–74

Qi GJ, Hua XS, Rui Y, Tang J, Mei T, Zhang HJ, Prasad AR (2007) Correlative multi-label video annotation. In: Lienhart R, Hanjalic A, Choi S, Bailey BP, Sebe N (eds) Proceedings of the 15th international conference on multimedia 2007, Augsburg, Germany, September 24-29, 2007. ACM, pp 17–26. doi:10.1145/1291233.1291245

Safadi B, Quénot G (2010) Evaluations of multi-learner approaches for concept indexing in video documents. In: RIAO, pp 88–91

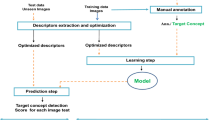

Safadi B, Qunot G (2013) Descriptor Optimization for Multimedia Indexing and Retrieval. In: CBMI 2013, 11th international workshop on content-based multimedia indexing. Veszprem, HUNGARY

Salton G, Fox EA, Wu H (1983) Extended boolean information retrieval. Commun ACM 26(11):1022–1036. doi:10.1145/182.358466

Salton G, Fox EA, Wu H (1983) Extended boolean information retrieval. Commun ACM 26(11):1022–1036

Smith JR, Naphade M, Natsev A (2003) Multimedia semantic indexing using model vectors. In: Proceedings of ICME - Volume 1, pp 445–448. IEEE Computer Society, Washington, DC, USA. http://dl.acm.org/citation.cfm?id=1153922.1154410

Snoek CG, Huurnink B, Hollink L, de Rijke M, Schreiber G, Worring M (2007) Adding semantics to detectors for video retrieval. Trans Multi 9(5):975–986

Wang G, Forsyth DA (2009) Joint learning of visual attributes, object classes and visual saliency. In: ICCV 09, pp 537–544

Wei XY, Jiang YG, Ngo CW (2011) Concept-driven multi-modality fusion for video search. IEEE Trans Circuits Syst Video Technol 21(1):62–73

Weng MF, Chuang YY Multi-cue fusion for semantic video indexing. In: Proceeding of the 16th ACM international conference on multimedia, MM 08, pp 71-80, New York, NY, USA, 2008. ACM. ACM ID : 1459370

Wolpert DH (1992) Stacked generalization. Neural Netw 5:241–259

Xie L, Yan R, Yang J (2008) Multi-concept learning with large-scale multimedia lexicons. In: 15th IEEE international conference on image processing, ICIP 2008, pp 2148–2151, doi:10.1109/ICIP.2008.4712213, (to appear in print)

Yan R, Hauptmann AG (2003) The combination limit in multimedia retrieval. In: Proceedings of the eleventh ACM international conference on multimedia, pp 339–342

Zadeh LA (1965) Fuzzy sets. Inf Control 8:338–353

Acknowledgments

This work was carried out in part within the Quaero Program, funded by OSEO, the French State agency for innovation. This work was supported in part by the French project VideoSense ANR-09-CORD-026 of the ANR. Experiments presented in this paper were carried out using the Grid’5000 experimental test bed, being developed under the INRIA ALADDIN development action with support from CNRS, RENATER and several universities as well as other funding bodies (see https://www.grid5000.fr). The authors wish to thank the participants of the IRIM (Indexation et Recherche d’Information Multimédia) group of the GDR-ISIS research network from CNRS for providing the descriptors used in these experiments.

Cite this article

Hamadi, A., Mulhem, P. & Quénot, G. A comparative study for multiple visual concepts detection in images and videos. Multimed Tools Appl 75, 8973–8997 (2016). https://doi.org/10.1007/s11042-015-2730-2

DOI: https://doi.org/10.1007/s11042-015-2730-2