Abstract

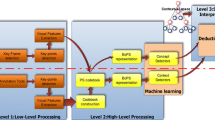

In this paper, we consider the problem of automatically detecting a large number of visual concepts in images or video shots. State-of-the-art systems generally involve feature (descriptor) extraction, classification (supervised learning), and fusion when several descriptors and/or classifiers are used. Although direct multi-label approaches are considered in some works, detection scores are often computed independently for each target concept. We propose a method, which we call “conceptual feedback”, that implicitly takes into account the relations between concepts to improve overall concept detection performance. A conceptual descriptor is built from the system’s output scores and fed back by adding it to the pool of already available descriptors. This process can be iterated several times. Moreover, we propose three extensions of our method. First, the conceptual dimensions are weighted to give more importance to concepts that are more correlated with the target concept. Second, an explicit selection of a set of concepts that are semantically or statistically related to the target concept is introduced. Third, for video indexing, we integrate the temporal dimension into the feedback process by taking the conceptual and temporal dimensions into account simultaneously when building the high-level descriptor. Our proposals have been evaluated in the context of the TRECVid 2012 semantic indexing task, which involves the detection of 346 visual or multimodal concepts. Overall, combined with temporal re-scoring, the proposed method increased the global system performance (MAP) from 0.2613 to 0.3082 (+17.9% relative improvement), while temporal re-scoring alone increased it only from 0.2613 to 0.2691 (+3.0%).
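The abstract describes the conceptual descriptor only at a high level. The following Python sketch shows one plausible reading under stated assumptions: the descriptor for a shot is the vector of output scores for all concepts; the weights of the first extension are absolute Pearson correlations with the target concept, computed on some reference matrix (for example, training annotations); and the statistical selection of the second extension keeps the `top_k` most correlated concepts. All function names are hypothetical. Semantic selection and the retraining of classifiers on the enlarged descriptor pool are not shown.

```python
import math
from typing import List, Optional, Sequence

Matrix = List[List[float]]  # rows = shots, columns = concepts


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation of two equal-length vectors (0.0 if either is constant)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    if vx == 0.0 or vy == 0.0:
        return 0.0
    return cov / math.sqrt(vx * vy)


def concept_weights(reference: Matrix, target: int) -> List[float]:
    """Weight of each concept = |correlation| of its column with the target column.

    Assumption: `reference` is a shots x concepts matrix from training data
    (annotations or scores); the paper's exact weighting may differ.
    """
    columns = list(zip(*reference))
    return [abs(pearson(col, columns[target])) for col in columns]


def select_concepts(weights: Sequence[float], top_k: int) -> List[int]:
    """Assumed statistical selection: indices of the top_k most correlated
    concepts, returned in original column order."""
    ranked = sorted(range(len(weights)), key=lambda j: weights[j], reverse=True)
    return sorted(ranked[:top_k])


def conceptual_descriptor(
    scores: Matrix,
    weights: Optional[Sequence[float]] = None,
    selected: Optional[Sequence[int]] = None,
) -> Matrix:
    """Build a conceptual descriptor from the system's output scores.

    Base method: each shot's vector of scores over all concepts.
    Extension 1 (weighting): multiply each dimension by its weight.
    Extension 2 (selection): keep only the selected concept dimensions.
    """
    n_concepts = len(scores[0])
    dims = list(selected) if selected is not None else list(range(n_concepts))
    w = list(weights) if weights is not None else [1.0] * n_concepts
    return [[row[j] * w[j] for j in dims] for row in scores]
```

In a feedback loop, the returned matrix would be added to the descriptor pool, the classifiers retrained on the enlarged pool, and their new scores passed back in for a further iteration.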
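The abstract does not specify how the temporal and conceptual dimensions are combined in the third extension. A minimal sketch, assuming the simplest option: for each shot, concatenate the concept-score vectors of the shots in a symmetric window around it within the same video, repeating the first or last shot at the boundaries. The function name `temporal_conceptual_descriptor` and the `half_window` parameter are hypothetical.

```python
from typing import List

Matrix = List[List[float]]  # rows = consecutive shots of one video, columns = concepts


def temporal_conceptual_descriptor(scores: Matrix, half_window: int = 1) -> Matrix:
    """Assumed construction: for shot i, concatenate the concept-score vectors of
    shots i - half_window .. i + half_window, clamping indices at the video ends."""
    n = len(scores)
    out: Matrix = []
    for i in range(n):
        row: List[float] = []
        for offset in range(-half_window, half_window + 1):
            j = min(max(i + offset, 0), n - 1)  # clamp to the first/last shot
            row.extend(scores[j])
        out.append(row)
    return out
```

Under this assumption, each descriptor has (2 × `half_window` + 1) × (number of concepts) dimensions; with the 346 TRECVid 2012 concepts and `half_window=1`, that is 1038 dimensions.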
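The relative improvements quoted in the abstract follow directly from the reported MAP values:

$$
\frac{0.3082 - 0.2613}{0.2613} = \frac{0.0469}{0.2613} \approx 0.179 \;(+17.9\%), \qquad
\frac{0.2691 - 0.2613}{0.2613} = \frac{0.0078}{0.2613} \approx 0.030 \;(+3.0\%).
$$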



Acknowledgments

This work was partly realized as part of the Quaero Program funded by OSEO, the French State agency for innovation. This work was supported in part by the French project VideoSense ANR-09-CORD-026 of the ANR. Experiments presented in this paper were carried out using the Grid’5000 experimental test bed, being developed under the INRIA ALADDIN development action with support from CNRS, RENATER and several universities as well as other funding bodies (see https://www.grid5000.fr). The authors wish to thank the participants of the IRIM (Indexation et Recherche d’Information Multimédia) group of the GDR-ISIS research network from CNRS for providing the descriptors used in these experiments.


About this article

Cite this article

Hamadi, A., Mulhem, P. & Quénot, G. Extended conceptual feedback for semantic multimedia indexing. Multimed Tools Appl 74, 1225–1248 (2015). https://doi.org/10.1007/s11042-014-1937-y


DOI: https://doi.org/10.1007/s11042-014-1937-y