Abstract

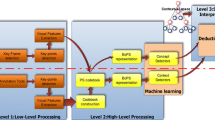

The rapidly increasing quantity of publicly available videos has driven research into automatic tools for indexing, rating, searching and retrieval. Textual semantic representations, such as tagging, labelling and annotation, are often central to video indexing because they express a video's semantics in a user-friendly form suited to search and retrieval. Ideally, such annotation should reflect the way humans perceive and describe videos. The difference between the low-level visual content and the corresponding human perception is referred to as the ‘semantic gap’. Bridging this gap is even harder for unconstrained videos, mainly because no prior information about the analyzed video is available on the one hand, and a huge amount of generic knowledge is required on the other. This paper introduces a framework for the Automatic Semantic Annotation of unconstrained videos. The proposed framework uses two non-domain-specific layers: low-level visual similarity matching, and an annotation analysis that employs commonsense knowledgebases. A commonsense ontology is created by incorporating multiple-structured semantic relationships. Experiments and black-box tests are carried out on standard video databases for action recognition and video information retrieval. White-box tests examine the performance of the individual intermediate layers of the framework. The evaluation of the results and the statistical analysis show that integrating visual similarity matching with commonsense semantic relationships provides an effective approach to automated video annotation.
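The two layers named in the abstract can be illustrated with a toy sketch. It is not the authors' implementation: the cosine similarity over fixed-length feature vectors, the k-nearest-neighbour tag voting, the relation triples and the additive re-ranking weight are all assumptions chosen for illustration, and `visual_candidates`, `build_ontology` and `commonsense_rerank` are hypothetical names. The sketch only shows the general idea of proposing tags from visually similar annotated videos and then re-scoring them with commonsense relationships merged from more than one source.

```python
import math
from collections import Counter, defaultdict


def cosine(a, b):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def visual_candidates(query, database, k=2):
    """Layer 1 (hypothetical): collect tags from the k most visually similar
    annotated videos, weighting each tag by that video's similarity score."""
    ranked = sorted(database, key=lambda item: cosine(query, item[0]), reverse=True)
    scores = Counter()
    for features, tags in ranked[:k]:
        similarity = cosine(query, features)
        for tag in tags:
            scores[tag] += similarity
    return scores


def build_ontology(*sources):
    """Merge several lists of (concept, relation, concept) triples into one
    undirected concept graph; the relation label is ignored in this sketch."""
    graph = defaultdict(set)
    for triples in sources:
        for head, _relation, tail in triples:
            graph[head].add(tail)
            graph[tail].add(head)
    return graph


def commonsense_rerank(scores, ontology, weight=0.5):
    """Layer 2 (hypothetical): boost each candidate tag by the scores of the
    other candidates it is related to in the commonsense graph."""
    reranked = Counter()
    for tag, score in scores.items():
        support = sum(scores[other] for other in ontology.get(tag, ()) if other in scores)
        reranked[tag] = score + weight * support
    return reranked


# Toy data: made-up feature vectors and tags, not from any real dataset.
database = [
    ([1.0, 0.0, 0.2], ["horse", "riding", "field"]),
    ([0.9, 0.1, 0.3], ["horse", "jumping"]),
    ([0.0, 1.0, 0.0], ["swimming", "pool"]),
]
ontology = build_ontology(
    [("horse", "IsA", "animal")],                # taxonomy-style source
    [("riding", "RelatedTo", "horse"),           # commonsense-style source
     ("horse", "CapableOf", "jumping"),
     ("pool", "UsedFor", "swimming")],
)
query = [1.0, 0.05, 0.25]
reranked = commonsense_rerank(visual_candidates(query, database), ontology)
labels = [tag for tag, _ in reranked.most_common()]
print(labels)  # ['horse', 'riding', 'jumping', 'field']
```

In this toy run, "field" has no commonsense link to the other candidates, so it falls below "jumping" despite a slightly higher visual score, while "swimming" never appears because its video is not among the two nearest neighbours.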

Cite this article

Altadmri, A., Ahmed, A. A framework for automatic semantic video annotation. Multimed Tools Appl 72, 1167–1191 (2014). https://doi.org/10.1007/s11042-013-1363-6
