Abstract

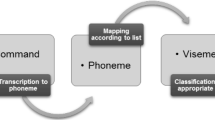

Multimedia applications such as talking heads, lip reading, lip synchronization, and computer-assisted pronunciation training have drawn researchers' attention to the clustering and analysis of visemes. Because viseme clustering and analysis are language-dependent processes, we focus on the Persian language, for which such studies have been lacking. To this end, we propose a novel image-based approach consisting of four main steps: (a) extracting the lip region; (b) obtaining the Eigenviseme of each phoneme while accounting for the coarticulation effect; (c) mapping each viseme into its own subspace and into the other phonemes' subspaces to build a distance matrix, from which the distances between viseme clusters are calculated; and (d) comparing the similarity of visemes based on the weight values of their reconstructions. To demonstrate the robustness of the proposed algorithm, three sets of experiments were conducted on Persian and English databases, in which Consonant/Vowel and Consonant/Vowel/Consonant syllables were examined. The results indicate that the proposed method outperforms the state-of-the-art algorithms considered in feature extraction and is comparably effective in generating adequate clusters. Moreover, the results mark a milestone in grouping Persian visemes, as assessed against a perceptual test taken by volunteers.
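Steps (b) and (c) can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes each phoneme's subspace is a plain PCA basis (in the spirit of Eigenfaces), that the distance between two phonemes is the mean reconstruction error of one phoneme's frames in the other's subspace, and that lip regions have already been cropped and flattened into vectors. The function names, the synthetic "frames", and the choice of two components are all hypothetical.

```python
import numpy as np

def fit_subspace(frames, n_components):
    """Fit a PCA subspace to flattened lip images (one image per row)."""
    mean = frames.mean(axis=0)
    # Rows of vt are orthonormal principal directions of the centered data.
    _, _, vt = np.linalg.svd(frames - mean, full_matrices=False)
    return mean, vt[:n_components]

def reconstruction_error(frame, mean, basis):
    """Norm of the part of a frame that the subspace cannot reconstruct."""
    centered = frame - mean
    weights = basis @ centered           # projection weights in the subspace
    return float(np.linalg.norm(centered - basis.T @ weights))

def distance_matrix(samples, n_components=2):
    """samples maps a phoneme label to an array of shape (n_frames, n_pixels)."""
    labels = sorted(samples)
    spaces = {p: fit_subspace(samples[p], n_components) for p in labels}
    d = np.zeros((len(labels), len(labels)))
    for i, p in enumerate(labels):
        for j, q in enumerate(labels):
            # Project every frame of phoneme p into the subspace of phoneme q.
            errors = [reconstruction_error(f, *spaces[q]) for f in samples[p]]
            d[i, j] = np.mean(errors)
    # Assumption: average both directions so the matrix is symmetric.
    return labels, 0.5 * (d + d.T)

def nearest_neighbours(labels, d):
    """For each phoneme, return the other phoneme at the smallest distance."""
    masked = d.copy()
    np.fill_diagonal(masked, np.inf)     # ignore self-distances
    return {p: labels[int(np.argmin(masked[i]))] for i, p in enumerate(labels)}

# Synthetic stand-ins for cropped lip regions (16 "pixels" per frame).
rng = np.random.default_rng(0)
closed_mouth = np.concatenate([np.ones(8), np.zeros(8)])
open_mouth = np.concatenate([np.zeros(8), np.ones(8)])

def make_frames(template):
    return template + 0.05 * rng.standard_normal((20, 16))

samples = {
    "b": make_frames(closed_mouth),
    "p": make_frames(closed_mouth),
    "a": make_frames(open_mouth),
}
labels, d = distance_matrix(samples)
neighbours = nearest_neighbours(labels, d)
```

In this toy setting the two closed-mouth phonemes end up much closer to each other than either is to the open-mouth one, which is the kind of structure a clustering step would then group into a single viseme class. A real pipeline would replace the synthetic frames with lip regions extracted from video and would need a principled choice of subspace dimension.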

Notes

C stands for Consonant and V stands for Vowel.

Cite this article

Aghaahmadi, M., Dehshibi, M.M., Bastanfard, A. et al. Clustering Persian viseme using phoneme subspace for developing visual speech application. Multimed Tools Appl 65, 521–541 (2013). https://doi.org/10.1007/s11042-012-1128-7

DOI: https://doi.org/10.1007/s11042-012-1128-7