Abstract

This paper deals with information retrieval and semantic indexing of multimedia documents. We propose a generic scheme combining an ontology-based evidential framework and high-level multimodal fusion, aimed at recognising semantic concepts in videos. This work proceeds in two stages. First, evidence theory is adapted to neural networks, giving the Neural Network based on Evidence Theory (NNET). Compared with probabilistic methods, this theory provides two important pieces of information for decision-making: the belief degree and the system ignorance. The NNET is then further improved by incorporating the relationship between descriptors and concepts, modeled by a weight vector based on entropy and perplexity. Combining this vector with the classifier outputs yields a new model called the Perplexity-based Evidential Neural Network (PENN). Second, an ontology-based approach is introduced, which represents the influence of the relations between concepts and readjusts the confidence values ontologically. To represent these relations, three types of information are computed: low-level visual descriptors, concept co-occurrence and semantic similarities. The final system is called Ontological-PENN. A comparison between the main similarity construction methodologies is proposed. Experimental results on the TRECVid dataset are presented to support the effectiveness of our scheme.
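
In evidence theory, a classifier can put mass on "concept present", on "concept absent", and on the whole frame of discernment; the mass left on the whole frame is what expresses ignorance, and belief and plausibility bound the support for the concept. The sketch below shows Dempster's standard rule of combination for a single concept, assuming a two-element frame {c, not_c} and two hypothetical classifier outputs (the `Mass` type, function names and numbers are illustrative). It shows the general rule only, not the NNET or PENN architecture.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Mass:
    """Mass function on the frame {c, not_c}; `theta` is the mass on the whole frame."""
    c: float
    not_c: float
    theta: float


def combine(m1: Mass, m2: Mass) -> Mass:
    """Dempster's rule of combination for two mass functions on {c, not_c}."""
    # Conflict K: mass the two sources jointly give to contradictory hypotheses.
    conflict = m1.c * m2.not_c + m1.not_c * m2.c
    if conflict >= 1.0:
        raise ValueError("total conflict: the sources cannot be combined")
    norm = 1.0 - conflict
    # Each focal element collects the products whose intersection equals it.
    c = (m1.c * m2.c + m1.c * m2.theta + m1.theta * m2.c) / norm
    not_c = (m1.not_c * m2.not_c + m1.not_c * m2.theta + m1.theta * m2.not_c) / norm
    theta = (m1.theta * m2.theta) / norm
    return Mass(c, not_c, theta)


def belief(m: Mass) -> float:
    """Belief in c: total mass committed exactly to subsets of {c}."""
    return m.c


def plausibility(m: Mass) -> float:
    """Plausibility of c: mass not committed against c."""
    return m.c + m.theta


# Hypothetical outputs of two descriptor-specific classifiers for one concept.
color = Mass(c=0.6, not_c=0.1, theta=0.3)
texture = Mass(c=0.5, not_c=0.2, theta=0.3)
fused = combine(color, texture)
print(f"belief={belief(fused):.3f}  plausibility={plausibility(fused):.3f}  "
      f"ignorance={fused.theta:.3f}")
```

With these toy inputs the conflict is 0.17, and the ignorance falls from 0.3 for each source to about 0.108 after fusion, because two largely agreeing sources leave less mass on the whole frame.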

Notes

We can also use the weight in the feature extraction step.

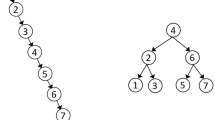

The NIST TREC-2003 Video Retrieval Benchmark defines 133 video concepts, organized hierarchically; each video item belongs to one or more concepts [35].

Three image datasets are used: Corel Images, Google Images, and LabelMe.

The LSCOM-lite (Large-Scale Concept Ontology for Multimedia) [36] annotations cover 39 concepts and are an interim result of the effort to develop LSCOM. The concepts span the following dimensions: program category, setting/scene/site, people, object, activity, event, and graphics. The annotations were produced through a collaborative effort among participants in the TRECVid benchmark, with human subjects judging the presence or absence of each concept in the video shots.

The common subsumer is the most specific concept that subsumes both concepts being compared.
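
The most specific common subsumer of two concepts is the deepest concept in the taxonomy that is an ancestor of both, and taxonomy-based similarity measures such as those of Resnik, Lin and Wu-Palmer in the reference list rely on it. The sketch below finds it in a tree-shaped taxonomy and plugs it into the Wu-Palmer measure, sim(c1, c2) = 2 depth(s) / (depth(c1) + depth(c2)) with root depth 1. The toy taxonomy and function names are hypothetical, and this is not necessarily the measure given by (26).

```python
# Hypothetical toy taxonomy: each concept maps to its direct parent (a tree).
PARENT = {
    "Object": "Entity",
    "Person": "Entity",
    "Vehicle": "Object",
    "Building": "Object",
    "Car": "Vehicle",
    "Bus": "Vehicle",
}


def ancestors(concept: str) -> list[str]:
    """Path from `concept` up to the root, including both ends."""
    path = [concept]
    while concept in PARENT:
        concept = PARENT[concept]
        path.append(concept)
    return path


def depth(concept: str) -> int:
    """Number of nodes on the path to the root (the root has depth 1)."""
    return len(ancestors(concept))


def most_specific_subsumer(c1: str, c2: str) -> str:
    """Deepest concept that subsumes both c1 and c2."""
    common = set(ancestors(c2))
    # Walking up from c1, the first shared ancestor is the most specific one.
    for candidate in ancestors(c1):
        if candidate in common:
            return candidate
    raise ValueError(f"{c1!r} and {c2!r} share no common subsumer")


def wu_palmer(c1: str, c2: str) -> float:
    """Wu-Palmer similarity: 2*depth(s) / (depth(c1) + depth(c2))."""
    s = most_specific_subsumer(c1, c2)
    return 2 * depth(s) / (depth(c1) + depth(c2))


print(most_specific_subsumer("Car", "Bus"), wu_palmer("Car", "Bus"))
print(most_specific_subsumer("Car", "Building"), round(wu_palmer("Car", "Building"), 3))
```

Here Car and Bus share the subsumer Vehicle (similarity 0.75), while Car and Building only meet at Object (about 0.571), so siblings deeper in the hierarchy score higher.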

The balanced error rate (BER) is the average of the error rates on each class. BER is the measure used in the “Performance Prediction Challenge Workshop”.
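
Because a concept is absent from most shots, plain error rate can reward a detector that almost always answers "absent"; BER weights each class equally instead. The sketch below computes BER for labels with any number of classes; the function name and toy labels are hypothetical.

```python
from collections import Counter


def balanced_error_rate(y_true, y_pred):
    """Average of the per-class error rates (share of each true class misclassified)."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    if not y_true:
        raise ValueError("empty input")
    totals = Counter(y_true)
    errors = Counter(t for t, p in zip(y_true, y_pred) if t != p)
    return sum(errors[c] / totals[c] for c in totals) / len(totals)


# Hypothetical shots: concept present (1) in 4 of 12, detector finds only one.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
y_pred = [1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0]
plain_error = sum(t != p for t, p in zip(y_true, y_pred)) / len(y_true)
print(plain_error, balanced_error_rate(y_true, y_pred))
```

The plain error rate is 0.25, but the BER is 0.375 because three of the four positive shots are missed; for two classes this is the mean of the miss rate and the false-alarm rate.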

NNET: Neural Network based on Evidence Theory.

PENN: Perplexity-based Evidential Neural Network.

Onto-PENN: ontological readjustment of the PENN. The Onto-PENN results presented in the rest of the paper use (26) for the semantic similarity computation.

References

Adamek T (2007) Extension of MPEG-7 low-level visual descriptors for TRECVid07. Kspace Technical Report, FP6-027026

Aigrain P, Joly P (1994) The automatic real-time analysis of film editing and transition effects and its applications. Comput Graph 18(1):93–103

Ayache S, Quénot G (2007) TRECVid 2007 collaborative annotation using active learning. In: TRECVid, 11th international workshop on video retrieval evaluation, Gaithersburg, USA

Benmokhtar R, Huet B (2006) Classifier fusion: combination methods for semantic indexing in video content. In: International conference on artificial neural networks, pp 65–74

Benmokhtar R, Huet B (2007) Neural network combining classifier based on Dempster-Shafer theory for semantic indexing in video content. In: International multimedia modeling conference, pp 196–205

Benmokhtar R, Huet B (2008) Perplexity-based evidential neural network classifier fusion using MPEG-7 low-level visual features. In: ACM international conference on multimedia information retrieval, pp 336–341

Benmokhtar R, Huet B (2009) Hierarchical ontology-based robust video shots indexing using global MPEG-7 visual descriptors. In: Proceedings of the international workshop on content-based multimedia indexing, pp 195–200

Berners-Lee T, Hendler J, Lassila O (2001) The semantic web. Scientific American, pp 29–37

Chang S-F, Chen W, Meng H, Sundaram H, Zhong D (1998) A fully automated content-based video search engine supporting spatiotemporal queries. IEEE Trans Circuits Syst Video Technol, pp 602–615

Denoeux T (1995) An evidence-theoretic neural network classifier. In: International conference on systems, man and cybernetics, vol 31, pp 712–717

Dimitrova N (2003) Multimedia content analysis: the next wave. In: International conference on image and video retrieval. Lecture notes in computer science, vol 25, pp 8–17

Duin R, Tax D (2000) Experiments with classifier combining rules. In: Proc. first int. workshop MCS 2000, vol 1857, pp 16–29

Faloutsos C, Barber R, Flickner M, Hafner J, Niblack W, Petkovic D, Equitz W (1994) Efficient and effective querying by image content. J Intell Inf Syst 3(3):231–262

Fan J, Gao Y, Luo H (2007) Hierarchical classification for automatic image annotation. In: Proceedings of the 30th annual international ACM SIGIR conference on research and development in information retrieval, pp 111–118

Gao J, Goodman J, Li M, Lee K (2001) Toward a unified approach to statistical language modeling for Chinese. In: ACM transactions on Asian language information processing

Hauptmann A, Christel M, Concescu R, Gao J, Jin Q, Lin W, Pan J, Stevens S, Yan R, Yang J, Zhang Y (2005) CMU informedia’s TRECVid 2005 skirmishes. In: TREC video retrieval evaluation online proceedings

ISO/IEC 14496-2. Information Technology (2001) Coding of moving pictures and associated audio information.

Jain A, Duin R, Mao J (2000) Statistical pattern recognition: a review. IEEE Trans Pattern Anal Mach Intell 22(1):4–37

Jiang J, Conrath D-W (1997) Semantic similarity based on corpus statistics and lexical taxonomy. In: International conference on research in computational linguistics

Jiang W, Cotton C, Chang S-F, Ellis D, Loui A (2009) Short-term audio-visual atoms for generic video concept classification. In: MM ’09: proceedings of the seventeenth ACM international conference on multimedia, pp 5–14

Kasutani E, Yamada A (2001) The MPEG-7 color layout descriptor: a compact image feature description for high-speed image/video retrieval. In: Proceedings of the IEEE international conference on image processing, vol 1, pp 674–677

Koskela M, Smeaton A (2006) Clustering-based analysis of semantic concept models for video shots. In: Proceedings of the international conference on multimedia and expo, pp 45–48

Koskela M, Smeaton A, Laaksonen J (2007) Measuring concept similarities in multimedia ontologies: analysis and evaluations. IEEE Trans Multimedia 9:912–922

Kotsiantis S-B (2007) Supervised machine learning: a review of classification techniques. Informatica 31:249–268

Kuncheva L (2003) Fuzzy versus nonfuzzy in combining classifiers designed by boosting. IEEE Trans Fuzzy Syst 11(6):729–741

Kuncheva L, Bezdek JC, Duin R (2001) Decision templates for multiple classifier fusion: an experimental comparison. Pattern Recogn 34:299–314

Laaksonen J, Koskela M, Oja E (2004) Class distributions on SOM surfaces for feature extraction and object retrieval. Neural Netw 17:1121–1133

Li B, Goh K (2003) Confidence-based dynamic ensemble for image annotation and semantics discovery. In: Proceedings of the eleventh ACM international conference on multimedia, pp 195–206

Li Y, Bandar ZA, McLean D (2003) An approach for measuring semantic similarity between words using multiple information sources. IEEE Trans Knowl Data Eng 15(4):871–882

Lin D (1998) An information-theoretic definition of similarity. In: Proceedings of the 15th international conference on machine learning. Morgan Kaufmann, pp 296–304

Manjunath B, Salembier P, Sikora T (2002) Introduction to MPEG-7: multimedia content description interface. Wiley, New York

Messing D-S, Beek PV, Errico J-H (2001) The MPEG-7 color structure descriptor: image description using color and local spatial information. In: Proceedings of the IEEE international conference on image processing, vol 1, pp 670–673

Naphade MR, Kozintsev I, Huang T (2000) Probabilistic semantic video indexing. In: Proceedings of neural information processing systems, pp 967–973

Naphade M, Kristjansson T, Frey B, Huang T (1998) Probabilistic multimedia objects (multijects): a novel approach to video indexing and retrieval in multimedia systems. In: Proceedings of the IEEE international conference on image processing, pp 536–540

Naphade M, Kennedy L, Kender J, Chang S, Smith J, Over P, Hauptmann A (2005) A light scale concept ontology for multimedia understanding for TRECVid 2005 (LSCOM-Lite). IBM Research Technical Report

Naphade M, Kennedy L, Kender J, Chang S, Smith J, Over P, Hauptmann A (2005) A light scale concept ontology for multimedia understanding for trecvid 2005. IBM Research Technical Report

OpenCV (2010) Intel Corporation: open source computer vision library: reference manual. http://opencvlibrary.sourceforge.net

Park D, Jeon YS, Won CS (2000) Efficient use of local edge histogram descriptor. In: Proceedings of ACM workshop on multimedia, pp 51–54

Pentland A, Picard R, Sclaroff S (1994) Photobook: content-based manipulation of image databases. In: Proceedings of SPIE conference on storage and retrieval for image and video databases

Rada R, Mili H, Bicknell E, Blettner M (1989) Development and application of a metric on semantic nets. IEEE Trans Syst Man Cybern 19(1):17–30

Resnik P (1995) Using information content to evaluate semantic similarity in a taxonomy. In: Proceedings of the 14th international joint conference on artificial intelligence, pp 448–453

Seco N, Veale T, Hayes J (2004) An intrinsic information content metric for semantic similarity in WordNet. In: Proceedings of European conference on artificial intelligence

Slimani T, BenYaghlane B, Mellouli K (2007) Une extension de mesure de similarité entre les concepts d’une ontologie [An extension of a similarity measure between the concepts of an ontology]. In: International conference on sciences of electronic, technologies of information and telecommunications, pp 1–10

Smith J-R, Chang S-F (1996) VisualSEEk: a fully automated content-based image query system. In: Proceedings of ACM international conference on multimedia, pp 87–98

Snoek C-M, Worring M (2005) Multimodal video indexing: a review of the state-of-the-art. Multimedia Tools and Applications 25:5–35

Snoek C, Worring M, Geusebroek J-M, Koelma D-C, Seinstra F-J (2004) The MediaMill TRECVid 2004 semantic video search engine. In: TREC video retrieval evaluation online proceedings

Souvannavong F (2005) Indexation et recherche de plans vidéo par le contenu sémantique [Indexing and retrieval of video shots by semantic content]. PhD thesis, Eurécom, France

Sun X, Manjunath B, Divakaran A (2002) Representation of motion activity in hierarchical levels for video indexing and filtering. In: Proceedings of the IEEE international conference on image processing, pp 149–152

TRECVID (2010). Digital video retrieval at NIST. http://www-nlpir.nist.gov/projects/trecvid/

Tsinaraki C, Polydoros P, Christodoulakis S (2004) Interoperability support for ontology-based video retrieval applications. In: Proceedings of the third international conference on image and video retrieval

Vapnik V (2000) The nature of statistical learning theory. Springer, New York

Vembu S, Kiesel M, Sintek M, Baumann S (2006) Towards bridging the semantic gap in multimedia annotation and retrieval. In: Proceedings of the 1st international workshop on semantic web annotations for multimedia

Wactlar H, Kanade T, Smith MA, Stevens SM (1996) Intelligent access to digital video: the informedia project. In: IEEE computer, vol 29

Wu Z, Palmer M (1994) Verb semantics and lexical selection. In: Proceedings of the 32nd annual meeting of the association for computational linguistics, pp 133–138

Wu Y, Tseng B, Smith J (2004) Ontology-based multi-classification learning for video concept detection. In: Proceedings of the international conference on multimedia and expo, vol 2, pp 1003–1006

Xu F, Zhang Y (2006) Evaluation and comparison of texture descriptors proposed in MPEG-7. J Vis Commun Image Represent 17:701–716

Xu L, Krzyzak A, Suen C (1992) Methods of combining multiple classifiers and their application to handwriting recognition. IEEE Trans Syst Man Cybern 22:418–435

Deng Y, Manjunath B (1998) Netra-V: toward an object-based video representation. IEEE Trans Circuits Syst Video Technol 8(5):616–627

Acknowledgements

This research was supported by Eurecom’s industrial members: Ascom, Bouygues Telecom, Cegetel, France Telecom, Hitachi, ST Microelectronics, Motorola, Swisscom, Texas Instruments and Thales.

About this article

Cite this article

Benmokhtar, R., Huet, B. An ontology-based evidential framework for video indexing using high-level multimodal fusion. Multimed Tools Appl 73, 663–689 (2014). https://doi.org/10.1007/s11042-011-0936-5

DOI: https://doi.org/10.1007/s11042-011-0936-5