Abstract

Updating an existing but inaccurate model is an essential step toward establishing an effective model. Updating damping and stiffness matrices simultaneously with measured modal data can be formulated mathematically as the following two problems. Problem 1: Let \(M_{a} \in\mathbf{SR}^{n \times n}\) be the analytical mass matrix, and let \(\varLambda=\mbox{diag}\{\lambda_{1}, \ldots, \lambda_{p}\} \in\mathbf{C}^{p \times p}\) and \(X=[x_{1}, \ldots, x_{p}] \in\mathbf{C}^{n \times p}\) be the measured eigenvalue and eigenvector matrices, where \(\mbox{rank}(X)=p\), \(p<n\), and both \(\varLambda\) and \(X\) are closed under complex conjugation in the sense that \(\lambda_{2j} = \bar{\lambda}_{2j-1} \in\mathbf{C}\), \(x_{2j} = \bar{x}_{2j-1} \in\mathbf{C}^{n}\) for \(j=1,\ldots,l\), and \(\lambda_{k} \in\mathbf{R}\), \(x_{k} \in\mathbf{R}^{n}\) for \(k=2l+1,\ldots,p\). Find real-valued symmetric matrices \(D\) and \(K\) such that \(M_{a}X\varLambda^{2}+DX\varLambda+KX=0\). Problem 2: Let \(D_{a}, K_{a} \in\mathbf{SR}^{n \times n}\) be the analytical damping and stiffness matrices. Find \((\hat{D}, \hat{K}) \in\mathbf{S}_{\mathbf{E}}\) such that \(\| \hat{D}-D_{a} \|^{2}+\| \hat{K}-K_{a} \|^{2}= \min_{(D,K) \in \mathbf{S}_{\mathbf{E}}}(\| D-D_{a} \|^{2} +\|K-K_{a} \|^{2})\), where \(\mathbf{S}_{\mathbf{E}}\) is the solution set of Problem 1 and \(\|\cdot\|\) is the Frobenius norm. In this paper, a gradient based iterative (GI) algorithm is constructed to solve Problems 1 and 2. A sufficient condition for the convergence of the iterative method is derived, and a range for the convergence factor is given which guarantees that the iterative solutions converge to the unique minimum Frobenius norm symmetric solution of Problem 2 when a suitable initial symmetric matrix pair is chosen. The proposed algorithm requires less storage than existing numerical methods and is numerically reliable, as only matrix manipulation is required. Two numerical examples show that the iterative algorithm is quite efficient.

1 Introduction

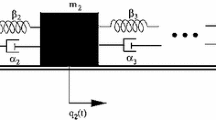

Using finite element techniques, the equation of motion of an n-degree-of-freedom damped linear system in free vibration can be written in the form

\[ M_{a}\ddot{q}(t)+D_{a}\dot{q}(t)+K_{a}q(t)=0. \tag{1} \]

Here, \(q(t)\) is the displacement vector and \(M_{a}\), \(D_{a}\) and \(K_{a}\) are the analytical mass, damping and stiffness matrices, respectively. In many practical applications, the matrix \(M_{a}\) is symmetric positive definite and \(K_{a}\) is symmetric positive semi-definite. The damping matrix \(D_{a}\) is hard to determine in practice; however, for computational convenience and other practical considerations, it is very often assumed to be symmetric. If a fundamental solution to (1) is represented by

\[ q(t)=xe^{\lambda t}, \]

then the scalar \(\lambda\) and the vector \(x\) must solve the quadratic eigenvalue problem (QEP)

\[ (\lambda^{2}M_{a}+\lambda D_{a}+K_{a})x=0. \tag{2} \]

Complex numbers \(\lambda\) and nonzero complex vectors \(x\) for which this relation holds are, respectively, the eigenvalues and eigenvectors of the system. It is known that Eq. (2) has 2n finite eigenvalues over the complex field because the leading matrix coefficient \(M_{a}\) is nonsingular. The dynamical behavior of the differential system (1) can usually be interpreted via the eigenvalues and eigenvectors of Eq. (2). Because of this connection, considerable effort has been devoted to the QEP in the literature. Readers are referred to the treatise by Tisseur and Meerbergen [1] for a good survey of many applications, mathematical properties, and a variety of numerical techniques for the QEP.
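As an illustration of the QEP, the following minimal sketch computes the 2n eigenpairs of a small damped system by rewriting the second-order problem as a first-order (companion) eigenvalue problem. The 2-DOF matrices and the function name `qep_eigs` are hypothetical and chosen only for illustration; the linearization is one standard choice and is not necessarily the one used by the authors.

```python
import numpy as np

def qep_eigs(M, D, K):
    """Eigenpairs of (lam^2 M + lam D + K) x = 0 via a companion linearization."""
    n = M.shape[0]
    Minv = np.linalg.inv(M)  # M is assumed nonsingular
    # With z = [x; lam*x], the QEP becomes the standard problem A z = lam z.
    A = np.block([[np.zeros((n, n)), np.eye(n)],
                  [-Minv @ K, -Minv @ D]])
    lam, Z = np.linalg.eig(A)
    return lam, Z[:n, :]  # the top block of each eigenvector is x

# Hypothetical 2-DOF data, for illustration only.
M = np.diag([1.0, 2.0])
D = np.array([[0.4, -0.1], [-0.1, 0.3]])
K = np.array([[5.0, -2.0], [-2.0, 4.0]])

lam, X = qep_eigs(M, D, K)
# Residual of the QEP for every computed eigenpair.
residuals = [np.linalg.norm((l**2 * M + l * D + K) @ X[:, j])
             for j, l in enumerate(lam)]
```

Because the coefficient matrices are real, the computed eigenvalues are either real or occur in complex conjugate pairs, which is the closure property assumed of the measured data later on.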

Model updating is a quadratic inverse eigenvalue problem (QIEP) which concerns the modification of an existing but inaccurate model with measured modal data. The model updating process requires that the updated model can reproduce a given set of measured data by replacing the corresponding ones from the original analytical model, and preserves the symmetry of the original model. The problem of updating damping and stiffness matrices simultaneously can be mathematically formulated as follows.

Problem 1

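Let \(\varLambda=\mbox{diag}\{\lambda_{1}, \ldots, \lambda_{p}\} \in\mathbf{C}^{p \times p}\) and \(X=[x_{1}, \ldots, x_{p}] \in\mathbf{C}^{n \times p}\) be the measured eigenvalue and eigenvector matrices, where \(\mbox{rank}(X)=p\), \(p<n\), and both \(\varLambda\) and \(X\) are closed under complex conjugation in the sense that \(\lambda_{2j} = \bar{\lambda}_{2j-1} \in{\mathbf{C}} \), \(x_{2j} = \bar{x}_{2j-1} \in{\mathbf{C}}^{n} \) for \(j=1,\ldots,l\), and \(\lambda_{k} \in\mathbf{R}\), \(x_{k} \in\mathbf{R}^{n}\) for \(k=2l+1,\ldots,p\). Find real-valued symmetric matrices \(D\) and \(K\) such that

\[ M_{a}X\varLambda^{2}+DX\varLambda+KX=0. \tag{3} \]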

A quick count shows that there are np equations in n(n+1) unknowns, which implies that the matrices \(D\) and \(K\) in Eq. (3) cannot be uniquely determined. The analytical matrices \(D_{a}\) and \(K_{a}\) are taken to be good approximations of \(D\) and \(K\). The strategy for obtaining an improved model is therefore to find \(D\) and \(K\) that satisfy (3) and deviate as little as possible from \(D_{a}\) and \(K_{a}\). Thus, we should further solve the following optimal approximation problem.
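The count can be made concrete with a short worked calculation. The sizes used in the second line, n=66 and p=4, are those of Example 2 in Sect. 3; since p<n, the number of equations is always smaller than the number of unknowns.

```latex
\[
\underbrace{\tfrac{n(n+1)}{2}}_{\text{free entries of symmetric } D}
+\underbrace{\tfrac{n(n+1)}{2}}_{\text{free entries of symmetric } K}
= n(n+1)\ \text{unknowns},
\qquad
np\ \text{equations}.
\]
\[
n=66,\ p=4:\qquad np = 264 \;<\; n(n+1) = 66\cdot 67 = 4422 .
\]
```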

Problem 2

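Let \(\mathbf{S}_{\mathbf{E}}\) be the solution set of Problem 1. Find \((\hat{D}, \hat{K}) \in \mathbf{S}_{\mathbf{E}}\) such that

\[ \| \hat{D}-D_{a} \|^{2}+\| \hat{K}-K_{a} \|^{2}= \min_{(D,K) \in \mathbf{S}_{\mathbf{E}}}\bigl(\| D-D_{a} \|^{2} +\|K-K_{a} \|^{2}\bigr). \tag{4} \]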

In this paper we provide a gradient based iterative (GI) algorithm to solve Problems 1 and 2. The proposed iterative method is developed from an optimization point of view and includes, as special cases, the well-known Jacobi and Gauss-Seidel iterations and some recently reported iterative algorithms based on the hierarchical identification principle [2–4]. The convergence analysis indicates that the iterative solutions generated by the GI algorithm converge to the unique minimum Frobenius norm symmetric solution of Problem 2 when a suitable initial symmetric matrix pair is chosen. The merits of the proposed algorithm include: (i) it can be constructed easily, without any factorization of the known matrices; (ii) only matrix multiplication is required during the iteration; (iii) convergence of the algorithm is guaranteed provided the convergence factor \(\mu\) is suitably chosen; and (iv) compared with the finite iterative method proposed by Yuan and Liu [5], the GI algorithm is simpler and easier to perform, and it seems general enough that, with suitable modifications, it can be applied to other types of structural dynamics model updating problems as well.

The model updating problem is a practical industrial problem that arises in vibration industries, including aerospace, automobile, manufacturing, and others. In these industries a theoretical finite element model described by (1) often needs to be updated using a few measured frequencies and mode shapes (eigenvalues and eigenvectors) from a real-life structure. The reason is that the theoretical model of a structure is constructed on the basis of highly idealized engineering blueprints and designs that may not truly represent all the physical aspects of the actual structure. In fact, an analytical (finite element) model will be erroneous because of inevitable difficulties in modeling joints, boundary conditions and damping. When field dynamic tests are performed to validate the theoretical model, their results, commonly natural frequencies and mode shapes, inevitably do not coincide well with the results expected from the theoretical model. In this situation a vibration engineer needs to update the theoretical model so that inaccurate modeling assumptions in the original analytical model are corrected and the updated model can be considered a better dynamic representation of the structure. The updated model may then be used with greater confidence for the analysis of the structure under different boundary conditions.

In the past 30 years, structural dynamics model updating problems have received considerable attention. A significant number of techniques for updating mass and stiffness matrices of undamped systems (i.e., \(D_{a}=0\)) using measured response data have been discussed by Baruch [6], Baruch and Bar-Itzhack [7], Berman [8], Berman and Nagy [9], Wei [10–12], Yang and Chen [13], and Yuan [14, 15], among others. For an account of the earlier methods, we refer readers to the authoritative book by Friswell and Mottershead [16], which gives a thorough introduction to the basic theory of finite element model updating. For damped structural systems, the theory and computation have been considered by Friswell et al. [17], Pilkey [18], Kuo et al. [19], Chu et al. [20] and Yuan [21, 22], among others. Although these existing methods for updating damped structural systems are direct methods, the explicit solution is difficult to obtain by matrix computation techniques, which restricts their usefulness in real applications. We notice that iterative methods for structural dynamics model updating have received little attention in recent years, although iterative algorithms are widely applied in system identification [23, 24] and have also been developed for solving linear matrix equations [4, 25]. In this paper we offer a simple yet effective iterative method to solve damped structural model updating problems. We believe that our method is new in the field and that its simple operations and ease of implementation make it practical for large-scale applications.

The rest of the paper is outlined as follows. In Sect. 2, an efficient gradient based iterative method is presented to solve Problems 1 and 2 and the convergence properties are discussed. In Sect. 3, two numerical examples are used to test the effectiveness of the proposed algorithm. Concluding remarks are given in Sect. 4.

Throughout this paper, we adopt the following notation. \(\mathbf{C}^{m \times n}\) and \(\mathbf{R}^{m \times n}\) denote the sets of all \(m \times n\) complex and real matrices, respectively. \(\mathbf{SR}^{n \times n}\) denotes the set of all symmetric matrices in \(\mathbf{R}^{n \times n}\). \(A^{\top}\), \(A^{+}\), \(\mbox{tr}(A)\) and \(R(A)\) stand for the transpose, the Moore-Penrose generalized inverse, the trace and the column space of the matrix \(A\), respectively. \(\lambda_{\max}(M^{\top}M)\) denotes the maximum eigenvalue of \(M^{\top}M\). \(I_{n}\) represents the identity matrix of order \(n\), and \(\bar{\alpha}\) denotes the conjugate of the complex number \(\alpha\). For \(A, B \in\mathbf{R}^{m \times n}\), an inner product in \(\mathbf{R}^{m \times n}\) is defined by \((A,B)=\mbox{tr}(B^{\top}A)\); with this inner product, \(\mathbf{R}^{m \times n}\) is a Hilbert space. The matrix norm \(\|\cdot\|\) induced by the inner product is the Frobenius norm. Given two matrices \(A=[a_{ij}] \in\mathbf{R}^{m \times n}\) and \(B \in\mathbf{R}^{p \times q}\), the Kronecker product of \(A\) and \(B\) is defined by \(A \otimes B=[a_{ij}B] \in\mathbf{R}^{mp \times nq}\). Also, for an \(m \times n\) matrix \(A=[a_{1}, a_{2}, \ldots, a_{n}]\), where \(a_{i}\), \(i=1,\ldots,n\), is the i-th column vector of \(A\), the stretching function \(\mbox{vec}(A)\) is defined as \(\mbox{vec}(A)=[a_{1}^{\top}, a_{2}^{\top}, \ldots, a_{n}^{\top}]^{\top}\).
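The Kronecker product and the stretching function are used later to turn matrix equations into ordinary linear systems. The sketch below checks numerically the standard identity \(\mbox{vec}(AXB)=(B^{\top}\otimes A)\,\mbox{vec}(X)\) and the relation between the trace inner product and the Frobenius norm. The random test matrices and the helper name `vec` are illustrative assumptions.

```python
import numpy as np

def vec(A):
    """Stack the columns of A into one long vector (column-major order)."""
    return A.reshape(-1, order="F")

rng = np.random.default_rng(0)
A = rng.standard_normal((3, 4))
X = rng.standard_normal((4, 2))
B = rng.standard_normal((2, 5))

# vec(A X B) = (B^T kron A) vec(X)
lhs = vec(A @ X @ B)
rhs = np.kron(B.T, A) @ vec(X)

# The inner product (P, Q) = tr(Q^T P) is the dot product of the stretched
# matrices, and (P, P) is the squared Frobenius norm.
P = rng.standard_normal((3, 4))
inner_AP = np.trace(P.T @ A)
fro_sq = np.trace(A.T @ A)
```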

2 The solution of Problem 1 and Problem 2

Define a complex matrix \(T_{p}\) as

where \(i=\sqrt{-1}\). It is easy to verify that \(T_{p}\) is a unitary matrix, that is, \(\bar{T}_{p}^{\top}T_{p}=I_{p}\). Using this transformation matrix, we have

where \(\zeta_{j}\) and \(\eta_{j}\) are respectively the real part and the imaginary part of the complex number \(\lambda_{j}\), and \(y_{j}\) and \(z_{j}\) are respectively the real part and the imaginary part of the complex vector \(x_{j}\) for \(j=1,3,\ldots,2l-1\).
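The effect of the unitary transformation can be checked on a single complex conjugate pair. The sketch below assumes that each 2×2 diagonal block of \(T_{p}\) has the form \(\frac{1}{\sqrt{2}}\bigl[\begin{smallmatrix}1 & -i\\ 1 & i\end{smallmatrix}\bigr]\); this block form, like the eigenvalue and eigenvector used, is an assumption made for illustration and is not taken from the text above. Under this assumption the transformed eigenvalue block and eigenvector block are real and are built from the real and imaginary parts \(\zeta\), \(\eta\), \(y\), \(z\).

```python
import numpy as np

# Assumed 2x2 block of T_p for one conjugate pair (an illustrative choice).
T = np.array([[1, -1j], [1, 1j]]) / np.sqrt(2)

lam = -1.0 + 2.0j  # hypothetical eigenvalue: zeta = -1, eta = 2
x = np.array([1.0 + 0.5j, -2.0 + 1.0j, 0.3 - 0.7j])  # hypothetical eigenvector

Lam = np.diag([lam, np.conj(lam)])    # conjugate-closed eigenvalue block
X = np.column_stack([x, np.conj(x)])  # conjugate-closed eigenvector block

Lam_t = T.conj().T @ Lam @ T  # real block [[zeta, eta], [-eta, zeta]]
X_t = X @ T                   # real block sqrt(2) * [Re x, Im x]
```

Since \(T\) is unitary, \(X\varLambda^{k}T=(XT)(\bar{T}^{\top}\varLambda T)^{k}\), so multiplying the complex equation of Problem 1 on the right by the transformation matrix yields an equivalent equation with real data only.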

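It follows from (6) and (7) that Eq. (3) can be written equivalently, with \(\tilde{X}=XT_{p}\) and \(\tilde{\varLambda}=\bar{T}_{p}^{\top}\varLambda T_{p}\), as

\[ M_{a}\tilde{X}\tilde{\varLambda}^{2}+D\tilde{X}\tilde{\varLambda}+K\tilde{X}=0. \]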

For a given symmetric matrix pair \((D_{a}, K_{a})\), we have

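Let

\[ \tilde{D}=D-D_{a}, \qquad \tilde{K}=K-K_{a}, \qquad F=-\bigl(M_{a}\tilde{X}\tilde{\varLambda}^{2}+D_{a}\tilde{X}\tilde{\varLambda}+K_{a}\tilde{X}\bigr), \]

then solving Problem 1 and Problem 2 is equivalent to finding the minimum Frobenius norm symmetric solution of the matrix equation

\[ \tilde{D}\tilde{X}\tilde{\varLambda}+\tilde{K}\tilde{X}=F. \tag{8} \]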

We should point out that Eq. (8) is necessarily consistent. In fact, let

It is easy to check that \((D^{*}-D_{a}, K^{*}-K_{a})\) is a particular solution of (8). Therefore, once the minimum Frobenius norm solution \((\tilde{D}^{*}, \tilde{K}^{*})\) of (8) is obtained, the solution of the matrix optimal approximation Problem 2 can be computed. In this case, \((\hat{D}, \hat{K})\) can be expressed as

\[ \hat{D}=D_{a}+\tilde{D}^{*}, \qquad \hat{K}=K_{a}+\tilde{K}^{*}. \tag{9} \]

Lemma 1

If the linear equation system \(Mx=b\), where \(M \in\mathbf{R}^{m \times n}\), \(b \in\mathbf{R}^{m}\), has a unique solution \(x^{*}\), then the gradient based iterative algorithm

yields \(\lim_{k \rightarrow\infty} x_{k}=x^{*}\).
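To make Lemma 1 concrete, the sketch below assumes the usual form of the gradient iteration for a linear system, \(x_{k}=x_{k-1}+\mu M^{\top}(b-Mx_{k-1})\) with \(0<\mu<2/\lambda_{\max}(M^{\top}M)\). This update rule and step-size range are the standard ones for minimizing \(\|b-Mx\|^{2}\) and are stated here as an assumption; the small test system is hypothetical.

```python
import numpy as np

def gradient_iteration(M, b, mu, x0, steps):
    """Iterate x <- x + mu * M^T (b - M x), a gradient step on ||b - M x||^2."""
    x = np.asarray(x0, dtype=float).copy()
    for _ in range(steps):
        x = x + mu * (M.T @ (b - M @ x))
    return x

# Hypothetical full-column-rank system with a unique solution.
M = np.array([[2.0, 1.0], [1.0, 3.0], [0.0, 1.0]])
x_true = np.array([1.0, -2.0])
b = M @ x_true

# Step size inside the assumed range 0 < mu < 2 / lambda_max(M^T M).
mu = 1.0 / np.linalg.eigvalsh(M.T @ M).max()
x = gradient_iteration(M, b, mu, np.zeros(2), steps=500)
```

Only matrix-vector products are needed in each step, which is the property the GI algorithm inherits at the matrix level.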

Lemma 2

[27]

Suppose that the consistent linear equation \(Mx=b\), where \(M \in\mathbf{R}^{m \times n}\), \(b \in\mathbf{R}^{m}\), has a solution \(x \in R(M^{\top})\). Then \(x\) is the unique minimum Frobenius norm solution of the linear equation.
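Lemma 2 explains why the choice of starting point matters for underdetermined systems. In the sketch below, a gradient iteration of the assumed form \(x \leftarrow x+\mu M^{\top}(b-Mx)\) is started from zero, so every iterate stays in \(R(M^{\top})\) and the limit coincides with the minimum norm solution \(M^{+}b\). The 2×3 system is a hypothetical example.

```python
import numpy as np

# Hypothetical consistent underdetermined system (2 equations, 3 unknowns).
M = np.array([[1.0, 2.0, 0.0], [0.0, 1.0, 1.0]])
b = np.array([3.0, 2.0])
mu = 1.0 / np.linalg.eigvalsh(M.T @ M).max()

x = np.zeros(3)  # zero start: every update adds a vector from R(M^T)
for _ in range(2000):
    x = x + mu * (M.T @ (b - M @ x))

x_min = np.linalg.pinv(M) @ b  # minimum norm solution via the pseudoinverse

# Any other solution differs by a null-space vector and has a larger norm.
null_vec = np.array([2.0, -1.0, 1.0])  # satisfies M @ null_vec = 0
x_other = x_min + null_vec
```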

Lemma 3

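Equation (8) has a symmetric solution pair \((\tilde{D}, \tilde {K})\) if and only if the matrix equations

\[ \tilde{D}\tilde{X}\tilde{\varLambda}+\tilde{K}\tilde{X}=F, \qquad \tilde{\varLambda}^{\top}\tilde{X}^{\top}\tilde{D}+\tilde{X}^{\top}\tilde{K}=F^{\top} \tag{10} \]

are consistent.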

Proof

If the equation of (8) has a symmetric solution pair \((\tilde{D}^{*}, \tilde{K}^{*})\), then \(\tilde{D}^{*} \tilde{X}\tilde{\varLambda }+\tilde{K}^{*} \tilde{X}=F\), and \((\tilde{D}^{*} \tilde{X}\tilde{\varLambda}+\tilde{K}^{*} \tilde{X})^{\top}= \tilde{\varLambda}^{\top}\tilde{X}^{\top}\tilde{D}^{*}+\tilde{X}^{\top}\tilde {K}^{*}=F^{\top}\). That is to say, \((\tilde{D}^{*}, \tilde{K}^{*})\) is a solution of (10).

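Conversely, suppose that the matrix equations (10) have a solution, say \(\tilde{D}=U\), \(\tilde{K}=V\). Let \(\tilde{D}^{*}=\frac{1}{2}(U+U^{\top})\), \(\tilde{K}^{*}=\frac{1}{2}(V+V^{\top})\). Then \(\tilde{D}^{*} \) and \(\tilde {K}^{*}\) are symmetric matrices, and

\[ \tilde{D}^{*}\tilde{X}\tilde{\varLambda}+\tilde{K}^{*}\tilde{X} =\tfrac{1}{2}\bigl(U\tilde{X}\tilde{\varLambda}+V\tilde{X}\bigr) +\tfrac{1}{2}\bigl(\tilde{\varLambda}^{\top}\tilde{X}^{\top}U+\tilde{X}^{\top}V\bigr)^{\top} =\tfrac{1}{2}F+\tfrac{1}{2}F=F. \]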

Hence, \((\tilde{D}^{*}, \tilde{K}^{*})\) is a symmetric solution pair of (8).

Using the Kronecker product and the stretching function, we know that the equations of (10) are equivalent to

Let

According to Lemma 1, the gradient based iterative algorithm for the equations (10) can be described as follows:

After some algebraic manipulation, this results in

From (12) and (13) we can easily see that if the initial matrices \(\tilde{D}_{0}\), \(\tilde{K}_{0} \in \mathbf{SR}^{n \times n}\), then \(\tilde{D}_{s} \in\mathbf{SR}^{n \times n}\) and \(\tilde{K}_{s} \in\mathbf{SR}^{n \times n}\) for s=1,2,… . □
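The following minimal sketch shows one way such an iteration can be written. It applies a gradient step to the pair of equations \(\tilde{D}\tilde{X}\tilde{\varLambda}+\tilde{K}\tilde{X}=F\) and its transpose, which leads to symmetric updates of the form \(\tilde{D}\leftarrow\tilde{D}+\mu(RA^{\top}+AR^{\top})\), \(\tilde{K}\leftarrow\tilde{K}+\mu(R\tilde{X}^{\top}+\tilde{X}R^{\top})\) with \(A=\tilde{X}\tilde{\varLambda}\) and residual \(R\). These update formulas, the step size, the function name `gi_update` and the test data are assumptions made for illustration; they are derived from the gradient principle and are not quoted from formulas (12) and (13).

```python
import numpy as np

def gi_update(Xt, Lt, F, mu, steps, D0=None, K0=None):
    """Gradient iteration for D @ Xt @ Lt + K @ Xt = F over symmetric D, K."""
    n = Xt.shape[0]
    D = np.zeros((n, n)) if D0 is None else D0.copy()
    K = np.zeros((n, n)) if K0 is None else K0.copy()
    A = Xt @ Lt
    for _ in range(steps):
        R = F - D @ A - K @ Xt  # residual of the first equation
        # Each term is added together with its transpose, so symmetric
        # iterates stay symmetric.
        D = D + mu * (R @ A.T + A @ R.T)
        K = K + mu * (R @ Xt.T + Xt @ R.T)
    return D, K

# Hypothetical data: n = 4 and one conjugate pair in real form.
Xt = np.eye(4)[:, :2]
Lt = np.array([[-1.0, 2.0], [-2.0, -1.0]])
D_true = np.diag([0.5, 0.0, 0.0, 0.0])
K_true = np.zeros((4, 4))
K_true[0, 1] = K_true[1, 0] = -1.0
F = D_true @ Xt @ Lt + K_true @ Xt  # consistent by construction

# Conservative step size (an assumption): the squared operator norm of the
# stacked linear map is at most 2 * (||A||_2^2 + ||Xt||_2^2).
A = Xt @ Lt
mu = 1.0 / (2.0 * (np.linalg.norm(A, 2) ** 2 + np.linalg.norm(Xt, 2) ** 2))

D, K = gi_update(Xt, Lt, F, mu, steps=2000)
residual = np.linalg.norm(F - D @ Xt @ Lt - K @ Xt)
```

The computed pair need not equal `D_true` and `K_true`: the equation has many symmetric solutions, and a zero start is intended to select the one of smallest Frobenius norm.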

Theorem 1

Suppose that Eq. (8) has a unique symmetric solution \((\tilde{D}^{*}, \tilde{K}^{*})\). If we choose the convergence factor as

then the sequences \(\{\tilde{D}_{s}\}\) and \(\{\tilde{K}_{s}\}\) generated by (12) and (13) satisfy

for an arbitrary initial matrix pair \(( \tilde{D}_{0}, \tilde{K}_{0})\) with \(\tilde{D}_{0}, \tilde{K}_{0} \in \mathbf{SR}^{n \times n}\).

Proof

Define the error matrices \(\tilde{D}_{s}^{*}\) and \(\tilde {K}_{s}^{*}\) as

Using (12), (13) and (10), we have

Let

By (16) and the symmetry of \(\tilde{D}_{s}^{*}\), \(s=0,1,\ldots\), we obtain

Observe that

Thus, it follows from (18) that

Similarly, by (17) we can obtain

Note that

Therefore, from (19) and (20) we have

If the convergence factor \(\mu\) is chosen to satisfy \(0<\mu<\mu_{0}\), then inequality (21) implies that

or

it follows that

or equivalently,

Since the solution of Eq. (8) is assumed to be unique, we conclude that \(\tilde{D}_{s}^{*} \rightarrow0\) and \(\tilde {K}_{s}^{*}\rightarrow0\) as \(s \rightarrow\infty\). This proves Theorem 1. □

Now, assume that \(J \in\mathbf{R}^{n \times p}\) is an arbitrary matrix; then we have

It is obvious that if we choose

then all \(\tilde{D}_{s}\) and \(\tilde{K}_{s}\) generated by (12) and (13) satisfy

It follows from Lemma 2 and Theorem 1 that if we choose the initial symmetric matrix pair by (22), then the iterative solution pair \((\tilde{D}_{s}, \tilde{K}_{s})\) obtained by the gradient iterative algorithm (12), (13) and (14) converges to the unique minimum Frobenius norm symmetric solution pair \((\tilde{D}^{*}, \tilde{K}^{*})\). Summarizing the above discussion, we have proved the following result.

Theorem 2

Suppose that condition (14) is satisfied. If we choose the initial symmetric matrices by (22), where \(J\) is an arbitrary matrix, or in particular \(\tilde {D}_{0}=0\) and \(\tilde{K}_{0}=0\), then the iterative solution pair \((\tilde{D}_{s}, \tilde{K}_{s})\) obtained by the gradient iterative algorithm (12) and (13) converges to the unique minimum Frobenius norm symmetric solution pair \((\tilde{D}^{*}, \tilde{K}^{*})\) of Eq. (8), and the unique solution of Problem 2 is then given by (9).

3 Numerical examples

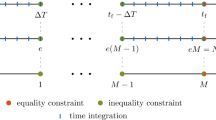

In this section, we give two numerical examples to illustrate our results. The tests are performed using MATLAB 6.5. The iteration stops when the relative residual satisfies \(\delta_{s}=\frac{\|F-\tilde{D}_{s}\tilde{X}\tilde{\varLambda}-\tilde {K}_{s}\tilde{X}\|}{\|M_{a}\tilde{X}\tilde{\varLambda}^{2}\| +\|\hat{D}\tilde{X}\tilde{\varLambda}\|+\|\hat{K}\tilde{X}\|} \leq1.0\times10^{-5}\).
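The stopping criterion can be sketched as a small function. Two points are assumptions made here for illustration: the right-hand side is taken as \(F=-(M_{a}\tilde{X}\tilde{\varLambda}^{2}+D_{a}\tilde{X}\tilde{\varLambda}+K_{a}\tilde{X})\), and the matrices \(\hat{D}\), \(\hat{K}\) in the denominator are taken as the current updates \(D_{a}+\tilde{D}_{s}\) and \(K_{a}+\tilde{K}_{s}\). The function name and the test data are hypothetical.

```python
import numpy as np

def relative_residual(Ma, Da, Ka, Dt, Kt, Xt, Lt):
    """Relative residual delta_s for the current corrections Dt, Kt."""
    # Assumed right-hand side: F = -(Ma Xt Lt^2 + Da Xt Lt + Ka Xt).
    F = -(Ma @ Xt @ Lt @ Lt + Da @ Xt @ Lt + Ka @ Xt)
    Dh, Kh = Da + Dt, Ka + Kt  # assumed meaning of D-hat and K-hat
    num = np.linalg.norm(F - Dt @ Xt @ Lt - Kt @ Xt)  # Frobenius norm
    den = (np.linalg.norm(Ma @ Xt @ Lt @ Lt)
           + np.linalg.norm(Dh @ Xt @ Lt)
           + np.linalg.norm(Kh @ Xt))
    return num / den

# Hypothetical 2x2 data used only to exercise the function.
I2 = np.eye(2)
Z2 = np.zeros((2, 2))
Lt = np.array([[-1.0, 2.0], [-2.0, -1.0]])

delta_start = relative_residual(I2, Z2, Z2, Z2, Z2, I2, Lt)  # no correction yet
K_exact = -(Lt @ Lt)  # correction that satisfies the equation exactly here
delta_exact = relative_residual(I2, Z2, Z2, Z2, K_exact, I2, Lt)
```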

Example 1

[28]

Consider an analytical five-degree-of-freedom system with mass, stiffness and damping matrices given by \(M_{a}=\mbox{diag}\{1,2,5,4,3\}\), \(K_{a}\) and \(D_{a}\), where \(K_{a}=[k_{aij}]_{5 \times 5}\) and \(D_{a}=[d_{aij}]_{5 \times 5}\) are real-valued symmetric tridiagonal matrices with \(k_{a11}=100\), \(k_{a12}=-20\), \(k_{a22}=120\), \(k_{a23}=-35\), \(k_{a33}=80\), \(k_{a34}=-12\), \(k_{a44}=95\), \(k_{a45}=-40\), \(k_{a55}=124\); \(d_{a11}=11\), \(d_{a12}=-2\), \(d_{a22}=14\), \(d_{a23}=-3.5\), \(d_{a33}=13\), \(d_{a34}=-1.2\), \(d_{a44}=13.5\), \(d_{a45}=-4\), \(d_{a55}=15.4\).

The model used to simulate the consistent experimental data is given by \(M=M_{a}\), \(D=D_{a}\), and \(K=[k_{ij}] \in\mathbf{R}^{5 \times 5}\), where \(K\) is a symmetric tridiagonal matrix with \(k_{11}=100\), \(k_{12}=-20\), \(k_{22}=120\), \(k_{23}=-35\), \(k_{33}=70\), \(k_{34}=-12\), \(k_{44}=95\), \(k_{45}=-40\), \(k_{55}=124\). Note that the only difference between \(K_{a}\) and \(K\) is in the (3,3) element. The eigensolution of the experimental model is used to create the experimental modal data. It is assumed that the measured eigenvalue and eigenvector matrices \(\varLambda\) and \(X\) are given by
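The data of Example 1 can be set up as follows. The matrix entries are those listed above; the helper `tridiag` and the companion linearization used to obtain the eigenpairs of the experimental model are illustrative assumptions (the paper's tests were run in MATLAB), and no particular eigenvalues are claimed here.

```python
import numpy as np

def tridiag(diag, off):
    """Symmetric tridiagonal matrix from its diagonal and first off-diagonal."""
    return np.diag(diag) + np.diag(off, 1) + np.diag(off, -1)

Ma = np.diag([1.0, 2.0, 5.0, 4.0, 3.0])
Ka = tridiag([100.0, 120.0, 80.0, 95.0, 124.0], [-20.0, -35.0, -12.0, -40.0])
Da = tridiag([11.0, 14.0, 13.0, 13.5, 15.4], [-2.0, -3.5, -1.2, -4.0])

# Experimental model: identical except for the (3,3) stiffness entry.
K = Ka.copy()
K[2, 2] = 70.0

# Eigenpairs of (lam^2 Ma + lam Da + K) x = 0 via a companion form.
n = 5
A = np.block([[np.zeros((n, n)), np.eye(n)],
              [-np.linalg.solve(Ma, K), -np.linalg.solve(Ma, Da)]])
lam, Z = np.linalg.eig(A)
X = Z[:n, :]  # the top block of each eigenvector is x
```

A subset of the columns of `X`, with the matching entries of `lam`, can then play the role of the measured data \(X\) and \(\varLambda\).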

and

Taking \(\tilde{D}_{0}=0\), \(\tilde{K}_{0}=0\) and \(\mu=\frac{1}{23}\), we apply the GI algorithm in (12), (13) to compute \((\tilde{D}_{s}, \tilde{K}_{s})\). The relative residual \(\delta_{s}\) versus the iteration number \(s\) is shown in Fig. 1. From Fig. 1, it is clear that the relative residual \(\delta_{s}\) decreases and approaches zero as the iteration number \(s\) increases. This indicates that the proposed algorithm is effective and convergent. After 100 iteration steps, we get the minimum Frobenius norm solution \(( {\tilde{D}}^{*}, { \tilde{K} }^{*})\) of Eq. (8) as follows:

with corresponding relative residual

Therefore, by (9), the updated damping and stiffness matrices are given by

Inspection shows that although all the elements of the damping and stiffness matrices have been adjusted, the algorithm has concentrated the major change in the proper location.

Example 2

Consider a model updating problem. The original model is the statically condensed oil rig model \((M_{a}, D_{a}, K_{a})\) represented by the triplet BCSSTRUC1 in the Harwell-Boeing collection [29]. In this model, \(M_{a}\) and \(K_{a} \in\mathbf{R}^{66 \times 66}\) are symmetric and positive definite, and \(D_{a}=1.55I_{66}\). There are 132 eigenpairs.

The measured data for the experiment are simulated by reducing the stiffness entry \(K_{a}(1,1)=1990.33\) to \(K(1,1)=1600\). That is, the only difference between \(K_{a}\) and \(K\) is in the (1,1) element. Assume that the measured eigenvalues are \(\lambda_{1}=-34.62+574.48i\), \(\lambda_{2}=-34.62-574.48i\), \(\lambda_{3}=-12.865+465.35i\) and \(\lambda_{4}=-12.865-465.35i\), and that the corresponding eigenvectors are the same as those of the experimental model \((M_{a}, D_{a}, K)\). Applying Theorem 2 and taking \(\tilde{D}_{0}=0\), \(\tilde{K}_{0}=0\) and \(\mu=1.5106\times10^{-6}\), after 191 iteration steps we get the minimum Frobenius norm solution \(( \tilde{D}_{191}, \tilde{K}_{191})\) of Eq. (8). The relative residual is estimated by

Observe that the prescribed eigenvalues and eigenvectors have been embedded in the new model \(M_{a}\tilde{X}\tilde{\varLambda}^{2}+\hat{D} \tilde{X}\tilde{\varLambda}+\hat{K} \tilde{X}=0 \), where \(\hat{D}=D_{a}+ \tilde{D}_{191}\), \(\hat{K}=K_{a}+ \tilde{K}_{191}\).

4 Concluding remarks

A gradient based iterative algorithm has been developed to incorporate measured experimental modal data into an analytical finite element model with nonproportional damping, such that the adjusted finite element model more closely matches the experimental results. The approach is demonstrated by two numerical examples and reasonable results are produced.

References

[1] Tisseur F, Meerbergen K (2001) The quadratic eigenvalue problem. SIAM Rev 43:235–286

[2] Ding F, Chen T (2005) Gradient based iterative algorithms for solving a class of matrix equations. IEEE Trans Autom Control 50:1216–1221

[3] Ding F, Chen T (2006) On iterative solutions of general coupled matrix equations. SIAM J Control Optim 44:2269–2284

[4] Ding F, Liu PX, Ding J (2008) Iterative solutions of the generalized Sylvester matrix equations by using the hierarchical identification principle. Appl Math Comput 197:41–50

[5] Yuan Y, Liu H (2012) An iterative updating method for undamped structural systems. Meccanica 47:699–706

[6] Baruch M (1978) Optimization procedure to correct stiffness and flexibility matrices using vibration tests. AIAA J 16:1208–1210

[7] Baruch M, Bar-Itzhack IY (1978) Optimal weighted orthogonalization of measured modes. AIAA J 16:346–351

[8] Berman A (1979) Mass matrix correction using an incomplete set of measured modes. AIAA J 17:1147–1148

[9] Berman A, Nagy EJ (1983) Improvement of a large analytical model using test data. AIAA J 21:1168–1173

[10] Wei FS (1980) Stiffness matrix correction from incomplete test data. AIAA J 18:1274–1275

[11] Wei FS (1990) Mass and stiffness interaction effects in analytical model modification. AIAA J 28:1686–1688

[12] Wei FS (1990) Analytical dynamic model improvement using vibration test data. AIAA J 28:174–176

[13] Yang YB, Chen YJ (2009) A new direct method for updating structural models based on measured modal data. Eng Struct 31:32–42

[14] Yuan Y (2008) A model updating method for undamped structural systems. J Comput Appl Math 219:294–301

[15] Yuan Y (2009) A symmetric inverse eigenvalue problem in structural dynamic model updating. Appl Math Comput 213:516–521

[16] Friswell MI, Mottershead JE (1995) Finite element model updating in structural dynamics. Kluwer Academic, Dordrecht

[17] Friswell MI, Inman DJ, Pilkey DF (1998) The direct updating of damping and stiffness matrices. AIAA J 36:491–493

[18] Pilkey DF (1998) Computation of a damping matrix for finite element model updating. PhD Thesis, Dept of Engineering Mechanics, Virginia Polytechnic Institute and State University

[19] Kuo YC, Lin WW, Xu SF (2006) New methods for finite element model updating problems. AIAA J 44:1310–1316

[20] Chu DL, Chu M, Lin WW (2009) Quadratic model updating with symmetry, positive definiteness, and no spill-over. SIAM J Matrix Anal Appl 31:546–564

[21] Yuan Y (2008) An inverse quadratic eigenvalue problem for damped structural systems. In: Mathematical problems in engineering. Article ID 730358, 9 pp

[22] Yuan Y, Dai H (2011) On a class of inverse quadratic eigenvalue problem. J Comput Appl Math 235:2662–2669

[23] Ding J, Ding F (2008) The residual based extended least squares identification method for dual-rate systems. Comput Math Appl 56:1479–1487

[24] Han LL, Ding F (2009) Multi-innovation stochastic gradient algorithms for multi-input multi-output systems. Digit Signal Process 19:545–554

[25] Dehghan M, Hajarian M (2010) An iterative method for solving the generalized coupled Sylvester matrix equations over generalized bisymmetric matrices. Appl Math Model 34:639–654

[26] Ding F, Chen T (2005) Iterative least squares solutions of coupled Sylvester matrix equations. Syst Control Lett 54:95–107

[27] Ben-Israel A, Greville TNE (2003) Generalized inverses. Theory and applications, 2nd edn. Springer, New York

[28] Zimmerman D, Widengren M (1990) Correcting finite element models using a symmetric eigenstructure assignment technique. AIAA J 28:1670–1676

[29] Boisvert R, Pozo R, Remington K, Barrett R, Dongarra JJ. http://math.nist.gov/MatrixMarket

Acknowledgements

The authors would like to express their heartfelt thanks to the anonymous reviewers and Professor Alberto Carpinteri (Editor-in-Chief) for their constructive criticisms and helpful suggestions which substantially improved the quality of this paper.

Additional information

Research supported by the National Natural Science Foundation of China (No. 10926130).

Cite this article

Yuan, Y., Liu, H. A gradient based iterative algorithm for solving structural dynamics model updating problems. Meccanica 48, 2245–2253 (2013). https://doi.org/10.1007/s11012-013-9741-8

DOI: https://doi.org/10.1007/s11012-013-9741-8