Abstract

Studies on incentives that stimulate researcher engagement in knowledge valorisation have primarily focused on incentives for economic output, and it remains unclear how universities configure incentives for a broad societal impact of knowledge. Therefore, this article explores the presence and design of incentives for the full range of knowledge valorisation activities by employing a bottom-up, mixed-methods design. In 17 semi-structured interviews with representatives from highly ranked European universities, 11 distinct incentives were identified for valorisation activities with an academic, civil society, entrepreneurial or state-governmental orientation. Subsequently, a quantitative survey was conducted (n = 48). Perceived effectiveness did not directly correspond to presence and desirability, indicating that additional motives play a role in implementing incentives, including the management of conflict of interest situations. For non-scalable (hour-based) activities, broader bandwidths of allocated percentages were employed, income was more often attributed on a case-by-case basis and more conditions to limit conflicts of interest were in place. Interestingly, this study found a negative correlation between the number of such restricting conditions in place for consultancy and the university’s overall success in industry income. A flowchart is presented that university management may use to align the selection and design of their incentives with their motives.


1 Introduction

For over 15 years, research institutes have placed the broad societal impact of knowledge more prominently on the agenda as a means to contribute to the European knowledge economy (Dale 2010). However, they still have not succeeded in overcoming the European knowledge paradox (Vilarinho 2015). The mismatch this paradox describes, between excellent scientific insights and limited innovation outcomes, is generally considered best addressed by improving knowledge transfer and knowledge valorisation processes (Van Vught 2009). Whereas knowledge transfer highlights the formal transfer of academic knowledge to parties in the commercial sector for economic benefit, knowledge valorisation takes a broader scope and looks at the creation of societal value from knowledge by translating research findings into innovative products, services, processes and/or business activities (Benneworth and Jongbloed 2010; De Jong 2015; Hladchenko 2016). This broader definition includes the creation of spin-off companies and the filing of patent applications on the one hand, and the writing of books and the development of guidelines for policy improvements on the other. Regardless of the broad nature of valorisation activities and despite best efforts to improve their accompanying processes, the active engagement of researchers in these processes continues to be a rate-limiting factor (Abreu and Grinevich 2013; Audretsch and Aldridge 2012; Olmos-Penuela et al. 2015).

As a first step to support knowledge valorisation, almost all European countries have adopted a Bayh-Dole-like regime in which universities own the intellectual property (IP) generated at their institutes and inventive researchers in turn receive a share of the resulting revenues (Swamidass and Vulasa 2008). Under this regime, universities act as principals who assign their faculty members the task of contributing not only to academic knowledge generation but also to the development of valuable knowledge that can be used within a broader societal context (Braun and Guston 2003). From the perspective of faculty members, this task is seen as ‘additional’ and sometimes as conflicting with their internal preference for pure academic science (D’Este and Perkmann 2011; De Jong et al. 2016). Consequently, some academics violate ownership policies by bypassing technology transfer offices (Pinto and Fernández-Esquinas 2016) or by failing to file invention disclosures and patent applications before publishing their findings (Baldini et al. 2007; Markman et al. 2005; Siegel et al. 2003).

Consistent with the principal-agent theory, the misalignment of objectives between universities and researchers can be remedied by providing incentives that reward faculty members for achieving the desired outcomes (Eisenhardt 1988). While there are many intrinsic motivators that stimulate scientists, which cannot be directly influenced by university policy makers (Lam 2011), the importance of extrinsic personal rewards in engaging researchers with knowledge valorisation has been highlighted by numerous studies (Debackere and Veugelers 2005; Derrick and Bryant 2013; Galán-Muros et al. 2015; Geuna and Muscio 2009; Göktepe-Hulten and Mahagaonkar 2010). As a result, universities have implemented a wide variety of incentives for researchers to engage in knowledge transfer activities: revenues from intellectual property rights, royalties, shareholding, bonuses, promotions, etc. (Belenzon and Schankerman 2009; Galán-Muros et al. 2015; Väänänen 2010).

Despite this wide implementation of incentives, there is still little insight into how incentive systems can stimulate economic as well as non-economic, societal impact. Most studies evaluating incentives for knowledge valorisation have focused on incentives aiming to improve the economic output of research (Arqué-Castells et al. 2016; Baldini 2010; Di Gregorio and Shane 2003; Friedman and Silberman 2003; Göktepe-Hulten and Mahagaonkar 2010; Lach and Schankerman 2004; Macho-Stadler and Pérez-Castrillo 2010; Markman et al. 2004; Walter et al. 2013). Well-defined IP policies and licensing contracts could act as an incentive mechanism by reducing information asymmetries and by clarifying the expectations from and benefits for researchers (Jensen and Thursby 2003; Macho-Stadler and Pérez-Castrillo 2010). While some studies found a positive correlation between the presence of written IP policies and patent applications or license agreements (Baldini 2011; Barjak et al. 2013), others could not confirm this correlation (González-Pernía et al. 2013). Regarding the share of revenues passed on to inventors, some studies found that researcher engagement increases when researchers benefit from a higher revenue split (Caldera and Debande 2010; Lach and Schankerman 2004, 2008; Link and Siegel 2005). A more recent study found that the actual revenue split is less important than the percentage being above a certain threshold (Arqué-Castells et al. 2016). Another study found that over-allocation of income to the department negatively affects licensing income (Friedman and Silberman 2003).

Even fewer studies have looked at differences in the configuration of those incentives across universities. A recent study benchmarking revenue sharing policies in the UK looked at how revenues were distributed among researchers, departments and the central administration but did not differentiate between the types of activities leading to those revenues (Gazzard and Brown 2012). Consequently, the extent to which incentives reward the full range of activities that contribute to a societal impact of knowledge is unclear. Furthermore, only a handful of studies have examined the conditions for managing conflicts of interest that are linked to these incentives. Some studies found that providing rules on how to manage conflicts of interest is positively correlated with the number of invention disclosures, patent applications, licensing income and number of licenses (Barjak et al. 2013; Caldera and Debande 2010), while others found a negative correlation between such conditions and spin-off formation (Muscio et al. 2016). The nature of these conditions, however, remains unclear, and although there is a general consensus that incentives play a role in improving engagement from academics, it is not clear how universities implement incentives in their policies to manage and promote knowledge valorisation (Galán-Muros et al. 2015).

Since knowledge valorisation encompasses many different dimensions, a single focus on the economic dimension neglects other important impacts of research, such as the impact of knowledge on the general public and on societal welfare. Moreover, the lack of insight into the full scope of incentives and their accompanying conditions leaves considerable uncertainty about which policy provides the best results (Walter et al. 2013). This paper addresses this knowledge gap and aims to contribute to the improvement of university policies by gaining insight into how European universities shape their knowledge valorisation incentives. To reach this objective, this study identifies and classifies incentives and their accompanying conditions and evaluates the relation between the design of incentives and the ranking of universities. As opposed to earlier studies focusing on a small set of incentives for economic activities, this study takes an exploratory, bottom-up approach to identify all incentives stimulating engagement in broad valorisation practices. The results contribute to the knowledge valorisation research field by providing insight into the nature, abundance and desirability of different incentives and configurations. Additionally, the study contributes to knowledge valorisation practice by serving as a benchmark and by presenting a flowchart that universities can use to select and design incentives that best fit their strategic focus.

2 Methodology

Although formal incentives for economic activities can mostly be found in institutional policy documents, e.g. guidelines on royalty sharing (Lach and Schankerman 2008), these documents generally neither describe less formal incentives that reward non-economic, societal impact activities nor shed light on the actual practice of awarding incentives. To address this information gap, and in line with previous studies (Davey et al. 2011; Markman et al. 2004), this study took a mixed-methods approach: the incentives and conditions in place were explored through qualitative interviews, and insight into the prevalence of these incentives and conditions was gained via a survey.

To identify all incentives that stimulate a broad societal impact of knowledge, we operationalized the concept of valorisation using a framework that differentiates the communication of research results to different target groups (Hakala and Ylijoki 2001; Mostert et al. 2010; van Ark and Klasen 2007). According to this framework, knowledge production, knowledge exchange and knowledge use can be linked to four target groups (the scientific community, civil society, actors with an entrepreneurial orientation and state-governmental decision-makers) and correspondingly have an impact on knowledge, culture, economy and wellbeing (see Table 1).

2.1 Study population

In line with previous studies, professionals working in technology transfer or similar offices (e.g. innovation or commercialization offices) were used as the primary source of information because they are most likely to have an overview of the different incentives in place at their institution (Davey et al. 2011). The Times Higher Education World University Rankings (THE Rankings) were used to select European public universities. In the THE list 2014–2015, the first 200 universities are each ranked with a single number, allowing for comparison across ranks. Within this set, 85 universities were European. Professionals working in knowledge transfer and valorisation were identified, and their e-mail addresses were collected via their institutional websites.

2.2 Semi-structured interviews

Semi-structured interviews were conducted to explore how incentive systems were implemented in universities across Europe, as the aim was to obtain qualitative data to interpret the design of incentives and the practice of their implementation (Gray 2013). Interview candidates were approached by e-mail, and respondents received the interview questions beforehand. The total number of interviews was determined by saturation of identified incentives. During the interviews, the framework was introduced and the interviewee was asked to highlight which incentives were in place per domain. The semi-structured design allowed for probing on the mechanism for attributing rewards and on further conditions in place. With the permission of the respondents, the interviews were recorded, fully transcribed and independently coded by two researchers using thematic coding (Van den Nieuwboer et al. 2015). Subsequently, the codes were harmonized by consensus to compile a list of incentives with their respective conditions.

2.3 Survey

Based on this overview of incentive systems, a questionnaire was developed to quantitatively collect data on the incentive systems in place, their desirability, their perceived effectiveness and comments for improvement. The voluntary, open survey was pilot tested, after which the final survey was created and distributed through the online survey program SurveyMonkey.

Initially, 179 e-mail invitations were sent and delivered to representatives from the 85 institutions. Another 58 invitations were sent but could not be delivered or were answered with an out-of-office reply. If an out-of-office reply referred to one or more colleagues, the invitation was forwarded to these persons, leading to an additional 60 recipients. In sum, 239 invitations were sent and delivered to representatives and not answered with a direct out-of-office reply during the time of the study. Reminders were sent after 2 and again after 4 weeks to increase the response rate. Respondents’ IP addresses were used to identify potential duplicate entries (Eysenbach 2004). Duplicate database entries from the same IP address or from respondents representing the same institution were eliminated before analysis. In case of duplicate entries, only the most complete entry (number of questions answered) or the one filled out by the most senior professional (years of experience in knowledge valorisation) was included. Respondents who did not fill out the name of their host institution were excluded.

The anonymous survey started with an informed consent page and an indication that the survey would take about 15 min to complete. Next, respondents were asked to provide demographic data and subsequently to indicate whether the incentives identified during the interviews were in place, whether they thought these incentives should be in place and what the accompanying conditions were. Furthermore, respondents were asked per incentive whether they would recommend any improvements, and they were allowed to suggest new incentives that should be in place. Finally, respondents were asked to rank their three most effective incentives (rank 1 being most effective and carrying a weight of 3). In this last question, all different types of revenue sharing were combined to avoid a bias from the respondent’s preference for specific channels.

2.4 Statistical analyses

For sliding scales, the percentage attributed to the first €100,000 was used in the analysis. When percentages were determined on a case-by-case basis, the starting point for negotiations was used, if provided. Percentages for answer options were calculated based on the total number of respondents who answered that specific question. The significance threshold was set at .05.

To calculate the effectiveness of incentives, for each incentive the number of times it was ranked at each position was multiplied by the respective weight. The sum of the weighted scores reflects the total weighted score of the incentive. The total weighted scores were rescaled to a range from 1 to 100 to facilitate interpretation (Van den Nieuwboer et al. 2015; Weenen et al. 2013), according to the following formula (WRI, weighted ranking incentive; HRI, highest rated incentive; n, number of times; R1, rank 1; R2, rank 2; R3, rank 3):

$$\mathrm{WRI} = \frac{3\,n_{R1} + 2\,n_{R2} + 1\,n_{R3}}{\mathrm{HRI}} \times 100$$
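
A minimal Python sketch of the weighting and rescaling step, assuming that HRI denotes the total weighted score of the highest rated incentive, so that this incentive receives a score of 100 (consistent with career progression scoring 100 in the results). The function names and the example counts are hypothetical and are not the study’s data.

```python
from typing import Dict, Tuple

# Weight per rank position: rank 1 -> 3 points, rank 2 -> 2, rank 3 -> 1.
RANK_WEIGHTS = (3, 2, 1)


def total_weighted_score(counts: Tuple[int, int, int]) -> int:
    """Sum over positions of (times ranked at that position) x (its weight)."""
    return sum(weight * n for weight, n in zip(RANK_WEIGHTS, counts))


def relative_effectiveness(rank_counts: Dict[str, Tuple[int, int, int]]) -> Dict[str, int]:
    """Rescale total weighted scores so that the highest rated incentive scores 100."""
    scores = {name: total_weighted_score(c) for name, c in rank_counts.items()}
    top = max(scores.values())  # assumed HRI: score of the highest rated incentive
    if top == 0:
        raise ValueError("no incentive was ranked by any respondent")
    return {name: round(100 * s / top) for name, s in scores.items()}


# Hypothetical counts (n_R1, n_R2, n_R3) per incentive.
example = {
    "career progression": (10, 5, 2),
    "revenue sharing": (6, 6, 3),
    "bonuses": (0, 1, 2),
}
print(relative_effectiveness(example))
```

Dividing by the top score, rather than min-max scaling, keeps the ratios between incentives intact, so a score of 50 means half the weighted votes of the highest rated incentive.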

To analyze the difference between presence and desirability of incentives, the McNemar test for binary matched-pairs data was used (Fagerland et al. 2013).
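
Because each university reports both whether an incentive is present and whether it is desired, the two answers form matched binary pairs, and only the discordant pairs (present but not desired, or desired but not present) carry information about a difference. The cited comparison by Fagerland et al. (2013) discusses exact conditional, mid-p and asymptotic variants; which variant this study used is not stated, so the sketch below computes both the exact and the mid-p two-sided p-values. The function name and example counts are hypothetical.

```python
from math import comb
from typing import Tuple


def mcnemar_exact(b: int, c: int) -> Tuple[float, float]:
    """Two-sided exact and mid-p McNemar tests for paired binary data.

    b: pairs 'present but not desired'; c: pairs 'desired but not present'.
    Concordant pairs do not enter the test.
    """
    n = b + c
    if n == 0:
        return 1.0, 1.0
    k = min(b, c)

    def pmf(i: int) -> float:
        # Under H0 the discordant pairs split 50/50: X ~ Binomial(n, 0.5).
        return comb(n, i) / 2 ** n

    lower_tail = sum(pmf(i) for i in range(k + 1))
    exact_p = min(1.0, 2 * lower_tail)
    mid_p = min(1.0, 2 * (lower_tail - 0.5 * pmf(k)))
    return exact_p, mid_p


# Hypothetical: 0 universities have the incentive without desiring it,
# 7 desire it without having it.
print(mcnemar_exact(0, 7))
```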

Because the percentage attributed to the researcher was not normally distributed for all incentives (e.g. D(42) = 0.8, p < .001, Shapiro–Wilk test for patents) and Levene’s test showed that variances were not homogeneous (F(6,111) = 4.0, p < .01), a Kruskal–Wallis test with post hoc Mann–Whitney tests was conducted to test differences in attributed percentages. For these post hoc tests, a Bonferroni correction was applied to the significance threshold to correct for the number of tests. Chi-square tests were conducted to analyze differences in formality per incentive. Adjusted standardized residuals (ASR) were calculated to identify which cells (|ASR| ≥ 2) contributed to statistically significant omnibus chi-square test results (Sharpe 2015). The same test was used to analyze differences in income direction and in the presence of limits and caps. The comments for improvement made by respondents on the survey were thematically coded, and differences between types of comments were analyzed with a nonparametric binomial test.
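
The post hoc steps above can be sketched in a few lines. The adjusted standardized residual of a cell compares its observed count with the count expected under independence, scaled by that cell’s estimated standard error; the Bonferroni correction simply divides the threshold by the number of tests. This is a standard-library sketch under the assumption of a non-degenerate table (no empty rows or columns); the omnibus tests themselves are available in SciPy (e.g. `scipy.stats.kruskal`, `scipy.stats.mannwhitneyu`, `scipy.stats.chi2_contingency`). The example table is hypothetical.

```python
from math import sqrt
from typing import List


def adjusted_standardized_residuals(table: List[List[int]]) -> List[List[float]]:
    """Adjusted standardized residuals for an r x c contingency table.

    ASR_ij = (O_ij - E_ij) / sqrt(E_ij * (1 - row_i / N) * (1 - col_j / N)),
    with E_ij = row_i * col_j / N. Cells with |ASR| >= 2 are the ones that
    contribute notably to a significant omnibus test.
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    residuals = []
    for i, row in enumerate(table):
        out = []
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            variance = expected * (1 - row_totals[i] / n) * (1 - col_totals[j] / n)
            out.append((observed - expected) / sqrt(variance))
        residuals.append(out)
    return residuals


def bonferroni_threshold(alpha: float, n_tests: int) -> float:
    """Per-test significance threshold after Bonferroni correction."""
    return alpha / n_tests


# Hypothetical table: rows = two incentives, columns = (formal, informal).
print(adjusted_standardized_residuals([[10, 5], [2, 13]]))
print(bonferroni_threshold(0.05, 6))
```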

The correlations of controlling mechanisms, the researcher-attributed percentage and the number of formal incentives in place with the rank and industry income of universities were evaluated with the nonparametric Kendall’s tau test. For controlling mechanisms, the number of restricting conditions for consultancy was used, because all types of restricting conditions were described for this incentive and because, of the incentives rewarding engagement in non-scalable activities, it was the one most often in place. For the researcher-attributed percentage, the overall most prevalent incentive, revenue sharing for patents, was selected.
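
Kendall’s tau-b counts concordant and discordant pairs of universities and corrects for ties, which matter here because counts of restricting conditions take only a few distinct values. SciPy’s `scipy.stats.kendalltau` returns tau-b together with a p-value; the standard-library sketch below shows only how the statistic itself is formed. Note that the THE overall position uses 1 = best while the industry income score uses 100 = best, so a negative tau points in opposite directions for the two outcomes. The example data are hypothetical.

```python
from itertools import combinations
from math import sqrt
from typing import Sequence


def kendall_tau_b(x: Sequence[float], y: Sequence[float]) -> float:
    """Kendall's tau-b rank correlation, adjusted for tied values.

    tau_b = (C - D) / sqrt((n0 - T_x) * (n0 - T_y)), where C and D count
    concordant and discordant pairs, n0 = n(n-1)/2 and T_x, T_y count the
    pairs tied in x and in y, respectively.
    """
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have equal length of at least 2")
    concordant = discordant = tied_x = tied_y = 0
    for i, j in combinations(range(len(x)), 2):
        dx, dy = x[i] - x[j], y[i] - y[j]
        if dx == 0:
            tied_x += 1
        if dy == 0:
            tied_y += 1
        if dx != 0 and dy != 0:
            if (dx > 0) == (dy > 0):
                concordant += 1
            else:
                discordant += 1
    n0 = len(x) * (len(x) - 1) // 2
    denominator = sqrt((n0 - tied_x) * (n0 - tied_y))
    if denominator == 0:
        raise ValueError("tau-b is undefined when one variable is constant")
    return (concordant - discordant) / denominator


# Hypothetical: restricting conditions for consultancy vs. industry income score.
conditions = [0, 1, 1, 2, 3, 4]
industry_income = [95, 80, 88, 60, 55, 40]
print(round(kendall_tau_b(conditions, industry_income), 3))
```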

3 Results

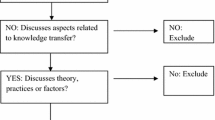

Seventeen interviews were conducted, and saturation of identified incentives was reached after nine interviews (see Fig. 1). Of the 239 representatives who received the invitation to participate during the time of the study, 78 initial responses were collected from 71 unique IP addresses. This participation rate of 30% is well within the norm (36 ± 19%) for organizational analyses (Baruch and Holtom 2008) and as high as can be expected given the extensive surveying to which knowledge valorisation professionals have been subjected. After data cleaning, responses from participants representing 48 unique universities were included, giving a combined institutional response rate of 56%. Data cleaning included the elimination of data from 14 respondents who started but did not complete the survey (i.e. a completion rate of 80%), 6 respondents who represented the same institution as another respondent and 3 respondents who did not include the name of their host institution. There was no selection bias per country sampled (see Supplemental Material, Table A).
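
The response figures can be reconciled with simple arithmetic. This sketch assumes, as our reading of the text rather than something it states, that the 30% participation rate uses the 71 unique IP addresses as numerator and that the 80% completion rate uses them as the base; the variable names are illustrative.

```python
# Counts as reported in the text.
delivered_initial = 179      # invitations delivered at the first attempt
forwarded = 60               # colleagues reached via out-of-office referrals
invited = delivered_initial + forwarded

unique_ips = 71              # unique IP addresses among the 78 initial responses
incomplete = 14              # started but did not complete the survey
same_institution = 6         # institution already represented by another respondent
no_institution = 3           # host institution not named
eligible_institutions = 85   # European universities in the THE top 200

participation_rate = unique_ips / invited
completion_rate = (unique_ips - incomplete) / unique_ips
included = unique_ips - incomplete - same_institution - no_institution
institutional_rate = included / eligible_institutions

print(f"invited={invited}, included={included}")
print(f"participation {participation_rate:.0%}, completion {completion_rate:.0%}, "
      f"institutional {institutional_rate:.0%}")
```

Under this reading, the exclusions in the data-cleaning step account exactly for the difference between 71 unique responses and the 48 included universities.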

Career progression was considered the most effective incentive (relative rank of 100). With relative ranks of 74 and 58, respectively, revenue sharing and attributing university resources were considered moderately effective. Equity sharing (14), prizes (13) and bonuses (13) were rarely ranked among the most effective incentives (see Fig. 2).

Five incentives were implemented in more than half of the universities: revenue sharing from patents (98%), revenue sharing from other Intellectual Property Rights (IPRs; 73%), equity sharing (73%), prizes (64%) and revenue sharing from consultancy (61%, see Fig. 2). The least common incentive was revenue sharing from contract education, which was in place in fewer than 1 in 4 institutions sampled.

Overall, the desirability of each incentive was at least equal to (in the case of revenue sharing from patents) or higher than (for all other incentives) its actual implementation. The difference between presence and desirability was statistically significant for three individual incentives: revenue sharing for university-launched products (p = .03), attributing university resources (p = .008) and revenue sharing for consultancy (p = .02), see Fig. 2. The least desired incentives were revenue sharing for contract education (38%), revenue sharing for contract research (50%) and attributing bonuses (50%). In line with their relative abundance but in contrast to their limited perceived effectiveness, equity sharing and attributing prizes were among the most desired incentives (82 and 77%, respectively).

The average percentage attributed to researchers was 42%, with the lowest percentage given for contract research (average = 29%) and the highest for consultancy (average = 76%), see Fig. 3. The percentage attributed for consultancy activities differed statistically significantly from the other attributed percentages (H(6) = 21, p < .01, Kruskal–Wallis test; Supplemental Material, Table B). As shown in Fig. 3, the bandwidths of percentages attributed to incentivize activities based on making a margin on hours (non-scalable activities) are much broader than those for activities based on making a margin on knowledge (scalable activities).

The bandwidth of percentages attributed to the researcher is much broader for activities that make a margin on hours (non-scalable activities) than for activities that make a margin on knowledge (scalable activities). The average attributed percentage is significantly higher for consultancy than for all other incentives. The figure shows the percentages attributed for the first €100,000 in case of a sliding scale or, when provided, the starting point for negotiations when the percentages were determined on a case-by-case basis. Awards/prizes, resource sharing and bonuses are excluded because they are not based on percentages.

In many cases the percentages were not set in advance (‘informal’ incentives), and the prevalence of formally set percentages differed per incentive type (p < .0001, Fisher’s exact test (FET); see Fig. 4). In particular, patents (ASR = 3.7) and other IPRs (ASR = 2.1) were significantly more likely to be organized formally, whereas equity (ASR = −5.4) was less likely to be organized formally.

In general, income resulting from scalable activities was more often paid out in private and less often within the university context (e.g. on a personal account for work-related expenses or for use in further research) than income resulting from non-scalable activities, see Table 2. The prevalence of private payouts was significantly low for contract research (36%, ASR = −2.9) and high for patents (84%, ASR = 2.0), p = .03, FET. For contract research, a payout on a personal account for work-related expenses was possible in 55% of cases (ASR = 2.1), but for patents in only 16% (ASR = −2.2), p = .03, FET. In turn, the use of revenues for further research was highly likely for contract research (64%, ASR = 2.6) and contract education (75%, ASR = 2.0) and less likely for patents (19%, ASR = −2.0), p = .003, FET (see Table 2). The proportion of cases in which the researcher could decide how the revenues were allocated averaged 46%, with no statistically significant differences between incentives.

Restrictions on the incentives took the form of limits on the maximum amount of time a researcher can spend on these activities and caps on the maximum amount of money a researcher can earn from them. Time limits were only described for non-scalable activities, and their prevalence differed statistically significantly between incentives (p < .001, FET, see Table 2). Whereas a time limit was in place in the majority of universities with incentives for consultancy (88%, ASR = 3.6), only 20% of institutions with incentives for contract research had a time limit in place for these activities (ASR = −4.3). Time limits were also common for contract education (80%), but this difference was non-significant (ASR = 0.8). Caps were found for all revenue-sharing incentives, and although more institutions generally implemented caps for non-scalable than for scalable activities, these differences were statistically non-significant (p = .2, FET, Table 2). Other limiting conditions were the need to disclose income, the need to disclose activities, a limitation on the transfer of university intellectual property or the use of university assets, and the need to ask for permission for specific consultancy activities.

Comments for improvement were categorized as relating either to rules and regulations or to rewards. The large majority of comments on rules and regulations (25 comments, 86%, p < .001) argued for more rules, namely more clarity (8 comments), increased control on conflicts of interest or prizing (10 comments), uniformity of rules within the institution or country (3 comments) or improved enforcement (4 comments). Only four comments argued for limiting the rules and regulations, advocating a more laissez-faire approach to the activities. There was no statistically significant difference between comments arguing for more rewards (11; more revenues or resources attributed, faster return to the inventor, inclusion of contributors besides inventors, inclusion of career progression, broader use within the organization) and those arguing for fewer rewards (4; setting a sliding scale, reducing the attributed percentage, narrowing the types of activities that are rewarded), p = .12.
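
The binomial comparisons of comment counts can be checked with an exact test. This sketch assumes a two-sided test against an expected proportion of 0.5 (an equal split between the two types of comment), which the text does not state explicitly; under that assumption it yields values consistent with the reported p < .001 and p = .12. The function name is illustrative.

```python
from math import comb


def binomial_two_sided(k: int, n: int) -> float:
    """Exact two-sided binomial test of k successes in n trials against p = 0.5."""
    # With p = 0.5 the distribution is symmetric, so the two-sided p-value is
    # twice the probability of a split at least as extreme as the observed one.
    extreme = max(k, n - k)
    upper_tail = sum(comb(n, i) for i in range(extreme, n + 1)) / 2 ** n
    return min(1.0, 2 * upper_tail)


# Counts taken from the text above.
more_rules, fewer_rules = 25, 4
more_rewards, fewer_rewards = 11, 4

print(f"share arguing for more rules: {more_rules / (more_rules + fewer_rules):.0%}")
print(f"rules: p = {binomial_two_sided(more_rules, more_rules + fewer_rules):.5f}")
print(f"rewards: p = {binomial_two_sided(more_rewards, more_rewards + fewer_rewards):.3f}")
```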

The researcher-attributed percentage of patent revenues did not correlate with the position of the university on the THE ranking or with the industry income ranking of the university (τ = −.01, p = .91 and τ = .01, p = .39, Kendall’s tau, respectively; see Fig. 5a, b). The same holds true for the correlation between these two THE ranking positions and the number of formal incentives in place at the surveyed institutions (τ = .15, p = .23 and τ = .03, p = .85, Kendall’s tau, for position and industry income, respectively; see Fig. 5c, d). The number of restricting conditions in place for consultancy was negatively correlated with the position on the THE ranking in terms of Industry Income, although this correlation was statistically significant only at the α = .10 level (Fig. 5f; 100 = best; τ = −.30, p = .06, Kendall’s tau). These conditions did not correlate with the final position on the THE ranking (Fig. 5e; 1 = best; τ = .11, p = .50, Kendall’s tau).

Negative correlation between the number of restricting conditions in place for consultancy activities and the position on the Industry Income Ranking from the THE Ranking 2014 (f, 100 = best). No such correlations were found for the percentage of patent revenues shared with the researcher or the number of formal incentives in place (b, d). Furthermore, the percentage of patent revenues shared with the researcher, the number of formal incentives in place and the number of restricting conditions did not correlate with the final position on the THE ranking (1 = best; a, c and e, respectively).

4 Discussion

This study provides a benchmark of incentives for knowledge valorisation in top European universities and shows that the presence and desirability of incentives do not directly correspond to their perceived effectiveness. This supports the idea that other motives may play a role when establishing incentives, such as managing conflict of interest situations, distributing income or fostering a university culture of knowledge valorisation. Furthermore, this study highlights that differences between scalable knowledge-based and non-scalable hour-based activities are reflected in their corresponding incentives. For non-scalable activities, the bandwidths of allocated percentages are broader, incentives are more often informally regulated, income is more often directed to the university context rather than paid out in private, and more restricting conditions are in place. Interestingly, this study also suggests that increasing the number of restricting conditions may limit a university’s success in terms of industry income.

4.1 Rationales for implementing incentives

Valorisation professionals considered career progression to be the most effective incentive for academics to engage in knowledge valorisation activities, which is consistent with previous research highlighting the effectiveness of this incentive (e.g. Lam 2011; Renault 2006). Attributing prizes or awards and sharing equity were considered among the least effective incentives, which is in marked contrast to their high desirability. For prizes, this contrast may be explained by their contribution to institutional logics, i.e. the socially constructed patterns of practices, norms, values and rules that determine which activities are considered legitimate and desirable (Thornton and Ocasio 2008). As illustrated by quote #1 in Table 3, prizes may signal the value the institution places on these types of activities (Siegel et al. 2003), reduce the tension between research and knowledge valorisation (Olmos-Penuela et al. 2015; Sauermann and Stephan 2013) and lead to a crowding-in effect by rewarding researchers for a performance they would most likely also deliver without these incentives (Derrick and Bryant 2013; Korff et al. 2014). Finally, prizes may increase colleagues’ awareness of these activities and thereby improve their appreciation (Besley 2015; Frey and Neckermann 2008). A similar argument could explain the contrast between the perceived effectiveness and desirability of equity sharing. Equity sharing may facilitate the engagement of researchers in spin-off companies by serving as a mechanism to distribute the resulting revenues rather than as a motivating mechanism (Gazzard and Brown 2012; Grimaldi et al. 2011). In this capacity, equity sharing also contributes to the prolonged involvement of researchers in the further development of the technology or knowledge in question (Jensen and Thursby 2003).

Revenue sharing, although generally considered quite effective, was less desirable for contract research and contract education. One reason not to incentivize academics for contract research is that contract research is a benefit in itself: it allows researchers to do more research, which is in line with previous research on personal drivers (D’Este and Perkmann 2011; Derrick and Bryant 2013; Göktepe-Hulten and Mahagaonkar 2010) and illustrated by quote #2. Another reason is the possible negative effect such an incentive could have by diverting efforts towards attracting research funding or providing education in this specific direction, as illustrated in quote #3. This argument is grounded in the theory of ‘multiple tasking’, in which rewarded activities receive more attention at the expense of activities that are not part of incentive schemes, such as curriculum-based education and administrative tasks (Fehr and Schmidt 2004; Prendergast 1999).

While sharing university resources was considered moderately effective and was described as being highly desirable (see quote #4), this incentive was rarely implemented. Although especially relevant for researchers with spin-off companies (Fini et al. 2009) and for teachers with a high teaching load (Arvanitis et al. 2008), objections to its implementation were found in its zero-sum nature: university resources are limited and to reward one researcher or research group would mean to simultaneously ‘punish’ another leading to potential conflicts within the university (see quote #5).

4.2 Incentivizing scalable versus non-scalable activities

This study found an abundance of incentives that rely on revenue sharing and correspondingly reward economic outcomes rather than efforts to establish a broad societal impact of knowledge. Revenue sharing incentives reduce the principal’s risks of moral hazard and information asymmetry but increase the risk for researchers because the outcome of their efforts is mediated by factors beyond their influence, such as the efficiency of technology transfer professionals and the willingness of industry to adopt specific technologies (Belenzon and Schankerman 2009).

The average percentage attributed to researchers for successful patent exploitation in the top public universities in Europe (38%) is lower than previously found in the US (51%) and in the UK (56%) (Gazzard and Brown 2012; Lach and Schankerman 2004). More importantly, this study shows that the bandwidths of percentages attributed to researchers across universities are especially broad for non-scalable activities. Additionally, incentives for these types of activities are less often formally organized. This informal organisation gives researchers opportunities to take control of the outcomes and could serve as an incentive in itself, as explained by quote #6.
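As a purely illustrative calculation (the revenue figure is hypothetical and not taken from the study), suppose a successfully exploited patent yields a net revenue $R_{\text{net}} = 100{,}000$ currency units, of which the researcher receives a share $s$. Applying the three average shares cited above:

$$
R_{\text{researcher}} = s \cdot R_{\text{net}}:\qquad
0.38 \times 100{,}000 = 38{,}000,\quad
0.51 \times 100{,}000 = 51{,}000,\quad
0.56 \times 100{,}000 = 56{,}000
$$

At the European average, the researcher would thus receive 13,000 less than at the US average and 18,000 less than at the UK average. Real policies may apply different shares to different revenue bands, which this single-share sketch ignores.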

In line with previous studies, this study found no correlation between the percentage of patent revenues attributed to researchers and the university’s ranking on the Times Higher Education list (Friedman and Silberman 2003; Lach and Schankerman 2004). While previous studies demonstrated a correlation between the researcher-attributed percentage and licensing income (Di Gregorio and Shane 2003; Friedman and Silberman 2003; Lach and Schankerman 2004), this study found no correlation between this percentage and the broader category of industry income. This study does suggest that a bureaucratic atmosphere might be counterproductive in stimulating knowledge valorisation, as indicated by the negative correlation between industry income and the number of restrictive conditions on consultancy activities. This contrasts with some (Barjak et al. 2013; Caldera and Debande 2010) but not all (Muscio et al. 2016) previous studies of the relation between restricting conditions and indicators of economic impact. Despite this negative correlation, valorisation professionals expressed a preference for a bureaucratic atmosphere: a statistically significant majority of their suggestions for improvement referred to implementing more or better-enforced rules and regulations. In doing so, these professionals emphasized the role incentives play in managing conflict of interest situations, even though such rules may limit the very process they aim to improve.
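The negative correlation discussed above relates a count (the number of restrictive conditions on consultancy) to an income indicator. This section does not state which coefficient was used; as an assumption, the sketch below computes Spearman’s rank correlation (Pearson’s r on ranks, with tied values sharing their mean rank), a common choice for small samples and count data. The numbers are hypothetical illustrations, not the study’s survey data.

```python
from math import sqrt
from statistics import mean


def average_ranks(values):
    """Return 1-based ranks; tied values receive the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j across the run of values tied with values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def spearman(x, y):
    """Spearman's rho: the Pearson correlation of the (average) ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# HYPOTHETICAL data, not from the study: restrictive conditions on consultancy
# per university, and an industry-income indicator for the same universities.
conditions = [0, 1, 1, 2, 3, 3, 4, 5]
industry_income = [92, 85, 70, 74, 60, 55, 48, 40]

rho = spearman(conditions, industry_income)
print(f"Spearman rho = {rho:.2f}")  # → Spearman rho = -0.95
```

For a real analysis, `scipy.stats.spearmanr` returns the same coefficient together with a p-value. Whatever the coefficient, a correlation of this kind cannot establish the direction of causality.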

4.3 Implications, limitations and future research

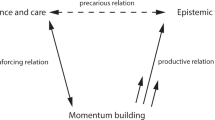

Although researchers are incentivized to engage in knowledge valorisation, they simultaneously incur opportunity costs for the time they cannot spend on the “knowledge for knowledge” activities that currently are the main determinants of their career progression. Some alignment between academic and valorisation tasks can be found in contract research and contract education, but incentives for these activities are underrepresented in top European universities. Other incentives with the potential to alleviate opportunity costs are those with a discretionary component that may (in part) reward effort rather than outcome, such as considering knowledge valorisation activities in career progression decisions, attributing awards or prizes, attributing bonuses and sharing university resources (see Fig. 6). This study sheds some light on these discretionary incentives, but to incentivize efforts in the civil society and state-governmental domains as well as in the economic domain, future research should look for more objective ways to identify performance that contributes to impact in these domains (see Fig. 6a).

Fig. 6 Flowchart to select and design incentives. Areas for further research include (a) ways to more objectively evaluate efforts and performance in non-economic domains, (b) the effect of formality on how researchers organize scalable and non-scalable activities and (c) how limiting conditions affect researchers’ perception of procedural justice. N, No; Y, Yes.

The large differences in attributed percentages between institutions and the lack of correlation with institutional quality and industry income could tempt universities to use incentive mechanisms as differentiating recruitment tools, leading to a football-like migration of excellent academics (Friedman and Silberman 2003). This might be especially effective in attracting younger researchers who have not yet obtained a tenured position, since they are more likely to move between public research organisations (Crespi et al. 2006) and have higher expectations of success and financial gain from knowledge valorisation activities than more experienced researchers (Hayter 2015). Scientists with entrepreneurial experience might also be attracted by this type of recruitment tool (Renault 2006). These effects are, however, mediated by researchers’ awareness of current incentive schemes and by the perceived effectiveness of support organisations in bringing academic knowledge to the market successfully (Arqué-Castells et al. 2016; Göktepe-Hulten and Mahagaonkar 2010). Although an early study on mobility between public research institutions did not find an influence of different percentages (Crespi et al. 2006), more recent anecdotal evidence suggests that differential incentives are used to recruit researchers to universities (Derrick and Bryant 2013). This behaviour could therefore feed a reinforcing cycle in which competitive incentive schemes attract high-quality researchers with well-established relationships with societal partners, who in turn attract further (industrial) research funding (Derrick and Bryant 2013). As such, this benchmark could itself introduce an observer bias after the fact, as increased insight into the corresponding mechanisms at other universities may prompt changes in performance, behaviour, and rules and regulations.

Besides revenue splits, the formality of incentives might be a factor to take into consideration. To elucidate this relationship further, however, more insight is needed into how researchers organize their scalable and non-scalable activities around these incentives. Previous studies suggest that star scientists may approach informal conditions differently from less experienced academic entrepreneurs (Markman et al. 2008). From the university’s perspective, one reason for a more informal approach to non-scalable activities is that it offers possibilities to limit the impact these activities have on academics’ other tasks, as also suggested by the presence of numerous limiting conditions. Consequently, the risks of information asymmetry and moral hazard might differ between scalable and non-scalable activities, and the principal and agent might benefit differently from a more formal or informal organization of each (see Fig. 6b).

Although the direction of causality cannot be determined in a survey study, the negative correlation between restrictive conditions and industry income could be an argument for decoupling incentives from control mechanisms. Academics are expected to behave entrepreneurially, not only by protecting IP and starting spin-off companies but also in their research and education activities. As such, rationales for restrictive conditions that emphasize the fear of academics neglecting their main tasks of teaching and research (Kalar and Antoncic 2015; Philpott et al. 2011) cannot fully explain the implementation of restricting conditions on contract research and contract education. Rather, controlling conditions based on this rationale are self-defeating: they restrict the very entrepreneurial actions that the incentives they accompany aim to support (Renault 2006). Detrimental effects of such conditions have been found even at the core of the academic enterprise, where academics put less effort into publishing in high-impact journals when newly introduced incentives were perceived as controlling, highlighting that controlling mechanisms can crowd out intrinsic motivation (Andersen and Pallesen 2008). We do not argue that the possible risks of conflicts of interest should be ignored, but the results do suggest that a less bureaucratic, more laissez-faire approach might actually benefit the societal impact of knowledge; perhaps academics engaging with societal stakeholders should be given some slack and more support rather than be inhibited by limiting conditions. Previous studies have shown that perceived procedural justice might be an important mediating factor in determining efforts to engage in valorisation activities and the subsequent likelihood of commercial success (Arqué-Castells et al. 2016; Muscio et al. 2016), and future studies of restricting conditions are encouraged to take this concept into account (see Fig. 6c).

This study focused on 48 of the 85 best European universities as listed by the THE Ranking. The reason for this approach is twofold. First, from a pragmatic standpoint, the THE Ranking disclosed exact ranks only for the top European universities, which allows comparisons between universities. Second, and more importantly, research excellence is described as a necessary condition for knowledge valorisation success (Debackere and Veugelers 2005), and focusing on the best European universities selected those universities most likely to meet this condition. Accordingly, the sample of the current study may serve as an example for other universities, although contextual factors for these universities might differ significantly from those of other universities.

By shedding light on the presence, desirability and perceived effectiveness of incentives that are in place, this study serves as a valuable policy tool, but it also has some limitations. In many universities, the organisation of knowledge valorisation is diffuse, with technology transfer offices, department heads, deans and communication offices sharing responsibility for the broad range of activities researchers engage in. Consequently, valorisation professionals might not have a complete perspective on all incentives that are in place across the university. However, given their close interaction with researchers and their involvement in numerous valorisation projects and in the majority of valorisation activities, they are the party within universities best placed to shed light on these issues. This approach also has its own weakness: valorisation professionals are often asked to participate in academic research, and survey fatigue might have limited response rates, potentially leading to biased results. Future studies might benefit from a more in-depth approach for which the current results can serve as a theoretical basis. One such approach would be to ask researchers themselves, rather than support staff or their managers, which incentives motivate them most.

4.4 Conclusion

Universities are advised to reconsider their reasons for implementing each incentive, since each reason calls for a different balance between the type of incentive and its design. Incentives may then not only play a role in rewarding societally engaged academics and motivating academics who are still reluctant to engage in knowledge valorisation, but also provide organizational legitimacy to universities within the changing research and innovation context (Braun and Guston 2003). In this sense, a proper design of incentives may in the long run influence the issue of responsiveness within the broader research community.

References

Abreu, M., & Grinevich, V. (2013). The nature of academic entrepreneurship in the UK: Widening the focus on entrepreneurial activities. Research Policy, 42(2), 408–422.

Andersen, L. B., & Pallesen, T. (2008). “Not just for the money?” How financial incentives affect the number of publications at Danish research institutions. International Public Management Journal, 11(1), 28–47.

Arqué-Castells, P., Cartaxo, R. M., García-Quevedo, J., & Godinho, M. M. (2016). Royalty sharing, effort and invention in universities: Evidence from Portugal and Spain. Research Policy, 45(9), 1858–1872.

Arvanitis, S., Kubli, U., & Woerter, M. (2008). University-industry knowledge and technology transfer in Switzerland: What university scientists think about co-operation with private enterprises. Research Policy, 37(10), 1865–1883. doi:10.1016/j.respol.2008.07.005.

Audretsch, D., & Aldridge, T. (2012). Transnational social capital and scientist entrepreneurship. Journal of Management and Governance, 16(3), 369–376.

Baldini, N. (2010). Do royalties really foster university patenting activity? An answer from Italy. Technovation, 30(2), 109–116. doi:10.1016/j.technovation.2009.09.007.

Baldini, N. (2011). University patenting: Patterns of faculty motivations. Technology Analysis & Strategic Management, 23(2), 103–121. doi:10.1080/09537325.2011.543329.

Baldini, N., Grimaldi, R., & Sobrero, M. (2007). To patent or not to patent? A survey of Italian inventors on motivations, incentives, and obstacles to university patenting. Scientometrics, 70(2), 333–354. doi:10.1007/s11192-007-0206-5.

Barjak, F., Es-Sadki, N., & Arundel, A. (2013). Knowledge and technology transfer performance of European universities and research institutions: Assessing the influence of institutional by-laws and practices. Paper presented at the 18th International Conference on Science and Technology Indicators.

Baruch, Y., & Holtom, B. C. (2008). Survey response rate levels and trends in organizational research. Human Relations, 61(8), 1139–1160.

Belenzon, S., & Schankerman, M. (2009). University knowledge transfer: Private ownership, incentives, and local development objectives. Journal of Law and Economics, 52(1), 111–144.

Benneworth, P., & Jongbloed, B. W. (2010). Who matters to universities? A stakeholder perspective on humanities, arts and social sciences valorisation. Higher Education, 59(5), 567–588. doi:10.1007/s10734-009-9265-2.

Besley, J. C. (2015). What do scientists think about the public and does it matter to their online engagement? Science and Public Policy, 42(2), 201–214. doi:10.1093/scipol/scu042.

Braun, D., & Guston, D. H. (2003). Principal-agent theory and research policy: An introduction. Science and Public Policy, 30(5), 302–308.

Caldera, A., & Debande, O. (2010). Performance of Spanish universities in technology transfer: An empirical analysis. Research Policy, 39(9), 1160–1173.

Crespi, G. A., Geuna, A., & Nesta, L. (2006). The mobility of university inventors in Europe. The Journal of Technology Transfer, 32(3), 195–215. doi:10.1007/s10961-006-9012-0.

Dale, R. (2010). Constructing universities’ responses to Europe’s Lisbon Agenda: The roles of the European Commission in creating the Europe of Knowledge. Centre for Learning and Life Chances in Knowledge Economies and Societies. http://www.llakes.org.

Davey, T., Baaken, T., Galan Muros, V., & Meerman, A. (2011). The state of European university-business cooperation. Part of the DG Education and Culture study on the cooperation between higher education institutions and public and private organisations in Europe.

De Jong, S. (2015). Engaging scientists: Organising valorisation in the Netherlands. Doctoral thesis, Leiden University. The Hague: Rathenau Institute.

De Jong, S., Smit, J., & Van Drooge, L. (2016). Scientists’ response to societal impact policies: A policy paradox. Science and Public Policy, 43(1), 102–114.

Debackere, K., & Veugelers, R. (2005). The role of academic technology transfer organizations in improving industry science links. Research Policy, 34(3), 321–342. doi:10.1016/j.respol.2004.12.003.

Derrick, G. E., & Bryant, C. (2013). The role of research incentives in medical research organisations. R & D Management, 43(1), 75–86. doi:10.1111/radm.12001.

D’Este, P., & Perkmann, M. (2011). Why do academics engage with industry? The entrepreneurial university and individual motivations. Journal of Technology Transfer, 36(3), 316–339. doi:10.1007/s10961-010-9153-z.

Di Gregorio, D., & Shane, S. (2003). Why do some universities generate more start-ups than others? Research Policy, 32(2), 209–227.

Eisenhardt, K. M. (1988). Agency- and institutional-theory explanations: The case of retail sales compensation. Academy of Management Journal, 31(3), 488–511.

Eysenbach, G. (2004). Improving the quality of Web surveys: The Checklist for Reporting Results of Internet E-Surveys (CHERRIES). Journal of Medical Internet Research, 6(3), e34.

Fagerland, M. W., Lydersen, S., & Laake, P. (2013). The McNemar test for binary matched-pairs data: Mid-p and asymptotic are better than exact conditional. BMC Medical Research Methodology, 13(91), 1–8. doi:10.1186/1471-2288-13-91.

Fehr, E., & Schmidt, K. M. (2004). Fairness and Incentives in a multi-task principal-agent model. The Scandinavian Journal of Economics, 106(3), 453–474.

Fini, R., Grimaldi, R., & Sobrero, M. (2009). Factors fostering academics to start up new ventures: An assessment of Italian founders’ incentives. Journal of Technology Transfer, 34(4), 380–402. doi:10.1007/s10961-008-9093-z.

Frey, B. S., & Neckermann, S. (2008). Academics appreciate awards: A new aspect of incentives in research. CESifo Working Paper Series, 2531. SSRN: https://ssrn.com/abstract=1319323.

Friedman, J., & Silberman, J. (2003). University technology transfer: Do incentives, management, and location matter? Journal of Technology Transfer, 28, 17–30.

Galán-Muros, V., van der Sijde, P., Groenewegen, P., & Baaken, T. (2015). Nurture over nature: How do European universities support their collaboration with business? The Journal of Technology Transfer. doi:10.1007/s10961-015-9451-6.

Gazzard, J., & Brown, S. A. (2012). Revenue sharing: An assessment of current policies at UK universities. Industry and Higher Education, 26(1), 21–29. doi:10.5367/ihe.2012.0077.

Geuna, A., & Muscio, A. (2009). The governance of university knowledge transfer: A critical review of the literature. Minerva, 47(1), 93–114. doi:10.1007/s11024-009-9118-2.

Göktepe-Hulten, D., & Mahagaonkar, P. (2010). Inventing and patenting activities of scientists: In the expectation of money or reputation? The Journal of Technology Transfer, 35(4), 401–423.

González-Pernía, J. L., Kuechle, G., & Peña-Legazkue, I. (2013). An assessment of the determinants of university technology transfer. Economic Development Quarterly, 27(1), 6–17.

Gray, D. E. (2013). Doing research in the real world (3rd ed.). London: Sage.

Grimaldi, R., Kenney, M., Siegel, D. S., & Wright, M. (2011). 30 years after Bayh–Dole: Reassessing academic entrepreneurship. Research Policy, 40(8), 1045–1057. doi:10.1016/j.respol.2011.04.005.

Hakala, J., & Ylijoki, O.-H. (2001). Research for whom? Research orientations in three academic cultures. Organization, 8(2), 373–380.

Hayter, C. S. (2015). Public or private entrepreneurship? Revisiting motivations and definitions of success among academic entrepreneurs. Journal of Technology Transfer, 40(6), 1003–1015. doi:10.1007/s10961-015-9426-7.

Hladchenko, M. (2016). Knowledge valorisation: A route of knowledge that ends in surplus value (an example of the Netherlands). International Journal of Educational Management, 30(5), 668–678. doi:10.1108/ijem-12-2014-0167.

Jensen, R., & Thursby, M. (2003). Proofs and prototypes for sale: The licensing of university inventions. International Library of Critical Writings in Economics, 165, 639–660.

Kalar, B., & Antoncic, B. (2015). The entrepreneurial university, academic activities and technology and knowledge transfer in four European countries. Technovation, 36, 1–11.

Korff, N., van der Sijde, P., Groenewegen, P., & Davey, T. (2014). Supporting university–industry linkages: A case study of the relationship between the organizational and individual levels. Industry and Higher Education, 28(4), 281–300.

Lach, S., & Schankerman, M. (2004). Royalty sharing and technology licensing in universities. Journal of the European Economic Association, 2(2–3), 252–264.

Lach, S., & Schankerman, M. (2008). Incentives and invention in universities. The Rand Journal of Economics, 39(2), 403–433.

Lam, A. (2011). What motivates academic scientists to engage in research commercialization: ‘Gold’, ‘ribbon’ or ‘puzzle’? Research Policy, 40(10), 1354–1368. doi:10.1016/j.respol.2011.09.002.

Link, A. N., & Siegel, D. S. (2005). Generating science-based growth: An econometric analysis of the impact of organizational incentives on university–industry technology transfer. The European Journal of Finance, 11(3), 169–181. doi:10.1080/1351847042000254211.

Macho-Stadler, I., & Pérez-Castrillo, D. (2010). Incentives in university technology transfers. International Journal of Industrial Organization, 28(4), 362–367.

Markman, G. D., Gianiodis, P. T., Phan, P. H., & Balkin, D. B. (2004). Entrepreneurship from the Ivory tower: Do incentive systems matter? The Journal of Technology Transfer, 29(3–4), 353–364.

Markman, G. D., Gianiodis, P. T., Phan, P. H., & Balkin, D. B. (2005). Innovation speed: Transferring university technology to market. Research Policy, 34(7), 1058–1075. doi:10.1016/j.respol.2005.05.007.

Markman, G. D., Siegel, D. S., & Wright, M. (2008). Research and technology commercialization. Journal of Management Studies, 45(8), 1401–1423. doi:10.1111/j.1467-6486.2008.00803.x.

Mostert, S. P., Ellenbroek, S. P., Meijer, I., van Ark, G., & Klasen, E. C. (2010). Societal output and use of research performed by health research groups. Health Research Policy and Systems, 8, 30. doi:10.1186/1478-4505-8-30.

Muscio, A., Quaglione, D., & Ramaciotti, L. (2016). The effects of university rules on spinoff creation: The case of academia in Italy. Research Policy, 45(7), 1386–1396.

Olmos-Penuela, J., Benneworth, P., & Castro-Martinez, E. (2015). What stimulates researchers to make their research usable? Towards an ‘Openness’ approach. Minerva, 53(4), 381–410. doi:10.1007/s11024-015-9283-4.

Philpott, K., Dooley, L., O’Reilly, C., & Lupton, G. (2011). The entrepreneurial university: Examining the underlying academic tensions. Technovation, 31(4), 161–170. doi:10.1016/j.technovation.2010.12.003.

Pinto, H., & Fernández-Esquinas, M. (2016). What do stakeholders think about knowledge transfer offices? The perspective of firms and research groups in a regional innovation system. Industry and Innovation. doi:10.1080/13662716.2016.1270820.

Prendergast, C. (1999). The provision of incentives in firms. Journal of Economic Literature, 37(1), 7–63.

Renault, C. S. (2006). Academic capitalism and university incentives for faculty entrepreneurship. The Journal of Technology Transfer, 31(2), 227–239.

Sauermann, H., & Stephan, P. (2013). Conflicting logics? A Multidimensional view of industrial and academic science. Organization Science, 24(3), 889–909. doi:10.1287/orsc.1120.0769.

Sharpe, D. (2015). Your chi square test is statistically significant: Now what? Practical Assessment, Research & Evaluation, 20(8), 2.

Siegel, D. S., Waldman, D. A., Atwater, L. E., & Link, A. N. (2003). Commercial knowledge transfers from universities to firms: Improving the effectiveness of university–industry collaboration. The Journal of High Technology Management Research, 14(1), 111–133. doi:10.1016/s1047-8310(03)00007-5.

Swamidass, P. M., & Vulasa, V. (2008). Why university inventions rarely produce income? Bottlenecks in university technology transfer. The Journal of Technology Transfer, 34(4), 343–363. doi:10.1007/s10961-008-9097-8.

Thornton, P. H., & Ocasio, W. (2008). Institutional logics. The Sage Handbook of Organizational Institutionalism, 840, 99–128.

Väänänen, L. (2010). Human capital and incentives in the creation of inventions: A study of Finnish inventors. Helsinki: School of Economics, Aalto University.

van Ark, G., & Klasen, E. C. (2007). Maatschappelijke impact van gezondheidsonderzoek [Societal impact of health research]. TSG, 85(5), 259–261.

Van den Nieuwboer, M., Van de Burgwal, L. H. M., & Claassen, E. (2015). A quantitative key-opinion-leader analysis of innovation barriers in probiotic research and development: Valorisation and improving the tech transfer cycle. PharmaNutrition. doi:10.1016/j.phanu.2015.09.003.

Van Vught, F. (2009). The EU innovation agenda: Challenges for European higher education and research. Higher Education Management and Policy, 21(2), 1–22.

Vilarinho, P. M. (2015). COTEC’s technology commercialization accelerator (Act®). In Competitive Strategies for academic entrepreneurship: Commercialization of research-based products (p. 188).

Walter, T., Ihl, C., Mauer, R., & Brettel, M. (2013). Grace, gold, or glory? Exploring incentives for invention disclosure in the university context. The Journal of Technology Transfer. doi:10.1007/s10961-013-9303-1.

Weenen, T. C., Pronker, E. S., Commandeur, H. R., & Claassen, E. (2013). Barriers to innovation in the medical nutrition industry: A quantitative key opinion leader analysis. PharmaNutrition, 1(3), 79–85. doi:10.1016/j.phanu.2013.04.001.


Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest and are not part of any technology transfer office.

Electronic supplementary material

Below is the link to the electronic supplementary material.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

van de Burgwal, L.H.M., Dias, A. & Claassen, E. Incentives for knowledge valorisation: a European benchmark. J Technol Transf 44, 1–20 (2019). https://doi.org/10.1007/s10961-017-9594-8


DOI: https://doi.org/10.1007/s10961-017-9594-8