Abstract

A notorious problem in mathematics and physics is to create a solvable model for random sequential adsorption of non-overlapping congruent spheres in the d-dimensional Euclidean space with \(d\ge 2\). Spheres arrive sequentially at uniformly chosen locations in space and are accepted only when there is no overlap with previously deposited spheres. Due to spatial correlations, characterizing the fraction of accepted spheres remains largely intractable. We study this fraction by taking a novel approach that compares random sequential adsorption in Euclidean space to the nearest-neighbor blocking on a sequence of clustered random graphs. This random network model can be thought of as a corrected mean-field model for the interaction graph between the attempted spheres. Using functional limit theorems, we characterize the fraction of accepted spheres and its fluctuations.

Similar content being viewed by others

1 Introduction

Random sequential adsorption of congruent spheres in the d-dimensional Euclidean space has been a topic of great interest across the sciences, serving as basic models in condensed matter and quantum physics [21, 28, 35, 37], nanotechnology [11, 14], information theory and optimization problems [20, 24, 40]. Random sequential adsorption also arises naturally in experimental settings, ranging from the deposition of nano-scale particles on polymer surfaces, adsorption of proteins on solid surfaces to the creation of logic gates for quantum computing, and many more applications in domains as diverse biology, ecology and sociology, see [10, 45, 46] for extensive surveys. We refer with random sequential adsorption (\(\textsc {rsa}\)) to the dynamic process defined as follows: At each time epoch, a point appears at a uniformly chosen location in space, and an attempt is made to place a sphere of radius r with the chosen point as its center. The new sphere must either fit in the empty area without overlap with the spheres deposited earlier, or its deposition attempt is discarded. After n deposition attempts, the quantity of interest is the proportionof accepted spheres, or equivalently, the volume covered by the accepted spheres. Figure 1a illustrates an instance of this rsaprocess in 2D.

a Random sequential adsorption in 2D with density \(c=15\). Dots indicate the centers of accepted (red) and discarded (blue) spheres. b \(\textsc {rgg}(15,2)\) graph with 1000 vertices: Two vertices share an edge if they are less than 2r distance apart, where r is such that a vertex has on average \(c=15\) neighbors. Notice the many local clusters. a rsain 2D b rggnetwork (Color figure online)

Equivalently, we may think of the interaction network of the n chosen centers of spheres by drawing an edge between two points if they are at most 2r distance apart. This is because a deposition attempt can block another deposition attempt if and only if the centers are at most 2r distance apart. The obtained random graph is known as the random geometric graph (\(\textsc {rgg}\)) [32]. The fraction of accepted spheres can be obtained via the following greedy algorithm to find independent sets of \(\textsc {rgg}\): Given a graph G, initially, all the vertices are declared inactive. Sequentially activate uniformly chosen inactive vertices of the graph and block the neighborhood until all the inactive vertices are exhausted. We refer to the above greedy algorithm as \(\textsc {rsa}\) on the graph G. If G has the same distribution as \(\textsc {rgg}\) on n vertices, then the final set of active vertices has the same distribution as the number of accepted spheres in the continuum after n deposition attempts. Thus, we one can equivalently study \(\textsc {rsa}\) on \(\textsc {rgg}\) to obtain the fraction of accepted spheres when \(\textsc {rsa}\) is applied in continuum.

The precise setting in this paper considers \(\textsc {rsa}\) in a finite-volume box \([0,1]^d\) with periodic boundary, filled with ‘small’ spheres of radius r and volume \(V_d(r)\) [34, 36, 37]. Since the volume of \([0,1]^d\) is 1, the probability that two randomly chosen vertices share an edge in the interaction network is equal to the volume of a sphere of radius 2r given by \(V_d(2r)=\pi ^{d/2} (2r)^d/\Gamma (1+d/2) \). Thus the average vertex degree in the \(\textsc {rgg}\) is \(c=n V_d(2r)\), and since c is also the average number of overlaps per sphere, with all other attempted spheres, we interchangeably use the terms density and average degree for c. We operate in the sparse regime, where both \(r\rightarrow 0\) and \(n\rightarrow \infty \), so that \(c\ge 0\) is an arbitrary but fixed constant. In fact, maintaining a constant density c in the large-network limit is necessary to observe a non-degenerate limit of the fraction of accepted spheres. In other words, as we will see, the jamming fraction converges to 1 or 0 when c converges to 0 or infinity. Thus, in order for c to remain fixed as \(n\rightarrow \infty \), the radius should scale as a function of n according to

Notice that it is equivalent to consider the deposition of spheres with fixed radii into a box of growing volume. We parameterize the \(\textsc {rgg}\) model by the density c and the dimension d, and henceforth write this as \(\textsc {rgg}(c,d)\). A typical instance of \(\textsc {rgg}(5,2)\) with \(n = 1000\) vertices is shown in Fig. 1b. Let \(J_n(c,d)\) be the fraction of active vertices in the \(\textsc {rgg}(c,d)\) model on n vertices.

In the classical setting, one keeps adding spheres until there is no place left to add another sphere and studies the fraction of area \(N_n(d)\) covered by the accepted spheres. Detailed results about \(N_n(d)\) involving law of large numbers and Gaussian fluctuations have been obtained in [33, 38]. The settings under consideration in this paper, however, are known as fixed or finite input packing in the literature. It was shown in [34] that there exist constants J(c, d) and V(c, d) such that \(J_n(c,d) \xrightarrow {\scriptscriptstyle \mathbb {P}} J(c,d)\) and \(\sqrt{n}(J_n(c,d) - J(c,d))\xrightarrow {\scriptscriptstyle d} {\mathrm {Normal}}(0,V(c,d))\). More detailed results about the point process of locations of accepted spheres and the number of accepted spheres have been obtained in [4,5,6, 17, 39]. This includes both moderate and large deviation results for \(N_n(d)\). To the best of our knowledge, finding an explicit quantitative characterization of J(c, d) for dimensions \(\ge 2\) remains an open problem, although numerical estimates have been obtained through extensive simulations [3, 13, 19, 41, 44, 47, 51].

In this paper, we do not aim to analyze the rsaprocess on rgg’s directly. Rather, we introduce an approximate approach for studying J(c, d) and V(c, d) by considering \(\textsc {rsa}\) on a clustered random graph model, designed to match the local spatial properties of the \(\textsc {rgg}\) model in terms of average degree and clustering. Exact analysis of this random graph model leads to expressions for the limiting jamming fraction and its fluctuations, in turn providing approximations for J(c, d) and V(c, d). Using simulations we show that these approximations are accurate. havior of \(\textsc {rsa}\) on \(\textsc {rgg}\) and our proposed random graph model via. extensive simulation.

The paper is structured as follows: Sect. 2 introduces the clustered random graph and the correspondence with the random geometric graph. Section 3 presents the main results for the jamming fraction in the mean-field regime. We also show through extensive simulations that the mean-field approximations are accurate for all densities and dimensions. Sections 4, 5, and 6 contain all the proofs, and we conclude in Sect. 7 with a discussion.

2 Clustered Random Graphs

Random graphs serve to model large networked systems, but are typically unfit for capturing local clustering in the form of relatively many short cycles. This can be resolved by locally adding so-called households or small dense graphs [2, 12, 23, 29, 42, 43, 48, 49]. Vertices in a household have a much denser connectivity to all (or many) other household members, which enforces local clustering. We now introduce a specific household model, called clustered random graph model (\({\textsc {crg}}\)), designed for the purpose of analyzing the \(\textsc {rsa}\) problem. An arbitrary vertex in the crgmodel has local or short-distance connections with nearby vertices, and global or long-distance connections with the other vertices. When pairing vertices, the local and global connections are formed according to different statistical rules. The degree distribution of a typical vertex is taken to be Poisson(c) (approximately) in both the \(\textsc {rgg}\) and \({\textsc {crg}}\) model. Thus a typical vertex, when activated, blocks approximately Poisson(c) other vertices. In the \({\textsc {crg}}(c,\alpha )\) model however, the total mass of connectivity measured in the density parameter c, is split into \(\alpha c\) to account for direct local blocking and \((1-\alpha )c\) to incorporate the propagation of spatial correlations over longer distances. The \({\textsc {crg}}(c,\alpha )\) model with n vertices is then defined as follows (see Fig. 2):

-

Partition the n vertices into random households of size \(1+{\mathrm {Poisson}}(\alpha c)\). This can be done by sequentially selecting \(1+{\mathrm {Poisson}}(\alpha c)\) vertices uniformly at random and declaring them as a household, and repeat this procedure until at some point the next \(1+{\mathrm {Poisson}}(\alpha c)\) random variable is at most the number of remaining vertices. All the remaining vertices are then declared a household too, and the household formation process is completed.

-

Now that all vertices are declared members of some household, the random graph is constructed according to a local and a global rule. The local rule says that all vertices in the same household get connected by an edge, leading to complete graphs of size 1+Poisson(\(\alpha c\)). The global rule adds a connection between any two vertices belonging to two different households with probability \((1-\alpha )c/n\).

This creates a class of random networks with average degree c and tunable level of clustering via the free parameter \(\alpha \). With the goal to design a solvable model for the \(\textsc {rsa}\) process, the \({\textsc {crg}}(c,\alpha )\) model has nc / 2 connections to build a random structure that mimics the local spatial structure of the \(\textsc {rgg}(c,d)\) model on n vertices.

Seen as the topology underlying the \(\textsc {rsa}\) problem, the \({\textsc {crg}}(c,\alpha )\) model incorporates local clusters of overlapping spheres, which occur naturally in random geometric graphs; see Fig. 1b. We can now also consider rsaon the \({\textsc {crg}}(c,\alpha )\) model, by using the greedy algorithm that constructs an independent set on the graph by sequentially selecting vertices uniformly at random, and placing them in the independent set unless they are adjacent to some vertex already chosen. The jamming fraction \(J^\star _n(c,\alpha )\) is then the size of the greedy independent set divided by the network size n. From a high-level perspective, we will solve the rsaproblem on the \({\textsc {crg}}(c,\alpha )\) model, and translate this solution into an equivalent result for \(\textsc {rsa}\) on the \(\textsc {rgg}(c,d)\).

Our ansatz is that for large enough n, a unique relation can be established between dimension d in \(\textsc {rgg}\) and the parameter \(\alpha = \alpha _d\) in \({\textsc {crg}}\), so that the jamming fractions are comparable, i.e., \(J_n(c, d)\approx J^\star _n(c, \alpha _d),\) and virtually indistinguishable in the large network limit. In order to do so, we map the \({\textsc {crg}}(c,\alpha )\) model onto the \(\textsc {rgg}(c,d)\) model by imposing two natural conditions. The first condition matches the average degrees in both topologies, i.e., c is chosen to be equal to \(nV_d(2r)\). The second condition tunes the local clustering.

Let us first describe the clustering in the \(\textsc {rgg}\) model. Consider two points chosen uniformly at random in a d-dimensional hypersphere of radius 2r. Then what is the probability that these two points are themselves at most 2r distance apart? From the \(\textsc {rgg}\) perspective, this corresponds to the probability that, conditional on two vertices u and v being neighbors, a uniformly chosen neighbor w of u is also a neighbor of v, which is known as the local clustering coefficient [30]. In the \({\textsc {crg}}(c,\alpha )\) model, on the other hand, the relevant measure of clustering is \(\alpha \), the probability that a randomly chosen neighbor is a neighbor of one of its household members. We then choose the unique \(\alpha \)-value that equates to the clustering coefficient of \(\textsc {rgg}\). Denote this unique value by \(\alpha _d\), to express its dependence on the dimension d. In Sect. 6, we show that

with \(I_z(a,b)\) the normalized incomplete beta integral. Table 1 shows the numerical values of \(\alpha _d\) for dimensions 1 to 5. With the uniquely characterized \(\alpha _d\) in (2.1), the \({\textsc {crg}}(c,\alpha _d)\) model can now serve as a generator of random topologies for guiding the rsaprocess. By extending the mean-field techniques recently developed for analyzing rsaon random graph models [7, 9, 16, 36], it turns out that \(\textsc {rsa}\) on the \({\textsc {crg}}(c,\alpha _d)\) model is analytically solvable, even at later times when the filled space becomes more dense (large c).

The main goal of these works was to find greedy independent sets (or colorings) of large random networks. All these results, however, were obtained for non-geometric random graphs, typically used as first approximations for sparse interaction networks in the absence of any known geometry.

3 Main Results

3.1 Limiting Jamming Fraction

For the \({\textsc {crg}}(c,\alpha )\) model on n vertices, recall that \(J^\star _n(c,\alpha )\) denotes the fraction of active vertices at the end of the \(\textsc {rsa}\) process. We then have the following result, which characterize the limiting fraction:

Theorem 3.1

(Limiting jamming fraction) For any \(c>0\) and \(\alpha \in [0,1]\), as \(n\rightarrow \infty \), \(J^\star _n(c,\alpha )\) converges in probability to \(J^\star (c,\alpha )\), where \(J^\star (c,\alpha )\) is the smallest nonnegative root of the deterministic function x(t) described by the integral equation

The ODE (3.1) can be understood intuitively in terms of the algorithmic description in Sect. 4.1 that sequentially explores the graph while activating the allowed vertices. Rescale time by n, so that after rescaling the algorithm has to end before time \(t=1\) (because the network size is n). Now think of x(t) as the fraction of neutral vertices at time t. Then clearly \(x(0) = 1\), and the drift \(-t\) says that one vertex activates per time unit. Upon activation, a vertex on average blocks its \(\alpha c\) household members and \((1-\alpha )c\) other vertices outside its household. At time t, the fraction of vertices that are not members of any discovered households equals on average \((1 - (1+\alpha c)t)\) and all vertices which are not part of any discovered households, are potential household members of the newly active vertex (irrespective of whether it is blocked or not). Since household members are uniformly selected at random, only a fraction \(x(t) / (1 - (1+\alpha c)t)\) of the new \(\alpha c\) household members will belong to the set of neutral vertices. Moreover, since all x(t)n vertices are being blocked by the newly active vertex with probability \((1-\alpha )c/n\), on average \((1-\alpha )c x(t)\) neutral vertices will be blocked due to distant connections. Notice that the graph will be maximally packed when x(t) becomes zero, i.e., there are no neutral vertices that can become active. This explains why \(J^\star (c,\alpha )\) should be the time t when \(x(t)=0\), i.e., smallest root of (3.1).

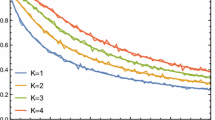

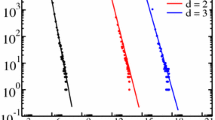

Upon substituting \(\alpha =\alpha _d\), \(J^\star (c,\alpha _d)=\lim _{n\rightarrow \infty }J^\star _n(c,\alpha _d)\) is completely characterized by (3.1) and serves an approximation for the intractable counterpart J(c, d), the limiting jammed fraction for the \(\textsc {rgg}(c,d)\) model. The choice of \(\alpha _d\), as discussed earlier, is given by (2.1) and shown in Table 1. Figure 3 validates the mean-field limit for the crgmodel, and shows the theoretical values \(J^\star (c,\alpha _2)\) from Theorem 3.1, along with the simulated values of \(J_n(c,2)\) on the \(\textsc {rgg}(c,2)\) model for values of c ranging from 0 to 30. Figure 4 shows further comparisons between \(J^\star (c,\alpha _d)\) and \(J_n(c,d)\) for dimensions \(d=3,4,5\), and densities \(0\le c\le 30\). All simulations use \(n=1000\) vertices. The remarkable agreement of the \(J^\star (c,\alpha _d)\)-curves with the simulated results across all dimensions shows that the integral equation (3.1) accurately describes the mean-field large-network behavior of the \(\textsc {rsa}\) process, not only for the crgmodel, but also for the \(\textsc {rgg}\) model. The following result is a direct consequence of Theorem 3.1, and gives a simple law to describe the asymptotic fraction \(J^\star (c,\alpha _d)\) in the large density (\(c\rightarrow \infty \)) regime.

Corollary 3.1

As \(c\rightarrow \infty \), \( J^\star (c,\alpha _d) \sim (1+\alpha _dc)^{-1}. \)

Hence, for large enough c, \(J^\star (c,\alpha _d)\approx (1+\alpha _d c)^{-1}\) serves as an approximation for all dimensions. Due to the accurate prediction provided by the crgmodel, the total scaled volume \(c{J}(c,d)/2^d\) covered by the deposited spheres in dimension d can be well approximated. Indeed, for large c, Corollary 3.1 yields \(J^\star (c,\alpha _d)\sim 1/(\alpha _dc)\), and in any dimension d, our model leads to a precise characterization of the covered volume given by

Notice that \(\alpha _d\rightarrow 0\) as \(d\rightarrow \infty \). Thus, the interaction network described by the \({\textsc {crg}}(c,\alpha _d)\) model becomes almost like the (pure) mean-field Erdős-Rényi random graph model, which supports the widely believed conjecture that in high dimensions the interaction network associated with the random geometric graph loses its local clustering property [15].

3.2 Fluctuations of the Jamming Fraction

The next theorem characterizes the fluctuations of \(J_n^\star (c,\alpha )\) around its mean:

Theorem 3.2

(CLT for jamming fraction) As \(n\rightarrow \infty \),

where Z has a normal distribution with mean zero and variance \(V^\star (c,\alpha )\). Here \(J^\star (c,\alpha )\) is given by Theorem 3.1, and \(V^\star (c,\alpha )=\sigma _{xx}(J^\star (c,\alpha ))\) with \(\sigma _{xx}(t)\) being the unique solution of the system of differential equations, for \(0\le t<1/\mu \),

with

a Fitted normal curve for 2000 repetitions of the \({\textsc {crg}}(20,\alpha _2)\) model with 1000 vertices. The solid curve represents the normal density with properly scaled theoretical variance \(V^\star (c,\alpha _2)\), centered around the sample mean. b Fitted normal curves for the \({\textsc {crg}}(c,\alpha _2)\) model for increasing c values 10, 20, and 30. As c increases, the curve become more sub-Poissonian. a Fitted normal curve b Effect of density on variance

Figure 5a confirms that the asymptotic analytical variance given in (3.3) and (3.4) is a sharp approximation for the crgmodel with only 2000 vertices. Table 2 shows numerical values of \(V^\star (c,\alpha _d)\) and compares the analytically obtained values of \(J^\star (c,\alpha _d)\) and \(V^\star (c,\alpha _d)\), and simulated mean and variance for the random geometric graph ensemble. The agreement again confirms the appropriateness of the \({\textsc {crg}}(c,\alpha _d)\) model for modeling the continuum \(\textsc {rsa}\). Furthermore, \(V^\star (c,\alpha _d)\) serves as an approximation for the value of V(c, d), the asymptotic variance of J(c, d) (suitably rescaled). Figure 5b shows the density function of the random variable based on the Gaussian approximation in Theorem 3.2. We observe that both the mean and the fluctuations around the mean decrease with c. Indeed, the variance-to-mean ratio has been typically observed to be smaller than one for rsain the continuum, and it is generally believed that the jamming fractions are typically of sub-Poissonian nature with fluctuations that are not as large as for a Poisson distribution; see for instance the Mandel Q parameter in quantum physics [36]. So, while a closed-form expression remains out of reach (as for the Mandel Q parameter [36]), our solvable model gives a way to describe approximately the variance-to-mean ratio as \(V^\star (c,\alpha _d)/J^\star (c,\alpha _d)\).

4 Proof of Theorem 3.1

In this section we analyze several asymptotic properties of rsaon the \({\textsc {crg}}(c,\alpha )\) model. In particular, we will prove Theorem 3.1. We first introduce an algorithm that sequentially activates the vertices while obeying the hard-core exclusion constraint, and then analyze the exploration algorithm (see [8, 9, 16] for similar analyses in various other contexts). The idea is to keep track of the number of vertices that are not neighbors of already actives vertices (termed unexplored vertices), so that when this number becomes zero, no vertex can be activated further. The number of unexplored vertices can then be decomposed into a drift part which converges to a deterministic function and a fluctuation or martingale part which becomes asymptotically negligible in the mean-filed case (Theorem 3.1) but gives rise to the a system of SDEs with variance (3.3). The proof crucially relies on the Functional Laws of Large Numbers (FLLN) and the Functional Central Limit Theorem (FCLT). The key challenge here is that the process that keeps track of the number of unexplored vertices while the exploration algorithm is running does not yield a Markov process, so we have to introduce another process to make the system Markovian and analyze this two-dimensional system.

For each vertex, the neighboring vertices inside and outside its own household will be referred to as ‘household neighbors’ and ‘distant neighbors’, respectively. If H denotes the size of the households, then \(H\sim 1+{\mathrm {Poisson}}(\alpha c)\). Therefore, \(\mathbb {E}\left( H\right) =1+\alpha c\), and \({\mathrm {Var}}(H)=\alpha c\). Furthermore, any two vertices belonging to two different households are connected by an edge with probability \(p_n=(1-\alpha )c/n\), so the number of distant neighbors is a Bin\((n-H-1,p_n)\) random variable, Poisson\(((1-\alpha )c)\) in the large n limit. As mentioned earlier, the total number of neighbors, is then asymptotically given by a Poisson(c) random variable. In this section we fix \(c>0\) and \(\alpha \in [0,1]\), and simply write \(J^\star _n\) and \(J^\star \) for \(J^\star _n(c,\alpha )\) and \(J^\star (c,\alpha )\) respectively.

Notation We will use boldfaced letters to denote stochastic processes and vectors. A sequence of random variables \(\{X_n\}_{n\ge 1}\) is said to be \(O_{\scriptscriptstyle \mathbb {P}}(f(n))\), or \(o_{\scriptscriptstyle \mathbb {P}}(f(n))\), for some function \(f:\mathbb {R}\rightarrow \mathbb {R}_+\), if the sequence of scaled random variables \(\{X_n/f(n)\}_{n\ge 1}\) is tight, or converges to zero in probability, respectively. We denote by \(D_E[0,\infty )\) the set of all càdlàg (right continuous left limit exists) functions from \([0,\infty )\) to a complete, separable metric space E, endowed with the Skorohod \(J_1\) topology, and by ‘\( \xrightarrow {\scriptscriptstyle d}\)’ and ‘\(\xrightarrow {\scriptscriptstyle \mathbb {P}}\)’, convergence in distribution and in probability, respectively. In particular, if the sample paths of a stochastic process \(\mathbf {X}\) are continuous, we write \(\mathbf {X}_n=\{X_n(t)\}_{t\ge 0}\xrightarrow {\scriptscriptstyle d} \mathbf {X}=\{X(t)\}_{t\ge 0}\), if for any \(T\ge 0\),

4.1 The Exploration Algorithm

Instead of fixing a particular realization of the random graph and then studying rsaon that given graph, we introduce an algorithm which sequentially activates the vertices one-by-one, explores the neighborhood of the activated vertices, and simultaneously builds the random graph topology on the activated and explored vertices. The joint distribution of the random graph and active vertices obtained this way is same as those obtained by first fixing the random graph and then studying rsa. The idea of exploring in the above fashion simplifies the whole analysis, since the evolution of the system can be described recursively in terms of the previous states, as described below in detail.

Observe that during the process of sequential activation, until the jamming state is reached, the vertices can be in either of three states: active, blocked, and unexplored (i.e. vertices with future potential activation). Furthermore, there can be two types of blocked vertices: (i) blocked due to activation of some household neighbor, or (ii) none of the household neighbors is active, but there is an active distant neighbor. Therefore, at each time \(t\ge 0\), categorize the vertices into four sets:

-

A(t): set of all vertices active.

-

U(t): set of all vertices that are not active and that have not been blocked by any vertex in A(t).

-

BH(t): set of all vertices that belong to a household of some vertex in A(t).

-

BO(t): set of all vertices that do not belong to a household yet, but are blocked due to connections with some vertex in A(t) as a distant neighbor.

Note that \(\mathrm {BH}(t)\cup \mathrm {BO}(t)\) constitutes the set of all blocked vertices at time t, and \(\mathrm {BH}(t)\cap \mathrm {BO}(t)=\emptyset \). Initially, all vertices are unexplored, so that U\((0)= V(G)\), the set of all n vertices. At time step t, one vertex v is selected from U\((t-1)\) uniformly at random and is transferred to A(t), i.e., one unexplored vertex becomes active.

We now explore the neighbors of v, which can be of two types: the household neighbors, and the distant neighbors. Further observe that v can have its household neighbors only from the set \(\mathrm {U}(t-1)\cup \mathrm {BO}(t-1)\setminus \{v\}\), since each vertex in \(\mathrm {BH}(t-1)\) already belongs to some household. Define

i.e., draw a Poisson\((\alpha c)\) random variable independently of any other process, and if it is smaller than \(|\mathrm {U}(t-1)\cup \mathrm {BO}(t-1)\setminus \{v\}|\), then take it to be the value of \(H-1\), and otherwise set \(H(t)=|\mathrm {U}(t-1)\cup \mathrm {BO}(t-1)\setminus \{v\}|\). Select H(t) vertices \(\{u_1,u_2,\ldots ,u_{H}\}\) at random from all vertices in \(U(t-1)\cup \mathrm {BO}(t-1)\setminus \{v\}\). These H(t) vertices together form the household containing v, and are moved to \(\mathrm {BH}(t)\), irrespective of the set they are selected from. To explore the distant neighbors, select one by one, all the vertices in \(\mathrm {U}(t-1)\cup \mathrm {BO}(t-1)\cup \mathrm {BH}(t-1)\setminus \{v,u_1,\ldots ,u_{H}\}\), and for every such selected vertex \(\bar{u}\), put an edge between \(\bar{u}\) and v with probability \(p_n\). Denote the newly created distant neighbors that belonged to \(\mathrm {U}(t-1)\) by \(\{\bar{u}_1,\ldots ,\bar{u}_d\}\), and move these vertices to \(\mathrm {BO}(t)\). In summary, the exploration algorithm yields the following recursion relations:

The algorithm terminates when there is no vertex left in the set U(t) (implying that all vertices are either active or blocked), and outputs the cardinality of A(t) as the number of active vertices in the jammed state.

4.2 State Description and Martingale Decomposition

Denote for \(t\ge 0\),

Observe that \(\{(X_n(t),Y_n(t))\}_{t\ge 0}\) is a Markov chain. At each time step, one new vertex becomes active, so that \(|\mathrm {A}(t)|=t\), and the total number of vertices in the jammed state is given by the time step when \(X_n(t)\) hits zero, i.e., the time step when the exploration algorithm terminates. Let us now introduce the shorthand notation \(\mu = \mathbb {E}[H] = 1+\alpha c\), \(\sigma ^2 = {\mathrm {Var}}\left( H\right) = \alpha c\) and \(\lambda = (1-\alpha ) c\).

Dynamics of \(X_n\) First we make the following observations:

-

\(X_n(t)\) decreases by one, when a new vertex v becomes active.

-

The household neighbors of v are selected from \(Y_n(t-1)\) vertices, and \(X_n(t)\) decreases by an amount of the number of such vertices which are in \(\mathrm {U}(t-1)\).

-

\(X_n(t)\) decreases by the number of distant neighbors of the newly active vertex that belong to \(\mathrm {U}(t-1)\) (since they are transferred to \(\mathrm {BO}(t)\)).

Thus,

with

where conditionally on \((X_n(t),Y_n(t))\),

i.e., \(\eta _1(t+1)\) has a Hypergeometric distribution with favorable outcomes \(X_n(t)\), population size \(Y_n(t)\), and sample size H(t). Further, conditionally on \((X_n(t),Y_n(t),\eta _1(t+1))\),

Therefore, the drift function of the \(\mathbf {X}_n\) process satisfies

where, in the last step, we have used the fact that \(X_n(t)\le Y_n(t)\).

Dynamics of \(Y_n\) The value of \(Y_n\) does not change due to the creation of distant neighbors. At time t, it can only decrease due to an activation of a vertex v (since it is moved to \(\mathrm {A}(t)\)), and the formation of a household, since all the vertices that make the household of v, were in \(\mathrm {U}(t-1)\cup \mathrm {BO}(t-1)\), and are moved to \(\mathrm {BH}(t)\). Thus, at each time step, \(Y_n(t)\) decreases on average by an amount \(\mu =1+\alpha c\), the expected household size, except at the final step when the residual number of vertices can be smaller than the household size. But this will not affect our asymptotic results in any way, and we will ignore it. Hence,

where

Martingale decomposition Using the Doob-Meyer decomposition [22, Theorem 4.10] of \(\varvec{X}_n\), (4.6) yields the following martingale decomposition

where \(\varvec{M}_n^{\scriptscriptstyle X}=\{M_n^{\scriptscriptstyle X}(t)\}_{t\ge 1}\) is a square-integrable martingale with respect to the usual filtration generated by the exploration algorithm. Let us now define the scaled processes

Also define

Thus, we can write

Similar arguments yield

where \(\varvec{M}_n^{\scriptscriptstyle Y} =\{M_n^{\scriptscriptstyle Y}(t)\}_{t\ge 1}\) is a square-integrable martingale with respect to a suitable filtration. We write \(\varvec{x}_n\) and \(\varvec{y}_n\) to denote the processes \((x_n(t))_{t\ge 0}\) an \((y_n(t))_{t\ge 0}\) respectively.

4.3 Quadratic Variation and Covariation

To investigate the scaling behavior of the martingales, we will now compute the respective quadratic variation and covariation terms. For convenience in notation, denote by \(\mathbb {P}_t, \mathbb {E}_t\), \({\mathrm {Var}}_t\), \({\mathrm {Cov}}_t\), the conditional probability, expectation, variance and covariance, respectively, conditioned on \((X_n(t), Y_n(t))\). Notice that, for the martingales \(\varvec{M}_n^{\scriptscriptstyle X}\) and \(\varvec{M}_n^{\scriptscriptstyle Y}\), the quadratic variation and covariation terms are given by

Thus, the quantities of interest are \({\mathrm {Var}}_t(\xi _n(t+1))\), \({\mathrm {Var}}_t(\zeta _n(t+1))\) and \({\mathrm {Cov}}_t(\xi _n(t+1),\zeta _n(t+1))\), which we derive in the three successive claims.

Claim 1

For any \(t\ge 1\),

Proof

The proof is immediate by observing that the random variable denoting the household size has variance \(\sigma ^2\). \(\square \)

Claim 2

For any \(t\ge 1\),

Proof

From the definition of \(\xi _n\) in (4.3), the computation of \({\mathrm {Var}}_t(\xi _n(t+1))\) requires computation of \({\mathrm {Var}}_t(\eta _1(t+1))\), \({\mathrm {Cov}}_t(\eta _1(t+1),\eta _2(t+1))\) and \({\mathrm {Var}}_t(\eta _2(t+1))\). Since \(\eta _1\) follows a Hypergeometric distribution,

and

since \(\sigma ^2=\mu -1=\alpha c.\) Also, we have

and therefore

Further,

which implies that

Combining (4.16), (4.18) and (4.20), gives (4.14). \(\square \)

Claim 3

For any \(t\ge 1\),

Proof

Observe that

and therefore,

Thus,

and hence

Combining (4.23) and (4.25) yields (4.21). \(\square \)

Based on the quadratic variation and covariation results above, the following lemma shows that the martingales when scaled by n, converge to the zero-process.

Lemma 4.1

For any fixed \(T\ge 0\), as \(n\rightarrow \infty \),

Proof

Observe that using (4.12) along with (4.13) and (4.14), we can claim for any \(T\ge 0\),

Thus, from Doob’s inequality [27, Theorem 1.9.1.3], the proof follows. \(\square \)

4.4 Convergence of the Scaled Exploration Process

Based on the estimates from Sections 4.2, and 4.3, we now complete the proof of Theorem 3.1. Recall the representations of \(\varvec{x}_n\), and \(\varvec{y}_n\) from (4.10) and (4.11). Fix any \( 0 \le T < 1/\mu \). Observe that Lemma 4.1 immediately yields

Next note that \(\delta (x,y)\), as defined in (4.9), is Lipschitz continuous on \([0,1]\times [\epsilon , 1]\) for any \(\epsilon >0\) and we can choose this \(\epsilon > 0\) in such a way that \(y(t)\ge \epsilon \) for all \(t\le T\) (since \(T<1/\mu \)). Therefore, the Lipschitz continuity of \(\delta \) implies that there exists a constant \(C>0\) such that

Thus,

where, by Lemma 4.1 and (4.28),

which converges in probability to zero, as \(n\rightarrow \infty \). Using Grőnwall’s inequality [18, Theorem 5.1], we get

Finally, due to Claim 4 below we note that the smallest root of x(t) is strictly smaller than \(1/\mu \). Also, the convergence in (4.29) holds for any \(T<1/\mu \). This concludes the proof of Theorem 3.1. \(\square \)

The claim below establishes that \(J^{\star }<1/\mu \).

Claim 4

\(J^\star <1/\mu \).

Proof

Recall that \(\mu = (1+\alpha c)\) and \(\lambda = (1-\alpha ) c\). Notice that (3.1) gives a linear differential equation, and the solution is given by

Thus, the smallest root of the integral equation (3.1) defined as \(J^\star \) must be the smallest positive solution of

The integrand in the left hand side of (4.31) is positive, and tends to \(\infty \) as t increases to \(1/\mu \). Therefore, the integral \( \int _0^t\mathrm {e}^{\lambda s}(1-\mu s)^{-1+1/\mu }\mathrm {d}s\) tends to infinity as well. Thus, there must exist a solution of (4.31) which is smaller that \(1/\mu \). This in turn implies that \(J^\star <\mu ^{-1}\). \(\square \)

We now complete the proof of Corollary 3.1.

Proof of Corollary 3.1

Observe from (4.30) and (4.31) that, for \(t<\mu ^{-1}\),

and the last term is zero when

Since \(J^\star \ge (1-\mathrm {e}^{-\mu \mathrm {e}^{-\frac{\lambda }{\mu }}})\), the proof is complete. \(\square \)

5 Proof of Theorem 3.2

Define the diffusion-scaled processes

and the diffusion-scaled martingales

Now observe from (4.10) that

Therefore, we can write

where

Furthermore, (4.11) yields

Based on the quadratic variation and covariation results in Sect. 4.3, the following lemma shows that the martingales when scaled by \(\sqrt{n}\) converge to a diffusion process described by an SDE.

Lemma 5.1

(Diffusion limit of martingales) As \(n\rightarrow \infty \), \(({\bar{\varvec{M}}}_n^{\scriptscriptstyle X}, {\bar{\varvec{M}}}_n^{\scriptscriptstyle Y}) \xrightarrow {\scriptscriptstyle d}(\varvec{W}_1,\varvec{W}_2)\), where the process \((\varvec{W}_1,\varvec{W}_2)\) is described by the SDE

with \(\varvec{B}_1\) and \(\varvec{B}_2\) two independent standard Brownian motions.

Proof

The idea is to use the martingale functional central limit theorem (cf. [31, Theorem 8.1]), where the convergence of the martingales is characterized by the convergence of their quadratic variation process. Using Theorem 3.1, we compute the asymptotics of the quadratic variations and covariation of \({\bar{\varvec{M}}}_n^{\scriptscriptstyle X}\) and \({\bar{\varvec{M}}}_n^{\scriptscriptstyle Y}\). From (4.10) and (4.13), we obtain

Again, (4.10), (4.14) and Theorem 3.1 yields

Finally, from (4.10), (4.21) and Theorem 3.1 we obtain

From the martingale functional central limit theorem, we get that \(({\bar{\varvec{M}}}_n^{\scriptscriptstyle X}, {\bar{\varvec{M}}}_n^{\scriptscriptstyle Y}) \xrightarrow {\scriptscriptstyle d}({\hat{\varvec{W}}}_1,{\hat{\varvec{W}}}_2)\), where \(({\hat{\varvec{W}}}_1,{\hat{\varvec{W}}}_2)\) are Brownian motions with zero means and quadratic covariation matrix

The proof then follows by noting the fact that  \(\square \)

\(\square \)

Having proved the above convergence of martingales, we now establish weak convergence of the scaled exploration process to a suitable diffusion process.

Proposition 5.2

(Functional CLT of the exploration process) As \(n\rightarrow \infty \), \((\bar{\varvec{X}}_n,{\bar{\varvec{Y}}}_n) \xrightarrow {\scriptscriptstyle d}(\varvec{X},\varvec{Y})\) where \((\varvec{X},\varvec{Y})\) is the two-dimensional stochastic process satisfying the SDE

with \(\varvec{B}_1\), \(\varvec{B}_2\) being independent standard Brownian motions, and f(t), g(t) and \(\rho (t)\) as defined in (3.4).

Proof

First we show that \((({\bar{\varvec{X}}}_n,{\bar{\varvec{Y}}}_n))_{n\ge 1}\) is a stochastically bounded sequence of processes. Indeed stochastic boundedness (and in fact weak convergence) of the \({\bar{\mathbf {Y}}}_n\) process follows from Lemma 5.1. Further observe that for any \(T<1/\mu \), by Theorem 3.1,

where f, g are defined in (3.4). Therefore, for any \(T<1/\mu \),

and again using Grőnwall’s inequality, it follows that

Then stochastic boundedness of \(({\bar{\varvec{X}}}_n)_{n\ge 1}\) follows from Lemma 5.1, (5.7), and the stochastic boundedness criterion for square-integrable martingales given in [31, Lemma 5.8].

From stochastic boundedness of the processes we can claim that any sequence \((n_k)_{k\ge 1}\) has a further subsequence \((n_k^\prime )_{k\ge 1}\subseteq (n_k)_{k\ge 1}\) such that

along that subsequence, where the limit \((\varvec{X}^\prime ,\varvec{Y}^\prime )\) may depend on the subsequence \((n_k)_{k\ge 1}\). However, due to the convergence result in Lemma 5.1 and (5.7), the continuous mapping theorem (see [50, Section 3.4]) implies that the limit \((\varvec{X}^\prime ,\varvec{Y}^\prime )\) must satisfy (5.6). Again, the solution to the SDE in (5.6) is unique, and therefore the limit \((\varvec{X}^\prime ,\varvec{Y}^\prime )\) does not depend on the subsequence \((n_k)_{k\ge 1}\). Thus, the proof is complete. \(\square \)

Proof of Theorem 3.2

First observe that

Indeed this can be seen by the application of the hitting time distribution theorem in [18, Theorem 4.1], and noting the fact that \(x^\prime (J^\star )=-1\). Now since \(\mathbf {X}\) is a centered Gaussian process, in order to complete the proof of Theorem 3.2, we only need to compute \({\mathrm {Var}}(X(J^\star ))\). We will use the following known result [1, Theorem 8.5.5] to calculate the variance of X(t).

Lemma 5.3

(Expectation and variance of SDE) Consider the d-dimensional stochastic differential equation given by

where \(Z(0)=z_0\in \mathbb {R}^d\), the \(b_i\)’s are \(\mathbb {R}^d\)-valued functions, and the \(B_i\)’s are independent standard Brownian motions, \(i=1,\ldots , d\). Then given \(Z(0)=x_0\), Z(t) has a normal distribution with mean vector m(t) and covariance matrix V(t), where m(t) and V(t) satisfy the recursion relations

with initial conditions \(m(0) = x_0\), and \(V (0) = 0\).

In our case, observe from (5.6) that

Denote the variance-covariance matrix of (X(t), Y(t)) by

Then

Therefore, the variance of X(t) can be obtained from the solution of the recursion equations

and the proof is thus completed by noting that \(\sigma _{yy}(t)=\sigma ^2t\). \(\square \)

6 Clustering Coefficient of Random Geometric Graphs

The clustering coefficient for the random geometric graph was derived in [13] along with an asymptotic formula, when the dimension becomes large. Below we give an alternative derivation. The formula (2.1) is more tractable in all dimensions compared with the formula in [13]. Consider n uniformly chosen points on a d-dimensional box \([0,1]^d\) and connect two points u, v by an edge if they are at most 2r distance apart. Fix any three vertex indices u, v, and w. We write \(u\leftrightarrow v\) to denote that u and v share an edge. The clustering coefficient for \(\textsc {rgg}(c,d)\) on n vertices is then defined by

The following proposition explicitly characterizes the asymptotic value of \(C_n(c,d)\) for any density c and dimension d.

Proposition 6.1

For any fixed \(c> 0\), and \(d\ge 1\), as \(n\rightarrow \infty ,\)

Proof

Observe that the \(\textsc {rgg}\) model can be constructed by throwing points sequentially at uniformly chosen locations independently, and then connecting to the previous vertices that are at most 2r distance away. Since the locations of the vertices are chosen independently, without loss of generality we assume that in the construction of the \(\textsc {rgg}\) model, the locations of u, v, w are chosen in this respective order. Now, the event \(\{u\leftrightarrow v,u\leftrightarrow w,v \leftrightarrow w\}\) occurs if and only if v falls within the 2r neighborhood of u and w falls within the intersection region of two spheres of radius 2r, centered at u and v, respectively. Let \(B_d(\varvec{x},2r)\) denote the d-dimensional sphere with radius 2r, centered at \(\varvec{x}\), and let \(V_d(2r)\) denote its volume. Since r is sufficiently small, so that \(B_d(\varvec{x},2r)\subseteq [0,1]^d\), using translation invariance, we assume that the location of u is \(\varvec{0}\). Let \(\varvec{v},\varvec{w}\) denote the positions in the d-dimensional space, of vertices v and w, respectively. Notice that, conditional on the event \(\{\varvec{v}\in B_d(\varvec{0},2r)\}\), the position \(\varvec{v}\) is uniformly distributed over \(B_d(\varvec{0},2r)\). Let \(\varvec{V}\) be a point chosen uniformly from \(B_d(\varvec{0},2r)\). Then the above discussion yields

We shall use the following lemma to compute the expectation term in (6.3).

Lemma 6.2

[26] For any \(\varvec{x}\) with \(\Vert \varvec{x}\Vert = \rho \), the intersection volume \(|B_d(\varvec{0},2r)\cap B_d(\varvec{x},2r)|\) depends only on \(\rho \) and r, and is given by

where \(I_z(a,b)\) denotes the normalized incomplete beta integral given by

Observe that the Jacobian corresponding to the transformation from the Cartesian coordinates \((x_1,\ldots ,x_d)\) to the Polar coordinates \((\rho ,\theta ,\phi _1,\ldots ,\phi _{d-2})\), is given by

Thus, (6.3) reduces to

and we obtain,

since

Therefore, putting \(x = \rho /2r\), yields

which proves the result. \(\square \)

7 Discussion

We introduced a clustered random graph model with tunable local clustering and a sparse superimposed structure. The level of clustering was set to suitably match the local clustering in the topology generated by the random geometric graph. This resulted in a unique parameter \(\alpha _d\) that for each dimension d creates a one-to-one mapping between the tractable random network model and the intractable random geometric graph. In this way, we offer a new perspective for understanding \(\textsc {rsa}\) on the continuum space in terms of rsaon random networks with local clustering. Analysis of the random network model resulted in precise characterizations of the limiting jamming fraction and its fluctuation. The precise results then served, using the one-to-one mapping, as predictions for the fraction of covered volume for rsain the Euclidean space. Based on extensive simulations we then showed that these prediction were remarkably accurate, irrespective of density or dimension.

In our analysis the random network model serves as a topology generator that replaces the topology generated by the random geometric graph. While the latter is directly connected with the metric in the Euclidian space, the only spatial information in the topologies generated by the random network model is contained in the matched average degree and clustering. One could be inclined to think that random topology generators such as the \({\textsc {crg}}(c,\alpha _d)\) model may not be good enough. Indeed, this random network model reduces all possible interactions among pairs of vertices to only two principal components: the local interactions due to the clustering, and a mean-field distant interaction. There is, however, building evidence that such randomized topologies can approximate rigid spatial topologies when the local interactions in both topologies are matched. Apart from this paper, the strongest evidence to date for this line of reasoning is [25], where it was shown that the typical ensembles from the latent-space geometric graph model can be modeled by an inhomogeneous random graph model that matches with the original graph in terms of the average degree and a measure of clustering. We should mention that [25] is restricted to one-dimensional models and does not deal with \(\textsc {rsa}\), but it shares with this paper the perspective that matching degrees and local clustering can be sufficient for describing spatial settings.

References

Arnold, L.: Stochastic Differential Equations: Theory and Applications. Wiley, New York (1974)

Ball, F., Britton, T., Sirl, D.: A network with tunable clustering, degree correlation and degree distribution, and an epidemic thereon. J. Math. Biol. 66(4), 979–1019 (2013)

Barthélemy, M.: Spatial networks. Phys. Rep. 499(1), 1–101 (2011)

Baryshnikov, Y., Eichelsbacher, P., Schreiber, T., Yukich, J.E.: Moderate deviations for some point measures in geometric probability. Ann. Inst. Henri Poincaré Probab. Stat. 44(3), 422–446 (2008)

Baryshnikov, Y., Yukich, J.E.: Gaussian fields and random packing. J. Stat. Phys. 111(1), 443–463 (2003)

Baryshnikov, Y., Yukich, J.E.: Gaussian limits for random measures in geometric probability. Ann. Appl. Probab. 15(1A), 213–253 (2005)

Bermolen, P., Jonckheere, M., Moyal, P.: The jamming constant of uniform random graphs. arXiv:1310.8475 (2013)

Bermolen, P., Jonckheere, M., Sanders, J.: Scaling limits for exploration algorithms. arXiv:1504.02438 (2015)

Brightwell, G., Janson, S., Luczak, M.: The greedy independent set in a random graph with given degrees. arXiv:1510.05560 (2015)

Cadilhe, A., Araújo, N.A.M., Privman, V.: Random sequential adsorption: from continuum to lattice and pre-patterned substrates. J. Phys. Condens. Matter 19(6), 65124 (2007)

Chen, J.Y., Klemic, J.F., Elimelech, M.: Micropatterning microscopic charge heterogeneity on flat surfaces for studying the interaction between colloidal particles and heterogeneously charged surfaces. Nano Lett. 2(4), 393–396 (2002)

Coupechoux, E., Lelarge, M.: How clustering affects epidemics in random networks. Adv. Appl. Probab. 46(4), 985–1008 (2014)

Dall, J., Christensen, M.: Random geometric graphs. Phys. Rev. E 66(1), 016121 (2002)

Demers, L.M., Ginger, D.S., Park, S.J., Li, Z., Chung, S.W., Mirkin, C.A.: Direct patterning of modified oligonucleotides on metals and insulators by dip-pen nanolithography. Science 296(5574), 1836–1838 (2002)

Devroye, L., György, A., Lugosi, G., Udina, F.: High-dimensional random geometric graphs and their clique number. Electron. J. Probab. 16, 2481–2508 (2011)

Dhara, S., van Leeuwaarden, J.S.H., Mukherjee, D.: Generalized random sequential adsorption on Erdős–Rényi random graphs. J. Stat. Phys. 164(5), 1217–1232 (2016)

Eichelsbacher, P., Raič, M., Schreiber, T.: Moderate deviations for stabilizing functionals in geometric probability. Ann. Inst. Henri Poincaré Probab. Stat. 51(1), 89–128 (2015)

Ethier, S.N., Kurtz, T.G.: Markov Processes: Characterization and Convergence. Wile, New York (2009)

Feder, J.: Random sequential adsorption. J. Theor. Biol. 87(2), 237–254 (1980)

Gijswijt, D.C., Mittelmann, H.D., Schrijver, A.: Semidefinite code bounds based on quadruple distances. IEEE Trans. Inf. Theory 58(5), 2697–2705 (2012)

Jaksch, D., Cirac, J.I., Zoller, P., Rolston, S.L., Côté, R., Lukin, M.D.: Fast quantum gates for neutral atoms. Phys. Rev. Lett. 85(10), 2208–2211 (2000)

Karatzas, I., Shreve, S.E.: Brownian Motion and Stochastic Calculus, vol. 113. Springer, New York (1991)

Karrer, B., Newman, M.E.J.: Random graphs containing arbitrary distributions of subgraphs. Phys. Rev. E 82(6), 066118 (2010)

Kim, H.K., Toan, P.T.: Improved semidefinite programming bound on sizes of codes. IEEE Trans. Inf. Theory 59(11), 7337–7345 (2013)

Krioukov, D.: Clustering implies geometry in networks. Phys. Rev. Lett. 116(20), 208302 (2016)

Li, S.: Concise formulas for the area and volume of a hyperspherical cap. Asian J. Math. Stat. 4(1), 66–70 (2011)

Liptser, R., Shiryaev, A.: Theory of Martingales. Springer, New York (1989)

Lukin, M.D., Fleischhauer, M., Cote, R., Duan, L.M., Jaksch, D., Cirac, J.I., Zoller, P.: Dipole blockade and quantum information processing in mesoscopic atomic ensembles. Phys. Rev. Lett. 87(3), 037901 (2001)

Newman, M.E.J.: Random graphs with clustering. Phys. Rev. Lett. 103(5), 058701 (2009)

Newman, M.E.J.: Networks: An Introduction. Oxford University Press, Oxford (2010)

Pang, G., Talreja, R., Whitt, W.: Martingale proofs of many-server heavy-traffic limits for Markovian queues. Probab. Surv. 4, 193–267 (2007)

Penrose, M.: Random Geometric Graphs. Oxford University Press, Oxford (2003)

Penrose, M.D.: Random parking, sequential adsorption, and the jamming limit. Commun. Math. Phys. 218(1), 153–176 (2001)

Penrose, M.D., Yukich, J.: Limit theory for random sequential packing and deposition. Ann. Appl. Probab. 12(1), 272–301 (2002)

Saffman, M., Walker, T.G., Mølmer, K.: Quantum information with Rydberg atoms. Rev. Mod. Phys. 82(3), 2313–2363 (2010)

Sanders, J., Jonckheere, M., Kokkelmans, S.: Sub-Poissonian statistics of jamming limits in ultracold Rydberg gases. Phys. Rev. Lett. 115(4), 043002 (2015)

Sanders, J., van Bijnen, R., Vredenbregt, E., Kokkelmans, S.: Wireless network control of interacting Rydberg atoms. Phys. Rev. Lett. 112(16), 163001 (2014)

Schreiber, T., Penrose, M.D., Yukich, J.E.: Gaussian limits for multidimensional random sequential packing at saturation. Commun. Math. Phys. 272(1), 167–183 (2007)

Schreiber, T., Yukich, J.: Large deviations for functionals of spatial point processes with applications to random packing and spatial graphs. Stoch. Process. Appl. 115(8), 1332–1356 (2005)

Shannon, C.E.: A mathematical theory of communication. ACM SIGMOBILE Mob. Comput. Commun. Rev. 5(1), 3–55 (2001)

Solomon, H.: Random packing density. In: Proceedings of the Fifth Berkeley Symposium on Mathematical Statistics, vol. 3, pp. 119–134 (1967)

Stegehuis, C., van der Hofstad, R., van Leeuwaarden, J.S.H.: Epidemic spreading on complex networks with community structures. Sci. Rep. 6, 29748 (2016)

Stegehuis, C., van der Hofstad, R., van Leeuwaarden, J.S.H.: Power-law relations in random networks with communities. Phys. Rev. E 94(1), 012302 (2016)

Talbot, J., Schaaf, P., Tarjus, G.: Random sequential addition of hard spheres. Mol. Phys. 72(6), 1397–1406 (1991)

Torquato, S.: Random Heterogeneous Materials: Microstructure and Macroscopic Properties, vol. 16. Springer, New York (2013)

Torquato, S., Stillinger, F.H.: Jammed hard-particle packings: from kepler to bernal and beyond. Rev. Mod. Phys. 82, 2633–2672 (2010)

Torquato, S., Uche, O.U., Stillinger, F.H.: Random sequential addition of hard spheres in high Euclidean dimensions. Phys. Rev. E 74(6), 061308 (2006)

Trapman, P.: On analytical approaches to epidemics on networks. Theor. Popul. Biol. 71(2), 160–173 (2007)

van der Hofstad, R., van Leeuwaarden, J.S.H., Stegehuis, C.: Hierarchical configuration model. arXiv:1512.08397 (2015)

Whitt, W.: Stochastic-Process Limits. Operations Research and Financial Engineering. Springer, New York (2002)

Zhang, G., Torquato, S.: Precise algorithm to generate random sequential addition of hard hyperspheres at saturation. Phys. Rev. E 88(5), 053312 (2013)

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Dhara, S., van Leeuwaarden, J.S.H. & Mukherjee, D. Corrected Mean-Field Model for Random Sequential Adsorption on Random Geometric Graphs. J Stat Phys 173, 872–894 (2018). https://doi.org/10.1007/s10955-018-2019-8

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10955-018-2019-8