Abstract

We study the equilibrium distribution of relative strategy scores of agents in the asymmetric phase (\(\alpha \equiv P/N\gtrsim 1\)) of the basic Minority Game using sign-payoff, with N agents holding two strategies over P histories. We formulate a statistical model that makes use of the gauge freedom with respect to the ordering of an agent’s strategies to quantify the correlation between the attendance and the distribution of strategies. The relative score \(x\in \mathbb {Z}\) of the two strategies of an agent is described in terms of a one-dimensional random walk with asymmetric jump probabilities, leading either to a static and asymmetric exponential distribution centered at \(x=0\) for fickle agents or to diffusion with a positive or negative drift for frozen agents. In terms of the scaled coordinates \(x/\sqrt{N}\) and \(t/N\) the distributions depend only on \(\alpha \) and agree quantitatively with direct simulations of the game. As the model avoids the reformulation in terms of a constrained minimization problem, it can be used for arbitrary payoff functions with little calculational effort, and it provides a transparent and simple formulation of the dynamics of the basic Minority Game in the asymmetric phase.


1 Introduction

A minority game can be exemplified by the following simple market analogy: an odd number N of traders (agents) must at each time step choose between two options, buying or selling a share, with the aim of picking the minority group. If sell is in the minority and buy in the majority, one may expect the price to go up to satisfy demand, and vice versa if buy is in the minority, thus motivating the minority character of the game. Clearly, there is no way to make everyone content: more than half of the agents will inevitably end up in the majority group each round. As the losing agents will try to improve their lot, there is no static equilibrium. Instead, agents might be expected to adapt their buy or sell strategies based on perceived trends in the history of outcomes [1–12].

The Minority Game proposed by Challet and Zhang [2, 3] formalizes this type of market dynamics, where agents of limited intellect compete for a scarce resource by adapting to the aggregate input of all others [1, 12]. Each agent has a set of strategies that, based on the history of minority groups over the last m time steps, predict whether the next minority will be buy or sell. At each time step an agent uses her highest-scoring strategy, i.e. the one that has most accurately predicted past minority groups. The state space of the game is given by the strategy scores of each agent together with the recent history of minority groups, and the discrete time evolution in this space constitutes an intricate dynamical system.

What makes the game appealing from a physics perspective is that it can be described using methods from the statistical physics of disordered systems, with the set of randomly assigned strategies corresponding to quenched disorder [5, 8, 13–17]. In particular, Challet, Marsili, and co-workers showed that the model can be formulated in terms of the gradient descent dynamics of an underlying Hamiltonian [13], plus noise. The asymptotic dynamics corresponds to minimizing the Hamiltonian with respect to the frequencies with which agents use each strategy, a problem that in turn can be solved using the replica method [8, 17, 18]. In a complementary development, Coolen solved the statistical dynamics of the problem in its full complexity using generating functionals [14–16].

The game is controlled by the parameter \(\alpha =P/N\), where \(P=2^m\) is the number of distinct histories that agents take into account. Varying \(\alpha \) tunes the system through a phase transition (for \(N\rightarrow \infty \)) at a critical value \(\alpha _c=0.3374\ldots \). In the symmetric (or crowded) phase, \(\alpha < \alpha _c\), the game is quasi-periodic with period 2P: a given history alternately produces one or the other outcome for the minority group [4, 19]. A somewhat oversimplified characterization of the dynamics is that the information about the last winning minority group for a given history produces a crowding effect [20]: many agents want to repeat the last winning outcome, which counterproductively puts them in the majority group instead. The crowding also leads to large fluctuations in the size of the minority group.

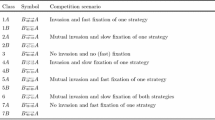

In the asymmetric (or dilute) phase, \(\alpha >\alpha _c\), agents are sufficiently uncorrelated that crowding effects are not important and there is no periodic behavior. Instead, as exemplified in Fig. 1, the score dynamics is random but with a net correlation between agents that keeps fluctuations in the size of the minority group small. The dilute occupation of the full strategy space gives rise to a non-uniform frequency distribution of histories, which can be beneficial for agents with strategies that are tuned to this asymmetry.

Evolution of strategy scores for the two strategies of four (\(i=1,\ldots ,4\)) representative agents in a game with \(N=101\) agents and a memory of length \(m=7\) (\(P=2^7\)). At each time step every agent uses whichever of her two strategies has the highest momentary score, given by how well the strategy has predicted the past minority groups. The corresponding score difference \(x_i(t)\) (inset) shows the distinction between frozen agents, which consistently use a single strategy, and fickle agents, which switch between strategies.

In this paper we study the dynamics of the Minority Game in the asymmetric phase by formulating a simplified statistical model, focusing on finding probability distributions for the relative strategy scores. In particular, we study the original formulation of the game with sign-payoff for which quantitative results are challenging to derive. By sorting the strategies based on how strongly they are correlated with the average over all strategies in the game, we find that sufficient statistical information can be extracted to formulate a quantitatively accurate model for \(\alpha \gtrsim 1\).

We show how the relative score of each agent can be described by the master equation of a random walk on a chain with asymmetric jump probabilities to nearest-neighbor sites, and how these jump probabilities can be calculated from the basic dynamic update equation of the scores. The corresponding probability distributions of scores take the form either of exponential localization or of diffusion with a drift. In the appendices we show that the model is related to, but independent of, the Hamiltonian formulation, and we show how it can also be readily applied to the game with linear payoff, where the master equation has long-range hopping.

Although the MG is well understood from the classic works discussed above, it is our hope that the simplified model of the steady state attendance and score distributions presented in this paper provides an alternative and readily accessible perspective on this fascinating model.

2 Definition of the Game and Outline

In order to give an overview of our results and for completeness we start by providing the formal definition of the Minority Game and some basic properties [2, 3, 10, 11].

At each discrete time step every agent gives a binary bid \(a_i(t)=\pm 1\), all of which are collected into a total attendance

\[A_t=\sum _{i=1}^{N}a_i(t)\]

(N odd), and the winning minority group is then identified through \(-\text {sign}(A_t)\). A binary string of the m past winning bids, called a history \(\mu \), is provided as global information to each agent, upon which to base her decision for the following round. There are thus \(P=2^m\) different histories, labeled \(\mu =1,\ldots ,P\). At her disposal each agent has two randomly assigned strategies (a.k.a. strategy tables) that provide a unique bid for each history. The bid of strategy \(j=1,2\) of agent \(i=1,\ldots ,N\) in response to history \(\mu \) is given by \(a_{i,j}^\mu =\pm 1\), and the full strategy is the P-dimensional random binary vector \(\vec {a}_{i,j}\). There are thus a total of \(2^P\) distinct strategies available.

At each time step an agent uses the strategy that has made the best predictions of the minority group historically. This is decided by a score \(U_{i,j}(t)\) for each strategy, which is updated according to \(U_{i,j}(t+1)=U_{i,j}(t)-a_{i,j}^{\mu }\text {sign}(A^{\mu }_t)\), irrespective of whether the strategy is actually being used. (Here the superscript \(\mu \) on \(A_t\) just indicates that the attendance depends on the history \(\mu (t)\) that determines the bids at time t.) Ties, i.e. \(U_{i,1}=U_{i,2}\), are decided by a coin toss.

Since only the relative score between an agent’s two strategies matters in deciding which strategy to use, one may focus on the relative score

\[x_i(t)=\frac{1}{2}\left[U_{i,1}(t)-U_{i,2}(t)\right].\]

This is updated according to

\[x_i(t+1)=x_i(t)+\Delta _i(t),\]

where

\[\Delta _i(t)=-\xi _i^{\mu }\,\text {sign}(A^{\mu }_t),\]

and where \(\vec {\xi }_i=(\vec {a}_{i,1}-\vec {a}_{i,2})/2\) is an agent’s “difference vector”, which takes the value \(\pm 1\) or 0 for each history \(\mu \).
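To make these update rules concrete, here is a minimal sketch of the basic sign-payoff game in plain Python (standard library only). The function name `simulate_minority_game`, the random initial history, and the choice to record every \(x_i(t)\) are our own illustrative assumptions, not the simulation code used for the figures. With \(x_i=(U_{i,1}-U_{i,2})/2\), each step changes \(x_i\) by \(-\xi _i^\mu \text {sign}(A_t^\mu )\in \{-1,0,1\}\), which the sketch lets one verify directly.

```python
import random


def simulate_minority_game(N=101, m=7, steps=2000, seed=1):
    """Sketch of the basic sign-payoff Minority Game, two strategies per agent.

    Returns the strategy tables a[i][j][mu], the final scores U[i][j] and the
    relative scores x_i(t) = (U_i1 - U_i2)/2 recorded after every step.
    """
    assert N % 2 == 1, "N must be odd so that A_t is never zero"
    rng = random.Random(seed)
    P = 2 ** m
    # a[i][j][mu] = +-1: bid of strategy j of agent i in response to history mu
    a = [[[rng.choice((-1, 1)) for _ in range(P)] for _ in range(2)]
         for _ in range(N)]
    U = [[0, 0] for _ in range(N)]
    mu = rng.randrange(P)  # arbitrary initial history (an assumption)
    xs = []
    for _ in range(steps):
        A = 0
        for i in range(N):
            # play the highest-scoring strategy; ties decided by a coin toss
            if U[i][0] == U[i][1]:
                j = rng.randrange(2)
            else:
                j = 0 if U[i][0] > U[i][1] else 1
            A += a[i][j][mu]
        sign_A = 1 if A > 0 else -1
        # score update U <- U - a^mu sign(A), applied to both strategies
        for i in range(N):
            U[i][0] -= a[i][0][mu] * sign_A
            U[i][1] -= a[i][1][mu] * sign_A
        # U_i1 - U_i2 changes by 0 or +-2 per step, so the halving is exact
        xs.append([(U[i][0] - U[i][1]) // 2 for i in range(N)])
        # shift the winning (minority) bid -sign(A) into the m-bit history
        mu = ((mu << 1) | (1 if sign_A < 0 else 0)) % P
    return a, U, xs


a, U, xs = simulate_minority_game(N=101, m=7, steps=500, seed=3)
```

With \(N=101\) and \(m=7\) this is the setting of Fig. 1 (\(\alpha \approx 1.27\)); plotting a few columns of `xs` shows the frozen and fickle behaviour described next.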

To make the dynamics generated by these equations more concrete, Fig. 1 shows the scores \(U_{i,1/2}\), \(i=1,\ldots ,4\), of the strategies of four particular agents for one realization of a game with \(N=101\), \(P=2^7\), together with the corresponding relative scores \(x_i\) (inset), over a limited time interval. As exemplified by this figure, agents come in two flavors, known as “frozen” and “fickle” [5, 14]. An agent is frozen if one of her strategies performs consistently better than the other, such that on average the score difference grows without bound, whereas fickle agents have a relative score that meanders around \(x=0\) as they switch between strategies. The motion of \(x_i\) for both fickle and frozen agents is a random walk with a bias towards or away from \(x=0\). A basic problem is to characterize and understand this random walk and derive the corresponding probability distribution \(P_i(x,t)\), the probability of finding agent i at position x at time t [10, 16].

2.1 Outline and Results

As presented in Sect. 3, we can quantify the correlation between an agent’s strategies, specified by \(\xi _i^\mu \), and the total attendance \(A_t^\mu \). This in turn allows us to characterize the mean (time-averaged) step size \(\Delta _i=\langle x_i(t+1)-x_i(t)\rangle \) in terms of a distribution over agents \(P(\Delta _i)\). In agreement with earlier work, we find that \(\Delta _i\) has two contributions: a bias term directed towards the center (\(x=0\)), which arises from self-interaction (the used strategy contributes to the attendance and as such is more likely to be in the majority group [17]), and a fitness term, which reflects the relative adaptation of the agent’s two strategies to the time-averaged stochastic environment of the game. The distribution of step sizes over the population of agents is shown in Fig. 3. Frozen agents are simply those for which the fitness overcomes the bias, such that \(\Delta _i>0\) for \(x>0\) or \(\Delta _i<0\) for \(x<0\), whereas for fickle agents \(\Delta _i<0\) for \(x>0\) and vice versa.

Knowing the mean step size of an agent allows for a formulation in terms of a one-dimensional random walk (Fig. 4) with corresponding jump probabilities, as presented in Sect. 4. Depending on whether the agent is more likely to jump towards the center or not (fickle or frozen, respectively), the master equation on the chain can be solved in terms of a stationary exponential distribution centered at \(x=0\) or (in the continuum limit) a normal distribution whose mean and variance grow linearly in time (diffusion with drift). These are the distributions \(P_i(x,t)\) depending on \(\Delta _i\).

In simulations over many agents it is natural to consider the full distribution \(P(x,t)=\sum _{i=1}^{N} P_i(x,t)/N=\int P(\Delta _i)P_i(x,t)d\Delta _i\), which is the probability that a randomly chosen agent has relative score x at time t (so that \(NP(x,t)\) is the expected number of agents with relative score x). In terms of the scaled coordinates \(x/\sqrt{N}\) and \(t/N\) we find that the distribution depends only on \(\alpha \). The model distributions show excellent agreement with direct numerical simulations (Figs. 5 and 6) with no fitting parameters. This result for the full distribution of relative scores, together with its systematic derivation for the original sign-payoff game, represents the main result of this paper.

In Appendix 2 we discuss the relation between the model presented in this work and the formulation in terms of a minimization problem of a Hamiltonian generator of the asymptotic dynamics [8, 13]. We find that one way to view the present model is as a reduced ansatz for the ground state where the only parameters are the fraction of positively and negatively frozen agents (solved for self-consistently) instead of the full space of the frequency of use of each strategy. With this ansatz closed expressions can be derived for the steady state distributions irrespective of the form of the Hamiltonian.

In Appendix 3 we show how the model applies to the game with linear payoff \(\Delta _i(t)=-\xi _i^\mu A^\mu _t\).

3 Statistical Model

We will now describe the statistical model in some detail and derive the results discussed in the previous section. For each agent we define the half-sum and half-difference of her two strategies, \(\vec {\omega }_i=(\vec {a}_{i,1}+\vec {a}_{i,2})/2\) and (as discussed above) \(\vec {\xi }_i=(\vec {a}_{i,1}-\vec {a}_{i,2})/2\) [5]. Clearly \(\omega _i^\mu \), being half the sum of two random numbers \(\pm 1\), takes the values \((-1,0,1)\) with probabilities (1/4, 1/2, 1/4). A non-zero value of \(\omega _i^\mu \) means that agent i always makes the same bid for history \(\mu \), independently of which strategy she has in play. The sum over all agents, \(\vec {\Omega }=\sum _{i=1}^N\vec {\omega }_i\), thus gives a history-dependent but time-independent background contribution to the attendance (in the sense that every time history \(\mu \) occurs in the time series it gives the same contribution). Since each \(\omega _i^\mu \) has mean zero and variance 1/2, this background \(\Omega ^\mu \) is, for large N, normally distributed with mean zero and variance

\[\sigma _\Omega ^2=\frac{N}{2}.\]
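A quick Monte Carlo check of these statistics, sketched with the standard library only: \(\omega _i^\mu \) should take the values \(-1,0,1\) with frequencies close to 1/4, 1/2, 1/4, and the variance of \(\Omega ^\mu \) over histories should be close to \(N/2\). The function name and the sizes \(N=401\), \(P=256\) are illustrative choices. The code also checks the identities \(a_{i,1/2}^\mu =\omega _i^\mu \pm \xi _i^\mu \) and \(\omega _i^\mu \xi _i^\mu =0\) that underlie the decomposition of the attendance used below.

```python
import random
import statistics


def background_statistics(N=401, P=256, seed=0):
    """Draw N random agents with two strategies over P histories and return
    (i) the empirical frequencies of omega_i^mu in {-1, 0, 1} and
    (ii) the variance of the background Omega^mu over histories."""
    rng = random.Random(seed)
    counts = {-1: 0, 0: 0, 1: 0}
    Omega = [0] * P
    for _ in range(N):
        for mu in range(P):
            a1, a2 = rng.choice((-1, 1)), rng.choice((-1, 1))
            omega, xi = (a1 + a2) // 2, (a1 - a2) // 2
            # both bids are recovered from the half-sum and half-difference
            assert (a1, a2) == (omega + xi, omega - xi)
            # omega and xi are never both non-zero
            assert omega * xi == 0
            counts[omega] += 1
            Omega[mu] += omega
    freqs = {k: v / (N * P) for k, v in counts.items()}
    return freqs, statistics.pvariance(Omega)


freqs, var_Omega = background_statistics()
```

At these sizes the sample variance of \(\Omega ^\mu \) fluctuates by roughly \(\sqrt{2/P}\approx 9\%\) around \(N/2\), so only rough agreement should be expected.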

An interesting property of the Minority Game is that there is a “\(Z_2\) gauge” freedom with respect to the arbitrary choice of which strategy is called 1 and which is called 2, corresponding to a change of sign of \(\vec {\xi }_i\). Such a sign change simply changes the sign of \(x_i(t)\) and has no consequence for which strategy is actually in play. (It is the strategy in play that is an observable, not whether it is labeled 1 or 2.) Nevertheless, it turns out that a consistent definition of the order of strategies is helpful in formulating a simple statistical model. Explicitly, we order the two strategies (“fix the gauge”) of all agents i such that

\[\vec {\xi }_i\cdot \vec {\Omega }\le 0.\]

Shortly we will describe the distribution over agents of \(\xi _i^\mu \), to quantify its anticorrelation with \(\Omega ^\mu \).
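The gauge fixing is nothing more than a relabeling of each agent’s two strategy tables. A minimal sketch (the helper names `xi_dot_Omega` and `fix_gauge` are our own) swaps an agent’s strategies whenever \(\vec {\xi }_i\cdot \vec {\Omega }>0\) and leaves \(\vec {\Omega }\), which is symmetric in the two strategies, unchanged:

```python
import random


def xi_dot_Omega(a1, a2, Omega):
    """Overlap xi_i . Omega with xi_i = (a_i1 - a_i2)/2."""
    return sum(((p - q) // 2) * w for p, q, w in zip(a1, a2, Omega))


def fix_gauge(strategies, Omega):
    """Relabel each agent's strategies so that xi_i . Omega <= 0.

    Swapping (a_i1, a_i2) flips the sign of xi_i (and of x_i) but leaves
    omega_i, Omega and the set of strategies, i.e. the game itself, unchanged.
    """
    return [(a2, a1) if xi_dot_Omega(a1, a2, Omega) > 0 else (a1, a2)
            for a1, a2 in strategies]


rng = random.Random(5)
N, P = 51, 32
strategies = [([rng.choice((-1, 1)) for _ in range(P)],
               [rng.choice((-1, 1)) for _ in range(P)]) for _ in range(N)]
# the background Omega^mu = sum_i (a_i1^mu + a_i2^mu)/2 is gauge invariant
Omega = [sum((a1[mu] + a2[mu]) // 2 for a1, a2 in strategies) for mu in range(P)]
fixed = fix_gauge(strategies, Omega)
```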

To proceed we write the attendance at a time step t with history \(\mu \) as

\[A_t^\mu =\Omega ^\mu +\sum _{i=1}^{N}s_i(t)\,\xi _i^\mu ,\]

where \(s_i(t)=\pm 1\) depending on which strategy agent i is playing [5]. Again, the relative strategy score \(x_i\) of agent i is updated according to Eq. 4. Given the background contribution \(\vec {\Omega }\) to the attendance, we expect a surplus of \(s_i=1\) in the steady state with our choice of gauge, because strategy 1 is expected to be favored by the score update function. (In other words, strategy 1 is expected to have a higher fitness.) However, this correlation is not trivial, as the accumulated score also depends on the dynamically generated contribution to the attendance. As discussed previously, some fraction \(\phi \) of the agents are frozen, in the sense of always using the same strategy, \(s_i=\text {constant}\). We make an additional distinction (made significant by our choice of gauge) and separate the frozen agents into those with \(s_i(t)=1\) (fraction \(\phi _1\)) and those with \(s_i(t)=-1\) (fraction \(\phi _2\)), such that \(\phi =\phi _1+\phi _2\). Clearly, we expect the former to be more plentiful than the latter.
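The split of the attendance into a static background and a dynamical part follows from \(a_i^\mu =\omega _i^\mu +s_i\xi _i^\mu \). A small sketch (the function name is hypothetical) checks it for random strategy tables and random choices \(s_i\):

```python
import random


def attendance_two_ways(N=101, P=64, seed=2):
    """Compute A_t^mu directly from the bids and via Omega^mu + sum_i s_i xi_i^mu."""
    rng = random.Random(seed)
    a1 = [[rng.choice((-1, 1)) for _ in range(P)] for _ in range(N)]
    a2 = [[rng.choice((-1, 1)) for _ in range(P)] for _ in range(N)]
    s = [rng.choice((-1, 1)) for _ in range(N)]  # s_i = +1: strategy 1 in play
    mu = rng.randrange(P)
    direct = sum(a1[i][mu] if s[i] == 1 else a2[i][mu] for i in range(N))
    # time-independent background: depends only on the history mu
    Omega_mu = sum((a1[i][mu] + a2[i][mu]) // 2 for i in range(N))
    # dynamical part: depends on which strategies are in play at time t
    dynamic = sum(s[i] * ((a1[i][mu] - a2[i][mu]) // 2) for i in range(N))
    return direct, Omega_mu + dynamic


results = [attendance_two_ways(seed=k) for k in range(10)]
```

Only the second sum depends on time, through \(s_i(t)\); this is the part that the statistical model treats as fickle or frozen.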

We will now derive steady-state distributions over agents for the mean step size \(\Delta _i\). For this purpose we write the attendance as

\[A_t^\mu =\Omega ^\mu +S_t^\mu +X^\mu -Y^\mu ,\]

where

\[S_t^\mu =\sum _{i\in \text {fickle}}s_i(t)\,\xi _i^\mu ,\qquad X^\mu =\sum _{i\in \text {frozen},\,s_i=1}\xi _i^\mu ,\qquad Y^\mu =\sum _{i\in \text {frozen},\,s_i=-1}\xi _i^\mu ,\]

corresponding to the three categories of agents discussed previously. We make the following simplifying approximations for these three components. The fickle component we model as completely disordered, such that \(s_i(t)=\pm 1\) is random, and correspondingly (for large N) \(S_t\) is normally distributed with mean zero and variance

\[\sigma _S^2=\frac{\varphi N}{2},\]

with \(\varphi =(1-\phi _1-\phi _2)\) the fraction of fickle agents. (This neglects that the fickle agents would also have a net anticorrelation with the background \(\vec {\Omega }\).) The frozen components we model simply as sums of independent random variables drawn from the distribution of \(\vec {\xi }\), thus neglecting that the agents that are frozen may come from the extremes of this distribution.

To proceed, we need to find the distribution of \(\vec {\xi }_i\), i.e. how it varies over the set of agents. (Henceforth we will usually drop the index i and regard the objects as drawn from a distribution.) We begin by defining \(\vec {\psi }=\text {Random}(\pm 1)\vec {\xi }\), which is thus disordered with respect to the sign of \(\vec {\Omega }\cdot \vec {\psi }\) Footnote 1. The object \(\psi ^\mu \) is independent of \(\Omega ^\mu \) (ignoring 1/N corrections due to \(\Omega ^\mu \ne 0\) limiting the available bids \(\pm 1\)), taking the values \((1,0,-1)\) with probabilities (1/4, 1/2, 1/4), which gives mean zero and variance 1/2. Consider the joint object \(h=\frac{1}{P}\vec {\Omega }\cdot \vec {\psi }\). For large P it becomes normally distributed with mean zero and variance \(\sigma _h^2=\frac{1}{P}(N/2)(1/2)=1/(4\alpha )\) [5].

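Now, to quantify the correlation between \(\vec {\xi }\) and \(\vec {\Omega }\) we define the object

\[\tilde{h}=\frac{1}{P}\vec {\Omega }\cdot \vec {\xi }=-|h|,\]

which, by the gauge choice \(\vec {\xi }\cdot \vec {\Omega }\le 0\), has mean \(\langle \tilde{h}\rangle =-\int dh\,P(h)|h|=-1/\sqrt{2\pi \alpha }\) and \(\langle \tilde{h}^2\rangle =\sigma _h^2\). We represent this distribution by assuming that the components \(\xi ^\mu \) are independent Gaussian random variables with a mean that depends linearly on \(\Omega ^\mu \). With this assumption we find the conditional distribution

\[P(\xi ^\mu |\Omega ^\mu )=\mathcal{N}_{\xi ^\mu }\!\left(-\frac{c(\alpha )}{N}\Omega ^\mu ,\sigma _\xi \right),\]

where \(c(\alpha )=\sqrt{\frac{2}{\pi \alpha }}\), and \(\sigma ^2_{\xi }=1/2\), and where we write the normal distribution over x with mean \(\mu \) and variance \(\sigma ^2\) as \(\mathcal{N}_x(\mu ,\sigma )=\frac{1}{\sqrt{2\pi }\sigma }e^{-(x-\mu )^2/2\sigma ^2}\). (The slope \(-c(\alpha )/N\) follows from requiring \(\frac{1}{P}\sum _\mu \Omega ^\mu \langle \xi ^\mu |\Omega ^\mu \rangle =\langle \tilde{h}\rangle \) with \(\langle (\Omega ^\mu )^2\rangle =N/2\).) This quantifies that \(\xi ^\mu \) is on average anticorrelated with \(\Omega ^\mu \), which is expected to place strategy 1 in the minority group more often than strategy 2.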
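The two numbers that fix this Gaussian model can be checked by Monte Carlo with the exact discrete strategies. After gauge fixing, the agent average of \(\tilde{h}\) should approach \(-1/\sqrt{2\pi \alpha }\), and the pooled least-squares slope of \(\xi ^\mu \) on \(\Omega ^\mu \) should approach \(-c(\alpha )/N\), since \(-\frac{c(\alpha )}{N}\cdot \frac{N}{2}=-\frac{1}{\sqrt{2\pi \alpha }}\). The function name and the size \(N=P=400\) (\(\alpha =1\)) are illustrative assumptions.

```python
import math
import random


def gauge_fixed_overlap(N=400, P=400, seed=7):
    """Return the agent average of h~ = (1/P) Omega.xi after gauge fixing and
    the pooled least-squares slope of xi^mu on Omega^mu."""
    rng = random.Random(seed)
    a1 = [[rng.choice((-1, 1)) for _ in range(P)] for _ in range(N)]
    a2 = [[rng.choice((-1, 1)) for _ in range(P)] for _ in range(N)]
    Omega = [sum((a1[i][mu] + a2[i][mu]) // 2 for i in range(N))
             for mu in range(P)]
    total = 0
    for i in range(N):
        dot = sum(((p - q) // 2) * w for p, q, w in zip(a1[i], a2[i], Omega))
        total += -abs(dot)  # gauge fixing: flip xi_i if xi_i . Omega > 0
    mean_h_tilde = total / (N * P)
    # slope through the origin of xi^mu vs Omega^mu, pooled over agents and mu
    slope = total / (N * sum(w * w for w in Omega))
    return mean_h_tilde, slope


N, P = 400, 400
alpha = P / N
mean_h, slope = gauge_fixed_overlap(N, P)
c_alpha = math.sqrt(2 / (math.pi * alpha))
```

At this size both estimates agree with the model values only up to sampling noise of a few per cent.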

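Using Eq. 11 we can also calculate the distributions of \(X^\mu \) (\(Y^\mu \)) as the sum of \(\phi _1 N\) (\(\phi _2 N\)) correlated objects \(\xi _i^\mu \), giving

\[P(X^\mu |\Omega ^\mu )=\mathcal{N}_{X^\mu }\!\left(-\phi _1c(\alpha )\Omega ^\mu ,\sigma _{X|\Omega }\right),\qquad P(Y^\mu |\Omega ^\mu )=\mathcal{N}_{Y^\mu }\!\left(-\phi _2c(\alpha )\Omega ^\mu ,\sigma _{Y|\Omega }\right),\]

with conditional variances \(\sigma _{X|\Omega }^2=\phi _1N/2\) and \(\sigma _{Y|\Omega }^2=\phi _2N/2\).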

3.1 Distribution of Step Sizes

Given the model expressions for the distributions of all the components of the score update equation (Eq. 4), we will now find the distribution of mean (time-averaged) step sizes. As a first step we integrate out the fast variable \(S_t\) to obtain the time-averaged step size conditional on the history, \(\Delta ^\mu =\langle \Delta (t)|\mu \rangle \). (Over a long time series of the game every history \(\mu \) occurs many times; we thus average over all occurrences of a single history.) This corresponds to

The second term, which is a self-interaction, follows from the discrete nature of the original problem. It gives a negative bias for the used strategy, arising from the fact that if the net attendance from all other agents is zero, the used strategy puts the agent in the majority group. (The factor \(\frac{1}{2}\) in the delta function accounts for the fact that the attendance, as defined in Eq. 1, changes in steps of two, and the factor \(\text {sign}(x) \xi ^\mu \) comes from the fact that only the used strategy enters the attendance.) Integrated, this gives

where we have identified the first term as a fitness \(\Delta _{\text {fit}}\) which quantifies the relative fitness of the agent’s two strategies and the second as a negative bias \(\Delta _{\text {bias}}\) for the used strategy as discussed previously.

To calculate the distribution of mean step sizes we assume that all histories occur with the same frequency, such that \(\Delta =\frac{1}{P}\sum _\mu \Delta ^\mu \). This is in fact not the case for a single realization of the game in the dilute phase: some histories occur more often than others, as can be seen directly from any simulation in this regime. Nevertheless, for large P we assume that this variation in the occurrence of \(\mu \) averages out. As discussed extensively in the literature, the overall behavior of the game is insensitive to whether the actual history (endogenous information) or a random history (exogenous information) is supplied as input to the agents [10, 11, 16, 21, 22]. This is also confirmed by the present work through the good agreement between the model, which uses exogenous information, and simulations in which we use the actual history.

Assuming large P and given the assumption of independence of the distributions \(\Omega ,\xi ,X,Y\) for different \(\mu \) we expect the distribution \(P(\Delta )\) to approach a Gaussian (by the central limit theorem) with mean

with \(\Delta ^\mu \) as in Eq. 15, and with variance \(\sigma ^2=\frac{1}{P}(\overline{\Delta ^2}-\bar{\Delta }^2)\).

The integrals are readily done analytically, as described in Appendix 1, but the resulting expressions are very lengthy. The main features can be expressed in the following form:

where \(\tilde{\Delta }_{\text {bias}/\text {fit}}>0\) are functions that depend on N and P only through \(\alpha =P/N\), change slowly as functions of their arguments in the physically relevant regime \(0\le \phi _1+\phi _2\le 1\) (Fig. 7), and satisfy \(\tilde{\Delta }_{\text {bias}}(\alpha ,0,0)=\frac{1}{\sqrt{2\pi }}\) and \(\tilde{\Delta }_{\text {fit}}(\alpha ,0,0)=\frac{1}{\pi }\). As seen from Eq. 17, the mean bias points towards \(x=0\) (the used strategy is penalized), while the mean fitness is positive, acting to increase the relative score x, consistent with our choice of gauge as discussed earlier.

The only appreciable contribution to the variance comes from the fitness term, which scales as 1/P, whereas the variance of the bias term scales as 1/(NP) and is thus negligible (as is the cross term). The variance can be written

where \(\tilde{\sigma }>0\) also changes slowly in the relevant regime (Fig. 7) and satisfies \(\tilde{\sigma }(\alpha ,0,0)=\frac{1}{\sqrt{6}}\). The width of the fitness distribution explains why, even though \(\bar{\Delta }_\mathrm{fit}>0\), consistent with \(\phi _1\ne 0\), there are also some agents with a large negative fitness, which implies \(\phi _2\ne 0\). The fact that \(\vec {\xi }\cdot \vec {\Omega }< 0\) thus does not necessarily imply that strategy 1 is more successful than strategy 2, as the correlation with the other frozen agents is also an important factor. For large \(\alpha \), both the mean and the variance of the fitness vanish. This can be understood as a result of there being too few agents compared to the number of possible histories to maintain any appreciable correlation between an agent’s strategies and the aggregate background, \(\vec {\xi }\cdot \vec {\Omega }\approx 0\). In this limit, since the bias term always penalizes the used strategy, there can be no frozen agents. We also see that for given \(\alpha \) both the mean and the width of the distribution scale as \(1/\sqrt{N}\), consistent with simulations (Fig. 3).
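The quoted \(\phi _1=\phi _2=0\) limits can be reproduced with a few lines of numerical quadrature. This is a leading-order sketch under the model’s Gaussian assumptions, not a transcription of the lengthy expressions of Appendix 1. We assume the scalings \(\bar{\Delta }_{\text {fit}}=\tilde{\Delta }_{\text {fit}}/\sqrt{P}\), \(\sigma _{\text {fit}}=\tilde{\sigma }/\sqrt{P}\) and \(|\bar{\Delta }_{\text {bias}}|=\tilde{\Delta }_{\text {bias}}/\sqrt{N}\), which are consistent with the \(1/\sqrt{N}\) scaling at fixed \(\alpha \) noted above; the estimate of the bias is a heuristic of our own. The helper `gaussian_expectation` is a plain trapezoidal rule.

```python
import math


def gaussian_expectation(f, lo=-12.0, hi=12.0, n=24000):
    """E[f(z)] for z ~ N(0, 1), by the trapezoidal rule on [lo, hi]."""
    h = (hi - lo) / n
    total = 0.0
    for k in range(n + 1):
        z = lo + k * h
        weight = 0.5 if k in (0, n) else 1.0
        total += weight * f(z) * math.exp(-0.5 * z * z)
    return total * h / math.sqrt(2.0 * math.pi)


SQRT2 = math.sqrt(2.0)

# With phi_1 = phi_2 = 0: Omega^mu = sqrt(N/2) z and S ~ N(0, N/2), so
# <sign(Omega^mu + S)>_S = erf(Omega^mu / sqrt(N)) = erf(z / sqrt(2)).
# Mean fitness (c/N) <Omega erf(Omega/sqrt(N))>, multiplied by sqrt(P):
fit_tilde = gaussian_expectation(lambda z: z * math.erf(z / SQRT2)) / math.sqrt(math.pi)

# Fitness variance ~ (1/P) sigma_xi^2 <erf^2>, with sigma_xi^2 = 1/2
# (the O(1/N) shift of <xi^2> by the mean -c Omega/N is dropped):
sigma_tilde = math.sqrt(0.5 * gaussian_expectation(lambda z: math.erf(z / SQRT2) ** 2))

# Bias (heuristic): the agent's own bid decides sign(A) when the other agents'
# attendance, of variance N and lattice spacing 2, is exactly zero, which
# happens with probability ~2/sqrt(2 pi N); this is weighted by <xi^2> = 1/2
# and multiplied by sqrt(N):
bias_tilde = 0.5 * 2.0 / math.sqrt(2.0 * math.pi)
```

The quadrature gives \(\langle z\,\text {erf}(z/\sqrt{2})\rangle =1/\sqrt{\pi }\) and \(\langle \text {erf}^2(z/\sqrt{2})\rangle =1/3\), hence \(\tilde{\Delta }_{\text {fit}}=1/\pi \) and \(\tilde{\sigma }=1/\sqrt{6}\).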

3.2 Fraction of Frozen Agents

For each agent the score difference \(x_i\) moves with a mean step per unit time of

where \(\Delta _{\text {fit}}\) is drawn from the distribution \(\mathcal{N}(\bar{\Delta }_{\text {fit}},\sigma _\mathrm{fit})\). If the fitness is high, such that \(\Delta ^+>0\), the agent has a net positive drift and is frozen, with \(x_i>0\) growing without bound. The fraction of positively frozen agents is given by

Similarly, if the fitness is sufficiently poor, such that \(\Delta ^-<0\), the agent is frozen with \(x_i<0\) and \(|x_i|\) growing without bound. The fraction of negatively frozen agents is given by

and correspondingly the total fraction of frozen agents \(\phi =\phi _1+\phi _2\) and the fraction of fickle agents \(\varphi =1-\phi \) follow. Since \(\tilde{\Delta }_{\text {fit}}\), \(\tilde{\Delta }_{\text {bias}}\), and \(\tilde{\sigma }\) are functions of \(\alpha \), \(\phi _1\), and \(\phi _2\), the two equations can be solved for \(\phi _1(\alpha )\) and \(\phi _2(\alpha )\) as functions of the single parameter \(\alpha \). We find that the solutions are readily obtained by forward iteration; the results are plotted and compared to direct simulations of the game in Fig. 2 Footnote 2. The agreement is good, but this simplified model gives no indication of a phase transition at small \(\alpha \).
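The self-consistency loop can be sketched as a forward (fixed-point) iteration. Since the closed-form expressions for \(\tilde{\Delta }_{\text {fit}}\), \(\tilde{\Delta }_{\text {bias}}\), and \(\tilde{\sigma }\) from Appendix 1 are not reproduced here, the sketch takes them as a user-supplied callable. The stand-in `tilde_at_phi_zero` is hypothetical: it simply returns the \((\alpha ,0,0)\) values quoted above, so its output is a zeroth-order illustration, not the curves of Fig. 2. The sign convention \(\Delta ^\pm =\Delta _{\text {fit}}\mp b\), with \(b>0\) the bias magnitude, and the \(1/\sqrt{P}\), \(1/\sqrt{N}\) scalings are our assumptions.

```python
import math


def frozen_fractions(alpha, fit_t, bias_t, sigma_t):
    """phi_1, phi_2 for Gaussian fitness, in our own sign convention:
    Delta^+ = Delta_fit - b (strategy 1 in play, x > 0) and
    Delta^- = Delta_fit + b (strategy 2 in play, x < 0), with b > 0 the bias
    magnitude and Delta_fit ~ N(mean, sigma).  Assumed scalings:
    mean = fit_t/sqrt(P), sigma = sigma_t/sqrt(P), b = bias_t/sqrt(N)."""
    ra = math.sqrt(alpha)  # b/sigma = bias_t*sqrt(alpha)/sigma_t: N drops out
    # phi_1 = Prob(Delta^+ > 0) = Prob(Delta_fit > b)
    phi1 = 0.5 * math.erfc((bias_t * ra - fit_t) / (math.sqrt(2.0) * sigma_t))
    # phi_2 = Prob(Delta^- < 0) = Prob(Delta_fit < -b)
    phi2 = 0.5 * math.erfc((bias_t * ra + fit_t) / (math.sqrt(2.0) * sigma_t))
    return phi1, phi2


def solve_frozen_fractions(alpha, tilde_funcs, iters=500, tol=1e-12):
    """Forward iteration phi <- F(phi), starting from phi_1 = phi_2 = 0."""
    phi1 = phi2 = 0.0
    for _ in range(iters):
        new1, new2 = frozen_fractions(alpha, *tilde_funcs(alpha, phi1, phi2))
        converged = abs(new1 - phi1) + abs(new2 - phi2) < tol
        phi1, phi2 = new1, new2
        if converged:
            break
    return phi1, phi2


def tilde_at_phi_zero(alpha, phi1, phi2):
    """HYPOTHETICAL stand-in for the closed-form functions of Appendix 1:
    returns their (alpha, 0, 0) values for any phi_1, phi_2."""
    return 1 / math.pi, 1 / math.sqrt(2 * math.pi), 1 / math.sqrt(6)


phis = {alpha: solve_frozen_fractions(alpha, tilde_at_phi_zero)
        for alpha in (1, 2, 4, 8, 16)}
```

Even with the placeholder functions the qualitative trends of the text appear: \(\phi _1>\phi _2\), and both fractions decrease with \(\alpha \) because the bias grows relative to the fitness width as \(\sqrt{\alpha }\).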

The fraction of frozen agents as a function of \(\alpha =P/N\) from the statistical model (Eqs. 21 and 22) compared to results from direct numerical simulations of the game. The frozen agents are divided into two groups, \(\phi _1\) and \(\phi _2\), depending on whether they are frozen with relative score \(x>0\) or \(x<0\), respectively. The fact that \(\phi _1>\phi _2\) follows from our convention \(\vec {\xi }_i\cdot \vec {\Omega }\le 0\) (Eq. 5). Also shown is the total fraction of frozen agents from the replica calculation for linear payoff (Eqs. 3.41–3.44 of [10]). (Each data point is averaged over 20 runs with \(\sim 10^6\) time steps each (\(10^5\) steps for \(N=2001\)).)

Distributions of the mean step per unit time \(\Delta =\langle x(t+1)-x(t)\rangle \) at \(\alpha \approx 4\) for \(x>0\) (top) and \(x<0\) (bottom), comparing direct simulations of the game to the statistical model (Eq. 20). The fraction of frozen agents with \(x>0\) (\(\phi _1\)) is indicated by “fr,+” and similarly for \(x<0\) (\(\phi _2\)). The distributions of step sizes are different for \(x>0\) and \(x<0\) because of the convention \(\vec {\xi }_i\cdot \vec {\Omega }\le 0\), as explained in Fig. 2. (Simulations averaged over \(10^6\) time steps, excluding an equilibration time of \(10^4\) steps.)

From simulations we can also measure the distribution of mean step sizes and compare it to the model, as shown in Fig. 3 for an intermediate value of \(\alpha \). The agreement in terms of mean and width is less good close to \(\alpha _c\) and almost perfect for large \(\alpha \), but everywhere the data appear well represented by a normal distribution. We also use the mean step size distributions from simulations to calculate the fraction of frozen agents shown in Fig. 2. (The naive way to distinguish frozen from switching agents, namely introducing a cut-off \(x_{\text {cut}}\) at some time t and considering any agent with \(|x_t|>x_{\text {cut}}\) frozen, makes it difficult to classify agents with \(\Delta \) near 0.)

4 Distributions Over x

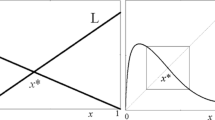

We now use the fact that each agent is characterized by an average step size per unit time, specified by the fitness \(\Delta _{\text {fit}}\), to describe the movement of the relative score x on the set of integers. Suppose that the agent has score difference x at time step t; what is the probability that at time \(t+1\) the score difference is \(x'\)? In each time step, x can only change by \(-1,0,1\), as given by the basic score update Eq. 4. We denote the respective probabilities by \(p_-,p_0,p_+\), with \(p_-+p_0+p_+=1\), for \(x>0\), and by \(q_-,q_0,q_+\) for \(x<0\). The mean probability that x remains unchanged is \(p_0=q_0=\frac{1}{2}\), as this corresponds to \(\xi _i^\mu =0\), meaning that the agent’s two strategies make the same bid, which on average (over \(\mu \)) is the case for half of the histories. It should also be clear that the stepping probabilities cannot depend on the magnitude of x, only on its sign, because the difference in score between strategies does not enter the game, only which strategy is currently used. The case \(x=0\) has to be treated separately: a coin toss decides which strategy is used, so the probability of a \(+1\) increment is \((p_++q_+)/2\) and of a \(-1\) increment is \((p_-+q_-)/2\). The movement of x thus corresponds to a one-dimensional random walk on a chain with asymmetric jump probabilities, as sketched in Fig. 4.

The movement of the relative strategy score x of an agent is described by a random walk on a chain with jump probabilities \(p_+,p_-,p_0\) for \(x>1\) (i.e. strategy 1 in play) and \(q_+,q_-,q_0\) for \(x<-1\) (i.e. strategy 2 in play). At the boundary sites \(x=-1,0,1\), the coin-toss choice of strategy at \(x=0\) alters the probabilities as shown in the figure.

To relate the probabilities to the mean step size we note that for \(x>0\), \(\Delta ^+=1\cdot p_++0\cdot p_0 -1\cdot p_-\), which together with the conservation of probability and the fact that \(p_0=1/2\) gives

\[p_\pm =\frac{1}{4}\pm \frac{\Delta ^+}{2},\]

where results for q follow from the same analysis for \(x<0\). Keeping in mind that for a fickle agent \(\Delta ^+<0\) and \(\Delta ^->0\), this is of course consistent with \(p_+<p_-\) and \(q_-<q_+\). A frozen agent instead has \(p_+>p_-\) or \(q_->q_+\).
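The chain of Fig. 4 is easy to sample directly. The sketch below (the function names `jump_probs` and `walk` are our own) converts a mean step \(\Delta \) into \((p_-,p_0,p_+)\) using \(p_0=1/2\) and \(p_+-p_-=\Delta \), and applies the coin-toss rule at \(x=0\). The example values of \(\Delta ^\pm \) are arbitrary illustrations, not values fitted to the game.

```python
import random


def jump_probs(delta):
    """(p_-, p_0, p_+) for mean step delta, using p_0 = 1/2 and
    p_+ - p_- = delta, i.e. p_+- = 1/4 +- delta/2."""
    if abs(delta) > 0.5:
        raise ValueError("|delta| <= 1/2 is required for valid probabilities")
    return 0.25 - delta / 2, 0.5, 0.25 + delta / 2


def walk(delta_plus, delta_minus, steps, x0=0, seed=0):
    """Sample the relative score x(t): the p's apply for x > 0 (strategy 1 in
    play), the q's for x < 0, and at x = 0 a coin toss picks the strategy."""
    rng = random.Random(seed)
    p, q = jump_probs(delta_plus), jump_probs(delta_minus)
    x, path = x0, [x0]
    for _ in range(steps):
        if x > 0:
            down, up = p[0], p[2]
        elif x < 0:
            down, up = q[0], q[2]
        else:  # coin toss between the two strategies
            down, up = (p[0] + q[0]) / 2, (p[2] + q[2]) / 2
        r = rng.random()
        x += 1 if r < up else (-1 if r < up + down else 0)
        path.append(x)
    return path


fickle = walk(-0.05, 0.08, 20000, seed=1)       # drift towards x = 0
frozen = walk(0.1, 0.08, 20000, x0=50, seed=2)  # drift away from x = 0
```

The fickle path stays within a few decay lengths \(1/(4|\Delta ^\pm |)\) of the origin, while the frozen path advances by about \(\Delta ^+\) per step.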

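With the known probabilities we can write down a master equation on the chain for the probability distribution \(P_x(t)\) (with implicit dependence on \(\Delta _{\text {fit}}\)),

\[P_x(t+1)=p_0P_x(t)+p_+P_{x-1}(t)+p_-P_{x+1}(t),\quad x>1,\]
\[P_x(t+1)=q_0P_x(t)+q_+P_{x-1}(t)+q_-P_{x+1}(t),\quad x<-1,\]

and at the boundary

\[P_1(t+1)=p_0P_1(t)+\tfrac{1}{2}(p_++q_+)P_0(t)+p_-P_2(t),\]
\[P_0(t+1)=\tfrac{1}{2}P_0(t)+q_+P_{-1}(t)+p_-P_1(t),\]
\[P_{-1}(t+1)=q_0P_{-1}(t)+q_+P_{-2}(t)+\tfrac{1}{2}(p_-+q_-)P_0(t).\]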

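Assuming that the distribution is stationary, such that \(P_x(t)=P_x\), and concentrating on \(x>0\), we find after some manipulations the equation

\[p_+P_x=p_-P_{x+1},\quad x\ge 1,\]

which has the exponential solution

\[P_x\propto \left(\frac{p_+}{p_-}\right)^x=e^{x\ln \frac{p_+}{p_-}}\approx e^{4x\Delta ^+}.\]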

In the last step we used Eq. 23 and the fact that, from Eq. 17, the mean step size is small, \(|\Delta ^+|\sim 1/\sqrt{N}\ll 1\). From this we can identify a decay length \(x_+=1/(4|\Delta ^+|)\sim \sqrt{N}\), which characterizes the range of positive excursions of the score difference of a fickle agent. Clearly, this solution is normalizable only if \(p_->p_+\) (\(\Delta ^+<0\)), as is the case for fickle agents. From the same analysis for \(x<0\), fickle agents with \(q_-<q_+\) have the distribution \(P_x\sim e^{x\ln \frac{q_+}{q_-}}\approx e^{4x\Delta ^-}\). What remains is to match the solutions for positive and negative x at the interface. This can be done exactly, but given that the exponential prefactor is small we settle for the approximate expression

From this expression we see that the distribution is asymmetric: given that on average \(|\Delta ^+|<\Delta ^-\), agents are more likely to be found with \(x>0\). This opens the way to a more sophisticated modelling (left for future work), in which this asymmetry is fed back into the initial statistical description of the fickle agents through the dynamical variable \(S_t\), the total attendance of the fickle agents, acquiring a mean that depends on \(\mu \).
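Instead of solving the stationarity condition analytically, one can also iterate the master equation numerically, including the coin-toss boundary at \(x=0\), and compare with the exponential solution. In this sketch the function name, the parameter values, and the finite chain with reflecting ends at \(\pm L\) (standing in for the infinite chain) are illustrative assumptions. It confirms that \(P_{x+1}/P_x=p_+/p_-\) for \(x\ge 1\), that \(\ln (p_+/p_-)\approx 4\Delta ^+\), and that for \(|\Delta ^+|<\Delta ^-\) more weight lies at \(x>0\).

```python
import math


def stationary_distribution(delta_plus, delta_minus, L=30, iters=8000):
    """Iterate the master equation on the sites -L..L, starting from P_0 = 1.

    Moves that would leave the chain are turned into 'stay' (reflecting ends);
    returns a dict mapping x to P_x after `iters` steps.
    """
    pm, pp = 0.25 - delta_plus / 2, 0.25 + delta_plus / 2   # x > 0
    qm, qp = 0.25 - delta_minus / 2, 0.25 + delta_minus / 2  # x < 0

    def down_up(x):
        if x > 0:
            return pm, pp
        if x < 0:
            return qm, qp
        return (pm + qm) / 2, (pp + qp) / 2  # coin toss at x = 0

    moves = {}
    for x in range(-L, L + 1):
        down, up = down_up(x)
        moves[x] = (0.0 if x == -L else down, 0.0 if x == L else up)
    P = {x: 0.0 for x in range(-L, L + 1)}
    P[0] = 1.0
    for _ in range(iters):
        new = dict.fromkeys(P, 0.0)
        for x, w in P.items():
            down, up = moves[x]
            new[x] += (1.0 - down - up) * w
            if down:
                new[x - 1] += down * w
            if up:
                new[x + 1] += up * w
        P = new
    return P


P = stationary_distribution(-0.05, 0.08)
log_ratio = math.log(P[2] / P[1])   # exact: ln(p_+/p_-); approx: 4 Delta^+
mass_pos = sum(w for x, w in P.items() if x > 0)
mass_neg = sum(w for x, w in P.items() if x < 0)
```

Here \(\ln (0.225/0.275)\approx -0.2007\) versus \(4\Delta ^+=-0.2\), and the decay lengths \(x_+=5\) and \(x_-=1/(4\Delta ^-)\approx 3.1\) make the distribution visibly asymmetric.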

For the frozen agents the master equation is the same, but given \(p_+>p_-\) (or \(q_->q_+\)) we expect a drift of the mean of the distribution. Focusing on long times, we can thus consider one or the other of Eqs. 25 depending on whether the agent is frozen with \(x>0\) or \(x<0\). For \(x>0\), and assuming that the agent at time \(t=0\) is at site \(x=0\) (neglecting the influence of any excursions to \(x<0\)), we can write down an exact expression for \(P_{x}(t)\) in terms of a multinomial distribution. Alternatively, and more simply, we can take the continuum limit \(P_x(t+1)=P(x,t)+\frac{\partial P}{\partial t}\) and \(P_{x\pm 1}(t)=P(x,t)\pm \frac{\partial P}{\partial x}+\frac{1}{2}\frac{\partial ^2P}{\partial x^2}\) to find the Fokker-Planck equation

\[\frac{\partial P}{\partial t}=-(p_+-p_-)\frac{\partial P}{\partial x}+\frac{1}{2}(p_++p_-)\frac{\partial ^2P}{\partial x^2}.\]

Given the initial condition \(P(x,0)=\delta (x)\) this has the solution \(P(x,t)=\mathcal{N}_x(\bar{x},\sigma _t)\) with \(\bar{x}=(p_+-p_-)t=\Delta ^+t\) and \(\sigma ^2_t=(p_++p_-)t=\frac{1}{2}t\), thus describing diffusion with a drift.
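The discrete walk can also be evolved exactly, which shows how good the continuum solution is. After t steps the exact mean is \((p_+-p_-)t=\Delta ^+t\), and the exact variance is \((p_++p_--(\Delta ^+)^2)t=(\frac{1}{2}-(\Delta ^+)^2)t\), which differs from \(t/2\) only by a relative correction of order \((\Delta ^+)^2\sim 1/N\). The function name and the values \(t=200\), \(\Delta ^+=0.1\) are illustrative.

```python
def frozen_walk_moments(delta, t):
    """Exact mean and variance of x after t steps of the p-side walk
    (excursions to x < 0 neglected, as in the text), starting at x = 0."""
    pm, p0, pp = 0.25 - delta / 2, 0.5, 0.25 + delta / 2
    P = [1.0]  # after n steps, index k corresponds to x = k - n
    for _ in range(t):
        new = [0.0] * (len(P) + 2)
        for k, w in enumerate(P):
            new[k] += pm * w       # x -> x - 1
            new[k + 1] += p0 * w   # x -> x
            new[k + 2] += pp * w   # x -> x + 1
        P = new
    mean = sum((k - t) * w for k, w in enumerate(P))
    var = sum((k - t) ** 2 * w for k, w in enumerate(P)) - mean ** 2
    return mean, var


t, delta = 200, 0.1
mean, var = frozen_walk_moments(delta, t)
```

For these values the exact variance is 98, against the continuum value \(t/2=100\).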

4.1 Full Score Distributions

Given that we now have a description of the relative score distribution of a single agent in terms of an asymmetric exponential decay or diffusion, we can also consider the full distribution of relative scores over all agents, by integrating over the distribution of mean step sizes. Defining the scaled variables \(\tilde{x}=x/\sqrt{N}\) and \(\tilde{t}=t/N\) we write \(P(\tilde{x},\tilde{t})=P_{\text {fi}}(\tilde{x})+P_{\text {fr},+}(\tilde{x},\tilde{t})+P_{\text {fr},-} (\tilde{x},\tilde{t})\), corresponding to the stationary distribution of the fickle agents and diffusive distributions of the frozen agents with \(x>0\) and \(x<0\) respectively. The first component is

where ± corresponds to \(x<0\) and \(x>0\) respectively, and where \(b_\alpha =|\tilde{\Delta }_{\text {bias}}|\). For the frozen agents we have

where \(\sigma ^2_{\tilde{t}}=\tilde{t}/2\). These expressions are compared with direct simulations of the game at intermediate \(\alpha \approx 4\) in Fig. 5. The simulations are averaged over a specific time window, and the diffusive component, Eq. 31, is integrated over the corresponding scaled time window. The agreement is excellent over both the stationary and the diffusive components of the distribution, and the data collapse in terms of the scaled coordinates. In Fig. 6 we also show a comparison for large \(\alpha \approx 80\), where the simulations have no frozen agents and all fickle agents are localized on a length scale close to the \(\alpha \rightarrow \infty \) value \(x_0=\sqrt{\pi N/8}\).

Fig. 5 Full scaled distribution \(P_{\tilde{x}}\) with \(\tilde{x}=x/\sqrt{N}\) over all agents for \(\alpha \approx 4\), compiled by averaging simulations over the scaled time window \(\tilde{t}_0=t_0/N\) to \(\tilde{t}_1=t_1/N\). The model results (“fickle+frozen”) are \(P_{\tilde{x}}=\frac{1}{\tilde{t}_1-\tilde{t}_0}\int _{\tilde{t}_0}^{\tilde{t}_1}d\tilde{t}P(\tilde{x},\tilde{t})\), using Eqs. 30 and 31. Also shown are model results using only fickle agents. The following time windows are used: for \(N=501\), \(t_0=5\times 10^5\) to \(t_1=5\times 10^6\); for \(N=1001\), \(t=2t_0\) to \(2t_1\); for \(N=2001\), \(t=4t_0\) to \(4t_1\), which correspond to the same \(\tilde{t}_0\) and \(\tilde{t}_1\). (Simulations are averaged over 80 runs for \(N=501\) and 15 runs for \(N=1001\) and 2001.)
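The window averaging in the caption, and the data collapse it relies on, can be sketched for a single frozen agent whose relative score is a drifting Gaussian with mean \(\Delta t\) and variance \(t/2\). In scaled units (\(\tilde{x}=x/\sqrt{N}\), \(\tilde{t}=t/N\), and a scaled drift \(\tilde{\Delta }=\Delta \sqrt{N}\)) the density depends only on \(\tilde{\Delta }\), so unscaled averages for N = 501, 1001 and 2001 over windows proportional to N coincide after multiplying by \(\sqrt{N}\). The drift value and the evaluation point are hypothetical; Eq. 31 additionally integrates over the distribution of drifts, which is not reproduced here.

```python
import math


def gaussian(x, mean, var):
    """Normal density N(x; mean, var)."""
    return math.exp(-(x - mean) ** 2 / (2 * var)) / math.sqrt(2 * math.pi * var)


def window_average(x, drift, t0, t1, n_steps=2000):
    """Midpoint-rule average over t in [t0, t1] of a Gaussian with mean drift*t and variance t/2."""
    h = (t1 - t0) / n_steps
    total = 0.0
    for k in range(n_steps):
        t = t0 + (k + 0.5) * h
        total += gaussian(x, drift * t, t / 2)
    return total / n_steps


# Scaled window from the caption (N = 501, t0 = 5e5, t1 = 5e6), with t~ = t / N.
ts0, ts1 = 5e5 / 501, 5e6 / 501
drift_scaled = 0.3     # hypothetical scaled drift Delta~ of a frozen agent
x_scaled = 1000.0      # hypothetical evaluation point x~

scaled = window_average(x_scaled, drift_scaled, ts0, ts1)

# Unscaled evaluation: t scales with N, Delta with 1/sqrt(N) and x with sqrt(N).
collapsed = []
for N in (501, 1001, 2001):
    r = math.sqrt(N)
    density_x = window_average(x_scaled * r, drift_scaled / r, ts0 * N, ts1 * N)
    collapsed.append(r * density_x)   # density per unit x -> density per unit x~

print(scaled, collapsed)
```

For a point \(\tilde{x}\) crossed well inside the window, the average is close to \(1/(\tilde{\Delta }(\tilde{t}_1-\tilde{t}_0))\), since the time integral of a drift-diffusion propagator downstream of its source equals \(1/\tilde{\Delta }\).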

The asymmetry of these plots is an artefact of our gauge choice \(\vec {\xi }_i\cdot \vec {\Omega }\le 0\), which implies that on average agents use strategy 1 (\(x>0\)) more frequently than strategy 2 (\(x<0\)). Restoring the full symmetry is simply a matter of symmetrizing the distributions around \(x=0\).
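Since the gauge only fixes which of an agent's two strategies is labelled 1, the gauge-free distribution is the average of \(P(x)\) and \(P(-x)\). A minimal sketch on a hypothetical discrete distribution:

```python
def symmetrize(p):
    """Gauge-symmetrized distribution (P(x) + P(-x)) / 2 for a dict keyed by integer x."""
    keys = set(p) | {-x for x in p}
    return {x: 0.5 * (p.get(x, 0.0) + p.get(-x, 0.0)) for x in sorted(keys)}


p_gauge = {-2: 0.05, -1: 0.15, 0: 0.3, 1: 0.3, 2: 0.2}   # hypothetical, weighted towards x > 0
p_sym = symmetrize(p_gauge)
print(p_sym)
```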

Finally, we remark that the formal solution in terms of an exponential distribution of strategy scores for fickle agents was derived in [13] from a Fokker-Planck equation for the linear-payoff game. See Appendices 2 and 3 for further discussion of the comparison between the present model and the Hamiltonian formulation.

Fig. 6 Distribution \(P_{\tilde{x}}\) at large \(\alpha \approx 80\). There are no frozen agents, and the simulated and model (“fickle”) distributions are stationary. Also shown is the asymptotic \(\alpha \rightarrow \infty \) behavior, where all agents are symmetrically localized with localization length \(x_0=\sqrt{\pi N/8}\), and a simulation at \(\alpha \approx 650\) which approaches this asymptotic behavior. (Simulations averaged over \(\sim 4\times 10^8\) time steps.)
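One way to see where \(x_0=\sqrt{\pi N/8}\) comes from within the model is sketched below; this is our reconstruction, not a derivation given in the text. It assumes that for \(\alpha \rightarrow \infty \) all agents are fickle, so that the rest of the attendance seen by an agent is Gaussian with variance \(N/2\) (background) plus \(N/2\) (fickle agents, \(\varphi =1\)), and that \(\xi ^\mu \in \{-1,0,1\}\) with probabilities 1/4, 1/2, 1/4. The mean step of the strategy in use is then \(-\frac{1}{2}\mathrm{erf}(1/\sqrt{2N})\approx -1/\sqrt{2\pi N}\), and the decay length \(1/(4|\Delta |)\) approaches \(\sqrt{\pi N/8}\).

```python
import math


def used_strategy_step(N):
    """Mean relative-score step of the strategy in use, sign payoff, alpha -> infinity.

    Assumes xi in {-1, 0, 1} with probabilities 1/4, 1/2, 1/4 and a Gaussian rest of the
    attendance with variance N/2 (background) + N/2 (all agents fickle) = N, so that
    E[sign(A_rest + xi)] = erf(xi / sqrt(2N)); averaging -xi * sign(A) gives the value below.
    """
    return -0.5 * math.erf(1.0 / math.sqrt(2.0 * N))


results = {}
for N in (501, 1001, 2001):
    x0_model = 1.0 / (4.0 * abs(used_strategy_step(N)))   # decay length 1/(4|Delta^+|)
    x0_quoted = math.sqrt(math.pi * N / 8.0)              # alpha -> infinity value in the text
    results[N] = (x0_model, x0_quoted)
    print(f"N = {N}: 1/(4|Delta|) = {x0_model:.3f}, sqrt(pi N / 8) = {x0_quoted:.3f}")
```

The two agree up to a relative correction of order \(1/(6N)\) from the expansion of the error function.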

5 Summary

We have studied the asymmetric phase of the basic Minority Game, focusing on the statistical distribution of relative strategy scores in the original sign-payoff formulation of the game. We formulated a statistical model for the attendance that relies on a specific gauge choice in which the two strategies of each agent are ordered with respect to the background (\(\vec {\xi }_i\cdot \vec {\Omega }\le 0\) for all agents i). Using this model we derived a distribution of the mean step per time increment for the relative scores, specified in terms of a bias for the strategy in use and the relative fitness of the two strategies. The relative strategy score of each agent is conveniently described as a random walk on an integer chain, with jump probabilities calculated from the mean step. The probability of observing the agent at a given position on the chain at a given time is given either by a static asymmetric exponential localized around \(x=0\), for fickle agents, or by diffusion with a drift, for frozen agents. Excellent agreement with direct simulations of the game for the score distribution confirms the basic validity of the model. At the same time, as discussed in the appendix, the model overestimates the fluctuations of the attendance. By contrasting with the Hamiltonian formulation of the dynamics, the reason for this discrepancy is readily understood by viewing the model as a crude ansatz for the full minimization problem. This also suggests how the model could be improved by introducing some variational parameters, without having to confront the full complexity of minimizing a non-quadratic Hamiltonian for general payoff functions.

We thank Erik Werner for valuable discussions. Simulations were performed on resources at Chalmers Centre for Computational Science and Engineering (C3SE) provided by the Swedish National Infrastructure for Computing (SNIC).

Notes

Note that what we here refer to as \(\psi \) is what is called \(\xi \) in the literature [5]. In this paper we reserve \(\xi \) for the object where strategies are ordered such that \(\vec {\Omega }\cdot \vec {\xi }_i\le 0\), corresponding to \(\xi _i^\mu =-\psi _i^\mu \text {sign}(\vec {\Omega }\cdot \vec {\psi }_i)\).

The exact expressions for these quantities are derived from the integral formulas as explained, but we are also happy to share them directly. Contact the first author.

References

[1] Arthur, W.B.: Inductive reasoning and bounded rationality: the El Farol problem. Am. Econ. Rev. 84, 406 (1994)

[2] Challet, D., Zhang, Y.-C.: Emergence of cooperation and organization in an evolutionary game. Physica A 246, 407 (1997)

[3] Zhang, Y.-C.: Evolving models of financial markets. Europhys. News 29, 51 (1998)

[4] Savit, R., Manuca, R., Riolo, R.: Adaptive competition, market efficiency, and phase transitions. Phys. Rev. Lett. 82, 2203 (1999)

[5] Challet, D., Marsili, M.: Phase transition and symmetry breaking in the Minority Game. Phys. Rev. E 60, R6271 (1999)

[6] de Cara, M.A.R., Pla, O., Guinea, F.: Competition, efficiency and collective behavior in the "El Farol" bar model. Eur. Phys. J. B 10, 187 (1999)

[7] Cavagna, A., Garrahan, J.P., Giardina, I., Sherrington, D.: Thermal model for adaptive competition in a market. Phys. Rev. Lett. 83, 4429 (1999)

[8] Challet, D., Marsili, M., Zecchina, R.: Statistical mechanics of systems with heterogeneous agents: minority games. Phys. Rev. Lett. 84, 1824 (2000)

[9] Jefferies, P., Hart, M.L., Hui, P.M., Johnson, N.F.: From market games to real-world markets. Eur. Phys. J. B 20, 493 (2001)

[10] Challet, D., Marsili, M., Zhang, Y.-C.: Minority Games. Oxford University Press, Oxford (2005)

[11] Yeung, C.H., Zhang, Y.-C.: Minority games. In: Encyclopedia of Complexity and Systems Science, pp. 5588–5604. Springer, New York (2009)

[12] Chakraborti, A., Challet, D., Chatterjee, A., Marsili, M., Zhang, Y.-C., Chakrabarti, B.K.: Statistical mechanics of competitive resource allocation using agent-based models. Phys. Rep. 552, 1 (2015)

[13] Marsili, M., Challet, D.: Continuum time limit and stationary states in the minority game. Phys. Rev. E 64, 056138 (2001)

[14] Heimel, J.A.F., Coolen, A.C.C.: Generating functional analysis of the dynamics of the batch minority game with random external information. Phys. Rev. E 63, 056121 (2001)

[15] Coolen, A.C.C.: Generating functional analysis of minority games with real market histories. J. Phys. A 38, 2311 (2005)

[16] Coolen, A.C.C.: The Mathematical Theory of Minority Games: Statistical Mechanics of Interacting Agents. Oxford University Press, Oxford (2005)

[17] Marsili, M., Challet, D., Zecchina, R.: Exact solution of a modified El Farol's bar problem: efficiency and the role of market impact. Physica A 280, 522 (2000)

[18] Mézard, M., Parisi, G., Virasoro, M.A.: Spin Glass Theory and Beyond: An Introduction to the Replica Method and Its Applications. World Scientific Lecture Notes in Physics, vol. 9. World Scientific, Singapore (1987)

[19] Acosta, G., Caridi, I., Guala, S., Marenco, J.: The quasi-periodicity of the minority game revisited. Physica A 392, 4450 (2013)

[20] Hart, M., Jefferies, P., Hui, P.M., Johnson, N.F.: Crowd-anticrowd theory of multi-agent market games. Eur. Phys. J. B 20, 547 (2001)

[21] Cavagna, A.: Irrelevance of memory in the minority game. Phys. Rev. E 59, R3783 (1999)

[22] Challet, D., Marsili, M.: Relevance of memory in minority games. Phys. Rev. E 62, 1862 (2000)

Appendices

Appendix 1: Solving for Mean and Variance of Step Size

The integrals to calculate the mean and variance for the distribution of average step sizes, Eq. 16, are Gaussian integrals including the error function. To solve these we first rescale the variables in units of their standard deviations, \(\Omega /\sigma _\Omega \rightarrow \Omega \), \(X/\sigma _{X|\Omega }\rightarrow X\), etc., and perform the integral over the distribution of agents \(\xi \), which evaluates to \(\langle \xi |\Omega \rangle =-c(\alpha )\Omega /\sqrt{2 N}\), with \(c(\alpha )=\sqrt{\frac{2}{\pi \alpha }}\), and \(\langle \xi ^2|\Omega \rangle =\frac{1}{2}\). We are left with the integrals

and

To evaluate these we use the following integral formulas

and

where A is a symmetric, positive definite matrix, and b and c are real constants. The bias term thus follows from a direct application of the first integral formula to a \(3 \times 3\) matrix. The fitness term follows from the substitution \(X'=X+\sqrt{\phi _1}c(\alpha )\Omega \) and \(Y'=Y-\sqrt{\phi _2}c(\alpha )\Omega \), which allows us to apply the second integral formula over \(\Omega \) and subsequently the first integral formula to a \(2 \times 2\) matrix. The variance can be calculated by the substitution \(z=\Omega +\sqrt{\phi _1}X+\sqrt{\phi _2}Y\) for \(\Omega \), followed by integrating out X and Y and finally applying the third integral formula over z. The actual expressions are quite lengthy (see Notes), but the important features can be represented according to Eqs. 17 and 19 in terms of the functions \(\tilde{\Delta }_{\text {bias}}(\alpha ,\phi _1,\phi _2)\), \(\tilde{\Delta }_{\text {fit}}(\alpha ,\phi _1,\phi _2)\), and \(\tilde{\sigma }(\alpha ,\phi _1,\phi _2)\). After solving for the fractions of frozen agents \(\phi _1(\alpha )\) and \(\phi _2(\alpha )\) using Eqs. 21 and 22, we can consider these functions as depending only on the control parameter \(\alpha \). The dependence on \(\alpha \) is plotted in Fig. 7, showing that these functions change little over the whole relevant range \(\alpha >\alpha _c\approx 0.3\).
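The conditional mean \(\langle \xi |\Omega \rangle =-c(\alpha )\Omega /\sqrt{2N}\) quoted at the start of this appendix can be checked with a small Monte Carlo sketch. It assumes the usual decomposition of two random \(\pm 1\) strategies into a background part \((a_1+a_2)/2\), summed over agents to give \(\Omega ^\mu \), and a relative part \(\psi =(a_1-a_2)/2\), with \(\xi \) fixed by the gauge of the Notes, \(\xi _i^\mu =-\psi _i^\mu \text {sign}(\vec {\Omega }\cdot \vec {\psi }_i)\). The function name and the sizes N = 201, P = 804 are illustrative.

```python
import math
import random


def xi_omega_slope(N, P, seed=0):
    """Least-squares slope (through the origin) of xi_i^mu against Omega^mu / sigma_Omega."""
    rng = random.Random(seed)
    omega = [0.0] * P
    psi = []
    for _ in range(N):
        a1 = [rng.choice((-1, 1)) for _ in range(P)]
        a2 = [rng.choice((-1, 1)) for _ in range(P)]
        psi.append([(u - v) / 2 for u, v in zip(a1, a2)])   # relative bid, in {-1, 0, 1}
        for mu in range(P):
            omega[mu] += (a1[mu] + a2[mu]) / 2               # background, variance N/2
    sigma_omega = math.sqrt(N / 2)
    num = den = 0.0
    for p in psi:
        proj = sum(w * q for w, q in zip(omega, p))
        sgn = 1.0 if proj > 0 else -1.0
        # Gauge of the Notes: xi = -psi * sign(Omega . psi), so that xi . Omega <= 0.
        for w, q in zip(omega, p):
            w_scaled = w / sigma_omega
            num += -q * sgn * w_scaled
            den += w_scaled * w_scaled
    return num / den


N, P = 201, 804          # alpha = P / N = 4
c = math.sqrt(2 / (math.pi * P / N))
slope = xi_omega_slope(N, P)
print(slope * math.sqrt(2 * N), -c)   # should agree up to a few percent of sampling noise
```

The regression slope of \(\xi \) on the rescaled \(\Omega \), multiplied by \(\sqrt{2N}\), should be close to \(-c(\alpha )\); at these sizes the sampling noise is a few percent.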

Appendix 2: Hamiltonian Formulation

Here we connect the formalism of the present work to the solution using the replica method, closely following the presentation in [13] and [8]. We express the attendance for a given history in terms of fluctuations around a mean as

where \(S_t\) is a Gaussian random variable with mean zero and variance \(\sigma _S^2\) (to be determined self-consistently). This is related to expression (7), where we take an explicit statistical form \(\langle A|\mu \rangle =\Omega ^\mu +X^\mu +Y^\mu \), assumed to correspond to the background plus frozen agents. Also, in the model of this paper the magnitude of \(\sigma _S^2\) is \(\varphi N/2\), with \(\varphi \) the fraction of fickle agents. This is not assumed in the present treatment, but as we will see the outcome is related.

There is also the explicit expression, Eq. 6, for the attendance \(A_t^\mu =\Omega ^\mu +\sum _i\xi _i^\mu s_i(t)\), where \(s_i(t)=\pm 1\) depending on which strategy is momentarily used by the agent. Taking the time average and assuming that the frequency of use is not influenced by the rapid switches of history, we write \(\langle s_i(t)\rangle =m_i\), where \(m_i=\pm 1\) for frozen agents and \(|m_i|<1\) for fickle ones. As discussed in [13], the fluctuations of \(s_i(t)\) are statistically independent, such that \(\langle s_i(t)s_j(t)\rangle =m_im_j\) for \(i\ne j\), whereas \((s_i(t))^2=1\) by definition. With this we can write \(\langle A|\mu \rangle =\Omega ^\mu +\sum _i\xi _i^\mu m_i\), noting that \(\frac{\partial \langle A|\mu \rangle }{\partial m_i}=\xi _i^\mu \).

Now, evaluating the variance of the attendance using Eq. 6 and \(\sigma ^2_\Omega =N/2\), we find

This can alternatively be written (using Eq. 38) as \(\sigma ^2=\frac{1}{P}\sum _\mu \langle A |\mu \rangle ^2+\sigma _S^2=H+\sigma _S^2\). Here H, the predictability, also has the alternative form (using Eq. 6)

Correspondingly we find for the rapidly fluctuating field \(S_t\) the variance

(using \(\sigma _{\xi }^2=1/2\)). The latter expression has no contribution from frozen agents (as expected), and, assuming that the distribution of \(m_i\) is fairly strongly centred at 0, it will be close to, but never larger than, our assumed value of \(\varphi N/2\).
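The decomposition \(\sigma ^2=H+\sigma _S^2\) can be checked by direct simulation for a fixed set of \(m_i\). The sketch takes \(\sigma _S^2=\frac{1}{P}\sum _\mu \sum _i(\xi _i^\mu )^2(1-m_i^2)\approx \frac{1}{2}\sum _i(1-m_i^2)\), which is our reading of the expression above given \(\sigma _\xi ^2=1/2\) and the independence of the \(s_i(t)\). The bids, the background and the \(m_i\) are random hypothetical inputs, not solutions of the model.

```python
import random


def attendance_variance_check(N=60, P=120, T=10000, seed=1):
    """Compare the simulated <A^2> with H + sigma_S^2 for fixed, hypothetical xi, Omega and m_i."""
    rng = random.Random(seed)
    bid = (-1, 0, 0, 1)                                                  # -1, 0, 1 w.p. 1/4, 1/2, 1/4
    xi = [[rng.choice(bid) for _ in range(N)] for _ in range(P)]         # xi[mu][i]
    omega = [sum(rng.choice(bid) for _ in range(N)) for _ in range(P)]   # background, variance ~N/2
    n_frozen = N // 3
    m = [1.0] * n_frozen + [-1.0] * n_frozen                             # frozen agents
    m += [rng.uniform(-0.5, 0.5) for _ in range(N - 2 * n_frozen)]       # fickle agents
    phi = (N - 2 * n_frozen) / N                                         # fraction of fickle agents

    mean_a = [omega[mu] + sum(x * mi for x, mi in zip(xi[mu], m)) for mu in range(P)]
    H = sum(a * a for a in mean_a) / P                                   # predictability
    sigma_s2 = sum(x * x * (1 - mi * mi) for row in xi for x, mi in zip(row, m)) / P
    sigma_s2_model = 0.5 * sum(1 - mi * mi for mi in m)                  # uses <xi^2> = 1/2

    # Direct simulation: random history, independent s_i = +1 with probability (1 + m_i) / 2.
    prob_plus = [(1 + mi) / 2 for mi in m]
    acc = 0.0
    for _ in range(T):
        mu = rng.randrange(P)
        a = omega[mu]
        for x, pp in zip(xi[mu], prob_plus):
            a += x if rng.random() < pp else -x
        acc += a * a
    return acc / T, H, sigma_s2, sigma_s2_model, phi * N / 2


sim, H, sigma_s2, sigma_s2_model, bound = attendance_variance_check()
print(f"simulated <A^2> = {sim:.2f}, H + sigma_S^2 = {H + sigma_s2:.2f}")
print(f"sigma_S^2 ~ {sigma_s2_model:.2f} <= phi N / 2 = {bound:.2f}")
```

Frozen agents (\(m_i=\pm 1\)) drop out of \(\sigma _S^2\) exactly, and since \(1-m_i^2\le 1\) the estimate can never exceed \(\varphi N/2\).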

Consider now the time-averaged step size at fixed history for agent i, \(\Delta _i^\mu =-\xi _i^\mu \langle \text {sign}(A_t)|\mu \rangle \), with

The aim is to find a Hamiltonian generator \(\mathcal{H}\) of the long time dynamics such that the time and history averaged update is given by

(Note that this expression is not equivalent to Eq. 16: the latter is the mean of a distribution, whereas the present object represents the full distribution of average step sizes over agents, corresponding to different i.) A function that does this is \(\mathcal{H}=\int dS P(S) G(\langle A |\mu \rangle +S)\), where \(G(x)=x\,\text {sign}(x)=|x|\), such that \(\frac{dG}{dx}=\text {sign}(x)\), which evaluates to

Considering the long-time evolution of the score difference \(x_i\) of agent i, which has an average step size \(\bar{\Delta }_i\), we find that if \(\bar{\Delta }_i>0\) the agent will be frozen positive, with \(m_i=1\), and similarly if \(\bar{\Delta }_i<0\) it will be frozen negative, with \(m_i=-1\). Only if \(\bar{\Delta }_i=0\) will the agent be fickle, with \(-1<m_i<1\). Since \(\bar{\Delta }_i=-\frac{\partial \mathcal{H}}{\partial m_i}\), we find three cases: \(m_i=1\) corresponds to \(\frac{\partial \mathcal{H}}{\partial m_i}<0\), \(m_i=-1\) corresponds to \(\frac{\partial \mathcal{H}}{\partial m_i}>0\), and \(-1<m_i<1\) corresponds to \(\frac{\partial \mathcal{H}}{\partial m_i}=0\). The solution thus corresponds to finding the minimum of \(\mathcal{H}\) with respect to \(\{m_i\}\) in the box \(-1\le m_i\le 1\).
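For Gaussian S the integral defining \(\mathcal{H}\) can be done in closed form with the standard result \(\int dS\,P(S)\,|a+S|=\sigma _S\sqrt{2/\pi }\,e^{-a^2/2\sigma _S^2}+a\,\mathrm{erf}(a/\sqrt{2}\sigma _S)\), with \(a=\langle A|\mu \rangle \). The sketch below assumes this Gaussian form (it does not reproduce Eq. 39) and checks numerically that its derivative with respect to a equals \(\langle \text {sign}(a+S)\rangle =\mathrm{erf}(a/\sqrt{2}\sigma _S)\), the property that makes \(-\partial \mathcal{H}/\partial m_i\) the mean step. The value of \(\sigma _S\) is hypothetical, chosen of order \(\sqrt{N}\) like \(\langle A|\mu \rangle \).

```python
import math


def expected_abs(a, sigma):
    """E|a + S| for S ~ N(0, sigma^2) (standard closed form)."""
    return (sigma * math.sqrt(2 / math.pi) * math.exp(-a * a / (2 * sigma * sigma))
            + a * math.erf(a / (math.sqrt(2) * sigma)))


def expected_sign(a, sigma):
    """E[sign(a + S)] for S ~ N(0, sigma^2)."""
    return math.erf(a / (math.sqrt(2) * sigma))


sigma = math.sqrt(1001 / 2)   # hypothetical sigma_S, of order sqrt(N)
h = 1e-4                       # step for the central finite difference
checks = []
for a in (-30.0, -5.0, 0.0, 12.0):
    slope = (expected_abs(a + h, sigma) - expected_abs(a - h, sigma)) / (2 * h)
    checks.append((slope, expected_sign(a, sigma)))
    print(f"a = {a:6.1f}: dH/da = {slope:.8f}, <sign(a+S)> = {expected_sign(a, sigma):.8f}")
```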

The minimization of Eq. 39, however, looks like a formidable problem in the thermodynamic limit, and we are not aware that it has been pursued in the literature. (Note that \(\langle A|\mu \rangle \sim \sqrt{N}\sim \sigma _S\), so an expansion in \(\langle A|\mu \rangle \) is not appropriate.) This is in contrast to the case of linear payoff (see Appendix 3), where \(\mathcal{H}_{\text {linear}}=H=\frac{1}{P}\sum _\mu \langle A|\mu \rangle ^2\), which is a quadratic form in the variables \(m_i\). For the latter case the minimization problem has been solved using the replica method [8, 17, 18]. The equilibrium score distributions that we focus on in the present work were obtained in [13] for the linear-payoff game, but, to the best of our knowledge, not for the sign-payoff game. It also appears that these distributions have not been discussed or studied in any detail, or compared with simulations, in earlier work.
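For the quadratic linear-payoff case, the three conditions listed above are the optimality (Karush-Kuhn-Tucker) conditions for minimizing H over the box \(-1\le m_i\le 1\). The sketch below finds such a minimum by cyclic coordinate minimization with clipping, a generic numerical method chosen for illustration (not the replica approach of [8, 17, 18]), on hypothetical random bids in the gauge \(\vec {\xi }_i\cdot \vec {\Omega }\le 0\), and returns the gradient so that the conditions can be checked.

```python
import random


def minimize_linear_H(N=30, P=60, sweeps=500, seed=2):
    """Cyclic coordinate minimization of H(m) = (1/P) sum_mu <A|mu>^2 with -1 <= m_i <= 1."""
    rng = random.Random(seed)
    bid = (-1, 0, 0, 1)
    omega = [sum(rng.choice(bid) for _ in range(N)) for _ in range(P)]
    xi = []
    for _ in range(N):
        psi = [rng.choice(bid) for _ in range(P)]
        sgn = 1 if sum(w * p for w, p in zip(omega, psi)) > 0 else -1
        xi.append([-sgn * p for p in psi])        # gauge: xi_i . Omega <= 0
    norms = [sum(x * x for x in row) for row in xi]

    m = [0.0] * N
    a = [float(w) for w in omega]                 # <A|mu> = Omega^mu + sum_i xi_i^mu m_i
    h_start = sum(v * v for v in a) / P
    for _ in range(sweeps):
        for i, row in enumerate(xi):
            if norms[i] == 0:
                continue
            # Exact minimizer of the quadratic in m_i, then clipped to [-1, 1].
            proj = sum(x * v for x, v in zip(row, a)) - norms[i] * m[i]
            new = max(-1.0, min(1.0, -proj / norms[i]))
            d = new - m[i]
            if d != 0.0:
                a = [v + x * d for x, v in zip(row, a)]
                m[i] = new
    grad = [2.0 / P * sum(x * v for x, v in zip(row, a)) for row in xi]   # dH/dm_i
    return m, grad, sum(v * v for v in a) / P, h_start


m, grad, H_min, H_start = minimize_linear_H()
frozen = sum(1 for mi in m if abs(mi) == 1.0)
print(f"H: {H_start:.3f} -> {H_min:.3f}, frozen fraction {frozen / len(m):.2f}")
```

At convergence, agents pinned at \(m_i=1\) have \(\partial H/\partial m_i\le 0\), those at \(m_i=-1\) have \(\partial H/\partial m_i\ge 0\), and the remaining (fickle) agents have a vanishing gradient, mirroring the three cases above.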

Appendix 3: Distributions with Linear Payoff

Here we repeat the analysis of the main paper for the case of linear payoff where Eq. 4 is replaced by

We apply the same distributions, Eqs. 11–13, for the relative bid \(\xi ^\mu \), the contribution to the attendance of the positively (\(x>0\)) frozen agents \(X^\mu \), and that of the negatively (\(x<0\)) frozen agents \(Y^\mu \), and write \(A_t^\mu =\Omega ^{\mu }+X^{\mu }+Y^\mu +S_t\) (Eq. 7). Here \(\Omega ^\mu \) is the background (mean zero, variance N / 2) and \(S_t\) is the contribution from the fickle agents (assumed to have mean zero). Averaging over time at fixed history \(\mu \), \(S_t\) drops out because the payoff is linear, giving

where we have explicitly inserted the negative bias term for the used strategy. Averaging over histories in the large P limit we find that the bias is just a constant

and the fitness is normal with mean and variance given by

where, as before, \(c=c(\alpha )=\sqrt{2/\pi \alpha }\), and \(\phi _1\) and \(\phi _2\) are the respective fractions of frozen agents. We note that the step size is of order 1 for the linear payoff, compared with order \(1/\sqrt{N}\) for the sign-payoff game. As in the sign-payoff case, for large \(\alpha \) the fitness drops out, ensuring that there are no frozen agents. For moderate \(\alpha \) the fractions of frozen agents need to be solved for self-consistently through the equations

As for the sign-payoff game, the results from solving these equations numerically are in good agreement with simulation data in the dilute phase, as shown in Fig. 8. (Note that, compared with Fig. 2, both the data and the model results for the fraction of frozen agents are very similar, and thus quite insensitive to whether sign payoff or linear payoff is used.)

Fig. 8 The fraction of frozen agents as a function of \(\alpha \) for linear payoff. Also shown is the total fraction of frozen agents from the replica calculation (Eqs. 3.41–3.44 of [10]). (Each data point is averaged over 20 runs with \(\sim 10^6\) time steps each (\(10^5\) steps for \(N=2001\)).)

The fluctuations of the attendance, \(\sigma ^2=\langle A^2\rangle =H+\varphi N/2\) with \(H=\frac{1}{P}\sum _\mu \langle A |\mu \rangle ^2=\frac{N}{2}(1-c(\phi _1-\phi _2))^2\), are compared with simulations in Fig. 9. They are clearly significantly overestimated by the model. (Similar results are found for the sign-payoff game and model.) Following the exposition in Appendix 2, the reasons for this discrepancy are quite clear. The model always overestimates the fluctuations of \(S_t\), and since we assume that only the frozen agents contribute to \(\langle A|\mu \rangle \), we also miss the contribution of the fickle agents to reducing H. There is a fairly clear path to improving the model along these lines, which is left for future work. Here we opt for the simplicity of solving the present model, given that it does give quantitative agreement with the distribution of relative strategy scores.

As a next step we can find the score distributions by solving the master equation on an integer chain. In contrast to the sign-payoff game, where scores are only updated by 0 or ±1, we now have to consider longer-range hopping, where scores are updated by integer steps in the range \(-N\) to N. Taking into account the individual time-averaged step size \(\Delta _\pm =\Delta _{\text {fit}}\mp \frac{1}{2}\) (for \(x>0\) and \(x<0\) respectively) and the fact that \(\xi ^{\mu (t)} A_t\) has variance N / 2, we expect the jump probabilities to be well represented by a normal distribution (for a jump from x to \(x'\))

The master equation takes the form

Taking the continuum limit over space and ignoring complications due to the boundary \(x=0\), this can be solved in terms of exponential localization for fickle agents (\(\Delta _+<0\) and \(\Delta _->0\)) and diffusion with a drift for frozen agents (\(\Delta _+>0\) or \(\Delta _-<0\)). For fickle agents the score distributions are given by

for \(x>0\) and \(x<0\) respectively, which in the large \(\alpha \) limit reduces to \(P(x)\sim e^{\mp 2x/N}\). For frozen agents the distributions are given by

for positively and negatively frozen agents respectively.
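The decay rates for both payoff schemes follow from one standard condition for exponential tails of a random walk: away from the boundary, \(P_x\propto e^{-\lambda x}\) is stationary if \(\sum _j K(j)e^{\lambda j}=1\), where K is the jump distribution. The sketch solves this by bisection for (i) a hypothetical discretized Gaussian kernel with variance N/2 and mean \(\Delta _+=-\frac{1}{2}\) (large \(\alpha \), \(\Delta _{\text {fit}}\rightarrow 0\)) and (ii) the sign-payoff kernel with \(p_\pm =\frac{1}{4}\pm \frac{1}{2}\Delta ^+\) and a hypothetical \(\Delta ^+\). For a continuous Gaussian the root is \(\lambda =2|\Delta |/\sigma ^2\), which gives the \(2/N\) quoted above; for the sign payoff it gives \(\ln (p_-/p_+)\approx 4|\Delta ^+|\), the decay rate used for fickle agents in the main text.

```python
import math


def lundberg_rate(kernel):
    """Positive root lam of sum_j K(j) exp(lam * j) = 1, for a jump kernel with negative mean.

    Far from x = 0, P_x ~ exp(-lam * x) is then stationary under the walk.
    """
    def f(lam):
        return sum(k * math.exp(lam * j) for j, k in kernel.items()) - 1.0

    lo, hi = 1e-9, 1e-3          # f(lo) < 0 because the mean jump is negative
    while f(hi) <= 0:            # expand until the root is bracketed
        lo, hi = hi, 2 * hi
    for _ in range(200):         # plain bisection
        mid = 0.5 * (lo + hi)
        if f(mid) < 0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def gaussian_kernel(N, drift):
    """Hypothetical discretization: Gaussian weights with variance N/2 at offsets -N..N, renormalized."""
    var = N / 2
    w = {j: math.exp(-(j - drift) ** 2 / (2 * var)) for j in range(-N, N + 1)}
    z = sum(w.values())
    return {j: v / z for j, v in w.items()}


N = 101
# Linear payoff, large alpha: Delta_fit -> 0, so the mean step for x > 0 is Delta_+ = -1/2.
lam_linear = lundberg_rate(gaussian_kernel(N, -0.5))

# Sign payoff: steps +1, -1, 0 with p_pm = 1/4 +- Delta/2; Delta is a hypothetical Delta^+ < 0.
delta = -0.5 / math.sqrt(N)
p_up, p_down = 0.25 + delta / 2, 0.25 - delta / 2
lam_sign = lundberg_rate({1: p_up, -1: p_down, 0: 1 - p_up - p_down})

print(f"linear payoff: lambda = {lam_linear:.6f}, 2/N = {2 / N:.6f}")
print(f"sign payoff:   lambda = {lam_sign:.6f}, ln(p_-/p_+) = {math.log(p_down / p_up):.6f}, "
      f"4|Delta| = {4 * abs(delta):.6f}")
```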

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Granath, M., Perez-Diaz, A. Diffusion and Localization of Relative Strategy Scores in The Minority Game. J Stat Phys 165, 94–114 (2016). https://doi.org/10.1007/s10955-016-1607-8
