Abstract

Locating the seismic event hypocenter is the very first issue undertaken when studying any seismological problem. Thus, the accuracy of the obtained solution can significantly influence consecutive stages of an analysis, so there is a continuous demand for new, more efficient, and accurate location algorithms. It is well recognized that there exists no single universal location algorithm which performs equally well in all situations. Seismic activity and its spatial variability over time, seismic network geometry, and the controlled area’s geological complexity are factors influencing the performance of location algorithms. For example, in the case of mining applications, the planarity of the seismic network usually operated at the exploitation level becomes an important issue limiting the accuracy of location of the hypocenter depths. In this paper, we push forward the discussion on the performance of the newly proposed location algorithm called the extended double difference (EDD), concentrating on the reliability of source depth estimation for mining-induced seismic events. We demonstrate that the EDD algorithm very efficiently uses information originating from the nonplanarity of the seismic network, improving the hypocenter depth estimates with respect to the classical double difference technique. Methodological considerations are illustrated by real data analysis of selected events from the Rudna copper mine (Poland).

Similar content being viewed by others

1 Introduction

The main goal of seismic networks operating in mines is continuous monitoring of seismicity in the mining area (Gibowicz and Kijko 1994; Gibowicz and Lasocki 2001; Mendecki and Sciocatti 1997). This comprises detection of seismic events followed by location of hypocenters, energy and seismic moment estimation, and, possibly further, more advanced analysis. The growing demand for more and more precise monitoring of induced seismic activity, for increasing the accuracy and reliability of data analysis as well as the necessity of analyzing very small seismic events requires many improvements in seismic data analysis procedures. In this paper, we concentrate on the second step of mining (seismic) data analysis, namely, the hypocenter location task.

The possibility of achieving high location accuracy crucially depends on a few elements, namely, data quality, seismometer network geometry, and knowledge of the seismic velocity spatial distribution (Lomax et al. 2000; Wiejacz and Debski 2001). None of these elements are usually sufficiently known for the location problem. Some of them, like the optimum network configuration (Kijko and Sciocatti 1995) or data quality, are strongly connected to the mining process and cannot be easily changed or improved. Another factor, the velocity spatial distribution, is subject to many simplifications and is usually very poorly known (Lomax et al. 2000). Moreover, in a mining environment, the velocity also changes with time due to the dynamic response of the rock mass to excavation, and the changes can reach up to 10–20 % of the background velocity (Gibowicz and Lasocki 2001). As a consequence, the velocity distribution is usually poorly known and actually is the most significant factor limiting location accuracy (Lomax et al. 2000; Husen et al. 1999; Debski et al. 1997). Therefore, there is a need in mining practice to use new location algorithms which are as independent as possible of the velocity structure. An example of an algorithm which meets this criterion is the double difference (DD) technique proposed by Waldhauser and Ellsworth (2000).

The main idea behind this algorithm is to locate a group (spatial cluster) of events simultaneously rather than process seismic data for each event separately. As observational data, the method uses differential travel times—the differences between travel times of seismic waves coming from different events recorded at the same stations—instead of absolute travel time onsets for each event separately. This significantly reduces the dependence of location results on velocity models (Waldhauser and Ellsworth 2000). Moreover, it allows to achieve sub-sampling data accuracy and also automate data pre-processing (Waldhauser 2009) by using any of the signal cross-correlation techniques (Waldhauser and Schaff 2008). The DD algorithm has proved its outstanding performance in locating clusters and swarms of seismic events, significantly contributing to the better characterization of large earthquake source areas (see, e.g., Enescu et al. 2005), and thus, in previous papers (Rudzinski and Debski 2008), we have analyzed its application in a mining environment. We have concluded that the direct application of the DD approach for mining-induced seismicity analysis is quite limited. The reason for this is the algorithm’s stability. Achieving it requires the seismic signals from events located together to be recorded by most (ideally all) of the stations used for the DD location. Unfortunately, this condition can hardly be met in mining practice. Firstly, this is because the located events are usually quite small and they are recorded by nearby stations only—usually different for different events due to mining noise. Secondly, mining progress requires almost continuous updating of the seismic monitoring network. Again, this results in rejecting a number of events from the DD analysis because the events recorded before and after a network update cannot be located together due to differences in network configuration. Having identified the problems with using the DD algorithm, we have proposed its extension and call it the extended double difference (EDD) (Rudzinski and Debski 2011). In the cited paper, we already noticed an improvement of the depth estimation achieved by the EDD algorithm with respect to the DD one. This is a very important point because while the DD algorithm significantly reduces the influence of the velocity structure on location results, the problem of precise estimation of hypocenter depth still remains if the seismic network is almost planar and located at the same depth at which most seismic events occur. This is usually the case in underground deep mines where the seismic network being located at exploitation level is almost planar. This inherently limits location accuracy because for such a network and event configuration, there is no natural depth variability scale which could help determine the depth of events. It needs noting that in the case of horizontal spatial (epicentral) coordinates, such a length scale is provided by horizontal spreading of the network. Thus, any improvement in hypocenter depth estimation calls for algorithms which can efficiently “enhance” the information on network and source nonplanarity. The EDD algorithm seems to fulfill this requirement.

In this paper, we push forward the previous analysis (Rudzinski and Debski 2011) of the performance of the DD and EDD algorithms, concentrating this time on the resolution of the hypocenter depth and using real mining rockburst data as a case study.

The paper is organized as follows: first, we briefly describe the basic elements of the DD and EDD techniques; next, some elements of the probabilistic inverse theory are briefly outlined. This theoretical part is followed by an analysis of 10 events from the Rudna copper mine (Poland) and the obtained results are discussed.

2 Mathematics of the DD and EDD location algorithms

Let m i = (X i,Y i,Z i,T i) be the set of parameters describing the hypocenter spatial coordinates and origin time of the ith seismic event. Let \(T_{k}^{i}\) denote the generic wave onset time from the ith event read at the kth station. From now on, we assume that all arrival times correspond to the same seismic phase. Solving the location problem requires theoretical/numerical calculations of onset times (hereafter, denoted as \({(T^\textrm{m})}_{k}^{i}\)) for comparing with observed arrival times (\({(T^\textrm{o})}_{k}^{i}\text{(m)}\)). Next, let us introduce the generalized differential arrival times, defined as the difference between arrival times from different sources at different stations.

and the observed and modeled differential arrival times will be denoted as \((\Delta^\textrm{o})_{kl}^{ij}\) and \((\Delta^\textrm{m})_{kl}^{ij}\), respectively.

Let us consider events forming a spatial cluster and assume that the hypocenter separation between sources is small compared to the hypocenter–station distance and to local velocity heterogeneity sizes. Then, the ray paths between the sources and a common station can be hypothetically divided into two parts. The first part is common for all rays and roughly speaking spans from the station to the source cluster. The second one takes into account differentiation of ray paths in the neighborhood of and within the cluster. This hypothetical splitting of ray paths demonstrates that wave travel times from different sources have a large common factor connected to the wave traveling along the common part of all rays. The remaining part of the travel times brings information on the relative source location within the cluster. By using the differential travel times, we cancel (at least partially) the propagation time contribution from the common ray part, thus diminishing the location result’s sensitivity to the velocity model. Simultaneously, the differential travel times preserve information on the relative source distribution within the cluster (Evangelidis et al. 2008).

In practice, the DD technique performs the simultaneous location of all events forming a cluster, assuming that most of the seismic phases coming from all events in the cluster are recorded by most of the stations. Then, it is possible to form the differential residua (Waldhauser and Ellsworth 2000):

and the location solution is found by minimizing the misfit function, which in the case of using the l 2 norm reads

Since the differential travel times \((\Delta^\textrm{o})_k^{ij}\) can be calculated automatically with very high accuracy from seismograms by means of the cross-correlation technique, the approach is very suitable for automatic almost real-time event location (Waldhauser and Ellsworth 2000).

The EDD algorithm extends the DD approach by generalizing the differential residua formula given by Eq. 2 as follows:

and constructing the generalized misfit function

where again the subscripts in the symbols \(\Delta^\textrm{o}\) and \(\Delta^\textrm{m}\) are used to distinguish between differential travel times calculated for observational and modeled travel times, respectively. Contrary to the DD approach, the sum is now taken over all sources and all stations; thus, we can use all observational information we have in hand. There is no need anymore to reject events not recorded by some stations or to exclude stations which do not record signals from considered sources. Moreover, considering all possible combinations of source-station difference pairs, we include additional information not available in the DD method. To see this, let us rewrite \(S_{\textrm{EDD}}\) as follows:

The first row on the right side of this formula corresponds to the DD misfit function \(S_{\textrm{DD}}\) as defined in Eq. 3. The second term (referred to as \(S_{\textrm{SE}}\)) describes the differential times calculated for each seismic source and different stations which recorded signals from that source. This term, according to the reciprocity theorem, is equivalent to the first one with exchanged source and receivers. Observe that this term is the sum over all sources of the differential travel times between different stations and thus can be interpreted as the sum of the residual misfit functions \(S_{\textrm{SE}}^i\) defined as

for each event separately as if it was located separately but using the differential data instead of the absolute arrival times. Finally, the third term (referred to as \(S_{\textrm{ED}}\)) brings all the remaining combinations of sources and receivers which are not included in the first two terms.

The question which should be asked at this point is how each of the terms in Eq. 6 contributes to the final solution. To analyze this problem, let us rewrite Eq. 6 as

introducing three coefficients \(a_{\textrm{dd}}\), \(a_{\textrm{se}}\), and \(a_{\textrm{ed}}\) which allow to control the relative influence of each term in Eq. 6 on the final location solution. Setting all these coefficients to 1 results in the full EDD misfit function, while assuming a 0 value for a selected coefficient excludes a given term from the inversion (location) algorithm. Actually, different choices of the \(a_{\textrm{dd}}\), \(a_{\textrm{se}}\), and \(a_{\textrm{ed}}\) coefficients lead to different misfit functions and, consequently, to different location algorithms. Among the infinite number of algorithms generated in this way, the most interesting are those which correspond to setting the a i coefficients to 0 or 1. They are listed in Table 1.

The question of the contribution of each of the terms in Eq. 8 to the final location results can now be reformulated as a task of comparing different location algorithms (inversion schemata) generated by the misfit functions listed in Table 1. This comparison can be performed using various numerical characteristics calculated for each of the inversion schemata. For the current analysis, we decided to use the Shannon information measure (Tarantola 1987) which reads

where μ(·) stands for the reference distribution (usually a noninformative probability distribution which accounts for the size of the model space (Mosegaard and Tarantola 2002; Tarantola 2005)). We have chosen this parameter instead of the more traditional measures, like the a posteriori error or the RMS value of residua for the optimum model found, because the Shannon measure takes into account not only the “goodness” of the optimum best fitting model (RMS residua) but also the shape of the a posteriori probability distribution which determines the a posteriori errors. The price for choosing this robust measure I S is the necessity of performing the full probabilistic (Bayesian) inversion rather than the most popular optimization-based inversion (Debski 2010). Since the probabilistic inverse theory is still not commonly used, let us describe its very basic elements in the next section. Readers interested in the details of the probabilistic inverse theory are referred to the basic textbooks and review papers, for example, (Tarantola 1987, 2005; Debski 2010; Mosegaard and Tarantola 2002; Mosegaard and Sambridge 2002; Lomax et al. 2000; Matsu’ura 1984).

3 Inverse theory—probabilistic point of view

The primary goal of the most frequent inverse tasks is determining the values of some parameters (m) which cannot be measured directly (Tarantola 2005). In the case of the location problem, they are the hypocenter coordinates and rupture origin time. To estimate the m parameters, we choose some additional physical parameters (d) which can be directly measured and which are connected to the sought ones in a known way (dat = G(m)). Then, we perform an inference about m having the measured \(\mathbf{d}^{\textrm{obs}}\).

There are basically three possible approaches to carry out this inference (Debski 2010; Tarantola 2005).

The first approach, often called the back projection technique (Deans 1983; Jakka et al. 2010), relies on a direct projection of the measured values onto the model space which allows a direct “calculation” of the sought parameter values. This approach corresponds to a direct solving of the equation

and thus has very limited application because it can be carried out under very strong assumptions imposed on the G(·) function.

The second approach relies on inspecting a model space—the space of all possible values of m—to find the model \(\mathbf{m}^{\textrm{ml}}\) for which the theoretical prediction best fits the measured one. In practice, this is achieved by solving the optimization task which in a simplified form reads

where ||·|| stands for a norm in data space. The approach is very general and usually very fast but offers a limited possibility of evaluating the uncertainty of the solution found. This is a consequence of searching for the optimum model only and disregarding information from a part of the model space containing suboptimal models contributing to the final inversion uncertainties (Scales and Tenorio 2001; Debski 2008; Scales 1996).

This additional information is efficiently explored by the third approach offered by the probabilistic inverse theory (Tarantola and Valette 1982; Tarantola 1987, 2005). The method relies on the evaluation of each model m and assigning to it the probability of being the true one. In the simplest case, this a posteriori probability reads (Tarantola and Vallete 1982)

where f(·) stands for an arbitrary a priori probability function known from elsewhere and the misfit function S(m) reads

Comparing Eq. 11 with Eqs. 12 and 13, we can see that in this simplified case, if only \(f(\mathbf{m}) = \textrm{cons.}\) (no significant a priori information), the \(\mathbf{m}^{\textrm{ml}}\) solution provided by the optimization approach maximizes the a posteriori probability density constructed within the framework of the probabilistic inverse theory.

The advantage of the probabilistic approach is that having the a posteriori probability density, we can evaluate any characteristic of the final solution allowing for any exhaustive error, resolution, trade-off, etc. analysis (Debski 2004; Wiejacz and Debski 2001). We need this feature to evaluate and compare the performance of the DD and EDD location algorithms.

However, any practical use of the probabilistic inverse technique requires an efficient method of sampling of the a posteriori distribution, which is by no means trivial if the number of inverted parameters is large (Curtis and Lomax 2001). The most popular class of sampling algorithms used in such situations is the Markov chain Monte Carlo (MCMC) technique (see Gilks et al. 1995; Robert and Casella 1999). This technique is able to perform the so-called importance sampling efficiently in any multidimensional model space which relies on sampling only that part of the model space where the sampled function has its dominating values. Since we were locating nine seismic events simultaneously in the studied problem, which give a total of 36 inverted parameters, the sampling of the a posteriori distribution was carried out by a very simple MCMC algorithm—the Metropolis algorithm (Chib and Greenberg 1995).

4 Rudna copper mine case

In this section we present the results of comparing the two techniques when applied to seismic data from the Rudna copper mine (Poland). The mine runs a digital seismic network composed of 32 vertical sensors located underground at depths from 550 to 1150 m. The frequency band of the recording/transmission system is from 0.5 to 150 Hz and the sampling period is dt = 2 ms. The accuracy of routinely located events is better than 100 m, typically around 50 m for the epicentral coordinates and much worse for hypocenter depth (Fig. 1). For the comparison analysis, we have selected a cluster composed of 10 events from the XVII/1 mining panel described in Table 2 and shown in Fig. 2 where a sketch of the part of the mine where the considered events occurred is depicted. Event no. 1 was fixed as the master event and has not been relocated by either the DD or EDD algorithm but its location, well supported by mining observations, was provided by the mine. The location procedures were based on the P wave arrival times picked manually from seismograms. We have decided not to use any cross-correlation technique for observational data preprocessing to avoid any numerical errors which could influence the performance of the DD and EDD algorithms in different ways. The location procedure was carried out within the probabilistic (Bayesian) approach by sampling the a posteriori probability functions defined for DD and EDD as

using the Metropolis algorithm with settings as in Rudzinski and Debski (2011). No a priori information was used. As the numerical estimators of the hypocenter locations, the maximum likelihood models for corresponding a posteriori distributions were the ones we considered and the location errors were estimated by the a posteriori covariance matrix. The goodness of the depth solutions was evaluated by means of the Shannon information measure calculated for the marginal 1D probability distributions.

Epicentral distances between the DD and EDD solutions for the first eight well-resolved events weighted by the doubled averaged a posteriori epicentral errors gathered in Table 5

Let us begin the analysis of the location results by noticing, according to Table 2, a significant variation in the number of stations contributing to the location of different events. It ranges from 8 for the smallest event (event no. 10) up to 28 (event no. 3), which means that almost all the stations of the network contribute to the location of this event. The consequence of this fact is that the location errors for events no. 9 and 10 are larger than for other events from the cluster, as reported in Table 5.

The location results expressed by the maximum likelihood solutions are gathered in Table 3 for the EDD solutions and in Table 4 for the DD approach. Table 5 shows location errors estimated by the a posteriori covariance matrix. A comparison of the epicentral solutions (X, Y) shows that both solutions coincide quite well within 50–100-m accuracy, as is also shown in Fig. 1 where the epicentral distances weighted by the doubled epicentral errors are shown.

Another conclusion which follows from Tables 3, 4, and 5 is that both DD and EDD resolve the epicentral coordinates in a similar way. However, this is not the case with depth. The solutions for Z not only differ between the two methods but also the inversion errors for the DD solutions are almost twice larger than for the EDD solutions. This is a result of significant differences between the a posteriori probability densities, as shown in Fig. 3.

Now, after the general analysis of the location results, let us return to the main question about the role of the \(S_{\textrm{DD}}\), \(S_{\textrm{SE}}\), and \(S_{\textrm{ED}}\) terms in the EDD solutions. Let us begin this discussion by analyzing the Shannon measure for depth solutions generated by misfit functions with a different choice of \(a_{\textrm{dd}}\), \(a_{\textrm{se}}\), and \(a_{\textrm{ed}}\) coefficients. The choice of \(a_{\textrm{dd}} = a_{\textrm{se}} = a_{\textrm{ed}} = 1\) corresponds to the EDD solution while the choice \(a_{\textrm{dd}} = 1,\; a_{\textrm{se}} = a_{\textrm{ed}} = 0\) corresponds to the standard DD technique. The results for two events: well-resolved (event no. 6) and poorly resolved (event no. 9) events are shown in Fig. 4.

The Shannon measure for the Z coordinate and different choices of the misfit function obtained by setting the \(a_{\textrm{dd}}\), \(a_{\textrm{se}}\), and \(a_{\textrm{ed}}\) coefficients to 0 and 1 in all possible combinations. The results are marked by those terms of the misfit function for which corresponding coefficients were nonvanishing. The left panel corresponds to the well-resolved event while the right one to the very weak event localized by only a few nearby stations (see Table 2)

The most remarkable feature visible in this figure is that in the case of the well-resolved events, I S for the solution generated by the \(S_{\textrm{DD}}\) part of the misfit function alone (classical DD solution) has a much smaller value than for solutions obtained for the misfit function containing the remaining combinations of the \(S_{\textrm{DD}}\), \(S_{\textrm{SE}}\), and \(S_{\textrm{ED}}\) terms. This is especially true when comparing the I S values for solutions generated by the \(S_{\textrm{DD}}\) and \(S_{\textrm{SE}}\) terms and by \(S_{\textrm{ED}}\) alone. In the case of the poorly resolved event (right panel in Fig. 4), this effect disappears. The value of the I S factor is now very low and similar for all choices of the misfit function. It seems that in this case, the resolution of all methods is similar and limited by insufficient information in the input data. The conclusion which we have drawn from these observations is that algorithms built upon \(S_{\textrm{ED}}\) or \(S_{\textrm{SE}}\) combinations explore information about event depth more efficiently than the DD technique. To understand the mechanism of this “depreciation” of the DD method, let us examine the Shannon measure for all nine located events and different choices of the misfit function. The results are shown in Fig. 5.

There are two extremely striking features visible in Fig. 5. The first one is the much lower values of the I S factor for the DD solutions with respect to other choices of \(\ensuremath{S(\mathbf{m})}\) for all well-resolved events. Moreover, the I S values are almost the same for all events. The second characteristic is an apparent progressive diminishing of the I S factor for all but one, \(S_{\textrm{DD}}\) alone, of the combinations of the \(S_{\textrm{DD}}\), \(S_{\textrm{SE}}\), and \(S_{\textrm{ED}}\) terms with successive models. Inspection of Table 2 indicates that the varying number of seismic time onsets recorded and used for locating events is the main factor influencing I S . In fact, the dependence of I S on the number of used onsets plotted in Fig. 6 fully proves this expectation. A similar effect has been reported for the DD technique by Bai et al. (2006). An analysis of Fig. 6 suggests that for a large number of onsets, I S saturates for any considered misfit functions. At the moment, we are not sure if this is caused by exhaustion of all the independent information contained in the data or if it results from the inherent resolution ability of the algorithms imposed by the structure of the assumed misfit functions.

5 Conclusions

The EDD location technique was originally proposed in order to include the specific demands of the mining seismic environment, such as event clustering (Gibowicz and Lasocki 2001; Orlecka-Sikora and Lasocki 2002) and continuous changing of the monitoring system (Mendecki and Sciocatti 1997; Rudzinski and Debski 2011), in the DD method. We expected the resulting algorithm to have a large enough spatial resolution to enable a detailed analysis of the structure of the spatial seismic clusters. Through performing synthetic tests, we have concluded (Rudzinski and Debski 2011) that the performance of the EDD approach with respect to the epicentral coordinates is essentially the same as for the DD technique. The current analysis of real data further supports this conclusion. However, the performance of the EDD approach with respect to the hypocenter depth has been found to be significantly better than that of DD.

The analysis performed in this paper clearly shows that the DD algorithm does not fully exploit the information contained in the input seismic data. This is not the case with the EDD approach. Using the same data set, the EDD algorithm leads to more precise depth solutions (larger value of the Shannon information measure). We believe that this is because the additional terms introduced into the misfit function by the EDD algorithm bring additional constraints (information) on the final solution with respect to the DD term. To illustrate this point, let us assume that we are locating N events using M stations and that signals from all the events are recorded by all stations. It is easy to show that in such a case, the \(S_{\textrm{DD}}\) term contains N ×(N − 1)×M/2 travel time difference factors, the \(S_{\textrm{SE}}\) term contains M ×(M − 1)×N/2 factors, and finally the \(S_{\textrm{ED}}\) term consists of M ×N ×(N − 1) ×(M − 1)/2 terms. Assuming that each such factor represents some constraint imposed on the final solution, it is obvious that in typical conditions when M > N, the \(S_{\textrm{DD}}\) term has the lowest “resolving power.” This analysis remains true provided that there are no dominating factors in the \(S_{\textrm{DD}}\), \(S_{\textrm{SE}}\), and \(S_{\textrm{ED}}\) terms. Otherwise, the dominating factors will determine the structure and the most important features of the misfit function. Consequently, adding new factors to the misfit function \(\ensuremath{S(\mathbf{m})}\) by changing N or M does not change it significantly. The consequence of this is stationarity of the a posteriori probability distribution, which means that the Shannon measure saturates when the number of phase readings increases.

It is not easy to say when the misfit function can be dominated by travel time difference factors. From the physical point of view, by analogy to phase transition processes, such a situation can happen if there exists some natural temporal or spatial scale for the analyzed (inverted) parameters. This is the case, for example, with epicentral coordinates for which the spatial extension of the network provides such a characteristic length scale. On the other hand, if such a scale length is missing, we can expect that all factors in \(\ensuremath{S(\mathbf{m})}\) have similar importance and thus the DD approach will not be able to exploit all available information efficiently. We believe that one example of this is the planar seismic network and seismicity located at the depth of the network.

Finally, to conclude our discussion about the performance of the EDD algorithm, let us observe that similar to the DD approach, the EDD algorithm uses the differential travel times as input data. However, contrary to the DD approach, it includes all possible combinations of source–receiver pairs. This means, however, that some of the input differential times can hardly be calculated reliably by means of cross-correlation techniques because of a lack of similarity between the considered seismograms. One consequence of this fact is that the algorithm needs careful and, in some part, manual preprocessing of the input data. Its automatic implementation may be problematic.

References

Bai L, Wu Z, Zhang T, Kawasaki I (2006) The effect of distribution of stations upon location error: statistical tests based on the double difference earthquake location algorithm and the bootstrap method. Earth Planets Space 58:e9–e12

Chib S, Greenberg (1995) Understanding the metropolis–hastings algorithm. Am Stat 49:327–335

Curtis A, Lomax A (2001) Prior information sampling distributions and the curse of dimensionality. Geophysics 66(2):372–378

Deans SR (1983) The radon transform and some of its applications. Wiley, New York

Debski W (2004) Application of Monte Carlo techniques for solving selected seismological inverse problems. Publ Inst Geophys Pol Acad Sc B-34(367):1–207

Debski W (2008) Estimating the source time function by Markov Chain Monte Carlo sampling. Pure Appl Geophys 165:1263–1287. doi:10.1007/s00024-008-0357-1

Debski W (2010) Probabilistic inverse theory. Adv Geophys 52:1–102. doi:10.1016/S0065-2687(10)52001-6

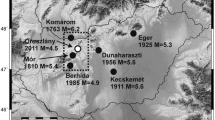

Debski W, Guterch B, Lewandowska H, Labak P (1997) Earthquake sequences in the Krynica region Western Carpathians 1992–1993. Acta Geophys Pol XLV(4):255–290

Enescu B, Mori J, Ohmi S (2005) Double-difference relocation of the 2004 off the Kii peninsula earthquakes. Earth Planets Space 57:357–362

Evangelidis CP, Konstantinou KI, Melis NS, Charalambakis M, Stavrakakis GN (2008) Waveform relocation and focal mechanism analysis of an earthquake swarm in Trichonis lake, western Greece. Bull Seismol Soc Am 98(2):804–811. doi:10.1785/0120070185

Gibowicz SJ, Kijko A (1994) An introduction to mining seismology. Academic, San Diego

Gibowicz SJ, Lasocki S (2001) Seismicity induced by mining: ten years later. Adv Geophys 44:39–181

Gilks W, Richardson S, Spiegelhalter D (1995) Markov chain Monte Carlo in practice. Chapman& Hall/CRC Press, London

Husen S, Kissling E, Flueh E, Asch G (1999) Accurate hypocentre determination in the seismogenic zone of the subducting Nazca Plate in northern Chile using a combined on-/offshore network. Geophys J Int 138(3):687–701

Jakka R, Cochran E, Lawrence J (2010) Earthquake source characterization by the isochrone back projection method using near-source ground motions. Geophys J Int 182(2):1058–1072. doi:10.1111/j.1365-246X.2010.04670.x

Kijko A, Sciocatti M (1995) Optimal spatial distribution of seismic stations in mines. Int J Rock Mech Min Sci Geomech Abstr 32:607–615

Lomax A, Virieux J, Volant P, Berge C (2000) Probabilistic earthquake location in 3D and layered models: introduction. Kluver, Amsterdam

Matsu’ura M (1984) Bayesian estimation of hypocenter with origin time eliminated. J Phys Earth 32(6):469–83

Mendecki A, Sciocatti M (1997) Seismic monitoring in mines. Chapman and Hall, London

Mosegaard K, Sambridge M (2002) Monte Carlo analysis of invers problems. Inv Prob 18:R29–45

Mosegaard K, Tarantola A (2002) International handbook of earthquake & engineering seismology. Academic, New York

Orlecka-Sikora B, Lasocki S (2002) Clustered structure of seismicity from the Legnica-Glogow copper district. Publs Inst Geophys Pol Acad Sc M-24(340):105–119

Robert CP, Casella G (1999) Monte Carlo statistical methods. Springer, New York

Rudzinski L, Debski W (2008) Relocation of mining-induced seismic events in the upper Silesian coal basin Poland by a double-difference method. Acta Geodyn Geomater 5(2):97–104

Rudzinski L, Debski W (2011) Extending the double difference location technique for mining applications—part I: numerical study. Acta Geophys. doi:10.2478/s11600-011-0021-5

Scales JA (1996) Uncertainties in seismic inverse calculations. Lecture notes in earth sciences, vol 63. Springer, Berlin

Scales JA, Tenorio L (2001) Prior information and uncertainty in inverse problems. Geophysics 66(2):389–397

Tarantola A (1987) Inverse problem theory: methods for data fitting and model parameter. Elsevier, Amsterdam

Tarantola A (2005) Inverse problem theory and methods for model parameter estimation. SIAM, Philadelphia

Tarantola A, Valette B (1982) Generalized nonlinear inverse problems. Rev Geophys Space Phys 20(2):219–232

Tarantola A, Vallete B (1982) Inverse problems = quest for information. J Geophys 50:159–170

Waldhauser F (2009) Near-real-time double - difference event location using long-term seismic archives, with application to Northern California. Bull Seismol Soc Am 99(5):2736–2748

Waldhauser F, Ellsworth W (2000) A double-difference earthquake location algorithm: method and application to the northern Hayward fault. Bull Seismol Soc Am 90:1353–1368

Waldhauser F, Schaff DP (2008) Large-scale relocation of two decades of Northern California seismicity using cross-correlation and double-difference methods. J Geophys Res 113(B08311). doi:10.1029/2007JB005479

Wiejacz P, Debski W (2001) New observation of Gulf of Gdansk seismic events. Phys Earth Planet Int 123(2–4):233–245

Acknowledgements

The authors are very grateful to the Rudna copper mine for its cooperation and kind permission to use its seismic data. This work was partially supported by grant no. 2011/01/B/ST10/07305 from the National Science Center. The anonymous reviewers are acknowledged for their effort and help in improving the paper.

Open Access

This article is distributed under the terms of the Creative Commons Attribution License which permits any use, distribution, and reproduction in any medium, provided the original author(s) and the source are credited.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 2.0 International License (https://creativecommons.org/licenses/by/2.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

About this article

Cite this article

Rudziński, L., Dȩbski, W. Extending the double difference location technique—improving hypocenter depth determination. J Seismol 17, 83–94 (2013). https://doi.org/10.1007/s10950-012-9322-7

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10950-012-9322-7