Abstract

We study numerical solutions of time-dependent systems of partial differential equations, focusing on the implementation of boundary conditions. The numerical method considered is a finite difference scheme constructed from high-order summation-by-parts operators, combined with a boundary procedure using penalties (SBP–SAT). It was recently shown that SBP–SAT finite difference methods can yield superconvergent functional output if the boundary conditions are imposed such that the discretization is dual consistent. We generalize these results to include a broader range of boundary conditions and penalty parameters, and to hold for narrow-stencil second-derivative operators. The derivations are supported by numerical experiments.


References

[1] Berg, J., Nordström, J.: Superconvergent functional output for time-dependent problems using finite differences on summation-by-parts form. J. Comput. Phys. 231(20), 6846–6860 (2012)

[2] Berg, J., Nordström, J.: On the impact of boundary conditions on dual consistent finite difference discretizations. J. Comput. Phys. 236, 41–55 (2013)

[3] Berg, J., Nordström, J.: Duality based boundary conditions and dual consistent finite difference discretizations of the Navier–Stokes and Euler equations. J. Comput. Phys. 259, 135–153 (2014)

[4] Carpenter, M.H., Nordström, J., Gottlieb, D.: A stable and conservative interface treatment of arbitrary spatial accuracy. J. Comput. Phys. 148(2), 341–365 (1999)

[5] Eriksson, S., Nordström, J.: Analysis of the order of accuracy for node-centered finite volume schemes. Appl. Numer. Math. 59(10), 2659–2676 (2009)

[6] Fernández, D.C.D.R., Hicken, J.E., Zingg, D.W.: Review of summation-by-parts operators with simultaneous approximation terms for the numerical solution of partial differential equations. Comput. Fluids 95, 171–196 (2014)

[7] Gustafsson, B., Kreiss, H.O., Oliger, J.: Time-Dependent Problems and Difference Methods. Wiley, New York (2013)

[8] Hicken, J.E.: Output error estimation for summation-by-parts finite-difference schemes. J. Comput. Phys. 231(9), 3828–3848 (2012)

[9] Hicken, J.E., Zingg, D.W.: Superconvergent functional estimates from summation-by-parts finite-difference discretizations. SIAM J. Sci. Comput. 33(2), 893–922 (2011)

[10] Hicken, J.E., Zingg, D.W.: Summation-by-parts operators and high-order quadrature. J. Comput. Appl. Math. 237(1), 111–125 (2013)

[11] Kreiss, H.O., Lorenz, J.: Initial-Boundary Value Problems and the Navier–Stokes Equations. Academic Press, New York (1989)

[12] Mattsson, K.: Summation by parts operators for finite difference approximations of second-derivatives with variable coefficients. J. Sci. Comput. 51(3), 650–682 (2012)

[13] Mattsson, K., Nordström, J.: Summation by parts operators for finite difference approximations of second derivatives. J. Comput. Phys. 199(2), 503–540 (2004)

[14] Nordström, J., Eriksson, S., Eliasson, P.: Weak and strong wall boundary procedures and convergence to steady-state of the Navier–Stokes equations. J. Comput. Phys. 231(14), 4867–4884 (2012)

[15] Nordström, J., Svärd, M.: Well-posed boundary conditions for the Navier–Stokes equations. SIAM J. Numer. Anal. 43(3), 1231–1255 (2005)

[16] Quarteroni, A., Sacco, R., Saleri, F.: Numerical Mathematics. Springer, Berlin (2000)

[17] Strand, B.: Summation by parts for finite difference approximation for d/dx. J. Comput. Phys. 110(1), 47–67 (1994)

[18] Svärd, M., Nordström, J.: On the order of accuracy for difference approximations of initial-boundary value problems. J. Comput. Phys. 218(1), 333–352 (2006)

[19] Svärd, M., Nordström, J.: Review of summation-by-parts schemes for initial-boundary-value problems. J. Comput. Phys. 268, 17–38 (2014)

Acknowledgements

The author would like to sincerely thank the anonymous referees for their valuable comments and suggestions.


Appendices

Appendix A: Reformulation of the First Order Form Discretization

We derive the scheme (33) with penalty parameters (35), using the hyperbolic results.

Step 1: Consider the problem (31), which is a first-order system. We represent the solution \(\bar{\mathcal {U}}\) by a discrete solution vector \(\bar{U}=[\bar{U}_0^T,\bar{U}_1^T,\ldots ,\bar{U}_N^T]^T\), where \(\bar{U}_i(t)\approx \bar{\mathcal {U}}(x_i,t)\), and discretize (31) exactly as was done in (11) for the hyperbolic case, that is, as

As proposed in Theorem 1, we let \(\bar{{\varSigma }}_{0}=-\bar{Z}_+\bar{{\varDelta }}^{ }_+\bar{P}_{L}^{-1}\) and \(\bar{{\varSigma }}_N=\bar{Z}_-\bar{{\varDelta }}^{ }_-\bar{P}_{R}^{-1}\).

Step 2: We discretize (30) directly by approximating \(\mathcal {U}\) by \(U\) and \(\mathcal {U}_x\) by \(\widehat{W}\). We obtain

If \( \bar{{\varSigma }}_{0}=[\sigma _0^T,\tau _0^T]^T\) and \(\bar{{\varSigma }}_N=[\sigma _N^T,\tau _N^T]^T\), then (67) is a permutation of (66).

Step 3: The scheme in (67) is a system of differential-algebraic equations, so we would like to eliminate the variable \(\widehat{W}\) and obtain a system of ordinary differential equations instead. Multiplying (67b) by \(\bar{D}=(D_1\otimes I_n)\) and adding the result to (67a) yields

where

Next, using the properties in (12), together with the fact that \(H\) is diagonal, we compute

where \(\widehat{q}\) is the scalar \(\widehat{q}=e_0^TH^{-1}e_0=e_N^TH^{-1}e_N\) given in (37). This yields

where \(\bar{H}=(H\otimes I_n)\). However, the boundary condition deviations \(\widehat{\chi }_0\) and \(\widehat{\chi }_N\) still contain \(\widehat{W}\), so we multiply (67b) by \((e_0^T\otimes I_n)\) and \((e_N^T\otimes I_n)\), respectively, to get

Next, we need boundary condition deviations that do not contain \(\widehat{W}\), so we define

Recall that \(\mathcal {G}_{L,R}=\mathcal {K}_{L,R}\mathcal {E}\). Using (70), we can now relate \(\widehat{\xi }_{0,N}\) above to \(\widehat{\chi }_{0,N}\) in (68) as

where \(I_{m_+}\) and \(I_{m_-}\) are identity matrices whose sizes equal the number of positive (\({m_+}\)) and negative (\(m_-\)) eigenvalues of \(\bar{\mathcal {A}}\), respectively. Inserting \(\widehat{\chi }_{0,N}\) from (71) into (69) finally allows us to write the scheme without any \(\widehat{W}\) terms, and we obtain (33) with

From Steps 1 and 2 we know that

where \(\bar{Z}_{1,2,3,4}\) are given in (36). Inserting the above relation into (72), we obtain the penalty parameters presented in (35).
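Step 3 relies on the summation-by-parts structure of \(D_1\) and on \(H\) being diagonal. The properties in (12) are not reproduced in this section; as an assumption, we take them to be the standard relations \(D_1=H^{-1}Q\) and \(Q+Q^T=e_Ne_N^T-e_0e_0^T\). Under that assumption, the sketch below builds the classical second-order diagonal-norm operator (an illustrative choice, not one of the operators used in the paper), checks these relations, evaluates \(\widehat{q}=e_0^TH^{-1}e_0=e_N^TH^{-1}e_N\), and verifies that the system operators \(\bar{D}=D_1\otimes I_n\) and \(\bar{H}=H\otimes I_n\) inherit the SBP property. The function name is hypothetical.

```python
import numpy as np

def sbp_first_derivative_2nd_order(N):
    """Classical second-order diagonal-norm SBP first-derivative operator.

    Grid x_i = i*h, i = 0..N, h = 1/N. Returns D1 = H^{-1} Q, the norm H and Q.
    """
    h = 1.0 / N
    H = h * np.eye(N + 1)
    H[0, 0] = H[N, N] = h / 2.0                           # trapezoidal boundary weights
    Q = 0.5 * (np.eye(N + 1, k=1) - np.eye(N + 1, k=-1))  # central differences
    Q[0, 0], Q[N, N] = -0.5, 0.5                          # one-sided boundary closure
    D1 = np.linalg.solve(H, Q)
    return D1, H, Q

N, n = 10, 3                      # grid intervals, unknowns per node (illustrative)
D1, H, Q = sbp_first_derivative_2nd_order(N)
e0, eN = np.eye(N + 1)[0], np.eye(N + 1)[N]

# Assumed SBP relations: Q + Q^T = e_N e_N^T - e_0 e_0^T, and D1 annihilates constants
B = np.outer(eN, eN) - np.outer(e0, e0)
assert np.allclose(Q + Q.T, B)
assert np.allclose(D1 @ np.ones(N + 1), 0.0)

# q_hat = e_0^T H^{-1} e_0 = e_N^T H^{-1} e_N; for this H it equals 1/H_00 = 2/h
q_hat = e0 @ np.linalg.solve(H, e0)
assert np.isclose(q_hat, eN @ np.linalg.solve(H, eN))

# System versions keep the SBP structure: (H kron I_n)(D1 kron I_n) = Q kron I_n
D_bar = np.kron(D1, np.eye(n))
H_bar = np.kron(H, np.eye(n))
HD = H_bar @ D_bar
assert np.allclose(HD + HD.T, np.kron(B, np.eye(n)))
```

Because \(H\) is diagonal, \(\widehat{q}\) is simply the reciprocal of a corner weight, which is what makes the boundary terms in Step 3 reduce to scalar multiples.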

Appendix B: Validity of the Derivations and Penalty Parameters in Appendix A

The following lemma will prove useful:

Lemma 1

(Determinant theorem) For any matrices A and B of size \(m \times n\) and \(n \times m\), respectively, \(\det (I_{ m }+AB)=\det (I_{ n }+BA)\) holds. This lemma generalizes the “matrix determinant lemma” and is sometimes referred to as “Sylvester’s determinant theorem”.

Proof

Consider the product of block matrices below:

Using the multiplicativity of determinants, the determinant rule for block triangular matrices and the fact that \(\det (I_m)=\det (I_n)=1\), we see that the determinant of the left hand side is \( \det (I_m+AB)\) and that the determinant of the right hand side is \( \det (I_n + BA)\). \(\square \)
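One block-matrix product that fits this argument (an illustrative choice; the identity is easily verified by multiplying out both sides) is

\[
\begin{pmatrix} I_m & A \\ 0 & I_n \end{pmatrix}
\begin{pmatrix} I_m + AB & 0 \\ -B & I_n \end{pmatrix}
=
\begin{pmatrix} I_m & 0 \\ -B & I_n \end{pmatrix}
\begin{pmatrix} I_m & A \\ 0 & I_n + BA \end{pmatrix},
\]

where both sides equal \(\begin{pmatrix} I_m & A \\ -B & I_n \end{pmatrix}\). The first factor on each side is block triangular with identity diagonal blocks and has determinant 1, while the second factors are block triangular with determinants \(\det (I_m+AB)\) and \(\det (I_n+BA)\), respectively.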

When (67) in “Appendix A” is rewritten such that all dependence on \(\widehat{W}\) is removed, we rely on the assumption that we can extract \((I_N\otimes \mathcal {E})\widehat{W}\) from (67b) and insert it into (67a). Intuitively, we expect this to be possible, since (67) is (although indirectly) a consistent approximation of (30). To investigate this more carefully, we multiply (67b) by \((H\otimes I_n)\) and move all \(\widehat{W}\)-dependent parts to the left-hand side (recall that \(\mathcal {G}_{L,R}=\mathcal {K}_{L,R}\mathcal {E}\)). This yields

We see that we can solve for \((I_N\otimes \mathcal {E})\widehat{W}\) if the matrices \(H_{0,0}I_n-\tau _0\mathcal {K}_L\) and \(H_{N,N}I_n-\tau _N\mathcal {K}_R\) are non-singular. From “Appendix A” we know that

that is, we need \(I_n+\widehat{q}\bar{Z}_2\bar{{\varDelta }}^{ }_+\bar{P}_{L}^{-1} \mathcal {K}_L\) and \(I_n-\widehat{q}\bar{Z}_4\bar{{\varDelta }}^{ }_-\bar{P}_{R}^{-1} \mathcal {K}_R\) to be non-singular (note that \(\widehat{q}=1/H_{0,0}=1/H_{N,N}\) for diagonal matrices \(H\)). According to Lemma 1 above, we have

That is, we can solve for \((I_N\otimes \mathcal {E})\widehat{W}\) in (67b) if the matrices \(\widehat{{\varXi }}_{L,R}\) are non-singular, where \(\widehat{{\varXi }}_{L,R}\) are precisely the matrices that appear in the penalty parameters in (35). We are thus interested in the regularity of the matrices

which are inverted in (35); if \(\widehat{q}\) is replaced by \(q\), we instead consider \({\varXi }_{L,R}\) from (43). Below we show that \(\widehat{{\varXi }}_L\) is non-singular for well-posed problems. First, using (6), (32) and (36) we obtain

and note that the matrices \(\bar{Z}_2\) and \(\bar{Z}_4\) scale with \(\mathcal {E}\) as \(\bar{Z}_2=\mathcal {E}\widetilde{Z_2}\) and \(\bar{Z}_4=\mathcal {E}\widetilde{Z_4}\), where

Secondly, using (10), (32) and (36) leads to \(\mathcal {G}_L=\bar{P}_{L}(\bar{Z}_2^T+\bar{R}_L\bar{Z}_4^T)\) and we can rewrite \(\widehat{{\varXi }}_L\) as

where we have used that \(\mathcal {G}_L=\mathcal {K}_L\mathcal {E}\). Now Lemma 1 yields \(\det (\widehat{{\varXi }}_L) =\det (\bar{P}_{L}) \det \left( {\varUpsilon }\right) \), where \({\varUpsilon }\equiv I_{n}+\widehat{q}\mathcal {E}^{1/2}\widetilde{Z_2}\bar{{\varDelta }}_+\big (\widetilde{Z_2}^T+\bar{R}_L\widetilde{Z_4}^T\big )\mathcal {E}^{1/2}\) and where \(\mathcal {E}^{1/2}\) denotes the principal square root of \(\mathcal {E}\). The permutation matrix \(\bar{P}_{L}\) is invertible, but \({\varUpsilon }\) must be checked. Thus we compute

Next, thanks to the condition for well-posedness, \(\bar{\mathcal {C}}_L=\bar{{\varDelta }}^{ }_-+\bar{R}_L^T\bar{{\varDelta }}^{ }_+\bar{R}_L\le 0\), we obtain

Inserting this into \({\varUpsilon }{\varUpsilon }^T\) above gives (recall that \(\widehat{q}\) is positive)

We now let \(\mathcal {E}=X{\varLambda }X^T\) be the eigendecomposition of \(\mathcal {E}\), with the eigenvalues sorted, for simplicity, as \({\varLambda }=\text {diag}({\varLambda }_+, {\varLambda }_0)\), where \({\varLambda }_+>0\) and \({\varLambda }_0=0\). Furthermore, we denote

where \({\varTheta }_{1}\) and \({\varTheta }_{4}\) have the same sizes as \({\varLambda }_+\) and \({\varLambda }_0\), respectively. Using (32), (36) and (7), we obtain \(\bar{Z}_2\bar{{\varDelta }}_+\bar{Z}_2^T+\bar{Z}_4\bar{{\varDelta }}_-\bar{Z}_4^T =0\), that is

which means that \({\varTheta }_{1}=0\) must hold. This in turn leads to

where we have used that \(\mathcal {E}^{1/2}=X{\varLambda }^{1/2}X^T\). Thus \({\varUpsilon }{\varUpsilon }^T\) is non-singular, since

It follows that \({\varUpsilon }\) is non-singular, since \({\text {rank}}({\varUpsilon }{\varUpsilon }^T) = {\text {rank}}({\varUpsilon }) \), and consequently so is \(\widehat{{\varXi }}_L\), since \(\det (\widehat{{\varXi }}_L) =\det (\bar{P}_{L}) \det \left( {\varUpsilon }\right) \). The same derivations can be repeated for the right boundary.
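The two linear-algebra facts used in this appendix, Lemma 1 and \({\text {rank}}({\varUpsilon }{\varUpsilon }^T)={\text {rank}}({\varUpsilon })\) for real matrices, can be spot-checked numerically. The sketch below uses random matrices of arbitrarily chosen sizes and a matrix constructed to have rank 2; it is a sanity check under these illustrative choices, not a substitute for the proofs. The helper `numerical_rank` is hypothetical.

```python
import numpy as np

def numerical_rank(M, rel_tol=1e-10):
    """Number of singular values above rel_tol times the largest one."""
    s = np.linalg.svd(M, compute_uv=False)
    return int(np.sum(s > rel_tol * s[0]))

rng = np.random.default_rng(0)
m, n = 5, 3

# Lemma 1: det(I_m + AB) = det(I_n + BA) for A of size m x n and B of size n x m
A = rng.standard_normal((m, n))
B = rng.standard_normal((n, m))
det_m = np.linalg.det(np.eye(m) + A @ B)
det_n = np.linalg.det(np.eye(n) + B @ A)
assert np.isclose(det_m, det_n)

# rank(Y Y^T) = rank(Y) for a real matrix; Y is a product of 4x2 and 2x4 factors
Y = rng.standard_normal((4, 2)) @ rng.standard_normal((2, 4))
assert numerical_rank(Y) == numerical_rank(Y @ Y.T) == 2
print(det_m, det_n)
```

The explicit relative tolerance in `numerical_rank` avoids relying on the default threshold of `np.linalg.matrix_rank`, which can be sensitive to rounding in the product \(YY^T\).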

Appendix C: Proof of Proposition 1 and Examples of \(q\)

In Proposition 1 we claim that the inverse of \(\widetilde{A}_{{S}}= A_{{S}}+\delta E_0\) has the structure \(\widetilde{A}_{{S}}^{-1}=J/\delta +K_0\) and that the corners of \(\widetilde{M}^{-1}=S\widetilde{A}_{{S}}^{-1}S^T\) are independent of \(\delta \). We prove this below.

Proof

First we make sure that \(\widetilde{A}_{{S}}\) is non-singular. Investigating numerically the eigenvalue of \(\widetilde{A}_{{S}}\) closest to zero, we find that (for all operators in this paper) it scales approximately as \(c(\delta )/N\), where \(c(\delta )\) is nearly independent of the operator and \(c(\delta )=0\) only if \(\delta =0\). We now show that the inverse of \(\widetilde{A}_{{S}}\) is \(J/\delta +K_0\). We partition \( A_{{S}}\), \( \widetilde{A}_{{S}}\), \(K_0\) and J as

where \(\mathbf {a}\) and \(\mathbf {1}=[1, 1, \ldots , 1]^T\) are vectors and \(\bar{A}\) and \(\bar{J}\) are matrices of size \(N\times N\). Since \(A_{{S}}\) consists of consistent difference operators, it yields zero when operating on constants. Therefore, \(A_{{S}}J=0\) (because J is an all-ones matrix) and \(\mathbf {a}+\bar{A}\mathbf {1}=\mathbf {0}\). Note that the relation \(\mathbf {a}+\bar{A}\mathbf {1}=\mathbf {0}\) leads to \(\left[ \begin{array}{cc} \mathbf {a}&\bar{A}\end{array}\right] =\bar{A}B\), where \(B=\left[ \begin{array}{cc} -\mathbf {1}&\bar{I}\end{array}\right] \) is an \(N\times (N+1)\) matrix of rank N, in which case it holds that \( {\text {rank}}(\bar{A}B) = {\text {rank}}(\bar{A})\). Moreover, since \(\widetilde{A}_{{S}}\) is non-singular, \(\left[ \begin{array}{cc} \mathbf {a}&\bar{A}\end{array}\right] \) must have full rank N. Hence \( {\text {rank}}(\bar{A})= {\text {rank}}(\bar{A}B) =N\), i.e., \(\bar{A}\) has full rank N and is invertible. Next, due to the structure of \(K_0\), we know that \(E_0K_0=0\). Thus we have

In the last step we have used that \(\mathbf {a}^T+\mathbf {1}^T\bar{A}^T=\mathbf {0}^T\) and that \(\bar{A}\) is symmetric.
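The identity \(\widetilde{A}_{{S}}^{-1}=J/\delta +K_0\) can be checked numerically. The sketch below is illustrative only: it replaces the paper's \(A_{{S}}\) from (39) by a stand-in, the symmetric one-dimensional stiffness matrix whose rows sum to zero, and it assumes \(E_0=e_0e_0^T\) and that \(K_0\) has a zero first row and column with \(\bar{A}^{-1}\) in the remaining block, consistent with \(E_0K_0=0\) above. The last loop prints \(N\) times the eigenvalue of \(\widetilde{A}_{{S}}\) closest to zero for \(\delta =1\), in the spirit of the numerical investigation mentioned at the start of the proof. Function names are hypothetical.

```python
import numpy as np

def stiffness_matrix(N):
    """Stand-in for A_S: symmetric, (N+1)x(N+1), rows sum to zero, h = 1/N."""
    h = 1.0 / N
    A = (2.0 * np.eye(N + 1) - np.eye(N + 1, k=1) - np.eye(N + 1, k=-1)) / h
    A[0, 0] = A[N, N] = 1.0 / h
    return A

def predicted_inverse(A_S, delta):
    """J/delta + K_0, with K_0 = inv(A_bar) padded by a zero first row and column."""
    size = A_S.shape[0]
    K0 = np.zeros((size, size))
    K0[1:, 1:] = np.linalg.inv(A_S[1:, 1:])   # A_bar: A_S without first row/column
    return np.ones((size, size)) / delta + K0

N = 16
A_S = stiffness_matrix(N)
assert np.allclose(A_S @ np.ones(N + 1), 0.0)  # consistency, hence A_S J = 0

for delta in (0.1, 1.0, 10.0):
    A_tilde = A_S.copy()
    A_tilde[0, 0] += delta                      # A_S + delta * E_0
    assert np.allclose(A_tilde @ predicted_inverse(A_S, delta), np.eye(N + 1))

# N times the eigenvalue of A_tilde closest to zero (delta = 1) on refined grids
for N in (16, 32, 64, 128):
    A_tilde = stiffness_matrix(N)
    A_tilde[0, 0] += 1.0
    print(N, N * np.min(np.abs(np.linalg.eigvalsh(A_tilde))))
```

`eigvalsh` is used because \(\widetilde{A}_{{S}}\) is symmetric for this stand-in; for a non-symmetric \(A_{{S}}\) one would use `eigvals` instead.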

The first and last rows of the matrix S are consistent difference stencils. We can thus write \(S=[\mathbf {s}_0, \times , \mathbf {s}_N]^T\), where the vectors \(\mathbf {s}_{0, N}\) have the property \(\mathbf {s}_{0, N}^TJ=0\). The interior rows of S are marked by \(\times \) because they are not uniquely defined. We compute

We see that the corner elements of \(\widetilde{M}^{-1}\) are independent of \(\delta \). We conclude that if S is defined such that it is non-singular, the constants in (42) can be computed using (60). \(\square \)

As an example, consider the narrow (2, 0) order operator in Table 1, specified by \(D_2\) below and associated with the following matrices \(H\) and S

Using (73) and (39) we obtain \(A_{{S}}\) such that we can compute \(K_0\) and \(\widetilde{M}^{-1}\) as

In this case we get \(q_0=q_N=1/h\) and \(q_c=0\), such that \(q=1/h\).
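The \(\delta \)-independence of the corners of \(\widetilde{M}^{-1}=S\widetilde{A}_{{S}}^{-1}S^T\) can be reproduced with a small stand-in. The sketch assumes, rather than takes from (73) and (39), that \(A_{{S}}\) is the symmetric one-dimensional stiffness matrix with zero row sums and that the boundary rows of S are the first-order stencils \(\mathbf {s}_0^T=h^{-1}[-1,1,0,\ldots ,0]\) and \(\mathbf {s}_N^T=h^{-1}[0,\ldots ,0,-1,1]\), with identity rows in the interior so that S is non-singular. Under these assumptions the corner entries \((0,0)\) and \((N,N)\) equal \(1/h\) and the entry \((0,N)\) is zero for every \(\delta \) tested, which matches \(q_0=q_N=1/h\) and \(q_c=0\) above if those constants are read as the corresponding corner entries; that reading is also an assumption here. Function names are hypothetical.

```python
import numpy as np

def stand_in_operators(N):
    """Hypothetical A_S and S for a second-order narrow operator on [0, 1], h = 1/N."""
    h = 1.0 / N
    A_S = (2.0 * np.eye(N + 1) - np.eye(N + 1, k=1) - np.eye(N + 1, k=-1)) / h
    A_S[0, 0] = A_S[N, N] = 1.0 / h     # zero row sums: A_S annihilates constants
    S = np.eye(N + 1)                   # interior rows: arbitrary, keeps S non-singular
    S[0, :] = 0.0
    S[0, :2] = [-1.0 / h, 1.0 / h]      # s_0: one-sided first-order stencil
    S[N, :] = 0.0
    S[N, -2:] = [-1.0 / h, 1.0 / h]     # s_N: one-sided first-order stencil
    return A_S, S, h

def corners_of_M_inverse(A_S, S, delta):
    """Entries (0,0), (0,N), (N,N) of S (A_S + delta*E_0)^{-1} S^T, E_0 = e_0 e_0^T."""
    A_tilde = A_S.copy()
    A_tilde[0, 0] += delta
    M_inv = S @ np.linalg.solve(A_tilde, S.T)
    return M_inv[0, 0], M_inv[0, -1], M_inv[-1, -1]

N = 20
A_S, S, h = stand_in_operators(N)
for delta in (0.1, 1.0, 10.0):
    q0, qc, qN = corners_of_M_inverse(A_S, S, delta)
    print(f"delta = {delta:5.1f}: h*q0 = {h * q0:.6f}, h*qc = {h * qc:.1e}, h*qN = {h * qN:.6f}")
```

The cancellation of \(J/\delta \) happens because each boundary row of S sums to zero, which is exactly the property \(\mathbf {s}_{0, N}^TJ=0\) used in the proof.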

In addition to the operator discussed above, we use the diagonal-norm operators in [13]. For the higher order accurate operators found in [13], \(q\) varies with N. For example, for the narrow (4, 2) order accurate operator, we have

Since the values differ only slightly, it is practical to use the largest one, obtained for \(N=8\), regardless of the number of grid points.

Appendix D: Time-Dependent Numerical Examples

For simplicity we mainly consider stationary numerical examples. Below we give a couple of examples confirming the superconvergence also for time-dependent problems.

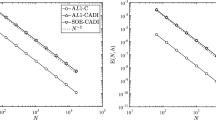

1.1 The Heat Equation with Dirichlet Boundary Conditions

We solve the heat equation \(\mathcal {U}_t=\varepsilon \mathcal {U}_{xx}+\mathcal {F}(x,t)\) with \(\varepsilon =0.01\) and the exact solution \(\mathcal {U}(x,t)=\cos (30x)+\sin (20x)\cos (10t)+\sin (35t)\). For time integration, the classical fourth-order Runge–Kutta scheme is used with a time step small enough, \({\varDelta }t=10^{-4}\), that the spatial errors dominate. In Fig. 13, the errors obtained using the narrow (6, 3) order scheme are shown as a function of time.

The corresponding spatial orders of convergence (at time \(t=1\)) are shown in Table 2. The simulations confirm the results from the steady-state experiments: both \(\omega =2\varepsilon \) and \(\omega =q\varepsilon \) give superconvergent functionals, but choosing the factorization parameter as \(\omega \sim \varepsilon /h\) improves the solution significantly compared to using the eigendecomposition.
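The forcing \(\mathcal {F}\) is obtained by inserting the exact solution into the equation, \(\mathcal {F}=\mathcal {U}_t-\varepsilon \mathcal {U}_{xx}\), and the time integration uses the classical fourth-order Runge–Kutta scheme. The sketch below implements both, but it makes simplifying assumptions that differ from the paper: the domain is taken as \(x\in[0,1]\), and the narrow (6, 3) SBP–SAT discretization is replaced by a plain second-order central stencil with the Dirichlet data imposed strongly at every Runge–Kutta stage. It therefore illustrates the manufactured solution and the time stepping, not the superconvergence results. Function names are hypothetical.

```python
import numpy as np

EPS = 0.01  # diffusion coefficient epsilon from the text

def exact(x, t):
    """Manufactured solution U(x, t) from the text."""
    return np.cos(30 * x) + np.sin(20 * x) * np.cos(10 * t) + np.sin(35 * t)

def forcing(x, t):
    """F = U_t - EPS * U_xx, differentiated by hand from exact()."""
    u_t = -10 * np.sin(20 * x) * np.sin(10 * t) + 35 * np.cos(35 * t)
    u_xx = -900 * np.cos(30 * x) - 400 * np.sin(20 * x) * np.cos(10 * t)
    return u_t - EPS * u_xx

def rhs(u_int, x, t, h):
    """Semi-discrete right-hand side at interior nodes (second-order stencil).
    Dirichlet values are taken from exact() at the stage time t."""
    u = np.concatenate(([exact(x[0], t)], u_int, [exact(x[-1], t)]))
    u_xx = (u[:-2] - 2 * u[1:-1] + u[2:]) / h**2
    return EPS * u_xx + forcing(x[1:-1], t)

def rk4_solve(N, t_end, dt=1e-4):
    """Classical fourth-order Runge-Kutta from t = 0 to t_end on x in [0, 1]."""
    x = np.linspace(0.0, 1.0, N + 1)
    h = x[1] - x[0]
    u = exact(x[1:-1], 0.0)
    t = 0.0
    for _ in range(int(round(t_end / dt))):
        k1 = rhs(u, x, t, h)
        k2 = rhs(u + 0.5 * dt * k1, x, t + 0.5 * dt, h)
        k3 = rhs(u + 0.5 * dt * k2, x, t + 0.5 * dt, h)
        k4 = rhs(u + dt * k3, x, t + dt, h)
        u = u + (dt / 6.0) * (k1 + 2 * k2 + 2 * k3 + k4)
        t += dt
    return x, u, t

x, u, t = rk4_solve(N=200, t_end=0.1)
err = np.max(np.abs(u - exact(x[1:-1], t)))
print(f"max interior error at t = {t:.2f}: {err:.2e}")
```

With \({\varDelta }t=10^{-4}\) and \(N=200\), the product \({\varDelta }t\,\varepsilon \cdot 4/h^2=0.16\) lies well inside the stability interval of the classical Runge–Kutta scheme, so the time step is limited by accuracy rather than stability, in line with the choice described above.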

1.2 The Heat Equation with Neumann Boundary Conditions

We solve \(\mathcal {U}_t=\varepsilon \mathcal {U}_{xx}+\mathcal {F}(x,t)\) again, but this time with Neumann boundary conditions; the penalty parameters are now given by (65) with \(a=0\), \(\varepsilon =0.01\), \(\alpha _{{}_{L,R}}=0\) and \(\beta _{{}_{L,R}}=1\). In contrast to the Dirichlet case, the spectral radius \(\rho \) does not depend strongly on \(\omega \), and we can therefore let \(\omega \rightarrow \infty \) (using \(\omega =q\varepsilon \) here as well gives the same convergence rates as \(\omega =\infty \)). Table 3 shows the errors and convergence orders (at time \(t=1\)) for the same setup as in the previous section, that is, using the fourth-order Runge–Kutta scheme with \({\varDelta }t=10^{-4}\), the exact solution \(\mathcal {U}(x,t)=\cos (30x)+\sin (20x)\cos (10t)+\sin (35t)\) and the weight function \(\mathcal {G}=1\). The convergence rates behave similarly to those in the Dirichlet case.
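Assuming the output functional is \(\int _0^1\mathcal {G}\,\mathcal {U}\,dx\) on the domain \(x\in[0,1]\) (both assumptions here), a common way to evaluate it in SBP–SAT settings is to use the norm matrix as a quadrature, \(J_h=\mathbf {1}^TH U\) for \(\mathcal {G}=1\), and convergence orders are computed from errors on consecutive grids as \(\log (e_k/e_{k+1})/\log (N_{k+1}/N_k)\). The sketch below does both, using the trapezoidal norm of the second-order operator instead of the narrow (6, 3) norm and applying it to the exact solution sampled on the grid. The printed orders (close to 2) therefore reflect the quadrature alone, not the superconvergence of the scheme. Function names are hypothetical.

```python
import numpy as np

def exact(x, t):
    """Manufactured solution U(x, t) from the text."""
    return np.cos(30 * x) + np.sin(20 * x) * np.cos(10 * t) + np.sin(35 * t)

def exact_functional(t):
    """Integral of U over the assumed domain [0, 1] with weight G = 1."""
    return (np.sin(30.0) / 30.0
            + (1.0 - np.cos(20.0)) / 20.0 * np.cos(10 * t)
            + np.sin(35 * t))

def functional_with_norm(u, h):
    """J_h = G^T H u with G = 1 and the trapezoidal norm H = h*diag(1/2, 1, ..., 1, 1/2)."""
    weights = np.full(u.size, h)
    weights[0] = weights[-1] = h / 2.0
    return weights @ u

def observed_orders(N_values, errors):
    """Orders log(e_k / e_{k+1}) / log(N_{k+1} / N_k), assuming h = 1/N."""
    N_values = np.asarray(N_values, dtype=float)
    errors = np.asarray(errors, dtype=float)
    return np.log(errors[:-1] / errors[1:]) / np.log(N_values[1:] / N_values[:-1])

t = 1.0
N_values = [100, 200, 400]
errors = []
for N in N_values:
    x = np.linspace(0.0, 1.0, N + 1)
    errors.append(abs(functional_with_norm(exact(x, t), 1.0 / N) - exact_functional(t)))
print(errors, observed_orders(N_values, errors))
```

Replacing the trapezoidal weights by the diagonal norm of a higher-order operator would raise the quadrature order accordingly; the same `observed_orders` helper applies to any sequence of grid errors.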


About this article

Cite this article

Eriksson, S. A Dual Consistent Finite Difference Method with Narrow Stencil Second Derivative Operators. J Sci Comput 75, 906–940 (2018). https://doi.org/10.1007/s10915-017-0569-6


DOI: https://doi.org/10.1007/s10915-017-0569-6

Keywords

- Finite differences

- Summation by parts

- Simultaneous approximation term

- Dual consistency

- Superconvergence

- Narrow stencil