Abstract

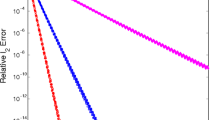

This paper aims to develop new, fast algorithms for recovering a sparse vector from a small number of measurements, a fundamental problem in compressive sensing (CS). Current CS theory favors incoherent systems, in which any two measurements are as weakly correlated as possible. In practice, however, many problems are coherent, and conventional methods such as \(L_1\) minimization do not work well. Recently, the difference of the \(L_1\) and \(L_2\) norms, denoted \(L_1\)–\(L_2\), has been shown to outperform the classic \(L_1\) method, but it is computationally expensive. We derive an analytical solution for the proximal operator of the \(L_1\)–\(L_2\) metric, which makes fast \(L_1\) solvers such as forward–backward splitting (FBS) and the alternating direction method of multipliers (ADMM) applicable to \(L_1\)–\(L_2\). We describe in detail how to incorporate the proximal operator into FBS and ADMM and show that the resulting algorithms converge under mild conditions. In numerical experiments, both algorithms are much more efficient than the original implementation of \(L_1\)–\(L_2\), which is based on a difference-of-convex approach.
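A minimal NumPy sketch of how a closed-form proximal operator plugs into FBS and ADMM, assuming the unconstrained model \(\min_x \lambda(\|x\|_1-\alpha\|x\|_2)+\tfrac12\|Ax-b\|_2^2\) with \(\lambda>0\) and \(0\le\alpha\le 1\) (\(\alpha=1\) gives \(L_1\)–\(L_2\)). The proximal step uses a three-case closed form derived from the first-order optimality conditions: with \(z=\operatorname{shrink}(y,\lambda)\), take \(x^*=z(\|z\|_2+\alpha\lambda)/\|z\|_2\) if \(\|y\|_\infty>\lambda\); a 1-sparse vector with entry \(\operatorname{sign}(y_i)(|y_i|-(1-\alpha)\lambda)\) at an index of largest \(|y_i|\) if \((1-\alpha)\lambda<\|y\|_\infty\le\lambda\); and \(x^*=0\) otherwise. The function names, the step-size rule \(\delta<1/\|A\|_2^2\), the penalty \(\rho=10\), and the fixed iteration counts are illustrative assumptions, not the paper's settings or stopping conditions.

```python
import numpy as np


def prox_l1_minus_l2(y, lam, alpha=1.0):
    """Closed-form sketch of argmin_x lam*(||x||_1 - alpha*||x||_2) + 0.5*||x - y||^2.

    Assumes lam > 0 and 0 <= alpha <= 1 (alpha = 1 gives the L1-L2 metric).
    """
    y = np.asarray(y, dtype=float)
    x = np.zeros_like(y)
    ymax = np.max(np.abs(y)) if y.size else 0.0
    if ymax > lam:
        # Case 1: soft-threshold, then scale up to account for the -alpha*||x||_2 term.
        z = np.sign(y) * np.maximum(np.abs(y) - lam, 0.0)
        nz = np.linalg.norm(z)  # nonzero because ymax > lam
        x = z * (nz + alpha * lam) / nz
    elif ymax > (1.0 - alpha) * lam:
        # Case 2: a 1-sparse solution at one index of largest magnitude.
        i = int(np.argmax(np.abs(y)))
        x[i] = np.sign(y[i]) * (ymax - (1.0 - alpha) * lam)
    # Case 3: ymax <= (1 - alpha) * lam, so x stays at zero.
    return x


def objective(A, b, x, lam, alpha=1.0):
    """F(x) = lam*(||x||_1 - alpha*||x||_2) + 0.5*||Ax - b||^2."""
    reg = np.sum(np.abs(x)) - alpha * np.linalg.norm(x)
    return lam * reg + 0.5 * np.sum((A @ x - b) ** 2)


def fbs(A, b, lam, alpha=1.0, iters=500):
    """Forward-backward splitting: gradient step on the data term, prox step on the penalty."""
    step = 0.99 / np.linalg.norm(A, 2) ** 2  # assumed rule: step < 1/||A||_2^2
    x = np.zeros(A.shape[1])
    for _ in range(iters):
        grad = A.T @ (A @ x - b)
        x = prox_l1_minus_l2(x - step * grad, step * lam, alpha)
    return x


def admm(A, b, lam, alpha=1.0, rho=10.0, iters=500):
    """Scaled ADMM on the split x = y; rho is a hypothetical default, not a tuned value."""
    n = A.shape[1]
    M = A.T @ A + rho * np.eye(n)
    Atb = A.T @ b
    y = np.zeros(n)
    u = np.zeros(n)
    for _ in range(iters):
        x = np.linalg.solve(M, Atb + rho * (y - u))  # smooth least-squares subproblem
        y = prox_l1_minus_l2(x + u, lam / rho, alpha)  # nonconvex prox subproblem
        u = u + x - y  # scaled dual update
    return y


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    A = rng.standard_normal((40, 100))
    x0 = np.zeros(100)
    x0[rng.choice(100, 5, replace=False)] = rng.standard_normal(5)
    b = A @ x0
    for name, solver in [("FBS", fbs), ("ADMM", admm)]:
        x = solver(A, b, lam=0.05)
        print(name, objective(A, b, x, 0.05), np.linalg.norm(x - x0))
```

Because the proximal map is computed exactly, each FBS iteration costs about as much as an iterative soft-thresholding step, which is the practical benefit of having a closed form. The ADMM variant instead pays for a linear solve per iteration; this sketch re-solves with `np.linalg.solve` each time, whereas factoring \(A^\top A+\rho I\) once would be the usual optimization.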

Notes

If POCS does not converge, we discard this trial in the analysis.

We use the author’s Matlab implementation with the default parameter settings, adopting the same stopping condition as for \(L_1\)–\(L_2\) in the comparison.

Acknowledgements

The authors would like to thank Zhi Li and the anonymous reviewers for valuable comments.

Additional information

This work was partially supported by the NSF grants DMS-1522786 and DMS-1621798.

About this article

Cite this article

Lou, Y., Yan, M. Fast L1–L2 Minimization via a Proximal Operator. J Sci Comput 74, 767–785 (2018). https://doi.org/10.1007/s10915-017-0463-2

Keywords

- Compressive sensing

- Proximal operator

- Forward–backward splitting

- Alternating direction method of multipliers

- Difference-of-convex