Abstract

The exact nonnegative matrix factorization (exact NMF) problem is the following: given an m-by-n nonnegative matrix X and a factorization rank r, find, if possible, an m-by-r nonnegative matrix W and an r-by-n nonnegative matrix H such that \(X = WH\). In this paper, we propose two heuristics for exact NMF, one inspired by simulated annealing and the other by the greedy randomized adaptive search procedure. We show empirically that these two heuristics are able to compute exact nonnegative factorizations for several classes of nonnegative matrices (namely, linear Euclidean distance matrices, slack matrices, unique-disjointness matrices, and randomly generated matrices), and thereby demonstrate their superiority over standard multi-start strategies. We also consider a hybridization of these two heuristics that combines the advantages of both methods. Finally, we discuss the use of these heuristics to gain insight into the behavior of the nonnegative rank, i.e., the minimum factorization rank for which an exact NMF exists. In particular, we disprove a conjecture on the nonnegative rank of a Kronecker product, propose a new upper bound on the extension complexity of generic n-gons, and conjecture the exact value of (i) the extension complexity of regular n-gons and (ii) the nonnegative rank of a submatrix of the slack matrix of the correlation polytope.

Notes

Bob is given a, Alice b, and they have to decide whether \(a^Tb \ne 0\) while minimizing the number of bits exchanged; see [41] for more details.

For example, for a 50-by-50 matrix and \(r = 10\), running standard multiplicative updates for 1 s performs about 10000 iterations on a standard laptop.
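As an illustration of the kind of iteration counted in this note, the following is a minimal sketch of the classical Lee and Seung multiplicative updates for the Frobenius-norm objective, applied to a 50-by-50 matrix with \(r = 10\). The function names, the random initialization, the small constant `eps` guarding against division by zero, and the iteration count are assumptions made for the example; this is not the implementation used in the paper.

```python
import numpy as np

def multiplicative_updates(X, r, n_iter=1000, eps=1e-12, seed=0):
    """Multiplicative updates for min ||X - WH||_F^2 subject to W >= 0, H >= 0."""
    rng = np.random.default_rng(seed)
    m, n = X.shape
    W = rng.random((m, r))  # nonnegative random start (an assumption of this sketch)
    H = rng.random((r, n))
    for _ in range(n_iter):
        # Elementwise rescaling keeps every entry nonnegative.
        H *= (W.T @ X) / (W.T @ W @ H + eps)
        W *= (X @ H.T) / (W @ (H @ H.T) + eps)
    return W, H

def relative_error(X, W, H):
    """Relative Frobenius error ||X - WH||_F / ||X||_F."""
    return np.linalg.norm(X - W @ H) / np.linalg.norm(X)

# A 50-by-50 nonnegative matrix whose nonnegative rank is at most 10.
rng = np.random.default_rng(1)
X = rng.random((50, 10)) @ rng.random((10, 50))

W0, H0 = multiplicative_updates(X, r=10, n_iter=0)  # the random starting point
W, H = multiplicative_updates(X, r=10)
err0 = relative_error(X, W0, H0)
err = relative_error(X, W, H)
print(f"relative error: {err0:.3e} before, {err:.3e} after")
```

An exact NMF corresponds to a relative error of zero up to numerical precision. A single run of such updates usually stalls at a positive error on difficult matrices.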

The generalized slack matrix of a pair of polytopes P (inner) and Q (outer) is defined as \(S(i,j) = b_i - a_i^T v_j\) where \(\{ x | b_i - a_i^T x \ge 0 \}\) is the inequality defining the ith facet of Q and \(v_j\) is the jth vertex of P; see, e.g., [29]. Note that the standard slack matrix corresponds to the particular case of equal inner and outer polytopes.
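The definition above can be sketched numerically as follows. The example builds the standard slack matrix (inner and outer polytopes equal) of a regular hexagon. The helper names `generalized_slack_matrix` and `polygon_facets`, the counterclockwise vertex ordering, and the `1e-12` rounding threshold are assumptions made for illustration.

```python
import numpy as np

def generalized_slack_matrix(A, b, V):
    """S[i, j] = b[i] - A[i] @ V[j]: slack of vertex j of P in facet i of Q."""
    return b[:, None] - A @ V.T

def polygon_facets(V):
    """Facets {x : b_i - a_i^T x >= 0} of a convex polygon.

    V holds the vertices in counterclockwise order; facet i is the edge
    from V[i] to V[i+1] (indices taken modulo the number of vertices).
    """
    d = np.roll(V, -1, axis=0) - V                 # edge directions
    inward = np.column_stack([-d[:, 1], d[:, 0]])  # left normals point inside
    A = -inward
    b = -np.einsum("ij,ij->i", inward, V)          # so b_i - a_i^T x = inward_i . (x - V[i])
    return A, b

n = 6
angles = 2 * np.pi * np.arange(n) / n
V = np.column_stack([np.cos(angles), np.sin(angles)])  # regular hexagon
A, b = polygon_facets(V)
S = generalized_slack_matrix(A, b, V)
S[np.abs(S) < 1e-12] = 0.0  # remove floating-point noise on the exact zeros
print(np.round(S, 3))
```

Each row of `S` has exactly two zeros, one for each vertex lying on the corresponding edge, and the matrix has rank 3, as expected for the slack matrix of a two-dimensional polytope.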

Because it requires a rather high computational cost for larger n, we stopped testing the conjecture at \(n=78\). In fact, running this experiment on a regular laptop took about two weeks.

As a vertex gets closer and closer to the convex hull generated by the other vertices, it becomes numerically harder and harder to decide whether or not it belongs to the convex hull.

References

Arora, S., Ge, R., Kannan, R., Moitra, A.: Computing a nonnegative matrix factorization—provably. in Proceedings of the 44th Symposium on Theory of Computing, STOC ’12, pp. 145–162, (2012)

Beasley, L., Laffey, T.: Real rank versus nonnegative rank. Linear Algebra Appl. 431(12), 2330–2335 (2009)

Beasley, L., Lee, T., Klauck, H., Theis, D.: Dagstuhl report 13082: communication complexity, linear optimization, and lower bounds for the nonnegative rank of matrices (2013). arXiv:1305.4147

Ben-Tal, A., Nemirovski, A.: On polyhedral approximations of the second-order cone. Math. Oper. Res. 26(2), 193–205 (2001)

Bocci, C., Carlini, E., Rapallo, F.: Perturbation of matrices and nonnegative rank with a view toward statistical models. SIAM J. Matrix Anal. Appl. 32(4), 1500–1512 (2011)

Boutsidis, C., Gallopoulos, E.: SVD based initialization: a head start for nonnegative matrix factorization. Pattern Recognit. 41(4), 1350–1362 (2008)

Brown, C.W.: QEPCAD B: a program for computing with semi-algebraic sets using CADs. ACM SIGSAM Bull. 37(4), 97–108 (2003)

Carlini, E., Rapallo, F.: Probability matrices, non-negative rank, and parameterization of mixture models. Linear Algebra Appl. 433, 424–432 (2010)

Cichocki, A., Amari, S.-I., Zdunek, R., Phan, A.: Non-negative Matrix and Tensor Factorizations: Applications to Exploratory Multi-way Data Analysis and Blind Source Separation. Wiley, London (2009)

Cichocki, A., Phan, A.H.: Fast local algorithms for large scale nonnegative matrix and tensor factorizations. IEICE Trans. Fundam. Electron. E92–A(3), 708–721 (2009)

Cichocki, A., Zdunek, R., Amari, S.-i.: Hierarchical ALS Algorithms for Nonnegative Matrix and 3D Tensor Factorization. Lecture notes in computer science (Springer, 2007), pp. 169–176

Cohen, J., Rothblum, U.: Nonnegative ranks, decompositions and factorization of nonnegative matrices. Linear Algebra Appl. 190, 149–168 (1993)

Conforti, M., Cornuéjols, G., Zambelli, G.: Extended formulations in combinatorial optimization. 4OR A Q.J. Oper. Res. 10(1), 1–48 (2010)

de Caen, D., Gregory, D.A., Pullman, N.J.: The boolean rank of zero-one matrices. in Proceedings of Third Caribbean Conference on Combinatorics and Computing (Barbados), pp. 169–173 (1981)

Fawzi, H., Gouveia, J., Parrilo, P., Robinson, R., Thomas, R.: Positive Semidefinite Rank (2014). arXiv:1407.4095

Fiorini, S., Kaibel, V., Pashkovich, K., Theis, D.: Combinatorial bounds on nonnegative rank and extended formulations. Discret. Math. 313(1), 67–83 (2013)

Fiorini, S., Massar, S., Pokutta, S., Tiwary, H., de Wolf, R.: Linear Versus Semidefinite Extended Formulations: Exponential Separation and Strong Lower Bounds. in Proceedings of the Forty-Fourth Annual ACM Symposium on Theory of Computing, ACM, pp. 95–106, (2012)

Fiorini, S., Rothvoss, T., Tiwary, H.: Extended formulations for polygons. Discret. Comput. Geom. 48(3), 658–668 (2012)

Gillis, N.: Sparse and unique nonnegative matrix factorization through data preprocessing. J. Mach. Learn. Res. 13(Nov), 3349–3386 (2012)

Gillis, N.: The why and how of nonnegative matrix factorization. In: Suykens, J., Signoretto, M., Argyriou, A. (eds.) Regularization, Optimization, Kernels, and Support Vector Machines. Machine Learning and Pattern Recognition Series. Chapman & Hall/CRC, London (2014)

Gillis, N., Glineur, F.: Using underapproximations for sparse nonnegative matrix factorization. Pattern Recognit. 43(4), 1676–1687 (2010)

Gillis, N., Glineur, F.: Accelerated multiplicative updates and hierarchical ALS algorithms for nonnegative matrix factorization. Neural Comput. 24(4), 1085–1105 (2012)

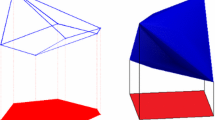

Gillis, N., Glineur, F.: On the geometric interpretation of the nonnegative rank. Linear Algebra Appl. 437(11), 2685–2712 (2012)

Gillis, N., Vavasis, S.: Semidefinite programming based preconditioning for more robust near-separable nonnegative matrix factorization. SIAM J. Optim. 25, 677–698 (2015)

Goemans, M.: Smallest Compact Formulation for the Permutahedron (2009). http://math.mit.edu/~goemans/PAPERS/permutahedron

Gouveia, J.: Personal Communication (2014)

Gouveia, J., Fawzi, H., Robinson, R.: Rational and Real Positive Semidefinite Rank can be Different (2014). arXiv:1404.4864

Gouveia, J., Parrilo, P., Thomas, R.: Lifts of convex sets and cone factorizations. Math. Oper. Res. 38(2), 248–264 (2013)

Gouveia, J., Robinson, R., Thomas, R.: Worst-case Results for Positive Semidefinite Rank (2013). arXiv:1305.4600

Gregory, D.A., Pullman, N.J.: Semiring rank: boolean rank and nonnegative rank factorizations. J. Combin. Inform. Syst. Sci. 8(3), 223–233 (1983)

Hrubeš, P.: On the nonnegative rank of distance matrices. Inf. Process. Lett. 112(11), 457–461 (2012)

Janecek, A., Tan, Y.: Iterative improvement of the multiplicative update NMF algorithm using nature-inspired optimization. in Seventh International Conference on Natural Computation vol. 3 (2011), pp. 1668–1672

Janecek, A., Tan, Y.: Swarm intelligence for non-negative matrix factorization. Int. J. Swarm Intell. Res. 2(4), 12–34 (2011)

Janecek, A., Tan, Y.: Using population based algorithms for initializing nonnegative matrix factorization. Adv. Swarm Intell. 6729, 307–316 (2011)

Kaibel, V.: Extended formulations in combinatorial optimization. Optima 85, 2–7 (2011)

Kaibel, V., Weltge, S.: A Short Proof that the Extension Complexity of the Correlation Polytope Grows Exponentially (2013). arXiv:1307.3543

Kim, J., He, Y., Park, H.: Algorithms for nonnegative matrix and tensor factorizations: a unified view based on block coordinate descent framework. J. Global Optim. 58(2), 285–319 (2014)

Kim, J., Park, H.: Fast nonnegative matrix factorization: an active-set-like method and comparisons. SIAM J. Sci. Comput. 33(6), 3261–3281 (2011)

Lee, D., Seung, H.: Learning the parts of objects by nonnegative matrix factorization. Nature 401, 788–791 (1999)

Lee, D., Seung, H.: Algorithms for non-negative matrix factorization. In: Advances in Neural Information Processing Systems, vol. 13, pp. 556–562 (2001)

Lee, T., Shraibman, A.: Lower Bounds in Communication Complexity. Found. Trends Theor. Comput. Sci. 3(4), 263–399 (2007)

Moitra, A.: An Almost Optimal Algorithm for Computing Nonnegative Rank. in Proceedings of the 24th Annual ACM-SIAM Symposium on Discrete Algorithms (SODA ’13), pp. 1454–1464 (2013)

Oelze, M., Vandaele, A., Weltge, S.: Computing the Extension Complexities of all 4-Dimensional 0/1-polytopes (2014). arXiv:1406.4895

Padrol, A., Pfeifle, J.: Polygons as Slices of Higher-Dimensional Polytopes (2014). arXiv:1404.2443

Pirlot, M.: General local search methods. Eur. J. Oper. Res. 92(3), 493–511 (1996)

Rothvoss, T.: The Matching Polytope has Exponential Extension Complexity (2013). arXiv:1311.2369

Shitov, Y.: Sublinear Extensions of Polygons (2014). arXiv:1412.0728

Shitov, Y.: An upper bound for nonnegative rank. J. Combin. Theory Ser. A 122, 126–132 (2014)

Shitov, Y.: Nonnegative Rank Depends on the Field (2015). arXiv:1505.01893

Takahashi, N., Hibi, R.: Global convergence of modified multiplicative updates for nonnegative matrix factorization. Comput. Optim. Appl. 57(2), 417–440 (2014)

Thomas, L.: Rank factorization of nonnegative matrices. SIAM Rev. 16(3), 393–394 (1974)

Vandaele, A., Gillis, N., Glineur, F.: On the Linear Extension Complexity of Regular n-gons (2015). arXiv:1505.08031

Vavasis, S.: On the complexity of nonnegative matrix factorization. SIAM J. Optim. 20(3), 1364–1377 (2010)

Watson, T.: Sampling Versus Unambiguous Nondeterminism in Communication Complexity (2014). http://www.cs.toronto.edu/~thomasw/papers/nnr

Yannakakis, M.: Expressing combinatorial optimization problems by linear programs. J. Comput. Syst. Sci. 43(3), 441–466 (1991)

Zdunek, R.: Initialization of nonnegative matrix factorization with vertices of convex polytope. In: Artificial Intelligence and Soft Computing, vol. 7267, pp. 448–455. Lecture Notes in Computer Science (2012)

Acknowledgments

The authors would like to thank the reviewers and the editor for their insightful comments which helped improve the paper.

Additional information

This paper presents research results of the Belgian Network DYSCO (Dynamical Systems, Control, and Optimization), funded by the Interuniversity Attraction Poles Programme initiated by the Belgian Science Policy Office, and of the Concerted Research Action (ARC) programme supported by the Federation Wallonia-Brussels (contract ARC 14/19-060).

Appendices

Appendix: Sensitivity to the parameters \(\alpha \) and \({\varDelta }t\)

In this section, we present numerical results showing that the heuristics are not overly sensitive (in terms of the number of exact NMF’s found) to the parameters \(\alpha \) and \({\varDelta }t\) of the local search heuristic (Algorithm FR), as long as they are chosen sufficiently large; see Tables 8 and 9. This is why we selected the rather conservative values of \(\alpha = 0.99\) and \({\varDelta }t = 1\) in this paper.

In practice, however, it is advisable to start the heuristics with smaller values for \(\alpha \) and \({\varDelta }t\) and to increase them progressively if the heuristic fails to identify exact NMF’s. For easily factorizable matrices (such as the randomly generated ones), large parameters are unnecessary, whereas for difficult matrices, values of \(\alpha \) and \({\varDelta }t\) that are too small prevent the heuristics from finding exact NMF’s because convergence of NMF algorithms can, in some cases, be too slow.
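The progressive strategy suggested above could be organized as in the following minimal sketch. The function `escalate`, the callback signature `run_heuristic(alpha, dt)` (returning the factors on success and `None` on failure), and the intermediate schedule values are hypothetical; only the final pair \(\alpha = 0.99\), \({\varDelta }t = 1\) comes from the paper. The `toy_heuristic` is a stand-in, not one of the heuristics described here.

```python
def escalate(run_heuristic, schedule):
    """Try (alpha, dt) pairs in increasing order and stop at the first success.

    run_heuristic is a hypothetical callback that returns the factors (W, H)
    when an exact NMF is found and None otherwise.
    """
    for alpha, dt in schedule:
        factors = run_heuristic(alpha, dt)
        if factors is not None:
            return alpha, dt, factors
    return None  # no parameter setting in the schedule succeeded

# Illustrative schedule: cheap settings first, the conservative values last.
schedule = [(0.9, 0.1), (0.95, 0.5), (0.99, 1.0)]

def toy_heuristic(alpha, dt):
    # Stand-in that only "succeeds" once alpha is large enough.
    return ("W", "H") if alpha >= 0.95 else None

outcome = escalate(toy_heuristic, schedule)
print(outcome)
```

With this arrangement, easy matrices are handled by the cheap settings, and the expensive ones are used only when the earlier attempts fail.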

Parameters for simulated annealing

Table 10 shows the performance of SA for the different initialization strategies described in Sect. 3.2 (for \(T_0 = 0.1\), \(T_{end} = 10^{-4}\), \(J=2\), \(N = 100\) and \(K = 50\)): SPARSE10 works best on average, hence we keep this initialization for SA. In particular, SPARSE10 is able to compute exact NMF’s of 32-G, whereas the other initializations have much more difficulty (only SPARSE00 finds one exact NMF).

Table 11 shows the performance for different values of \(T_{end}\) (for \(J=2\), \(N = 100\) and \(K = 50\)): it appears that the value \(T_{end} = 10^{-4}\) for the final temperature works well.

Table 12 shows the performance for different values of N and K, for \(T_{end} = 10^{-4}\) and \(J = 2\). It seems that \(K = 50\) and \(N = 100\) is a good compromise between the number of exact NMF’s found and the computational time.

Table 13 shows the performance for different values of J (for \(T_{end} = 10^{-4}\), \(K = 50\) and \(N = 100\)), and shows that \(J = 2\) performs the best.

Parameters for the rank-by-rank heuristic

Table 14 shows the performance of RBR for the different initialization strategies (for \(N = 100\) and \(K = 50\)): SPARSE10 works best on average. As for SA, it makes it possible to compute exact NMF’s of 32-G (6/10), while all other initializations fail.

Table 15 gives the results for several values of the parameters K and N. It is interesting to observe that when K gets larger, the heuristic performs rather poorly in some cases (e.g., for the UDISJ6 matrix). The reason is that when K increases, the heuristic tends to generate similar solutions: the ones obtained with Algorithm getRankPlusOne initialized with the best solution that can be obtained by combining the rank-\((k-1)\) solution with a rank-one one. In other words, the search domain that can be explored by RBR is reduced when K increases.

Initialization for the hybridization

Again the best initialization strategy is SPARSE10. However, it is interesting to note that Hybrid is less sensitive to initialization than SA and RBR. In fact, except for 32-G with RNDCUBE and LEDM32 with SPARSE01, it was able to compute exact NMF’s in all situations. In other words, as shown in Table 16, Hybrid is a more robust strategy than RBR and SA although it is computationally more expensive on average.

Cite this article

Vandaele, A., Gillis, N., Glineur, F. et al. Heuristics for exact nonnegative matrix factorization. J Glob Optim 65, 369–400 (2016). https://doi.org/10.1007/s10898-015-0350-z

DOI: https://doi.org/10.1007/s10898-015-0350-z