Abstract

We prove the existence of small amplitude, time-quasi-periodic solutions (invariant tori) for the incompressible Navier–Stokes equation on the d-dimensional torus \(\mathbb T^d\), with a small, quasi-periodic in time external force. We also show that they are orbitally and asymptotically stable in \(H^s\) (for s large enough). More precisely, for any initial datum which is close to the invariant torus, there exists a unique global in time solution which stays close to the invariant torus for all times. Moreover, the solution converges asymptotically to the invariant torus for \(t \rightarrow + \infty \), with an exponential rate of convergence \(O( e^{- \alpha t })\) for any arbitrary \(\alpha \in (0, 1)\).


1 Introduction and Main Results

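We consider the Navier–Stokes equation for an incompressible fluid on the d-dimensional torus \(\mathbb T^d\), \(d \ge 2\), \(\mathbb T:= \mathbb R/ 2 \pi \mathbb Z\),

$$\begin{aligned} \partial _t u - \Delta u + u \cdot \nabla u + \nabla p = \varepsilon f(\omega t, x), \qquad \mathrm{div}(u) = 0, \end{aligned}$$(1.1)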

where \(\varepsilon \in (0, 1)\) is a small parameter, the frequency \(\omega = (\omega _1, \ldots , \omega _\nu ) \in \mathbb R^\nu \) is a \(\nu \)-dimensional vector and \(f : \mathbb T^\nu \times \mathbb T^d \rightarrow \mathbb R^d\) is a smooth quasi-periodic external force. The unknowns of the problem are the velocity field \(u = (u_1, \ldots , u_d) : \mathbb R\times \mathbb T^d \rightarrow \mathbb R^d\) and the pressure \(p : \mathbb R\times \mathbb T^d \rightarrow \mathbb R\). For convenience, we set the viscosity parameter in front of the Laplacian equal to one. We assume that f has zero space-time average, namely

$$\begin{aligned} \int _{\mathbb T^\nu \times \mathbb T^d} f(\varphi , x)\, d \varphi \, d x = 0. \end{aligned}$$(1.2)

The purpose of the present paper is to show the existence and the stability of smooth quasi-periodic solutions of Eq. (1.1). More precisely, we show that if f is a sufficiently regular vector field satisfying (1.2), \(\varepsilon \) is sufficiently small and \(\omega \in \mathbb R^\nu \) is Diophantine\(^{1}\), i.e.

then Eq. (1.1) admits smooth quasi-periodic solutions (also referred to as invariant tori) \(u_\omega (t, x) = U(\omega t, x)\), \(p_\omega (t, x) = P(\omega t, x)\), \(U : \mathbb T^\nu \times \mathbb T^d \rightarrow \mathbb R^d\), \(P : \mathbb T^\nu \times \mathbb T^d \rightarrow \mathbb R\), of size \(O(\varepsilon )\), oscillating with the same frequency vector \(\omega \in \mathbb R^\nu \) as the forcing term. If the forcing term has zero average in x, i.e.

$$\begin{aligned} \int _{\mathbb T^d} f(\varphi , x)\, d x = 0, \quad \forall \varphi \in \mathbb T^\nu , \end{aligned}$$(1.4)

then the result holds for any frequency vector \(\omega \in \mathbb R^\nu \), without any non-resonance condition. Furthermore, we also show the orbital and the asymptotic stability of these quasi-periodic solutions in high Sobolev norms. More precisely, for any sufficiently regular initial datum which is \(\delta \)-close to the invariant torus (with respect to the \(H^s\) topology), the corresponding solution of (1.1) is global in time and satisfies the following properties.

- Orbital stability. For all times \(t \ge 0\), the distance in \(H^s\) between the solution and the invariant torus is of order \(O(\delta )\).

- Asymptotic stability. The solution converges asymptotically to the invariant torus in the high Sobolev norm \(\Vert \cdot \Vert _{H^s_x}\) as \(t \rightarrow + \infty \), with an exponential rate of convergence \(O(e^{- \alpha t})\) for any arbitrary \(\alpha \in (0, 1)\).

In order to state our main results precisely, we introduce some notation. For any vector \(a = (a_1, \ldots , a_p) \in \mathbb R^p\), we denote by |a| its Euclidean norm, namely \(|a| := \sqrt{a_1^2 + \cdots + a_p^2}\). Let \(d, n \in \mathbb N\) and let \(u \in L^2(\mathbb T^d, \mathbb R^n)\). Then u(x) can be expanded in Fourier series

$$\begin{aligned} u(x) = \sum _{\xi \in \mathbb Z^d} \widehat{u}(\xi ) e^{\mathrm{i} x \cdot \xi }, \end{aligned}$$

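where its Fourier coefficients \(\widehat{u}(\xi )\) are defined by

$$\begin{aligned} \widehat{u}(\xi ) := \frac{1}{(2 \pi )^d} \int _{\mathbb T^d} u(x) e^{- \mathrm{i} x \cdot \xi }\, d x, \quad \xi \in \mathbb Z^d. \end{aligned}$$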

For any \(s \ge 0\), we denote by \(H^s(\mathbb T^d, \mathbb R^n)\) the standard Sobolev space of functions \(u : \mathbb T^d \rightarrow \mathbb R^n\), equipped with the norm

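We also define the Sobolev space of functions with zero average

$$\begin{aligned} H^s_0(\mathbb T^d, \mathbb R^n) := \Big \{ u \in H^s(\mathbb T^d, \mathbb R^n) : \int _{\mathbb T^d} u(x)\, d x = 0 \Big \}. \end{aligned}$$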

Moreover, given a Banach space \((X, \Vert \cdot \Vert _X)\) and an interval \(\mathcal{I} \subseteq \mathbb R\), we denote by \(\mathcal{C}^0_b(\mathcal{I}, X)\) the space of bounded, continuous functions \(u : \mathcal{I} \rightarrow X\), equipped with the sup-norm

$$\begin{aligned} \Vert u \Vert _{\mathcal{C}^0_t X} := \sup _{t \in \mathcal{I}} \Vert u(t) \Vert _X. \end{aligned}$$

For any integer \(k \ge 1\), \(\mathcal{C}^k_b(\mathcal{I}, X)\) is the space of k-times differentiable functions \(u : \mathcal{I} \rightarrow X\) with continuous and bounded derivatives equipped with the norm

In a similar way we define the spaces \(\mathcal{C}^0(\mathbb T^\nu , X)\), \(\mathcal{C}^k(\mathbb T^\nu , X)\), \(k \ge 1\) and the corresponding norms \(\Vert \cdot \Vert _{\mathcal{C}^0_\varphi X}\), \(\Vert \cdot \Vert _{\mathcal{C}^k_\varphi X}\) (where \(\mathbb T^\nu \) is the \(\nu \)-dimensional torus). We also denote by \(\mathcal{C}^N(\mathbb T^\nu \times \mathbb T^d, \mathbb R^d)\) the space of N-times continuously differentiable functions \(\mathbb T^\nu \times \mathbb T^d \rightarrow \mathbb R^d\) equipped with the standard \(\mathcal{C}^N\) norm \(\Vert \cdot \Vert _{\mathcal{C}^N}\).
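The Fourier-side definitions above can be made concrete with a short numerical sketch. It is a minimal illustration for d = 2 only, and it assumes two conventions that the extracted text does not fix: the Sobolev weight \(\langle \xi \rangle := \max \{1, |\xi |\}\) and no extra \((2\pi )^d\) factor in the norm. The helper names `fourier_coefficients` and `sobolev_norm` are hypothetical.

```python
import cmath
import itertools
import math


def fourier_coefficients(u, M=8):
    """Approximate hat{u}(xi) on T^2 = (R / 2 pi Z)^2 from samples on an M x M grid.

    The Riemann sum (1 / M^2) sum_x u(x) e^{-i x . xi} equals
    (2 pi)^{-2} int u(x) e^{-i x . xi} dx exactly for trigonometric
    polynomials whose frequencies satisfy |xi_k| < M / 2.
    """
    h = 2 * math.pi / M
    freqs = range(-(M // 2) + 1, M // 2)
    coeffs = {}
    for xi in itertools.product(freqs, repeat=2):
        total = 0j
        for k1, k2 in itertools.product(range(M), repeat=2):
            x1, x2 = k1 * h, k2 * h
            total += u(x1, x2) * cmath.exp(-1j * (xi[0] * x1 + xi[1] * x2))
        coeffs[xi] = total / M**2
    return coeffs


def sobolev_norm(coeffs, s):
    """H^s norm from Fourier coefficients, with the assumed weight <xi> = max(1, |xi|)."""
    total = 0.0
    for xi, c in coeffs.items():
        weight = max(1.0, math.hypot(*xi))
        total += weight ** (2 * s) * abs(c) ** 2
    return math.sqrt(total)


def u(x1, x2):
    # Zero-average example on T^2, hence an element of H^s_0 for every s.
    return math.cos(x1) + 2 * math.sin(x1 + x2)


coeffs = fourier_coefficients(u)
print(abs(coeffs[(0, 0)]))            # zero average, up to rounding
print(sobolev_norm(coeffs, 0) ** 2)   # mean of |u|^2, i.e. 1/2 + 2
print(sobolev_norm(coeffs, 1) ** 2)   # 1/4 + 1/4 + 2 * (1 + 1)
```

Because the weight is at least one, \(\Vert u \Vert _{H^{s-1}_x} \le \Vert u \Vert _{H^s_x}\). This monotonicity is used repeatedly in Sect. 4.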

Notation. Throughout the paper, the notation \(A \lesssim B\) means that there exists a constant C, which may depend on the number of frequencies \(\nu \), the dimension d of the torus, the constant \(\gamma \) appearing in the Diophantine condition (1.3) and the \(\mathcal{C}^N\) norm \(\Vert f \Vert _{\mathcal{C}^N}\) of the forcing term, such that \(A \le C B\). Given n positive real numbers \(s_1, \ldots , s_n > 0\), we write \(A \lesssim _{s_1, \ldots , s_n} B\) if there exists a constant \(C = C(s_1, \ldots , s_n) > 0\) (possibly depending also on \(d, \nu , \gamma , \Vert f \Vert _{\mathcal{C}^N}\)) such that \(A \le C B\).

We are now ready to state the main results of our paper.

Theorem 1.1

(Existence of quasi-periodic solutions) Let \(s > d/2 + 1\), \(N > \frac{3 \nu }{2} + s + 2\), let \(\omega \in \mathbb R^\nu \) be Diophantine (see (1.3)), and assume that the forcing term f belongs to \( \mathcal{C}^N(\mathbb T^\nu \times \mathbb T^d, \mathbb R^d)\) and satisfies (1.2). Then there exist \(\varepsilon _0 = \varepsilon _0(f, s, d, \nu ) \in (0, 1)\) small enough and a constant \(C = C(f, s, d, \nu ) > 0\) large enough such that, for any \(\varepsilon \in (0, \varepsilon _0)\), there exist \(U \in \mathcal{C}^1(\mathbb T^\nu , H^s(\mathbb T^d, \mathbb R^d))\), \(P \in \mathcal{C}^0(\mathbb T^\nu , H^s(\mathbb T^d, \mathbb R))\) satisfying

such that \((u_\omega (t, x), p_\omega ( t, x)) : = (U(\omega t, x), P(\omega t, x))\) solves the Navier–Stokes equation (1.1) and

If the forcing term f has zero space average, i.e. it satisfies (1.4), then the same statement holds for any frequency vector \(\omega \in \mathbb R^\nu \) and \(U(\varphi , x)\) satisfies

Theorem 1.2

(Stability) Let \(\alpha \in (0, 1)\), \(s > d/2 + 1\), \(N > \frac{3 \nu }{2} + s + 2\), and let \(u_\omega \), \(p_\omega \) be given by Theorem 1.1. Then there exist \(\delta = \delta (f , s, \alpha , d, \nu ) \in (0, 1)\) small enough and a constant \(C = C(f, s, \alpha , d, \nu ) > 0\) large enough such that, for \(\varepsilon \le \delta \) and for any initial datum \(u_0 \in H^s(\mathbb T^d, \mathbb R^d)\) satisfying

there exists a unique global solution (u, p) of the Navier–Stokes equation (1.1) with initial datum \(u(0, x) = u_0(x)\) which satisfies

for any \(t \ge 0\).

The investigation of the Navier–Stokes equation with a time-periodic external force dates back to Serrin [40], Yudovich [41], Lions [30], Prodi [36] and Prouse [37]. In these papers the authors proved the existence of weak periodic solutions on bounded domains, oscillating with the same frequency as the external force. The existence of weak quasi-periodic solutions in dimension two has been proved by Prouse [38]. More recently, these results have been extended to unbounded domains by Maremonti [27], Maremonti-Padula [28] and Salvi [39], and then by Galdi [19, 20], Galdi-Silvestre [21], Galdi-Kyed [22] and Kyed [32]. We point out that some of the aforementioned results need no smallness assumption on the forcing term, and therefore the periodic solutions obtained are not small in size, see for instance [28, 36,37,38,39,40,41]. The asymptotic stability of periodic solutions (also referred to as the attainability property) has also been investigated in [27, 28], but only with respect to the \(L^2\)-norm and with a rate of convergence \(O(t^{- \eta })\) for some constant \(\eta > 0\). More recently, Galdi and Hishida [23] proved the asymptotic stability for the Navier–Stokes equation with a translation velocity term, using Lorentz spaces, and they provided a rate of convergence which is essentially \(O(t^{- \frac{1}{2} + \varepsilon })\). In the present paper we consider the Navier–Stokes equation on the d-dimensional torus with a small, quasi-periodic in time external force. We show the existence of smooth quasi-periodic solutions (invariant tori) of small amplitude, and we prove their orbital and asymptotic stability in \(H^s\) for s large enough (namely \(s > d/2 + 1\)). Furthermore, the rate of convergence to the invariant torus in \(H^s\) as \(t \rightarrow + \infty \) is of order \(O(e^{- \alpha t})\) for any arbitrary \(\alpha \in (0, 1)\). 
To the best of our knowledge, this is the first result of this kind.

It is also worth mentioning that the existence of quasi-periodic solutions for dispersive and hyperbolic-type PDEs, which is the subject of KAM (Kolmogorov-Arnold-Moser) theory, is a more difficult matter, due to the so-called small divisors problem. The study of time-periodic and quasi-periodic solutions of PDEs started in the late 1980s with the pioneering papers of Kuksin [33], Wayne [43] and Craig-Wayne [13]; see also [31, 34] for generalizations to PDEs with unbounded nonlinearities. We refer to the recent review [7] for a complete list of references.

Many PDEs arising from fluid dynamics, like the water waves equations or the Euler equation, are fully nonlinear or quasi-linear (the nonlinear part contains as many derivatives as the linear part). The breakthrough idea for dealing with this kind of PDE, based on pseudo-differential calculus and micro-local analysis, was introduced by Iooss, Plotnikov and Toland [25] for the problem of finding periodic solutions of the water waves equations. The methods developed in [25], combined with a KAM-normal form procedure, have led to a general method for PDEs in one space dimension, which allows one to construct quasi-periodic solutions of quasilinear and fully nonlinear PDEs, see [1, 2, 11, 17] and references therein. The extension of KAM theory to higher space dimension \(d > 1\) is a difficult matter due to very strong resonance phenomena, often related to the high multiplicity of eigenvalues. The first breakthrough results in this direction (for equations with perturbations which do not contain derivatives) were obtained by Eliasson and Kuksin [16] and by Bourgain [12] (see also Berti-Bolle [8, 9], Geng-Xu-You [24], Procesi-Procesi [35], Berti-Corsi-Procesi [10]).

Extending KAM theory to PDEs with unbounded perturbations in higher space dimension is one of the main open problems in the field. Up to now, this has been achieved only in a few examples, see [4,5,6, 15, 18, 29], and recently for the 3D Euler equation [3], which is the most physically meaningful example.

For the Navier–Stokes equation, unlike in the aforementioned papers on KAM for PDEs, the existence of quasi-periodic solutions is not a small divisors problem and can be established by a classical fixed point argument. This is because the Navier–Stokes equation is a parabolic PDE, and the dissipation prevents small divisors from arising. In the same spirit, it is also worth mentioning [14, 42], in which the authors investigate quasi-periodic solutions of some PDEs with singular damping, where the small divisors problem is avoided thanks to the damping term. We also point out that the present paper is the first example in which the stability of invariant tori in high Sobolev norms is proved for all times (and it is even an asymptotic stability). This is possible because the dissipation allows one to prove strong time-decay estimates, from which orbital and asymptotic stability follow. In the framework of dispersive and hyperbolic PDEs, the orbital stability of invariant tori is usually proved only for large, but finite, times by using normal form techniques. The first result in this direction was proved in [26]. In the remaining part of the introduction, we sketch the main points of our proof.

As explained above, the absence of small divisors is due to the fact that the Navier–Stokes equation is a parabolic PDE. More precisely, this is related to the invertibility properties of the linear operator \(L_\omega := \omega \cdot \partial _\varphi - \Delta \) (where \(\omega \cdot \partial _\varphi := \sum _{i = 1}^\nu \omega _i \partial _{\varphi _i}\)) acting on Sobolev spaces of functions \(u(\varphi , x)\), \((\varphi , x) \in \mathbb T^\nu \times \mathbb T^d\), with zero average with respect to x. The eigenvalues of \(L_\omega \) are \(\mathrm{i} \omega \cdot \ell + |j|^2\), \(\ell \in \mathbb Z^\nu \), \(j \in \mathbb Z^d {\setminus } \{ 0 \}\), and \(|\mathrm{i} \omega \cdot \ell + |j|^2| \ge |j|^2 \ge 1\) for every \(\omega \in \mathbb R^\nu \). Hence the inverse of \(L_\omega \) gains two space derivatives, see Lemma 3.2. This is sufficient to perform a fixed point argument on the map \(\Phi \) defined in (3.13), from which one deduces the existence of smooth quasi-periodic solutions of small amplitude. The orbital and asymptotic stability of the quasi-periodic solutions constructed in Sect. 3 are proved in Sect. 4. More precisely, we show that for any initial datum \(u_0\) which is \(\delta \)-close to the quasi-periodic solution \(u_\omega (0, x)\) in \(H^s\) norm (and such that \(u_0 - u_\omega (0, \cdot )\) has zero average), there exists a unique solution (u, p) such that

for any \(t \ge 0\). This is exactly the content of Theorem 1.2, which easily follows from Proposition 4.1. Proposition 4.1 is also proved by a fixed point argument, applied to the nonlinear map \(\Phi \) defined in (4.31) in the weighted Sobolev spaces \(\mathcal{E}_s\) (see (4.14)) defined by the norm

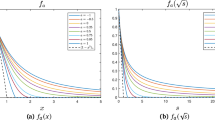

where \(\alpha \in (0, 1)\) is a fixed constant. The fixed point argument relies on some dispersive-type estimates for the heat propagator \(e^{t \Delta }\), which are proved in Sect. 4.1. The key estimates are the following.

1. For any \(u_0 \in H^{s - 1}(\mathbb T^d, \mathbb R^d)\) with zero average and for any integer \(n \ge 1\), \(\alpha \in (0, 1)\), \(t > 0\), one has

$$\begin{aligned} \Vert e^{t \Delta } u_0 \Vert _{H^s_x} \le C(n, \alpha ) t^{- \frac{n}{2}} e^{- \alpha t} \Vert u_0 \Vert _{H^{s - 1}_x} \end{aligned}$$(1.7)

for some constant \(C(n, \alpha ) > 0\) (see Lemma 4.2). This estimate states that the heat propagator gains one space derivative, with the time-decay factor \(t^{- \frac{n}{2}} e^{- \alpha t}\). Note that, without any gain of derivatives on \(u_0\), the exponential decay is stronger, namely \(e^{- t}\), see Lemma 4.2-(i).

2. For any \(f \in \mathcal{E}_{s - 1}\),

$$\begin{aligned} \Big \Vert \int _0^t e^{(t - \tau )\Delta } f(\tau , \cdot )\, d\tau \Big \Vert _{H^s_x} \le C(\alpha ) e^{- \alpha t} \Vert f \Vert _{\mathcal{E}_{s - 1}} \end{aligned}$$(1.8)

for some constant \(C(\alpha ) > 0\) (see Proposition 4.5). This estimate states that the integral term appearing in the Duhamel formula (see (4.31)) gains one space derivative with respect to f(t, x) and keeps the same exponential decay in time as f(t, x).

We also remark that the constants \(C(n, \alpha )\), \(C(\alpha )\) appearing in the estimates (1.7), (1.8) tend to \(\infty \) when \(\alpha \rightarrow 1\). This is the reason why it is not possible to get a decay \(O(e^{- t})\) in the asymptotic stability estimate provided in Theorem 1.2.
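The mechanism behind (1.8), and behind the blow-up of \(C(\alpha )\) as \(\alpha \rightarrow 1\), is already visible on a single Fourier mode. For \(\lambda = |\xi |^2 \ge 1\) and a forcing \(e^{- \alpha \tau }\), an explicit integration gives \(\int _0^t e^{-(t - \tau ) \lambda } e^{- \alpha \tau }\, d\tau = (e^{- \alpha t} - e^{- \lambda t})/(\lambda - \alpha ) \le e^{- \alpha t}/(\lambda - \alpha )\). Multiplying by \(|\xi |\) (the gained derivative) produces the factor \(|\xi |/(|\xi |^2 - \alpha )\), which is decreasing for \(|\xi | \ge 1\) and therefore bounded by \(1/(1 - \alpha )\). The Python sketch below only checks this scalar computation numerically. It illustrates the mechanism and is not the proof of Proposition 4.5; the function names are hypothetical.

```python
import math


def duhamel_mode_simpson(t, lam, alpha, steps=20000):
    """Composite Simpson rule for int_0^t exp(-(t - tau) lam) exp(-alpha tau) dtau."""
    if steps % 2:
        steps += 1
    h = t / steps

    def g(tau):
        return math.exp(-(t - tau) * lam - alpha * tau)

    total = g(0.0) + g(t)
    for k in range(1, steps):
        total += (4 if k % 2 else 2) * g(k * h)
    return total * h / 3


def duhamel_mode_exact(t, lam, alpha):
    """Closed form (e^{-alpha t} - e^{-lam t}) / (lam - alpha), for lam > alpha."""
    return (math.exp(-alpha * t) - math.exp(-lam * t)) / (lam - alpha)


def weighted_gain(r, t, alpha):
    """e^{alpha t} * |xi| * (Duhamel integral) for a mode with |xi| = r >= 1.

    Rewritten as r (1 - e^{-(r^2 - alpha) t}) / (r^2 - alpha) to avoid overflow.
    """
    lam = r * r
    return r * (-math.expm1(-(lam - alpha) * t)) / (lam - alpha)


for alpha in (0.5, 0.9, 0.99):
    worst = max(weighted_gain(r, t, alpha)
                for r in (1.0, math.sqrt(2), 2.0, 5.0)
                for t in (0.1, 1.0, 10.0, 1000.0))
    print(f"alpha={alpha}: sup ~ {worst:.3f}, bound 1/(1-alpha) = {1 / (1 - alpha):.3f}")
```

For \(|\xi | = 1\) and large t, the weighted quantity approaches \(1/(1 - \alpha )\). This is consistent with the remark above that the rate \(O(e^{- t})\) cannot be reached.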

The latter two estimates allow us to show in Proposition 4.9 that the map \(\Phi \) defined in (4.31) is a contraction. The proof of Theorem 1.2 is then concluded in Sect. 4.3.

It is also worth mentioning that our method does not cover the zero viscosity limit \(\mu \rightarrow 0\), where \(\mu \) is the usual viscosity parameter in front of the Laplacian (which, for convenience, we set equal to one). Indeed, some constants in our estimates blow up as \(\mu \rightarrow 0\). It would be very interesting to study this singular perturbation problem and to see whether one can recover the quasi-periodic solutions of the Euler equation constructed in [3].

As a concluding remark, we mention that the methods used in this paper also apply, with some technical modifications, to other parabolic-type equations. For instance, one could prove the existence of quasi-periodic solutions (Theorem 1.1) for a general fully nonlinear parabolic equation of the form

where N is a smooth nonlinearity, depending also on the second derivatives of u, which is at least quadratic with respect to \((u, \nabla u, \nabla ^2 u)\). Indeed, as explained above, this can be done by a fixed point argument, inverting the operator \(L_\omega := \omega \cdot \partial _\varphi - \Delta + m\). The inverse of this operator gains two space derivatives, and hence it compensates for the loss of two space derivatives in the nonlinearity.

We prefer in this paper to focus on the Navier–Stokes equation for clarity of exposition and since it is a very important physical model.

2 Functional Spaces

In this section we collect some standard technical tools which will be used in the proof of our results. For \(u = (u_1, \ldots , u_n) \in H^s(\mathbb T^d, \mathbb R^n)\), one has

The following standard algebra lemma holds.

Lemma 2.1

Let \(s > d/2\) and \(u, v \in H^s(\mathbb T^d, \mathbb R^n)\). Then \(u \cdot v \in H^s(\mathbb T^d, \mathbb R)\) (where \(\cdot \) denotes the standard scalar product on \(\mathbb R^n\)) and \(\Vert u \cdot v \Vert _{H^s_x} \lesssim _s \Vert u \Vert _{H^s_x} \Vert v \Vert _{H^s_x}\).

We also consider functions

which are in \(L^2\Big (\mathbb T^\nu , L^2(\mathbb T^d, \mathbb R^n)\Big )\). We can write the Fourier series of a function \(u \in L^2\Big (\mathbb T^\nu , L^2(\mathbb T^d, \mathbb R^n)\Big )\) as

where

By expanding also the function \(\widehat{u}(\ell , \cdot )\) in Fourier series, we get

and hence we can write

For any \(\sigma , s \ge 0\), we define the Sobolev space \(H^\sigma \Big (\mathbb T^\nu , H^s(\mathbb T^d, \mathbb R^n) \Big )\) as the space of functions \(u \in L^2\Big (\mathbb T^\nu , L^2(\mathbb T^d, \mathbb R^n) \Big )\) equipped with the norm

Similarly to (2.1), one has that for \(u = (u_1, \ldots , u_n) \in H^\sigma \Big (\mathbb T^\nu , H^s(\mathbb T^d, \mathbb R^n) \Big )\)

If \(\sigma >\nu /2\), then

Moreover, the following standard algebra property holds.

Lemma 2.2

Let \(\sigma > \frac{\nu }{2}\), \(s > \frac{d}{2}\), \(u, v \in H^\sigma \Big (\mathbb T^\nu , H^s(\mathbb T^d, \mathbb R^n) \Big )\). Then \(u \cdot v \in H^\sigma \Big (\mathbb T^\nu , H^s(\mathbb T^d, \mathbb R) \Big )\) and \(\Vert u \cdot v \Vert _{\sigma , s} \lesssim _{\sigma , s} \Vert u \Vert _{\sigma , s} \Vert v \Vert _{\sigma , s}\).

For any \(u \in L^2(\mathbb T^d, \mathbb R^n)\) we define the orthogonal projections \(\pi _0\) and \(\pi _0^\bot \) as

According to (2.9), (2.5), every function \(u \in L^2\Big (\mathbb T^\nu , L^2(\mathbb T^d, \mathbb R^n) \Big )\) can be decomposed as

Clearly if \(u \in H^\sigma \Big ( \mathbb T^\nu , H^s(\mathbb T^d, \mathbb R^n) \Big )\), \(\sigma , s \ge 0\), then

We also prove the following lemma that we shall apply in Sect. 4.

Lemma 2.3

Let \(\sigma > \nu /2\), \(U \in H^\sigma \Big (\mathbb T^\nu , H^s(\mathbb T^d, \mathbb R^n) \Big )\) and \(\omega \in \mathbb R^\nu \). Defining \(u_\omega (t, x) := U(\omega t, x)\), \((t, x) \in \mathbb R\times \mathbb T^d\), one has that \(u_\omega \in \mathcal{C}^0_b\Big (\mathbb R, H^s(\mathbb T^d, \mathbb R^n) \Big )\) and \(\Vert u_\omega \Vert _{\mathcal{C}^0_t H^s_x} \lesssim _\sigma \Vert U \Vert _{\sigma , s}\).

Proof

By the Sobolev embedding (2.8), and using that the map \(\mathbb R\rightarrow \mathbb T^\nu \), \(t \mapsto \omega t\) is continuous, one has that \(u_\omega \in \mathcal{C}^0_b\Big (\mathbb R, H^s(\mathbb T^d, \mathbb R^n) \Big )\) and

2.1 Leray Projector and Some Elementary Properties of the Navier–Stokes Equation

We introduce the space of zero-divergence vector fields

where the divergence has to be interpreted in the sense of distributions. The \(L^2\)-orthogonal projector onto this subspace of \(L^2(\mathbb T^d, \mathbb R^d)\) is called the Leray projector, and its explicit formula is given by

$$\begin{aligned} {\mathfrak {L}} := \mathrm{Id} + \nabla (- \Delta )^{- 1} \mathrm{div}, \end{aligned}$$(2.13)

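where the inverse of the Laplacian \((- \Delta )^{- 1}\) (on the space of zero average functions) is defined by

$$\begin{aligned} (- \Delta )^{- 1} u(x) := \sum _{\xi \in \mathbb Z^d {\setminus } \{ 0 \}} \frac{\widehat{u}(\xi )}{|\xi |^2} e^{\mathrm{i} x \cdot \xi }. \end{aligned}$$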

By expanding in Fourier series, the Leray projector \({\mathfrak {L}}\) can be written as

By the latter formula, one immediately deduces some elementary properties of the Leray projector \({\mathfrak {L}}\). One has

and for any Fourier multiplier \(\Lambda \), \(\Lambda u(x) = \sum _{\xi \in \mathbb Z^d} \Lambda (\xi ) \widehat{u}(\xi ) e^{\mathrm{i} x \cdot \xi }\), the commutator

Moreover

For later purposes, we now prove the following Lemma.

Lemma 2.4

(i) Let \(u, v \in H^1(\mathbb T^d, \mathbb R^d)\) with \(\mathrm{div}(u) = 0\). Then \(u \cdot \nabla v\) and \({\mathfrak {L}}(u \cdot \nabla v)\) have zero average.

(ii) Let \(\sigma > \nu /2\), \(s > d/2\), \(u \in H^\sigma \Big ( \mathbb T^\nu , H^s(\mathbb T^d, \mathbb R^d) \Big )\), \(v \in H^\sigma \Big ( \mathbb T^\nu , H^{s + 1}(\mathbb T^d, \mathbb R^d) \Big )\). Then \(u \cdot \nabla v \in H^\sigma \Big ( \mathbb T^\nu , H^s(\mathbb T^d, \mathbb R^d) \Big )\) and \(\Vert u \cdot \nabla v\Vert _{\sigma , s} \lesssim _{\sigma , s} \Vert u \Vert _{\sigma , s} \Vert v \Vert _{\sigma , s + 1}\).

Proof of (i)

By integrating by parts,

Proof of (ii)

For \(u = (u_1, \ldots , u_d)\), \(v = (v_1, \ldots , v_d)\), the vector field \(u \cdot \nabla v\) is given by

Then the claimed statement follows by (2.7) and the algebra Lemma 2.2.
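The Fourier-multiplier description of the Leray projector in Sect. 2.1 can be checked mode by mode. Since \(\nabla \) and \(\mathrm{div}\) act on \(e^{\mathrm{i} x \cdot \xi }\) as multiplication by \(\mathrm{i} \xi \), the operator \(\mathrm{Id} + \nabla (- \Delta )^{-1} \mathrm{div}\) acts on the coefficient \(\widehat{u}(\xi )\), \(\xi \ne 0\), as the orthogonal projection \(v \mapsto v - \xi (\xi \cdot v)/|\xi |^2\), and it leaves \(\widehat{u}(0)\) unchanged. The Python sketch below (function names hypothetical) checks three properties: the output is divergence free, the map is idempotent, and it does not increase the modulus of any coefficient. The last property is why \({\mathfrak {L}}\) is bounded on every \(H^s\).

```python
import math


def leray_symbol(xi, v):
    """Apply the Leray projector to the Fourier coefficient v = hat{u}(xi) in C^d.

    For xi != 0 this is the orthogonal projection onto the plane orthogonal to xi;
    the zero mode (the average of u) is left unchanged.
    """
    norm2 = sum(k * k for k in xi)
    if norm2 == 0:
        return list(v)
    dot = sum(k * c for k, c in zip(xi, v))  # xi . v, no conjugation: xi is real
    return [c - k * dot / norm2 for k, c in zip(xi, v)]


def modulus(v):
    """Hermitian length of a vector in C^d."""
    return math.sqrt(sum(abs(c) ** 2 for c in v))


xi = (1, -2, 3)
v = [1 + 2j, -0.5, 3j]
w = leray_symbol(xi, v)
# i * xi . w is the Fourier symbol of div(w); it vanishes up to rounding.
print(abs(sum(k * c for k, c in zip(xi, w))))
print(modulus(w) <= modulus(v))
```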

3 Construction of Quasi-Periodic Solutions

We look for quasi-periodic solutions \(u_\omega (t, x)\), \(p_\omega (t,x)\) of Eq. (1.1), oscillating with frequency \(\omega = (\omega _1, \ldots , \omega _\nu ) \in \mathbb R^\nu \), namely we look for \(u_\omega (t, x) := U(\omega t, x)\), \(p_\omega (t, x) := P(\omega t, x)\), where \(U : \mathbb T^\nu \times \mathbb T^d \rightarrow \mathbb R^d\) and \(P : \mathbb T^\nu \times \mathbb T^d \rightarrow \mathbb R\) are smooth functions. This leads to a functional equation for \(U(\varphi , x)\), \(P(\varphi , x)\) of the form

Taking the divergence of the first equation in (3.1), one gets

and, projecting onto the space of zero-divergence vector fields, one gets a closed equation for U of the form

where we recall the definitions (2.12), (2.13). According to the splitting (2.10), applying the projectors \(\pi _0, \pi _0^\bot \) to Eq. (3.3), one gets the decoupled equations

and

Then, since \(\omega \) is Diophantine (see (1.3)) and since \(\widehat{f}(0, 0) = 0\) by (1.2), the averaged equation (3.4) can be solved explicitly by setting

By (2.11) and using (1.3), one gets the estimate

Remark 3.1

(Non-resonance conditions) The Diophantine condition (1.3) on the frequency vector \(\omega \) is used only to solve the averaged equation (3.4). In order to solve Eq. (3.5) on the space of functions with zero average with respect to x, no non-resonance condition is required.
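The role of the Diophantine condition can be seen on the Fourier side. The precise display of (3.4) is not reproduced here; assume it takes the form \(\omega \cdot \partial _\varphi U_0 = \varepsilon \pi _0 f\) (the Leray projector leaves the zero mode unchanged, and \(\pi _0(U \cdot \nabla U) = 0\) by Lemma 2.4-(i)). Its solution with zero \(\varphi \)-average then has coefficients \(\widehat{U}_0(\ell ) = \varepsilon \widehat{f}(\ell , 0)/(\mathrm{i} \omega \cdot \ell )\), \(\ell \ne 0\), so the divisors \(\omega \cdot \ell \) occur only here. The Python sketch below uses the hypothetical frequency vector \(\omega = (1, \sqrt{2})\) and hypothetical helper names. For this \(\omega \), the identity \(|p^2 - 2q^2| \ge 1\) gives \(|\omega \cdot \ell | \, |\ell | \ge 1/(2\sqrt{2} + 1)\) for all \(\ell = (p, q) \ne 0\), a Diophantine-type bound of the kind required in (1.3).

```python
import itertools
import math

OMEGA = (1.0, math.sqrt(2.0))  # hypothetical Diophantine frequency vector (nu = 2)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def solve_averaged(f0_hat, eps, omega=OMEGA):
    """Solve omega . d_phi U0 = eps * pi_0 f coefficient-wise (assumed form of (3.4)).

    f0_hat maps ell in Z^nu to hat{f}(ell, 0), a vector in C^d. The compatibility
    condition hat{f}(0, 0) = 0 is required; the free constant hat{U0}(0) is set to 0.
    """
    if any(abs(c) > 1e-14 for c in f0_hat.get((0,) * len(omega), ())):
        raise ValueError("need hat f(0, 0) = 0, cf. (1.2)")
    u0_hat = {}
    for ell, coeff in f0_hat.items():
        if all(k == 0 for k in ell):
            continue
        divisor = 1j * dot(omega, ell)  # the small divisor i omega . ell
        u0_hat[ell] = [eps * c / divisor for c in coeff]
    return u0_hat


def smallest_scaled_divisor(K, omega=OMEGA):
    """min of |omega . ell| * |ell| over all ell != 0 with max_i |ell_i| <= K."""
    return min(abs(dot(omega, ell)) * math.hypot(*ell)
               for ell in itertools.product(range(-K, K + 1), repeat=len(omega))
               if any(ell))


# Hypothetical data: hat f(ell, 0) for a real f with d = 2 components and hat f(0, 0) = 0.
f0_hat = {(1, 0): [1.0, 0.0], (-1, 0): [1.0, 0.0], (-1, 1): [0.0, 2.0j], (1, -1): [0.0, -2.0j]}
u0_hat = solve_averaged(f0_hat, eps=0.01)
print(smallest_scaled_divisor(30))  # stays away from 0 for this omega
```

With a resonant vector such as \(\omega = (1, 1)\), the divisor \(\omega \cdot (1, -1)\) vanishes and the division fails. This mirrors why (1.3) is needed for (3.4) but, as stated in Remark 3.1, not for (3.5).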

We now solve the Eq. (3.5) by means of a fixed point argument. To this end, we need to analyze some invertibility properties of the linear operator

Lemma 3.2

(Invertibility of \(L_\omega \)) Let \(\sigma , s \ge 0\), \(g \in H^\sigma \Big (\mathbb T^\nu , H^s_0(\mathbb T^d, \mathbb R^d) \Big )\) and assume that g has zero divergence. Then there exists a unique \(u := L_\omega ^{- 1} g \in H^\sigma \Big (\mathbb T^\nu , H^{s + 2}_0(\mathbb T^d, \mathbb R^d) \Big )\) with zero divergence which solves the equation \(L_\omega u = g\). Moreover

Proof

By (2.5), we can write

Note that since \(j \ne 0\), one has that

Hence, the equation \(L_\omega u = g\) admits the unique solution with zero space average given by

Clearly, if \(\mathrm{div}(g) = 0\), then also \(\mathrm{div}(u) = 0\). We now estimate \(\Vert u \Vert _{\sigma , s + 2}\). According to (2.6), (3.11), one has

which proves the claimed statement.
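Lemma 3.2 can be mirrored coefficient-wise. On the mode \((\ell , j)\) with \(j \ne 0\), \(L_\omega \) acts as multiplication by \(\mathrm{i} \omega \cdot \ell + |j|^2\), whose modulus is at least \(|j|^2 \ge 1\) for every \(\omega \in \mathbb R^\nu \). The Python sketch below inverts this multiplier and checks three things: the equation, the gain of two space derivatives \(|j|^2 |\widehat{u}(\ell , j)| \le |\widehat{g}(\ell , j)|\), and the preservation of zero divergence. It uses hypothetical helper names and stores coefficients in a dictionary. It deliberately uses the resonant vector \(\omega = (1, -1)\) to illustrate that no non-resonance condition is needed.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def invert_L_omega(g_hat, omega):
    """Solve (omega . d_phi - Laplacian) u = g for g with zero x-average.

    g_hat maps (ell, j), with ell in Z^nu and j in Z^d, to hat{g}(ell, j) in C^d.
    """
    u_hat = {}
    for (ell, j), coeff in g_hat.items():
        j2 = dot(j, j)
        if j2 == 0:
            raise ValueError("g must have zero average in x (j = 0 excluded)")
        symbol = 1j * dot(omega, ell) + j2  # eigenvalue of L_omega on this mode
        u_hat[(ell, j)] = [c / symbol for c in coeff]
    return u_hat


omega = (1.0, -1.0)  # resonant on purpose: omega . (1, 1) = 0
# Hypothetical divergence-free data: j . hat{g}(ell, j) = 0 on every mode.
g_hat = {
    ((1, 1), (1, 0)): [0.0, 1.0],
    ((2, -3), (2, 1)): [1.0 - 1.0j, -2.0 + 2.0j],
    ((0, 0), (0, 3)): [5.0, 0.0],
}
u_hat = invert_L_omega(g_hat, omega)
```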

We now implement the fixed point argument for Eq. (3.5) (to simplify notation, we write U instead of \(U_\bot \)). For any \(\sigma , s, R \ge 0\), we define the ball

and we define the nonlinear operator

The following Proposition holds.

Proposition 3.3

(Contraction for \(\Phi \)) Let \(\sigma > \nu /2\), \(s > d/2 + 1\), \(f \in \mathcal{C}^N(\mathbb T^\nu \times \mathbb T^d, \mathbb R^d)\), \(N > \sigma + s - 2\). Then there exists a constant \(C_* = C_*(f , \sigma , s) > 0\) large enough and \(\varepsilon _0= \varepsilon _0(f , \sigma , s) \in (0, 1)\) small enough, such that for any \(\varepsilon \in (0, \varepsilon _0)\), the map \(\Phi : \mathcal{B}_{\sigma , s}(C_* \varepsilon ) \rightarrow \mathcal{B}_{\sigma , s}(C_* \varepsilon )\) is a contraction.

Proof

Let \(U \in \mathcal{B}_{\sigma , s} (C_* \varepsilon )\). Applying Lemmata 2.4-(i) and 3.2, one immediately deduces that

Moreover

Note that since \(f \in \mathcal{C}^N\) with \(N > \sigma + s - 2\), one has that \(\Vert f \Vert _{\sigma , s - 2} \lesssim \Vert f \Vert _{\mathcal{C}^N}\). In view of Lemma 2.4-(ii), using that \(\sigma > \nu /2\), \(s - 1 > d/2\), one gets that

for some constant \(C(f, s, \sigma ) > 0\). Using that \(\Vert U \Vert _{\sigma , s} \le C_* \varepsilon \), one gets that

provided

Hence \(\Phi : \mathcal{B}_{\sigma , s}(C_* \varepsilon ) \rightarrow \mathcal{B}_{\sigma , s}(C_* \varepsilon )\). Now let \(U_1, U_2 \in \mathcal{B}_{\sigma , s}(C_* \varepsilon )\); we estimate

One has that

for some constant \(C(s, \sigma ) > 0\). Since \(U_1, U_2 \in \mathcal{B}_{\sigma , s}(C_* \varepsilon )\), one then has that

provided \(\varepsilon \le \frac{1}{4 C(s, \sigma ) C_*}\,.\) Hence \(\Phi \) is a contraction.
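The two steps of the proof of Proposition 3.3, invariance of the ball and the contraction estimate, can be seen on a scalar toy model. This model is ours and is not the map (3.13): \(L_\omega ^{-1}\) is replaced by division by a number \(\lambda \ge 1\), and \(U \cdot \nabla U\) by \(x^2\), giving \(\Phi (x) = (\varepsilon f - x^2)/\lambda \). With \(C_* = 2|f|/\lambda \), one has \(|\Phi (x)| \le C_* \varepsilon \) on the ball \(|x| \le C_* \varepsilon \) as soon as \(C_*^2 \varepsilon \le |f|\). Moreover, \(|\Phi (x) - \Phi (y)| \le 2 C_* \varepsilon |x - y|/\lambda \le \frac{1}{2} |x - y|\) as soon as \(\varepsilon \le \lambda /(4 C_*)\). The Python sketch below (hypothetical names) checks both smallness conditions and runs the corresponding Picard iteration.

```python
import math


def picard(phi, x0, tol=1e-15, max_iter=200):
    """Iterate x_{k+1} = phi(x_k) until successive iterates differ by less than tol."""
    x = x0
    for _ in range(max_iter):
        x_next = phi(x)
        if abs(x_next - x) < tol:
            return x_next
        x = x_next
    raise RuntimeError("Picard iteration did not converge")


def toy_fixed_point(eps, f=1.0, lam=1.0):
    """Fixed point of the toy map Phi(x) = (eps f - x^2) / lam in the ball |x| <= C_* eps."""
    c_star = 2 * abs(f) / lam
    if c_star**2 * eps > abs(f) or eps > lam / (4 * c_star):
        raise ValueError("eps too large: ball invariance or contraction may fail")
    return picard(lambda x: (eps * f - x * x) / lam, 0.0), c_star


x, c_star = toy_fixed_point(0.1)
print(x, (-1 + math.sqrt(1.4)) / 2)  # iterate vs. the root of x^2 + x - 0.1 = 0
```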

3.1 Proof of Theorem 1.1

Proposition 3.3 implies that for \(\sigma > \nu /2\), \(s > \frac{d}{2} + 1\), there exists a unique \(U_\bot \in H^\sigma (\mathbb T^\nu , H^s_0(\mathbb T^d, \mathbb R^d))\), \(\Vert U_\bot \Vert _{\sigma , s} \lesssim _{\sigma , s} \varepsilon \) which is a fixed point of the map \(\Phi \) defined in (3.13). We fix \(\sigma := \nu /2 + 2\) and \(N > \frac{3 \nu }{2} + s + 2 \). By the Sobolev embedding property (2.8), since \(\sigma - 1 > \nu /2\), one gets that

and \(U_\bot \) is a solution of the Eq. (3.5). Similarly, by recalling (3.4), (3.6), (3.7), one gets that

and \(U_0\) is a solution of the Eq. (3.4). Hence \(U = U_0 + U_\bot \in \mathcal{C}^1\Big ( \mathbb T^\nu , H^s(\mathbb T^d, \mathbb R^d) \Big )\) is a solution of (3.3) and it satisfies \(\int _{\mathbb T^\nu \times \mathbb T^d} U(\varphi , x)\, d \varphi \, d x = 0\). The unique solution with zero average in x of the Eq. (3.2) is given by

Hence, \(P \in \mathcal{C}^0\Big (\mathbb T^\nu , H^s_0(\mathbb T^d, \mathbb R) \Big )\) and

The claimed estimate on P then follows since \( \Vert f \Vert _{\sigma , s - 1} \lesssim \Vert f \Vert _{\mathcal{C}^N}\), \(\Vert U \Vert _{\sigma , s} \le C_* \varepsilon \). Note that if f has zero average in x, one has that

Eq. (3.4) then reduces to \(\omega \cdot \partial _\varphi U_0 = 0\). Hence the only solution \(U = U_0 + U_\bot \) of (3.3) with zero average in x is obtained by choosing \(U_0 = 0\), so that \(U = U_\bot \). This concludes the proof.

4 Orbital and Asymptotic Stability

We now want to study the Cauchy problem for the Eq. (1.1) for initial data which are close to the quasi-periodic solution \((u_\omega , p_\omega )\), where

and the periodic functions \(U \in \mathcal{C}^1\Big (\mathbb T^\nu , H^s(\mathbb T^d, \mathbb R^d) \Big )\), \(P\in \mathcal{C}^0\Big (\mathbb T^\nu , H^s_0(\mathbb T^d, \mathbb R) \Big )\) are given by Theorem 1.1. We then look for solutions which are perturbations of the quasi-periodic ones \((u_\omega , p_\omega )\), namely we look for solutions of the form

Plugging the latter ansatz into the Eq. (1.1), one obtains an equation for v(t, x), q(t, x) of the form

Taking the divergence of the latter equation, we get the equation for the pressure q(t, x)

By using the Leray projector defined in (2.13), we then get a closed equation for v of the form

We prove the following result.

Proposition 4.1

Let \(s > d/2 + 1\), \(\alpha \in (0, 1)\). Then there exists \(\delta = \delta (s, \alpha , d, \nu )\in (0, 1)\) small enough and \(C = C(s, \alpha , d, \nu ) > 0\) large enough, such that for any \(\varepsilon \in (0, \delta )\) and for any initial datum \(v_0 \in H^s_0(\mathbb T^d, \mathbb R^d)\) with \(\Vert v_0 \Vert _{H^s_x} \le \delta \), there exists a unique global solution

of the Eq. (4.5) which satisfies

The proposition above will be proved by a fixed point argument in weighted Sobolev spaces that account for the time decay of the solutions we are looking for. In the next subsection we prove some decay estimates for the linear heat propagator, which will be used in the proof of our result.

4.1 Dispersive Estimates for the Heat Propagator

In this section we analyze some properties of the heat propagator. We recall that the heat propagator is defined as follows. Consider the Cauchy problem for the heat equation

It is well known that there exists a unique solution

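which can be written as \(u(t, x) := e^{t \Delta } u_0(x)\), namely

$$\begin{aligned} e^{t \Delta } u_0(x) = \sum _{\xi \in \mathbb Z^d} e^{- t |\xi |^2} \widehat{u}_0(\xi ) e^{\mathrm{i} x \cdot \xi }. \end{aligned}$$(4.9)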

Lemma 4.2

(i) Let \(u_0 \in H^s_0(\mathbb T^d, \mathbb R^d)\). Then

$$\begin{aligned} \Vert e^{t \Delta } u_0 \Vert _{H^s_x} \le e^{- t} \Vert u_0 \Vert _{H^s_x}, \quad \forall t \ge 0\,. \end{aligned}$$(4.10)

(ii) Let \(u_0 \in H^{s - 1}_0(\mathbb T^d, \mathbb R^d)\). Then, for any integer \(n \ge 1\) and for any \(\alpha \in (0, 1)\),

$$\begin{aligned} \Vert e^{t \Delta } u_0 \Vert _{H^{s }_x} \lesssim _n t^{- \frac{n}{2}} (1 - \alpha )^{- \frac{n}{2}} e^{- \alpha t}\Vert u_0 \Vert _{H^{s - n}_x} \lesssim _n t^{- \frac{n}{2}} (1 - \alpha )^{- \frac{n}{2}} e^{- \alpha t} \Vert u_0 \Vert _{H^{s - 1}_x}, \quad \forall t > 0\,. \end{aligned}$$(4.11)

Proof

Item (i) follows from (4.9), using that \(\widehat{u}_0(0) = 0\) and that \(e^{- t |\xi |^2} \le e^{- t}\) for any \(t \ge 0\), \(\xi \in \mathbb Z^d {\setminus } \{ 0 \}\), since \(|\xi |^2 \ge 1\). We now prove item (ii). Let \(n \ge 1\) be an integer and \(\alpha \in (0, 1)\). One has

Using that for any \(\xi \in \mathbb Z^d {\setminus } \{ 0 \}\), \(t \ge 0\), \(e^{- 2 \alpha t |\xi |^2} \le e^{- 2 \alpha t}\), by (4.12), one gets that

By Lemma A.1 (applied with \(\zeta = 2 (1 - \alpha ) t\)), one has that

for some constant \(C(n)> 0\). Therefore by (4.13), one gets that

The second inequality in (4.11) clearly follows since \(\Vert \cdot \Vert _{H^{s - n}_x} \le \Vert \cdot \Vert _{H^{s - 1}_x}\) for \(n \ge 1\).
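The proof of Lemma 4.2-(ii) rests on an elementary calculus bound. Lemma A.1 is not reproduced here; one bound of the required type is \(\sup _{y \ge 0} y^n e^{- \zeta y} = (n/(e \zeta ))^n\) for \(\zeta > 0\), attained at \(y = n/\zeta \). Write \(e^{-2t|\xi |^2} = e^{-2\alpha t |\xi |^2} e^{-2(1 - \alpha ) t |\xi |^2}\). Using \(|\xi |^2 \ge 1\) in the first factor, and applying this bound with \(y = |\xi |^2\), \(\zeta = 2(1 - \alpha ) t\) in the second, gives \(|\xi |^{2n} e^{-2t|\xi |^2} \le e^{-2\alpha t} \big ( n/(2e(1 - \alpha ) t) \big )^n\) for every \(\xi \ne 0\). This is the square of the mode-wise factor in (4.11). The Python sketch below checks both inequalities numerically on a grid; the function names are hypothetical.

```python
import math


def sup_power_exp(n, zeta):
    """Closed form of sup_{y >= 0} y^n exp(-zeta y), attained at y = n / zeta."""
    return (n / (math.e * zeta)) ** n


def grid_sup_power_exp(n, zeta, points=20001):
    """Brute-force maximum of y^n exp(-zeta y) on a uniform grid of [0, 10 n / zeta]."""
    step = 10 * n / zeta / (points - 1)
    return max((k * step) ** n * math.exp(-zeta * k * step) for k in range(points))


def heat_mode_factor(n, r, t):
    """|xi|^{2n} exp(-2 t |xi|^2) for a mode with |xi| = r."""
    return r ** (2 * n) * math.exp(-2 * t * r * r)


def heat_mode_bound(n, t, alpha):
    """e^{-2 alpha t} (n / (2 e (1 - alpha) t))^n, the squared factor in (4.11)."""
    return math.exp(-2 * alpha * t) * sup_power_exp(n, 2 * (1 - alpha) * t)


for n in (1, 2, 3):
    print(n, grid_sup_power_exp(n, 1.0), sup_power_exp(n, 1.0))
```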

We fix \(\alpha \in (0, 1)\) and for any \(s \ge 0\), we define the space

Clearly

The following elementary lemma holds:

Lemma 4.3

-

(i)

Let \(u \in \mathcal{E}_s\). Then

$$\begin{aligned} \begin{aligned}&\Vert u \Vert _{\mathcal{C}^0_t H^s_x} \lesssim \Vert u \Vert _{\mathcal{E}_s} \quad \text {and} \quad \Vert {\mathfrak {L}}(u) \Vert _{\mathcal{E}_s} \lesssim \Vert u \Vert _{\mathcal{E}_s}\,, \\&\Vert u (t) \Vert _{H^s_x} \le e^{- \alpha t} \Vert u \Vert _{\mathcal{E}_s}, \quad \forall t \ge 0\,. \end{aligned} \end{aligned}$$(4.16) -

(ii)

Let \(s > d/2\), \(u \in \mathcal{E}_s\), \(v \in \mathcal{C}^0_b\Big ([0, + \infty ), H^{s + 1}(\mathbb T^d, \mathbb R^d) \Big )\), \(\mathrm{div}(u) = 0\). Then the product \(u \cdot \nabla v \in \mathcal{E}_s\) and

$$\begin{aligned} \Vert u \cdot \nabla v \Vert _{\mathcal{E}_s} \lesssim _s \Vert u \Vert _{\mathcal{E}_s} \Vert v \Vert _{\mathcal{C}^0_t H^{s + 1}_x}\,. \end{aligned} \tag{4.17}$$

(iii)

Let \(s > d/2\), \(u \in \mathcal{C}^0_b\Big ([0, + \infty ), H^s(\mathbb T^d, \mathbb R^d) \Big )\), \(\mathrm{div}(u) = 0\) and \(v \in \mathcal{E}_{s + 1}\). Then the product \(u \cdot \nabla v \in \mathcal{E}_s\) and

$$\begin{aligned} \Vert u \cdot \nabla v \Vert _{\mathcal{E}_s} \lesssim _s \Vert u \Vert _{\mathcal{C}^0_t H^s_x} \Vert v \Vert _{\mathcal{E}_{s + 1}}\,. \end{aligned} \tag{4.18}$$

(iv)

Let \(s> d/2\), \(u \in \mathcal{E}_s\), \(\mathrm{div}(u)= 0\), \(v \in \mathcal{E}_{s + 1}\). Then \(u \cdot \nabla v \in \mathcal{E}_{s}\) and

$$\begin{aligned} \Vert u \cdot \nabla v \Vert _{\mathcal{E}_s} \lesssim _s \Vert u \Vert _{\mathcal{E}_s} \Vert v \Vert _{\mathcal{E}_{s + 1}}\,. \end{aligned} \tag{4.19}$$

Proof

Item (i) is elementary: it follows directly from the definition (4.14) and the estimate (2.18) on the Leray projector \({\mathfrak {L}}\). We now prove item (ii). By Lemma 2.4-(i), \(u(t) \cdot \nabla v(t)\) has zero average in x. Moreover

therefore, since \(s > d/2\), Lemma 2.1 and (2.1) give, for any \(t \in [0, + \infty )\), \(\Vert u (t) \cdot \nabla v(t) \Vert _{H^s_x} \lesssim _s \Vert u(t) \Vert _{H^s_x} \Vert v(t) \Vert _{H^{s + 1}_x}\), implying that

Passing to the supremum over \(t \ge 0\) in the left-hand side of (4.20), we get the claimed statement. Item (iii) follows by similar arguments, and item (iv) follows by applying items (i) and (ii).
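This is a sketch of how the pointwise-in-time product estimate becomes (4.17). It assumes, as Lemma 4.3-(i) and the proof of Lemma 4.4 indicate, that the norm in (4.14) is \(\Vert u \Vert _{\mathcal{E}_s} = \sup _{t \ge 0} e^{\alpha t} \Vert u(t) \Vert _{H^s_x}\). It is not a transcription of (4.20).

```latex
\begin{aligned}
e^{\alpha t}\Vert u(t)\cdot\nabla v(t)\Vert_{H^s_x}
 &\lesssim_s \big(e^{\alpha t}\Vert u(t)\Vert_{H^s_x}\big)\,\Vert v(t)\Vert_{H^{s+1}_x} \\
 &\le \Big(\sup_{\tau\ge 0} e^{\alpha\tau}\Vert u(\tau)\Vert_{H^s_x}\Big)
      \Big(\sup_{\tau\ge 0}\Vert v(\tau)\Vert_{H^{s+1}_x}\Big)
  = \Vert u\Vert_{\mathcal E_s}\,\Vert v\Vert_{\mathcal C^0_t H^{s+1}_x},
  \qquad \forall\, t\ge 0 .
\end{aligned}
```

For item (iii), the weight \(e^{\alpha t}\) is attached to \(v\) instead. This gives \(e^{\alpha t}\Vert u(t)\cdot \nabla v(t)\Vert _{H^s_x} \lesssim _s \Vert u \Vert _{\mathcal{C}^0_t H^s_x} \Vert v \Vert _{\mathcal{E}_{s+1}}\).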

We now prove some estimates for the heat propagator \(e^{t \Delta }\) in the space \(\mathcal{E}_s\).

Lemma 4.4

Let \(s \ge 0\), \(u_0 \in H^s_0(\mathbb T^d, \mathbb R^d)\). Then \(\Vert e^{t \Delta } u_0 \Vert _{\mathcal{E}_s} \lesssim _\alpha \Vert u_0 \Vert _{H^s_x}\).

Proof

We have to estimate, uniformly with respect to \(t \ge 0\), the quantity \(e^{\alpha t} \Vert e^{t \Delta } u_0 \Vert _{H^s_x}\). For \(t \in [0, 1]\), since \(e^{\alpha t} \le e^{\alpha } {\mathop {\le }\limits ^{\alpha < 1}} e\), by applying Lemma 4.2-(i) one gets that

For \(t > 1\), by applying Lemma 4.2-(ii) (for \(n = 1\)), one gets that

Hence the claimed statement follows from (4.21) and (4.22) by passing to the supremum over \(t \ge 0\).
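This is a sketch of the two cases, written with the bounds of Lemma 4.2; the precise form of (4.21) and (4.22) may differ. For short times only the decay (4.10) is used. For \(t > 1\), (4.11) with \(n = 1\) produces a factor \(t^{-1/2} < 1\), and the exponential weights cancel.

```latex
\begin{aligned}
t\in[0,1]:&\quad e^{\alpha t}\Vert e^{t\Delta}u_0\Vert_{H^s_x}
   \le e\, e^{-t}\Vert u_0\Vert_{H^s_x} \le e\,\Vert u_0\Vert_{H^s_x},\\
t>1:&\quad e^{\alpha t}\Vert e^{t\Delta}u_0\Vert_{H^s_x}
   \lesssim e^{\alpha t}\, t^{-\frac12}(1-\alpha)^{-\frac12} e^{-\alpha t}\Vert u_0\Vert_{H^{s-1}_x}
   \le (1-\alpha)^{-\frac12}\Vert u_0\Vert_{H^s_x}.
\end{aligned}
```

The dependence of the constant on \(\alpha \) enters only through the factor \((1 - \alpha )^{-1/2}\).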

The main result of this section is the following proposition.

Proposition 4.5

Let \(s\ge 1\), \(f \in \mathcal{E}_{s - 1}\) and define

Then \(u \in \mathcal{E}_s\) and

The proof is split into several steps. The first step is to estimate the integral in (4.23) for any \(t \in [0, 1]\).

Lemma 4.6

Let \(t \in [0, 1]\), \(f \in \mathcal{E}_{s - 1}\), and let u be defined by (4.23). Then

Proof

Let \(t \in [0, 1]\). Then

By making the change of variables \(z = t - \tau \), one gets that

and hence, in view of (4.25), one gets \(\Vert u (t) \Vert _{H^s_x} \lesssim _\alpha \Vert f \Vert _{\mathcal{C}^0_t H^{s - 1}_x}\) for any \(t \in [0, 1]\), which is the claimed statement.
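For the reader's convenience, here is a sketch of the estimate behind Lemma 4.6. It assumes the estimate comes from (4.11) with \(n = 1\), the only integer choice for which \((t - \tau )^{-n/2}\) is integrable near \(\tau = t\). It also uses the bounds \(e^{-\alpha (t - \tau )} \le 1\) and \(\Vert f(\tau ) \Vert _{H^{s-1}_x} \le \Vert f \Vert _{\mathcal{C}^0_t H^{s-1}_x}\):

```latex
\begin{aligned}
\Vert u(t)\Vert_{H^s_x}
 &\le \int_0^t \Vert e^{(t-\tau)\Delta} f(\tau)\Vert_{H^s_x}\, d\tau
  \lesssim (1-\alpha)^{-\frac12}\Vert f\Vert_{\mathcal C^0_t H^{s-1}_x}
     \int_0^t (t-\tau)^{-\frac12}\, d\tau \\
 &= (1-\alpha)^{-\frac12}\Vert f\Vert_{\mathcal C^0_t H^{s-1}_x}\int_0^t z^{-\frac12}\, dz
  = 2\sqrt t\,(1-\alpha)^{-\frac12}\Vert f\Vert_{\mathcal C^0_t H^{s-1}_x}
  \le 2(1-\alpha)^{-\frac12}\Vert f\Vert_{\mathcal C^0_t H^{s-1}_x}.
\end{aligned}
```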

For \(t > 1\), we split the integral term in (4.23) as

and we estimate separately the two terms in the latter formula. More precisely the first term is estimated in Lemma 4.7 and the second one in Lemma 4.8.

Lemma 4.7

Let \(t > 1\). Then

Proof

Let \(t > 1\). One then has

By choosing \(n = 4\) and making the change of variables \(z = t - \tau \), one gets that

The latter estimate, together with (4.26), implies the claimed statement.
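This sketch assumes that the first term of the splitting is the integral over \([0, t - 1]\). On that interval \(t - \tau \ge 1\), so (4.11) with \(n = 4\) produces the factor \((t - \tau )^{-2}\), which is integrable at infinity; any integer \(n \ge 3\) would do. Moreover \(\Vert f(\tau ) \Vert _{H^{s - 4}_x} \le \Vert f(\tau )\Vert _{H^{s-1}_x} \le e^{- \alpha \tau } \Vert f \Vert _{\mathcal{E}_{s - 1}}\) by (4.16), and the two exponential weights combine into \(e^{-\alpha t}\):

```latex
\begin{aligned}
\Big\Vert \int_0^{t-1} e^{(t-\tau)\Delta} f(\tau)\, d\tau \Big\Vert_{H^s_x}
 &\lesssim (1-\alpha)^{-2}\int_0^{t-1} (t-\tau)^{-2} e^{-\alpha(t-\tau)} e^{-\alpha\tau}\, d\tau\,
    \Vert f\Vert_{\mathcal E_{s-1}} \\
 &= (1-\alpha)^{-2} e^{-\alpha t}\,\Vert f\Vert_{\mathcal E_{s-1}} \int_1^t z^{-2}\, dz
  \le (1-\alpha)^{-2} e^{-\alpha t}\,\Vert f\Vert_{\mathcal E_{s-1}} .
\end{aligned}
```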

Lemma 4.8

Let \(t > 1\). Then

Proof

One has

By making the change of variables \(z = t - \tau \), one gets

The latter estimate, together with (4.27), implies the claimed statement.
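This sketch assumes that the second term of the splitting is the integral over \([t - 1, t]\). It uses (4.11) with \(n = 1\), so that the singularity \((t - \tau )^{-1/2}\) at \(\tau = t\) is integrable. It also uses \(\Vert f(\tau )\Vert _{H^{s-1}_x} \le e^{- \alpha \tau } \Vert f \Vert _{\mathcal{E}_{s - 1}}\) from (4.16):

```latex
\begin{aligned}
\Big\Vert \int_{t-1}^{t} e^{(t-\tau)\Delta} f(\tau)\, d\tau \Big\Vert_{H^s_x}
 &\lesssim (1-\alpha)^{-\frac12}\int_{t-1}^{t} (t-\tau)^{-\frac12} e^{-\alpha(t-\tau)} e^{-\alpha\tau}\, d\tau\,
    \Vert f\Vert_{\mathcal E_{s-1}} \\
 &= (1-\alpha)^{-\frac12} e^{-\alpha t}\,\Vert f\Vert_{\mathcal E_{s-1}}\int_0^1 z^{-\frac12}\, dz
  = 2(1-\alpha)^{-\frac12} e^{-\alpha t}\,\Vert f\Vert_{\mathcal E_{s-1}} .
\end{aligned}
```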

Proof of Proposition 4.5

For any \(t \in [0, 1]\), since \(e^{\alpha t} \le e^{\alpha }{\mathop {\le }\limits ^{\alpha < 1}} e\), by Lemma 4.6, one has that

For any \(t > 1\), by applying Lemmata 4.7, 4.8, one gets

The claimed statement then follows from (4.28) and (4.29) by passing to the supremum over \(t \in [0, + \infty )\).
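As a sanity check of Proposition 4.5 (not part of the proof), the following Python sketch looks at a single Fourier mode \(\xi \ne 0\), with \(\lambda = |\xi |^2 \ge 1\). It uses the hypothetical forcing \(\widehat{f}(\tau ) = e^{- \alpha \tau }\) and two assumed conventions: the homogeneous weight \(|\xi |^{s}\) for \(\Vert \cdot \Vert _{H^s_x}\), and \(\Vert f \Vert _{\mathcal{E}_{s-1}} = \sup _{t \ge 0} e^{\alpha t} \Vert f(t)\Vert _{H^{s-1}_x}\). Under these conventions \(\Vert f \Vert _{\mathcal{E}_{s-1}} = |\xi |^{s - 1}\), and the Fourier coefficient of (4.23) is \(\widehat{u}(t) = (e^{-\alpha t} - e^{-\lambda t})/(\lambda - \alpha )\). Hence \(e^{\alpha t} |\xi |^s |\widehat{u}(t)| \le \sqrt{\lambda }/(\lambda - \alpha ) \, |\xi |^{s-1}\). Since \(\sqrt{\lambda }/(\lambda - \alpha )\) is decreasing for \(\lambda \ge 1\), this is at most \((1 - \alpha )^{-1} \Vert f \Vert _{\mathcal{E}_{s-1}}\). The code compares the closed form with a Simpson quadrature of the Duhamel integral and checks this bound. The function names are hypothetical.

```python
import math


def duhamel_mode(lam, alpha, t, steps=20000):
    """Composite Simpson rule for u_hat(t) = int_0^t e^{-lam (t - tau)} e^{-alpha tau} d tau,
    the Fourier coefficient of (4.23) for the single-mode forcing f_hat(tau) = e^{-alpha tau}.
    `steps` must be even."""
    if t == 0:
        return 0.0
    h = t / steps
    total = 0.0
    for i in range(steps + 1):
        weight = 1 if i in (0, steps) else (4 if i % 2 == 1 else 2)
        tau = i * h
        total += weight * math.exp(-lam * (t - tau) - alpha * tau)
    return total * h / 3


def duhamel_mode_exact(lam, alpha, t):
    """Closed form of the same integral (requires lam > alpha)."""
    return (math.exp(-alpha * t) - math.exp(-lam * t)) / (lam - alpha)


alpha, s = 0.5, 2.0
for lam in (1.0, 4.0, 50.0):            # lam = |xi|^2 >= 1
    f_norm = lam ** ((s - 1) / 2)        # ||f||_{E_{s-1}} for this single mode
    for t in (0.5, 2.0, 5.0):
        exact = duhamel_mode_exact(lam, alpha, t)
        assert abs(duhamel_mode(lam, alpha, t) - exact) <= 1e-6 * abs(exact)
        # e^{alpha t} ||u(t)||_{H^s} for this single mode
        weighted = math.exp(alpha * t) * lam ** (s / 2) * abs(exact)
        assert weighted <= f_norm / (1 - alpha)
print("single-mode Duhamel bound holds with constant 1/(1 - alpha)")
```

The constant \((1 - \alpha )^{-1}\) blows up as \(\alpha \to 1\), which is consistent with the dependence on \(\alpha \) of the estimates in this section.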

4.2 Proof of Proposition 4.1

Proposition 4.1 is proved by a fixed-point argument. For any \(\delta > 0\) and \(s\ge 0\), we define the ball

and for any \(v \in \mathcal{B}_s(\delta )\), we define the map

Proposition 4.9

Let \(s > d/2 + 1\), \(\alpha \in (0, 1)\). Then there exists \(\delta = \delta (s, \alpha , \nu , d) \in (0, 1)\) small enough such that for any \(\varepsilon \in (0, \delta )\), \(\Phi : \mathcal{B}_s(\delta ) \rightarrow \mathcal{B}_s(\delta )\) is a contraction.

Proof

Since \(v_0\) has zero divergence and zero average, by (4.9) one has \(\mathrm{div}\big ( e^{t \Delta } v_0\big ) = 0\) and \(\int _{\mathbb T^d} e^{t \Delta } v_0(x)\, d x= 0\). Now let v(t, x) be a function with zero average and zero divergence. Clearly \(\mathrm{div}\Big ( \mathcal{N}(v)\Big ) = 0\), since the definition of \(\mathcal{N}( v)\) in (4.31) contains the Leray projector. Moreover, using that \(\mathrm{div}(v ) = \mathrm{div}(u_\omega ) = 0\), Lemma 2.4-(i) implies that \(\mathcal{N}(v)\) has zero average. Then, by (4.9), the integral \(\int _0^t e^{(t - \tau ) \Delta } \mathcal{N}(v)(\tau , \cdot )\, d \tau \) is also divergence free and has zero average. Hence we have shown that \(\mathrm{div}(\Phi (v)) = 0\) and \(\int _{\mathbb T^d} \Phi (v)\, d x= 0\). Now let \(\Vert v \Vert _{\mathcal{E}_s} \le \delta \); we estimate \(\Vert \Phi (v) \Vert _{\mathcal{E}_s}\). Recalling (4.31), Lemma 4.4, Proposition 4.5 and Lemma 4.3, one has

By the estimates of Theorem 1.1, the definition (4.1) and Lemma 2.3, one has

Since \(v \in \mathcal{B}_s(\delta )\), the estimate (4.32) implies that

Hence \(\Vert \Phi (v) \Vert _{\mathcal{E}_s} \le \delta \) provided

These conditions are fulfilled by taking \(\delta \) small enough and \(\Vert v_0 \Vert _{H^s_x}\ll \delta \). Hence \(\Phi : \mathcal{B}_s(\delta ) \rightarrow \mathcal{B}_s(\delta )\). Now let \(v_1, v_2 \in \mathcal{B}_s(\delta )\). We need to estimate

By (4.31)

Hence the estimates (4.33) and (4.36), together with the fact that \(v_1, v_2 \in \mathcal{B}_s(\delta )\) (i.e. \(\Vert v_1 \Vert _{\mathcal{E}_s}, \Vert v_2 \Vert _{\mathcal{E}_s} \le \delta \)), imply that

By (4.35), one gets that

for some constant \(C(s, \alpha ) > 0\). Therefore

provided \(\delta \le \frac{1}{2 C(s, \alpha )}\). This concludes the proof.
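The structure of the argument above can be illustrated with a deliberately simplified scalar model. That structure is a self-map of the ball together with a Lipschitz constant at most \(1/2\). The model is a toy and not the Navier–Stokes map. In the hypothetical map \(\phi (v) = a + C(\varepsilon + v) v\), the constant \(a\) plays the role of \(e^{t \Delta } v_0\). The term \(C(\varepsilon + v)v\) mimics the quadratic term and the terms coupling \(v\) with \(u_\omega = O(\varepsilon )\). Suppose \(\varepsilon < \delta \), \(|a| \le \delta /2\) and \(\delta \le 1/(6C)\). Then \(\phi \) maps \([-\delta , \delta ]\) into itself with Lipschitz constant \(C(\varepsilon + 2\delta ) \le 1/2\), so Picard iteration converges to the unique fixed point in the ball.

```python
def phi(v, a, C, eps):
    """Toy scalar analogue of the map Phi in (4.31) (hypothetical model)."""
    return a + C * (eps + v) * v


def picard(a, C, eps, delta, tol=1e-14, max_iter=200):
    """Banach fixed-point iteration v_{k+1} = phi(v_k), started at v_0 = 0."""
    v = 0.0
    for _ in range(max_iter):
        v_next = phi(v, a, C, eps)
        if abs(v_next) > delta:
            raise ValueError("phi left the ball of radius delta")
        if abs(v_next - v) < tol:
            return v_next
        v = v_next
    raise RuntimeError("Picard iteration did not converge")


C = 2.0
delta = 1 / (6 * C)     # smallness condition of the toy model
eps = delta / 2         # eps < delta
a = delta / 4           # |a| <= delta / 2
v_star = picard(a, C, eps, delta)
print(v_star, abs(v_star - phi(v_star, a, C, eps)))
```

As in the proof, the smallness of \(\delta \) is what simultaneously gives the invariance of the ball and the contraction property.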

Proof of Proposition 4.1 concluded. By Proposition 4.9 and the contraction mapping theorem, there exists a unique \(v \in \mathcal{B}_s(\delta )\) which is a fixed point of the map \(\Phi \) in (4.31). From the functional equation \(v = \Phi (v)\), one deduces in a standard way that

and hence v is a solution of Eq. (4.5). By (4.16), and using the trivial fact that \(\Vert \Delta v \Vert _{\mathcal{E}_{s - 2}} \le \Vert v \Vert _{\mathcal{E}_s}\), one gets

Therefore, using that \(v \in \mathcal{B}_s(\delta )\) (i.e. \(\Vert v \Vert _{\mathcal{E}_s} \le \delta \)) and that, by (4.33), \(\Vert u_\omega \Vert _{\mathcal{C}^0_t H^s_x} \lesssim _s \delta \), one gets, for \(\delta \) small enough, the estimate \(\Vert \partial _t v \Vert _{\mathcal{E}_{s - 2}} \lesssim _s \delta \). The claimed statement follows by recalling (4.16).
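This sketch of the time-derivative bound rests on two assumptions. Both are consistent with the proof of Proposition 4.9, but the precise form is fixed in (4.31). First, \(\Phi (v)(t) = e^{t \Delta } v_0 + \int _0^t e^{(t - \tau )\Delta } \mathcal{N}(v)(\tau , \cdot )\, d \tau \), so that a fixed point satisfies \(\partial _t v = \Delta v + \mathcal{N}(v)\), \(v(0) = v_0\). Second, \(\mathcal{N}(v)\) is built, through the Leray projector, from \(v \cdot \nabla v\), \(v \cdot \nabla u_\omega \) and \(u_\omega \cdot \nabla v\), so that Lemma 4.3 applies at level \(s - 1 > d/2\). The sketch also uses \(\Vert \cdot \Vert _{\mathcal{E}_{s-2}} \le \Vert \cdot \Vert _{\mathcal{E}_{s-1}}\). Then

```latex
\begin{aligned}
\Vert \partial_t v\Vert_{\mathcal E_{s-2}}
 &\le \Vert \Delta v\Vert_{\mathcal E_{s-2}} + \Vert \mathcal N(v)\Vert_{\mathcal E_{s-2}}
  \le \Vert v\Vert_{\mathcal E_s} + \Vert \mathcal N(v)\Vert_{\mathcal E_{s-1}} \\
 &\lesssim_s \Vert v\Vert_{\mathcal E_s}
   + \big(\Vert v\Vert_{\mathcal E_s} + \Vert u_\omega\Vert_{\mathcal C^0_t H^s_x}\big)\Vert v\Vert_{\mathcal E_s}
  \lesssim_s \delta + \delta^2 \lesssim_s \delta .
\end{aligned}
```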

4.3 Proof of Theorem 1.2

In view of Proposition 4.1, it remains only to solve Eq. (4.4) for the pressure q(t, x). The unique zero-average solution of this equation is given by

Using that \(\Vert (- \Delta )^{- 1} \mathrm{div} a \Vert _{\mathcal{E}_s} \lesssim \Vert a \Vert _{\mathcal{E}_{s - 1}}\) for any \(a \in \mathcal{E}_{s - 1}\), one gets the inequality

Hence arguing as in (4.39), one deduces the estimate \(\Vert q \Vert _{\mathcal{E}_s} \lesssim _{s} \delta \). The claimed estimate on q then follows by recalling (4.16) and the proof is concluded (recall that by (4.2), \(v = u - u_\omega \), \(q = p - p_\omega \)).
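The one-derivative gain of \((- \Delta )^{-1} \mathrm{div}\) used above is transparent in Fourier variables. Its symbol is \(\mathrm{i}\, \xi \cdot \widehat{a}(\xi )/|\xi |^2\), and by Cauchy–Schwarz \(|\xi \cdot \widehat{a}(\xi )|/|\xi |^2 \le |\widehat{a}(\xi )|/|\xi |\). The Python sketch below checks \(\Vert (-\Delta )^{-1} \mathrm{div}\, a \Vert _{H^s_x} \le \Vert a \Vert _{H^{s-1}_x}\) at a fixed time for a random zero-average field on \(\mathbb T^2\). It assumes the homogeneous norm \(\Vert a \Vert _{H^s_x}^2 = \sum _{\xi \ne 0} |\xi |^{2s} |\widehat{a}(\xi )|^2\), for which the constant is exactly one. Applied at each time and multiplied by the weight \(e^{\alpha t}\), the same inequality gives the \(\mathcal{E}_s\) version. The function names are hypothetical.

```python
import math
import random


def inv_lap_div(a):
    """Fourier coefficients of (-Delta)^{-1} div a on T^d.

    `a` maps each frequency xi != 0 (tuple of ints) to the tuple of complex
    Fourier coefficients of the d components; the symbol is i xi . a_hat / |xi|^2."""
    out = {}
    for xi, comps in a.items():
        k2 = sum(k * k for k in xi)
        if k2 == 0:
            raise ValueError("a must have zero average (no xi = 0 mode)")
        out[xi] = 1j * sum(k * c for k, c in zip(xi, comps)) / k2
    return out


def hs_norm(coeffs, s):
    """Homogeneous H^s norm; values are complex scalars or tuples of components."""
    total = 0.0
    for xi, val in coeffs.items():
        comps = val if isinstance(val, tuple) else (val,)
        k2 = sum(k * k for k in xi)
        total += k2 ** s * sum(abs(c) ** 2 for c in comps)
    return math.sqrt(total)


# Random zero-average field on T^2; the coefficients are not constrained to come
# from a real field, which does not affect the mode-by-mode inequality.
random.seed(1)
K = 5
a = {(k1, k2): (complex(random.gauss(0, 1), random.gauss(0, 1)),
                complex(random.gauss(0, 1), random.gauss(0, 1)))
     for k1 in range(-K, K + 1) for k2 in range(-K, K + 1) if (k1, k2) != (0, 0)}

q = inv_lap_div(a)
for s in (0.0, 1.5, 3.0):
    assert hs_norm(q, s) <= hs_norm(a, s - 1) * (1 + 1e-12)
print("||(-Delta)^{-1} div a||_{H^s} <= ||a||_{H^{s-1}} on the sample")
```

Divergence-free Fourier modes (those with \(\xi \cdot \widehat{a}(\xi ) = 0\)) are mapped to zero, so only the gradient part of \(a\) contributes to the pressure.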

Change history

02 April 2021

The Open Access funding note is included in the article.

Notes

It is well known that almost every frequency in \(\mathbb R^\nu \) (with respect to the Lebesgue measure) is Diophantine.


Acknowledgements

The author is supported by INDAM-GNFM. The author warmly thanks Dario Bambusi, Luca Franzoi, Thomas Kappeler, Sergei Kuksin, Alberto Maspero and Eugene Wayne for their feedback. I am also deeply indebted to Paolo Maremonti for a very stimulating discussion on the topic.

Funding

Open access funding provided by Università degli Studi di Milano within the CRUI-CARE Agreement.


Appendix

Lemma A.1

Let \(n \in \mathbb N\), \(\zeta > 0\) and let \(f : [0, + \infty ) \rightarrow [0, + \infty )\) be defined by \(f(y) := y^n e^{- \zeta y}\). Then

Proof

One has that \(f \ge 0\) and

Moreover

therefore f admits a global maximum at \(y = n/\zeta \). This implies that

and the lemma follows.
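At the maximiser \(y = n/\zeta \) found in the proof, \(f(n/\zeta ) = (n/\zeta )^n e^{-n} = \big (n/(e \zeta )\big )^n\), so \(\sup _{y \ge 0} f(y) = \big (n/(e \zeta )\big )^n\). This gives the factor \(C(n) \zeta ^{-n}\) used in the proof of Lemma 4.2 with \(\zeta = 2(1 - \alpha ) t\). The Python sketch below compares this value with a grid maximisation. The grid and the function names are illustrative choices.

```python
import math


def lemma_a1_f(y, n, zeta):
    """f(y) = y^n e^{-zeta y} on [0, +infinity)."""
    return y ** n * math.exp(-zeta * y)


def lemma_a1_max(n, zeta):
    """Maximum value f(n / zeta) = (n / (e zeta))^n (equal to 1 when n = 0)."""
    return (n / (math.e * zeta)) ** n


for n in (0, 1, 2, 4, 7):
    for zeta in (0.1, 1.0, 3.5):
        peak = lemma_a1_max(n, zeta)
        # f attains the value (n / (e zeta))^n at y = n / zeta ...
        assert math.isclose(lemma_a1_f(n / zeta, n, zeta), peak, rel_tol=1e-12)
        # ... and never exceeds it on a fine grid of [0, 40 (n + 1) / zeta]
        y_max = 40 * (n + 1) / zeta
        grid_max = max(lemma_a1_f(y_max * i / 10000, n, zeta) for i in range(10001))
        assert grid_max <= peak * (1 + 1e-12)
print("Lemma A.1 bound verified on the sample")
```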

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Montalto, R. The Navier–Stokes Equation with Time Quasi-Periodic External Force: Existence and Stability of Quasi-Periodic Solutions. J Dyn Diff Equat 33, 1341–1362 (2021). https://doi.org/10.1007/s10884-021-09944-w
