Abstract

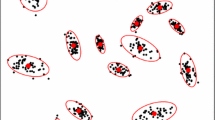

Finite mixture models (FMMs) are flexible models with uses such as density estimation, clustering, classification, modelling heterogeneity, model averaging, and handling missing data. The expectation maximization (EM) algorithm can learn maximum likelihood estimates of the model parameters. One prerequisite for using the EM algorithm is a priori knowledge of the number of components in the mixture model. However, the number of mixture components is often unknown, so determining it has been a central problem in mixture modelling. Mixture modelling is therefore often a two-stage process: first determining the number of mixture components, then estimating the parameters of the mixture model. This paper proposes fast training of a series of mixture models by progressively merging mixture components, which helps a model selection algorithm choose an appropriate model. The paper also proposes a fast, data-driven approximation of the Kullback–Leibler (KL) divergence as a criterion for measuring the similarity of mixture components. We apply the proposed methodology to mixture modelling of a synthetic dataset, a publicly available zoo dataset, and two chromosomal aberration datasets, showing that model selection is efficient and effective.
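
The progressive training loop described above can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: it assumes binary (0–1) data modelled by a mixture of multivariate Bernoulli distributions, runs EM from a user-chosen maximum number of components `k_max`, and then repeatedly merges the two most similar components, re-running EM after each merge so that one model is trained for every number of components. The names `progressive_training`, `sym_kl`, `bic`, and `choose_k` are hypothetical. The responsibility-weighted log-ratio estimate in `sym_kl` is only one possible data-driven KL approximation, and BIC stands in for whatever model selection criterion is applied to the resulting series of models.

```python
import math
import random

# Hypothetical sketch: the Bernoulli mixture, the data-driven KL estimate and the
# use of BIC are illustrative assumptions, not the paper's exact method.
EPS = 1e-6  # clamp for probabilities so that logarithms stay finite


def log_bernoulli(x, theta):
    """log p(x | theta) for a product of independent Bernoulli variables."""
    return sum(math.log(t) if xi else math.log(1.0 - t) for xi, t in zip(x, theta))


def e_step(data, weights, thetas):
    """Responsibilities r[n][j] and the total log-likelihood of the data."""
    resp, total = [], 0.0
    for x in data:
        logs = [math.log(w) + log_bernoulli(x, th) for w, th in zip(weights, thetas)]
        m = max(logs)
        lse = m + math.log(sum(math.exp(v - m) for v in logs))  # log-sum-exp
        total += lse
        resp.append([math.exp(v - lse) for v in logs])
    return resp, total


def m_step(data, resp):
    """Maximum likelihood update of mixing weights and Bernoulli parameters."""
    n, d, k = len(data), len(data[0]), len(resp[0])
    weights, thetas = [], []
    for j in range(k):
        nj = sum(r[j] for r in resp)
        weights.append(max(nj / n, EPS))
        thetas.append([
            min(max(sum(r[j] * x[i] for r, x in zip(resp, data)) / max(nj, EPS), EPS), 1.0 - EPS)
            for i in range(d)
        ])
    total = sum(weights)
    return [w / total for w in weights], thetas


def em(data, weights, thetas, iters=50):
    """Run a fixed number of EM iterations from the given starting point."""
    for _ in range(iters):
        resp, _ = e_step(data, weights, thetas)
        weights, thetas = m_step(data, resp)
    _, loglik = e_step(data, weights, thetas)
    return weights, thetas, loglik


def sym_kl(data, resp, thetas, a, b):
    """Hypothetical data-driven symmetric KL estimate: the average log-ratio of
    the two component densities over the data, weighted by responsibilities."""
    def kl(p, q):
        num = sum(r[p] * (log_bernoulli(x, thetas[p]) - log_bernoulli(x, thetas[q]))
                  for r, x in zip(resp, data))
        return num / max(sum(r[p] for r in resp), EPS)
    return kl(a, b) + kl(b, a)


def progressive_training(data, k_max, seed=0):
    """Fit k_max components, then merge the most similar pair until one remains."""
    rng = random.Random(seed)
    d = len(data[0])
    weights = [1.0 / k_max] * k_max
    thetas = [[rng.uniform(0.25, 0.75) for _ in range(d)] for _ in range(k_max)]
    models = {}
    while True:
        weights, thetas, loglik = em(data, weights, thetas)
        k = len(weights)
        models[k] = (weights, thetas, loglik)
        if k == 1:
            return models
        resp, _ = e_step(data, weights, thetas)
        a, b = min(((i, j) for i in range(k) for j in range(i + 1, k)),
                   key=lambda pair: sym_kl(data, resp, thetas, *pair))
        # Merge by weight-averaging the two components (moment matching).
        w = weights[a] + weights[b]
        merged = [(weights[a] * ta + weights[b] * tb) / w
                  for ta, tb in zip(thetas[a], thetas[b])]
        keep = [j for j in range(k) if j not in (a, b)]
        weights = [weights[j] for j in keep] + [w]
        thetas = [thetas[j] for j in keep] + [merged]


def bic(loglik, k, d, n):
    """Bayesian information criterion: k*d Bernoulli parameters plus k-1 weights."""
    return -2.0 * loglik + (k * d + k - 1) * math.log(n)


def choose_k(models, n):
    """Pick the number of components with the lowest BIC."""
    d = len(next(iter(models.values()))[1][0])
    return min(models, key=lambda k: bic(models[k][2], k, d, n))
```

For example, `models = progressive_training(data, k_max=5)` followed by `choose_k(models, len(data))` trains the 5-, 4-, 3-, 2- and 1-component models in one pass and returns the size preferred by BIC. In this sketch, each merged model warm-starts the next EM run instead of training every model size from a fresh random initialization. For product-Bernoulli components the KL divergence also has a closed form; a data-driven estimate is mainly attractive when such a form is unavailable or costly.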

Acknowledgements

The Helsinki Doctoral Programme in Computer Science – Advanced Computing and Intelligent Systems (Hecse) and the Finnish Center of Excellence for Algorithmic Data Analysis (ALGODAN) fund this research.

About this article

Cite this article

Adhikari, P.R., Hollmén, J. Fast progressive training of mixture models for model selection. J Intell Inf Syst 44, 223–241 (2015). https://doi.org/10.1007/s10844-013-0282-3

DOI: https://doi.org/10.1007/s10844-013-0282-3