Abstract

We propose the first join tree (JT) propagation architecture that labels the probability information passed between JT nodes in terms of conditional probability tables (CPTs) rather than potentials. By modeling the task of inference involving evidence, we can generate three work schedules that are more time-efficient for LAZY propagation. Our experimental results, involving five real-world or benchmark Bayesian networks (BNs), demonstrate a reasonable improvement over LAZY propagation. Our architecture also models inference not involving evidence. After the CPTs identified by our architecture have been physically constructed, we show that each JT node has a sound, local BN that preserves all conditional independencies of the original BN. Exploiting inference not involving evidence is used to develop an automated procedure for building multiply sectioned BNs. It also allows direct computation techniques to answer localized queries in local BNs, for which the empirical results on a real-world medical BN are promising. Screen shots of our implemented system demonstrate the improvements in semantic knowledge.

Similar content being viewed by others

1 Introduction

Bayesian networks (BNs) (Castillo et al. 1997; Cowell et al. 1999; Hájek et al. 1992; Jensen 1996; Neapolitan 1990; Pearl 1988; Xiang 2002) are a clear and concise semantic modeling tool for managing uncertainty in complex domains. A BN consists of a directed acyclic graph (DAG) and a corresponding set of conditional probability tables (CPTs). The probabilistic conditional independencies (Wong et al. 2000) encoded in the DAG indicate that the product of the CPTs is a joint probability distribution (JPD). Although Cooper (1990) has shown that the complexity of exact inference in BNs is NP-hard, several approaches have been developed that apparently work quite well in practice. One approach, called multiply sectioned Bayesian networks (MSBNs) (Xiang 1996, 2002; Xiang and Jensen 1999; Xiang et al. 2006; Xiang et al. 2000, 1993), performs inference in sections of a BN. A second approach, called direct computation (DC) (Dechter 1996; Li and D’Ambrosio 1994; Zhang 1998), answers queries directly in the original BN. Although we focus on a third approach called join tree propagation, in which inference is conducted in a join tree (JT) (Pearl 1988; Shafer 1996) constructed from the DAG of a BN, our work has practical applications in all three approaches.

Shafer emphasizes that JT probability propagation is central to the theory and practice of probabilistic expert systems (Shafer 1996). JT propagation passes information, in the form of potentials, between neighbouring nodes in a systematic fashion. Unlike a CPT, a potential (Hájek et al. 1992) is not clearly interpretable (Castillo et al. 1997) as the probability values forming the distribution may have no recognizable pattern or structure. In an elegant review of traditional JT propagation (Shafer 1996), it is explicitly written that while independencies play an important role in semantic modeling, they do not play a major role in JT propagation. We argue that they should.

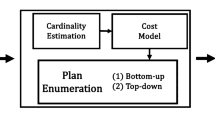

By iterating between semantic modeling and computing the actual probability distributions in computer memory, the JT propagation algorithm suggested by Madsen and Jensen (1999), called LAZY propagation, is a significant advancement in the study of JT propagation. Unlike traditional approaches, LAZY propagation maintains structure in the form of a multiplicative factorization of the potentials at each JT node and each JT separator. Thereby, when a node is ready to send its messages to a neighbour, the structure is modeled to remove irrelevant potentials from the multiplicative factorization by exploiting barren variables (Shachter 1986) and independencies induced by evidence. Next, physical computation is performed on the relevant potentials. Performing semantic modeling before physical computation improves the efficiency of inference, as the experimental results presented in Madsen and Jensen (1999) explicitly demonstrate. With respect to the computational efficiency at the sending node, any remaining independencies, in the relevant potentials, are immaterial. On the contrary, by ignoring these independencies, LAZY propagation is not able to precisely articulate the probability information being passed from one node to another. Passing potentials not only hinders the identification of barren variables at the receiving node, but also blurs the exact probability information remaining at each JT node when propagation finishes. More importantly, iterating between semantic modeling and physical computation essentially guarantees that LAZY performs JT probability propagation more slowly than necessary.

In this study, we propose the first JT propagation architecture for modeling two tasks of inference, one involving evidence and the other not. Our architecture, itself, does not construct the actual probability distributions stored in computer memory. Instead, it labels the probability information to be propagated more precisely in terms of CPTs. The notions of parent-set and elder-set are introduced in order to identify independencies that are not utilized in previous architectures. The identified independencies are very useful, since they allow us to maintain a CPT factorization after a variable is eliminated. When a JT node is ready to send its messages to a neighbour, it calls the IdentifyCPTMessages (ICM) algorithm to determine the CPT labels corresponding to the probability information to be sent to the neighbour, that is, without building the actual probability tables in computer memory. We prove the correctness of our architecture and also show that each JT node can identify its CPT labels in polynomial time. Screen shots of our implemented system demonstrate the improvements in semantic knowledge.

As discussed in Section 5, modeling the processing of evidence is beneficial as our architecture is able to identify relevant and irrelevant messages faster than it takes other propagation algorithms to construct the actual probability distributions in the memory. In a real-world BN for coronary heart disease (CHD) (Hájek et al. 1992), for instance, our architecture can identify all messages in the JT in less time than it takes to physically construct the distribution for one message. We make use of this semantic knowledge to generate three work schedules for LAZY propagation. Our first work schedule identifies irrelevant non-empty messages. This information is very useful, since LAZY’s lack of semantic knowledge could force a receiving node to wait for the physical construction of a message that is irrelevant to its subsequent message computation. Our second work schedule indicates the empty messages to be propagated from non-leaf JT nodes. This has merit as LAZY could force a receiving node to wait for the identification of an empty message that will not even be constructed, let alone sent. Our third work schedule also helps LAZY finish sooner by pointing out those variables that can be eliminated at a non-leaf node before the node has received any messages. Thus, our three work schedules save time, which is the measure used to evaluate inference methods in Madsen and Jensen (1999). As reported in Tables 2, 3 and 4, our empirical results, involving processing the evidence in five real-world or benchmark BNs, are encouraging.

Our architecture is also useful for modeling inference not involving evidence. As shown in Section 6, after the CPTs identified by our architecture have been physically constructed, say, by either of the methods in Madsen and Jensen (1999); Zhang (1998), each JT node has a sound, local BN preserving all conditional independencies of the original BN involving variables in this node. These local BNs are useful to the MSBN and to the DC techniques. We show that our JT propagation architecture is instrumental in developing an automated procedure for constructing a MSBN from a given BN. This is a worthwhile result, since several problems with the manual construction of a MSBN from a BN have recently been acknowledged (Xiang et al. 2000). We also suggest a method for exploiting localized queries in DC techniques. Practical experience has demonstrated that queries tend to involve variables in close proximity within a BN (Xiang et al. 1993). Our approach allows DC to process localized queries in local BNs. As a result, the experimental results involving a real-world BN for CHD show promise.

This paper is organized as follows. Section 2 contains definitions. Our semantic architecture for JT probability propagation is given in Section 3. Complexity analysis and the correctness of our architecture are established in Section 4. In Section 5, we model the task of inference involving evidence and show its usefulness in practice. In Section 6, we show the practical benefits of modeling inference not involving evidence. The conclusion is given in Section 7.

2 Definitions

Let U = {v 1,v 2,...,v m } be a finite set of variables. Each variable v i has a finite domain, denoted dom(v i ), representing the values v i can assume. For a subset \(X \subseteq U\), we write dom(X) for the Cartesian product of the domains of the individual variables in X. Each element x ∈ dom(X) is called a configuration of X.

Definition 1

A potential (Hájek et al. 1992) on dom(X) is a function ϕ on dom(X) such that ϕ(x) ≥ 0, for each configuration x ∈ dom(X), and at least one ϕ(x) is positive.

For example, five potentials ϕ(b), ϕ(f,g), ϕ(g,h), ϕ(f) and ϕ(g) are illustrated in Fig. 1.

Definition 2

For variable v i , a unity-potential 1(v i ) is a potential 1 assigning value 1.0 to each configuration of v i . For a subset \(X \subseteq U\), the unity-potential 1(X) is defined as the product of the unity-potentials 1(v i ), v i ∈ X.

For brevity, we refer to a potential as a probability distribution on X rather than dom(X), and we call X, not dom(X), its domain (Shafer 1996). Also, for simplified notation, we may write a set {v 1,v 2,...,v k } as v 1 v 2 ⋯ v k and use XY to denote X ∪ Y.

Definition 3

A JPD (Shafer 1996) on U, denoted p(U), is a potential on U that sums to one.

Definition 4

Given X ⊂ U, a CPT (Shafer 1996) for a variable \(v \not\in X\) is a distribution, denoted p(v|X), satisfying the following condition: for each configuration x ∈ dom(X), ∑ c ∈ dom(v) p( v = c | X = x ) = 1.0.

For example, given binary variables U = {a,b,...,k}, CPTs p(a), p(b|a), p(c), p(d|c), p(e|c), p(f|d,e), p(g|b,f), p(h|c), p(i|h), p(j|g,h,i) and p(k|g) are depicted in Fig. 2. The missing conditional probabilities can be obtained by definition, for instance, p(a = 0) = 0.504 and p(b = 0|a = 0) = 0.943.

Definition 5

The label of a probability distribution is the heading appearing above the probability column.

For example, the five probability distributions in Fig. 1 can be more precisely labeled as p(b), p(g|f), p(g,h), p(f) and p(g), respectively.

Definition 6

Let x,y and z denote arbitrary configurations of pairwise disjoint subsets X,Y,Z of U, respectively. We say X and Z are conditionally independent (Wong et al. 2000) given Y under the JPD p(U), denoted I(X,Y,Z), if p(X = x|Y = y,Z = z) = p(X = x|Y = y), whenever p(Y = y,Z = z) > 0. If Y = ∅, then we say X and Z are unconditionally independent. The independence I(X,Y,Z) can be equivalently written as \(p(X,Y,Z) = \frac{p(X,Y) \cdot p(Y,Z)}{p(Y)}\).

Definition 7

A Markov blanket (Pearl 1988) of a variable v ∈ U is any subset of variables X ⊂ U with \(v \not\in X\) such that the independence I(v,X,U − X − v) holds in p(U).

Definition 8

A BN (Pearl 1988) on U is a pair (D,C). D is a DAG on U. C is a set of CPTs defined as: for each variable v i ∈ D, there is a CPT for v i given its parents.

Note that, for each CPT in the BN, there is a corresponding CPT labe. The family of a variable in the DAG of a BN is the variable and its parents. We may use the terms BN and DAG interchangeably, if no confusion arises.

Example 1

One real-world BN for CHD (Hájek et al. 1992) is shown in Fig. 3, where the CPTs are given in Fig. 2. For pedagogical reasons, we have made the following minor adjustments: edge (a,f) has been removed; edges (c,f) and (g,i) have been replaced with edges (c,d), (c,e), (d,f), (e,f) and (g,j ), respectively, where d and e are dummy variables.

The coronary heart disease (CHD) BN (Hájek et al. 1992) in Example 1

Definition 9

A numbering ≺ of the variables in a DAG is called ancestral (Castillo et al. 1997), if the number corresponding to any variable v i is lower than the number corresponding to each of its children v j , denoted v i ≺ v j .

Definition 10

The moralization (Pearl 1988) of a DAG D is the undirected graph obtained by making the family of each variable in D complete.

Given pairwise disjoint subsets of variables X,Y,Z in a DAG D, the independence I(X,Y,Z) holds in D (Lauritzen et al. 1990), if Y separates X and Z in the moralization of D′, where D′ is the sub-DAG of D restricted to the edges (v i ,v j ) such that v i ,v j are in XYZ ∪ An(XYZ), and where An(XYZ) denotes the ancestors of XYZ in D. The independencies encoded in D indicate that the product of the CPTs in C is a JPD. For instance, the independencies encoded in the DAG of Fig. 3 indicate that the product of the CPTs in Fig. 2 is a JPD on U = {a,b,c,d,...,k}, namely, p(U) = p(a) ·p(b|a) ·p(c) ·p(d|c) ·...·p(k|g).

Probabilistic inference, also known as query processing, means computing p(X) or p(X|E = e), where X ∩ E = ∅ and \(X,E \subseteq U\). The evidence in the latter query is that E is instantiated to configuration e, while X contains target variables. Barren variables can be exploited in inference (Shachter 1986).

Definition 11

A variable is barren (Madsen and Jensen 1999), if it is neither an evidence nor a target variable and either it has no descendants or (recursively) all its descendants are barren.

As discussed in Section 6, probabilistic inference can be conducted directly in an entire BN or in parts of a BN. It can also be conducted in a JT (Jensen et al. 1990; Lauritzen and Spiegelhalter 1988; Madsen and Jensen 1999; Shafer and Shenoy 1990).

Definition 12

A JT (Pearl 1988; Shafer 1996) is a tree having sets of variables as nodes, with the property that any variable in two nodes is also in any node on the path between the two. The separator (Shafer 1996) S between any two neighbouring nodes N i and N j is S = N i ∩ N j . A JT node with only one neighbour is called a leaf (Shafer 1996); otherwise it is a non-leaf node.

Constructing a minimal JT is NP-complete (Yannakakis 1981). The reader is referred to (Becker and Geiger 2001; Kjaerulff 1990; Olesen and Madsen 2002) for discussions on constructing a JT from a BN. For example, one possible JT for the DAG in Fig. 3 is depicted in Fig. 4 (ignoring the messages for the moment). We label the nodes of this JT as ab, bfg, cdefgh, ghij and gk. The separators of this JT are b, fg, gh and g.

Although JT propagation can be performed serially, our discussion here is based on parallel computation (Kozlov and Singh 1999; Madsen and Jensen 1999; Shafer 1996). We provide a quick overview of the Shafer-Shenoy (SS) architecture for probability propagation in JTs (Shafer and Shenoy 1990; Shafer 1996). Each JT node N has exactly one potential ϕ(N), which is defined by the product of the unity-potential 1(N) and all of the BN CPTs assigned to N. The SS architecture allocates two storage registers in each separator, one for a message sent in each direction. Each node sends messages to all its neighbours, according to the following two rules. First, each node waits to send its message to a particular neighbour until it has received messages from all its other neighbours. Second, when a node N i is ready to send its message to a particular neighbour N j , it computes the message ϕ(N i ∩ N j ) by collecting all its messages from other neighbours, multiplying its potential ϕ(N i ) by these messages, and marginalizing the product to N i ∩ N j .

The LAZY architecture, proposed by Madsen and Jensen (1999), is more sophisticated than the SS architecture, in that it maintains a multiplicative factorization of potentials at each JT node and each JT separator. Modelling structure in this manner leads to significant computational savings (Madsen and Jensen 1999). For ease of exposition, evidence will not be considered here, but later, in Section 5. When LAZY propagation terminates, the potentials at each JT node N are a factorization of the marginal p(N) of p(U). Example 2 illustrates the messages passed in LAZY and the probability information that remains after propagation.

Example 2

Consider the CHD BN in Fig. 3 and one possible JT with assigned CPTs in Fig. 4. The distributions of the five potentials ϕ(b), ϕ(f,g), ϕ(g,h), ϕ(f) and ϕ(g) in Fig. 4, passed in LAZY propagation, are illustrated in Fig. 1. After propagation the marginal distribution at each node is:

3 Modeling inference not involving evidence

In this paper, we are interested in identifying the probability information passed during propagation and that which remains after propagation terminates. Although LAZY propagation can efficiently compute the five distributions in Fig. 1, it does not clearly articulate the semantics of the messages being propagated between JT nodes. For instance, it can be verified that the potential ϕ(f,g) in Fig. 1 is, in fact, the CPT p(g|f) of p(U). To address these problems, instead of developing yet another architecture for probabilistic inference, our architecture models probabilistic inference.

Our simple architecture identifies the probability information being passed between JT nodes. It uses five rules, given below, for filling the storage registers in the JT separators with CPT or unity-potential labels. The key to our architecture is the ICM algorithm used in Rule 4. Before starting JT propagation, each CPT label p(v|X) in the original BN is assigned to exactly one JT node N, where N contains the variables in {v} ∪ X.

-

Rule 1.

For every variable in every separator, allocate two empty storage registers, one for a label in each direction.

-

Rule 2.

Fix an ancestral numbering ≺ of the variables in the BN.

-

Rule 3.

Each node N i waits to identify its label(s) to a given neighbour until all of N i ’s other neighbours have filled their storage registers to N i .

-

Rule 4.

When a node N i is able to send the label(s) to a neighbour N j , it calls the ICM algorithm, passing its assigned CPT labels and all CPT labels received by the storage registers from its other neighbours to N i , as well as the variables N i − N j to be eliminated.

-

Rule 5.

For each CPT label p(v k |P k ) returned by ICM, fill the storage register for variable v k from N i to N j with label p(v k |P k ). For any variable v l with a storage register from N i to N j still empty, fill the register with the unity-potential label 1(v l ).

We illustrate Rules 1–3 with the following example.

Example 3

Consider the JT with assigned BN CPTs in Fig. 5. By Rule 1, the separator gh in Fig. 4, for instance, has four storage registers in Fig. 5, which initially are empty. For Rule 2, we fix the ancestral numbering as a ≺ b ≺ ... ≺ k. According to Rule 3, node b fg, for example, can only send labels to node ab after the message labels have been received by the storage registers from its other neighbours to bfg, namely, the storage registers from both nodes cdefgh and gk to bfg. In other words, Rule 3 means leaf JT nodes are ready to identify their messages immediately.

Unlike Fig. 4, our architecture precisely articulates the probability information being passed between JT nodes

The ICM algorithm is built upon the FindRelevantCPTs (FRC) and MaintainCPTLabels (MCL) algorithms.

Definition 13

Given a set C of CPT labels and a variable v i to be eliminated, the FindRelevantCPTs (FRC) algorithm returns the set C′ of CPT labels in C involving v i , where FRC first sorts the CPT labels in C′ according to ≺ in Rule 2, say \(C^\prime = \{p(v_i|P_i),p(v_1|P_1),\ldots,p(v_k|P_k)\}\), where v i ≺ v 1 ≺ ... ≺ v k .

Example 4

Suppose FRC is called with C = {p(c), p(d|c), p(e|c), p(f|d,e), p(h|c)} and variable d is to be eliminated. By ≺ in Example 3, FRC returns C′ = {p(d|c), p(f|d,e)} and not C′ = {p(f|d,e),p(d|c)}.

Definition 14

To eliminate v i , suppose FRC returns {p(v i |P i ),p(v 1|P 1),..., p(v k |P k )}. Consider a variable v j ∈ v i v 1 ⋯ v k . The parent-set of v j is P j .

Example 5

To eliminate c, suppose FRC returns {p(c), p(e|c), p(f|c,e), p(h|c)}. The parent-sets of variables c, e, f and h are {}, {c}, {c,e} and {c}, respectively.

Definition 15

Suppose FRC returns {p(v i |P i ), p(v 1|P 1),...,p(v k |P k )} for eliminating variable v i . We call C i = {v 1,...,v k } the child-set of v i , where v 1 ≺ ... ≺ v k in Rule 2.

Example 6

To eliminate c, suppose FRC returns {p(c), p(d|c), p(e|c), p(h|c)}. Besides p(c), variable c appears in the CPT labels for {h,d,e}. By ≺ in Example 3, e ≺ h, while d ≺ e. By definition, the child-set of v i = c is C i = {v 1 = d, v 2 = e, v 3 = h}.

To eliminate v i , given that FRC returns {p(v i |P i ),p(v 1|P 1),..., p(v k |P k )}, those CPT labels \(p(v_1|P_1),\ldots\), p(v k |P k ) of the variables v j ∈ C i are modified. While variable v i is deleted from P j , only certain variables preceding v j in ≺ of Rule 2 may be added to P j .

Definition 16

To eliminate v i , suppose FRC returns {p(v i |P i ),p(v 1|P 1),..., p(v k |P k )}. Consider a variable v j ∈ v i v 1 ⋯ v k . The family-set, denoted F j , of v j is v j P j .

Example 7

To eliminate c, suppose FRC returns {p(c), p(e|c), p(f|c,e), p(h|c)}. The family-sets of variables c, e, f and h are {c}, {c,e}, {c,e,f} and {c,h}, respectively.

Definition 17

Given a set of CPT labels {p(v 1|P 1),...,p(v m |P m )}, the directed graph defined by these CPT labels, called the CPT-graph, has variables F 1 ⋯ F m , and a directed edge from each variable in P k to v k , k = 1,...,m.

Example 8

Consider the set of CPT labels {p(a),p(c|a),p(d),p(e|b),p(g|d), p(h),p(j|c,d,e), p(k|c,d,e,h,j),p(l|c,d,e,h,i,j,k),p(m|j)}. The CPT-graph is shown in Fig. 6. Note that variables b and i do not have CPT labels in the given set.

Definition 18

To eliminate v i , suppose FRC returns {p(v i |P i ),p(v 1|P 1),..., p(v k |P k )}. The child-set C i = {v 1,...,v k } is defined by ≺ in Rule 2. For each variable v j ∈ C i , the elder-set E j is defined as E 1 = F i − v i , E 2 = (E 1 F 1) − v i , E 3 = (E 2 F 2) − v i , ..., E k = (E k − 1 F k − 1) − v i .

For simplified notation, we will write (E j F j ) − v i as E j F j − v i . Definition 18 says that the elder-set E j of variable v j in C i is F i together with the family-sets of the variables in C i preceding v j , with v i subsequently removed.

Example 9

Consider the set of CPT labels {p(a),p(c|a),p(d),p(e|b),p(f|c,d), p(g|d),p(h),p(j|e,f), p(k|d,f,h),p(l|f,i),p(m|j)}. The CPT-graph defined by these CPT labels is shown in Fig. 7. Let us assume that ≺ in Rule 2 orders this subset of variables in alphabetical order. Consider eliminating variable v i = f. The call to FRC returns {p(f|c,d), p(j|e,f), p(k|d,f,h), p(l|f,i)}. With respect to ≺ in Rule 2, the elder-set of each variable in the child-set C i = {v 1 = j,v 2 = k,v 3 = l} is E 1 = {c,d}, E 2 = {c,d,e,j} and E 3 = {c,d,e,h,j,k}, as depicted in Fig. 7.

The MCL algorithm now can be introduced.

For simplified notation, we will write (E j P j ) − v i as E j P j − v i .

Example 10

To eliminate variable v i = f, suppose the set of CPT labels obtained by FRC in Example 9 is passed to MCL. For ≺ in Example 9, consider variable v 3 = l. By definition, E 3 = {c,d,e,h,j,k} and P 3 = {f,i}. Then p(l|f,i) is adjusted to p(l|c,d,e,h,i,j,k), as E 3 P 3 − v i is {c,d,e,h,i,j,k}. Similarly, p(k|d,f,h) is changed to p(k|c,d,e,h,j), and p(j|e,f) is modified to p(j|c,d,e). By Definition 17, the CPT-graph defined by the CPT labels remaining after the elimination of variable f is shown in Fig. 6.

Lemma 1

According to our architecture, let \(C^{\prime\prime} = \{p(v_1|P_1),\ldots,p(v_k|P_k)\}\) be the set of CPT labels returned by the MCL algorithm. Then the CPT-graph defined by C″ is a DAG.

Proof

For any BN CPT p(v j |P j ), by Rule 2, v ≺ v j , where v ∈ P j . Moreover, by the definition of elder-set, v ≺ v j , where v ∈ E j . Since the parent set P j of v j is modified as P j E j − v i , for any CPT label p(v j |P j ) returned by the MCL algorithm, it is still the case that v ≺ v j , where v ∈ P j . Therefore, the CPT-graph defined by C″ is acyclic, namely, it is a DAG. □

We now present the ICM algorithm.

We use two examples to illustrate the subtle points of all five rules in our architecture.

Example 11

Let us demonstrate how our architecture determines the CPT labels p(g) and p(h|g) in the storage registers for g and h from cdefgh to ghij, shown in Fig. 5. By Rule 2, we use ≺ in Example 3. By Rule 4, cdefgh has collected the CPT label p(g|f) in the storage register of g from bfg to cdefgh, but not 1(f) as this is not a CPT label, and calls ICM with C = {p(c),p(d|c),p(e|c), p(f|d,e),p(g|f),p(h|c)} and X = {c,d,e,f}. For pedagogical purposes, let us eliminate the variables in the order d,c,e,f. ICM calls FRC, passing it C and variable d. FRC returns {p(d|c),p(f|d,e)}, which ICM initially assigns to C′ and subsequently passes to MCL. Here v i = d, C i = {v 1 = f}, P 1 = {d,e} and E 1 = {c}. As E 1 P 1 − v i is {c,e}, MCL returns {p(f|c,e)}, which ICM assigns to C″. Next, the set C of CPT labels under consideration in ICM is adjusted to be C = {p(c),p(e|c),p(f|c,e),p(g|f),p(h|c)}. For variable c, the call to FRC results in C′ = {p(c),p(e|c), p(f|c,e),p(h|c)}. To eliminate v i = c, the elder-set of each variable in the child-set C i = {v 1 = e,v 2 = f,v 3 = h} is E 1 = {}, E 2 = {e} and E 3 = {e,f}. Moreover, the parent-set of each variable in the child-set is P 1 = {c}, P 2 = {c,e} and P 3 = {c}. In MCL, then p(h|c) is adjusted to p(h|e,f), as E 3 P 3 − v i is {e,f}. Similarly, p(f|c,e) is changed to p(f|e) and p(e|c) is modified to p(e). Therefore, MCL returns the set {p(e),p(f|e),p(h|e,f)} of CPT labels, which ICM assigns to C″. Then C is updated to be C = {p(e),p(f|e),p(g|f),p(h|e,f)}. After eliminating variable e, the set of labels under consideration is modified to C = {p(f), p(g|f), p(h|f)}. Moreover, after considering the last variable f, the set of labels under consideration is C = {p(g), p(h|g)}. ICM returns C to cdefgh. By Rule 5, cdefgh places the CPT labels p(g) and p(h|g) in the storage registers of g and h from cdefgh to ghij, respectively.

Example 12

Now let us show how our architecture determines the labels p(f) and 1(g) in the storage registers for f and g from cdefgh to bfg, shown in Fig. 5. By Rule 2, we use ≺ in Example 3. By Rule 4, cdefgh does not collect the unity-potential labels 1(g) and 1(h) in the storage registers of g and h from ghij and calls ICM with C = {p(c), p(d|c), p(e|c), p(f|d,e), p(h|c)} and X = {c,d,e,h}. For simplicity, let us eliminate the variables in the order d,c,e,h. Similar to Example 11, the set of CPT labels under consideration, after the elimination of variables c,d,e, is C = {p(f), p(h|f)}. After eliminating the last variable h, the set of labels under consideration is C = {p(f)}. ICM returns C to cdefgh. By Rule 5, cdefgh places the CPT label p(f) in the storage register of f from cdefgh to bfg and places the unity-potential label 1(g) in the empty storage register for variable g from cdefgh to bfg.

All of the identified CPT messages for Fig. 5 are illustrated in Fig. 8, which is a screen shot of our implemented system. For simplified discussion, we will henceforth speak of messages passed between JT nodes with the separators understood.

Identification of the CPT messages in Fig. 5

Example 13

Let us review Example 11 in terms of equations:

The derivation of p(g) and p(h|g) is not correct without independencies. For instance, the independencies I(d,c,e) and I(f,de,c) are necessary when moving from (2) to (3). In fact, without the unconditional independence I(b, ∅ ,f), the message p(g|f), from bfg to cdefgh, used in (1), is not correct either:

Thus, the importance of showing that we can identify these independencies and utilize them, as shown above, is made obvious.

4 Complexity and correctness

Here we establish the time complexity of the ICM algorithm and the correctness of our architecture for modeling inference not involving evidence.

Lemma 2

Let n be the number of CPT labels in C′ given as input to the MCL algorithm for eliminating variable v i . The time complexity of MCL is O(n).

Proof

Let {p(v i |P i ),p(v 1|P 1),...,p(v n − 1|P n − 1)} be the input set C′ of CPT labels given to MCL. The elder-sets E 1,...,E n − 1 and parent-sets P 1,...,P n − 1 can be obtained in one pass over C′. Given that C′ has n labels, the time complexity to determine the required parent-sets and elder-sets is O(n). Similarly, for each label in {p(v 1|P 1),...,p(v n − 1|P n − 1)}, the parent-set is modified exactly once. Hence, this for-loop executes n − 1 times. Therefore, the time complexity of MCL is O(n). □

Our main complexity result, given in Theorem 1, is that the CPT labels sent from a JT node can be identified in polynomial time.

Theorem 1

In the input to the ICM algorithm, let n be the number of CPT labels in C , and let X be the set of variables to be eliminated. The time complexity of ICM is O(n 2 log n).

Proof

As the number of CPT labels in C is n, the maximum number of variables to be eliminated in X is n. The loop body is executed n times, once for each variable in X. Recall that the loop body calls the FRC and MCL algorithms. Clearly, FRC takes O(n) time to find those CPT labels involving v i . According to ≺ in Rule 2, these CPT labels can be sorted using the merge sort algorithm in O(n log n) time (Cormen et al. 2001). Thus, FRC has time complexity O(n log n). By Lemma 2, MCL has time complexity O(n). Thus, the loop body takes O(n log n) time. Therefore, the time complexity of the ICM algorithm is O(n 2 log n). □

We now turn to the correctness of our architecture. The next result shows that certain independencies involving elder-sets hold in any BN.

Lemma 3

Let (D,C) be a BN defining a JPD p(U). Given the set C of CPT labels and any variable v i ∈ D, the FRC algorithm returns {p(v i |P i ),p(v 1|P 1),...,p(v k |P k )}, where the variables in the child-set C i = {v 1,...,v k } are written according to ≺ in Rule 2. For each v j ∈ C i with elder-set E j , the independencies I(v i , E j , P j − v i ) and I(v j , P j ,E j ) hold in the JPD p(U).

Proof

Observe that the parents, children, and family of any variable v in D are precisely the parent-set, child-set and family-set of v, which are defined by C′, respectively. Let us first show that I(v j ,P j ,E j ) holds for j = 1,...,k. Pearl (1988) has shown that I(v,P v ,N v ) holds for every variable v in a BN, where N v denotes the set of all non-descendants of v in D. By definition, no variable in the elder-set E j can be a descendant of v j in D. Thereby, \(E_j \subseteq N_j\). By the decomposition axiom of probabilistic independence (Pearl 1988; Wong et al. 2000), the JPD p(U) satisfying I(v j ,P j ,N j ) logically implies that p(U) satisfies I(v j ,P j ,E j ).

We now show that I(v i ,E j ,P j − v i ) holds, for j = 1,...,k. According to the method in Section 2 for testing independencies, we write I(v i ,E j ,P j − v i ) as I(v i ,E j ,P j − v i − E j ) and determine the ancestral set of the variables in I(v i ,E j ,P j − v i − E j ) as An(v i ) ∪ An(E j ) ∪ An(P j − v i − E j ), which is the same as An(E j P j ), since v i ∈ P j . Note that v j , and any child of v i in D succeeding v j in ≺ of Rule 2, are not members in the set An(E j P j ). Hence, in the constructed sub-DAG D′ of DAG D onto An(E j P j ), the only directed edges involving v i are those from each variable in P i to v i and from v i to every child preceding v j . By Corollary 6 in Pearl (1988), E j is a Markov blanket of v i in D′. By definition, I(v i ,E j ,U − E j − v i ) holds in p(U). By the decomposition axiom, p(U) satisfies I(v i ,E j ,P j − v i ). □

Let us first focus on the elimination of just one variable. We can maintain a CPT factorization after v i ∈ X is eliminated, provided that the corresponding elder-set independencies are satisfied by the JPD p(U). Note that, as done previously, we write (E j P j ) − v i , (E j F k ) − v i and (E j F j ) − v i v j more simply as E j P j − v i , E j F k − v i and E j F j − v i v j , respectively.

Lemma 4

Given the output {p(v i |P i ),p(v 1|P 1),...,p(v k |P k )} of FRC to eliminate variable v i in our architecture. By Rule 2, v 1 ≺ ... ≺ v k in the child-set C i = {v 1,...,v k } of v i . For each variable v j ∈ C i with elder-set E j , if the independencies I(v i ,E j ,P j − v i ) and I(v j ,P j ,E j ) hold in the JPD p(U), then

Proof

We first show, by mathematical induction on the number k of variables in C i , that the product of these relevant CPTs can be rewritten as follows:

(Basic step: j = 1). By definition, E 1 = P i . Thus, p(v i |P i ) ·p(v 1|P 1) = p(v i |E 1) ·p(v 1|P 1). By definition,

When j = 1, by assumption, I(v i ,E 1,P 1 − v i ) and I(v 1,P 1,E 1) hold. We can apply these independencies consecutively by multiplying the numerator and denominator of the right side of Eq. 4 by p(E 1 P 1 − v i ), namely,

By definition,

By the product rule (Xiang 2002) of probability, which states that p(X,Y|Z) = p(X|Y,Z) ·p(Y|Z) for pairwise disjoint subsets X,Y and Z, Eq. 6 is expressed as:

(Inductive hypothesis: j = k − 1, k ≥ 2). Suppose

(Inductive step: j = k). Consider the following product

By the inductive hypothesis,

Since E k = E k − 1 F k − 1 − v i , (10) can be rewritten as

It can easily be shown that

by following (4)–(7) replacing j = 1 with j = k. Substituting (12) into (11), the desired result follows:

Having rewritten the factorization of the relevant CPTs, we now consider the elimination of variable v i as follows:

By (13) and (14), we obtain the desired result

□

Equation (15) explicitly demonstrates the form of the factorization in terms of CPTs after variable v i is eliminated. Hence, the right-side of (15) is used in the MCL algorithm to adjust the label of the CPT for each variable in v i ’s child-set. That is, \(\prod_{j=1}^k\) corresponds to the for-loop construct running from j = k,...,1, while p(v j |E j P j − v i ) corresponds to the statement P j = E j P j − v i for one iteration of the for-loop.

Example 14

Recall eliminating variable d in (2). By Example 11, v i = d, C i = {v 1 = f}, P 1 = {d,e} and E 1 = {c}. By Lemma 3, I(v i ,E j ,P j − v i ) and I(v j ,P j ,E j ) hold, namely, I(d,c,e) and I(f,de,c). By Lemma 4, ∑ d p(d|c) ·p(f|d,e) is equal to p(f|c,e), as shown in (3).

We now establish the correctness of our architecture by showing that our messages are equivalent to those of the SS architecture (Shafer and Shenoy 1990; Shafer 1996). Since their architecture computes the probability distributions stored in computer memory, while our architecture determines labels, let us assume that the label in each storage register of our architecture is replaced with its corresponding probability distribution.

Theorem 2

Given a BN D and a JT for D . Apply our architecture and also the SS architecture. For any two neighbouring JT nodes N i and N j , the product of the distributions in the storage registers from N i to N j in our architecture is the message in the storage register from N i to N j in the SS architecture.

Proof

When a JT node N i receives a message from a neighbour N j , it is also receiving, indirectly, information from the nodes on the other side of N j (Shafer 1996). Thus, without a loss of generality, let the JT for D consist of two nodes, N 1 and N 2. Consider the message from N 2 to N 1. Let Z be those variables v m of N 2 such that the BN CPT p(v m |P m ) is assigned to N 2. The variables to be eliminated are X = N 2 − N 1. Let Y = N 2 − (XZ). Following the discussion in Section 2, the SS message ϕ(N 2 ∩ N 1) from N 2 to N 1 is:

Consider the first variable v i of X eliminated from \(\prod_{v_m \in Z}p(v_m|P_m)\). By Lemma 3, for each v j ∈ C i , the independencies I(v i , E j , P j − v i ) and I(v j , P j ,E j ) hold in p(U). By Lemma 4, the exact form of the CPTs is known after the elimination of v i . By (Shafer 1996), the product of all remaining probability tables in the entire JT is the marginal distribution p(U − v i ) of the original joint distribution p(U). Let us more carefully examine the remaining CPTs in the entire JT. First, each variable in U − v i has exactly one CPT. It follows from Lemma 1 that the CPT-graph defined by all CPT labels remaining in the JT is a DAG. Therefore, the CPTs for the remaining variables U − v i are a BN defining the marginal distribution p(U − v i ) of the original JPD p(U). By the definition of probabilistic conditional independence, an independence holding in the marginal p(U − v i ) necessarily means that it holds in the joint distribution p(U). Thereby, we can recursively apply Lemmas 1, 3 and 4 to eliminate the other variables in X. The CPTs remaining from the marginalization of X from \(\prod_{v_m \in Z}p(v_m|P_m)\) are exactly the distributions of the CPT labels output by the ICM algorithm when called by N 2 to eliminate X from C = { p(v m |P m ) | v m ∈ Z}. In our architecture, the storage registers from N 2 to N 1 for those variables in Y are still empty. Filling these empty storage registers with unity-potentials 1(v l ), v l ∈ Y, follows directly from the definition of the unity-potential 1(Y). Therefore, the product of the distributions in the storage registers from N 2 to N 1 in our architecture is the message in the storage register from N 2 to N 1 in the SS architecture. □

Example 15

It can be verified that the identified CPTs shown in Fig. 5 are correct. In particular, the SS message ϕ(f,g) from cdefgh to bfg is p(f) ·1(g).

Corollary 1

The marginal distribution p(N) for any JT node N can be computed by collecting all CPTs sent to N by N ’s neighbours and multiplying them with those CPTs assigned to N.

5 Modeling inference involving evidence

Pearl (1988) emphasizes the importance of structure by opening his chapter on Markov and BNs with the following quote:

Probability is not really about numbers; it is about the structure of reasoning.

- G. Shafer

In this section, we extend our architecture to model the processing of evidence and show that it can still identify CPT messages. Modeling the processing of evidence is faster than the physical computation needed for evidence processing. By allowing our architecture to take full responsibility for modeling structure, we empirically demonstrate that LAZY can finish its work sooner.

It is important to realize that the processing of evidence E = e can be viewed as computing marginal distributions (Schmidt and Shenoy 1998; Shafer 1996; Xu 1995). For each JT node N, compute the marginal p(NE), from whence p(N − E,E = e) can be obtained. The desired distribution p(N − E|E = e) can then be determined via normalization.

Rules 6 and 7 extend our architecture to model the processing of evidence.

-

Rule 6.

Given evidence E = e. For each node N in the JT, set N = N ∪ E. On this augmented JT, apply Rules 1–5 of our architecture for modeling inference not involving evidence.

-

Rule 7.

For each evidence variable v ∈ E, change each occurrence of v in an assigned or propagated CPT label from v to v = ε, where ε is the observed value of v.

We now show the correctness of our architecture for modeling inference involving evidence.

Theorem 3

Given a BN D , a JT for D , and observed evidence E = e. After applying our architecture, extended by Rules 6 and 7 for modeling inference involving evidence, the probability information at each JT node N defines p(N − E,E = e).

Proof

Apply Rule 6. By Corollary 1, the probability information at each node N is p(NE). By selecting those configurations agreeing with E = e in Rule 7, the probability information at each node is p(N − E,E = e). □

Example 16

Consider evidence b = 0 in the real-world BN for CHD in Fig. 3. With respect to the CHD JT in Fig. 5, the CPT messages to be propagated are depicted in Fig. 9.

The next example involves three evidence variables.

Example 17

Consider the JT with assigned BN CPTs in Fig. 10 (top). Given evidence a = 0, c = 0, f = 0, the LAZY potentials propagated towards node de are shown (Madsen and Jensen 1999). In comparison, all CPT labels identified by our architecture are depicted in Fig. 10 (bottom).

We can identify the labels of the messages faster than the probability distributions themselves can be built in computer memory.

Example 18

Given evidence b = 0 in Example 16, constructing the distribution p(b = 0) in memory for the message from node ab to bfg required 1.813 ms. Identifying all CPT messages in Fig. 9 required only 0.954 ms.

Although semantic modeling can be done significantly faster than physical computation, the LAZY architecture applies these two tasks iteratively. The consequence, as the next two examples show, is that semantic modeling must wait while the physical computation catches-up.

Example 19

Madsen and Jensen (1999) Consider the BN (left) and JT with assigned CPTs (right) in Fig. 11. Suppose evidence d = 0 is collected. Before node bcdef can send its messages to node efg, it must first wait for the message ϕ(b,c) to be physically constructed at node abc as:

Upon receiving distribution ϕ(b,c) at node bcdef, LAZY exploits the independence I(bc,d,ef) induced by the evidence d = 0 to identify that ϕ(b,c) is irrelevant to the computation for the messages to be sent from b cdef to efg.

Madsen and Jensen (1999) A BN (left) and a JT with assigned CPTs (right). LAZY exploits the independence I(bc,d,ef) induced by evidence d = 0 only after the irrelevant potential ϕ(b,c) has been physically constructed at abc and sent to bcdef

In the exploitation of independencies induced by evidence, Example 19 explicitly demonstrates that LAZY forces node bcdef to wait for the construction of the irrelevant message ϕ(b,c).

Example 20

Madsen and Jensen (1999) Consider the BN (left) and JT with assigned CPTs (right) in Fig. 12. Before node acde can send its messages to node ef, it must first wait for the message ϕ(c,d|a) to be constructed in computer memory at node abcd as:

Upon receiving distribution ϕ(c,d|a) at acde, LAZY exploits barren variables c and d to identify that ϕ(c,d|a) is irrelevant to the computation for the messages to be sent from acde to ef.

Madsen and Jensen (1999) A BN (left) and a JT with assigned CPTs (right). LAZY exploits barren variables c and d only after the irrelevant potential ϕ(c,d|a) has been physically constructed at abcd and sent to acde

In the exploitation of barren variables, Example 20 explicitly demonstrates that LAZY forces node acde to wait for the physical construction of the irrelevant message ϕ(c,d|a). These unnecessary delays in Examples 19 and 20 are inherently built into the main philosophy of LAZY propagation (Madsen and Jensen 1999):

The bulk of LAZY propagation is to maintain a multiplicative [factorization of the CPTs] and to postpone combination of [CPTs]. This gives opportunities for exploiting barren variables . . . during inference. . . . Thereby, when a message is to be computed only the required [CPTs] are combined.

The notion of a barren variable, however, is relative. For instance, in Example 20, variables c and d are not barren at the sending node abcd, but are barren at the receiving node acde. Thus, the interweaving of modeling structure and physical computation underlay these unnecessary delays.

We advocate the uncoupling of these two independent tasks. More specifically, we argue that modeling structure and computation of the actual probability distributions in computer memory should be performed separately. As our architecture can model structure faster than the physical computation can be performed, it can scout the structure in the JT and organize the collected information in three kinds of work schedules.

Our first work schedule pertains to non-empty messages that are irrelevant to subsequent message computation at the receiving node, as evident in Examples 19 and 20. More specifically, for three distinct nodes N i , N j and N k such that N i and N j are neighbours, as are N j and N k , our first work schedule indicates that the messages from N i to N j are irrelevant in the physical construction of the messages to be sent from N j to N k .

Example 21

Given evidence d = 0 in Example 19, the work schedule in Fig. 13 indicates that the messages from node abc are irrelevant to node bcdef in the physical construction of the messages to be sent from bcdef to node efg. Now reconsider Example 20. The work schedule in Fig. 14 indicates that the messages from node abcd are irrelevant to node acde in the physical construction of the messages to be sent from acde to node ef.

Our second work schedule indicates that an empty message will be propagated from a non-leaf node to a neighbour. (As LAZY could begin physical computation at the leaf JT nodes, we focus on the non-leaf JT nodes.) For instance, given evidence b = 0 in the extended CHD BN and JT with assigned CPTs in Fig. 15, the work schedule in Fig. 16 indicates that bfg will send ab an empty message. Our second work schedule saves LAZY time.

Our architecture can identify all empty messages sent by non-leaf nodes given evidence b = 0 in the extended CHD JT of Fig. 15 (right)

Example 22

Recall the extended CHD BN and JT in Fig. 15. Given evidence b = 0, LAZY determines that bfg will send ab an empty message only after bfg receives the probability distributions ϕ(f) from cdefgh. Physically constructing ϕ(f) involves eliminating the four variables c,d,e,h from the five potentials p(c),p(d|c),p(e|c),p(f|d,e), p(h|c). In less time than is required for this physical computation, our architecture has generated the work schedule in Fig. 16. Without waiting for LAZY to eventually consider bfg, node ab can immediately send its messages {p(a|b = 0),p(b = 0)}to node al, which, in turn, can send its respective messages {p(b = 0),p(l|b = 0)} to node lm.

Last, but not least, our architecture can generate another type of beneficial work schedule. This third work schedule indicates the variables that can be eliminated at a non-leaf node with respect to the messages for a particular neighbour, before the sending node has received any messages. Given evidence b = 0 in the CHD JT of Fig. 5, the work schedule in Fig. 17 indicates that, for instance, non-leaf node cdefgh can immediately eliminate variables c,d,e in its construction of the message to ghij. Assisting LAZY to eliminate variables early saves time, as the next example clearly demonstrates.

Given evidence b = 0 in the CHD JT of Fig. 5, our architecture identifies those variables that can be eliminated at non-leaf nodes before any messages are received

Example 23

Given evidence b = 0 in the CHD JT of Fig. 5, consider the message from cdefgh to ghij. LAZY waits to eliminate variables c, d, e, f at cdefgh until cdefgh receives its messages {ϕ(b = 0),ϕ b = 0(f,g)} from bfg, which, in turn, has to wait for message ϕ(b = 0) from ab. Thus, LAZY computes the message from cdefgh to ghij as:

In contrast, the work schedule of Fig. 17 allows LAZY to immediately eliminate variables c,d,e as:

The benefit is that when the LAZY messages {ϕ(b = 0),ϕ b = 0(f,g)} from bfg are received, only variable f remains to be eliminated. That is to say, LAZY’s (18) is simplified to

In our CHD example, LAZY will eliminate c,d,e twice at node cdefgh, once for each message to bfg and ghij. This duplication of effort is a well-known undesirable property in JT propagation (Shafer 1996). Utilizing our third work schedule, such as in Fig. 17, to remove this duplication remains for future work.

We conclude this section by providing some empirical results illustrating the usefulness of our JT architecture. In particular, we provide the time taken for LAZY propagation and the time saved by allowing our architecture to guide LAZY. The source code for LAZY propagation was obtained from (Consortium 2002), as was the code for generating a JT from a BN. Table 1 describes five real-world or benchmark BNs and their corresponding JTs used in the experiments.

We first measure the time cost of performing inference not involving evidence. Table 2 shows the time taken for : (i) LAZY propagation by itself, (ii) LAZY propagation guided by our architecture, (iii) time saved by using our approach, and (iv) time saved as a percentage. All propagation was timed in seconds using a SGI R12000 processor. Note that our architecture lowered the time taken for propagation in all five real-world or benchmark BNs. The time percentage saved ranged from 11.76% to 69.26% with an average percentage of 37.02%.

Next, we measure the time cost of performing inference involving evidence. As shown in Tables 3 and 4, approximately nine percent and eighteen percent of the variables in each BN are randomly instantiated as evidence variables, respectively. Note that once again our architecture lowered the time taken for propagation in all five real-world or benchmark BNs. In Table 3, the time percentage saved ranged from 0.42% to 15.48% with an average percentage of 6.42%. In Table 4, the time percentage saved ranged from 1.86% to 14.89% with an average percentage of 6.12%.

It is worth mentioning that our architecture is more useful with fewer evidence variables. One reason for this is that the cost of physical computation is lowered with collected evidence, since the probability tables to be propagated are much smaller. For instance, LAZY takes over 882 s to perform propagation not involving evidence on the Mildew BN, yet only takes about 15 s to perform propagation if 6 variables are instantiated as evidence variables. Therefore, LAZY needs more help in the case of processing no or fewer evidence variables and this is precisely when our architecture is most beneficial.

6 Local BNs and practical applications

After applying our architecture, as described in Section 3, to identify the probability information being passed in a JT, let us apply either method from (Madsen and Jensen 1999; Zhang 1998) to physically construct the identified CPT messages. That is, we assume that the label in each storage register of our architecture is replaced with its corresponding probability distributions. Unlike all previous JT architectures (Jensen et al. 1990; Lauritzen and Spiegelhalter 1988; Madsen and Jensen 1999; Shafer and Shenoy 1990), in our architecture, after propagation not involving evidence, each JT node N has a sound, local BN preserving all conditional independencies of the original BN involving variables in N. Practical applications of local BNs include an automated modeling procedure for MSBNs and a method for exploiting localized queries in DC techniques.

Lemma 5

Given a BN D and a JT for D , apply Rules 1–5 in our architecture. For any JT node N , the CPTs assigned to N , together with all CPTs sent to N from N ’s neighbours, define a local BN D N .

Proof

As previously mentioned, when a JT node N i receives a message from a neighbour N j , it is also receiving, indirectly, information from the nodes on the other side of N j (Shafer 1996). Thus, without a loss of generality, let the JT for D consist of two nodes, N 1 and N 2. We only need focus on variables in the separator N 1 ∩ N 2. Consider a variable v m in N 1 ∩ N 2 such that the BN CPT p(v m |P m ) is assigned to N 1. Thus, v m is without a CPT label with respect to N 2. In N 1’s call to ICM for its messages to N 2, variable v m is not in X = N 1 − N 2, the set of variables to be eliminated, Thus, ICM will return a CPT label for v m to N 1. By Rule 5, N 1 will place this CPT label in the empty storage register for v m from N 1 to N 2. Therefore, after propagation, variable v m has a CPT label at node N 2. Conversely, consider N 2’s call of ICM for its messages to N 1. Since the BN CPT p(v m |P m ) is assigned to N 1, there is no CPT label for v m passed to ICM. Thus, ICM does not return a CPT label for v m to N 2. By Rule 5, N 2 fills the empty storage register of v m from N 2 to N 1 with the unity-potential label 1(v m ). Therefore, after propagation, both N 1 and N 2 have precisely one CPT label for v m . Moreover, for each node, it follows from Lemma 1 that the CPT-graph defined by the assigned CPTs and the message CPTs is a DAG. By definition, each JT node N has a local BN D N . □

Example 24

Recall the JT with assigned CPTs in Fig. 5. Each JT node has a local BN after propagation not involving evidence, as depicted by a screen shot of our implemented system in Fig. 18.

Theorem 4

Given Lemma 5. If an independence I(X,Y,Z) holds in a local BN D N for a JT node N , then I(X,Y,Z) holds in the original BN D.

Proof

We will show the claim by removing variables in the JT towards the node N. Let v i be the first variable eliminated in the JT for D. After calling MCL, let D′ be the CPT-graph defined by the CPTs remaining at this node, together with the CPTs assigned to all other nodes. It follows from Lemma 1 that D′ is a DAG. We now establish that I(X,Y,Z) holding in D′ implies that I(X,Y,Z) holds in D. Note that since v i is eliminated, \(v_i \not\in XYZ\). By contraposition, suppose I(X,Y,Z) does not hold in D. By the method for testing independencies in Section 2, let D m be the moralization of the sub-DAG of D onto XYZ ∪ An(XYZ). There are two cases to consider. Suppose v i ∉ An(XYZ). In this case, I(X,Y,Z) does not hold in D′ as D m is also the moralization of the sub-DAG of D′ onto XYZ ∪ An(XYZ). Now, suppose v i ∈ An(XYZ). Let \(D^\prime_m\) be the moralization of the sub-DAG of D′ onto XYZ ∪ An(XYZ). By assumption, there is an undirected path in D m from X to Z, which does not involve Y. If this path does not involve v i , this same path must exist in \(D^\prime_m\) as the MCL algorithm only removes those edges involving v i . Otherwise, as \(v_i \not \in XYZ\), this path must include edges (v,v i ) and \((v_i,v^\prime)\), where \(v,v^\prime\in P_iC_i\). Since the moralization process will add an undirected edge between every pair of variables in P i , and since the MCL algorithm will add a directed edge from every variable in P i to every variable in C i and also from every variable v j in C i to every other variable v k in C i such that v j ≺ v k in Rule 2, it is necessarily the case that (v,v′) is an undirected edge in the moralization \(D_m^\prime\) of D′. As there is an undirected path in \(D_m^\prime\) from X to Z not involving Y, by definition, I(X,Y,Z) does not hold in D′. Thus, every independence I(X,Y,Z) encoded in D′ is encoded in the original BN D. Therefore, by recursively eliminating all variables \(v \not\in N\), all conditional independencies encoded in D N are also encoded in D. □

Example 25

In Fig. 18, it is particularly illuminating that our architecture correctly models I(g,c,h), the conditional independence of g and h given c, in the local BN for cdefgh, yet at the same time correctly models I(g,h,i), the conditional independence of g and i given h, in the local BN for ghij.

Although unconditional independencies of the original BN might not be saved in the local BNs, Theorem 5 shows that conditional independencies are.

Theorem 5

Given Lemma 5. If an independence I(X,Y,Z) holds in the original BN D , where Y ≠ ∅ and XYZ is a subset of a JT node N , then I(X,Y,Z) holds in the local BN D N .

Proof

Let v i ∈ U be the first variable eliminated by our architecture. Suppose FRC returns the set of CPT labels \(C^\prime = \{p(v_i|P_i),p(v_1|P_1),\ldots,p(v_k|P_k)\}\). By ≺ in Rule 2, the child-set of v i is C i = {v 1,...,v k }. The set of all variables appearing in any label of C′ is E k F k . Clearly, the CPT-graph D′ defined by C′ is a DAG. By Corollary 6 in Pearl (1988), variable v i is independent of all other variables in U given E k F k − v i . Thus, we only need to show that any conditional independence I(X,Y,Z) holding in D′ with \(v_i \not\in XYZ\) is preserved in D″, where D″ is the CPT-graph defined by the set C″ of CPT labels output by MCL given C′ as input. By Lemma 1, D″ is a DAG. By contraposition, suppose a conditional independence I(X,Y,Z) does not hold in D″. According to the method for testing independencies in Section 2, let \(D^\prime_m\) be the moralization of the sub-DAG of D′ onto XYZ ∪ An(XYZ). There are two cases to consider. Suppose \(v_i \not\in An(XYZ)\). In this case, I(X,Y,Z) does not hold in D′ as \(D^\prime_m\) is also the moralization of the sub-DAG of D″ onto XYZ ∪ An(XYZ). Now, suppose v i ∈ An(XYZ). By definition, the moralization process makes families complete. Observe that the family of each variable v m in D′ is, by definition, the family-set of v m in the CPT label p(v m |P m ) of C′. For every variable v m in the sub-DAG of D′ onto XYZ ∪ An(XYZ), v i is a member of the family-set F m . Therefore, there is an edge (v i ,v) in \(D^\prime_m\) between v i and every other variable v in \(D^\prime_m\). Then, in particular, there is a path (x,v i ),(v i ,z) from every variable x ∈ X to every variable z ∈ Z. Since \(v_i \not\in Y\), by definition, I(X,Y,Z) does not hold in D′. Hence, all conditional independencies on U − v i are kept. Therefore, by recursively eliminating all variables \(v \not\in N\), all conditional independencies on N are kept. That is, by Lemma 5, all conditional independencies I(X,Y,Z) with \(XYZ \subseteq N\) are preserved in the local BN D N . □

Example 26

Recall the CPT-graphs in Figs. 7 and 6 defined by the CPT labels before and after the elimination of variable v i = f, respectively, where C i = {v 1 = j,v 2 = k,v 3 = l}. It may seem as though some conditional independencies are lost due to the additional directed edges like (c,l), (e,l) and (j,l), where c ∈ P i , e ∈ P 1 and j,l ∈ C i . On the contrary, although I(c,ejkhi,l), I(e,bcdhjk,l) and I(j,cde,l) do not hold in Fig. 6, these independencies do not hold in Fig. 7 either. Some unconditional independencies were lost, however, such as I(be, ∅ , kl).

Our first practical application of local BNs concerns MSBNs (Xiang 1996, 2002; Xiang and Jensen 1999; Xiang et al. 2006, 2000, 1993), which are formally defined as follows.

Definition 19

A MSBN is a finite set {B 1, B 2, ..., B n } of BNs satisfying the following condition: N 1, N 2,..., N n can be organized as a join tee, where N i is the set of variables in the local BN B i , i = 1,2, ..., n.

Before any inference takes place, a given BN needs first be modeled as a MSBN. Several problems, however, with the manual construction of a MSBN from a BN have recently been acknowledged (Xiang et al. 2000). We resolve this modeling problem as follows.

Recently, Olesen and Madsen (2002) gave a simple method for constructing a special JT based on the maximal prime decomposition (MPD) of a BN. One desirable property of a MPD JT is that the JT nodes are unique for a given BN. We favour MPD JTs over conventional JTs, since they facilitate inference in the LAZY architecture while still only requiring polynomial time for construction (Olesen and Madsen 2002). For example, given the CHD BN in Fig. 3, the JT shown in Fig. 5 is the unique MPD JT.

We now propose Algorithm 3 to introduce an automated procedure for constructing a MSBN from a given BN.

Example 27

We illustrate Algorithm 3 using the real-world CHD BN in Fig. 3. The MPD JT with assigned BN CPTs is shown in Fig. 5. The CPTs identified by our architecture are listed in Fig. 8. Apply any probabilistic inference algorithm to physically construct these CPTs. By definition, Fig. 18 is a MSBN for the CHD BN.

We now establish the correctness of Algorithm 3.

Lemma 6

Given as input a BN D , the output {B 1, B 2,..., B n } of Algorithm 3 is a MSBN.

Proof

The claim holds immediately by Lemma 5. □

It is worth emphasizing that the local BNs have been shown in Theorems 4 and 5 to possess two favourable features, namely, the local BNs are sound and they preserve all conditional independencies of the original BN onto the context of the variables in each JT node. Note that recursive conditioning (Allen and Darwiche 2003) can also be used to build the CPTs in step 4 of Algorithm 3. Recursive conditioning was recently introduced as the first any-space algorithm for exact inference in BNs. Recursive conditioning finds an optimal cache factor to store the probability distributions under different memory constraints. The experimental results in Allen and Darwiche (2003) show that the memory requirements for inference in many large real-world BNs can be significantly reduced by recursive conditioning. Therefore, recursive conditioning allows for performing exact inference in the situations previously considered impractical (Allen and Darwiche 2003).

The significance of our suggestion is not aimed at an improvement in MSBN computational efficiency. Instead, an automated procedure for the semantic modeling of MSBNs overcomes the recently acknowledged problems with manually constructing MSBNs (Xiang et al. 2000).

Our second practical application of local BNs concerns DC (Dechter 1996; Li and D’Ambrosio 1994; Zhang 1998). MSBNs are well established in the probabilistic reasoning community due, in large part, to the presence of localized queries (Xiang 1996, 2002; Xiang and Jensen 1999). That is, practical experience has previously demonstrated that queries tend to involve variables in close proximity within the BN (Xiang et al. 1993). We conclude our discussion by showing how our semantic architecture allows DC to exploit localized queries.

DC is usually better than JT propagation, if one only is interested in updating a small set of non-evidence variables (Madsen and Jensen 1999), where small is shown empirically to be twenty or fewer variables in Zhang (1998). However, DC processes every query using the original BN. Therefore, it is not exploiting localized queries.

Our semantic architecture models the original BN as a set of local BNs, once the actual probability distributions corresponding to the identified CPT labels have been constructed in computer memory. Hence, DC techniques can process localized queries in local BNs. The following empirical evaluation is styled after the one reported by Schmidt and Shenoy (1998).

Example 28

We suggest that the CHD BN in Fig. 3 be represented as the smaller local BNs in Fig. 18, after the physical construction of CPTs p(b), p(f), p(g), p(g|f) and p(h|g). Table 5 shows the work needed by DC to answer five localized queries using the original CHD BN of Fig. 3 in comparison to using the local BNs of Fig. 18.

While it is acknowledged that our suggestion here is beneficial only for localized queries, practical experience with BNs, such as in neuromuscular diagnosis (Xiang et al. 1993), has long established that localized queries are a reality.

7 Conclusion

Unlike all previous JT architectures (Jensen et al. 1990; Lauritzen and Spiegelhalter 1988; Madsen and Jensen 1999; Shafer and Shenoy 1990), we have proposed the first architecture to precisely model the processing of evidence in terms of CPTs. The key advantage is that we can identify the labels of the messages significantly faster than the probability distributions themselves can be built in computer memory. For instance, in the medical BN for CHD, our architecture can identify all messages to be propagated in the JT in less time than it takes to physically construct one message (see Example 18). We can assist LAZY propagation (Madsen and Jensen 1999), which interlaces semantic modeling with physical computation, by uncoupling these two independent tasks. Treating semantic modeling and physical computation as being dependent practically ensures that LAZY will perform probability propagation unnecessarily slowly. When exploiting barren variables and independencies induced by evidence, Examples 19 and 20 explicitly demonstrate that LAZY forced a node to wait for the physical construction of a non-empty message that was irrelevant to its subsequent message computation. These irrelevant non-empty messages are identified by our first work schedule, as depicted in Figs. 13 and 14. Another advantage of allowing semantic modeling to scout the structure in the JT is our second work schedule, which presents the empty messages propagated from non-leaf JT nodes, such as shown in Fig. 16. This second work schedule is beneficial as was demonstrated in Example 22, where LAZY forced a receiving node to wait for the identification of an empty message that would be neither constructed nor sent. Finally, to send a message from one node to a neighbour, LAZY does not eliminate any variables at the sending node until all messages have been received from its other neighbours (see Example 23). Our third work schedule lists those variables that can be eliminated before any messages are received, as the screen shot in Fig. 17 indicates. Besides the real-world BN for CHD, we also evaluated our architecture on four benchmark BNs, called Alarm, Insurance, Hailfinder and Mildew. The experimental results reported in Tables 2, 3 and 4 are very encouraging. The important point, with respect to JT probability propagation, is that our architecture can assist LAZY by saving time, which is the measure used to compare inference methods in Madsen and Jensen (1999).

Even when modeling inference not involving evidence, our architecture still is useful to the MSBN technique and to the DC techniques. We have shown that our JT propagation architecture is instrumental in developing an automated procedure for constructing a MSBN from a given BN. This is a worthwhile result, since several problems with the manual construction of a MSBN from a BN have recently been acknowledged (Xiang et al. 2000). We also have suggested a method for exploiting localized queries in DC techniques. Practical experience, such as that gained from neuromuscular diagnosis (Xiang et al. 1993), has demonstrated that queries tend to involve variables in close proximity within a BN. Our approach allows DC to process localized queries in local BNs. The experimental results in Table 5 involving a real-world BN for CHD show promise.

In his eloquent review of three traditional JT architectures (Jensen et al. 1990; Lauritzen and Spiegelhalter 1988; Shafer and Shenoy 1990), Shafer (1996) writes that the notion of probabilistic conditional independence (Wong et al. 2000) does not play a major role in inference. More recently, the LAZY architecture has demonstrated a remarkable improvement in efficiency over the traditional methods by actively exploiting independencies to remove irrelevant potentials before variable elimination. However, LAZY propagation does not utilize the independencies holding in the relevant potentials. In our architecture, we introduce the notions of parent-set and elder-set in order to take advantage of these valuable independencies. Based on this exploitation of independency information, we believe that the computationally efficient LAZY method, and the semantically rich architecture proposed here, serve as complementary examples of second-generation JT probability propagation architectures.

References

Allen, D., & Darwiche, A. (2003). Optimal time-space tradeoff in probabilistic inference, In Proc. 18th international joint conference on artificial intelligence (pp. 969-975). Acapulco, Mexico.

Becker, A., & Geiger, D. (2001). A sufficiently fast algorithm for finding close to optimal clique trees. Artificial Intelligence, 125(1–2) 3–17.

Castillo, E., Gutiérrez, J., & Hadi, A. (1997). Expert systems and probabilistic network models. New York: Springer.

Consortium, E. (2002). Elvira: An environment for probabilistic graphical models. In Proceedings of the 1st European workshop on probabilistic graphical models (pp. 222–230). Cuenca, Espana.

Cooper, G. F. (1990). The computational complexity of probabilistic inference using Bayesian belief networks. Artificial Intelligence, 42(2–3), 393–405.

Cormen, T. H., Leiserson, C. E., Rivest, R. L., & Stein, C. (2001). Introduction to algorithms. Toronto: MIT.

Cowell, R. G., Dawid, A. P., Lauritzen, S. L., & Spiegelhalter, D. J. (1999). Probabilistic networks and expert systems. New York: Springer.

Dechter, R. (1996). Bucket elimination: A unifying framework for probabilistic inference. In Proc. 12th conference on uncertainty in artificial intelligence (pp. 211–219). Portland, OR.

Hájek, P., Havránek, T., & Jiroušek, R. (1992). Uncertain information processing in expert systems. Ann Arbor: CRC.

Jensen, F. V. (1996). An introduction to Bayesian networks. London: UCL.

Jensen, F. V., Lauritzen, S. L., & Olesen, K. G. (1990). Bayesian updating in causal probabilistic networks by local computations. Computational Statistics Quarterly, 4, 269–282.

Kjaerulff, U. (1990). Triangulation of graphs—algorithms giving small total state space, Research Report R-90-09. Dept. of Math. and Comp. Sci., Aalborg University, Denmark.

Kozlov, A. V., & Singh, J. P. (1999). Parallel implementations of probabilistic inference. IEEE Computer, 29(12), 33–40.

Lauritzen, S. L., & Spiegelhalter, D. J. (1988). Local computations with probabilities on graphical structures and their application to expert systems. Journal of the Royal Statistical Society Series B, 50(2), 157–244.

Lauritzen, S. L., Dawid, A. P., Larsen, B. N., & Leimer, H. G. (1990). Independence properties of directed Markov fields. Networks, 20(5), 491–505.

Li, Z., & D’Ambrosio, B. (1994). Efficient inference in Bayes networks as a combinatorial optimization problem. International Journal of Approximate Reasoning, 11(1), 55–81.

Madsen, A. L., & Jensen, F. V. (1999). LAZY propagation: A junction tree inference algorithm based on lazy evaluation. Artificial Intelligence, 113(1–2), 203–245.

Madsen, A. L., & Jensen, F. V. (1999). Parallelization of inference in Bayesian networks. Research Report R-99-5002, Dept. of Comp. Sci., Aalborg University, Denmark

Neapolitan, R. E. (1990). Probabilistic reasoning in expert systems. Toronto: Wiley.

Olesen, K. G., & Madsen, A. L. (2002). Maximal prime subgraph decomposition of Bayesian networks. IEEE Transactions on Systems, Man and Cybernetics B, 32(1), 21–31.

Pearl, J. (1988). Probabilistic reasoning in intelligent systems: Networks of plausible inference. San Francisco: Morgan Kaufmann.

Schmidt, T., & Shenoy, P. P. (1998). Some improvements to the Shenoy-Shafer and Hugin architectures for computing marginals. Artificial Intelligence, 102, 323–333.

Shachter, R. (1986). Evaluating influence diagrams. Operational Research, 34(6), 871–882.

Shafer, G., & Shenoy, P. P. (1990). Probability propagation. Annals of Mathematics and Artificial Intelligence, 2, 327–352.

Shafer, G. (1996). Probabilistic expert systems. Philadelphia: SIAM.

Wong, S. K. M., Butz, C. J., & Wu, D. (2000). On the implication problem for probabilistic conditional independency. IEEE Transactions on Systems, Man and Cybernetics A, 30(6), 785–805.

Xiang, Y. (1996). A probabilistic framework for cooperative multi-agent distributed interpretation and optimization of communication. Artificial Intelligence, 87(1–2), 295–342.

Xiang, Y. (2002). Probabilistic reasoning in multiagent systems: A graphical models approach. New York: Cambridge University Press.

Xiang, Y., & Jensen, F. V. (1999). Inference in multiply sectioned Bayesian networks with extended Shafer-Shenoy and Lazy propagation, In Proc. 15th conference on uncertainty in artificial intelligence (pp. 680–687). Stockholm, Sweden.

Xiang, Y., Jensen, F. V., & Chen, X. (2006). Inference in multiply sectioned Bayesian networks: Methods and performance comparison. IEEE Transactions on Systems, Man and Cybernetics B, 36(3), 546–558.

Xiang, Y., Olesen, K. G., & Jensen, F. V. (2000). Practical issues in modeling large diagnostic systems with multiply sectioned Bayesian networks. International Journal of Pattern Recognition and Artificial Intelligence, 14(1), 59–71.

Xiang, Y., Pant, B., Eisen, A., Beddoes, M. P., & Poole, D. (1993). Multiply sectioned Bayesian networks for neuromuscular diagnosis. Artificial Intelligence in Medicine, 5, 293–314.

Xu, H. (1995). Computing marginals for arbitrary subsets from marginal representation in Markov trees. Artificial Intelligence, 74(1), 177–189.

Yannakakis, M. (1981). Computing the minimal fill-in is NP-Complete. SIAM Journal on Algebraic and Discrete Methods, 2, 77–79.

Zhang, N. L. (1998). Computational properties of two exact algorithms for Bayesian networks. Applied Intelligence, 9(2), 173–184.

Acknowledgements

This research is supported by NSERC Discovery Grant 238880. The authors would like to thank F.V. Jensen, Q. Hu, H. Geng, C.A. Maguire and anonymous reviewers, for insightful suggestions.

Open Access

This article is distributed under the terms of the Creative Commons Attribution Noncommercial License which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and source are credited.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

Open Access This is an open access article distributed under the terms of the Creative Commons Attribution Noncommercial License (https://creativecommons.org/licenses/by-nc/2.0), which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and source are credited.

About this article

Cite this article

Butz, C.J., Yao, H. & Hua, S. A join tree probability propagation architecture for semantic modeling. J Intell Inf Syst 33, 145–178 (2009). https://doi.org/10.1007/s10844-008-0073-4

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10844-008-0073-4