Abstract

We objectively quantified the neural sensitivity of school-aged boys with and without autism spectrum disorder (ASD) to detect briefly presented fearful expressions by combining fast periodic visual stimulation with frequency-tagging electroencephalography. Images of neutral faces were presented at 6 Hz, periodically interleaved with fearful expressions at a 1.2 Hz oddball rate. Although both groups show an equal face inversion effect and mainly rely on information from the mouth to detect fearful expressions, boys with ASD generally show reduced neural responses to rapid changes in expression. At the individual level, fear discrimination responses predict clinical status with 83% (cross-validated) accuracy. This implicit and straightforward approach identifies subtle deficits that remain concealed in behavioral tasks, thereby opening new perspectives for clinical diagnosis.


Introduction

Social behavior and communication are largely determined by the efficient use and interpretation of nonverbal cues (Argyle 1972), such as facial expressions. Emotional face processing has often been studied in individuals with autism spectrum disorder (ASD), a neurodevelopmental disorder characterized by impaired reciprocal social communication and interaction, including deficient non-verbal communicative behavior (American Psychiatric Association 2014).

Facial Emotion Processing Strategies in ASD

An abundance of behavioral studies has investigated emotion recognition in individuals with and without ASD, yielding mixed results in terms of group differences (Harms et al. 2010; Lozier et al. 2014; Uljarevic and Hamilton 2013). Deficits in fear recognition, for instance, have often been shown in adults with ASD (Humphreys et al. 2007; Pelphrey et al. 2002; Rump et al. 2009; Wallace et al. 2008), whereas child studies often reported intact fear processing in ASD (Evers et al. 2015; Lacroix et al. 2014; Law Smith et al. 2010; Tracy et al. 2011). Due to the ongoing development of fear recognition abilities during childhood, floor effects in both ASD and control children might conceal possible group differences until they emerge during adulthood.

The use of alternative, less automatic processing strategies in ASD (Harms et al. 2010) might affect expression recognition. Perceptual processing styles are commonly investigated using the face inversion paradigm, as inverting a face disrupts typical holistic or configural face processing (Rossion 2008; Tanaka and Simonyi 2016). Reports of an absent face inversion effect in ASD (Behrmann et al. 2006; Gross 2008; Rosset et al. 2008) suggest the use of an atypical, more local and feature-based (emotion) processing style. However, other studies reported better emotion recognition for upright than for inverted faces in both ASD and typically developing (TD) participants (McMahon et al. 2016; Wallace et al. 2008), indicating that participants with ASD are capable of holistic or configural face processing.

Difficulties in emotion processing may also occur when one fails to inspect the most relevant facial cues (Ellison and Massaro 1997). The eyes have been suggested to play a crucial role in fear recognition (Bombari et al. 2013; Wegrzyn et al. 2017), but the importance of the mouth, and of the combination of both regions, has also been emphasized (Beaudry et al. 2014; Eisenbarth and Alpers 2011; Gagnon et al. 2014; Guarnera et al. 2015). Results on the most informative facial features for emotion processing in ASD versus TD are inconclusive. Some studies demonstrated reliance on different facial cues for emotion recognition (Grossman and Tager-Flusberg 2008; Neumann et al. 2006; Spezio et al. 2007), whereas others showed that both groups use the same facial information (Leung et al. 2013; McMahon et al. 2016; Sawyer et al. 2012). Still, a similar way of looking at faces when reading emotions does not necessarily imply similar neural processing, nor a similar level of emotion recognition performance (Sawyer et al. 2012).

Event-Related Potential Studies

To understand the neural basis of facial emotion processing in ASD, many researchers have measured event-related potentials (ERPs) using electroencephalography (EEG) (Jeste and Nelson 2009; Luckhardt et al. 2014), but these studies have generally failed to yield consistent conclusions (Black et al. 2017; Monteiro et al. 2017).

One ERP component of particular interest for (expressive) face processing is the N170 (Hinojosa et al. 2015). Kang et al. (2018) proposed this ERP component as a possible neural biomarker of the face processing impairments in individuals with ASD. However, the differences in N170 found between ASD and TD groups could merely reflect a slower general processing of social stimuli (Vettori et al. 2018), or they could be caused by carryover effects from changes in the immediately preceding P100 component (Hileman et al. 2011). In addition, atypicalities in the N170 response to emotional faces may not be autism-specific: similar atypicalities have been observed in other psychiatric and neurological disorders, and may reflect emotional face processing dysfunction as a symptom shared across these diagnoses rather than disorder-specific deficits (Feuerriegel et al. 2015).

The use of visual mismatch negativity (vMMN) paradigms has also been suggested as a clinically relevant application (Kremláček et al. 2016). However, the low number of oddballs and the low signal-to-noise ratio (SNR) of classic ERP measurements require many trials, resulting in long EEG recordings. Furthermore, to be valuable and reliable as a clinical tool, measurements should be consistent across studies and participants, in order to facilitate individual assessment. Yet the variable expression of the vMMN in terms of individual timing and format (Kremláček et al. 2016) hampers the objective identification of the vMMN, especially at the individual level.

Fast Periodic Visual Stimulation EEG

To overcome these difficulties, we used a relatively novel approach in the emotion-processing field, combining fast periodic visual stimulation (FPVS) with EEG. FPVS-EEG is based on the principle that brain activity synchronizes to a periodically flickering stimulus (Adrian and Matthews 1934). Similar to previous studies (Dzhelyova et al. 2016; Leleu et al. 2018), we applied this principle in an oddball paradigm, periodically embedding expressive faces in a stream of neutral faces. Because base and oddball stimuli are presented at distinct, predefined frequencies, FPVS-EEG is a highly objective measure that allows direct quantification of the responses. Furthermore, the rapid presentation yields many discrimination responses in a short time, with a high SNR. In addition, FPVS-EEG allows discriminative responses to be assessed not only at the group level, but also at the individual level. Individual assessments may help us gain more insight into the heterogeneity within the autism spectrum.

Present Study Design

We applied FPVS-EEG in boys with and without ASD to quantify and understand the nature of the facial emotion processing difficulties in autism. We implemented fear as the deviant expression between series of neutral faces, because of its potential to elicit large neurophysiological responses (Nuske et al. 2014; Smith 2012). By using neutral faces as forward and backward masks for the fearful faces in a rapidly presented stream (i.e. images are only presented for about 167 ms), the facial emotion processing system is put under tight temporal constraints (Alonso-Prieto et al. 2013; Dzhelyova et al. 2016). This allows us to selectively isolate the sensitivity to the expression.

Based on the literature, we expect a lower neural sensitivity (i.e. reduced EEG responses) to fearful expressions in children with ASD than in TD children. Detection of fearful faces (i.e. noticing that a facial expression conveys emotional content) can occur without emotion categorization (i.e. appraising which specific expression is shown) (Frank et al. 2018; Sweeny et al. 2013). Therefore, whereas group differences in emotion categorization might be concealed by floor effects in both groups, owing to the ongoing development of fear recognition abilities, we expect FPVS-EEG to reveal group differences in the implicit detection of rapidly presented fearful faces. In addition, sequences of upright as well as inverted faces are presented to assess possible differences in perceptual strategies; here, we expect more pronounced inversion effects in TD than in ASD children. Finally, we investigate whether the detection of a fearful face is modulated by directing the participants' attention to the eyes versus the mouth of the target face, by placing the fixation cross either on the nasion (i.e. the bridge of the nose) or on the mouth of the face stimuli. This should reveal the most informative facial cue for fear detection, and whether this cue differs between children with ASD and TD children.

Methods

Participants

We recruited 46 boys aged 8 to 12 years without intellectual disability (FSIQ ≥ 70): 23 TD boys and 23 boys with ASD. Given the higher prevalence of ASD in males (Haney 2016; Loomes et al. 2017), and to avoid confounds due to gender effects on facial emotion processing (McClure 2000), we only included boys in this study. In addition, given the "own-culture advantage" in emotion processing (Elfenbein and Ambady 2002; Gendron et al. 2014), participants had to have lived in Belgium for at least 5 years.

Children with ASD were recruited via the Autism Expertise Centre at the University Hospital and via special needs schools. TD participants were recruited via mainstream elementary schools and sports clubs. Four of the 46 children were left-handed (2 TD), and three children reported color blindness (1 TD). Because this did not affect their ability to detect the color changes of the fixation cross, these participants were not excluded. All participants had normal or corrected-to-normal visual acuity. Five participants with ASD had a comorbid diagnosis of ADHD, and seven participants in this group took medication to reduce symptoms related to ASD and/or ADHD (methylphenidate, aripiprazole).

Exclusion criteria were the suspicion or presence of a psychiatric, neurological, learning or developmental disorder (other than ASD, or comorbid ADHD in ASD participants) in the participant or in a first-degree relative. To be included in the ASD group, children needed a formal diagnosis of ASD, established by a multidisciplinary team according to DSM-IV-TR or DSM-5 criteria (American Psychiatric Association 2000, 2014). Furthermore, the Dutch parent version of the Social Responsiveness Scale (SRS; Roeyers et al. 2012) was used to measure ASD traits in all participants. A total T-score of 60 served as the inclusion cut-off: all children with ASD scored above 60, and all TD children scored below 60, which excluded the presence of substantial ASD symptoms in the TD group.

Both participant groups were group-wise matched on chronological age and IQ. Participant demographics and descriptive statistics are displayed in Table 1.

The Medical Ethical Committee of the university hospital approved this study. Written informed consent according to the Declaration of Helsinki was gathered from the participants and their parents prior to participation.

Stimuli

The stimuli comprised a subset of those used by Dzhelyova et al. (2016). Full-front images of a neutral and a fearful expression of four individuals (two males, two females) were selected from the Karolinska Directed Emotional Faces database (AF01, AF15, AM01, AM06; Lundqvist et al. 1998). The colored images were set to a size of 210 × 290 pixels, corresponding to 4.04° × 5.04° of visual angle at a viewing distance of 80 cm, and were placed against a gray background (RGB = 128, 128, 128; alpha = 255). The size of the facial stimuli varied randomly between 80 and 120% of the original size. Mean pixel luminance and contrast of the faces were equalized during stimulus presentation.
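Pixel dimensions and visual angles are linked through the viewing distance and the monitor's pixel pitch, which is not reported. As a minimal sketch (standard library only), the hypothetical helpers below, which are not part of the original stimulus software, convert between a stimulus's physical size and the visual angle it subtends at 80 cm, using the standard relation θ = 2·atan(s / 2d).

```python
import math

VIEWING_DISTANCE_CM = 80.0  # viewing distance stated in the text


def visual_angle_deg(size_cm: float, distance_cm: float = VIEWING_DISTANCE_CM) -> float:
    """Full visual angle (degrees) subtended by an object of physical size `size_cm`."""
    return math.degrees(2.0 * math.atan(size_cm / (2.0 * distance_cm)))


def size_for_angle_cm(angle_deg: float, distance_cm: float = VIEWING_DISTANCE_CM) -> float:
    """Physical size (cm) an object must have to subtend `angle_deg` at `distance_cm`."""
    return 2.0 * distance_cm * math.tan(math.radians(angle_deg) / 2.0)


width_cm = size_for_angle_cm(4.04)
height_cm = size_for_angle_cm(5.04)
print(f"nominal face size on screen: {width_cm:.2f} x {height_cm:.2f} cm")

# the random 80-120% size variation changes the subtended angle accordingly
for scale in (0.8, 1.0, 1.2):
    print(scale, round(visual_angle_deg(width_cm * scale), 2))
```

At 80 cm, 4.04° × 5.04° corresponds to roughly 5.6 × 7.0 cm on screen; the pixel size follows only once the monitor's pixel pitch is known.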

Design

The design was similar to recent studies with fast periodic oddball paradigms (Dzhelyova et al. 2016; Vettori et al. 2019). The experiment consisted of four conditions, defined by the orientation of the faces (upright or inverted) and the position of the fixation cross (nasion or mouth), each repeated four times, resulting in 16 sequences. At the beginning of each sequence, a blank screen appeared for a variable duration of 2–5 s, followed by a 2-s fade-in of the stimuli (0–100%). The images were then presented for 40 s, followed by a 2-s fade-out (100–0%). The order of the conditions was counterbalanced, and the sequences were randomized within each condition.

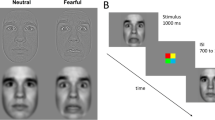

Neutral faces (e.g. of individual A) were displayed at a base rate of 6 Hz, periodically interleaved with a fearful oddball stimulus of the same individual every fifth image [6 Hz/5 = 1.2 Hz oddball rate; based on previous research (Alonso-Prieto et al. 2013; Dzhelyova and Rossion 2014a; Liu-Shuang et al. 2014)], generating the sequence A_neutral A_neutral A_neutral A_neutral A_fearful A_neutral A_neutral A_neutral A_neutral A_fearful (see Fig. 1 and the Movie in Online Resource 1). Custom software written in Java presented the images through sinusoidal contrast modulation (0–100%) (see also Fig. 1).

Fig. 1 Fast periodic visual stimulation (FPVS) oddball paradigm for the detection of fearful faces: neutral faces are presented sequentially at a fast 6 Hz base rate, periodically interleaved with a fearful face every fifth image (i.e. 1.2 Hz oddball rate). In separate trials, the faces are presented either upright or inverted, and with the fixation cross on the nasion or on the mouth (Dzhelyova et al. 2016)
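The stimulation sequence described above can be sketched as follows. This is not the authors' Java software: it assumes, as in the example sequence, a single facial identity per sequence, 40 s of stimulation excluding the fades, and a contrast profile that rises from 0 to 100% and back within each 1/6 s (about 167 ms) image cycle. The function names are hypothetical.

```python
import numpy as np

BASE_HZ = 6.0       # base rate: one image per 1/6 s cycle (~167 ms)
ODDBALL_EVERY = 5   # every fifth image is fearful -> 6 Hz / 5 = 1.2 Hz
DURATION_S = 40.0   # stimulation period, excluding fade-in and fade-out


def build_sequence(identity, rng):
    """Return image labels, size scale factors and onset times (s) for one sequence."""
    n_images = int(round(BASE_HZ * DURATION_S))  # 240 images
    labels = [
        f"{identity}_fearful" if (i + 1) % ODDBALL_EVERY == 0 else f"{identity}_neutral"
        for i in range(n_images)
    ]
    scales = rng.uniform(0.8, 1.2, size=n_images)  # random 80-120% size variation
    onsets = np.arange(n_images) / BASE_HZ
    return labels, scales, onsets


def contrast_at(t):
    """Sinusoidal contrast (0-1): 0 at each image onset, 1 at mid-cycle, 0 again at offset."""
    return (1.0 - np.cos(2.0 * np.pi * BASE_HZ * np.asarray(t, dtype=float))) / 2.0


rng = np.random.default_rng(seed=1)
labels, scales, onsets = build_sequence("A", rng)
print(labels[:10])
print(f"oddball rate: {BASE_HZ / ODDBALL_EVERY} Hz, image duration: {1000 / BASE_HZ:.0f} ms")
```

Over 40 s this yields 240 images, 48 of which are fearful oddballs.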

Procedure

Participants were seated in a dimly lit room in front of a 24-inch LCD computer screen placed at eye level. To ensure that participants remained attentive, an orthogonal task was implemented: a fixation cross, presented either on the nasion or on the mouth of the face, briefly (300 ms) changed color from black to red 10 times within every sequence. Participants had to respond as quickly and accurately as possible whenever they noticed a color change of the fixation cross.

EEG Acquisition

We recorded EEG activity using a BIOSEMI Active-Two amplifier system with 64 Ag/AgCl electrodes and two additional electrodes serving as reference and ground (Common Mode Sense active electrode and Driven Right Leg passive electrode). Vertical eye movements were recorded with one electrode above and one below the right eye, and horizontal eye movements with one electrode at the outer corner of each eye. EEG and electrooculogram were sampled at 512 Hz.

EEG Analysis

Preprocessing

We processed all EEG data using Letswave 6 (http://www.nocions.org/letswave/) in Matlab R2017b (The Mathworks, Inc.). The continuously recorded EEG data were cropped into 45-s segments (from 2 s before to 3 s after each sequence), band-pass filtered at 0.1–100 Hz with a fourth-order Butterworth filter, and resampled to 256 Hz. For two TD participants who blinked on average more than 2 SD above the mean (average number of blinks across participants = 0.19, SD = 0.22), blink artifacts were removed using independent component analysis with the runica algorithm (Bell and Sejnowski 1995; Makeig et al. 1995). Noisy or artifact-ridden channels were re-estimated through linear interpolation of the three nearest neighboring electrodes. All data segments were re-referenced to a common average reference.
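The preprocessing was run in Letswave 6; purely as an illustration, a comparable pipeline can be sketched in Python with SciPy. Several choices here are assumptions not stated in the text: zero-phase (forward-backward) filtering, SciPy's interpretation of "fourth order" for a band-pass design (N = 4 yields an eighth-order band-pass overall), polyphase resampling, and re-estimating a bad channel as the unweighted mean of its three nearest neighbors. ICA-based blink removal is omitted.

```python
from typing import Dict, List, Optional

import numpy as np
from scipy.signal import butter, resample_poly, sosfiltfilt

FS_RAW = 512  # recording rate (Hz)
FS_NEW = 256  # target rate after resampling (Hz)


def preprocess(eeg: np.ndarray, bad_channels: Optional[Dict[int, List[int]]] = None) -> np.ndarray:
    """eeg: channels x samples at 512 Hz.

    bad_channels: hypothetical mapping {bad channel index: indices of its 3 nearest neighbors}.
    """
    # Butterworth band-pass 0.1-100 Hz (N=4 in SciPy), applied forward and backward (zero phase)
    sos = butter(4, [0.1, 100.0], btype="bandpass", fs=FS_RAW, output="sos")
    x = sosfiltfilt(sos, eeg, axis=-1)

    # downsample 512 -> 256 Hz; resample_poly applies its own anti-aliasing FIR filter
    x = resample_poly(x, up=1, down=FS_RAW // FS_NEW, axis=-1)

    # re-estimate noisy channels from their nearest neighbors (simple unweighted mean)
    for ch, neighbors in (bad_channels or {}).items():
        x[ch] = x[neighbors].mean(axis=0)

    # common average reference: subtract the mean across channels at every sample
    return x - x.mean(axis=0, keepdims=True)


rng = np.random.default_rng(0)
raw = rng.standard_normal((8, FS_RAW * 45))  # 8 channels, one 45-s segment of noise
clean = preprocess(raw, bad_channels={7: [4, 5, 6]})
print(clean.shape)  # (8, 11520)
```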

Frequency Domain Analysis

The preprocessed data segments were cropped to contain an integer number of 1.2 Hz cycles, starting immediately after the fade-in and lasting approximately 39.2 s (47 cycles). Data were then averaged in the time domain, for each participant individually and per condition. A fast Fourier transform (FFT) was applied to these averaged segments, yielding a spectrum ranging from 0 to 127.96 Hz with a spectral resolution of approximately 0.025 Hz (the inverse of the segment duration).
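Cropping to a whole number of oddball cycles makes the 1.2 Hz response fall (almost) exactly on an FFT bin: with T = 47/1.2 ≈ 39.17 s, the resolution is 1/T ≈ 0.0255 Hz and 1.2 Hz is bin 47. At 256 Hz, 47 cycles span 10,026.7 samples, so the NumPy sketch below rounds to the nearest whole sample. It uses a synthetic signal and is an illustration, not the Letswave implementation.

```python
import numpy as np

FS = 256.0        # sampling rate after resampling (Hz)
ODDBALL_HZ = 1.2  # oddball frequency (Hz)
N_CYCLES = 47     # whole oddball cycles kept after the fade-in


def amplitude_spectrum(segments: np.ndarray, fs: float = FS):
    """segments: repetitions x samples for one participant, condition and channel.

    Crops to ~47 oddball cycles, averages in the time domain, then returns the
    single-sided FFT amplitude spectrum (same units as the input, e.g. µV).
    """
    n = int(round(N_CYCLES / ODDBALL_HZ * fs))  # 10027 samples (~39.17 s)
    avg = segments[:, :n].mean(axis=0)          # time-domain averaging across repetitions
    amp = np.abs(np.fft.rfft(avg)) / n
    amp[1:] *= 2.0                              # fold negative frequencies into positive ones
    if n % 2 == 0:
        amp[-1] /= 2.0                          # an exact Nyquist bin has no mirror image
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    return freqs, amp


# synthetic check: 1 µV at 1.2 Hz plus 2 µV at 6 Hz, four identical repetitions
t = np.arange(int(40 * FS)) / FS
signal = 1.0 * np.sin(2 * np.pi * ODDBALL_HZ * t) + 2.0 * np.sin(2 * np.pi * 6.0 * t)
freqs, amp = amplitude_spectrum(np.tile(signal, (4, 1)))
k = int(np.argmin(np.abs(freqs - ODDBALL_HZ)))
print(k, round(freqs[k], 4), round(amp[k], 3))
```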

The recorded EEG contains signals at the 6 Hz base stimulation frequency (F), the 1.2 Hz oddball frequency (F/5) and their integer multiples (harmonics). To measure the discrimination response to fearful faces, only the amplitudes at the oddball frequency and its harmonics (i.e. n × F/5 = 1.2 Hz, 2.4 Hz, 3.6 Hz, etc.) are considered (Dzhelyova et al. 2016). We used two measures to describe this fear discrimination response: SNR and baseline-corrected amplitudes. SNR is the amplitude of a given frequency bin divided by the mean amplitude of the 20 surrounding bins, whereas the baseline-corrected amplitude is obtained by subtracting the mean amplitude of the 20 surrounding bins from the amplitude of the bin of interest (Liu-Shuang et al. 2014). We used SNR spectra for visualization, because responses in high frequency ranges may be small in amplitude yet have a high SNR. Baseline-corrected amplitudes, in contrast, are expressed in microvolts (µV) and can be summed across significant harmonics to quantify overall base and oddball responses (Dzhelyova and Rossion 2014b; Retter and Rossion 2016).
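Both measures can be computed directly from an amplitude spectrum. The minimal NumPy sketch below assumes that the "20 surrounding bins" are the 10 bins on each side of the bin of interest, contiguous and with no neighbors excluded; some FPVS studies additionally skip the immediately adjacent bins or the extreme values, which this sketch does not do.

```python
import numpy as np

N_SIDE = 10  # assumed: 10 bins on each side = 20 surrounding bins


def noise_bins(amp: np.ndarray, k: int, n_side: int = N_SIDE) -> np.ndarray:
    """Amplitudes of the n_side bins below and above bin k (bin k itself excluded)."""
    return np.concatenate([amp[k - n_side:k], amp[k + 1:k + 1 + n_side]])


def snr(amp: np.ndarray, k: int, n_side: int = N_SIDE) -> float:
    """Signal-to-noise ratio: amplitude at bin k divided by the mean of its neighbors."""
    return float(amp[k] / noise_bins(amp, k, n_side).mean())


def baseline_corrected(amp: np.ndarray, k: int, n_side: int = N_SIDE) -> float:
    """Baseline-corrected amplitude: amplitude at bin k minus the mean of its neighbors."""
    return float(amp[k] - noise_bins(amp, k, n_side).mean())


def snr_spectrum(amp: np.ndarray, n_side: int = N_SIDE) -> np.ndarray:
    """SNR for every bin that has a full set of neighbors (edges left as NaN), for plotting."""
    out = np.full(amp.shape, np.nan)
    for k in range(n_side, len(amp) - n_side):
        out[k] = snr(amp, k, n_side)
    return out


# toy spectrum: flat noise floor of 0.5 µV with a 1.5 µV peak at bin 30
amp = np.full(61, 0.5)
amp[30] = 1.5
print(snr(amp, 30), baseline_corrected(amp, 30))  # 3.0 1.0
```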

To define the number of harmonics of the base and oddball frequencies to include in the analyses, we assessed, for each condition, the significance of the responses at successive harmonics by calculating Z-scores (Liu-Shuang et al. 2014) on the grand-averaged FFT data, both across all electrodes and across the electrodes in the relevant regions of interest (ROIs; see below). Harmonics were considered significant and included as long as the Z-scores of two consecutive harmonics were above 1.64 (p < 0.05, one-tailed) across both groups and all conditions (Retter and Rossion 2016). Following this principle, we quantified the oddball response as the sum of the responses at the first seven oddball harmonics (i.e. up to 7F/5 = 8.4 Hz), excluding the harmonic that coincides with the base rate (F = 6 Hz). The base response was quantified as the sum of the responses at the base rate and its next two harmonics (2F = 12 Hz and 3F = 18 Hz).
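The Z-score, harmonic-selection and summation steps can be sketched as follows. Assumptions: the Z-score uses the same 20 surrounding bins (10 per side) as the SNR, with the population standard deviation; harmonic selection follows our reading of the consecutive-harmonics criterion (Retter and Rossion 2016), stopping at the first two consecutive non-significant oddball harmonics, which is not necessarily the exact rule the authors applied; and harmonics coinciding with the base rate (every fifth oddball harmonic) are skipped. All function names are hypothetical.

```python
import numpy as np

BASE_HZ = 6.0
ODDBALL_HZ = 1.2
BASE_EVERY = 5   # oddball harmonics 5, 10, ... coincide with base-rate harmonics
N_SIDE = 10      # assumed: 10 neighbors per side
Z_CRIT = 1.64    # p < 0.05, one-tailed


def nearest_bin(freqs: np.ndarray, f: float) -> int:
    return int(np.argmin(np.abs(freqs - f)))


def neighbors(amp: np.ndarray, k: int) -> np.ndarray:
    return np.concatenate([amp[k - N_SIDE:k], amp[k + 1:k + 1 + N_SIDE]])


def zscore(amp: np.ndarray, k: int) -> float:
    noise = neighbors(amp, k)
    return float((amp[k] - noise.mean()) / noise.std())


def n_oddball_harmonics(z_by_harmonic) -> int:
    """Index of the last harmonic to keep, given Z-scores for harmonics 1, 2, 3, ...

    Stops at the first run of two consecutive non-significant oddball harmonics;
    harmonics that coincide with the base rate are skipped, not counted as failures.
    """
    last_kept, misses = 0, 0
    for h, z in enumerate(z_by_harmonic, start=1):
        if h % BASE_EVERY == 0:
            continue
        if z > Z_CRIT:
            last_kept, misses = h, 0
        else:
            misses += 1
            if misses == 2:
                break
    return last_kept


def summed_response(freqs: np.ndarray, amp: np.ndarray, harmonic_freqs) -> float:
    """Sum of baseline-corrected amplitudes over the given frequencies."""
    total = 0.0
    for f in harmonic_freqs:
        k = nearest_bin(freqs, f)
        total += float(amp[k] - neighbors(amp, k).mean())
    return total


# frequencies summed in the text: oddball harmonics 1-7 except 6 Hz; base at 6, 12, 18 Hz
ODDBALL_FREQS = [h * ODDBALL_HZ for h in range(1, 8) if h % BASE_EVERY != 0]
BASE_FREQS = [BASE_HZ, 2 * BASE_HZ, 3 * BASE_HZ]
print([round(f, 1) for f in ODDBALL_FREQS])  # [1.2, 2.4, 3.6, 4.8, 7.2, 8.4]
```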

In addition, analyses were performed at the individual subject level by calculating individual Z-scores for each of the relevant ROIs. We averaged the raw FFT spectrum per ROI and cropped it into segments centered at the oddball frequency and its harmonics, surrounded by 20 neighboring bins on each side that represent the noise level (Dzhelyova et al. 2016; Vettori et al. 2019). These spectra were summed across the significant harmonics and then transformed into a Z-score (see above).
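The individual-level procedure can be sketched as below: crop a window of ±20 bins around each included oddball harmonic of an ROI-averaged spectrum, sum the windows, and Z-score the center bin against the 40 surrounding bins. The second function applies the decision rule given in the Statistical Analysis section (a response counts as significant if the Z-score exceeds 1.64 in at least one of the three ROIs). Using the population standard deviation is our assumption, the names are hypothetical, and the spectrum is synthetic.

```python
from typing import Dict, Sequence

import numpy as np

N_SIDE = 20    # neighbors on each side of every harmonic
Z_CRIT = 1.64  # one-tailed p < 0.05


def individual_z(freqs: np.ndarray, amp: np.ndarray, harmonic_freqs: Sequence[float]) -> float:
    """Z-score of the summed oddball response for one participant, condition and ROI."""
    windows = []
    for f in harmonic_freqs:
        k = int(np.argmin(np.abs(freqs - f)))
        windows.append(amp[k - N_SIDE:k + N_SIDE + 1])  # 41 bins, harmonic in the middle
    summed = np.sum(windows, axis=0)
    noise = np.delete(summed, N_SIDE)                  # the 40 surrounding bins
    return float((summed[N_SIDE] - noise.mean()) / noise.std())


def significant_response(z_by_roi: Dict[str, float], crit: float = Z_CRIT) -> bool:
    """True if the Z-score exceeds the criterion in at least one ROI."""
    return any(z > crit for z in z_by_roi.values())


# toy ROI spectrum: noisy floor around 1 µV with 0.3 µV peaks at the included harmonics
rng = np.random.default_rng(42)
freqs = np.arange(0, 20, 0.025)
amp = 1.0 + 0.05 * rng.random(freqs.size)
harmonics = [1.2, 2.4, 3.6, 4.8, 7.2, 8.4]
for f in harmonics:
    amp[int(np.argmin(np.abs(freqs - f)))] += 0.3
z = individual_z(freqs, amp, harmonics)
print(round(z, 1), significant_response({"LOT": z, "ROT": 0.4, "MO": 1.1}))
```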

Brain Topographical Analysis and Determination of ROIs

Based on visual inspection of the topographical maps and in accordance with the identification of the left and right occipito-temporal region as most responsive for socially relevant oddball stimuli, and the medial occipital region as most responsive for base rate stimulation (Dzhelyova et al. 2016; Vettori et al. 2019), we defined the following ROIs: (1) left and right occipito-temporal (LOT and ROT) ROIs by averaging for each hemisphere the four channels with the highest summed baseline-corrected oddball response averaged across all conditions (i.e. channels P7, P9, PO7 and O1 for LOT, and P8, P10, PO8 and O2 for ROT), (2) medial occipital ROI (MO) by averaging the two channels with the largest common response at 6 Hz (i.e. channels Iz and Oz).
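The ROI definition combines a data-driven ranking with channel averaging. As a minimal sketch, the hypothetical helpers below select the four left-hemisphere channels with the largest summed oddball response and average the selected rows; the channel labels follow the 10-20 names used in the text, but the response values are invented for illustration.

```python
from typing import Dict, List

import numpy as np


def top_channels(response: Dict[str, float], candidates: List[str], n: int = 4) -> List[str]:
    """The n candidate channels with the largest summed baseline-corrected oddball response."""
    return sorted(candidates, key=lambda ch: response[ch], reverse=True)[:n]


def roi_average(data: np.ndarray, channel_names: List[str], roi: List[str]) -> np.ndarray:
    """Average the rows of `data` (channels x samples or channels x bins) belonging to the ROI."""
    idx = [channel_names.index(ch) for ch in roi]
    return data[idx].mean(axis=0)


# hypothetical summed oddball responses (µV), averaged across conditions
left_response = {"P7": 1.9, "P9": 1.7, "PO7": 2.1, "O1": 1.5, "P5": 1.1, "PO3": 0.9, "TP7": 0.6}
lot = top_channels(left_response, list(left_response))
print(lot)  # ['PO7', 'P7', 'P9', 'O1']
```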

Behavioral Facial Expression Measures

Two computerized behavioral facial expression processing tasks were administered.

The Emotion Recognition Task (Kessels et al. 2014; Montagne et al. 2007) assesses the explicit recognition of six basic facial expressions presented dynamically. Similar to the study of Evers et al. (2015), we applied two levels of emotion intensity: 50% and 100%. Children watch short video clips of a dynamic face in frontal view (4 clips per emotion) and have to select the corresponding emotion from six written labels displayed on the left side of the screen. Before task administration, participants were asked to give an example situation for each emotion to ensure that they understood the emotion labels.

In the Emotion-matching task (Palermo et al. 2013) participants have to detect a target face showing a different facial emotion compared to two distractor faces both showing the same expression. The same six emotions as in the Emotion Recognition Task are involved. Here, we used the shorter 65-item version of the task, preceded by four practice trials (for specifics, see Palermo et al. 2013).

Statistical Analysis

For the group-level statistical analyses of the baseline-corrected amplitudes, we applied a linear mixed-model ANOVA (function 'lmer' from the R package 'lme4'; Bates et al. 2015), fitted with restricted maximum likelihood. Separate models were fitted with either the base or the oddball response as the dependent variable. Fixation (eyes vs. mouth), orientation (upright vs. inverted faces) and ROI (LOT, ROT, MO) were entered as fixed within-subject factors, and group (ASD vs. TD) as a fixed between-subject factor. To account for the repeated measurements, we included a random intercept per participant. Degrees of freedom were calculated using the Kenward–Roger method. Planned post hoc contrasts were tested for significance with a Bonferroni correction for multiple comparisons, multiplying the p-values by the number of comparisons.
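The Bonferroni adjustment amounts to multiplying each p-value by the number of comparisons. A minimal sketch follows; capping adjusted values at 1 is our addition (it matches the behavior of R's p.adjust with method = "bonferroni"), since the text does not say how values above 1 were handled. The raw p-values in the usage line are hypothetical.

```python
from typing import List, Sequence


def bonferroni(p_values: Sequence[float]) -> List[float]:
    """Bonferroni-adjusted p-values: p * m, capped at 1, where m is the number of comparisons."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


# e.g. three planned ROI contrasts (LOT-MO, LOT-ROT, ROT-MO) with hypothetical raw p-values
print(bonferroni([0.0002, 0.01, 0.4]))
```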

In addition to the group-level analyses, we also evaluated the significance of the fear detection response for each individual participant based on their z-scores. Responses were considered significant if the z-score in one of the three ROIs exceeded 1.64 (i.e. p < 0.05; one-tailed: signal > noise).

Subsequently, we applied a linear discriminant analysis (LDA) to the EEG data to classify individuals as belonging to either the ASD or the TD group. A variable selection procedure ('gamboost' function in R; Buehlmann et al. 2018) identified the most informative predictors, yielding 12 input variables for the LDA model: the first four oddball harmonics for each of the three ROIs. These predictors were expected to be highly correlated; LDA can accommodate such between-predictor correlations (Kuhn and Johnson 2013). Before performing the LDA classification, we checked its assumptions. A Henze–Zirkler test (α = 0.05), supplemented by Mardia's skewness and kurtosis measures, did not indicate a deviation from multivariate normality, and a Box's M-test (α = 0.05) did not indicate unequal covariance matrices between the groups. In addition, to address the small sample size and the risk of over-fitting, we assessed the significance of the classification performance with permutation tests (Noirhomme et al. 2014).
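To make the classification procedure concrete, here is a self-contained NumPy sketch of a two-class LDA with a pooled covariance matrix, leave-one-out cross-validation, and a label-permutation test in which the entire cross-validation is repeated for each permutation (the scheme recommended by Noirhomme et al. 2014). The text does not name the LDA implementation used in R, so this is an illustration rather than the authors' code; the gamboost variable selection is not reproduced, and the data are synthetic.

```python
import numpy as np


def fit_lda(X: np.ndarray, y: np.ndarray):
    """Two-class LDA (labels 0/1) with a pooled within-class covariance and empirical priors."""
    X0, X1 = X[y == 0], X[y == 1]
    n0, n1 = len(X0), len(X1)
    mu0, mu1 = X0.mean(axis=0), X1.mean(axis=0)
    cov0 = np.atleast_2d(np.cov(X0, rowvar=False))
    cov1 = np.atleast_2d(np.cov(X1, rowvar=False))
    pooled = ((n0 - 1) * cov0 + (n1 - 1) * cov1) / (n0 + n1 - 2)
    w = np.linalg.solve(pooled, mu1 - mu0)        # discriminant direction
    b = -0.5 * w @ (mu0 + mu1) + np.log(n1 / n0)  # threshold incl. log prior ratio
    return w, b


def predict(w: np.ndarray, b: float, X: np.ndarray) -> np.ndarray:
    return (X @ w + b > 0).astype(int)


def loo_predictions(X: np.ndarray, y: np.ndarray) -> np.ndarray:
    """Predict each participant from a model fitted to all other participants."""
    preds = np.empty(len(y), dtype=int)
    for i in range(len(y)):
        train = np.arange(len(y)) != i
        w, b = fit_lda(X[train], y[train])
        preds[i] = predict(w, b, X[i:i + 1])[0]
    return preds


def sensitivity_specificity(y: np.ndarray, preds: np.ndarray):
    """ASD coded as 1 (positive class), TD as 0."""
    return float(np.mean(preds[y == 1] == 1)), float(np.mean(preds[y == 0] == 0))


def permutation_p(X: np.ndarray, y: np.ndarray, n_perm: int = 1000, rng=None):
    """Observed LOO accuracy and its permutation p-value (whole CV repeated per permutation)."""
    rng = np.random.default_rng() if rng is None else rng
    observed = float(np.mean(loo_predictions(X, y) == y))
    hits = 0
    for _ in range(n_perm):
        y_perm = rng.permutation(y)
        hits += int(np.mean(loo_predictions(X, y_perm) == y_perm) >= observed)
    return observed, (hits + 1) / (n_perm + 1)


# synthetic example: 23 "TD" (0) and 23 "ASD" (1) participants, 3 features
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(0.0, 1.0, (23, 3)), rng.normal(1.5, 1.0, (23, 3))])
y = np.repeat([0, 1], 23)
acc, p = permutation_p(X, y, n_perm=200, rng=rng)
sens, spec = sensitivity_specificity(y, loo_predictions(X, y))
print(f"LOO accuracy {acc:.2f}, sensitivity {sens:.2f}, specificity {spec:.2f}, p = {p:.4f}")
```

With 12 highly correlated predictors, the pooled covariance can become ill-conditioned; a shrinkage or regularized LDA is a common alternative in that situation.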

For the behavioral data of the orthogonal task and the Emotion-matching task, the assumptions of normality and homoscedasticity were checked using Shapiro–Wilk and Levene's tests, respectively. For normally distributed data, an independent-samples t test was applied; otherwise, we performed a Mann–Whitney U test. When the assumption of homogeneity of variances was violated, degrees of freedom were corrected using the Welch–Satterthwaite method. For the Emotion Recognition Task, we applied a linear mixed-model ANOVA with intensity level (50% vs. 100%) and expression (anger, fear, happiness, sadness, disgust, surprise) as fixed within-subject factors and group as a between-subject factor. Again, we included a random intercept per participant.
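The decision procedure for the two-group behavioral comparisons can be sketched with SciPy. Assumptions: normality is checked separately within each group, α = 0.05 for both assumption checks, and a non-normal result in either group triggers the Mann–Whitney U test. SciPy's Welch t test (equal_var=False) uses Welch–Satterthwaite degrees of freedom. This is an illustration, not the authors' analysis script, and the scores are synthetic.

```python
import numpy as np
from scipy import stats


def compare_groups(a, b, alpha: float = 0.05):
    """Return (test name, statistic, p-value) following the decision rules in the text."""
    normal = stats.shapiro(a).pvalue > alpha and stats.shapiro(b).pvalue > alpha
    if not normal:
        res = stats.mannwhitneyu(a, b, alternative="two-sided")
        return "Mann-Whitney U", float(res.statistic), float(res.pvalue)
    equal_var = stats.levene(a, b).pvalue > alpha
    res = stats.ttest_ind(a, b, equal_var=equal_var)  # equal_var=False -> Welch's t test
    return ("Student t" if equal_var else "Welch t"), float(res.statistic), float(res.pvalue)


# synthetic accuracy scores (%) for two groups of 23
rng = np.random.default_rng(3)
group_a = rng.normal(60, 10, 23)
group_b = rng.normal(70, 10, 23)
print(compare_groups(group_a, group_b))
```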

All assumptions regarding linearity, normality and constant variance of the residuals were verified and met for all linear mixed-model ANOVAs.

Due to equipment failure, data on the Emotion Recognition Task were missing for one TD participant. In addition, data of the Emotion-matching task were discarded for one TD participant because he did not follow the instructions and randomly pressed the buttons.

All analyses have been performed with and without inclusion of colorblind children, ASD children with comorbidities, and ASD children who take medication. As their inclusion/exclusion did not affect any results, we only report results with all participants included.

Results

General Visual Base Rate Responses

Clear brain responses were visible at the 6 Hz base rate and its harmonics, reflecting the general visual response to the faces (Fig. 2). The response was distributed over medial occipital sites. The linear mixed-model ANOVA revealed a highly significant main effect of ROI (F(2,498) = 441.26, p < 0.001), with planned contrasts indicating the highest responses in the MO region and the lowest in the LOT region (M_LOT = 2.49 < M_ROT = 3.19 < M_MO = 7.21; LOT vs. MO: t(498) = −27.52; LOT vs. ROT: t(498) = −4.07; ROT vs. MO: t(498) = −23.45; all p_Bonferroni < 0.001). There were no other significant main or interaction effects (all p > 0.15), suggesting similar synchronization to the flickering stimuli in the two participant groups.

Fig. 2 Similar general visual responses to faces in ASD and TD boys. Left: SNR spectrum over the averaged electrodes of the MO region, with clear peaks at the base frequency (6 Hz) and its two subsequent harmonics (12 Hz and 18 Hz). Middle: scalp distribution of the general visual base rate responses. The four most leftward and four most rightward open circles on the topographical map constitute LOT and ROT, respectively; the two central open circles constitute MO. Right: summed baseline-subtracted amplitudes across the first three harmonics of the base rate (6, 12 and 18 Hz) for each of the three ROIs [medial occipital (MO) and left and right occipito-temporal (LOT and ROT) regions]. Error bars indicate standard errors of the mean. The main effect of ROI is indicated on the bar graphs, with MO > LOT and ROT, and ROT > LOT

Fear Discrimination Responses

Figure 3 visualizes clear fear discrimination responses in the four experimental conditions at the oddball frequency and its harmonics.

Fig. 3 Oddball responses for each experimental condition (defined by the orientation of the face and the position of the fixation cross; eye fixation at the top, mouth fixation at the bottom), visualized via two measures: (1) SNR spectra averaged across the three ROIs, and (2) bar graphs of the summed baseline-subtracted amplitudes for the first seven oddball harmonics (excluding 6 Hz, i.e. the dashed line). Error bars reflect standard errors of the mean

Most importantly, the linear mixed-model ANOVA of the fear detection responses showed a highly significant main effect of group, with higher responses in the TD group (M_TD = 1.69) than in the ASD group (M_ASD = 1.08; F(1,44) = 12.17, p = 0.001; Fig. 4a). Additionally, the main effect of orientation (F(1,498) = 11.52, p < 0.001) indicated higher fear discrimination responses for upright than for inverted faces (M_inverted = 1.28 < M_upright = 1.49; Fig. 4b). The main effect of fixation (F(1,498) = 155.51, p < 0.001) demonstrated much higher discrimination responses when the fixation cross was placed on the mouth rather than on the eyes (M_eyes = 1.01 < M_mouth = 1.76; Fig. 4c). The absence of interactions with group (all p > 0.56) indicated that all these effects were equally present in the TD and the ASD group. There was no main effect of ROI (p > 0.63).

Fig. 4 Main effects of group, orientation and fixation. Mean fear discrimination responses (averaged across all three ROIs) of both participant groups in all experimental conditions, visualized via scalp topographies and bar graphs of the summed baseline-subtracted amplitudes for the included oddball harmonics (up to 8.4 Hz, excluding the 6 Hz harmonic). Error bars are standard errors of the mean. a The main effect of group shows overall higher responses to fearful faces in the TD group than in the ASD group; these higher responses of the TD group remain visible in all conditions. b The main effect of orientation demonstrates a clear inversion effect, with significantly higher fear discrimination responses to upright than to inverted faces. c The main effect of fixation reveals significantly higher responses when the fixation cross is placed on the mouth than on the eye region

Thus, the group analysis revealed large and significant quantitative differences in the amplitude of the fear discrimination response between TD and ASD. Yet, it is also important to investigate to what extent reliable fear discrimination responses can be recorded at the individual subject level. Statistical analysis of the individual subject data confirmed that all subjects but one boy with ASD (45/46) displayed a significant discrimination response for the most robust condition with upright faces and fixation cross on the mouth (z > 1.64, p < 0.05). See Table 2 for the results in all conditions.

Thus far, no reliable biomarker distinguishing people with and without ASD has been established (Raznahan et al. 2009). To qualify as a biomarker, objective quantifications of biological and functional processes are needed at the individual level (McPartland 2016; McPartland 2017), rather than mere statistical group differences. To evaluate the potential of our fear detection paradigm as a sensitive and objective marker of clinical status, we analyzed how well these responses predict the group membership of our participants. To estimate how well the LDA classification generalizes, we used leave-one-out cross-validation, which estimated an overall accuracy of 83% for predicting group membership. More specifically, sensitivity (i.e. correctly classifying individuals with ASD in the ASD group) and specificity (i.e. correctly classifying TD boys in the TD group) were estimated at 78% and 87%, respectively. The linear separation between both groups based on the full dataset is shown in Fig. 5. Permutation testing (10,000 permutations) indicated that the probability of obtaining the observed accuracy by chance was p < 0.0001, also when the neural responses at the 7.2 Hz oddball harmonic, or at both the 7.2 Hz and 8.4 Hz oddball harmonics, were additionally included.

Fig. 5 Violin plot of the LDA classification. The horizontal line represents the decision boundary of the LDA classifier and illustrates the separation between the two groups. When fitted to the full dataset, the LDA correctly classifies 21 of 23 participants with ASD and 22 of 23 TD participants. In white: mean ± 1 SD
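As a quick arithmetic check of how these percentages relate to the group sizes (23 per group), the small sketch below computes sensitivity, specificity and accuracy from confusion counts. The leave-one-out counts (18/23 and 20/23) are our inference from the reported 78% and 87%, not numbers given in the text; the full-dataset counts (21/23 and 22/23) are those reported for the Fig. 5 fit. The gap between the two illustrates why the cross-validated estimate, not the full-data fit, is the relevant measure of generalization.

```python
def classification_rates(tp: int, n_asd: int, tn: int, n_td: int):
    """Sensitivity, specificity and overall accuracy (ASD = positive class)."""
    sensitivity = tp / n_asd
    specificity = tn / n_td
    accuracy = (tp + tn) / (n_asd + n_td)
    return sensitivity, specificity, accuracy


loo = classification_rates(tp=18, n_asd=23, tn=20, n_td=23)   # inferred from 78% / 87%
full = classification_rates(tp=21, n_asd=23, tn=22, n_td=23)  # reported full-data fit
print([round(100 * r) for r in loo])   # [78, 87, 83]
print([round(100 * r) for r in full])  # [91, 96, 93]
```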

Behavioral Measures: Orthogonal Task and Explicit Facial Emotion Processing

Mann–Whitney U tests revealed no group differences in accuracy (M_ASD = 90%, SD = 12; M_TD = 93%, SD = 6.8; W = 215, p = 0.54) or reaction times (M_ASD = 0.53 s, M_TD = 0.48 s; W = 296, p = 0.21) on the fixation cross color change detection task, suggesting a similar level of attention in both groups throughout the EEG experiment.

For both explicit emotion processing computer tasks, all ASD and TD participants performed above chance level. A mixed-model ANOVA on the accuracy data of the Emotion Recognition Task showed that full-blown expressions were labelled more accurately compared to expressions presented at 50% intensity (F(1,478) = 5.59, p = 0.019). A main effect of emotion (F(5,478) = 76.32, p < 0.001) revealed that happy and angry faces were most often labelled correctly, whereas fearful and sad faces were the most difficult to label correctly. The main effect of group and the interaction effects were not significant (all p > 0.40). To ensure that results were not driven by differential response biases, we calculated how often specific emotion labels were chosen by each individual. Since we did not find group differences in response bias (see Appendix Table 3), there was no need to repeat the analysis with corrected performances.

Whereas both groups performed comparably on emotion labelling, a significant group difference emerged for the matching of expressive faces, with the TD group outperforming the ASD group (MASD = 63%, SD = 11.0; MTD = 69%, SD = 6.8; t(37.01) = −2.29, p = 0.028). Reaction times did not differ between groups (MASD = 4.27 s, MTD = 4.24 s, t(41.78) = 0.08, p = 0.94).
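The fractional degrees of freedom reported above (37.01 and 41.78) are characteristic of Welch's unequal-variance t-test, which we assume was used here. The sketch below recomputes the statistic and the Welch-Satterthwaite degrees of freedom from summary values; the function name is hypothetical, and the group sizes of 23 are taken from the LDA figure caption rather than stated for this analysis.

```python
import math


def welch_t(mean1, sd1, n1, mean2, sd2, n2):
    """Welch's t and Welch-Satterthwaite df from group summary statistics."""
    se1 = sd1 ** 2 / n1  # squared standard error of mean 1
    se2 = sd2 ** 2 / n2  # squared standard error of mean 2
    t = (mean1 - mean2) / math.sqrt(se1 + se2)
    df = (se1 + se2) ** 2 / (se1 ** 2 / (n1 - 1) + se2 ** 2 / (n2 - 1))
    return t, df


# Rounded matching-task accuracies from the text, assuming 23 boys per group.
t, df = welch_t(63.0, 11.0, 23, 69.0, 6.8, 23)
print(f"t({df:.2f}) = {t:.2f}")
```

With these rounded inputs the sketch gives approximately t(36.7) = -2.23, close to but not identical with the reported t(37.01) = −2.29; exact agreement would require the unrounded data.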

Discussion

With FPVS-EEG, we evaluated the implicit neural sensitivity of school-aged boys with and without ASD to detect briefly presented fearful faces among a stream of neutral faces, and we investigated to what extent this sensitivity is influenced by the orientation of the face and by attentional focus on the eye versus the mouth region. In addition, we analyzed the performance of both groups on two explicit tasks: an emotion labelling and an emotion matching task.

No group differences were found for the general visual base rate responses, indicating that the brains of children with and without ASD are equally capable of synchronizing with the periodically flickering stimuli, irrespective of the position of the fixation cross or the orientation of the presented faces. The responses to changes in expression, however, did reveal group differences. We found an overall lower sensitivity to detect fearful faces in boys with ASD than in TD boys, regardless of whether the faces were presented upright or inverted, or whether attention was directed towards the eye or the mouth region. As the groups did not differ in accuracy or response time on the orthogonal task, there is no evidence of reduced attention or motivation in the ASD participants. The experimental conditions had similar effects in both groups, with higher discrimination responses for upright than for inverted faces, and for fixation on the mouth than on the eyes. On the Emotion Recognition Task, both groups performed equally well, labelling full-blown expressions more accurately than half-intensity expressions, and happy and angry expressions more accurately than sad and fearful ones. On the emotion matching task, by contrast, the TD group outperformed the ASD group.

Neural Responses in Children Versus Adults

Clear responses to brief changes in facial expression were visible in both participant groups, indicating that 8- to 12-year-old boys can detect rapid changes to fearful expressions. Comparing the brain responses of the TD boys in our sample with those of healthy adults in an identical FPVS paradigm (Dzhelyova et al. 2016) reveals topographical differences for the oddball responses, but not for the base rate responses. In both children and adults, base rate responses were recorded over medial occipital (MO) sites, spreading bilaterally to the left and right occipito-temporal regions, with a right hemisphere advantage. The expression-change responses of adults were distributed over occipito-temporal sites, with a right hemisphere advantage (Dzhelyova et al. 2016), whereas the oddball responses of the children in our study did not show this clear lateralization. The relatively larger involvement of MO sites in fear detection in children compared to adults may reflect a larger contribution of the primary visual cortex, and thus of low-level visual processing (Dzhelyova et al. 2016; Dzhelyova and Rossion 2014a; Liu-Shuang et al. 2014). Indeed, the neural system involved in (expressive) face processing progressively specializes throughout development (Cohen Kadosh and Johnson 2007; Leppänen and Nelson 2009), which is mirrored by a shift from a broader medial distribution of neural activation in childhood to a more focused (bi)lateral or unilateral distribution in adulthood (de Haan 2011; Dzhelyova et al. 2016; Taylor et al. 2004).

The typical age-related improvement in facial emotion processing (Herba et al. 2006; Herba and Phillips 2004; Luyster et al. 2017) seems to be absent (Gepner 2001; Rump et al. 2009), or at least less pronounced (Trevisan and Birmingham 2016), in individuals with ASD. For example, although results are mixed, altered latencies and/or amplitudes of the N170 component in ASD relative to TD individuals have been reported from early childhood (Dawson et al. 2004) through adolescence (Batty et al. 2011; Wang et al. 2004). However, results differ when participants are matched on verbal or mental age instead of chronological age, suggesting a developmental delay in specialized facial expression processing in children with ASD (Batty et al. 2011; De Jong et al. 2008), and the neural mechanisms underlying the developmental trajectory of facial expression processing in ASD remain unclear (Leung 2018). From a developmental perspective, applying this paradigm in children, adolescents and adults with ASD could therefore clarify the course of atypical maturation in individuals with ASD.

Reduced Neural Sensitivity to Fearful Faces in ASD

Regarding the topographical distribution of the selective neural response to fearful faces, we found no difference between the ASD and TD groups, suggesting that both groups use a similar emotional face processing network. However, given the progressive development of emotional face processing capacities in childhood, group differences in topography may still emerge in adolescence and adulthood.

Turning to the size of the selective response to fearful faces, we do observe clear group differences, with lower amplitudes in the ASD sample. Given that adults with ASD show impaired emotion detection (Frank et al. 2018), it is perhaps not surprising that a deficit in this ability is already present in childhood. Importantly, the reduced neural sensitivity for detecting fearful faces among a stream of neutral faces is not due to a deficit in implicitly detecting oddball categories per se. Indeed, a parallel study in a related group of 8- to 12-year-old boys with ASD and matched TD controls (Vettori et al. 2019) showed intact generic face categorization responses in children with ASD, indicating an equal sensitivity to implicitly detect faces within a stream of non-social images. However, the boys with ASD were clearly less sensitive to the more subtle socio-communicative cues signaling the appearance of a different facial identity (Vettori et al. 2019). In the present study, we used only fearful faces to investigate facial expression discrimination. Including other emotions could elucidate whether facial emotion detection deficits in individuals with ASD are specific to fear or generalize to other facial expressions.

Previous studies have shown that age (Lozier et al. 2014; Luyster et al. 2017) and intellectual ability (Hileman et al. 2011; Trevisan and Birmingham 2016) may influence emotion processing performance. As our participant groups were closely matched on age and IQ, the observed group difference in neural sensitivity to fearful faces cannot be attributed to these factors. Nor can the group difference be explained by reduced attention in the ASD group, given the comparable performance of both groups on the orthogonal task. Five participants with ASD had a comorbid ADHD diagnosis, which may affect attention and has been associated with emotion recognition deficits (Tye et al. 2014). Yet excluding these participants did not alter the findings, indicating that the observed group difference in oddball responses is robust and not driven by comorbid ADHD.

Another factor that could explain the differences in fear detection is social functioning, which has been related to emotional face processing at the neural level (Dawson et al. 2004; Yeung et al. 2014). As evaluating this factor was beyond the scope of our study, we did not collect early personal data on the social behavior of our participants other than the SRS, nor did we administer additional behavioral tasks tapping more directly into social skills. Future studies could further explore whether and how differences in social functioning affect emotion perception.

Inversion Affects Fear Detection

Face processing, both in terms of identity and expression, typically involves a holistic/configural approach (Rossion 2013; Tanaka and Farah 1993). Accordingly, inverting faces typically disrupts performance because it forces a less efficient, more feature-based approach: the face inversion effect (Rossion 2008; Tanaka and Simonyi 2016). Previous studies with similar FPVS-EEG paradigms have indeed demonstrated significantly reduced oddball responses for upside-down compared to upright faces, both for identity discrimination in TD children (Vettori et al. 2019) and adults (Liu-Shuang et al. 2014) and for expression discrimination in adults (Dzhelyova et al. 2016). Moreover, Vettori et al. (2019) showed a strong inversion effect for facial identity discrimination in TD boys but no inversion effect in boys with ASD. These findings were interpreted as evidence for holistic face perception in TD boys and a more feature-based face processing strategy in ASD (Vettori et al. 2019). In the current study, we found a significant face inversion effect in both the TD and the ASD sample, suggesting that both groups generally apply a holistic approach to facial expression processing, complemented by an effective feature-based approach. There is evidence that facial expression processing, and fear detection in particular (Bombari et al. 2013), depends more strongly on specific salient facial features than on the configural relationship between those features (Sweeny et al. 2013). In our study, for instance, the open mouth of the fearful faces might have facilitated fear detection, even in the inverted condition.

Directing Attention to the Mouth Facilitates Fear Detection

Evidence regarding the role of the eyes versus the mouth in fear recognition is mixed (Beaudry et al. 2014; Eisenbarth and Alpers 2011; Guarnera et al. 2015). Similarly, even though reduced eye contact is one of the clinical criteria of ASD (American Psychiatric Association 2014), the empirical evidence that individuals with ASD focus less on the eyes and more on the mouth is equivocal (Bal et al. 2010; Black et al. 2017; Guillon et al. 2014; Nuske et al. 2014). Here, we found higher fear discrimination responses in boys with ASD when their attention was directed towards the mouth instead of the eyes, suggesting that the mouth region is more informative for them than the eye region. Rather unexpectedly, however, this was also the case in the TD group. Apparently, for both groups of children, the mouth is a more salient cue than the eyes for rapidly detecting fearful faces. It has indeed been suggested that the mouth is the most informative area for expression processing (Blais et al. 2012) and that an open mouth might enhance early automatic attention (Langeslag et al. 2018). In particular, the presence of teeth tends to augment neural responses to expressive faces (DaSilva et al. 2016), and the contrast of white teeth against the darker mouth opening and lips might draw attention. Although the images in our study were presented at a very fast rate, these low-level changes in the fearful mouth might have elicited larger responses.

Implicit Versus Explicit Emotion Processing

The inconsistent findings across the two behavioral face processing tasks align with the generally mixed findings of previous behavioral research (Lacroix et al. 2014; Uljarevic and Hamilton 2013). Unlike the implicit FPVS-EEG paradigm, explicit tasks allow the use of various verbal, perceptual and cognitive compensatory strategies (Harms et al. 2010), which may help individuals with ASD to compensate for their intrinsic emotion processing deficits (Frank et al. 2018). These compensatory mechanisms, as well as task characteristics, could account for the mixed findings on behavioral discrimination between ASD and TD individuals (Jemel et al. 2006; Lozier et al. 2014; Nuske et al. 2013; Uljarevic and Hamilton 2013), and point to the limited sensitivity of (certain) behavioral measures to pinpoint the socio-communicative impairments of individuals with ASD (Harms et al. 2010).

The (small) group difference on the matching task might relate to the more feature-based approach used by the children with ASD to process facial expressions. Because the target faces are paired with maximally confusable distractor emotions that share similar low-level features (Palermo et al. 2013), relying on separate facial features rather than on the configuration of the facial expression may hamper accurate emotion matching in the ASD group.

Future Research

In addition to behavioral emotion matching or labelling tasks, an explicit emotion detection task at the same rapid presentation rate would allow a more direct comparison of implicit and explicit emotion discrimination abilities in these samples.

Our results support the sensitivity of the FPVS-EEG approach to rapidly detect and quantify even small responses at the individual level (Dzhelyova et al. 2016; Liu-Shuang et al. 2014; Liu-Shuang et al. 2016). Furthermore, because the expression change frequency is defined in advance, the discrimination response can be measured directly and objectively at that frequency, without the complexity of post hoc subtraction of responses (Campanella et al. 2002; Gayle et al. 2012; Stefanics et al. 2012). It also removes the need to subjectively define various components and time windows, as is done in the standard ERP approach (Kremláček et al. 2016). Another asset of the FPVS-EEG approach is that many discrimination responses are acquired in a short time, owing to the rapid stimulus presentation and the high signal-to-noise ratio. Whereas typical ERP studies need many trials and long recordings to obtain reliable responses, we needed only four stimulation sequences of 40 s to reliably measure implicit neural discrimination. All these advantages make it a suitable approach for studying populations that are often difficult to include in research, such as infants and people with low-functioning ASD. Furthermore, the promising result of the LDA classification suggests that this technique (possibly combining several paradigms) has potential as a biomarker for ASD. However, to fully understand the potential of FPVS-EEG as a biomarker for socio-communicative deficits, more research is needed in (clinical) samples of different ages and/or IQ levels.
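The advantage of a predefined expression change frequency can be illustrated with a simplified frequency-domain analysis of a synthetic signal. This sketch assumes a single channel sampled at 256 Hz and one 40 s sequence, so the bin width is 1/40 s = 0.025 Hz and both 1.2 Hz and 6 Hz fall exactly on FFT bins. Noise is estimated as the mean of 10 bins on each side of the target, skipping the adjacent bin. These settings, the number of harmonics summed and all function names are assumptions for illustration, not the study's pipeline. Oddball harmonics that coincide with base harmonics (6 Hz, 12 Hz, ...) are skipped because they also carry the general visual response.

```python
import numpy as np

FS = 256.0                         # assumed sampling rate in Hz (hypothetical)
DURATION = 40.0                    # one stimulation sequence, in s
BASE_HZ, ODDBALL_HZ = 6.0, 1.2     # neutral-face rate and fearful-face rate


def amplitude_spectrum(signal, fs):
    """Single-sided FFT amplitude spectrum; bin width is 1 / duration."""
    n = len(signal)
    amps = np.abs(np.fft.rfft(signal)) * 2.0 / n
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    return freqs, amps


def bin_index(freqs, target):
    """Index of the FFT bin closest to the target frequency."""
    return int(np.argmin(np.abs(freqs - target)))


def neighbour_mean(amps, idx, n_side=10, skip=1):
    """Mean amplitude of n_side bins on each side of idx, ignoring the
    `skip` bins immediately adjacent to it (simplified noise estimate)."""
    lo = amps[idx - skip - n_side: idx - skip]
    hi = amps[idx + skip + 1: idx + skip + 1 + n_side]
    return np.concatenate([lo, hi]).mean()


def snr_at(freqs, amps, target):
    """Signal-to-noise ratio: target-bin amplitude over neighbouring bins."""
    idx = bin_index(freqs, target)
    return amps[idx] / neighbour_mean(amps, idx)


def oddball_harmonics(n, oddball=ODDBALL_HZ, ratio=5):
    """First n oddball harmonics, skipping every ratio-th one because it
    coincides with a base harmonic (5 x 1.2 Hz = 6 Hz, 10 x 1.2 Hz = 12 Hz)."""
    out, k = [], 0
    while len(out) < n:
        k += 1
        if k % ratio:
            out.append(k * oddball)
    return out


def summed_oddball_response(freqs, amps, n_harmonics=4):
    """Sum of baseline-corrected amplitudes over the first oddball harmonics."""
    total = 0.0
    for f in oddball_harmonics(n_harmonics):
        idx = bin_index(freqs, f)
        total += amps[idx] - neighbour_mean(amps, idx)
    return total


# Synthetic single-channel "EEG": a 6 Hz base response, a weaker 1.2 Hz
# oddball response with one harmonic, plus white noise (not real data).
rng = np.random.default_rng(0)
t = np.arange(int(FS * DURATION)) / FS
eeg = (1.0 * np.sin(2 * np.pi * BASE_HZ * t)
       + 0.3 * np.sin(2 * np.pi * ODDBALL_HZ * t)
       + 0.15 * np.sin(2 * np.pi * 2 * ODDBALL_HZ * t)
       + rng.normal(0.0, 1.0, t.size))
freqs, amps = amplitude_spectrum(eeg, FS)
print("faces per sequence:", round(BASE_HZ * DURATION),
      "of which fearful:", round(ODDBALL_HZ * DURATION))
print("SNR at 1.2 Hz:", round(snr_at(freqs, amps, ODDBALL_HZ), 1))
print("summed oddball response:", round(summed_oddball_response(freqs, amps), 2))
```

Because the response of interest sits at a frequency fixed by the design, no subtraction between conditions or choice of time window is needed in this sketch: the discrimination response is read directly from the spectrum at 1.2 Hz and its harmonics.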

Conclusions

Our results indicate that children with ASD are less sensitive in rapidly and implicitly detecting fearful faces among a stream of neutral faces, which may contribute to their difficulties with emotion processing. Children with and without ASD both apply a combined holistic and feature-based processing style, and rely mostly on information from the mouth region to detect fearful faces.

The advantages of FPVS-EEG, namely its implicit nature, the strength of the effects, and its straightforward application and analysis, pave the way for including populations that are often excluded from studies because of verbal or cognitive constraints.

References

Adrian, E. D., & Matthews, B. H. C. (1934). The Berger Rhythm: Potential changes from the occipital lobes in man. Brain, 57, 355–385.

Alonso-Prieto, E., Van Belle, G., Liu-Shuang, J., Norcia, A. M., & Rossion, B. (2013). The 6 Hz fundamental stimulation frequency rate for individual face discrimination in the right occipito-temporal cortex. Neuropsychologia, 51, 2863–2875. https://doi.org/10.1016/j.neuropsychologia.2013.08.018.

American Psychiatric Association. (2000). Diagnostic and Statistical Manual of Mental Disorders - TR (Dutch version) (4th ed.). Amsterdam: Uitgeverij Boom.

American Psychiatric Association. (2014). Diagnostic and Statistical Manual of Mental Disorders (Dutch version) (5th ed.). Amsterdam: Uitgeverij Boom.

Argyle, M. (1972). Non-verbal communication in human social interaction. In R. A. Hinde (Ed.), Non-verbal communication (pp. 243–270). London: Cambridge University Press.

Bal, E., Harden, E., Lamb, D., Van Hecke, A. V., Denver, J. W., & Porges, S. W. (2010). Emotion recognition in children with autism spectrum disorders: Relations to eye gaze and autonomic state. Journal of Autism and Developmental Disorders, 40, 358–370. https://doi.org/10.1007/s10803-009-0884-3.

Bates, D., Maechler, M., Bolker, B., & Walker, S. (2015). Fitting linear mixed-effects models using lme4. Journal of Statistical Software, 67(1), 1–48. https://doi.org/10.18637/jss.v067.i01.

Batty, M., Meaux, E., Wittemeyer, K., Rogé, B., & Taylor, M. J. (2011). Early processing of emotional faces in children with autism: An event-related potential study. Journal of Experimental Child Psychology, 109(4), 430–444. https://doi.org/10.1016/j.jecp.2011.02.001.

Beaudry, O., Roy-Charland, A., Perron, M., Cormier, I., & Tapp, R. (2014). Featural processing in recognition of emotional facial expressions. Cognition and Emotion, 28(3), 416–432. https://doi.org/10.1080/02699931.2013.833500.

Behrmann, M., Thomas, C., & Humphreys, K. (2006). Seeing it differently: Visual processing in autism. Trends in Cognitive Sciences, 10(6), 258–264. https://doi.org/10.1016/j.tics.2006.05.001.

Bell, A. J., & Sejnowski, T. J. (1995). An information-maximisation approach to blind separation and blind deconvolution. Neural Computation, 7(6), 1129–1159. https://doi.org/10.1162/neco.1995.7.6.1129.

Black, M. H., Chen, N. T. M., Iyer, K. K., Lipp, O. V., Bölte, S., Falkmer, M., et al. (2017). Mechanisms of facial emotion recognition in autism spectrum disorders: Insights from eye tracking and electroencephalography. Neuroscience and Biobehavioral Reviews, 80, 488–515. https://doi.org/10.1016/j.neubiorev.2017.06.016.

Blais, C., Roy, C., Fiset, D., Arguin, M., & Gosselin, F. (2012). The eyes are not the window to basic emotions. Neuropsychologia, 50, 2830–2838. https://doi.org/10.1016/j.neuropsychologia.2012.08.010.

Bombari, D., Schmid, P. C., Schmid Mast, M., Birri, S., Mast, F. W., & Lobmaier, J. S. (2013). Emotion recognition: The role of featural and configural face information. Quarterly Journal of Experimental Psychology, 66(12), 2426–2442. https://doi.org/10.1080/17470218.2013.789065.

Buehlmann, P., Kneib, T., Schmid, M., Hofner, B., Sobotka, F., Scheipl, F., … Hofner, M. B. (2018). Package “mboost”: Model-Based Boosting.

Campanella, S., Gaspard, C., Debatisse, D., Bruyer, R., Crommelinck, M., & Guerit, J. M. (2002). Discrimination of emotional facial expressions in a visual oddball task: an ERP study. Biological Psychology, 59, 171–186. https://doi.org/10.1016/S0301-0511(02)00005-4.

Cohen Kadosh, K., & Johnson, M. H. (2007). Developing a cortex specialized for face perception. Trends in Cognitive Sciences, 11(9), 367–369. https://doi.org/10.1016/j.tics.2007.06.007.

DaSilva, E. B., Crager, K., Geisler, D., Newbern, P., Orem, B., & Puce, A. (2016). Something to sink your teeth into: The presence of teeth augments ERPs to mouth expressions. NeuroImage, 127, 227–241. https://doi.org/10.1016/j.neuroimage.2015.12.020.

Dawson, G., Webb, S. J., Carver, L., Panagiotides, H., & Mcpartland, J. (2004). Young children with autism show atypical brain responses to fearful versus neutral facial expressions of emotion. Developmental Science, 7(3), 340–359. https://doi.org/10.1111/j.1467-7687.2004.00352.x.

de Haan, M. (2011). The neurodevelopment of face perception. In A. J. Calder, G. Rhodes, M. H. Johnson, & J. V. Haxby (Eds.), The Oxford handbook of face perception (pp. 731–751). New York: Oxford University Press Inc.

De Jong, M. C., Van Engeland, H., & Kemner, C. (2008). Attentional effects of gaze shifts are influenced by emotion and spatial frequency, but not in autism. Journal of the American Academy of Child and Adolescent Psychiatry, 47(4), 443–454. https://doi.org/10.1097/CHI.0b013e31816429a6.

Dzhelyova, M., Jacques, C., & Rossion, B. (2016). At a single glance: Fast periodic visual stimulation uncovers the spatio-temporal dynamics of brief facial expression changes in the human brain. Cerebral Cortex. https://doi.org/10.1093/cercor/bhw223.

Dzhelyova, M., & Rossion, B. (2014a). Supra-additive contribution of shape and surface information to individual face discrimination as revealed by fast periodic visual stimulation. Journal of Vision, 14(14), 15, 1–14. https://doi.org/10.1167/14.14.15.

Dzhelyova, M., & Rossion, B. (2014b). The effect of parametric stimulus size variation on individual face discrimination indexed by fast periodic visual stimulation. BMC Neuroscience. https://doi.org/10.1186/1471-2202-15-87.

Eisenbarth, H., & Alpers, G. W. (2011). Happy mouth and sad eyes: scanning emotional facial expressions. Emotion, 11(4), 860–865. https://doi.org/10.1037/a0022758.

Elfenbein, H. A., & Ambady, N. (2002). On the universality and cultural specificity of emotion recognition: A meta-analysis. Psychological Bulletin, 128(2), 203–235. https://doi.org/10.1037/0033-2909.128.2.203.

Ellison, J. W., & Massaro, D. W. (1997). Featural evaluation, integration, and judgment of facial affect. Journal of Experimental Psychology: Human Perception and Performance, 23(1), 213–226.

Evers, K., Steyaert, J., Noens, I., & Wagemans, J. (2015). Reduced recognition of dynamic facial emotional expressions and emotion-specific response bias in children with an autism spectrum disorder. Journal of Autism and Developmental Disorders, 45, 1774–1784. https://doi.org/10.1007/s10803-014-2337-x.

Feuerriegel, D., Churches, O., Hofmann, J., & Keage, H. A. D. (2015). The N170 and face perception in psychiatric and neurological disorders: A systematic review. Clinical Neurophysiology, 126, 1141–1158. https://doi.org/10.1016/j.clinph.2014.09.015.

Frank, R., Schulze, L., Hellweg, R., Koehne, S., & Roepke, S. (2018). Impaired detection and differentiation of briefly presented facial emotions in adults with high-functioning autism and Asperger syndrome. Behaviour Research and Therapy, 104, 7–13. https://doi.org/10.1016/j.brat.2018.02.005.

Gagnon, M., Gosselin, P., & Maassarani, R. (2014). Children’s ability to recognize emotions from partial and complete facial expressions. Journal of Genetic Psychology, 175(5), 416–430. https://doi.org/10.1080/00221325.2014.941322.

Gayle, L. C., Gal, D. E., & Kieffaber, P. D. (2012). Measuring affective reactivity in individuals with autism spectrum personality traits using the visual mismatch negativity event-related brain potential. Frontiers in Human Neuroscience. https://doi.org/10.3389/fnhum.2012.00334.

Gendron, M., Roberson, D., van der Vyver, J. M., & Feldman Barrett, L. (2014). Perceptions of emotion from facial expressions are not culturally universal: Evidence from a remote culture. Emotion, 14(2), 251–262. https://doi.org/10.1037/a0036052.

Gepner, B. (2001). Motion and emotion: A novel approach to the study of face processing by young autistic children. Journal of Autism and Developmental Disorders, 31(1), 37–45. https://doi.org/10.1023/A:1005609629218.

Gross, T. F. (2008). Recognition of immaturity and emotional expressions in blended faces by children with autism and other developmental disabilities. Journal of Autism and Developmental Disorders, 38, 297–311. https://doi.org/10.1007/s10803-007-0391-3.

Grossman, R. B., & Tager-Flusberg, H. (2008). Reading faces for information about words and emotions in adolescents with autism. Research in Autism Spectrum Disorders, 2, 681–695. https://doi.org/10.1016/j.rasd.2008.02.004.

Guarnera, M., Hichy, Z., Cascio, M. I., & Carrubba, S. (2015). Facial expressions and ability to recognize emotions from eyes or mouth in children. Europe’s Journal of Psychology, 11(2), 183–196. https://doi.org/10.5964/ejop.v11i2.890.

Guillon, Q., Hadjikhani, N., Baduel, S., & Rogé, B. (2014). Visual social attention in autism spectrum disorder: Insights from eye tracking studies. Neuroscience and Biobehavioral Reviews, 42, 279–297. https://doi.org/10.1016/j.neubiorev.2014.03.013.

Haney, J. L. (2016). Autism, females, and the DSM-5: Gender bias in autism diagnosis. Social Work in Mental Health, 14(4), 396–407. https://doi.org/10.1080/15332985.2015.1031858.

Harms, M. B., Martin, A., & Wallace, G. L. (2010). Facial emotion recognition in autism spectrum disorders: A review of behavioral and neuroimaging studies. Neuropsychology Review, 20, 290–322. https://doi.org/10.1007/s11065-010-9138-6.

Herba, C. M., Landau, S., Russell, T., Ecker, C., & Phillips, M. L. (2006). The development of emotion-processing in children: Effects of age, emotion, and intensity. Journal of Child Psychology and Psychiatry and Allied Disciplines, 47(11), 1098–1106. https://doi.org/10.1111/j.1469-7610.2006.01652.x.

Herba, C. M., & Phillips, M. (2004). Annotation: Development of facial expression recognition from childhood to adolescence: Behavioural and neurological perspectives. Journal of Child Psychology and Psychiatry and Allied Disciplines, 45(7), 1185–1198. https://doi.org/10.1111/j.1469-7610.2004.00316.x.

Hileman, C. M., Henderson, H., Mundy, P., Newell, L., & Jaime, M. (2011). Developmental and individual differences on the P1 and N170 ERP components in children with and without autism. Developmental Neuropsychology, 36(2), 214–236. https://doi.org/10.1080/87565641.2010.549870.

Hinojosa, J. A., Mercado, F., & Carretié, L. (2015). N170 sensitivity to facial expression: A meta-analysis. Neuroscience and Biobehavioral Reviews, 55, 498–509. https://doi.org/10.1016/j.neubiorev.2015.06.002.

Humphreys, K., Minshew, N., Leonard, G. L., & Behrmann, M. (2007). A fine-grained analysis of facial expression processing in high-functioning adults with autism. Neuropsychologia, 45, 685–695. https://doi.org/10.1016/j.neuropsychologia.2006.08.003.

Jemel, B., Mottron, L., & Dawson, M. (2006). Impaired face processing in autism: Fact or artifact? Journal of Autism and Developmental Disorders, 36(1), 91–106. https://doi.org/10.1007/s10803-005-0050-5.

Jeste, S. S., & Nelson, C. A. (2009). Event related potentials in the understanding of autism spectrum disorders: An analytical review. Journal of Autism and Developmental Disorders, 39, 495–510. https://doi.org/10.1007/s10803-008-0652-9.

Kang, E., Keifer, C. M., Levy, E. J., Foss-Feig, J. H., McPartland, J. C., & Lerner, M. D. (2018). Atypicality of the N170 event-related potential in autism spectrum disorder: A meta-analysis. Biological Psychiatry: Cognitive Neuroscience and Neuroimaging. https://doi.org/10.1016/j.bpsc.2017.11.003.

Kessels, R. P. C., Montagne, B., Hendriks, A. W., Perrett, D. I., & De Haan, E. H. F. (2014). Assessment of perception of morphed facial expressions using the emotion recognition task: Normative data from healthy participants aged 8–75. Journal of Neuropsychology, 8, 75–93. https://doi.org/10.1111/jnp.12009.

Kremláček, J., Kreegipuu, K., Tales, A., Astikainen, P., Põldver, N., Näätänen, R., et al. (2016). Visual mismatch negativity (vMMN): A review and meta-analysis of studies in psychiatric and neurological disorders. Cortex, 80, 76–112. https://doi.org/10.1016/j.cortex.2016.03.017.

Kuhn, M., & Johnson, K. (2013). Applied predictive modeling. New York: Springer. https://doi.org/10.1007/978-1-4614-6849-3.

Lacroix, A., Guidetti, M., Rogé, B., & Reilly, J. (2014). Facial emotion recognition in 4- to 8-year-olds with autism spectrum disorder: A developmental trajectory approach. Research in Autism Spectrum Disorders, 8, 1146–1154. https://doi.org/10.1016/j.rasd.2014.05.012.

Langeslag, S. J. E., Gootjes, L., & van Strien, J. W. (2018). The effect of mouth opening in emotional faces on subjective experience and the early posterior negativity amplitude. Brain and Cognition, 127, 51–59. https://doi.org/10.1016/j.bandc.2018.10.003.

Law Smith, M. J., Montagne, B., Perrett, D. I., Gill, M., & Gallagher, L. (2010). Detecting subtle facial emotion recognition deficits in high-functioning Autism using dynamic stimuli of varying intensities. Neuropsychologia, 48, 2777–2781. https://doi.org/10.1016/j.neuropsychologia.2010.03.008.

Leleu, A., Dzhelyova, M., Rossion, B., Brochard, R., Durand, K., Schaal, B., et al. (2018). Tuning functions for automatic detection of brief changes of facial expression in the human brain. NeuroImage, 179, 235–251. https://doi.org/10.1016/j.neuroimage.2018.06.048.

Leppänen, J. M., & Nelson, C. A. (2009). Tuning the developing brain to social signals of emotions. Nature Reviews Neuroscience, 10, 37–47. https://doi.org/10.1038/nrn2554.

Leung, D., Ordqvist, A., Falkmer, T., Parsons, R., & Falkmer, M. (2013). Facial emotion recognition and visual search strategies of children with high functioning autism and Asperger syndrome. Research in Autism Spectrum Disorders, 7, 833–844. https://doi.org/10.1016/j.rasd.2013.03.009.

Leung, R. C. (2018). Neural substrates of emotional face processing across development in autism spectrum disorder.

Liu-Shuang, J., Norcia, A. M., & Rossion, B. (2014). An objective index of individual face discrimination in the right occipito-temporal cortex by means of fast periodic oddball stimulation. Neuropsychologia, 52, 57–72. https://doi.org/10.1016/j.neuropsychologia.2013.10.022.

Liu-Shuang, J., Torfs, K., & Rossion, B. (2016). An objective electrophysiological marker of face individualisation impairment in acquired prosopagnosia with fast periodic visual stimulation. Neuropsychologia, 83, 100–113. https://doi.org/10.1016/j.neuropsychologia.2015.08.023.

Loomes, R., Hull, L., & Mandy, W. P. L. (2017). What is the male-to-female ratio in autism spectrum disorder? A systematic review and meta-analysis. Journal of the American Academy of Child and Adolescent Psychiatry, 56(6), 466–474. https://doi.org/10.1016/j.jaac.2017.03.013.

Lozier, L. M., Vanmeter, J. W., & Marsh, A. A. (2014). Impairments in facial affect recognition associated with autism spectrum disorders: A meta-analysis. Development and Psychopathology, 26, 933–945. https://doi.org/10.1017/S0954579414000479.

Luckhardt, C., Jarczok, T. A., & Bender, S. (2014). Elucidating the neurophysiological underpinnings of autism spectrum disorder: New developments. Journal of Neural Transmission, 121, 1129–1144. https://doi.org/10.1007/s00702-014-1265-4.

Lundqvist, D., Flykt, A., & Öhman, A. (1998). The Karolinska directed emotional faces—KDEF. Stockholm: Karolinska Institutet.

Luyster, R. J., Bick, J., Westerlund, A., & Nelson, C. A., III. (2017). Testing the effects of expression, intensity and age on emotional face processing in ASD. Neuropsychologia. https://doi.org/10.1016/j.neuropsychologia.2017.06.023.

Makeig, S., Bell, A. J., Jung, T.-P., & Sejnowski, T. J. (1995). Independent component analysis of electroencephalographic data. In D. S. Touretzky, M. C. Mozer, & M. E. Hasselmo (Eds.), Advances in neural information processing systems 8 (pp. 145–151). Cambridge (MA): MIT Press.

McClure, E. (2000). A meta-analytic review of sex differences in facial expression processing and their development in infants, children, and adolescents. Psychological Bulletin, 126(3), 424–453.

McMahon, C. M., Henderson, H. A., Newell, L., Jaime, M., & Mundy, P. (2016). Metacognitive awareness of facial affect in higher-functioning children and adolescents with autism spectrum disorder. Journal of Autism and Developmental Disorders, 46, 882–898. https://doi.org/10.1007/s10803-015-2630-3.

McPartland, J. C. (2016). Considerations in biomarker development for neurodevelopmental disorders. Current Opinion in Neurology, 29(2), 118–122. https://doi.org/10.1097/WCO.0000000000000300.

McPartland, J. C. (2017). Developing clinically practicable biomarkers for autism spectrum disorder. Journal of Autism and Developmental Disorders, 47, 2935–2937. https://doi.org/10.1007/s10803-017-3237-7.

Montagne, B., Kessels, R. P. C., De Haan, E. H. F., & Perrett, D. I. (2007). The emotion recognition task: A paradigm to measure the perception of facial emotional expressions at different intensities. Perceptual and Motor Skills, 104, 589–598. https://doi.org/10.2466/PMS.104.2.589-598.

Monteiro, R., Simões, M., Andrade, J., & Castelo Branco, M. (2017). Processing of facial expressions in autism: A systematic review of EEG/ERP evidence. Review Journal of Autism and Developmental Disorders, 4, 255–276. https://doi.org/10.1007/s40489-017-0112-6.

Neumann, D., Spezio, M. L., Piven, J., & Adolphs, R. (2006). Looking you in the mouth: Abnormal gaze in autism resulting from impaired top-down modulation of visual attention. Social Cognitive and Affective Neuroscience, 1, 194–202. https://doi.org/10.1093/scan/nsl030.

Noirhomme, Q., Lesenfants, D., Gomez, F., Soddu, A., Schrouff, J., Garraux, G., … Laureys, S. (2014). Biased binomial assessment of cross-validated estimation of classification accuracies illustrated in diagnosis predictions. NeuroImage: Clinical, 4, 687–694. https://doi.org/10.1016/j.nicl.2014.04.004.

Nuske, H. J., Vivanti, G., & Dissanayake, C. (2013). Are emotion impairments unique to, universal, or specific in autism spectrum disorder? A comprehensive review. Cognition & Emotion, 27(6), 1042–1061. https://doi.org/10.1080/02699931.2012.762900.

Nuske, H. J., Vivanti, G., & Dissanayake, C. (2014a). Reactivity to fearful expressions of familiar and unfamiliar people in children with autism: An eye-tracking pupillometry study. Journal of Neurodevelopmental Disorders, 6, 14.

Nuske, H. J., Vivanti, G., Hudry, K., & Dissanayake, C. (2014b). Pupillometry reveals reduced unconscious emotional reactivity in autism. Biological Psychology, 101, 24–35. https://doi.org/10.1016/j.biopsycho.2014.07.003.

Palermo, R., O’Connor, K. B., Davis, J. M., Irons, J., & McKone, E. (2013). New tests to measure individual differences in matching and labelling facial expressions of emotion, and their association with ability to recognise vocal emotions and facial identity. PLoS ONE. https://doi.org/10.1371/journal.pone.0068126.

Pelphrey, K., Sasson, N. J., Reznick, J. S., Paul, G., Goldman, B. D., & Piven, J. (2002). Visual scanning of faces in autism. Journal of Autism and Developmental Disorders, 32(4), 249–261. https://doi.org/10.1023/A:101637461.

Raznahan, A., Giedd, J. N., & Bolton, P. F. (2009). Neurostructural endophenotypes in autism spectrum disorder. In M. S. Ritsner (Ed.), The handbook of neuropsychiatric biomarkers, endophenotypes and genes volume II: Neuroanatomical and neuroimaging endophenotypes and biomarkers. New York: Springer. https://doi.org/10.15713/ins.mmj.3.

Retter, T. L., & Rossion, B. (2016). Uncovering the neural magnitude and spatio-temporal dynamics of natural image categorization in a fast visual stream. Neuropsychologia, 91, 9–28. https://doi.org/10.1016/j.neuropsychologia.2016.07.028.

Roeyers, H., Thys, M., Druart, C., De Schryver, M., & Schittekatte, M. (2012). SRS: Screeningslijst voor autismespectrumstoornissen. Amsterdam: Hogrefe.

Rosset, D. B., Rondan, C., Da Fonseca, D., Santos, A., Assouline, B., & Deruelle, C. (2008). Typical emotion processing for cartoon but not for real faces in children with autistic spectrum disorders. Journal of Autism and Developmental Disorders, 38, 919–925. https://doi.org/10.1007/s10803-007-0465-2.

Rossion, B. (2008). Picture-plane inversion leads to qualitative changes of face perception. Acta Psychologica, 128, 274–289. https://doi.org/10.1016/j.actpsy.2008.02.003.

Rossion, B. (2013). The composite face illusion: A whole window into our understanding of holistic face perception. Visual Cognition. https://doi.org/10.1080/13506285.2013.772929.

Rump, K. M., Giovannelli, J. L., Minshew, N. J., & Strauss, M. S. (2009). The development of emotion recognition in individuals with autism. Child Development, 80(5), 1434–1447.

Sattler, J. M. (2001). Assessment of children: Cognitive applications (4th ed.). San Diego: Jerome M Sattler Publisher Inc.

Sawyer, A. C. P., Williamson, P., & Young, R. L. (2012). Can gaze avoidance explain why individuals with Asperger’s syndrome can’t recognise emotions from facial expressions? Journal of Autism and Developmental Disorders, 42, 606–618. https://doi.org/10.1007/s10803-011-1283-0.

Smith, M. L. (2012). Rapid processing of emotional expressions without conscious awareness. Cerebral Cortex, 22, 1748–1760. https://doi.org/10.1093/cercor/bhr250.

Spezio, M. L., Adolphs, R., Hurley, R. S. E., & Piven, J. (2007). Analysis of face gaze in autism using “Bubbles”. Neuropsychologia, 45, 144–151. https://doi.org/10.1016/j.neuropsychologia.2006.04.027.

Stefanics, G., Csukly, G., Komlósi, S., Czobor, P., & Czigler, I. (2012). Processing of unattended facial emotions: A visual mismatch negativity study. NeuroImage, 59, 3042–3049. https://doi.org/10.1016/j.neuroimage.2011.10.041.

Sweeny, T. D., Suzuki, S., Grabowecky, M., & Paller, K. A. (2013). Detecting and categorizing fleeting emotions in faces. Emotion, 13(1), 76–91. https://doi.org/10.1037/a0029193.

Tanaka, J. W., & Farah, M. J. (1993). Parts and wholes in face recognition. Quarterly Journal of Experimental Psychology, 46A(2), 225–245. https://doi.org/10.1080/14640749308401045.

Tanaka, J. W., & Simonyi, D. (2016). The “parts and wholes” of face recognition: A review of the literature. Quarterly Journal of Experimental Psychology, 69(10), 1876–1889. https://doi.org/10.1080/17470218.2016.1146780.

Taylor, M. J., Batty, M., & Itier, R. J. (2004). The faces of development: A review of early face processing over childhood. Journal of Cognitive Neuroscience, 16(8), 1426–1442. https://doi.org/10.1162/0898929042304796.

Tracy, J. L., Robins, R. W., Schriber, R. A., & Solomon, M. (2011). Is emotion recognition impaired in individuals with autism spectrum disorders? Journal of Autism and Developmental Disorders, 41, 102–109. https://doi.org/10.1007/s10803-010-1030-y.

Trevisan, D. A., & Birmingham, E. (2016). Are emotion recognition abilities related to everyday social functioning in ASD? A meta-analysis. Research in Autism Spectrum Disorders, 32, 24–42. https://doi.org/10.1016/j.rasd.2016.08.004.

Tye, C., Battaglia, M., Bertoletti, E., Ashwood, K. L., Azadi, B., Asherson, P., et al. (2014). Altered neurophysiological responses to emotional faces discriminate children with ASD, ADHD and ASD + ADHD. Biological Psychology, 103, 125–134. https://doi.org/10.1016/j.biopsycho.2014.08.013.

Uljarevic, M., & Hamilton, A. (2013). Recognition of emotions in autism: A formal meta-analysis. Journal of Autism and Developmental Disorders, 43(7), 1517–1526. https://doi.org/10.1007/s10803-012-1695-5.

Vettori, S., Dzhelyova, M., Van der Donck, S., Jacques, C., Steyaert, J., Rossion, B., et al. (2019). Reduced neural sensitivity to rapid individual face discrimination in autism spectrum disorder. NeuroImage: Clinical. https://doi.org/10.1016/j.nicl.2018.101613.

Vettori, S., Jacques, C., Boets, B., & Rossion, B. (2018). Can the N170 be used as an electrophysiological biomarker indexing face processing difficulties in autism spectrum disorder? Biological Psychiatry: Cognitive Neuroscience and Neuroimaging. https://doi.org/10.1016/j.bpsc.2018.07.015.

Wallace, S., Coleman, M., & Bailey, A. (2008). An investigation of basic facial expression recognition in autism spectrum disorders. Cognition and Emotion, 22(7), 1353–1380. https://doi.org/10.1080/02699930701782153.

Wang, A. T., Dapretto, M., Hariri, A. R., Sigman, M., & Bookheimer, S. Y. (2004). Neural correlates of facial affect processing in children and adolescents with autism spectrum disorder. Journal of the American Academy of Child and Adolescent Psychiatry, 43(4), 481–490. https://doi.org/10.1097/00004583-200404000-00015.

Wechsler, D. (1992). Wechsler intelligence scale for children (3rd ed.). London: The Psychological Corporation.

Wegrzyn, M., Vogt, M., Kireclioglu, B., Schneider, J., & Kissler, J. (2017). Mapping the emotional face. How individual face parts contribute to successful emotion recognition. PLoS ONE. https://doi.org/10.1371/journal.pone.0177239.

Yeung, M. K., Han, Y. M. Y., Sze, S. L., & Chan, A. S. (2014). Altered right frontal cortical connectivity during facial emotion recognition in children with autism spectrum disorders. Research in Autism Spectrum Disorders, 8, 1567–1577. https://doi.org/10.1016/j.rasd.2014.08.013.

Acknowledgments

This work was supported by Grants of the Research Foundation Flanders (FWO; G0C7816N) and Excellence of Science (EOS) Grants (G0E8718N; HUMVISCAT). The authors would like to thank all the children and parents who contributed to this study.

Author information

Contributions

BR and BB conceived of the study. SVDD, MD, SV, BR and BB participated in its design. HT and JS contributed to participant recruitment. SVDD, SV and HT collected the data. SVDD, MD and SV statistically analyzed and interpreted the data. SVDD and BB wrote the manuscript. All authors discussed the results and commented on the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Ethical Approval

All procedures involving human participants were in accordance with the ethical standards of the authors’ institutional research committee and with the 1964 Declaration of Helsinki and its later amendments.

Informed Consent

Informed consent was obtained from all individual participants and their parents.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Electronic supplementary material

Below is the link to the electronic supplementary material.

Supplementary material 1 (MOV 37506 kb)

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Van der Donck, S., Dzhelyova, M., Vettori, S. et al. Fast Periodic Visual Stimulation EEG Reveals Reduced Neural Sensitivity to Fearful Faces in Children with Autism. J Autism Dev Disord 49, 4658–4673 (2019). https://doi.org/10.1007/s10803-019-04172-0

DOI: https://doi.org/10.1007/s10803-019-04172-0