Abstract

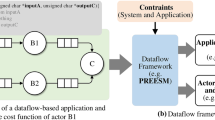

Dataflow programming consists in developing a program by describing its sequential stages and the interactions between them. The runtime systems supporting this kind of programming are responsible for exploiting the parallelism by concurrently executing the different stages as soon as their dependencies are met. In this paper we introduce a new parallel programming model and framework based on the dataflow paradigm. It presents a new combination of features that allows to easily map programs to shared or distributed memory, exploiting data locality and affinity to obtain the same performance than optimized coarse-grain MPI programs. These features include: It is a unique one-tier model that supports hybrid shared- and distributed-memory systems with the same abstractions; it can express activities arbitrarily linked, including non-nested cycles; it uses internally a distributed work-stealing mechanism to allow Multiple-Producer/Multiple-Consumer configurations; and it has a runtime mechanism for the reconfiguration of the dependences and communication channels which also allows the creation of task-to-task data affinities. We present an evaluation using examples of different classes of applications. Experimental results show that programs generated using this framework deliver good performance in hybrid distributed- and shared-memory environments, with a similar development effort as other dataflow programming models oriented to shared-memory.

Similar content being viewed by others

Notes

In the current implementation, MPI communications are always used to move tokens from queue to queue, even when the queue objects are mapped into the same MPI process. Although this simplifies the implementation and MPI communications are highly optimized in shared-memory, this decision clearly opens possibilities for further optimization.

References

Gropp, W., Lusk, E., Skjellum, A.: Using MPI : Portable Parallel Programming With the Message-passing Interface, 2nd edn. MIT Press, Cambridge (1999)

Chandra, R., Dagum, L., Kohr, D., Maydan, D., McDonald, J., Menon, R.: Parallel Programming in OpenMP, 1st edn. Morgan Kaufmann, Burlington (2001)

Aldinucci, M., Danelutto, M., Kilpatrick, P., Torquati, M.: FastFlow: high-level and efficient streaming on multi-core. In: Pllana, S., Xhafa, F. (eds.) Programming Multi-core and Many-core Computing Systems Parallel and Distributed Computing, 1st edn. Wiley, Hoboken (2017)

Budimlić, Z., Burke, M., Cavé, V., Knobe, K., Lowney, G., Newton, R., Palsberg, J., Peixotto, D., Sarkar, V., Schlimbach, F., Tasirlar, S.: Concurrent collections. Sci. Programm. 18(3–4), 203–217 (2010). https://doi.org/10.1155/2010/521797

Pop, A., Cohen, A.: A openstream: expressiveness and data-flow compilation of OpenMP streaming programs. ACM Trans. Archit. Code Optim. 9(4), 53:1–53:25 (2013)

Grelck, C., Scholz, S.-B., Shafarenko, A.: A gentle introduction to S-Net: typed stream processing and declarative coordination of asynchronous components. Parallel Process. Lett. 18(2), 221–237 (2008)

Fresno, J., Gonzalez-Escribano, A., Llanos, D.R.: Runtime support for dynamic skeletons implementation. In: Proceedings of the 19th International Conference on Parallel and Distributed Processing Techniques and Applications (PDPTA), Las Vegas, NV, pp. 320–326. (2013)

Jensen, K., Kristensen, L.M., Wells, L.: Coloured Petri nets and CPN tools for modelling and validation of concurrent systems. Int. J. Softw. Tools Technol. Transf. 9(3–4), 213–254 (2007)

Fresno, J., Gonzalez-Escribano, A., Llanos, D.R.: Additional material for the paper: Dataflow programming model for hybrid distributed and shared memory systems, Tech. Rep. IT-DI-2015-0003, Department of Computer Science, University of Valladolid, Spain (2015). URL http://www.infor.uva.es/jfresno/reports/IT-DI-2015-0003.pdf

Holmes, D.: Ideas for persistant point to point communication. Tech. rep., MPI Forum Meetings (2014). URL http://meetings.mpi-forum.org/2014-11-scbof-p2p.pdf

Dinan, J., Balaji, P., Goodell, D., Miller, D., Snir, M., Thakur, R.: Enabling MPI interoperability through flexible communication endpoints. In: 20th European MPI Users’s Group Meeting, EuroMPI ’13, Madrid, Spain, pp. 13–18. (2013). https://doi.org/10.1145/2488551.2488553

Szalkowski, A., Ledergerber, C., Krähenbühl, P., Dessimoz, C.: SWPS3–fast multi-threaded vectorized Smith-Waterman for IBM Cell/B.E. and x86/SSE2. BMC Res. Notes 1(107)

Clote, P.: Biologically significant sequence alignments using Boltzmann probabilities. Tech. rep. (2003). http://bioinformatics.bc.edu/ clote/pub/boltzmannParis03.pdf

Aldinucci, M., Campa, S., Danelutto, M.: Targeting distributed systems in FastFlow. In: Proceedings of the Euro-Par 2012 Parallel Processing Workshops, Vol. 7640 of Lecture Notes in Computer Science. Springer, Berlin, pp. 47–56. (2013)

Fresno, J., Gonzalez-Escribano, A., Llanos, D.R.: One tier dataflow programming model for hybrid distributed- and shared-memory systems. In: Proceedings of International Workshop on High-Level Programming for Heterogeneous and Hierarchical Parallel Systems (HLPGPU), Prague, Czech Republic (2016)

Aldinucci, M., Meneghin, M., Torquati, M.: Efficient smith-waterman on multi-core with FastFlow. In: Danelutto, M., Bourgeois, J., Gross, T. (eds.), Proceedings of the 18th Euromicro Conference on Parallel, Distributed and Network-based Processing (PDP). IEEE Computer Society, Pisa, Italy, pp. 195–199 (2010)

UniProt Knowledgebase (UniProtKB), www.uniprot.org/

McCabe, T.J.: A complexity measure. IEEE Trans. Softw. Eng. 2(4), 308–320 (1976). https://doi.org/10.1109/TSE.1976.233837

Halstead, M.H.: Elements of Software Science (Operating and Programming Systems Series). Elsevier Science Inc., New York (1977)

Fresno, J., Gonzalez-Escribano, A., Llanos, D.R.: Extending a hierarchical tiling arrays library to support sparse data partitioning. J. Supercomput. 64(1), 59–68 (2013)

Gonzalez-Escribano, A., Torres, Y., Fresno, J., Llanos, D.R.: An extensible system for multilevel automatic data partition and mapping. IEEE Trans. Parallel Distrib. Syst. 25(5), 1145–1154 (2014)

Moreton-Fernandez, A., Gonzalez-Escribano, A., Llanos, D.: Exploiting distributed and shared memory hierarchies with hitmap. In: International Conference on High Performance Computing Simulation (HPCS), pp. 278–286 (2014). https://doi.org/10.1109/HPCSim.2014.6903696

OpenMP Architecture Review Board, OpenMP application program interface version 4.5 (November 2015). http://www.openmp.org/mp-documents/openmp-4.5.pdf

Barcelona Supercomputing Center, OmpSs specification 4.5 (December 2015). http://pm.bsc.es/ompss-docs/specs

Augonnet, C., Thibault, S., Namyst, R., Wacrenier, P.-A.: Starpu: A unified platform for task scheduling on heterogeneous multicore architectures. Concurr. Comput. Pract. Exper. 23(2), 187–198 (2011). https://doi.org/10.1002/cpe.1631

Johan Enmyren, C.W.K.: Skepu: a multi-backend skeleton programming library for multi-gpu systems. In: Proceedings of the Fourth International Workshop on High-Level Parallel Programming and Applications (HLPP 2010), ACM, pp. 5–14 (2010)

Philipp Ciechanowicz, H.K.: Enhancing muesli’s data parallel skeletons for multi-core computer architectures. In: Proceedings of 12th IEEE International Conference on High Performance Computing and Communications (HPCC 2010) (2010)

Acknowledgements

This research has been partially supported by MICINN (Spain) and ERDF program of the European Union: HomProg-HetSys Project (TIN2014-58876-P), PCAS Project (TIN2017-88614-R), CAPAP-H6 (TIN2016-81840-REDT), and COST Program Action IC1305: Network for Sustainable Ultrascale Computing (NESUS). By Junta de Castilla y León, Project PROPHET (VA082P17). And by the computing facilities of Extremadura Research Centre for Advanced Technologies (CETA- CIEMAT), funded by the European Regional Development Fund (ERDF). CETA-CIEMAT belongs to CIEMAT and the Government of Spain.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Fresno, J., Barba, D., Gonzalez-Escribano, A. et al. HitFlow: A Dataflow Programming Model for Hybrid Distributed- and Shared-Memory Systems. Int J Parallel Prog 47, 3–23 (2019). https://doi.org/10.1007/s10766-018-0561-2

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10766-018-0561-2