Abstract

Air pollution and sea pollution both affect human health and all the natural environments on Earth. These complex interactions within the biosphere are becoming better known and understood. Major progress has been made in recent years in understanding their societal and environmental impacts, thanks to remote sensors aboard satellites. This paper reviews the state of the art on air pollution and focuses on specific aspects of marine pollution, all of which benefit from improved knowledge of the small-scale eddy field in the oceans. Examples of recent findings are presented, based on the global observing system (both remote and in situ), which uses standardized protocols to monitor emerging environmental threats at the global scale.


1 Introduction

According to the World Health Organization (WHO), air pollution currently kills more than 4 million people per year (more than cholesterol), and projections indicate that 6.5 million people will be affected in 2050. Pollution levels are very unevenly distributed across the globe (Asia being the most affected), and each individual is affected differently by pollution episodes. On average, an adult inhales about 10 L of air every minute, and up to 100 L during sports activities. Children, seniors, and people with cardiovascular or respiratory problems are the most affected. Air pollution is estimated to contribute to 7.6% of all deaths, mainly through cardiovascular and respiratory diseases (World Health Organization 2017). It is ranked as the fifth highest mortality risk factor, associated with 147 million years of healthy life lost (Health Effect Institute 2019). In Europe alone, pollution accounts for 800,000 additional deaths per year (Fig. 1).

Figure from Lelieveld et al. (2015)

Mortality related to outdoor pollution (in 2010). The color scale represents the number of deaths over areas of 100 × 100 km, as an annual average, for fine particulate matter (PM2.5) and ozone. White areas indicate concentrations below the threshold for health impacts.

Covering more than 70% of our planet, the oceans contain the Earth’s most valuable natural resources, but they are also the end point for much of the pollution generated by human societies on land. Ocean pollution takes many forms, ranging from carbon emissions to choking marine debris, leaking oil, noise, and the introduction of harmful or toxic substances and wastes into the natural environment. Most of the garbage that enters the ocean each year is plastic. Because plastic is not biodegradable, it persists for long periods of time, making plastic pollution one of the most ubiquitous and pressing contemporary threats to coastal and marine environments worldwide. Given its potential impact on human health through the food chain, the WHO called in early 2019 for a further assessment of microplastics in the environment as a whole. Despite the growing body of evidence that microplastic pollution is accumulating in the oceans, its actual dispersion and pathways remain uncertain, and its long-term life cycle is unknown.

This paper is organized as follows. Section 2 focuses on air pollution, starting with a few highlights on the main pollutants and their evolution over time. A general description of how pollutants are monitored from space is then provided, illustrated by four case studies involving different pollutants. Section 3 is dedicated to sea pollution, starting with an introduction followed by a description of three major issues: marine accidents and safety, oil spill pollution, and plastics as marine debris.

2 Air Pollution

2.1 Main Atmospheric Pollutants and Trends

A pollutant is a chemical compound that affects health, not to be confused with a greenhouse gas, which affects the climate. Pollutants can be emitted directly from the surface (primary pollutants) and/or formed through chemical reactions in the atmosphere (secondary pollutants). Their effects on humans, animals, plants, microorganisms and the environment vary with the chemical composition, number, size, and shape of the gases and particles involved. The health impacts of each pollutant are difficult to estimate, and documented and quantified correlations between particulate matter levels and health have been established only relatively recently, although it has long been known that life expectancy is lower in polluted countries (Brunekreef 1997).

Air quality standards generally set limits for the following air pollutants: sulfur dioxide (SO2), nitrogen dioxide (NO2), carbon monoxide (CO), ozone (O3), ammonia (NH3) and particulate matter with diameters less than 10 and 2.5 micrometers (PM10 and PM2.5, respectively). The sources of these pollutants are varied and mainly linked to human activities: transport, heating, industrial activity, agriculture, etc.

- Sulfur dioxide (SO2): primarily produced by the burning of fossil fuels in power plants, industry, automobiles, planes and ships. When combined with water in the atmosphere, it forms sulfuric acid, the main component of acid rain. Exposure to SO2 affects the respiratory system and irritates the eyes.

- Nitrogen dioxide (NO2): an odorous gas mainly emitted by industrial and traffic sources. It is a precursor of both particulate matter and ozone. It affects health through respiratory diseases and is linked to premature mortality.

- Carbon monoxide (CO): a colorless, odorless and toxic gas that causes death at high concentrations. In the atmosphere, CO is produced by incomplete combustion of carbon-containing fuels, such as gasoline, natural gas, oil, coal, and wood.

- Ozone (O3): a secondary pollutant produced when CO, methane, or other volatile organic compounds (VOCs) are oxidized in the presence of nitrogen oxides (NOx) and sunlight. It is a major component of photochemical smog and causes breathing problems, asthma, reduced lung function and respiratory diseases.

- Ammonia (NH3): a reactive pollutant whose emissions are at least four times higher than in the pre-industrial era, leading to environmental damage such as acidification and eutrophication. It is mainly emitted by the agricultural sector, including nitrogen fertilizers and livestock manure, and is also a precursor of particulate matter.

- Particulate matter (PM): can be composed of many constituents, such as sulfates, nitrates, ammonium, black carbon, and mineral dust. PM is emitted by traffic (both diesel and petrol), by the energy production industry, and by other industrial activities (building, mining, cement manufacturing). Particle sizes vary, from diameters of less than 10 microns (PM10) down to 2.5 microns (PM2.5) and less. PM poses major health risks because the particles can penetrate the lungs and enter the bloodstream.

Pollutant emissions vary considerably from country to country (Elguindi et al. 2020), as does air quality regulation: air quality is currently regulated in about fifty countries, with varying population alert thresholds. Emission trends depend strongly on the mitigation strategies implemented nationally (Fig. 2). In most industrialized countries (where coal is no longer widely used), the pollutants that most often exceed harmful thresholds are ozone, ammonia and particles (PM10 and PM2.5). Ammonia (related to fertilizers and livestock) also remains difficult to control.

China and India are the two most polluted countries. India is currently implementing strategies for effective air pollution control (Apte and Pant 2019) especially by mitigating emissions from household sources (Chowdhury et al. 2019). While China’s ambient air is still very polluted, the remarkable improvements seen in recent years (Fig. 3) highlight the potential for air quality management efforts to rapidly and substantially improve air quality both in China and around the world.

Figure adapted from Zheng et al. (2018)

Trends of emissions of SO2 (black), NOx (in Gg/yr of NO2, blue), PM10 (red), and NH3 (green) over China resulting from aggressive pollution control.

2.2 How to Monitor Pollution from Space?

Remote sensing techniques dedicated to monitoring atmospheric gas concentrations have greatly improved over the past few decades. They now allow probing of the lowest layers of the troposphere and contribute to air quality assessment. For atmospheric composition, satellite observing tools are mainly high-spectral-resolution spectrometers operating from the thermal infrared (IR) to the ultraviolet (UV). Combined with ground-based measurements and atmospheric models, space observations are now essential: they are used in most scientific studies and in many atmospheric applications to improve our knowledge of physical and chemical processes from the global to the local scale.

Back in the 1980s, global space-borne observations of trace gases were dedicated to water vapor and ozone. For instance, the Total Ozone Mapping Spectrometer (TOMS) instruments characterized the formation of the ozone hole over Antarctica and monitored its evolution, in particular in response to the Montreal Protocol of 1987, which phased out ozone-depleting substances. With improved measurement techniques and retrieval algorithms, it is now possible to provide quantitative information on the concentrations of many trace gases and pollutants at regional or even local scales, with increasingly fine vertical resolution. The latest achievements even allow sounding down to the Earth’s surface for some reactive species.

To observe atmospheric constituents, passive remote sensing instruments aboard satellites measure atmospheric spectra resulting from the interaction between radiation (solar, or emitted by the Earth or the atmosphere) and molecules. Exploiting the specific spectral features, or signatures, of the different molecules in these signals allows their concentrations to be retrieved at different altitudes (for strong absorbers) or as total columns (integrated along the vertical, for weak absorbers). Each molecular absorption/emission line (in the IR) or cross section (in the UV) in a spectrum has a characteristic signature: its position identifies the molecule, and its intensity is related to the molecule’s atmospheric concentration. To retrieve atmospheric concentrations properly from a raw satellite spectrum, other inputs are required, such as instrument characteristics (detector, optics, etc.), spectroscopic data, auxiliary data such as temperature or air mass factors, and an atmospheric radiative transfer algorithm.
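To make the retrieval chain above more concrete, the sketch below illustrates one common UV-visible approach, a simplified differential optical absorption spectroscopy (DOAS) fit; the text does not say which retrieval each instrument uses, so treat this as an illustration only. Assuming Beer-Lambert absorption by a single weak absorber, the optical depth ln(I0/I) is proportional to the absorption cross section times the slant column, so a linear least-squares fit recovers the slant column, which is then divided by an air mass factor (AMF) to give the vertical column. The cross-section values, the constant broadband offset and the purely geometric AMF formula are all assumptions, not instrument data.

```python
import math

def fit_slant_column(cross_sections, optical_depths):
    """Ordinary least-squares fit of tau = scd * sigma + offset; returns (scd, offset)."""
    n = len(cross_sections)
    mean_s = sum(cross_sections) / n
    mean_t = sum(optical_depths) / n
    cov = sum((s - mean_s) * (t - mean_t)
              for s, t in zip(cross_sections, optical_depths))
    var = sum((s - mean_s) ** 2 for s in cross_sections)
    scd = cov / var
    return scd, mean_t - scd * mean_s

def geometric_amf(sza_deg, vza_deg):
    """Geometric air mass factor 1/cos(SZA) + 1/cos(VZA); ignores scattering (assumption)."""
    return 1.0 / math.cos(math.radians(sza_deg)) + 1.0 / math.cos(math.radians(vza_deg))

# Hypothetical absorption cross sections (cm^2/molecule) at five wavelengths.
sigma = [1.0e-19, 3.0e-19, 2.0e-19, 5.0e-19, 4.0e-19]
true_scd = 2.0e16   # molecules/cm^2, chosen for the demo
broadband = 0.01    # constant offset standing in for smooth scattering terms

# Synthetic spectra following Beer-Lambert: I = I0 * exp(-(sigma * SCD + broadband)).
i0 = [1.0] * len(sigma)
i_meas = [a * math.exp(-(s * true_scd + broadband)) for a, s in zip(i0, sigma)]
tau = [math.log(a / b) for a, b in zip(i0, i_meas)]   # optical depth ln(I0/I)

scd, offset = fit_slant_column(sigma, tau)
amf = geometric_amf(sza_deg=30.0, vza_deg=0.0)
vcd = scd / amf   # vertical column = slant column / air mass factor
print(f"SCD = {scd:.3e}, AMF = {amf:.3f}, VCD = {vcd:.3e} molecules/cm^2")
```

Real retrievals replace the constant offset with a low-order polynomial, fit several absorbers at once, and compute the AMF with a radiative transfer model that accounts for scattering, clouds and surface reflectance.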

Many satellite instruments can currently measure atmospheric pollution. The state-of-the-art instruments using UV–visible and thermal infrared radiation are TROPOMI (TROPOspheric Monitoring Instrument, Veefkind et al., 2012) and IASI (Infrared Atmospheric Sounding Interferometer, Clerbaux et al., 2009), respectively. These missions have demonstrated their ability to accurately monitor the concentrations and variability of key gases involved in atmospheric pollution. TROPOMI is a multispectral imaging spectrometer with ultraviolet and visible (UV–Vis, 270–500 nm), near-infrared (675–775 nm) and shortwave infrared (2305–2385 nm) bands (Fig. 4). It was launched in October 2017 by the European Space Agency (ESA) on board the Sentinel-5 Precursor satellite to monitor ground-level pollutants such as nitrogen dioxide, ozone, formaldehyde (HCHO), and sulfur dioxide with unprecedented accuracy and spatial resolution (3.5 × 7 km2) (see https://sentinels.copernicus.eu/web/sentinel/missions/sentinel-5p).

Figure from Veefkind et al. (2012)

Spectral ranges for TROPOMI and the heritage instruments: OMI (Ozone Monitoring Instrument), SCIAMACHY (SCanning Imaging Absorption SpectroMeter for Atmospheric CHartographY) and GOME (Global Ozone Monitoring Experiment).

In the infrared part of the spectrum, the IASI instrument provides complementary measurements of pollutants from 8461 channels in the thermal infrared, with more than 31 molecules detected in its spectra (Fig. 5). Three identical IASI instruments were built by the Centre National d’Etudes Spatiales (CNES) and the European Organization for the Exploitation of Meteorological Satellites (EUMETSAT) for three successive launches: Metop-A on October 19, 2006, Metop-B on September 17, 2012, and Metop-C on November 7, 2018. All these measurements are processed and distributed in near real time, delivering gas concentration maps around the world less than 3 h after the measurement is taken.
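The IASI channel count follows directly from the instrument’s spectral coverage and sampling interval. As a quick arithmetic check, the snippet below assumes the commonly quoted IASI coverage of 645–2760 cm−1 sampled every 0.25 cm−1 (these values are not given in the text above) and also converts the band edges from wavenumber to wavelength.

```python
# Assumed IASI spectral coverage and sampling (not stated in the text above).
WAVENUMBER_MIN = 645.0    # cm^-1
WAVENUMBER_MAX = 2760.0   # cm^-1
SAMPLING = 0.25           # cm^-1

def channel_count(nu_min, nu_max, step):
    """Number of evenly spaced spectral samples, both endpoints included."""
    return round((nu_max - nu_min) / step) + 1

def wavenumber_to_micrometers(nu_cm):
    """Convert a wavenumber in cm^-1 to a wavelength in micrometers."""
    return 1.0e4 / nu_cm

n_channels = channel_count(WAVENUMBER_MIN, WAVENUMBER_MAX, SAMPLING)
print(n_channels)   # 8461
print(f"{wavenumber_to_micrometers(WAVENUMBER_MAX):.2f}-"
      f"{wavenumber_to_micrometers(WAVENUMBER_MIN):.2f} micrometers")
```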

The complementarity of multi-platform measurements, on board satellites or in situ from the ground, allows a better observation of the chemical composition of the atmosphere. In situ measurements are more accurate at local scale, while satellite measurements provide better spatial coverage, with information on the transport of pollution plumes. In addition, satellite instruments can monitor in remote areas with very limited coverage by ground-based measurement networks. These satellite observations are therefore important and are used to constrain emission inventories, validate our knowledge about physical and chemical processes of the atmosphere, and improve the prediction of pollution peaks when assimilated into dedicated atmospheric models.

Both infrared and ultraviolet observations provide the user community with valuable information for research on pollution processes across the globe. On the operational side, routine pollution forecasts are provided by the Copernicus Atmosphere Monitoring Service (CAMS; https://atmosphere.copernicus.eu/): data from a dozen satellite instruments are assimilated into regional and global models to deliver air quality information for the main pollutants.

2.3 A Few Illustrations of Atmospheric Pollution

2.3.1 Case Study 1: Mapping Nitrogen Dioxide (NO2) from Space at the City Scale

Nitrogen dioxide and nitric oxide (NO) are together referred to as nitrogen oxides (NOx = NO + NO2). They are important pollutants emitted by combustion processes in the troposphere. In the presence of sunlight, a photochemical cycle involving ozone rapidly interconverts NO and NO2, which makes NO2 a robust measure of nitrogen oxide concentrations. Because NO2 has a relatively short lifetime in the troposphere (a few hours), it remains relatively close to its sources, which makes NOx sources readily detectable from space, whereas NO disappears too rapidly to be measured from space.
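A back-of-the-envelope calculation shows why a lifetime of a few hours keeps NO2 close to its sources. Assuming first-order loss with lifetime τ and advection by a steady wind of speed u, the fraction of emitted NO2 remaining after travelling a distance x is exp(−x/(uτ)), so the e-folding distance is simply uτ. The 4 h lifetime and 5 m/s wind below are illustrative assumptions, not values from the text.

```python
import math

def efolding_distance_km(lifetime_hours, wind_speed_ms):
    """Distance over which a tracer with first-order loss drops to 1/e of its initial amount."""
    return wind_speed_ms * lifetime_hours * 3600.0 / 1000.0

def fraction_remaining(distance_km, lifetime_hours, wind_speed_ms):
    """Fraction of emitted NO2 left after being advected over distance_km."""
    return math.exp(-distance_km / efolding_distance_km(lifetime_hours, wind_speed_ms))

# Illustrative assumptions: 4 h lifetime, steady 5 m/s wind.
efold_km = efolding_distance_km(lifetime_hours=4.0, wind_speed_ms=5.0)
print(f"e-folding distance: {efold_km:.0f} km")   # 72 km
for d in (20.0, 50.0, 100.0, 200.0):
    print(f"{d:>5.0f} km downwind: {fraction_remaining(d, 4.0, 5.0):.0%} left")
```

Under these assumptions only a small fraction of the NO2 survives a couple of hundred kilometres of transport, so the column enhancements seen from space stay concentrated around cities, power plants and highways.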

With TROPOMI’s high spatial resolution, pollution from individual power plants, other industrial complexes, and major highways can be observed. Figure 6 shows the monthly average tropospheric NO2 over Europe for April 2018 derived from TROPOMI data (van Geffen et al. 2019), with NO2 hot spots over the urban regions of the UK and the Netherlands, the Po Valley and the Ruhr area. Such maps are very valuable for improving our knowledge of how different sectors contribute to the overall emissions of nitrogen oxides worldwide.

Figure from van Geffen et al. (2019)

Monthly average distribution of tropospheric NO2 columns for April 2018 over Europe based on TROPOMI data, derived with processor version 1.2.0.
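Monthly maps such as Fig. 6 are built by averaging many individual satellite pixels onto a regular grid. The sketch below shows the simplest version of that step: each pixel centre is assigned to a latitude-longitude cell, low-quality pixels are discarded, and the remaining values in each cell are averaged. The 0.1° cell size, the 0.75 quality threshold and the sample pixels are hypothetical; more elaborate schemes, for example weighting each pixel by its footprint overlap with the cell, are often used in practice.

```python
import math
from collections import defaultdict

def grid_average(pixels, cell_deg=0.1, min_qa=0.75):
    """Average pixel values per lat/lon cell.

    pixels: iterable of (lat, lon, value, qa) tuples. Pixels with qa < min_qa
    (an assumed threshold) are skipped. Returns {(row, col): mean_value}, where
    row/col index the cell's south-west corner in units of cell_deg.
    """
    sums = defaultdict(float)
    counts = defaultdict(int)
    for lat, lon, value, qa in pixels:
        if qa < min_qa:
            continue  # drop low-quality (e.g. cloudy) retrievals
        key = (math.floor(lat / cell_deg), math.floor(lon / cell_deg))
        sums[key] += value
        counts[key] += 1
    return {key: sums[key] / counts[key] for key in sums}

# Hypothetical tropospheric NO2 pixels: (lat, lon, column in 1e15 molec/cm2, qa)
pixels = [
    (51.51, 7.45, 12.0, 1.0),
    (51.53, 7.48, 14.0, 0.9),
    (51.55, 7.41, 30.0, 0.5),   # rejected by the quality filter
    (45.46, 9.19, 9.0, 1.0),
]
monthly = grid_average(pixels)
for (row, col), mean_value in sorted(monthly.items()):
    print(f"cell SW corner ({row * 0.1:.1f}, {col * 0.1:.1f}): {mean_value:.1f}")
```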

2.3.2 Case Study 2: Regional Atmospheric Ammonia Variability over Europe and Link with Local PM Formation over the Paris Urban Area

Unlike other primary pollutants such as sulfur dioxide (SO2) or nitrogen oxides (NOx), NH3 is not targeted by mitigation policies in most countries, despite its importance in the formation of secondary aerosols, visibility degradation, acidification and eutrophication. Moreover, our knowledge of (1) the spatiotemporal distribution of NH3 sources at the regional scale and (2) the role of NH3 in PM2.5 formation is very limited (mainly because very few systematic measurements exist), and emission inventories are uncertain.

Recently, IASI satellite observations were used to map, for the first time, the distribution of NH3 concentrations at kilometer-scale resolution (Van Damme et al. 2018). More than 400 NH3 point and extended sources were identified worldwide, and the study showed that emissions from known sources are largely underestimated in emission inventories.

The Paris megacity (one of the most populated cities in the European Union, with 10.5 million inhabitants) experiences frequent particulate matter pollution episodes in spring (March–April). At this time of year, a major part of the particles consists of ammonium sulfate and ammonium nitrate, formed from ammonia released during fertilizer spreading; this ammonia is transported from the surrounding areas to Paris, where it affects local air quality. Knowledge of the emission sources around Paris, both in terms of magnitude and seasonality, is still limited.

Using 10 years (2008–2017) of IASI space-borne NH3 observations, Viatte et al. (2020) derived regional patterns of NH3 variability (seasonal and interannual). Three main regions of high NH3, occurring between March and August, were identified (Fig. 7): (A) the French Champagne-Ardennes region, the leading French region for mineral fertilization, linked to the sugar industry (Ramanantenasoa et al. 2018); (B) the northern part of the domain, known for its animal farming (Scarlat et al. 2018); and (C) the Pays de la Loire region, another livestock farming area (Robinson et al. 2014). Air masses originating from NH3-rich areas, mainly over Belgium and the Netherlands, were found to increase the NH3 total columns measured by IASI over the Paris urban area. The same study also suggests that meteorological parameters are the limiting factors: low temperatures, a shallow boundary layer, almost no precipitation and northeasterly winds all favor PM2.5 formation in the presence of atmospheric NH3 in the Paris urban region.

Figure from Viatte et al. (2020)

Seasonal variability of NH3 derived from 10 years of IASI measurements. The blue cross represents Paris.
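Seasonal cycles like those in Fig. 7 are typically obtained by averaging a multi-year record by calendar month, and interannual variability is what remains once that cycle is removed. The minimal sketch below shows both steps; the input values are made up for illustration and are not IASI data, and real analyses would first grid and quality-filter the observations.

```python
from collections import defaultdict
from statistics import mean

def monthly_climatology(records):
    """Mean value per calendar month from (year, month, value) records."""
    by_month = defaultdict(list)
    for _year, month, value in records:
        by_month[month].append(value)
    return {month: mean(values) for month, values in sorted(by_month.items())}

def anomalies(records, climatology):
    """Departure of each record from the climatological mean of its month."""
    return [(year, month, value - climatology[month]) for year, month, value in records]

# Hypothetical monthly NH3 total columns (1e16 molec/cm2) for two years
records = [
    (2008, 3, 2.0), (2008, 4, 2.6), (2008, 10, 0.8),
    (2009, 3, 2.4), (2009, 4, 3.0), (2009, 10, 0.6),
]
clim = monthly_climatology(records)
print(clim)
for year, month, anomaly in anomalies(records, clim):
    print(year, month, round(anomaly, 2))
```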

2.3.3 Case Study 3: Summertime Tropospheric Ozone over the Mediterranean Region

Tropospheric ozone is a greenhouse gas, an air pollutant, and a primary source of the hydroxyl radical (OH), the most important oxidant in the atmosphere (Chameides and Walker 1973). Previous observations and studies have shown that tropospheric O3 over the Mediterranean increases significantly during summertime, especially in the eastern part of the basin (Kouvarakis et al. 2000; Im et al. 2011; Richards et al. 2013; Safieddine et al. 2014, among others).

Ozone is a secondary pollutant: it is not directly emitted in the troposphere but is formed when its precursors (i.e., specific chemical compounds such as carbon monoxide, methane and nitrogen oxides) react with other chemical compounds in the presence of solar radiation. In summer, frequent clear skies and strong solar radiation, combined with the availability of precursors, enhance photochemical O3 formation. Locally, the eastern part of the basin is surrounded by megacities such as Cairo, Istanbul, and Athens, which are large sources of anthropogenic emissions. The geographical location of the basin also makes it a receptor for anthropogenic pollution from Europe, both in the boundary layer and in the mid-troposphere. Moreover, numerous studies have shown that the eastern part of the basin is associated with strong summer anticyclonic activity, leading to downward transport of upper-tropospheric ozone into the lower troposphere (Zanis et al. 2014; Kalabokas et al. 2015). Summertime stratospheric intrusions are also common above this region, influencing both the upper and mid-troposphere (Safieddine et al. 2014; Akritidis et al. 2016). Figure 8 shows the tropospheric (0–8 km) ozone column above Europe and the Mediterranean basin: the eastern side of the basin exhibits the highest ozone concentrations seen by IASI.

Figure from Safieddine et al. (2014)

Tropospheric 0–8 km O3 column during summer 2013. High values are recorded over the Mediterranean, especially in the eastern part of the basin.
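A tropospheric partial column such as the 0–8 km product in Fig. 8 is the vertical integral of the ozone number density over that altitude range. The sketch below integrates a profile with the trapezoidal rule and converts the result to Dobson units (1 DU = 2.687 × 10^16 molecules cm−2). The profile is hypothetical, and actual satellite partial columns also have to account for the instrument’s limited vertical sensitivity, which is ignored here.

```python
DU = 2.687e16  # molecules per cm^2 in one Dobson unit

def partial_column_du(altitudes_km, densities_cm3, z_top_km):
    """Trapezoidal integral of number density from the lowest level up to z_top_km, in DU.

    Assumes altitudes are sorted in ascending order and that z_top_km is one of the levels.
    """
    total = 0.0
    for i in range(len(altitudes_km) - 1):
        z0, z1 = altitudes_km[i], altitudes_km[i + 1]
        if z1 > z_top_km:
            break
        dz_cm = (z1 - z0) * 1.0e5  # km -> cm
        total += 0.5 * (densities_cm3[i] + densities_cm3[i + 1]) * dz_cm
    return total / DU

# Hypothetical ozone profile: altitude (km) and number density (molecules/cm^3)
z = [0, 2, 4, 6, 8, 10]
n = [1.0e12, 9.0e11, 8.0e11, 8.0e11, 9.0e11, 1.2e12]
print(f"0-8 km column: {partial_column_du(z, n, 8.0):.1f} DU")
```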

2.3.4 Case Study 4: CO Pollution Across Europe Linked with Hurricane Ophelia

With the remnants of hurricane Ophelia reaching the British Isles in October 2017, a rare pollution event crossed Europe, with transport of dust from the Sahara and of thick smoke from disastrous wildfires in northern Portugal/Spain. On October 15–16, both dust and smoke were rapidly advected by Ophelia-energized strong southerly winds into the Bay of Biscay, northwestern France (Brittany) and across the UK. On October 17, residents of Belgium and the Netherlands woke up under a surprising orange-red Sun with low visibility enduring for most of the day (Osborne et al. 2019).

Two unusual events intersected: a tropical storm and high-intensity late-season fires. A range of observations above Europe now allows us to track the transport of such a pollution plume. Key observations of the spread of this event were provided by IASI. From its radiance data, near-real-time global maps of dust, carbon monoxide (Fig. 9) and ammonia (the latter two being proxies for fire plumes) were derived. The highly polluted plumes could be followed for a full week using IASI CO data as they spread over large parts of northern and eastern Europe and into Asia (Clerbaux 2018).

Figure from Clerbaux (2018)

CO plume associated with fires, transported from Spain/Portugal in October 2017. Each panel shows the CO total column measured during an IASI overpass, in the morning or in the afternoon.

3 Sea Pollution

3.1 Marine Accident and Safety

Alongside air pollution, marine ecosystem degradation and sea pollution are of increasing concern. More than 3 billion people depend crucially on the oceans for their livelihoods. Litter associated with human presence has been reported to contaminate marine habitats from the poles to the equator and from the coasts to the deep sea. Sea pollution is far from uniform, and understanding its distribution depends on our knowledge of the oceans themselves. Monitoring and forecasting accidental marine pollution, of which oil spills are an important part, depend on access to high-quality information on ocean circulation; this is one of the most important applications of operational oceanography.

Over the past decades, the accuracy of weather and oceanographic forecasts has improved, but millions of dollars of goods and thousands of lives are still lost at sea each year because of extreme weather and oceanographic conditions. In the marine environment, vessels of all sizes are exposed and vulnerable to the elements: high winds, high waves, fog, thunderstorms, sea ice and freezing spray make shipping a high-risk business. Nevertheless, the oceans and seas are a sustainable transport route for the global economy: maritime transport, part of the “blue economy”, is estimated to carry 84% of world trade (The International Union of Marine Insurance Stats report 2018: https://iumi.com). The value of the global ocean economy is estimated at 3–6 trillion USD per year (United Nations Conference on Trade and Development, 2019: https://unctad.org). In addition, ferries carry more than a quarter of the world’s population each year (InterFerry, 2019, see https://interferry.com/ferry-industry-facts/). Maritime incidents endanger lives and property on board and can also cause environmental disasters.

The International Convention for the Safety of Life at Sea (SOLAS), created in response to the Titanic disaster of 1912 and maintained by the International Maritime Organization (IMO), sets out various standards for the safety, security and operation of ships. The IMO and the World Meteorological Organization (WMO), both specialized agencies of the United Nations, ensure that consistent weather-related marine safety information is available globally to ships at sea. The WMO provides a coordination structure with protocols under which coastal countries provide standardized weather information, forecasts and warnings for the safety of people and goods at sea. This includes the requirement to issue explicit warnings for tropical cyclones and for gale- and storm-force winds. Other services include warnings of severe conditions such as abnormal or devastating waves, reduced visibility due to fog, and ice accumulation. Services are prepared and disseminated by National Meteorological Services according to areas of responsibility called Meteorological Areas (METAREAs), as shown in Fig. 10. METAREAs are closely aligned with navigation zones, which are used to provide navigational information and warnings to ships at sea. Weather-related maritime safety information is transmitted to ships at sea by countries through the WMO Maritime Broadcast System, which supports the IMO Global Maritime Distress and Safety System (GMDSS).

These warning and forecasting services are widely used by mariners, safety and security organizations, and economic sectors, which rely on marine weather information to make informed decisions that improve the safety of people and goods, environmental safety and socio-economic benefits in marine and coastal environments. Numerical weather, wave and ocean prediction models provide marine forecasters with the guidance that underpins the forecasting process. Weather and ocean forecasting models are run two to four times a day, using the latest observations to produce forecasts. These models run on supercomputers that solve complex systems of differential equations approximating the physical processes of the atmosphere, the oceans and sea ice. Experienced marine forecasters evaluate all available observations and numerical forecasts to ensure that the atmosphere and the ocean are represented as accurately as possible in the initial state of the model, and to determine where the greatest model uncertainties lie on a given day. Forecasters then prepare and disseminate their marine weather forecasts, issue warnings of hazardous weather conditions as required, and send their forecasts for broadcast on the Internet, radio or satellite.

As a result of decades of coordination between universities, research centers, research infrastructures and private companies, operational oceanographic services such as the Copernicus Marine Environment Monitoring Service (CMEMS: http://marine.copernicus.eu) have been set up. The combination of advanced observations and forecasts offers new downstream service opportunities to meet the needs of densely populated coastal areas and to address climate change. Over the next decade, the challenges will be to maintain ocean observations, monitor small-scale variability, resolve sub-basin, seasonal and interannual variability in circulation, understand and correct model biases, and improve both the integration of data into models and the estimation of forecast uncertainty. A better understanding of this variability will enable the impacts of human activities and climate change on biodiversity and ecosystems to be assessed and mitigated, supporting environmental assessments and decisions. Other challenges include extending value-added scientific products to socially relevant downstream services and engaging with communities to develop initiatives that will contribute, inter alia, to the United Nations Decade of Ocean Science for Sustainable Development, thereby helping to bridge the gap between science and policy.

Forecasting services can play an important role both in incident decision-making and in the design of response services. Monitoring, forecasting and, to some extent, detection of marine pollution depend mainly on reliable and timely access to environmental data, observations and prediction products. These products provide an overview of the current and future state of meteorological and oceanographic conditions. They can also be used to drive pollutant fate prediction models, either directly or by providing boundary conditions for high-resolution nested models. Access to large geophysical datasets that are interoperable with regional and sub-regional observing and modeling systems, using standard service formats and specifications such as those provided by CMEMS, is an undeniable achievement.

Oil spill prediction is generally performed with a numerical model that predicts the advection and weathering of oil in the sea (examples are discussed in the next section). Although the treatment of these processes can vary considerably from one model to another, all models depend critically on geophysical forcing to determine the fate of the spill, particularly its movement, which is driven by currents and winds. Depending on its settings, a given oil spill model may use forcing fields such as wind, ocean currents, wave energy, Stokes drift, air temperature, water temperature and salinity, and turbulent kinetic energy. For predicting the movement of oil slicks on the high seas, ocean circulation data offer the greatest potential for improvement, mainly because ocean forecasts are less mature than weather and wave forecasts. Operational ocean forecasting systems promise improved forecast accuracy through the assimilation of available ocean observations. Their geographical scope extends from basin to global scale, enabling global oil spill modeling capabilities. Note that oil spill modeling systems can use ocean forecast data in two ways: as direct forcing for the oil drift model, and as boundary conditions for higher-resolution local ocean models, which in turn provide forcing data to the oil drift model.
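The way current and wind forcing enter a drift model can be illustrated with a minimal Lagrangian particle-tracking sketch. Each particle moves with the surface current plus a fraction of the wind (a “windage” factor, taken here as 3%, a commonly used rule of thumb but an assumption in this example), and a random-walk term stands in for unresolved turbulent diffusion. The uniform forcing, diffusivity and time step are hypothetical, and weathering, Stokes drift and spatially varying fields, which operational models can include, are omitted.

```python
import math
import random

WINDAGE = 0.03       # assumed wind drift factor (fraction of the 10 m wind)
DIFFUSIVITY = 10.0   # assumed horizontal diffusivity, m^2/s
DT = 600.0           # time step, s

def step(x, y, current, wind, rng):
    """Advance one particle by DT using current + windage + random-walk diffusion."""
    u = current[0] + WINDAGE * wind[0]
    v = current[1] + WINDAGE * wind[1]
    sigma = math.sqrt(2.0 * DIFFUSIVITY * DT)   # random-walk step std, m
    return (x + u * DT + rng.gauss(0.0, sigma),
            y + v * DT + rng.gauss(0.0, sigma))

def simulate(n_particles, hours, current, wind, seed=0):
    """Release particles at the origin and return their positions (m) after `hours`."""
    rng = random.Random(seed)
    particles = [(0.0, 0.0)] * n_particles
    for _ in range(int(hours * 3600 / DT)):
        particles = [step(x, y, current, wind, rng) for x, y in particles]
    return particles

# Hypothetical uniform forcing: 0.2 m/s eastward current, 10 m/s northward wind
final = simulate(500, hours=24, current=(0.2, 0.0), wind=(0.0, 10.0))
cx = sum(p[0] for p in final) / len(final) / 1000.0
cy = sum(p[1] for p in final) / len(final) / 1000.0
print(f"slick centroid after 24 h: {cx:.1f} km east, {cy:.1f} km north")
```

Swapping in a different current field changes the trajectory directly, which is why the choice of ocean forecast matters so much for drift predictions.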

The forecasting systems used to assist search-and-rescue (SAR) operations are very similar to those used for oil spills. Speed of response is essential for finding people lost at sea: the search area grows rapidly over time, and accurate environmental data (winds and currents) are needed to reduce this area and quickly find the target being searched for. Futch and Allen (2019) state that 60% of SAR incidents in US Coast Guard areas of responsibility occur outside areas covered by high-resolution wind and current data, where only global forecasts are available. Finding solutions that reduce the size of these search areas is therefore crucial.
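Why response speed and forcing accuracy matter can be seen from a simple error-growth model. Assume, for illustration, that the drift-velocity error is a fixed but unknown isotropic Gaussian error with standard deviation σ_u per horizontal axis. The position error then grows linearly with time, and the circle containing the target with probability p has radius σ_u·t·sqrt(−2 ln(1 − p)), so its area grows quadratically with time. The 0.1 m/s and 0.3 m/s values below are hypothetical stand-ins for better and poorer current forecasts.

```python
import math

def search_area_km2(sigma_u_ms, hours, prob=0.9):
    """Area of the circle holding `prob` of an isotropic Gaussian position error.

    Assumes a constant, unknown drift-velocity error with std sigma_u_ms per axis,
    so the position std per axis is sigma_u_ms * t.
    """
    sigma_pos_km = sigma_u_ms * hours * 3600.0 / 1000.0
    radius_km = sigma_pos_km * math.sqrt(-2.0 * math.log(1.0 - prob))
    return math.pi * radius_km ** 2

for sigma_u in (0.1, 0.3):
    areas = [search_area_km2(sigma_u, h) for h in (6, 12, 24)]
    print(sigma_u, "m/s:", [round(a) for a in areas], "km^2 after 6, 12, 24 h")
```

Under this model, doubling the elapsed time quadruples the area, and tripling the velocity error multiplies it by nine, which is why accurate winds and currents shrink search areas so effectively.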

3.1.1 The Example of the Grande America Accident

The Grande America accident is a recent example of the use of ocean current data from several models. On March 10, 2019, a fire broke out on board the Italian merchant ship Grande America, which was carrying 365 containers, 45 of which contained dangerous goods, and 2000 vehicles (cars, trucks, trailers, construction machinery) on its car decks. It sank on March 12 at a depth of 4600 m, 350 km off the French coast, with about 2200 tonnes of bunker fuel on board. More than 1000 tonnes of heavy fuel oil were released into the marine environment on the day the ship sank, followed by 35 days of continuous leaks before the breaches in the hull were sealed by an underwater robot. A technical committee bringing together experts from Cedre, Météo-France, IFREMER and SHOM assessed the drift forecasts provided by the MOTHY oil spill drift model (Daniel 1996). This Drift Committee was responsible for providing the maritime authorities with consistent and relevant daily information on oil drift, observations and forecasts. MOTHY was run daily during the aerial surveillance and recovery period, using oceanic forcing fields provided by CMEMS. Significant discrepancies were found between ocean models; in the end, the drift predictions closest to the observations were obtained with currents from the lower-resolution ocean model (Fig. 11). This shows the importance of having several ocean models available, and the difficulty of using high-resolution ocean forecasts that generate many eddies whose precise locations are sometimes difficult to model.

MOTHY output from a simulated 10-day leak from the Grande America wreck (dark green star near 46° N–6° W). Black disks are aerial observations. Particle drift: in green without ocean current data, in red using ocean current data from the operational Iberian Biscay Irish (IBI) Ocean Analysis and Forecasting system at 1/36 degree, in blue using ocean current data from the operational Mercator global ocean analysis and forecast system at 1/12 degree

This example highlights the main future challenge for data providers: improving the accuracy of current forecasts. International collaboration should therefore continue to consolidate validation measurements and model intercomparisons, to ensure that a minimum set of measurements is implemented internationally. In particular, spatially explicit estimates of uncertainty are needed, both for the forcing data and for the outputs of oil spill models. From this point of view, ensemble forecasting is a very promising research area for quantifying the uncertainties inherent in drift prediction at sea, and it should be studied further. Products should be delivered to users efficiently and at adequate spatial and temporal resolution. Interaction between users and producers must be an important criterion for future developments.
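
As a minimal illustration of how an ensemble quantifies drift uncertainty, the sketch below advects a cloud of members whose currents differ by a random, constant error (a crude, assumed stand-in for the spread between ocean models) and measures the area of the 1-sigma ellipse of the cloud. The function names, the 0.1 m/s error level and the flat x/y geometry in meters are illustrative assumptions, not part of MOTHY or of any operational system.

```python
import numpy as np

def ensemble_drift(x0, y0, u, v, hours, n_members=200, sigma_uv=0.1, seed=0):
    """Advect an ensemble of drifting objects with perturbed currents.

    Each member uses the forecast current (u, v) in m/s plus a constant
    random error drawn from N(0, sigma_uv), an assumed stand-in for the
    disagreement between ocean models. Positions are in meters on a flat
    plane; the result has shape (hours + 1, n_members, 2), one row per hour.
    """
    rng = np.random.default_rng(seed)
    errors = rng.normal(0.0, sigma_uv, size=(n_members, 2))  # per-member velocity error
    vel = np.array([u, v]) + errors                          # (n_members, 2)
    t = np.arange(hours + 1) * 3600.0                        # elapsed time in seconds
    return np.array([x0, y0]) + t[:, None, None] * vel[None, :, :]

def spread_area_km2(positions):
    """Area (km^2) of the 1-sigma ellipse of an (n_members, 2) position array."""
    cov = np.cov(positions.T)                    # 2 x 2 covariance, in m^2
    return np.pi * np.sqrt(np.linalg.det(cov)) / 1e6

traj = ensemble_drift(0.0, 0.0, u=0.2, v=0.0, hours=48)
for h in (6, 24, 48):
    print(f"{h:2d} h: 1-sigma spread area ~ {spread_area_km2(traj[h]):.0f} km^2")
```

Because each member keeps a constant velocity error here, the spread grows linearly with time and the area quadratically, which mirrors the rapid growth of search areas noted for search-and-rescue. A real ensemble would instead draw its members from different ocean models or perturbed forcings, and add wind drift and diffusion.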

3.2 Oil Spill Pollution

The end of the last century and the beginning of this one were plagued by major environmental catastrophes caused by ocean oil pollution. The Amoco Cadiz (1978), Exxon Valdez (1989), Sea Empress (1996), Erika (1999), Prestige (2002) and Tasman Spirit (2003) are only a few examples of oil tanker accidents. Nevertheless, tanker accidents account for at most 2–3% of oil pollution worldwide. Another small share (2–3%) is due to platform accidents (Deepwater Horizon is a good example), and the rest comes from illicit discharges (see Fig. 12, extracted from Pavlakis et al. 2001).

Figure from Pavlakis et al. (2001)

Fingerprints of illicit vessel discharges detected on ERS-1 and ERS-2 SAR images, during 1999 within the Mediterranean Sea.

Detecting and tracking oil spills became a necessity, and synthetic-aperture radar (SAR, or imaging radar) was rapidly recognized as the best and most efficient means of observation and analysis. Whether carried by satellites or aircraft, SAR is well suited to monitoring the ocean surface and therefore to detecting sea surface pollution. Because it operates at microwave frequencies, SAR does not depend on weather or cloud cover, and slicks have distinctive backscattering signatures that SAR systems can highlight. Imaging radars mainly measure surface roughness; because slicks modify the properties of the sea surface and strongly damp the short waves, they reduce the backscattered signal. Automatic spill recognition is nevertheless difficult, because phenomena other than spills also produce extended dark features in the image with similar SAR signatures (the so-called look-alikes).

These extended dark patches are caused by other phenomena that also modify sea surface conditions: wind, sea state, sea temperature, rain showers and currents (Girard-Ardhuin et al. 2003). An experienced image interpreter is essential to sort out irrelevant disturbances linked to natural phenomena. Specific SAR parameters, available through different SAR modes, also provide more flexibility for the analysis: for example, radar polarization, wavelength and incidence angle, together with other parameters such as the satellite or aircraft flight direction and the wave and wind directions. Integrating this additional information can resolve many of the confusions that arise in automatic recognition and helps discriminate between different sources of sea surface disturbance.

To go beyond oil spill detection and tracking, and to set up an efficient prevention system, the ships responsible for the discharges must also be detected and identified. For that purpose, synergetic approaches have been used, combining radar imagery mainly with meteorological information and data from the mandatory Automatic Identification System (AIS). This effort is important because the vast majority of this pollution occurs near coastal zones, where 80% of the world population lives.

3.2.1 Effect of Slicks on the Ocean Surface and Radar Imaging Measurements

Slicks damp surface waves (Alpers and Hühnerfuss 1988, 1989; Solberg et al. 1999). When a slick covers the surface, the wind has less effect and the amplitude of the wave crests and troughs decreases: the film induces surface stress gradients that oppose this oscillatory motion. A surface film therefore removes energy, mainly from the short, high-frequency waves, in line with the strong decrease in surface stress. Because the radar is sensitive only to surface roughness, many other factors must also be considered to understand all the influences on slick dissipation: waves, sea properties (diffusion, mixing, turbulence, air–sea exchanges, biology, etc.), surface currents, eddies, internal waves, and oceanographic, meteorological and atmospheric dynamics.

SAR is one of the most suitable instruments for oil slick measurements (see, for instance, Girard-Ardhuin et al. 2003, among many others), since it depends neither on weather conditions (clouds) nor on sunlight. This makes it possible to reveal illegal discharges, which most frequently occur at night, and to survey stormy areas, where accident risks are higher. Combined with local aircraft surveillance, SAR is helpful for synoptic oil spill monitoring. Its coverage can be as wide as 500 × 500 km; high-resolution images have a resolution of about 10 m, and very high-resolution modes reach meter-scale resolution over a much smaller area (20 × 20 km).

Figure 13a shows an example over a large area northwest of Spain, along the Finisterre traffic separation scheme (TSS) used by oil tankers sailing between Rotterdam and Athens. The radar image was acquired by Radarsat-2 on March 25, 2016, at 06:41 UTC. It was received, processed and analyzed by the VIGISAT 24/7 operational center in Brest, which is dedicated to near-real-time maritime surveillance services. The enlargement in Fig. 13b shows not only the slick but also several ships (white dots).

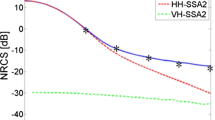

Slicks damp the surface roughness associated with gravity–capillary waves, which decreases the radar backscatter and yields dark patches in the radar image, with a contrast of at least 3 dB relative to the surrounding sea. A synthesis of previous experimental studies shows that the best parameters for slick detection depend on the radar configuration, the nature of the slick, and the meteorological and oceanic conditions. SAR parameters are particularly important because their selection affects oil slick detection capability. By design, one can choose the radar wavelength, the radar polarization or the incidence angle of the radar wave. Other parameters that strongly affect the results depend on the circumstances of each acquisition, such as the angle between the flight direction and the wind direction and, of course, the nature of the slick itself. The influence of meteorological and oceanic conditions, discussed above, falls into the category of additional parameters that can help discriminate slicks from other dark features (Girard-Ardhuin et al. 2003).

Over two decades (1980–2000), several experiments showed a clear preference for higher microwave frequencies (C- to Ku-band) over longer wavelengths (S- or L-band), with contrasts of about 5 dB for slicks of "light" fuel and 10–15 dB for slicks of "heavy" fuel. Wind speed must also be considered: C-band appears to be the most suitable frequency, providing strong contrasts up to wind speeds of about 13 m/s. Wind speed also influences the choice of polarization; here again, C-band with VV polarization appears to be the best choice, notably in strong winds above 11 m/s. Finally, the nature of the slick also plays a role in detection and, potentially, identification, since backscatter damping depends on the origin of the slick and on its viscosity and elasticity. At C-, X- and Ku-band, wave damping is stronger for oil slicks than for natural films, which are often detectable only at low wind speeds (< 5 m/s). Multi-frequency radar measurements, where available, are therefore a good way to determine the nature of a slick.
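
The 3 dB detection criterion and the contrasts quoted above can be illustrated with a deliberately simple dark-spot detector: backscatter is converted to decibels and pixels at least 3 dB darker than the scene background are flagged. Taking the background as the median of the (sub)scene is an assumption made for brevity; operational chains use local, adaptive thresholds followed by a look-alike discrimination step, which is not shown here.

```python
import numpy as np

def to_db(sigma0_linear):
    """Convert linear backscatter coefficients (sigma0) to decibels."""
    return 10.0 * np.log10(sigma0_linear)

def dark_spot_mask(sigma0_linear, min_contrast_db=3.0):
    """Flag pixels at least `min_contrast_db` darker than the scene background.

    The background is taken as the median backscatter (in dB) of the whole
    array, a simplifying assumption. Returns the boolean mask and the
    per-pixel contrast in dB (positive where darker than the background).
    """
    db = to_db(sigma0_linear)
    contrast_db = np.median(db) - db
    return contrast_db >= min_contrast_db, contrast_db

# Synthetic 20 x 20 scene: uniform sea at sigma0 = 0.05 with a 5 x 5 patch
# damped by 5 dB (the order of contrast quoted for "light" fuel slicks).
scene = np.full((20, 20), 0.05)
scene[5:10, 5:10] *= 10 ** (-5.0 / 10.0)
mask, contrast = dark_spot_mask(scene)
print(int(mask.sum()), round(float(contrast[7, 7]), 2))  # 25 5.0
```

A single global threshold like this would also flag low-wind areas and other look-alikes, which is why the additional SAR parameters and ancillary data discussed above are needed.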

3.2.2 SAR and AIS Coupled Detection

SAR imagery offers great potential for observing and monitoring the marine environment. Ship detection in SAR imagery is well advanced (Crisp 2004), and knowledge of vessel positions and types supports a wide range of applications, such as maritime traffic safety, fisheries control, detection of illegal discharges and border surveillance. The improving capabilities of SAR sensors and the availability of large SAR image datasets, coupled with cooperative positioning data such as AIS (Automatic Identification System) messages, allow much finer monitoring of man-made oil pollution (Pelich et al. 2015a). AIS provides the tracks of all vessels in the vicinity of the spill (Fig. 14; AIS data from 01:00 to 06:47 UTC).

Identifying the potential polluter requires several steps. Because there are too many candidates, the set of potential ships must first be narrowed down: Fig. 15a shows the tracks of only three southbound ships in the vicinity of the spill. One of these AIS tracks appears more likely to belong to the polluter, and a drift algorithm is run to confirm which one.
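
The narrowing-down step can be sketched as a filter on AIS fixes: keep vessels whose recent positions pass within some distance of the detected spill while heading in the relevant direction (southbound in the case of Fig. 15a). The `AisFix` record, the 30 km radius, the 6 h look-back window (roughly matching the 01:00–06:47 UTC AIS period) and the heading interval are hypothetical choices for illustration, not the criteria used in the cited studies.

```python
import math
from dataclasses import dataclass

@dataclass
class AisFix:
    mmsi: int             # vessel identifier
    hours_before: float   # age of the fix relative to the SAR acquisition (h)
    lat: float            # degrees
    lon: float            # degrees
    cog: float            # course over ground, degrees clockwise from north

def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance on a spherical Earth of radius 6371 km."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp, dl = p2 - p1, math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * 6371.0 * math.asin(math.sqrt(a))

def candidate_ships(fixes, spill_lat, spill_lon, radius_km=30.0,
                    max_hours=6.0, heading_range=(135.0, 225.0)):
    """Return sorted MMSIs of ships recently near the spill on the given heading."""
    lo, hi = heading_range
    keep = set()
    for f in fixes:
        if f.hours_before > max_hours or not (lo <= f.cog <= hi):
            continue
        if haversine_km(f.lat, f.lon, spill_lat, spill_lon) <= radius_km:
            keep.add(f.mmsi)
    return sorted(keep)

fixes = [
    AisFix(1, 2.0, 43.10, -10.00, 180.0),   # southbound, about 11 km from the spill
    AisFix(2, 2.0, 43.05, -10.00, 0.0),     # northbound
    AisFix(3, 1.0, 45.00, -10.00, 190.0),   # southbound but about 220 km away
    AisFix(4, 10.0, 43.02, -10.00, 180.0),  # too old
]
print(candidate_ships(fixes, 43.0, -10.0))  # [1]
```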

To determine which track most likely belongs to the polluter, a drift model is applied to all three tracks, simulating a hypothetical ("fake") discharge from each ship as if all three had polluted. Starting from the AIS positions of the vessels and using wind and current information, the displacement of the pollution at sea is computed over a given time interval (typically 6 h). The pollution transport model is based on a 2D Lagrangian advection scheme that linearly combines wind and current effects, with an additional diffusion scheme (Pelich et al. 2015b; Longépé et al. 2015). Figure 15 shows that only one AIS track is consistent with the shape of the oil spill.
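
A minimal sketch of this forward-drift test follows, assuming uniform wind and current fields: each hypothetical discharge is advected with the current plus a fixed fraction of the wind (the linear combination described above) and spread by a random walk representing diffusion, and the release point whose simulated slick lands closest to the observed slick is retained. The 3% wind factor, the diffusion coefficient, the centroid-distance score and the metric x/y coordinates are illustrative assumptions, not the parameters of Pelich et al. (2015b) or Longépé et al. (2015).

```python
import numpy as np

def drift_fake_slick(x0, y0, current, wind, hours=6.0, dt_s=600.0,
                     wind_factor=0.03, k_diff=1.0, n=500, seed=0):
    """Forward drift of a hypothetical discharge released at (x0, y0), in meters.

    Velocity = current + wind_factor * wind (constant (u, v) tuples in m/s),
    plus a random walk with diffusion coefficient k_diff (m^2/s).
    Returns the (n, 2) particle positions after `hours`.
    """
    rng = np.random.default_rng(seed)
    pos = np.tile([x0, y0], (n, 1)).astype(float)
    vel = np.asarray(current, float) + wind_factor * np.asarray(wind, float)
    for _ in range(int(round(hours * 3600.0 / dt_s))):
        pos += vel * dt_s                                                # advection
        pos += rng.normal(0.0, np.sqrt(2.0 * k_diff * dt_s), pos.shape)  # diffusion
    return pos

def most_consistent_track(release_points, observed_centroid, current, wind, **kw):
    """Index of the release point whose simulated slick centroid is closest to the observed one."""
    dists = []
    for x0, y0 in release_points:
        pos = drift_fake_slick(x0, y0, current, wind, **kw)
        dists.append(float(np.hypot(*(pos.mean(axis=0) - observed_centroid))))
    return int(np.argmin(dists)), dists

tracks = [(0.0, 0.0), (5000.0, 0.0), (10000.0, 0.0)]  # hypothetical ship positions (m)
best, dists = most_consistent_track(tracks, np.array([7160.0, -6480.0]),
                                    current=(0.1, 0.0), wind=(0.0, -10.0))
print(best, [round(d) for d in dists])
```

Comparing centroids is the crudest possible score; comparing the full simulated shape with the slick outline detected in the SAR image, as Fig. 15 does visually, would be more discriminating.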

In summary, ocean oil pollution comes mainly from illegal discharges, and one of the best tools for detecting them is imaging radar on board satellites. However, SAR instruments alone cannot achieve the level of recognition expected by organizations such as the European Maritime Safety Agency (EMSA). More evidence is required, and we have shown here that by combining information derived directly from the SAR image (ship detection, wind speed and direction, image interpretation) with external information (meteorological conditions, AIS positions, drift model), enough evidence can be accumulated to have a significant impact on polluters, including prosecution (a body of corroborating evidence can be accepted as proof).

3.3 The Problem of Plastics as Marine Debris

World plastic production has increased exponentially in recent decades, from 2.3 million tonnes (Mt) in 1950 to 162 Mt in 1993 and 448 Mt in 2015. Because of their high durability (and low cost), substantial quantities of end-of-life plastics are accumulating in the environment. The amount of unmanaged debris and plastics ending up in the World Ocean is currently estimated at 8 to 15 Mt per year. However, the true scale of the problem has emerged only recently, and it has been brought to the fore by the Sustainable Development Goal on life below water (SDG 14), which aims to “conserve and sustainably use the oceans, seas and marine resources for sustainable development.” Before that, scientific research began in the early 1970s with reports of plastics on the seafloor and at sea, and with numerous studies of their impact on a variety of marine animals (Ryan 2015). Marine debris and litter are now a growing environmental problem, and plastic, the largest component of litter, is widely reported in the marine environment as well as in all other natural environments on Earth. The problem is so large that it threatens the environment, the economy and human well-being on a global scale (Nielsen et al. 2019). Despite their many benefits on land and their extensive use in our societies, plastics and other artificial debris are increasingly recognized as the source of severe environmental problems, particularly in the oceans. Among the numerous aspects of the plastic problem, the fact that plastics and debris can travel over large distances, typically at the scale of ocean basins, is at the heart of the research priorities presented by the scientific community to the G7 Science Ministers in Berlin in October 2015 (Williamson et al. 2016).
Among the top research priorities listed in that report, understanding the sources and the pathways, in order to “establish connections between sources and sinks for different types of debris, and how these are influenced by oceanic and atmospheric dynamics,” are the first two basic points to be resolved to define the extent of the problem. Broadly, all the international reports dedicated to the global plastic problem identify the same starting points, and they also note that current ocean circulation models cannot accurately simulate the drift of debris because of its complex hydrodynamics (Maximenko et al. 2019). It is also important to note that, despite some progress in spectral and hyperspectral remote sensing technologies (Garaba et al. 2018; Goddijn-Murphy and Williamson 2019), observations from space remain limited.
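
The production figures quoted at the start of this section can be turned into implied average annual growth rates with the compound-growth formula (V1/V0)^(1/Δt) − 1. This is simple arithmetic on the three values given in the text; no other data are assumed.

```python
# Plastic production figures quoted in the text, in million tonnes (Mt).
production_mt = {1950: 2.3, 1993: 162.0, 2015: 448.0}

def cagr(year0, year1, data=production_mt):
    """Compound annual growth rate between two years of the series."""
    return (data[year1] / data[year0]) ** (1.0 / (year1 - year0)) - 1.0

for y0, y1 in [(1950, 1993), (1993, 2015), (1950, 2015)]:
    print(f"{y0}-{y1}: {100 * cagr(y0, y1):.1f}% per year")
# 1950-1993: 10.4% per year
# 1993-2015: 4.7% per year
# 1950-2015: 8.4% per year
```

The implied rate is roughly 10% per year up to 1993 and below 5% per year afterwards, so the absolute yearly increase remained large even though the relative growth rate slowed.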

In the following, we present a simple Lagrangian assessment of dispersion based on trajectories computed from modeled surface currents at the global scale. The aim is to focus on the physics of the ocean environment rather than on the material itself, which would require specifying parameters such as its density, size and shape. Considering the problem of marine microplastic particles, Khatmullina and Chubarenko (2019) recently concluded that further integration or coupling with different types of models is required to make progress on such a complex problem. Here, we treat plastics and floating marine debris as a single tracer that offers an opportunity to learn more about the physics and dynamics of the oceans across multiple scales. The evolution of a tracer in any turbulent flow follows the paradigm of Eckart (1948): first, during the stirring phase, the variance of the scalar gradient increases; later, during the mixing phase, molecular diffusion dominates and the strong gradients disappear (homogeneous final state). However, the “stirring and mixing” problem in a stratified ocean depends on physics that must be parametrized in ocean models, and major open challenges remain at all levels, from very fundamental to highly applied aspects (Müller and Garrett 2002).

For centuries, surface currents were inferred from bottles and drifting objects; since the 1980s, however, the World Climate Research Programme has maintained a global array of surface drifters (drogued at 15 m depth). The accumulation of observations and continuous improvements have led to a fairly precise time-mean circulation that resolves details such as the cross-stream structure of western boundary currents, recirculation cells and zonally elongated mid-ocean striations (Laurindo et al. 2017). While the averaged seasonal climatology is reliable, daily and near-real-time observations rely on only 1200–1300 active buoys worldwide, covering approximately 80% of the 5° × 5° boxes within the 60° N–60° S band. Satellite remote sensing, on the other hand, has provided surface geostrophic currents derived from altimetry since the 1990s; adding a wind-driven Ekman current yields an estimate of the surface current (e.g., Sudre et al. 2013). Although the variability of large-scale currents (200-km wavelength and 15-day period) is well captured by satellite altimetry and remotely sensed vector winds, important observational gaps remain that will require other technologies, such as exploiting the Doppler properties of radar backscatter from the ocean (Ardhuin et al. 2019a, b). Ocean reanalyses, another important tool for climate services, combine ocean models, atmospheric forcing fluxes and observations through data assimilation to provide a four-dimensional description of the ocean (e.g., Storto and Masina 2016). Based on these estimates, the dispersion aspect of the problem should be addressed from a Lagrangian point of view.
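
The combination of altimetric geostrophic currents and a wind-driven Ekman component can be sketched with textbook formulas: geostrophic velocities from the sea-surface-height gradient, u_g = -(g/f) ∂η/∂y and v_g = (g/f) ∂η/∂x, and a classical Ekman surface current of speed τ/(ρ√(A_z|f|)) directed 45° to the right of the wind stress in the Northern Hemisphere. This is a generic illustration, not the specific method of Sudre et al. (2013); the constant eddy viscosity A_z and the regular metric grid are assumptions, and both terms break down near the equator where f vanishes.

```python
import numpy as np

G = 9.81            # gravity, m/s^2
OMEGA = 7.2921e-5   # Earth's rotation rate, rad/s
RHO = 1025.0        # seawater density, kg/m^3

def coriolis(lat_deg):
    """Coriolis parameter f = 2 Omega sin(latitude)."""
    return 2.0 * OMEGA * np.sin(np.radians(lat_deg))

def geostrophic_uv(ssh, dx, dy, lat_deg):
    """Surface geostrophic velocity from sea surface height (m); rows index y, columns x."""
    f = coriolis(lat_deg)
    deta_dy, deta_dx = np.gradient(ssh, dy, dx)
    return -G / f * deta_dy, G / f * deta_dx

def ekman_surface_uv(taux, tauy, lat_deg, az=0.01):
    """Classical Ekman surface current for wind stress (taux, tauy) in N/m^2.

    Speed tau / (rho * sqrt(az * |f|)), rotated 45 degrees clockwise from the
    stress in the Northern Hemisphere (counterclockwise in the Southern).
    az is an assumed constant vertical eddy viscosity (m^2/s).
    """
    f = coriolis(lat_deg)
    w = (taux + 1j * tauy) / (RHO * np.sqrt(az * abs(f)))
    w *= np.exp(-1j * np.pi / 4 * np.sign(f))
    return w.real, w.imag

# Synthetic check: sea level rising northward by 1 m per 1000 km at 45 N.
y = np.arange(10)[:, None] * 10_000.0          # meters, rows = y
ssh = 1e-6 * y * np.ones((1, 8))
ug, vg = geostrophic_uv(ssh, dx=10_000.0, dy=10_000.0, lat_deg=45.0)
ue, ve = ekman_surface_uv(0.1, 0.0, lat_deg=45.0)   # eastward stress of 0.1 N/m^2
print(f"geostrophic u = {ug[5, 4]:.3f} m/s, Ekman (u, v) = ({ue:.3f}, {ve:.3f}) m/s")
total_u, total_v = ug + ue, vg + ve                  # surface current estimate
```

With higher sea level to the north, the geostrophic flow is westward, and an eastward wind stress drives a southeastward Ekman surface current, as expected in the Northern Hemisphere.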

Complementing the traditional Eulerian approach, Lagrangian methodology is a powerful way to analyze ocean circulation and dynamics. It relies on large sets of virtual (passive) particles whose trajectories are integrated in the time-evolving 2D or 3D velocity field. It can address questions about pathways and flow connectivity without resorting to the classical use of passive tracers such as trace gases. In a simplified framework, the analysis consists of evaluating the ocean currents, choosing the initial positions of the particles, computing the trajectories under given physical assumptions, and finally post-processing them. Concrete examples studied in recent years include the tracking of the origins of plastic bottles across the Coral Sea (Maes and Blanke 2015), the discovery of “exit routes” near the core of the subtropical convergence zones of the Pacific Ocean (Maes et al. 2016), the determination of the mesoscale features characterizing the dynamics of the Coral Sea and their impact on water masses and circulation (Rousselet et al. 2016), the potential connection between the South Indian and South Pacific subtropical convergence zones, revealing that large-scale convergence zones should not be seen as “closed systems” (Maes et al. 2018), and, more recently, the important role of Stokes drift in the surface dispersion of floating debris in the South Indian Ocean (Dobler et al. 2019).
Based on these studies and other consistent results reported in the literature, we identified the following key findings: we need to know the time variability of surface currents to adequately address long-distance connectivity and pathways; we need to consider high-resolution horizontal currents (at least mesoscale) to estimate transit times along specific pathways; and, finally, we need to evaluate the emission scenario (and the sinks) of floating surface litter more accurately to derive its distribution at global scales.
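
The core step of these Lagrangian analyses, integrating virtual particle positions through a velocity field, can be sketched with a fourth-order Runge–Kutta scheme. The analytic solid-body rotation used below is a hypothetical test flow chosen because its exact trajectories are circles; in practice the velocity function would interpolate gridded, time-evolving model or reanalysis currents in space and time, which is not shown.

```python
import numpy as np

def rk4_trajectories(velocity, positions, t0, t1, dt):
    """Integrate passive particle trajectories dx/dt = velocity(x, t) with RK4.

    velocity(pos, t) maps an (n, 2) array of positions to an (n, 2) array of
    velocities; positions holds the initial positions. Returns an array of
    shape (n_steps + 1, n, 2) with every intermediate position.
    """
    n_steps = int(round((t1 - t0) / dt))
    out = np.empty((n_steps + 1,) + positions.shape)
    x, t = positions.astype(float), t0
    out[0] = x
    for k in range(n_steps):
        k1 = velocity(x, t)
        k2 = velocity(x + 0.5 * dt * k1, t + 0.5 * dt)
        k3 = velocity(x + 0.5 * dt * k2, t + 0.5 * dt)
        k4 = velocity(x + dt * k3, t + dt)
        x = x + dt / 6.0 * (k1 + 2 * k2 + 2 * k3 + k4)
        t += dt
        out[k + 1] = x
    return out

def solid_body_gyre(pos, t, omega=1e-5):
    """Hypothetical steady test flow: solid-body rotation about the origin (m/s)."""
    return np.stack([-omega * pos[:, 1], omega * pos[:, 0]], axis=1)

period = 2 * np.pi / 1e-5                       # one full rotation, in seconds
traj = rk4_trajectories(solid_body_gyre, np.array([[1000.0, 0.0]]),
                        0.0, period, period / 1000)
print(traj[-1])                                 # back near the starting point
```

Checking that a particle returns to its starting point after one rotation is a cheap sanity test before switching to real, gridded currents.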

Regarding the last point, additional experiments were performed with two particle-release scenarios: the first is a single instantaneous release over the full ocean domain of the model (i.e., one particle at each ocean grid point at the initial time step), whereas the second is a permanent release along the coastlines (i.e., at the ocean grid points adjacent to the land mask), distributed according to population density as in van Sebille et al. (2015). Not surprisingly, both experiments reveal the subtropical convergence zones (Fig. 16), where the ocean dynamics lead to accumulation in the absence of vertical mixing and of potential sinks. Nevertheless, the experiment with continuous release along the coastlines shows much more complex structures and higher concentrations in some areas, including the marginal seas of the western Pacific Ocean, the Indonesian Seas, the Mediterranean Sea and the Bay of Bengal, as well as the South Indian Ocean. Some places within the tropical band, such as the eastern Pacific Ocean near the Galapagos Islands or the Gulf of Guinea, also show some convergence of particles (Fig. 16b). These places cannot be identified in the first experiment (Fig. 16a) because of the divergent nature of the ocean dynamics there, whereas they appear as semi-permanent accumulation areas in the second case. Determining which of these spatial distributions represents the actual concentration of marine debris or plastic at sea is practically impossible given the lack of in situ observations, and probably neither is realistic.
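
The two release scenarios can be sketched on a regular grid with a boolean ocean mask: scenario 1 seeds one particle per ocean point, and one time step of scenario 2 distributes particles over coastal ocean points (those sharing an edge with land) with probabilities proportional to the population of the adjacent land cells. The neighbor-sum weighting, the random multinomial draw and the toy grid are assumptions made for illustration; they do not reproduce the exact procedure of van Sebille et al. (2015), and a global grid would also need periodic wrapping in longitude.

```python
import numpy as np

def instantaneous_release(ocean_mask):
    """Scenario 1: one particle at every ocean grid point, as (row, col) indices."""
    return np.argwhere(ocean_mask)

def _four_neighbour_sum(field):
    """Sum over the 4 edge-sharing neighbors of each cell (zero outside the grid)."""
    p = np.pad(field, 1)
    return p[:-2, 1:-1] + p[2:, 1:-1] + p[1:-1, :-2] + p[1:-1, 2:]

def coastal_points(ocean_mask):
    """Ocean points that share an edge with at least one land point."""
    land = (~ocean_mask).astype(float)
    return ocean_mask & (_four_neighbour_sum(land) > 0)

def coastal_release(ocean_mask, population, n_particles, seed=0):
    """One time step of scenario 2: particle counts per grid point, drawn with
    probability proportional to the population of adjacent land cells."""
    land_pop = np.where(ocean_mask, 0.0, population)
    weights = np.where(coastal_points(ocean_mask), _four_neighbour_sum(land_pop), 0.0)
    weights = weights / weights.sum()
    rng = np.random.default_rng(seed)
    return rng.multinomial(n_particles, weights.ravel()).reshape(weights.shape)

# Toy 5 x 5 grid: land in the first column, people only in the middle land cell.
ocean = np.ones((5, 5), dtype=bool)
ocean[:, 0] = False
people = np.zeros((5, 5))
people[2, 0] = 1000.0
print(len(instantaneous_release(ocean)), int(coastal_points(ocean).sum()))  # 20 5
counts = coastal_release(ocean, people, n_particles=100)
print(counts[2, 1], counts.sum())
```

Repeating the coastal draw at every time step and advecting all released particles gives the continuous-release experiment; the instantaneous scenario simply advects the initial set once.
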
It is important to remember that most current studies focus on the surface layer (and hence on floating material), whereas the vertical distribution of floating plastic depends not only on particle buoyancy but also on the dynamic pressure associated with vertical motions of the water. Understanding vertical flow in the ocean is challenging because it is driven by several processes acting at different temporal and spatial scales, which are not always fully resolved by present ocean models. Second, most current studies have assumed a steady ocean circulation. However, low-frequency variations, such as the El Niño Southern Oscillation (ENSO) and the Pacific Decadal Oscillation (PDO), modify the ocean circulation and modulate the different physical processes. Trends associated with climate change may also amplify some of the dispersion processes at work in the future. It remains unclear whether and how these processes, and their coupling with the internal variability of the ocean, will affect the dispersion of marine debris and plastics at the global scale.

4 Conclusions

Atmospheric pollution is one of the greatest environmental disasters today, with more than 90% of the world population living in places where WHO air quality guidelines are exceeded. Air pollution is a complex mixture of many constituents that can be transported far from its source. It is a cross-border problem that affects each individual differently. Concentrations of key pollutants need to be known, quantified and evaluated against atmospheric models as a first step toward the scientific understanding and shared awareness needed to address global environmental problems. Satellite instruments are powerful tools for monitoring atmospheric composition and its evolution globally, and the Copernicus Atmosphere Monitoring Service (CAMS) infrastructure allows us to predict air quality for the next few days based on these observations. With technological advances, spaceborne observations are expected to become even more refined in space and time, and hence to help attribute pollutant sources and mitigate air pollution.

The use of short-term forecasts to model oil spill drift, or drift trajectories in support of search and rescue, is fairly recent in operational oceanography but essential for an efficient response. Such processes and systems depend strongly on our understanding of the intrinsic variability of ocean dynamics. Thanks to satellite remote sensing, developed since the 1970s, tremendous advances have been made in understanding large-scale oceanographic phenomena, with significant contributions to ocean and climate research. However, satellite observations also highlight the importance of the highly energetic mesoscale turbulent eddy field throughout the oceans, which involves scale interactions that are still not completely understood. Refining our understanding of ocean motions (and ultimately of the coupling between the atmosphere, ocean and surface waves), as well as our ability to observe current velocities in the top few meters of the ocean, is crucial to provide relevant national and intergovernmental organizations with the framework they need to tackle the different types of sea pollution at local and global scales.

4.1 Perspectives

In the coming decades, we expect satellite measurements to achieve very high spatial resolution and more frequent revisits, making it possible to track pollution on an hourly basis with footprints of one kilometer or less (atmosphere) and of a few meters (ocean). At the same time, many in situ sensors will provide additional information, allowing better estimates of vertical profiles (atmosphere) and of drift due to currents and surface winds (ocean). These new datasets will boost research on pollutant variability and transport and, in turn, increase the accuracy of atmospheric and ocean models.

The large increase in the volume of data collected will challenge processing systems, which will rely on new developments in data science (machine/deep learning and artificial intelligence) that are expected to be used massively in the near future.

As a result, researchers in atmospheric and marine sciences and technology will be able to capture pollution episodes (such as rush-hour traffic) and determine whether pollution within a given region was generated locally or transported from elsewhere.

References

Akritidis D, Pozzer A, Zanis P, Tyrlis E, Škerlak B, Sprenger M, Lelieveld J (2016) On the role of tropopause folds in summertime tropospheric ozone over the eastern Mediterranean and the Middle East. Atmos Chem Phys 16:14025–14039. https://doi.org/10.5194/acp-16-14025-2016

Alpers W, Hühnerfuss H (1988) Radar signatures of oil films floating on the sea surface and the Marangoni effect. J Geophys Res 93(C4):3642–3648

Alpers W, Hühnerfuss H (1989) The damping of ocean waves by surface films: a new look at an old problem. J Geophys Res 94(C5):6251–6265

Apte JS, Pant P (2019) Toward cleaner air for a billion Indians. Proc Natl Acad Sci USA 116(22):10614–10616. https://doi.org/10.1073/pnas.1905458116

Ardhuin F, Brandt P, Gaultier L, Donlon C, Battaglia A, Boy F, Casal T, Chapron B, Collard F, Cravatte S, Delouis J-M, De Witte E, Dibarboure G, Engen G, Johnsen H, Lique C, Lopez-Dekker P, Maes C, Martin A, Marié L, Menemenlis D, Nouguier F, Peureux C, Rampal P, Ressler G, Rio M-H, Rommen B, Shutler JD, Suess M, Tsamados M, Ubelmann C, van Sebille E, van den Oever M, Stammer D (2019a) SKIM, a candidate satellite mission exploring global ocean currents and waves. Front Mar Sci. https://doi.org/10.3389/fmars.2019.00209

Ardhuin F, Chapron B, Maes C, Romeiser R, Gommenginger C, Cravatte S, Morrow R, Donlon C, Bourassa M (2019b) Satellite Doppler observations for the motions of the oceans. Bull Am Meteorol Soc 100:ES215–ES219

Brunekreef B (1997) Air pollution and life expectancy: is there a relation? Occup Environ Med 54(11):781–784. https://doi.org/10.1136/oem.54.11.781

Chameides W, Walker JCG (1973) A photochemical theory of tropospheric ozone. J Geophys Res 78:8751–8760

Chowdhury S, Dey S, Guttikunda S, Pillarisetti A, Smith KR, Di Girolamo L (2019) Indian annual ambient air quality standard is achievable by completely mitigating emissions from household sources. Proc Natl Acad Sci USA. https://doi.org/10.1073/pnas.1900888116

Clerbaux C (2018) Ciel jaune et soleil rouge: l’ouragan Ophélia décrypté. The Conversation. https://theconversation.com/ciel-jaune-et-soleil-rouge-louragan-ophelia-decrypte-86894. Accessed 30 Oct 2019

Clerbaux C, Boynard A, Clarisse L, George M, Hadji-Lazaro J, Herbin H, Hurtmans D, Pommier M, Razavi A, Turquety S, Wespes C, Coheur P-F (2009) Monitoring of atmospheric composition using the thermal infrared IASI/MetOp sounder. Atmos Chem Phys 9:6041–6054. https://doi.org/10.5194/acp-9-6041-2009

Crippa M, Guizzardi D, Muntean M, Schaaf E, Dentener F, van Aardenne JA, Monni S, Doering U, Olivier JGJ, Pagliari V, Janssens-Maenhout G (2018) Gridded emissions of air pollutants for the period 1970–2012 within EDGAR v4.3.2. Earth Syst Sci Data 10:1987–2013. https://doi.org/10.5194/essd-10-1987-2018

Crisp DJ (2004) The state-of-the-art in ship detection in synthetic aperture radar imagery. Technical report, Intelligence, Surveillance and Reconnaissance Division, Information Sciences Laboratory, Edinburgh, South Australia, May 2004

Daniel P (1996) Operational forecasting of oil spill drift at METEO-FRANCE. Spill Sci Technol Bull 3(1/2):53–64

Dobler D, Huck T, Maes C, Grima N, Blanke B, Martinez E, Ardhuin F (2019) Large impact of Stokes drift on the fate of surface floating debris in the South Indian Basin. Mar Pollut Bull 148:202–209

Eckart C (1948) An analysis of the stirring and mixing processes in incompressible fluids. J Mar Res 7:265–275

Elguindi N, Granier C, Stavrakou T, Darras S, Bauwens M, Cao H, Chen C, Denier van der Gon HAC, Dubovik O, Fu TM, Henze D, Jiang Z, Kuenen JJP, Kurokawa J, Liousse C, Miyazaki K, Müller J-F, Qu Z, Sekou K, Solmon F, Zheng B (2020) Analysis of recent anthropogenic surface emissions from bottom-up inventories and top-down estimates: are future emission scenarios valid for the recent past? Earth Space Sci Open Arch. https://doi.org/10.1002/essoar.10502317.1

European Environment Agency (EEA) (2018) https://www.eea.europa.eu/data-and-maps/data/national-emissions-reported-to-the-convention-on-long-range-transboundary-air-pollution-lrtap-convention-13#tab-european-data. Accessed 30 Oct 2019

Futch V, Allen A (2019) Search and rescue applications: on the need to improve ocean observing data systems in offshore or remote locations. Front Mar Sci 6:301. https://doi.org/10.3389/fmars.2019.00301

Garaba SP, Aitken J, Slat B, Dierssen HM, Lebreton L, Zielinski O, Reisser J (2018) Sensing ocean plastics with an airborne hyperspectral shortwave infrared imager. Environ Sci Technol 52(20):11699–11707. https://doi.org/10.1021/acs.est.8b02855

Girard-Ardhuin F, Mercier G, Garello R (2003) Oil slick detection by SAR imagery: potential and limitation. In: IEEE/MTS proceedings of the marine technology and ocean science conference OCEANS2003, San Diego, CA, USA, vol 1, pp 164–169, 22–26 September 2003

Goddijn-Murphy L, Williamson B (2019) On thermal infrared remote sensing of plastic pollution in natural waters. Remote Sens 11:2159. https://doi.org/10.3390/rs11182159

Health Effects Institute (2019) State of Global Air 2019. Special report. Health Effects Institute, Boston, MA. ISSN 2578-687

Im U, Markakis K, Poupkou A, Melas D, Unal A, Gerasopoulos E, Daskalakis N, Kindap T, Kanakidou M (2011) The impact of temperature changes on summer time ozone and its precursors in the Eastern Mediterranean. Atmos Chem Phys 11:3847–3864. https://doi.org/10.5194/acp-11-3847-2011

Kalabokas PD, Thouret V, Cammas J-P, Volz-Thomas A, Boulanger D, Repapis CC (2015) The geographical distribution of meteorological parameters associated with high and low summer ozone levels in the lower troposphere and the boundary layer over the Eastern Mediterranean (Cairo case). Tellus B Chem Phys Meteorol 67:27853. https://doi.org/10.3402/tellusb.v67.27853

Khatmullina L, Chubarenko I (2019) Transport of marine microplastic particles: why is it so difficult to predict? Anthropocene Coasts 2:293–305. https://doi.org/10.1139/anc-2018-0024

Kouvarakis G, Tsigaridis K, Kanakidou M, Mihalopoulos N (2000) Temporal variations of surface regional background ozone over Crete Island in the southeast Mediterranean. J Geophys Res 105:4399–4407

Laurindo LC, Mariano A, Lumpkin R (2017) An improved surface velocity climatology for the global ocean from drifter observations. Deep Sea Res Part I 124:73–92. https://doi.org/10.1016/j.dsr.2017.04.009

Lelieveld J, Evans JS, Fnais M, Giannadaki D, Pozzer A (2015) The contribution of outdoor air pollution sources to premature mortality on a global scale. Nature 525:367–371. https://doi.org/10.1038/nature15371

Longépé N, Mouche A, Goacolou M, Granier N, Carrere L, Le Bras J-Y, Lozac’h P, Besnard S (2015) Polluter identification with spaceborne radar imagery, AIS and forward drift modeling. Mar Pollut Bull 101:826–833

Maes C, Blanke B (2015) Tracking the origins of plastic debris across the Coral Sea: a case study from the Ouvéa Island, New Caledonia. Mar Pollut Bull 97:160–168

Maes C, Blanke B, Martinez E (2016) Origin and fate of surface drift in the oceanic convergence zones of the eastern Pacific. Geophys Res Lett 43:3398–3405. https://doi.org/10.1002/2016GL068217

Maes C, Grima N, Blanke B, Martinez E, Paviet-Salomon T, Huck T (2018) A surface “superconvergence” pathway connecting the South Indian Ocean to the subtropical South Pacific gyre. Geophys Res Lett 45:1915–1922. https://doi.org/10.1002/2017GL076366

Maximenko N, Corradi P, Law KL, Van Sebille E, Garaba SP, Lampitt RS, Galgani F, Martinez-Vicente V, Goddijn-Murphy L, Veiga JM, Thompson RC, Maes C, Moller D, Löscher CR, Addamo AM, Lamson MR, Centurioni LR, Posth NR, Lumpkin R, Vinci M, Martins AM, Pieper CD, Isobe A, Hanke G, Edwards M, Chubarenko IP, Rodriguez E, Aliani S, Arias M, Asner GP, Brosich A, Carlton JT, Chao Y, Cook A-M, Cundy AB, Galloway TS, Giorgetti A, Goni GJ, Guichoux Y, Haram LE, Hardesty BD, Holdsworth N, Lebreton L, Leslie HA, Macadam-Somer I, Mace T, Manuel M, Marsh R, Martinez E, Mayor DJ, Le Moigne M, Molina Jack ME, Mowlem MC, Obbard RW, Pabortsava K, Robberson B, Rotaru A-E, Ruiz GM, Spedicato MT, Thiel M, Turra A, Wilcox C (2019) Toward the integrated marine debris observing system. Front Mar Sci 6:447. https://doi.org/10.3389/fmars.2019.00447

Müller P, Garrett C (2002) From stirring to mixing in a stratified ocean. Oceanography 15:12–19

Nielsen TD, Hasselbalch J, Holmberg K, Stripple J (2019) Politics and the plastic crisis: a review throughout the plastic life cycle. WIREs Energy Environ. https://doi.org/10.1002/wene.360

Osborne M, Malavelle FF, Adam M, Buxmann J, Sugier J, Marenco F, Haywood J (2019) Saharan dust and biomass burning aerosols during ex-hurricane Ophelia: observations from the new UK lidar and sun-photometer network. Atmos Chem Phys 19:3557–3578. https://doi.org/10.5194/acp-19-3557-2019

Pavlakis P, Tarchi D, Sieber AJ (2001) On the monitoring of illicit vessel discharges using spaceborne SAR remote sensing—a reconnaissance study in the Mediterranean Sea. Ann Telecommun 56(11–12):700–718

Pelich N, Longépé N, Mercier G, Hajduch G, Garello R (2015a) Performance evaluation of Sentinel-1 data in SAR ship detection. In: 2015 IEEE international geoscience and remote sensing symposium (IGARSS), pp 2103–2106

Pelich N, Longépé N, Mercier G, Hajduch G, Garello R (2015b) AIS-based evaluation of target detectors and SAR sensors characteristics for maritime surveillance. IEEE J Sel Top Appl Earth Obs Remote Sens 8(8):3892–3901

Ramanantenasoa MMJ, Gilliot J-M, Mignolet C, Bedos C, Mathias E, Eglin T, Makowski D, Génermont S (2018) A new framework to estimate spatio-temporal ammonia emissions due to nitrogen fertilization in France. Sci Total Environ 645:205–219. https://doi.org/10.1016/j.scitotenv.2018.06.202

Richards NAD, Arnold SR, Chipperfield MP, Miles G, Rap A, Siddans R, Monks SA, Hollaway MJ (2013) The Mediterranean summertime ozone maximum: global emission sensitivities and radiative impacts. Atmos Chem Phys 13:2331–2345. https://doi.org/10.5194/acp-13-2331-2013

Robinson TP, Wint GRW, Conchedda G, Van Boeckel TP, Ercoli V, Palamara E, Cinardi G, D’Aietti L, Hay S, Gilbert M (2014) Mapping the global distribution of livestock. PLoS ONE 9(5):e96084. https://doi.org/10.1371/journal.pone.0096084

Rousselet L, Doglioli AM, Maes C, Blanke B, Petrenko AA (2016) Impacts of mesoscale activity on the water masses and circulation in the Coral Sea. J Geophys Res Oceans 121:7277–7289. https://doi.org/10.1002/2016jc011861

Ryan PG (2015) A brief history of marine litter research. In: Bergmann M, Gutow L, Klages M (eds) Marine anthropogenic litter. Springer, Cham, pp 1–25. https://doi.org/10.1007/978-3-319-16510-3_1

Safieddine S, Boynard A, Coheur P-F, Hurtmans D, Pfister G, Quennehen B, Thomas JL, Raut J-C, Law KS, Klimont Z, Hadji-Lazaro J, George M, Clerbaux C (2014) Summertime tropospheric ozone assessment over the Mediterranean region using the thermal infrared IASI/MetOp sounder and the WRF-Chem model. Atmos Chem Phys 14:10119–10131. https://doi.org/10.5194/acp-14-10119-2014

Scarlat N, Fahl F, Dallemand J-F, Monforti F, Motola V (2018) A spatial analysis of biogas potential from manure in Europe. Renew Sustain Energy Rev 94:915–930. https://doi.org/10.1016/j.rser.2018.06.035

Solberg AHS, Storvik G, Solberg R, Volden E (1999) Automatic detection of oil spills in ERS SAR images. IEEE Trans Geosci Remote Sens 37(4):1916–1924

Storto A, Masina S (2016) C-GLORSv5: an improved multipurpose global ocean eddy-permitting physical reanalysis. Earth Syst Sci Data 8(2):679–696. https://doi.org/10.5194/essd-8-679-2016

Sudre J, Maes C, Garcon V (2013) On the global estimates of geostrophic and Ekman surface currents. Limnol Oceanogr Fluids Environ 3(1):1–20. https://doi.org/10.1215/21573689-2071927

United States Environmental Protection Agency (EPA) https://www.epa.gov/air-emissions-inventories/air-pollutant-emissions-trends-data. Accessed 30 Oct 2019

Van Damme M, Clarisse L, Whitburn S, Hadji-Lazaro J, Hurtmans D, Clerbaux C, Coheur P-F (2018) Industrial and agricultural ammonia point sources exposed. Nature 564:99–103. https://doi.org/10.1038/s41586-018-0747-1

van Geffen JHGM, Eskes HJ, Boersma KF, Maasakkers JD, Veefkind JP (2019) TROPOMI ATBD of the total and tropospheric NO2 data products. Tech Rep S5P-KNMI-L2-0005-RP, Koninklijk Nederlands Meteorologisch Instituut (KNMI). https://sentinels.copernicus.eu/documents/247904/2476257/Sentinel-5P-TROPOMI-ATBD-NO2-data-products, CI-7430-ATBD, issue 1.4.0

van Sebille E, Wilcox C, Lebreton L, Maximenko N, Hardesty BD, Van Franeker JA (2015) A global inventory of small floating plastic debris. Environ Res Lett 10(12):124006. https://doi.org/10.1088/1748-9326/10/12/124006

Veefkind JP, Aben EAA, McMullan K, Forster H, de Vries J, Otter G et al (2012) TROPOMI on the ESA Sentinel-5 Precursor: a GMES mission for global observations of the atmospheric composition for climate, air quality and ozone layer applications. Remote Sens Environ 120:70–83. https://doi.org/10.1016/j.rse.2011.09.027

Viatte C, Wang T, Van Damme M, Dammers E, Meleux F, Clarisse L, Shephard MW, Whitburn S, Coheur PF, Cady-Pereira KE, Clerbaux C (2020) Atmospheric ammonia variability and link with PM formation: a case study over the Paris area. Atmos Chem Phys. https://doi.org/10.5194/acp-20-577-2020

Williamson P, Smythe-Wright D, Burkill P (2016) A non-governmental scientific perspective on seven marine research issues of G7 interest. Future of the ocean and its seas. ICSU-IAPSO-IUGG-SCOR, Paris

World Health Organization (2017) https://www.who.int/airpollution/ambient/about/en/. Accessed 30 Oct 2019

Zanis P, Hadjinicolaou P, Pozzer A, Tyrlis E, Dafka S, Mihalopoulos N, Lelieveld J (2014) Summertime free-tropospheric ozone pool over the eastern Mediterranean/Middle East. Atmos Chem Phys 14:115–132. https://doi.org/10.5194/acp-14-115-2014

Zheng B, Tong D, Li M, Liu F, Hong C, Geng G, Li H, Li X, Peng L, Qi J, Yan L, Zhang Y, Zhao H, Zheng Y, He K, Zhang Q (2018) Trends in China’s anthropogenic emissions since 2010 as the consequence of clean air actions. Atmos Chem Phys 18:14095–14111. https://doi.org/10.5194/acp-18-14095-2018

Acknowledgements

The authors would like to thank the International Space Science Institute (ISSI) in Bern (Switzerland) for organizing inspiring workshops and for their help during the conception of this book. Special thanks go to Anny Cazenave and the other organizers for their availability and time.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Viatte, C., Clerbaux, C., Maes, C. et al. Air Pollution and Sea Pollution Seen from Space. Surv Geophys 41, 1583–1609 (2020). https://doi.org/10.1007/s10712-020-09599-0

DOI: https://doi.org/10.1007/s10712-020-09599-0