Abstract

This paper has two aims. First, it points out a crucial difference between the standard argument from fine-tuning for the multiverse and paradigmatic instances of anthropic reasoning. The former treats the life-friendliness of our universe as the evidence whose impact is assessed, whereas the latter treat the life-friendliness of our universe as background information. Second, the paper develops a new fine-tuning argument for the multiverse which, unlike the old one, parallels the structure of paradigmatic instances of anthropic reasoning. The main advantage of the new argument is that it is not susceptible to the inverse gambler’s fallacy charge.

1 Introduction

The fact that the universe supports life seems to depend delicately on various of its fundamental characteristics, notably on the form of the laws of nature, on the values of some constants of nature, and on aspects of the universe’s conditions in its very early stages. An example of such fine-tuning for life is the difference between the masses of the two lightest quarks: the up- and down-quark [1,2,3, Sect. 4]. Small changes in this difference would undermine the stability properties of the proton and neutron, which are bound states of these quarks, and lead to a much less complex universe where bound states of quarks other than the proton and neutron dominate.

Life would apparently also have been impossible if the mass of the electron, which is roughly ten times smaller than the mass difference between the down- and the up-quark, had been somewhat larger in relation to that difference. Fine-tuning of the lightest quark masses with respect to the strength of the weak nuclear force has been found as well [4]. Further suggested instances of fine-tuning concern the strength of gravity, the strengths of the strong and weak nuclear forces, the mass of the Higgs boson, the vacuum energy density, the total energy density of the universe in its very early stages, the relative amplitude of energy density fluctuations in the very early universe, the initial entropy of the universe, and aspects of the very form of the known laws of nature. (See [5,6,7, Sect. 1] for some overviews.) It should be noted that some expert physicists (e.g. [8]) question some of the popular fine-tuning claims. The present paper operates under the assumption that at least some laws and constants are indeed fine-tuned for life in the sense just sketched. (For up-to-date defences of this assumption, see [9, 10].)

One of the most popular and most discussed responses to the universe’s fine-tuning for life is to suggest that the laws and constants might be environmental, i.e. that they might differ in different regions of space-time (see [11] for a review of the evidence) and/or in different spatio-temporally disconnected universes. A collection of multiple universes with different constants and laws is commonly referred to as a “multiverse.” Since the version of the idea that the laws and constants are environmental that is most often discussed is the multiverse idea, I will simply use the term “multiverse” here when referring to any hypothesis according to which the laws and constants are environmental. The most intensely discussed concrete multiverse scenario is the landscape multiverse [12], which is taken to be suggested by a combination of string theory (see [13] for a comprehensive discussion of philosophical issues) and eternal inflation [14].

The idea that underlies the suggested inference from the finding that life requires fine-tuned laws and constants to a multiverse is that, if there is a sufficiently vast and diverse multiverse, it is only to be expected that it contains at least one universe where the laws and constants are right for life. The famous (weak) anthropic principle states that “we must be prepared to take account of the fact that our location [\(\ldots \)] is necessarily privileged to the extent of being compatible with our existence as observers” ([15, p. 293], emphasis due to Carter). As this principle highlights, given that we are observers, the universe (or space-time region) in which we find ourselves has to be one where the conditions are compatible with the existence of observers. This suggests that, on the assumption that there is a sufficiently diverse multiverse, it is neither surprising that there is at least one universe that is hospitable to life nor, since we could not have found ourselves in a life-hostile universe, that we find ourselves in a life-friendly one. Many physicists (e.g. [12, 16, 17]) and philosophers (e.g. [18,19,20]) regard this line of thought as suggesting the inference to a multiverse as a rational response to the finding that the conditions are right for life in our universe despite the required fine-tuning. The most prominent objection against the inference from fine-tuning to a multiverse [21, 22] is that it commits the inverse gambler’s fallacy [23].

The aims of this paper are twofold. First, I point out that the argument from fine-tuning for the multiverse in its standard version, as just sketched, differs crucially from paradigmatic instances of anthropic reasoning such as, notably, Dicke’s [24] and Carter’s [15] accounts of coincidences between large numbers in cosmology. The key difference is that the standard fine-tuning argument for the multiverse treats the existence of observers as calling for a response and suggests inferring the existence of a multiverse as the best such response. Anthropic reasoning of the type championed by Dicke and Carter, in contrast, assumes the existence of observers as background knowledge when assessing whether the large number coincidences are to be expected given the competing theories. These are clearly different argumentative strategies, which should not be confused.

The second aim of this paper is to explore a novel fine-tuning argument for the multiverse, which, unlike the old one, partly mirrors the structure of Dicke’s and Carter’s accounts of large number coincidences. The new argument turns out to have the virtue of being immune to the inverse gambler’s fallacy charge. As will become clear, it rests on one key assumption, namely, that the considerations according to which life requires fine-tuned laws and constants do not independently make it less attractive to believe in a multiverse.

The remainder of this paper is structured as follows: Sect. 2 reviews the old fine-tuning argument for the multiverse and the inverse gambler’s fallacy charge. Section 3 turns to a classic instance of anthropic reasoning and shows how it structurally differs from the old fine-tuning argument for the multiverse. Section 4 introduces the new fine-tuning argument for the multiverse. Section 5 concludes the paper by highlighting the most significant attraction and the most significant limitation of the new argument.

2 The Old Fine-Tuning Argument for the Multiverse

The old argument from fine-tuning for the multiverse is usually presented in Bayesian terms, using subjective probability assignments. In a simple exposition, probability assignments which are aimed at being reasonable are compared for a general single-universe theory \(T_U\) and a multiverse theory \(T_M\). One can think of \(T_U\) as a disjunction of specific single-universe theories \(T_U^\lambda \) that differ on the value of some parameter \(\lambda \) which collectively describes the (by assumption uniform) laws and constants in the one universe. The multiverse theory \(T_M\) should be thought of as entailing that the laws and/or constants are different in the different universes (or regions) that exist according to \(T_M\) and scan over a wide range.

As the evidence whose impact is assessed, the old argument uses the proposition R that there is (at least) one universe with the right laws and constants for life. Thus the argument studies the impact of the finding that we are able to exist, in that there is a life-friendly universe, against the backdrop of the insight that a life-friendly universe requires delicate fine-tuning of the laws and constants. For the sake of perspicuity it will be useful to explicitly include our background knowledge \(B_0\) in all formulas. The prior probabilities assigned to \(T_M\) and \(T_U\) before taking into account R are written as \(P(T_M|B_0)\) and \(P(T_U|B_0)\). It is extremely difficult to make well-motivated value assignments to these quantities because they refer to the epistemic situation of an agent who is unaware of R, i.e. unaware that there is a life-friendly universe. Concerns about the possibility of rational probability assignments in this situation are articulated in [25] and taken up in [26]. The posterior probabilities \(P^+(T_M)\) and \(P^+(T_U)\), in turn, are supposed to reflect the rational credences of an agent who not only knows that a life-friendly universe requires fine-tuning, but also knows R, i.e. that there is indeed a life-friendly universe.

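Updating of the probability assignments from the prior to the posterior probabilities is done in accordance with Bayesian conditioning, where \(P^+(T)=P(T|R,B_0)\) for each of the theories \(T=T_M\) and \(T=T_U\). For the ratio of the posteriors assigned to \(T_M\) and \(T_U\) we obtain

\[ \frac{P^+(T_M)}{P^+(T_U)} = \frac{P(T_M|R,B_0)}{P(T_U|R,B_0)} = \frac{P(R|T_M,B_0)}{P(R|T_U,B_0)}\,\frac{P(T_M|B_0)}{P(T_U|B_0)}. \tag{1} \]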

If the multiverse according to \(T_M\) is sufficiently vast and varied, life appears almost unavoidably somewhere in it, so the conditional probability \(P(R|T_M,B_0)\) is very close to 1:

\[ P(R|T_M,B_0) \approx 1. \tag{2} \]

If we assume that, if there is only a single universe, it is improbable that it has the right conditions for life, the conditional prior \(P(R|T_U,B_0)\) will be much smaller than 1:

\[ P(R|T_U,B_0) \ll 1. \tag{3} \]

Equation (3) is supposed to be the probabilistic implementation of the arguments according to which life requires fine-tuned laws and constants.

Equations (2) and (3) together yield \(P(R|T_M,B_0) \gg P(R|T_U,B_0)\), which entails \(P(R|T_M,B_0)/P(R|T_U,B_0)\gg 1\), i.e. a ratio of posteriors that is much larger than the ratio of the priors:

\[ \frac{P^+(T_M)}{P^+(T_U)} \gg \frac{P(T_M|B_0)}{P(T_U|B_0)}. \tag{4} \]

So, unless we have very strong prior reasons to dramatically prefer a single universe over the multiverse, i.e. unless \(P(T_U|B_0)\gg P(T_M|B_0)\), the ratio \(P^+(T_M)/P^+(T_U)\) of the posteriors will be much larger than 1 and we can be rather confident that there is a multiverse.
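The arithmetic behind this step can be illustrated with a small numerical sketch. It only multiplies a likelihood ratio by a ratio of priors, as the old argument does. All numbers are hypothetical placeholders chosen for illustration, not values defended in the text, and the function name `posterior_odds` is an assumption. Converting the odds into a single probability further assumes that \(T_M\) and \(T_U\) are the only candidates.

```python
from fractions import Fraction


def posterior_odds(prior_m, prior_u, like_m, like_u):
    """Return P+(T_M)/P+(T_U) as likelihood ratio times ratio of priors."""
    return (like_m / like_u) * (prior_m / prior_u)


# Hypothetical inputs (assumptions for illustration only).
prior_m = Fraction(1, 100)    # P(T_M | B_0): multiverse initially disfavoured
prior_u = Fraction(99, 100)   # P(T_U | B_0)
like_m = Fraction(1)          # P(R | T_M, B_0), taken to be (close to) 1
like_u = Fraction(1, 10**6)   # P(R | T_U, B_0), much smaller than 1

odds = posterior_odds(prior_m, prior_u, like_m, like_u)

# Convert odds to a probability, assuming T_M and T_U exhaust the options.
posterior_m = odds / (1 + odds)

print(f"posterior odds T_M : T_U = {float(odds):.1f}")
print(f"posterior P+(T_M)        = {float(posterior_m):.6f}")
```

With these placeholder values the multiverse ends up strongly favoured even though it started with a prior of 1 in 100. A prior ratio of less than about one in a million would be needed to offset the assumed likelihood ratio.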

The most-discussed objection against this argument is that it commits the inverse gambler’s fallacy, originally identified by Ian Hacking [23]. This fallacy consists in inferring from an event with a remarkable outcome that there have likely been many more events of the same type in the past, most with less remarkable outcomes. For example, the inverse gambler’s fallacy is committed by someone who enters a casino and, upon witnessing a remarkable outcome at the nearest table (say, a five-fold six in a toss of five dice), concludes that the toss is most likely part of a large sequence of tosses. This inference is fallacious if, as seems plausible, the outcomes of the tosses can be assumed to be probabilistically independent. According to critics of the argument from fine-tuning for the multiverse, the argument commits this fallacy by, as White puts it, “supposing that the existence of many other universes makes it more likely that this one—the only one that we have observed—will be life-permitting.” [21, p. 263] Versions of this criticism are endorsed by Draper and Pust [27] and Landsman [22] (see Note 1).

Adherents of the inverse gambler’s fallacy charge against the argument from fine-tuning for the multiverse claim that it is misleading to focus on the impact of the proposition R—that the conditions are right for life in some universe. As they highlight, much closer to our full evidence is the more specific proposition H: that the conditions are right for life here, in this universe. According to proponents of the inverse gambler’s fallacy charge, if we replace R by H, the fine-tuning argument for the multiverse no longer goes through because finding this universe to be life-friendly does not increase the likelihood of there being any other universes.
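The distinction the critics draw between R-type and H-type evidence can be made concrete in the casino setting. The following is a minimal sketch that assumes fair dice and probabilistically independent tosses; the function names are hypothetical. It illustrates the critics' point only and does not settle whether H or R is the appropriate evidence in the cosmological case.

```python
from fractions import Fraction

# Chance that a single toss of five fair dice shows five sixes.
P_HIT = Fraction(1, 6) ** 5


def prob_some_toss_hits(n_tosses):
    """Analogue of R: at least one of n independent tosses shows five sixes."""
    return 1 - (1 - P_HIT) ** n_tosses


def prob_this_toss_hits(n_tosses):
    """Analogue of H: the particular toss we witnessed shows five sixes.

    Under independence this does not depend on how many other tosses occur.
    """
    return P_HIT


for n in (1, 1000):
    print(n, float(prob_some_toss_hits(n)), float(prob_this_toss_hits(n)))

# Likelihood ratios "1000 tosses" versus "one toss" for each kind of evidence.
ratio_R = prob_some_toss_hits(1000) / prob_some_toss_hits(1)
ratio_H = prob_this_toss_hits(1000) / prob_this_toss_hits(1)
print(float(ratio_R), float(ratio_H))
```

The evidence "some toss showed five sixes" favours the many-tosses hypothesis, whereas the evidence "this toss showed five sixes" has a likelihood ratio of exactly 1 and so leaves the two hypotheses where they were.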

Many philosophers defend the argument from fine-tuning for the multiverse against the inverse gambler’s fallacy charge [20, 28,29,30,31,32]. Notably, Bradley [20] discusses a number of candidate casino analogies—among them one already suggested by McGrath [28]—which, according to him, suggest that the inverse gambler’s fallacy charge is to be rejected. However, Landsman [22] recently disputed Bradley’s analysis, and the question of whether the fine-tuning argument for the multiverse is valid or fallacious seems to be as controversial as ever. The present paper does not aim to resolve this dispute.

3 Anthropic Reasoning: Dicke/Carter-Style

The argument from fine-tuning for the multiverse differs crucially from arguments to which Carter [15] appeals as exemplary instances of anthropic reasoning, notably astrophysicist Robert Dicke’s accounts of coincidences between large numbers in cosmology [24]. A prominent such coincidence concerns the fact that the relative strength of electromagnetism and gravity as acting on an electron/proton pair is of roughly the same order of magnitude (namely, \(10^{40}\)) as the age of the universe, measured in natural units of atomic physics. Impressed by this and other coincidences with numbers of similar size, Dirac had stipulated some decades earlier that they might hold universally and as a matter of physical principle [33]. Based on this stipulation, Dirac had conjectured that the strength of gravity may decrease as the age of the universe increases, which would be necessary for the large number coincidence to hold across all cosmic times.

Dicke [24], criticizing Dirac, argues that standard cosmology with time-independent gravity suffices to account for the coincidence, provided that we take into consideration the fact that our existence is tied to the simultaneous presence of mainline stars like the sun and various chemical elements produced in supernovae. As Dicke shows, given this requirement, we could only have found ourselves in a cosmic period in which the coincidence (approximately) holds. Accordingly, to make the coincidence unsurprising there is no need to assume that gravity varies with time. Carter [15] and Leslie [18, Chap. 6] describe Dicke’s account as an “anthropic explanation” of the large number coincidence, and Leslie discusses it continuously with the argument from fine-tuning for the multiverse (see Note 2). But this is somewhat misleading: whereas Dicke’s account of the large number coincidence uses life’s existence in our galaxy, in the present cosmic era, as background knowledge to show that standard cosmology suffices to make the coincidence expected, the argument from fine-tuning for the multiverse treats life’s existence as requiring a theoretical response and advocates the multiverse hypothesis as the best such response.

To make Dicke’s reasoning more transparent using the Bayesian formalism, let us denote by C the large number coincidence, by S the standard view according to which gravity is spatio-temporally uniform and by A Dirac’s alternative theory according to which the coincidence holds universally and gravity varies with time. Dirac’s idea is that the approximate identity of the two apparently unrelated large numbers is a surprising coincidence on the standard theory, whereas it is to be expected and not at all surprising on the alternative theory. This suggests the inequality

\[ P(C|S,B) \ll P(C|A,B), \tag{5} \]

where B stands for the assumed scientific background knowledge. Probabilities assigned to S and A based on this background knowledge B prior to considering the impact of C will presumably privilege standard cosmology S over Dirac’s alternative A:

\[ P(S|B) > P(A|B). \tag{6} \]

By Bayes’ theorem, posterior probabilities \(P^+(S)=P(S|C,B)\) and \(P^+(A)=P(A|C,B)\) can be computed which take into account the impact of C. In line with Dirac’s argument, due to Eq. (5) and in spite of Eq. (6) they may end up privileging Dirac’s alternative theory A over standard cosmology S.

Dicke’s considerations, however, reveal that considering Eq. (5) is not helpful. Plausibly, our background knowledge B will include some basic information about ourselves, including that we are forms of life whose existence depends on the presence of a mainline star in the vicinity. But, according to standard physics, mainline stars can exist only in that period of cosmic evolution where the coincidence approximately holds, so it is misleading to consider only the evidential impact of C. What comes closer to our full evidence is the proposition [C]: that the coincidence holds here and now, in our cosmic era. Dicke’s reasoning can be expressed as the insight that we should use [C] rather than C as our evidence and that, instead of a probability distribution P that conforms to Eq. (5), we should use a probability distribution \(P'\) for which

\[ P'([C]|S,B) \approx P'([C]|A,B). \tag{7} \]

According to Eq. (7) it no longer seems that finding the coincidence C realized provides any strong support for the alternative (varying gravity) theory A over the standard theory S. Since Eq. (6) plausibly carries over from \(P(\,)\) to \(P'(\,)\), we have

\[ P'(S|B) > P'(A|B). \tag{8} \]

Combining Eqs. (7) and (8) and applying Bayes’ theorem means that the posteriors will likely still privilege the standard theory over the alternative theory. This, in essence, is Dicke’s conclusion, contra Dirac.
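A toy calculation may help to see how the switch from P to \(P'\) changes the verdict. The sketch below compares posterior odds for S over A under a Dirac-style likelihood assignment and under a Dicke-style one. Every number is a hypothetical placeholder, and the function name is an assumption introduced here.

```python
from fractions import Fraction


def posterior_odds_s_over_a(prior_odds, like_s, like_a):
    """Posterior odds for S over A: prior odds times likelihood ratio."""
    return prior_odds * (like_s / like_a)


# Hypothetical prior odds favouring standard cosmology S over Dirac's A.
prior_odds = Fraction(9, 1)

# Dirac-style assignment P: the coincidence C is surprising on S
# but expected on A.
dirac = posterior_odds_s_over_a(prior_odds, Fraction(1, 1000), Fraction(1))

# Dicke-style assignment P': since observers depend on mainline stars,
# [C] is roughly equally expected on S and on A.
dicke = posterior_odds_s_over_a(prior_odds, Fraction(19, 20), Fraction(1))

print("Dirac-style posterior odds S : A =", float(dirac))
print("Dicke-style posterior odds S : A =", float(dicke))
```

Under the Dirac-style assignment the posterior odds drop below 1, so A overtakes S despite its lower prior. Under the Dicke-style assignment the odds stay close to the prior odds and S remains favoured.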

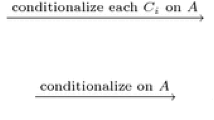

Whether or not one finds Dicke’s reasoning compelling, what matters for the purposes of this paper is that this type of anthropic reasoning differs from the standard fine-tuning argument for the multiverse, as reviewed in Sect. 2, in how it treats the information that the conditions are life-friendly in our universe. Dicke/Carter-style reasoning uses it as part of the background knowledge B, whereas the standard fine-tuning argument for the multiverse uses it as the information whose evidential impact is assessed in the light of the fine-tuning considerations, i.e. not as background knowledge. As we saw in Sect. 2, the standard fine-tuning argument for the multiverse is potentially susceptible to the inverse gambler’s fallacy charge. The following section develops a new argument from fine-tuning for life for a multiverse that parallels Dicke/Carter-style anthropic reasoning inasmuch as it treats the information that our universe is life-friendly as background knowledge. This makes the new argument by construction immune to the inverse gambler’s fallacy charge.

4 A New Fine-Tuning Argument for the Multiverse

The basic idea of the new fine-tuning argument for the multiverse is that the fine-tuning considerations contribute to a partial erosion of the main theoretical advantage that empirically adequate single-universe theories tend to have over empirically adequate multiverse theories, namely, that their empirical consequences are far more specific. In what follows I give an exposition of the new argument.

The new argument contrasts multiverse theories and single-universe theories with respect to their abilities to account for the measured value of some numerical parameter \(\lambda \). The parameter \(\lambda \) can be thought of as collectively encoding relevant aspects of the laws and constants.

For the sake of simplicity, I assume that there is only one fundamental multiverse theory \(T_M\) worth taking seriously. This \(T_M\) should be empirically adequate in the very weak sense that it is compatible with the existence of at least some (sub-)universe where the parameter \(\lambda \) has a value \(\lambda _0\) that is consistent with our measurements in this universe. Next, I assume that, as the main rival to \(T_M\), there is only one candidate fundamental single-universe theory \(T_U^{\lambda _0}\) worth taking seriously. This should also be empirically adequate, which means that the value \(\lambda _0\) that it ascribes to the parameter \(\lambda \) over the entire universe must be one that our measurements in this universe are consistent with.

Let us suppose now that \(\lambda \) is a parameter for which physicists come up with considerations F according to which its value requires fine-tuning to be compatible with life. Thus, only values very close to \(\lambda _0\) lead to a life-friendly universe. Do those considerations F make it rational to increase one’s credence in the multiverse theory \(T_M\)?

To answer this question, let us first consider our evidential situation prior to taking into account the considerations F according to which \(\lambda \) requires fine-tuning for life. An evidential advantage that we can expect the single-universe theory \(T_U^{\lambda _0}\) to have over the multiverse \(T_M\) is that it makes a highly specific and, as it turns out, empirically adequate “prediction” of the value of \(\lambda \). The multiverse theory \(T_M\), in contrast, entails the existence of universes with many different values of \(\lambda \). Observing any of those values in our own universe would have been compatible with \(T_M\). This reflects the main methodological drawback of multiverse theories, often emphasized by their critics, that they tend to make few testable predictions, if any.

One can express this consideration in Bayesian terms, denoting by “\([\lambda _0]\)” the proposition “The value of \(\lambda \) is \(\lambda _0\) in our own universe.” If \(T_U^{\lambda _0}\) holds, finding the value of \(\lambda \) to be \(\lambda _0\) is guaranteed, whereas if \(T_M\) holds, finding \(\lambda _0\) is by no means guaranteed because one might be in a sub-universe where the value of \(\lambda \) is different. So, in terms of subjective probabilities and assuming that we have been able to specify suitable background information \(B_l\) which includes that our universe is life-friendly but leaves open the value of \(\lambda \):

\[ P([\lambda_0]|T_M,B_l) < P([\lambda_0]|T_U^{\lambda_0},B_l), \tag{9} \]

with the inequality possibly being substantial.

It is interesting to note that \(T_M\) may have comparative advantages over \(T_U^{\lambda _0}\) that allow it to partially compensate for the drawback encoded in Eq. (9), even before taking account of the fine-tuning considerations F. For many constants of fundamental physics whose values are known by observation, physicists have not found any theoretical reason as to why the actual values might be somehow systematically preferred. Notably, in the light of standard criteria of theory choice such as elegance, simplicity, and “naturalness” (see [35] and [7, Sect. 5] for introductions to the latter notion aimed at philosophers) one would have expected at least some constants to have very different values [36]. The single-universe theory \(T_U^{\lambda _0}\) may therefore not rank very high in the light of these theoretical virtues. One can imagine, in contrast, a multiverse theory \(T_M\) that is conceptually elegant and has only few, if any, systematically unconstrained constants as input. In that scenario (which, however, is not required for the new fine-tuning argument for the multiverse to go through) it may be plausible to assign a larger prior to \(T_M\) than to \(T_U^{\lambda _0}\):

\[ P(T_M|B_l) > P(T_U^{\lambda_0}|B_l). \tag{10} \]

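Due to Bayes’ theorem, the result of the competition between \(T_M\) and \(T_U^{\lambda _0}\) is encoded in the ratio:

\[ \frac{P(T_M|[\lambda_0],B_l)}{P(T_U^{\lambda_0}|[\lambda_0],B_l)} = \frac{P([\lambda_0]|T_M,B_l)}{P([\lambda_0]|T_U^{\lambda_0},B_l)}\,\frac{P(T_M|B_l)}{P(T_U^{\lambda_0}|B_l)}. \tag{11} \]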

How the competition between \(T_M\) and \(T_U^{\lambda _0}\) plays out numerically will of course depend on the specific empirical considerations based on which all the probabilities appearing in Eqs. (9) and (10) are assigned.

Let us now turn to assessing the impact of the fine-tuning considerations F. These considerations reveal, to recall, that the value of \(\lambda \) must be very close to \(\lambda _0\) for there to be life. Thus they imply that our background knowledge \(B_l\) (which, in analogy to Dicke/Carter-style reasoning, now includes the proposition that our universe is life-friendly) constrains the values of \(\lambda \) that we could possibly have found to a very narrow range around \(\lambda _0\). So, effectively, what the fine-tuning considerations show is that based on \(B_l\) alone we could have predicted that we will find a value of \(\lambda \) that is (very close to) \(\lambda _0\). Specifically, if there is a multiverse as entailed by \(T_M\), all life forms in it that are capable of making measurements of \(\lambda \) will find values very close to \(\lambda _0\). Using Bayesian language we can take this insight into account by reconsidering the conditional probability of finding \(\lambda _0\) given \(T_M\) in the light of the fine-tuning considerations F and assigning it a larger value than while F was still ignored. So, plausibly, if we denote by “\(P^F([\lambda _0]|T_M,B_l)\)” the revised version of \(P([\lambda _0]|T_M,B_l)\), now assigned in the light of the fine-tuning considerations F, we have

\[ P^F([\lambda_0]|T_M,B_l) > P([\lambda_0]|T_M,B_l). \tag{12} \]

The notation “\(P^F([\lambda _0]|T_M,B_l)\)” is used instead of “\(P([\lambda _0]|T_M,B_l,F)\)” because it is doubtful whether taking into account the fine-tuning considerations F can be modelled by Bayesian conditioning. After all, considering F is not a matter of gaining any new evidence but amounts to better understanding the empirical consequences of the theories \(T_M\) and \(T_U\), namely, that they are compatible with the existence of life only for very specific configurations of the parameter \(\lambda \).

In contrast, the conditional probabilities \(P^F([\lambda _0]|T_U^{\lambda _0},B_l)\) and \(P([\lambda _0]|T_U^{\lambda _0},B_l)\) cannot possibly differ from each other as they are both 1. As a consequence of Eq. (12), the revised version of the inequality Eq. (9), taking into account the fine-tuning considerations F, will be less pronounced than the original inequality, i.e. \(P^F([\lambda _0]|T_M,B_l)\) will be less tiny compared with \(P^F([\lambda _0]|T_U^{\lambda _0},B_l)\) than \(P([\lambda _0]|T_M,B_l)\) was compared with \(P([\lambda _0]|T_U^{\lambda _0},B_l)\).

Now the one key assumption on which the new fine-tuning argument for the multiverse rests is that the fine-tuning considerations F do not have any further, independent, impact on our assessment of the two main rival theories’ comparative virtues. Using Bayesian terminology, this key assumption translates into the statement that the ratio of the priors \(P^F(T_M|B_l)\) and \(P^F(T_U^{\lambda _0}|B_l)\) assigned after taking into account the fine-tuning considerations F will not differ markedly from the ratio of the originally assigned priors \(P(T_M|B_l)\) and \(P(T_U^{\lambda _0}|B_l)\), i.e.

\[ \frac{P^F(T_M|B_l)}{P^F(T_U^{\lambda_0}|B_l)} \approx \frac{P(T_M|B_l)}{P(T_U^{\lambda_0}|B_l)}. \tag{13} \]

Arguably, Eq. (13) is a plausible assumption: the fine-tuning considerations F are about the physico-chemical pre-conditions for the existence of life. These are ostensibly unrelated to the systematic virtues and vices of \(T_M\) and \(T_U^{\lambda _0}\) based on which the assignments of the priors \(P(T_M|B_l)\) and \(P(T_U^{\lambda _0}|B_l)\) were made. There seems to be no systematic reason as to why the fine-tuning considerations F would inevitably privilege \(T_U^{\lambda _0}\) with respect to \(T_M\) in any relevant way to be reflected in the priors.

If Eq. (13) is assumed, the inequality Eq. (12) encodes the only major shift in probability assignments when taking the fine-tuning considerations F into account. Thus we obtain

\[ P^F(T_M|[\lambda_0],B_l) > P(T_M|[\lambda_0],B_l), \tag{14} \]

i.e. the fine-tuning considerations increase our credence in the multiverse theory \(T_M\). Depending on the result of the original competition between \(T_M\) and \(T_U^{\lambda _0}\) as encoded in Eq. (11) (i.e. depending on whether we had an attractive multiverse theory \(T_M\) to begin with) and on how pronounced the inequality Eq. (12) is, we may end up with a higher credence in \(T_M\) than in \(T_U^{\lambda _0}\). This completes the new fine-tuning argument for the multiverse.
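The structure of the new argument can be illustrated with a toy model. The sketch below is not part of the argument itself and rests on strong simplifying assumptions, all of them hypothetical. The multiverse realises a finite number of distinct values of \(\lambda \). Before F is considered, credence given \(T_M\) and \(B_l\) is spread evenly over all of them. After F, it is spread evenly over the few life-friendly values only. The ratio of priors is left unchanged, in line with the key assumption. The even spreading stands in for a measure over universes, which the text does not specify.

```python
from fractions import Fraction

# Hypothetical toy numbers (assumptions for illustration only).
N_VALUES = 1000               # distinct lambda values realised under T_M
N_LIFE_FRIENDLY = 2           # values compatible with life, all near lambda_0
PRIOR_ODDS = Fraction(3, 1)   # P(T_M|B_l) / P(T_U|B_l), unchanged by F


def posterior_odds(like_m, like_u=Fraction(1), prior_odds=PRIOR_ODDS):
    """Posterior odds T_M : T_U after finding lambda_0.

    like_u defaults to 1 because the single-universe theory
    guarantees the value lambda_0.
    """
    return (like_m / like_u) * prior_odds


def to_probability(odds):
    """Convert odds to a probability, assuming the two theories are exhaustive."""
    return odds / (1 + odds)


# Before F: no information about which values allow life.
like_m_before = Fraction(1, N_VALUES)
# After F: B_l (our universe is life-friendly) narrows the candidates.
like_m_after = Fraction(1, N_LIFE_FRIENDLY)

odds_before = posterior_odds(like_m_before)
odds_after = posterior_odds(like_m_after)

print("credence in T_M ignoring F:", float(to_probability(odds_before)))
print("credence in T_M given F:   ", float(to_probability(odds_after)))
```

In this toy setting the credence in \(T_M\) rises once F is taken into account, because the likelihood of \([\lambda _0]\) given \(T_M\) grows while the ratio of priors stays fixed. Whether \(T_M\) ends up ahead depends on the assumed prior odds and on how narrow the life-friendly range is.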

5 Main Attraction and Limitation of the New Argument

The main attraction of the new fine-tuning argument for the multiverse is that, unlike the old argument, it is not susceptible to the inverse gambler’s fallacy charge: since it treats the life-friendliness of our universe as background knowledge rather than as evidence whose impact we assess, one cannot fault it for failing to consider that the existence of many other universes would not make it more likely that our universe is right for life. Another attraction of the new argument in comparison with the old one is that, whereas the old one required assigning prior probabilities \(P(T_U|B_0)\) and \(P(T_M|B_0)\) from the curious vantage point of someone who is supposedly unaware that there is a life-friendly universe, the new one does not do so.

The main limitation of the new argument is its reliance on the assumption that the fine-tuning considerations F do not (more than minimally) affect the trade-off between the leading multiverse theory \(T_M\) and the leading single-universe theory \(T_U^{\lambda _0}\) inasmuch as encoded in the assignment of priors \(P(T_M|B_l)\) and \(P(T_U^{\lambda _0}|B_l)\). But in the absence of convincing reasons to doubt this assumption it seems reasonable to suspect that the fine-tuning considerations may indeed make it rational to at least somewhat increase our degree of belief in the hypothesis that we live in a multiverse.

Notes

1. Somewhat curiously, Hacking [23] himself regards only those versions of the argument from fine-tuning for the multiverse as guilty of the inverse gambler’s fallacy that infer the existence of multiple universes in a temporal sequence.

2. I leave it open here whether Dicke’s account really qualifies as a genuine scientific explanation of the large number coincidence. See [34, p. 309] for the (plausible, I think) view that it should not be regarded as such.

References

1. Carr, B.J., Rees, M.J.: The anthropic principle and the structure of the physical world. Nature 278, 605 (1979)

2. Hogan, C.J.: Why the universe is just so? Rev. Mod. Phys. 72, 1149 (2000)

3. Hogan, C.J.: Quarks, electrons, and atoms in closely related universes. In: Carr, B. (ed.) Universe or Multiverse?, pp. 221–230. Cambridge University Press, Cambridge (2007)

4. Barr, S.M., Khan, A.: Anthropic tuning of the weak scale and of \(m_u/m_d\) in two-Higgs-doublet models. Phys. Rev. D 76, 045002 (2007)

5. Rees, M.: Just Six Numbers: The Deep Forces That Shape the Universe. Basic Books, New York (2000)

6. Lewis, G.F., Barnes, L.A.: A Fortunate Universe: Life in a Finely Tuned Cosmos. Cambridge University Press, Cambridge (2016)

7. Friederich, S.: Fine-tuning. In: Zalta, E.N. (ed.) The Stanford Encyclopedia of Philosophy, spring 2018 edn. Metaphysics Research Lab, Stanford University, Stanford (2018)

8. Adams, F.C., Grohs, E.: On the habitability of universes without stable deuterium. Astropart. Phys. 91, 90 (2017)

9. Barnes, L.A.: The fine-tuning of the universe for intelligent life. Publ. Astron. Soc. Aust. 29, 529 (2012)

10. Barnes, L.A.: Fine-tuning in the context of Bayesian theory testing. Eur. J. Philos. Sci. 8, 253 (2018)

11. Uzan, J.P.: The fundamental constants and their variation: observational and theoretical status. Rev. Mod. Phys. 75, 403 (2003)

12. Susskind, L.: The Cosmic Landscape: String Theory and the Illusion of Intelligent Design. Back Bay Books, New York (2005)

13. Dawid, R.: String Theory and the Scientific Method. Cambridge University Press, Cambridge (2013)

14. Guth, A.H.: Eternal inflation and its implications. J. Phys. A 40, 6811 (2007)

15. Carter, B.: Large number coincidences and the anthropic principle in cosmology. In: Longair, M.S. (ed.) Confrontation of Cosmological Theory with Astronomical Data, pp. 291–298. Reidel, Dordrecht (1974)

16. Greene, B.: The Hidden Reality. Vintage, New York (2011)

17. Tegmark, M.: Our Mathematical Universe: My Quest for the Ultimate Nature of Reality. Knopf, New York (2014)

18. Leslie, J.: Universes. Routledge, London (1989)

19. Smart, J.J.C.: Our Place in the Universe: A Metaphysical Discussion. Blackwell, Oxford (1989)

20. Bradley, D.J.: Multiple universes and observation selection effects. Am. Philos. Q. 46, 61 (2009)

21. White, R.: Fine-tuning and multiple universes. Noûs 34, 260 (2000)

22. Landsman, K.: The fine-tuning argument: exploring the improbability of our own existence. In: Landsman, K., van Wolde, E. (eds.) The Challenge of Chance, pp. 111–128. Springer, Heidelberg (2016)

23. Hacking, I.: The inverse gambler’s fallacy: the argument from design. The anthropic principle applied to Wheeler universes. Mind 96, 331 (1987)

24. Dicke, R.H.: Dirac’s cosmology and Mach’s principle. Nature 192, 440 (1961)

25. Juhl, C.: Fine-tuning and old evidence. Noûs 41, 550 (2007)

26. Friederich, S.: Fine-tuning as old evidence, double-counting, and the multiverse. International Studies in the Philosophy of Science (fthc)

27. Draper, K., Pust, J.: Probabilistic arguments for multiple universes. Pac. Philos. Q. 88, 288 (2007)

28. McGrath, P.J.: The inverse gambler’s fallacy and cosmology: a reply to Hacking. Mind 97, 331 (1988)

29. Leslie, J.: No inverse gambler’s fallacy in cosmology. Mind 97, 269 (1988)

30. Bostrom, N.: Anthropic Bias: Observation Selection Effects in Science and Philosophy. Routledge, New York (2002)

31. Manson, N.A., Thrush, M.J.: Fine-tuning, multiple universes, and the ‘This Universe’ objection. Pac. Philos. Q. 84, 67 (2003)

32. Juhl, C.: Fine-tuning, many worlds, and the ‘Inverse Gambler’s Fallacy’. Noûs 39, 337 (2005)

33. Dirac, P.A.M.: A new basis for cosmology. Proc. R. Soc. A 165, 199 (1938)

34. Earman, J.: The SAP also rises: a critical examination of the anthropic principle. Am. Philos. Q. 24, 307 (1987)

35. Williams, P.: Naturalness, the autonomy of scales, and the 125 GeV Higgs. Stud. Hist. Philos. Mod. Phys. 51, 82 (2015)

36. Donoghue, J.F.: The fine-tuning problems of particle physics and anthropic mechanisms. In: Carr, B. (ed.) Universe or Multiverse?, pp. 231–246. Cambridge University Press, Cambridge (2007)

Acknowledgements

I am grateful to Jan-Willem Romeijn for many useful discussions of the ideas presented here. Stimulating feedback was provided by audiences in Munich, Turku, Sydney, Brisbane, and Bristol. Work on this article was financed by the Netherlands Organization for Scientific Research (NWO), Veni Grant 275-20-065.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Friederich, S. A New Fine-Tuning Argument for the Multiverse. Found Phys 49, 1011–1021 (2019). https://doi.org/10.1007/s10701-019-00246-2
