Abstract

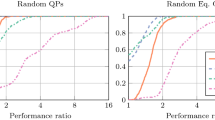

We consider the variational inequality problem formed by a general set-valued maximal monotone operator and a possibly unbounded “box” in \(\mathbb{R}^n\), and study its solution by proximal methods whose distance regularizations are coercive over the box. We prove convergence for a class of double regularizations generalizing a previously proposed class of Auslender et al. Using these results, we derive a broadened class of augmented Lagrangian methods. We point out some connections between these methods and earlier work on “pure penalty” smoothing methods for complementarity; this connection leads to a new form of augmented Lagrangian based on the “neural” smoothing function. Finally, we computationally compare this new kind of augmented Lagrangian to three previously known varieties on the MCPLIB problem library, and show that the neural approach offers some advantages. In these tests, we also consider primal-dual approaches that include a primal proximal term. Such a stabilizing term tends to slow down the algorithms but makes them more robust.
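The abstract builds its new augmented Lagrangian on the “neural” smoothing function from the pure-penalty smoothing literature (see Chen and Mangasarian in the references). As a minimal sketch, assuming the standard form \(p(x,\beta) = x + \beta^{-1}\log(1 + e^{-\beta x})\) with \(\beta > 0\) from that literature, the snippet below evaluates this smooth approximation of \(\max(x, 0)\) and its derivative. It does not reproduce the paper's augmented Lagrangian, and the function names are hypothetical.

```python
import math


def plus(x: float) -> float:
    """The plus function max(x, 0) that the neural function smooths."""
    return max(x, 0.0)


def neural_smooth_plus(x: float, beta: float) -> float:
    """Neural smoothing of max(x, 0), assumed form:
        p(x, beta) = x + log(1 + exp(-beta * x)) / beta,  beta > 0.
    Algebraically equal to log(1 + exp(beta * x)) / beta; the branch below
    picks whichever form keeps the exponent non-positive, so exp never overflows.
    """
    if beta <= 0:
        raise ValueError("beta must be positive")
    t = beta * x
    if t >= 0:
        return x + math.log1p(math.exp(-t)) / beta
    return math.log1p(math.exp(t)) / beta


def neural_smooth_plus_grad(x: float, beta: float) -> float:
    """Derivative of p with respect to x: the logistic sigmoid of beta * x."""
    if beta <= 0:
        raise ValueError("beta must be positive")
    t = beta * x
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


if __name__ == "__main__":
    # Compare the worst-case gap over a grid on [-5, 5] with log(2) / beta.
    grid = [i / 100 - 5 for i in range(1001)]
    for beta in (1.0, 10.0, 100.0):
        gap = max(neural_smooth_plus(x, beta) - plus(x) for x in grid)
        print(f"beta={beta:6.1f}  max gap={gap:.6f}  log(2)/beta={math.log(2) / beta:.6f}")
```

The gap \(p(x,\beta) - \max(x,0)\) is positive and largest at \(x = 0\), where it equals \((\log 2)/\beta\), so increasing \(\beta\) tightens the approximation while keeping the function infinitely differentiable.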

References

H. Attouch and M. Théra, “A general duality principle for the sum of two operators,” Journal of Convex Analysis, vol. 3, pp. 1–24, 1996.

A. Auslender, M. Teboulle, and S. Ben-Tiba, “A logarithmic-quadratic proximal method for variational inequalities,” Computational Optimization and Applications, vol. 12, pp. 31–40, 1999.

A. Auslender, M. Teboulle, and S. Ben-Tiba, “Interior proximal and multiplier methods based on second order homogeneous kernels,” Mathematics of Operations Research, vol. 24, pp. 645–668, 1999.

A. Auslender and M. Teboulle, “Lagrangian duality and related multiplier methods for variational inequality problems,” SIAM Journal on Optimization, vol. 10, pp. 1097–1115, 2000.

D.P. Bertsekas, Nonlinear Programming. Athena Scientific, 1995.

R. Burachik, M.G. Drummond, A.N. Iusem, and B.F. Svaiter, “Full convergence of the steepest descent method with inexact line searches,” Optimization, vol. 10, pp. 1196–1211, 1995.

J.V. Burke and M.C. Ferris, “Weak sharp minima in mathematical programming,” SIAM Journal on Control and Optimization, vol. 31, pp. 1340–1356, 1993.

Y. Censor and S.A. Zenios, “Proximal minimization algorithm with D-functions,” Journal of Optimization Theory and Applications, vol. 73, pp. 451–464, 1992.

Y. Censor, A.N. Iusem, and S.A. Zenios, “An interior point method with Bregman functions for the variational inequality problem with paramonotone operators,” Mathematical Programming, vol. 81, pp. 373–400, 1998.

C. Chen and O.L. Mangasarian, “A class of smoothing functions for nonlinear and mixed complementarity problems,” Computational Optimization and Applications, vol. 5, pp. 97–138, 1996.

S.P. Dirkse and M.C. Ferris, “MCPLIB: A collection of nonlinear mixed complementarity problems,” Optimization Methods and Software, vol. 2, pp. 319–345, 1995.

S.P. Dirkse and M.C. Ferris, “Modeling and solution environments for MPEC: GAMS & MATLAB,” in Reformulation: Nonsmooth, Piecewise Smooth, Semismooth and Smoothing Methods (Lausanne, 1997), Applied Optimization, vol. 22. Kluwer Academic Publishers, Dordrecht, 1999, pp. 127–147.

E.D. Dolan and J.J. Moré, “Benchmarking optimization software with performance profiles,” Mathematical Programming, vol. 91, no. 2, pp. 201–213, 2002.

J. Eckstein, “Nonlinear proximal point algorithms using Bregman functions, with applications to convex programming,” Mathematics of Operations Research, vol. 18, pp. 202–226, 1993.

J. Eckstein, “Approximate iterations in Bregman-function-based proximal algorithms,” Mathematical Programming, vol. 83, pp. 113–123, 1998.

J. Eckstein and M.C. Ferris, “Smooth methods of multipliers for complementarity problems,” Mathematical Programming, vol. 86, pp. 65–90, 1999.

M.C. Ferris, “Finite termination of the proximal point algorithm,” Mathematical Programming, vol. 50, pp. 359–366, 1991.

P.E. Gill, W. Murray, and M.H. Wright, Practical Optimization. Academic Press, 1981.

A.N. Iusem, “On some properties of paramonotone operators,” Journal of Convex Analysis, vol. 5, pp. 269–278, 1998.

C.T. Kelley, Iterative Methods for Linear and Nonlinear Equations. SIAM: Philadelphia, 1995.

K.C. Kiwiel, “On the twice differentiable cubic augmented Lagrangian,” Journal of Optimization Theory and Applications, vol. 88, pp. 233–236, 1996.

L. Lewin (Ed.), Structural Properties of Polylogarithms. Mathematical Surveys and Monographs, vol. 37. American Mathematical Society: Providence, 1991.

U. Mosco, “Dual variational inequalities,” Journal of Mathematical Analysis and Applications, vol. 40, pp. 202–206, 1972.

D.J. Newman, A Problem Seminar. Springer-Verlag: New York, 1982.

R.A. Polyak, “Nonlinear rescaling vs. smoothing technique in convex optimization,” Mathematical Programming, vol. 92, pp. 197–235, 2002.

S.M. Robinson, “Composition duality and maximal monotonicity,” Mathematical Programming, vol. 85, pp. 1–13, 1999.

R.T. Rockafellar, Convex Analysis. Princeton University Press: Princeton, 1970.

R.T. Rockafellar, “Monotone operators and the proximal point algorithm,” SIAM Journal on Control and Optimization, vol. 14, pp. 877–898, 1976.

R.T. Rockafellar, “Augmented Lagrangians and applications of the proximal point algorithm in convex programming,” Mathematics of Operations Research, vol. 1, pp. 97–116, 1976.

R.T. Rockafellar and R.J.-B. Wets, Variational Analysis. Springer-Verlag: Berlin, 1998.

P.J.S. Silva, J. Eckstein, and C. Humes Jr., “Rescaling and stepsize selection in proximal methods using separable generalized distances,” SIAM Journal on Optimization, vol. 12, pp. 238–261, 2001.

M. Teboulle, “Entropic proximal mappings with applications to nonlinear programming,” Mathematics of Operations Research, vol. 17, pp. 670–690, 1992.

P. Tseng and D.P. Bertsekas, “On the convergence of the exponential multiplier method for convex programming,” Mathematical Programming, vol. 60, pp. 1–19, 1993.

A.E. Xavier, “Hyperbolic Penalty: A New Method for Solving Optimization Problems,” M.Sc. Thesis, COPPE, Federal University of Rio de Janeiro, Brazil (in Portuguese), 1982.

Additional information

This author was partially supported by CNPq, Grant PQ 304133/2004-3 and PRONEX-Optimization.

About this article

Cite this article

Silva, P.J.S., Eckstein, J. Double-Regularization Proximal Methods, with Complementarity Applications. Comput Optim Applic 33, 115–156 (2006). https://doi.org/10.1007/s10589-005-3065-0

DOI: https://doi.org/10.1007/s10589-005-3065-0