Abstract

In order to be convergent, linear multistep methods must be zero stable. While constant step size theory was established in the 1950s, zero stability on nonuniform grids is less well understood. Here we investigate zero stability on compact intervals and smooth nonuniform grids. In practical computations, step size control can be implemented using smooth (small) step size changes. The resulting grid \(\{t_n\}_{n=0}^N\) can be modeled as the image of an equidistant grid under a smooth deformation map, i.e., \(t_n = {\varPhi }(\tau _n)\), where \(\tau _n = n/N\) and the map \({\varPhi }\) is monotonically increasing with \({\varPhi }(0)=0\) and \({\varPhi }(1)=1\). The model is justified for any fixed order method operating in its asymptotic regime when applied to smooth problems, since the step size is then determined by the (smooth) principal error function which determines \({\varPhi }\), and a tolerance requirement which determines N. Given any strongly stable multistep method, there is an \(N^*\) such that the method is zero stable for \(N>N^*\), provided that \({\varPhi }\in C^2[0,1]\). Thus zero stability holds on all nonuniform grids such that adjacent step sizes satisfy \(h_n/h_{n-1} = 1 + {\mathrm {O}}(N^{-1})\) as \(N\rightarrow \infty \). The results are exemplified for BDF-type methods.

1 Introduction

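A linear multistep method, discretizing an initial value problem \(\dot{y} = f(t,y)\), is represented by a difference equation of order k,

\[ \sum _{j=0}^{k} \alpha _j y_{n+j} = h \sum _{j=0}^{k} \beta _j f(t_{n+j},y_{n+j}). \qquad (1.1) \]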

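Here the step size \(h = t_{n+k}-t_{n+k-1} > 0\) is assumed constant. We denote the forward shift operator by \({\mathrm {E}}\) and write the method \(h^{-1}\rho ({\mathrm {E}})y_n = \sigma ({\mathrm {E}})f(t_n,y_n)\), with generating polynomials

\[ \rho (\zeta ) = \sum _{j=0}^{k} \alpha _j \zeta ^j = (\zeta -1)\sum _{j=0}^{k-1} \gamma _j \zeta ^j, \qquad \sigma (\zeta ) = \sum _{j=0}^{k} \beta _j \zeta ^j. \qquad (1.2) \]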

These are arranged to have no common factors, and coefficients are normalized by \(\sigma (1)=1\). Zero stability is necessary for convergence, and requires that all roots of \(\rho (\zeta )=0\) lie inside or on the unit circle, with no multiple unimodular roots. Since consistent methods have \(\rho (\zeta ) = (\zeta -1)\cdot \rho _R(\zeta )\) as indicated above, zero stability is a condition on the extraneous operator \(\rho _R(\zeta )\). Its zeros are referred to as the extraneous roots. Strong zero stability requires that all extraneous roots are strictly inside the unit circle; this is a condition on the k coefficients \(\{\gamma _j\}_{j=0}^{k-1}\).
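The deflation and the root condition can be checked mechanically. The following is a minimal sketch, not part of the original analysis: the helper names `deflate`, `extraneous_roots` and `strongly_zero_stable` are hypothetical, coefficients are stored in ascending powers of \(\zeta \), and only extraneous polynomials of degree 1 or 2 are handled, which suffices for BDF2, BDF3 and the explicit midpoint method.

```python
import cmath
from fractions import Fraction as F

def deflate(alpha):
    """Divide rho(z) = sum(alpha[j] * z**j) by (z - 1).

    Returns the coefficients gamma[j] of rho_R in ascending powers.
    """
    if sum(alpha) != 0:
        raise ValueError("preconsistency rho(1) = 0 is required")
    k = len(alpha) - 1
    gamma = [F(0)] * k
    gamma[k - 1] = alpha[k]
    for j in range(k - 1, 0, -1):
        # matching powers of z gives gamma[j-1] - gamma[j] = alpha[j]
        gamma[j - 1] = alpha[j] + gamma[j]
    return gamma

def extraneous_roots(gamma):
    """Roots of rho_R for degree 1 or 2 only (an assumption of this sketch)."""
    g = [float(c) for c in gamma]
    if len(g) == 2:
        return [complex(-g[0] / g[1])]
    if len(g) == 3:
        disc = cmath.sqrt(g[1] ** 2 - 4 * g[2] * g[0])
        return [(-g[1] + disc) / (2 * g[2]), (-g[1] - disc) / (2 * g[2])]
    raise ValueError("only degrees 1 and 2 are handled in this sketch")

def strongly_zero_stable(alpha):
    """True if all extraneous roots lie strictly inside the unit circle."""
    return all(abs(z) < 1 for z in extraneous_roots(deflate(alpha)))

# Coefficients alpha_0, ..., alpha_k, normalized so that sigma(1) = 1.
bdf2 = [F(1, 2), F(-2), F(3, 2)]
bdf3 = [F(-1, 3), F(3, 2), F(-3), F(11, 6)]
midpoint = [F(-1, 2), F(0), F(1, 2)]  # explicit midpoint, rho = (z^2 - 1)/2

for name, alpha in [("BDF2", bdf2), ("BDF3", bdf3), ("midpoint", midpoint)]:
    print(name, deflate(alpha), strongly_zero_stable(alpha))
```

For the explicit midpoint method the single extraneous root sits on the unit circle, so the check reports weak rather than strong stability.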

Since the extraneous operator is void in Adams–Moulton and Adams–Bashforth methods, these methods are trivially zero stable for variable steps [9, p. 407]. The most important case having a nontrivial extraneous operator is the BDF methods, known to be zero stable for \(1\le k\le 6\), cf. [5, 9, p. 381]. Some (nonstiff) method suites, such as the dcBDF and IDC methods [1], are based on the BDF \(\rho \) operator, and have the same zero stability properties for \(k\ge 2\). Other examples of nontrivial extraneous operators are the weakly stable explicit midpoint method (two-step method of order 2) and the lesser used weakly stable implicit Milne methods [9, p. 363].

Adaptive computations are of particular importance for stiff problems, as widely varying time scales call for correspondingly large variations in step size. Of the methods mentioned above, only the BDF family has unbounded stability regions specifically designed for stiff problems. Thus the BDF methods must handle step size variations well, in spite of their extraneous operator, explaining why studies of variable step size zero stability mostly center on the BDF methods [9, p. 402ff].

Although there are several ways to construct multistep methods on nonuniform grids, we shall only consider the grid-independent representation of multistep methods [2]. This represents a multistep method on any nonuniform grid using a fixed parametrization, defining a computational process where the coefficients \(\alpha _{j,n}, \beta _{j,n}\) vary along the solution and depend on \(k-1\) consecutive step size ratios. For simplicity, but without loss of generality, let us consider a quadrature problem \(\dot{y} = f(t)\) on [0, 1] using variable steps. The multistep method (1.1) becomes

where \(h_{n+k-1} = t_{n+k}-t_{n+k-1}\). Letting \(y\in C^{p+1}\) denote the exact solution, we obtain

provided that y is sufficiently differentiable, and where \(\vartheta \in [t_n,t_{n+k}]\). Subtracting (1.4) from (1.3) gives

where the global error at \(t_n\) is \(e_{n} = y_n - y(t_n)\). Here, the local error \(c_n h^{p}_{n+k-1} y^{(p+1)}(\vartheta )\) goes to zero if \(h_{n+k-1}\rightarrow 0\) (consistency), but convergence (\(e_n\rightarrow 0\)) in addition requires that solutions to the homogeneous problem

remain bounded. Thus zero stability on nonuniform grids is investigated in terms of the problem \(\dot{y} = 0\) and finding sufficient conditions on the grid partitioning of [0, 1], such that the numerical solution \(\{y_n\}_0^N\) is uniformly bounded as \(N\rightarrow \infty \). This problem has been approached in several different ways, see e.g. [3, 4, 7, 8], usually with the aim of finding precise bounds on the step size ratios, such that the method remains convergent. Since the method coefficients change from step to step, most analyses become highly complicated. For example, the problem can be addressed by studying infinite products of companion matrices associated with the recursion (1.6) [9, p. 403], or by considering the nonuniform grid as a “perturbation” of an equidistant grid, by letting the step size vary smoothly [6].

An overview is given in [9, p. 402ff], but the classical results focus on the existence of local step size ratio bounds that guarantee zero stability. By contrast, our focus is on grid smoothness. Using (near) Toeplitz operators, our aim is to develop a proof methodology for adaptive computation, aligned with the formal convergence analysis in the Lax–Stetter framework, cf. [15]. We let the grid points be given by a strictly increasing sequence \(\{t_n\}_0^N\) and define the step sizes by \(h_n = t_{n+1}-t_n\), requiring that \(h_n\rightarrow 0\) for every n as \(N\rightarrow \infty \). If the grid is smooth enough, then any multistep method which is strongly zero stable on a uniform grid is also zero stable on the nonuniform grid for N large enough.

The main result has the following structure. Every multistep method is associated with two constants, \(C_0\) and \(C_k\), where the former only depends on constant step size theory, and is bounded if the method is strongly zero stable on a uniform grid. The second constant depends on the first order variation of the method’s coefficients for infinitesimal step size variations, and is computable using a suitable computer algebra system such as Maple or Mathematica. Finally, grid smoothness will be characterized in terms of a differentiable grid deformation map, requiring a bound on a function of the form \(\varphi '/\varphi \). Under these conditions, the method is zero stable on the non-uniform grid provided that

This separates method properties and grid properties, and only requires that the total number of steps N is large enough. The important issues are to generate a smooth step size sequence (which automatically manages step size ratios), and to use a sufficiently small error tolerance, which determines N. Although such step size sequences can easily be constructed in adaptive computation [12], most multistep codes still use comparatively large step size changes, violating the smoothness conditions required for zero stability. This has been demonstrated to be a likely cause of poor computational stability observed in practice [13]. In production codes it is often thought that frequent, small step size changes are not “worthwhile,” but the present paper and classical theory only support such step size changes.

Our approach is intended as an analysis tool for deriving a rigorous convergence proof for adaptive multistep methods, redefining practical implementation principles. A full convergence analysis of the initial value problem \(\dot{y} = f(t,y)\) requires further attention to detail, as it also involves the Lipschitz continuity of the vector field f with respect to y, as well as (for implicit methods) the solvability of equations of the form \(v = \gamma \, hf(t,v) + w\). The solvability will depend on the magnitude of the Lipschitz constant \(L[\gamma \,hf]\) or the logarithmic Lipschitz constant \(M[\gamma \,hf]\), see e.g. [14]. Likewise, error bounds will depend on these quantities. Here, however, we only focus on zero stability, which can be fully characterized in the simpler setting of a quadrature problem. We shall return to the full convergence analysis on smooth nonuniform grids in a forthcoming study.

2 Smooth nonuniform grids

If an initial value problem has a smooth solution, then the step size sequence, keeping the local error (nearly) constant, is also smooth [6, 11]. A smooth sequence is also known to be necessary in connection with e.g. Hamiltonian problems [10], as well as in finite difference methods for boundary value problems. For these reasons, we shall model nonuniform grids by a smooth deformation of an equidistant grid. We only consider compact intervals.

Adaptive computation. The asymptotic behavior of the local error per unit step in a multistep method is of the form \(l_n = ch_n^{p}y^{(p+1)}\). The most common step size control in adaptive computation aims to keep \(\Vert l_n\Vert \) constant, equal to a given local error tolerance \(\varepsilon \). Representing the step size in terms of a step size modulation function \(\mu (t)\) allows the step size at time t to be expressed as \(h = \mu (t)/N\), so that the “ideal” step size sequence can be modeled by

It follows that \(N \sim \varepsilon ^{-1/p}\). In other words, the local error tolerance determines N. By contrast, \(\mu (t)\) is determined by the problem. It is smooth if \(y^{(p+1)}(t)\) is smooth, since

In real adaptive computations, a step size control of the form \(h_n = r_{n-1}h_{n-1}\) is used, where the step ratio sequence is processed by a digital filter to generate a smooth step size sequence [12]. This may e.g. take the form

where \(l_{n-1}\) and \(l_{n-2}\) are local error estimates and \((b_1,b_2,a_1)\) are the filter parameters. The controller keeps the local error close to the tolerance \(\varepsilon \). As a consequence the step ratios will remain near 1. Further, reducing the tolerance \(\varepsilon \) increases N, reducing step sizes as well as step ratios. Thus it is justified to model a nonuniform grid by a smooth grid deformation, and such a grid can be generated using a proper filter to continually adjust the step size. It also corresponds well to the behavior observed in computational practice when such step size controllers are employed.

Modeling a smooth nonuniform grid. Let \({\varPhi }:\tau \mapsto t\) be a smooth, strictly increasing map in \(C^2[0,1]\), satisfying \({\varPhi }(0)=0\) and \({\varPhi }(1)=1\). Further, let its derivative \({\varPhi }' = {\mathrm {d}}{\varPhi }/{\mathrm {d}}\tau \) be denoted by \(\varphi \) and assume that \(\varphi '/\varphi \in L^{\infty }[0,1]\). Now, given N, let \(\tau _n = n/N\) and construct a smooth nonuniform grid \(\{t_n\}_{n=0}^N\) by

\[ t_n = {\varPhi }(\tau _n), \qquad n = 0, 1, \dots , N. \qquad (2.1) \]

Since \(t = {\varPhi }(\tau )\) we have the differential relation \({\mathrm {d}}t = \varphi (\tau )\,{\mathrm {d}}\tau \). By a discrete correspondence, mesh widths are related by \({\varDelta }t \approx \varphi (\tau )\, {\varDelta }\tau \). Thus we model the step size sequence \(\{h_n\}_{n=0}^{N-1}\) by

Hence \(h_n \rightarrow 0\) as \(N\rightarrow \infty \). This allows us to study zero stability on nonuniform grids in terms of the single-parameter limit \(N\rightarrow \infty \). This does not substantially restrict \(h_{\max }/h_{\min }\) during the overall integration, although adjacent step ratios will be close to 1.

Step ratios. The coefficients of a multistep method on a nonuniform grid depend on the ratio of adjacent step sizes. By (2.2) the step ratios \(\{r_n\}_{n=0}^{N-2}\) are given by

Hence the step ratios approach 1 as \(N\rightarrow \infty \), i.e., locally the method behaves like a constant step size method for N large enough, since we assumed \(\varphi '/\varphi \in L^{\infty }[0,1]\).
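The behavior of the step ratios is easy to observe numerically. The sketch below is an illustration under stated assumptions: the deformation map \({\varPhi }(\tau ) = (e^{a\tau }-1)/(e^{a}-1)\) with \(a = 3\) is chosen here for convenience (it has \(\varphi '/\varphi \equiv a\)) and does not come from the text, and the function names are hypothetical. Step sizes are taken as exact grid differences \(t_{n+1}-t_n\).

```python
import math

def smooth_grid(N, a=3.0):
    """Grid t_n = Phi(n/N) for the illustrative map
    Phi(tau) = (exp(a*tau) - 1) / (exp(a) - 1), which has phi'/phi = a."""
    return [math.expm1(a * n / N) / math.expm1(a) for n in range(N + 1)]

def step_ratios(t):
    """Ratios r_n = h_{n+1} / h_n of adjacent step sizes h_n = t_{n+1} - t_n."""
    h = [t[n + 1] - t[n] for n in range(len(t) - 1)]
    return [h[n + 1] / h[n] for n in range(len(h) - 1)]

def max_ratio_deviation(N, a=3.0):
    """Largest |r_n - 1| over the grid with N steps."""
    return max(abs(r - 1.0) for r in step_ratios(smooth_grid(N, a)))

for N in (50, 100, 200, 400):
    dev = max_ratio_deviation(N)
    # N * dev should settle near |phi'/phi| = a as N grows
    print(N, dev, N * dev)
```

On this grid the overall variation \(h_{\max }/h_{\min }\) is roughly \(e^{a}\approx 20\) for every N, while the deviation of adjacent ratios from 1 shrinks like \(a/N\).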

It is also of interest to represent the step size change as a relative step size increment, which, in view of (2.3), is defined by

Thus \(v_{n-1}\rightarrow 0\) as \(N\rightarrow \infty \), and in practical computations the relative step size increment is invariably small.

The assumption \(\varphi '/\varphi \in L^{\infty }[0,1]\) requires that \(\log \varphi \in C^1[0,1]\). By a stronger assumption, \(\log \varphi \in C^2[0,1]\), we can also estimate the change in the step size ratios,

where \(\varphi \) and its derivatives are evaluated at \(\tau _n\). Thus the ratio of successive step ratios approaches 1 even faster than the step ratios themselves. The interpretation is that step ratios change slowly, and there may be long strings of consecutive steps where the step size “ramps up” as the solution to the ODE gradually becomes smoother after a transient phase. This corresponds to a gradual stretching of the mesh width.

Step sizes and ratios as a function of t . Using \(t={\varPhi }(\tau )\) and \({\mathrm {d}}t = \varphi \,{\mathrm {d}}\tau \), the step size modulation function \(\mu (t)\) and the derivative \(\varphi (\tau )\) satisfy the functional relation

Differentiating (2.5) with respect to t and denoting time derivatives by a dot to distinguish them from derivatives with respect to \(\tau \), we obtain \({{\dot{\mu }}} \,{\mathrm {d}}t = \varphi ' \, {\mathrm {d}}\tau \). Hence

allowing us to express step sizes, step ratios, and relative step size increments along the solution of the differential equation, as functions of t,

Obviously, the previous assumption \(\varphi '/\varphi \in L^{\infty }[0,1]\) is equivalent to \({\dot{\mu }} \in L^{\infty }[0,1]\). Since \(\mu (t) \sim \Vert y^{(p+1)}(t)\Vert ^{-1/p}\), the assumptions on the deformation map \({\varPhi }\) are realistic and reflect problem regularity.
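As a worked illustration of the relation between \(\mu \) and \(\varphi \), consider a map chosen here purely for convenience (it is an assumption of this example, not a map used in the analysis): for a constant \(a \ne 0\), let

\[ {\varPhi }(\tau ) = \frac{e^{a\tau }-1}{e^{a}-1}, \qquad \varphi (\tau ) = \frac{a\,e^{a\tau }}{e^{a}-1}, \qquad \frac{\varphi '(\tau )}{\varphi (\tau )} = a. \]

Since \(t = {\varPhi }(\tau )\) gives \(e^{a\tau } = 1 + (e^{a}-1)\,t\), the modulation function is

\[ \mu (t) = \varphi (\tau ) = a\,t + \frac{a}{e^{a}-1}, \qquad {\dot{\mu }}(t) = a = \frac{\varphi '}{\varphi }. \]

In this example the step size \(h = \mu (t)/N\) varies linearly in t, the ratio \(h_{\max }/h_{\min } = \mu (1)/\mu (0) = e^{a}\) can be large, and yet \({\dot{\mu }}\) is bounded, so the grid is smooth in the sense required above.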

3 Deflation and operator factorization

The variable step size difference equation

can be rewritten in matrix–vector form as

where the vector y contains all successive approximations \(\{y_n\}_{n=1}^{N}\). The vector \(Y_0\) is constructed from the initial conditions, \(y_0, \dots y_{-k+1}\). Further, \(A_{N}(\varphi )\) is an \(N\times N\) matrix containing the method coefficients, and is associated with a nonuniform grid characterized by the function \(\varphi \). The step sizes are represented by a diagonal matrix \(H_N = {\tilde{\varphi }}/N\),

where \(\varphi _{j+1/2} \approx \varphi (\tau _{j+1/2})\). For example, if \(k=2\), the matrix \(H_N^{-1}A_{N}(\varphi )\) takes the lower tridiagonal form

We will investigate zero stability as a question of whether there exists a constant \(C_{\varphi }\), independent of N, and an \(N^*\), such that \(\Vert A_{N}^{-1}(\varphi )H_N\Vert \le C_{\varphi }\) for all \(N > N^*\). As \(\varphi (\tau ) \equiv 1\) corresponds to a uniform grid, \(A_{N}(1)\) denotes the Toeplitz matrix of method coefficients for constant step size \(H_N = I/N\). Then zero stability is equivalent to \(\Vert A_{N}^{-1}(1)/N\Vert \le C_1\) for all N.

Just as the principal root can be factored out of \(\rho \) to construct the extraneous operator \(\rho _R(\zeta )\), satisfying \(\rho (\zeta ) = (\zeta - 1)\rho _R(\zeta )\), a similar factorization holds for the (near) Toeplitz operators. Thus, due to preconsistency (\(\rho (1)=0\)), the \(n{\mathrm {th}}\) full row sum of the matrix \(A_{N}(\varphi )\) is

even on a nonuniform grid. Denoting the \(n{\mathrm {th}}\) row of nonzeros in \(A_{N}(\varphi )\) by a \(k+1\) vector \(a_n^{{\mathrm {T}}}(\varphi )\), and letting \({\mathbf {1}}_{k+1} = (1\quad 1\dots 1)^{{\mathrm {T}}}\) denote a \(k+1\) vector of unit elements, preconsistency can be written

Hence \(a_n^{{\mathrm {T}}}(\varphi )\) contains a difference operator. It can therefore be written as a convolution of a k-vector \(c_n^{{\mathrm {T}}}(\varphi )\) and the backward difference operator \(\nabla = (-1\quad 1)\), i.e.,

For example, for the constant step size BDF2 method, corresponding to \(\alpha _0 = 1/2\), \(\alpha _1 = -2\) and \(\alpha _2 = 3/2\), the convolution can be represented as

implying that

Thus the vector \(c_n^{{\mathrm {T}}}(1)\) is the \(n{\mathrm {th}}\) full row of nonzero elements of the extraneous operator, corresponding to the coefficients \(\gamma _0 = -1/2\) and \(\gamma _1 = 3/2\) of the deflated polynomial \(\rho _R(\zeta )\). Table 1 lists the row elements \(a_n^{{\mathrm {T}}}(1)\) and \(c_n^{{\mathrm {T}}}(1)\), respectively, for all zero stable BDF methods of step numbers \(k\ge 2\).
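The convolution identity can be verified directly. The following minimal sketch (the function name `convolve` is a hypothetical helper, and vectors are stored in ascending order of shift) recovers the BDF2 row \(a_n^{{\mathrm {T}}}(1)\) from \(c_n^{{\mathrm {T}}}(1)\) and \(\nabla \), and repeats the check for BDF3 using the deflated coefficients \((1/3, -7/6, 11/6)\) obtained by dividing the BDF3 polynomial \(\rho \) by \(\zeta -1\).

```python
from fractions import Fraction as F

def convolve(c, d):
    """Discrete convolution of two coefficient vectors (polynomial product)."""
    out = [F(0)] * (len(c) + len(d) - 1)
    for i, ci in enumerate(c):
        for j, dj in enumerate(d):
            out[i + j] += ci * dj
    return out

nabla = [F(-1), F(1)]                      # backward difference operator

c_bdf2 = [F(-1, 2), F(3, 2)]               # gamma_0, gamma_1
a_bdf2 = convolve(c_bdf2, nabla)           # alpha_0, alpha_1, alpha_2

c_bdf3 = [F(1, 3), F(-7, 6), F(11, 6)]     # gamma_0, gamma_1, gamma_2
a_bdf3 = convolve(c_bdf3, nabla)           # alpha_0, ..., alpha_3

print(a_bdf2)
print(a_bdf3)
# Preconsistency: each full row of the rho operator sums to zero.
print(sum(a_bdf2), sum(a_bdf3))
```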

Unlike generating polynomials, the (near) Toeplitz operators have the advantage of applying also to nonuniform grids. The following factorization of \(H_N^{-1}A_{N}(\varphi )\) is then a matrix representation of the deflation operation described above.

Theorem 3.1

Consider a linear multistep method on a nonuniform grid characterized by \(\varphi \), and let \(H_N\) and \({\tilde{\varphi }}\) be defined by (3.3). Then \(H_N^{-1}A_{N}(\varphi )\) has a factorization

where \(R_N(\varphi )\) is the extraneous operator, dependent on the nonuniform grid, and

The simple integrator \({\mathbf {D}}_N^{-1}\) is stable, and for all \(N\ge 1\) it holds that \(\Vert {\mathbf {D}}_N^{-1}\Vert _{\infty } = 1\).

Proof

We only need to prove the latter statement. By induction we see that the integrator is a cumulative summation operator,

and it immediately follows that \(\Vert {\mathbf {D}}_N^{-1}\Vert _{\infty } = 1\) for all N. \(\square \)
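The norm statement can be checked numerically. The sketch below rests on an assumption about the scaling: it takes \({\mathbf {D}}_N\) to be N times the lower bidiagonal difference matrix (1 on the diagonal, \(-1\) on the subdiagonal), so that its inverse is \(1/N\) times the cumulative summation matrix, matching the Euler integration \(y_{n+1} = y_n + u_n/N\). The helper names are hypothetical.

```python
def diff_matrix(N):
    """Assumed form of D_N: N * (I - S), with S the lower shift matrix."""
    D = [[0.0] * N for _ in range(N)]
    for i in range(N):
        D[i][i] = float(N)
        if i > 0:
            D[i][i - 1] = -float(N)
    return D

def lower_tri_inverse(L):
    """Invert a lower triangular matrix column by column (forward substitution)."""
    n = len(L)
    X = [[0.0] * n for _ in range(n)]
    for col in range(n):
        for i in range(col, n):
            rhs = 1.0 if i == col else 0.0
            s = sum(L[i][j] * X[j][col] for j in range(col, i))
            X[i][col] = (rhs - s) / L[i][i]
    return X

def inf_norm(A):
    """Maximum absolute row sum."""
    return max(sum(abs(x) for x in row) for row in A)

for N in (1, 5, 64, 200):
    Dinv = lower_tri_inverse(diff_matrix(N))
    # The last row holds N entries equal to 1/N, so the norm is 1.
    print(N, inf_norm(Dinv))
```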

To establish zero stability we need to show that \((H_N^{-1}A_{N}(\varphi ))^{-1} = {\mathbf {D}}_N^{-1} R_N^{-1}(\varphi ){\tilde{\varphi }}\) is uniformly bounded as \(N\rightarrow \infty \). We shall use the uniform norm throughout. Since it formally holds that

where \(\Vert {\tilde{\varphi }}\Vert _{\infty }\) is bounded for all smooth grids, the remaining difficulty is to show that \(\Vert R_N^{-1}(\varphi )\Vert _{\infty }\le C_{\varphi }\) for all \(N>N^*\), and how this depends on grid regularity. For a uniform grid, zero stability is determined by the roots of the extraneous operator; this needs to be translated into norm conditions. A simple possibility is to use the fact that

where \(m_{\infty }[R_N(1)]\) is the lower logarithmic norm of \(R_N(1)\), see [14]. The condition \(m_{\infty }[R_N(1)] > 0\) is equivalent to diagonal dominance. For example, by Table 1, the BDF2 matrix \(NA_{2,N}(1)\) associated with the \(\rho \) operator has the factorization

where the nonzero coefficients correspond to the \(c_n^{{\mathrm {T}}}(1)\) vector of Table 1. Since

it follows that \(\Vert R_{2,N}^{-1}(1) \Vert _{\infty } \le 1/m_{\infty }[ R_{2,N}(1) ] = 1\) and that the BDF2 method is zero stable. The same technique works for the BDF3 method, since

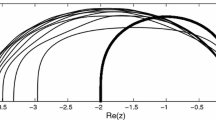

However, it fails for the BDF4 method and higher, since the extraneous operator is then no longer diagonally dominant. By instead computing e.g. the Euclidean norm numerically, the above technique can be extended to BDF4 and BDF5, but it again fails for BDF6. For this reason, we need a general result, based on sharper estimates.
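The diagonal dominance test is simple to reproduce for the whole BDF family. The sketch below is an illustration with hypothetical helper names. It builds the extraneous polynomial from the standard backward difference form of the BDF methods, \(\rho _R(\zeta ) = \sum _{j=1}^{k} j^{-1}\zeta ^{k-j}(\zeta -1)^{j-1}\), and evaluates the lower logarithmic max norm of the Toeplitz operator as the diagonal coefficient minus the sum of the absolute values of the remaining coefficients in a full row.

```python
from fractions import Fraction as F

def poly_mul(p, q):
    """Product of two polynomials given by ascending coefficient lists."""
    out = [F(0)] * (len(p) + len(q) - 1)
    for i, pi in enumerate(p):
        for j, qj in enumerate(q):
            out[i + j] += pi * qj
    return out

def bdf_extraneous(k):
    """Ascending coefficients gamma_0..gamma_{k-1} of
    rho_R(z) = sum_{j=1}^{k} (1/j) * z**(k-j) * (z-1)**(j-1)."""
    total = [F(0)] * k
    power = [F(1)]                      # holds (z-1)**(j-1)
    for j in range(1, k + 1):
        shift = k - j                   # multiplication by z**(k-j)
        for i, c in enumerate(power):
            total[i + shift] += c / j
        power = poly_mul(power, [F(-1), F(1)])
    return total

def lower_log_norm_inf(gamma):
    """Diagonal entry minus the absolute off-diagonal entries of a full row."""
    return gamma[-1] - sum(abs(c) for c in gamma[:-1])

for k in range(2, 7):
    gamma = bdf_extraneous(k)
    m = lower_log_norm_inf(gamma)
    print(k, m, "diagonally dominant" if m > 0 else "not diagonally dominant")
```

A negative value does not imply instability; it only means that this particular sufficient condition gives no information, which is why a sharper estimate is needed.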

Theorem 3.2

For every strongly stable k-step method on a uniform grid, there is a constant \(C_0 < \infty \), such that \(\Vert R_{k,N}^{-1}(1)\Vert _{\infty } \le C_0\) for all \(N\ge 1\).

Proof

Let \(T_0\) denote the lower triangular Toeplitz matrix \(R_{k,N}(1)\) representing the extraneous operator. Then \(T_0^{-1}\) is lower triangular too, albeit full. More importantly, \(T_0^{-1}\) is also Toeplitz. By (1.2), \(\rho _R(\zeta ) = \sum _{j=0}^{k-1} \gamma _j\zeta ^j\). Noting that \(\alpha _k = \gamma _{k-1}\), and illustrating the matrix \(T_0\) for \(k=3\), we have

where, in the general case, \(\delta _j = \gamma _j/\alpha _{k}\) are the elements of the scaled matrix \({\hat{T}}_0\), with Toeplitz inverse

Hence \(\Vert {\hat{T}}_0^{-1}\Vert _{\infty } \le C\) as \(N\rightarrow \infty \) if and only if the sequence \(u = \{u_n\}_{n=1}^{N}\) (where we define \(u_1=1\)) is in \(l^1\), i.e., the sequence u must be absolutely summable as \(N\rightarrow \infty \). By construction, u satisfies the difference equation \(\rho _R({\mathrm {E}}) u = 0\), where \({\mathrm {E}}\) is the forward shift operator. By assumption \(\rho _R(\zeta )\) satisfies the strict root condition. Therefore u is bounded, i.e., \(u\in l^{\infty }\). Let \(\rho _R(\zeta _{\nu })=0\) and let

where equality applies whenever the maximum modulus root is simple. Then there is a constant \(K < \infty \) such that \(|u_n| \le K \cdot q^n\) for all \(n\ge 1\). Hence \(u\in l^{1}\), as

Since \(\Vert {\hat{T}}_0^{-1}\Vert _{\infty } = \Vert u\Vert _1\) due to the Toeplitz structure of \({\hat{T}}_0^{-1}\), we have, for all \(N\ge 1\),

and the proof is complete. \(\square \)
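The argument of the proof can be traced numerically. The sketch below (hypothetical helper names, floating point arithmetic) generates the first column u of the inverse of the scaled Toeplitz matrix by forward substitution, using the deflated coefficients \(\gamma _j\) of BDF2 and BDF3, and reports \(\Vert u\Vert _1\) for increasing N. The coefficient values are the standard constant step size ones; the indexing here is zero based, so `u[0]` plays the role of \(u_1 = 1\).

```python
def inverse_first_column(gamma, N):
    """First column of the inverse of the N x N lower triangular Toeplitz
    matrix with unit diagonal and symbol rho_R / gamma[-1].

    gamma holds gamma_0..gamma_{k-1} in ascending order.
    """
    k = len(gamma)
    delta = [g / gamma[-1] for g in gamma]      # delta_{k-1} = 1
    u = []
    for n in range(N):
        rhs = 1.0 if n == 0 else 0.0
        # row n: u[n] + sum_{m>=1} delta_{k-1-m} * u[n-m] = rhs
        s = sum(delta[k - 1 - m] * u[n - m]
                for m in range(1, min(n, k - 1) + 1))
        u.append(rhs - s)
    return u

def l1_norm(u):
    return sum(abs(x) for x in u)

gamma_bdf2 = [-1 / 2, 3 / 2]
gamma_bdf3 = [1 / 3, -7 / 6, 11 / 6]

for N in (10, 100, 1000):
    n2 = l1_norm(inverse_first_column(gamma_bdf2, N))
    n3 = l1_norm(inverse_first_column(gamma_bdf3, N))
    # Dividing by gamma_{k-1} = alpha_k gives the norm of the unscaled inverse.
    print(N, n2 / gamma_bdf2[-1], n3 / gamma_bdf3[-1])
```

For BDF2 the sequence is geometric with ratio 1/3, so \(\Vert u\Vert _1\) tends to 3/2 and the unscaled bound tends to 1, in agreement with the diagonal dominance estimate obtained earlier.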

4 Zero stability on nonuniform grids—the BDF2 method

The general proof of variable step size zero stability is based on the operator factorization given by Theorem 3.1. Beginning with an example, the variable step size BDF2 discretization of \(\dot{y} = 0\) is

where \(r_n = h_{n+1}/h_n\) is the step ratio. Rearranging terms, we obtain

Using \(h_{n+1}=\varphi _{n+1/2}/N\), we can factor out the simple integrator to obtain

Introducing \(u_n/N = y_{n+1}-y_n\), the “extraneous recursion” becomes

As the subsequent Euler integration \(y_{n+1} = y_n + u_n/N\) is stable (cf. Theorem 3.1), the composite scheme is stable provided that the one-step recursion (4.4) is stable. Obviously, \(|u_{n+1}| \le |u_n|\) provided that

which holds for \(0 < r_n \le 1 + \sqrt{2}\). This bound on the step ratio is the same as the classical bound found in [9, pp. 405–406].
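The role of the bound \(1+\sqrt{2}\) can be illustrated with a small experiment. The sketch below assumes the standard variable step BDF2 coefficients for \(\dot{y}=0\), namely \(\frac{1+2r}{1+r}\,y_{n+2} - (1+r)\,y_{n+1} + \frac{r^2}{1+r}\,y_n = 0\), for which successive differences are multiplied by \(r^2/(1+2r)\). It iterates the recursion with a constant step ratio r; this is only a probe of the homogeneous recursion, not a grid on a compact interval, and the function names are hypothetical.

```python
import math

def amplification(r):
    """Factor multiplying y_{n+1} - y_n in one step: r^2 / (1 + 2r)."""
    return r * r / (1.0 + 2.0 * r)

def bdf2_homogeneous(r, y0, y1, steps):
    """Iterate variable step BDF2 for y' = 0 with constant ratio r."""
    a2 = (1.0 + 2.0 * r) / (1.0 + r)
    a1 = -(1.0 + r)
    a0 = r * r / (1.0 + r)
    y = [y0, y1]
    for _ in range(steps):
        y.append(-(a1 * y[-1] + a0 * y[-2]) / a2)
    return y

r_crit = 1.0 + math.sqrt(2.0)
print("amplification at 1 + sqrt(2):", amplification(r_crit))

for r in (1.0, 2.0, 2.5):
    y = bdf2_homogeneous(r, 0.0, 1.0, 200)
    print(r, amplification(r), max(abs(v) for v in y))
```

Below the critical ratio the numerical solution stays bounded, while for \(r = 2.5\) the differences grow geometrically, as the condition on the amplification factor predicts.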

In terms of the (near) Toeplitz operators used above, the variable step size extraneous operator is given by

The operator \(R^{-1}_{2,N}(\varphi )\) is bounded whenever the lower logarithmic max norm,

along the range of step ratios r. Diagonal dominance requires that \(1+2r-r^2 > 0\), which holds if \(0< r < 1 + \sqrt{2}\), so the classical bound is obtained once more. As we assume a smooth grid in terms of (2.3), with \({\dot{\mu }} = \varphi '/\varphi \in L^{\infty }[0,1]\), the condition \(r_n < 1 + \sqrt{2}\) is fulfilled for

In general, however, a method can be zero stable without diagonal dominance, requiring more elaborate techniques to establish zero stability. The variable step size discretization (3.1) of \(\dot{y}=0\) is factorized to obtain the difference equation corresponding to the extraneous operator only,

where the coefficients \(\gamma _{j,n}\) are multivariate rational functions of \(k-1\) consecutive step size ratios. If the sequence u is bounded (zero stability), then the original solution y of (3.1) is obtained by simple Euler integration, \(y_{n+1} = y_n + u_n/N\), where \(h=1/N\) is a constant step size and \(N\rightarrow \infty \). Since the latter integration is stable, we only need to bound the solutions u of (4.7). Using (2.4), we write the step ratios

where, for smooth grids,

Thus, the larger the value of N, the closer is \(|v_n|\) to zero. Now, for \(v_n\equiv 0\) we obtain the classical constant step size method. The difference equation (4.7) can then be rearranged as a Toeplitz system \(T_0 u = U_0\), where \(T_0 = R_{2,N}(1)\) and \(u = \{u_n\}_1^N\) denotes the entire solution. The vector \(U_0\) contains initial data as needed. By Theorem 3.2, we have \(\Vert T_0^{-1}\Vert _{\infty } \le C_0\) for all \(N\ge 1\).

With variable steps, the system will depend on the step ratios, and the overall system matrix will no longer be Toeplitz. Nevertheless, for the BDF2 example used above, we have seen that the extraneous system matrix can be written

Thus we can write

where the \(T_j\) are Toeplitz and \(V = \mathrm {diag}(v_j)\) is a diagonal matrix. Since \(T_0^{-1}\) is uniformly bounded, a sufficient condition for \(R_{2,N}(\varphi )\) to be invertible is

and we obtain the bound

Here the \(\Vert T_jT_0^{-1}\Vert _{\infty }\) are method dependent constants, and

We can now determine a sufficient condition on \(\Vert V\Vert _{\infty }\) in general, and on N in particular, such that (4.9) is satisfied. Because \(w := \Vert V\Vert _{\infty } = {\mathrm {O}}(N^{-1})\) if the grid is regular, there is always an N large enough to satisfy this condition. Considering the equation

we find that we have to take N large enough to guarantee that

The quantity on the right hand side depends only on the method coefficients, and the left hand side depends only on the total number of steps, and the regularity of the nonuniform grid, as measured by \(\Vert \varphi '/\varphi \Vert _{\infty }\).

5 Zero stability on nonuniform grids—higher order methods

In a k-step method using variable steps, the coefficients depend on \(k-1\) step ratios. This makes the problem significantly more difficult. Without loss of generality, we will only consider an approach linear in V below. Note that while \(\Vert V\Vert _{\infty } = {\mathrm {O}}(N^{-1})\), it follows that higher powers of V are \(\Vert V\Vert _{\infty }^k = {\mathrm {O}}(N^{-k})\), implying that they are significantly smaller than the first order term when N is large and the grid is smooth. For example, in (4.12) above, we have \(w={\mathrm {O}}(N^{-1})\), implying that the \(w^2\) term is negligible as \(N\rightarrow \infty \); it is therefore sufficient to consider terms of order \({\mathrm {O}}(N^{-1})\) only. This overcomes the added difficulty of considering k-step methods.

The procedure for a general k-step method follows the same pattern as in the previous example. Neglecting quadratic and higher order terms in V, the extraneous operator is

The diagonal matrices \(V_j\) only differ in the diagonal elements being successively shifted down the diagonal. Assume that \(\log \varphi \in C^2[0,1]\). By (2.4) and the mean value theorem,

evaluating \(\varphi \) and its derivatives at \(\tau _{n+1/2}\). It follows that \(V_{j+1} = V_j + {\mathrm {O}}(N^{-2})\), and that all \(V_j\) can be replaced by a single matrix, V, while only incurring \({\mathrm {O}}(N^{-2})\) perturbations. Further (4.11) holds for all \(V_j\).

Since \(\Vert T_0^{-1}\Vert _{\infty } \le C_0\), a sufficient condition for the extraneous operator \(R_{k,N}(\varphi )\) to have a uniformly bounded inverse is

This condition separates grid smoothness \(\Vert \varphi '/\varphi \Vert _{\infty }\) from method parameters, as represented by the Toeplitz matrices \(T_j\). Thus, in order to prove zero stability as \(N\rightarrow \infty \), we need \(\Vert T_j\Vert \le C_j\) for \(j\ge 1\). The latter condition is easily established, once the coefficients’ dependence on the step ratios has been established. Hence we have the following general result.

Theorem 5.1

For all smooth maps \({\varPhi }\) there exist constants \(N^*\) and \(C_{\varphi }\) (independent of N) such that \(\Vert R_{k,N}^{-1}(\varphi )\Vert _{\infty } \le C_{\varphi }\) for \(N > N^*\), whenever \(\Vert R_{k,N}^{-1}(1)\Vert _{\infty } \le C_0\) for all N.

To illustrate the general theory, we consider the variable step size BDF3 method. Slightly modifying the conventions set out in Sect. 2, we define

where \(r_1=1+v_1\) and \(r_2=1+v_2\) denote the step ratios that will occur in a single row of the Toeplitz operator. Naturally, these values change from one row to the next, as they depend on n as indicated by (5.3). Within this setting, after deflating the operator, we obtain a recursion on a nonuniform grid corresponding to

where

The coefficients are normalized so that \(\beta _{3,n}=1\). [In a general analysis, they are normalized by \(\beta _{k,n}=1\), cf. (1.3)]. By writing \(r_j=1+v_j\), where \(v_j = {\mathrm {O}}(N^{-1})\), we obtain

Since \(v_j = {\mathrm {O}}(N^{-1})\) we drop higher order terms to obtain

We can now identify three lower triangular Toeplitz operators, with diagonal elements in boldface,

These correspond to the \(T_j\) matrices in (5.2), and the matrices \(V_1\) and \(V_2\) are just diagonal matrices collecting the sequences of \(v_1\) and \(v_2\) values along the grid.

Because \(v_2-v_1 = {\mathrm {O}}(N^{-2})\), we may consider a further simplification, putting \(v_2 = v_1\), or, equivalently, \(r_2 = r_1\). This corresponds to “ramping up” the step size at an exponential rate, and is particularly challenging to zero stability. In such a case, we may consider \(T_0 + V(T_1+T_2)\), with elements rescaled to have a common denominator,

Here the diagonal dominance of \(T_0\) is sufficient to derive a condition for zero stability. We can thus compute the lower logarithmic max norm,

where we have assumed that \(v > -1/4\), allowing the removal of absolute values. Thus \(m_{\infty }[T_0 + (T_1+T_2)v] > 0\) if \(v < 2/19\). By requiring

the operator \(T_0 + V\cdot (T_1+T_2)\) has a uniformly bounded inverse. The corresponding zero stability condition is

For BDF3, [9, p. 406] cites Grigorieff’s (1983) sufficient conditions for zero stability [7],

Our BDF3 bounds for ramp-up provide the conditions

The differences between these results depend on the methodology, and not least on the choice of norm. The deflation approach used here is similar to the technique used in [7], while smooth grid maps are akin to the assumptions used in [6].

It is important to note that we do not try to determine the greatest possible step size increase, but instead prove that every strongly stable method will be zero stable on smooth grids. We have also seen that the complexity of determining exact stability bounds quickly becomes overwhelming, which is why we argue that an alternative proof, revealing the dependence on smoothness and method parameters, is sufficient.

6 Conclusions

In this paper we have demonstrated that any linear multistep method which is strongly stable on a uniform grid is also zero stable on any smooth nonuniform grid. Grid smoothness is (in theory) determined by a grid map \({\varPhi }:[0,1] \rightarrow [0,1]\), satisfying \({\varPhi }(0)=0\) and \({\varPhi }(1)=1\), and having a strictly positive derivative \(\varphi = {\varPhi }'\). The grid map transforms a uniform grid of N steps into a nonuniform grid, which is smooth if \(\log \varphi \) is continuously differentiable.

In practice, this corresponds to a smooth step size variation, where the step size at time \(t\in [0,1]\) can be represented by a continuous modulation function, so that \(h(t) = \mu (t)/N\). Here \({\dot{\mu }}(t) = \varphi '/\varphi \), which must remain bounded. The modulation function \(\mu (t)\) is determined by the solution of the differential equation, while N is determined by the accuracy requirement as specified by the tolerance \(\varepsilon \).

The main result is that every k-step method is associated with k bounded Toeplitz operators \(T_0,\dots T_{k-1}\), where \(T_0\) is associated with the constant step size method. If that method is strongly zero stable, then \(T_0\) has a bounded inverse. Smooth step size variation is characterized locally by the function \(\varphi '/\varphi \), the magnitude of which determines how many steps N need to be taken in order to guarantee variable step size zero stability. Thus, if

the numerical solution to \(\dot{y} = 0\) is stable. Examples are given for BDF methods.

This result is also practically significant as it implies that time step adaptivity must be implemented using smooth step size changes, such that consecutive step ratios are \(r = 1 + {\mathrm {O}}(h)\). This can easily be achieved, as there is a wide range of smooth controllers available for dedicated purposes [12]. These are based on digital filter theory, and control \(\log h\) in small increments, changing the step size on every step. Since \(h \sim \varphi /N\), such a controller keeps \(\log \varphi \) smooth, in line with the assumptions of Theorem 5.1. The smoothness requirement is local, and does not imply any bound on \(h_{\mathrm {max}}/h_{\mathrm {min}}\). It is therefore not a limitation in stiff computation, where overall step size variation necessarily is large.

References

1. Arévalo, C., Führer, C., Söderlind, G.: Regular and singular \(\beta \)-blocking for nonstiff index 2 DAEs. Appl. Numer. Math. 35, 293–305 (2000)

2. Arévalo, C., Söderlind, G.: Grid-independent construction of multistep methods. J. Comput. Math. 35, 670–690 (2017)

3. Butcher, J.C., Heard, A.D.: Stability of numerical methods for ordinary differential equations. Numer. Algorithms 31, 59–73 (2002)

4. Crouzeix, M., Lisbona, F.J.: The convergence of variable-stepsize, variable formula, multistep methods. SINUM 21, 512–534 (1984)

5. Cryer, C.W.: On the instability of high order backward-difference multistep methods. BIT 12, 17–25 (1972)

6. Gear, C.W., Tu, K.W.: The effect of variable mesh size on the stability of multistep methods. SINUM 11, 1025–1043 (1974)

7. Grigorieff, R.D.: Stability of multistep-methods on variable grids. Numer. Math. 42, 359–377 (1983)

8. Guglielmi, N., Zennaro, M.: On the zero-stability of variable stepsize multistep methods: the spectral radius approach. Numer. Math. 88, 445–458 (2001)

9. Hairer, E., Nørsett, S.P., Wanner, G.: Solving Ordinary Differential Equations I, 2nd edn. Springer, Berlin (1993)

10. Hairer, E., Söderlind, G.: Explicit, time reversible, adaptive step size control. SISC 26, 1838–1851 (2005)

11. Shampine, L.F.: The step sizes used by one-step codes for ODEs. Appl. Numer. Math. 1, 95–106 (1985)

12. Söderlind, G.: Digital filters in adaptive time-stepping. ACM-TOMS 29, 1–26 (2003)

13. Söderlind, G., Wang, L.: Adaptive time-stepping and computational stability. JCAM 185, 225–243 (2006)

14. Söderlind, G.: Logarithmic norms. History and modern theory. BIT 46, 631–652 (2006)

15. Stetter, H.J.: Analysis of Discretization Methods for Ordinary Differential Equations. Springer, Berlin (1973)

Acknowledgements

The authors gratefully acknowledge the contribution of Prof. Carmen Arévalo, who provided the grid-independent variable step size coefficients for the BDF3 method, computed in Maple.

Additional information

Communicated by Christian Lubich.

Imre Fekete: Supported by the ÚNKP-17-4 New National Excellence Program of the Ministry of Human Capacities; István Faragó: Supported by the Hungarian Scientific Research Fund OTKA under Grants Nos. 112157 and 125119

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Söderlind, G., Fekete, I. & Faragó, I. On the zero-stability of multistep methods on smooth nonuniform grids. Bit Numer Math 58, 1125–1143 (2018). https://doi.org/10.1007/s10543-018-0716-y

DOI: https://doi.org/10.1007/s10543-018-0716-y

Keywords

- Initial value problems

- Linear multistep methods

- BDF methods

- Zero stability

- Nonuniform grids

- Variable step size

- Convergence