Abstract

The seismic design of structures according to current codes is generally carried out using a uniform-hazard spectrum for a fixed return period, and by employing a deterministic approach that disregards many uncertainties, such as the contribution of earthquake ground motions with return periods other than that assumed for the design. This results in uncontrolled values of the failure probability, which vary with the structure and the location. Risk targeting has recently emerged as a tool for overcoming these limitations, allowing achievement of consistent performance levels for structures with different properties through the definition of uniform-risk design maps. Different countries are implementing the concepts of risk targeting in different ways, and new methods have recently emerged. In the first part of this article, the most well-known approaches for risk targeting are reviewed, with particular focus on the one implemented in recent American design codes, the one based on the use of risk-targeted behaviour factors (RTBF), and an approach based on direct estimation of hazard curves for inelastic response of single-degree-of-freedom systems. The effect of the linearization of the hazard curve is investigated first. A validation of the RTBF approach is then provided, based on comparison with the results of uniform-risk design spectral accelerations for single-degree-of-freedom systems with elastic-perfectly plastic behaviour for two different sites. The effectiveness of the current risk-targeting framework applied in the United States is also investigated. In the last part of the paper, uniform-risk design maps for Europe are developed using the RTBF approach, showing how the seismic design levels may change when moving from a uniform-hazard to a uniform-risk concept.


1 Introduction

The seismic assessment and design of structures is continuously evolving, as demonstrated by the rapid development of new procedures, best exemplified by the PEER performance-based earthquake engineering framework (Porter 2003). Numerous studies have aimed to incorporate probability concepts into seismic performance evaluation, with consideration of the uncertainties related to not only the seismic input, but also the structural properties, the capacity, and the model (e.g. Dolšek 2009; Liel et al. 2009; Tubaldi et al. 2011; Fib 2012). Moreover, increasing attention has been given to achieving an explicit control of the probability that a structure exceeds prefixed performance objectives during its design life (e.g. Collins et al. 1996; Wen 2001; Fragiadakis and Papadrakakis 2008; Barbato and Tubaldi 2013; Gidaris and Taflanidis 2015; Castaldo et al. 2017; Altieri et al. 2018; Franchin et al. 2018). It is widely acknowledged that in the long term, risk-based assessment and design criteria will be recommended, or will even be mandatory, in design codes (Vamvatsikos et al. 2015; Fajfar 2018). For instance, the United States has already incorporated such criteria in its seismic design codes ASCE 7-16 (2017) and FEMA P-750 (2009a); the new version of Eurocode 8 Part 1 will also include an Informative Annex on probabilistic assessment of structures (Fajfar 2018).

Probability concepts have already entered current codes through the definition of the seismic action. The basis of the seismic input definition is often probabilistic seismic hazard analysis (PSHA) (e.g. McGuire 2008; Baker 2015), which estimates the probability distribution of a seismic intensity measure (IM), such as the peak ground acceleration (PGA) or the pseudo-spectral acceleration (Sa). This information can be used to build a uniform hazard response spectrum (UHS) for a given return period TR or probability of exceedance in the design lifetime. The value of TR depends on the target performance objective and on the importance of the structure. For example, a return period TR = 475 years, corresponding to a 10% probability of exceedance in 50 years (given the standard assumption of a Poisson process), is often associated with ultimate limit state conditions (e.g. Eurocode 8-1-2.1, CEN 2004).
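The link between return period and probability of exceedance under the Poisson assumption can be checked with a few lines of code. The sketch below is illustrative only; the function names are not taken from any code or software package.

```python
import math

def return_period(p_exceed: float, t_years: float) -> float:
    """Return period T_R such that the probability of at least one
    exceedance in t_years equals p_exceed (Poisson occurrences)."""
    return -t_years / math.log(1.0 - p_exceed)

def prob_exceed(t_r: float, t_years: float) -> float:
    """Probability of at least one exceedance in t_years for return period t_r."""
    return 1.0 - math.exp(-t_years / t_r)

print(round(return_period(0.10, 50.0)))  # 475
print(round(return_period(0.02, 50.0)))  # 2475
```

The second value corresponds to the 2% in 50 years level that is commonly used alongside the 10% in 50 years level.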

Having defined the seismic input, the structural response can be estimated by using various analysis methods, the most advanced one consisting in selecting a set of ground motions, and evaluating, via nonlinear time-history analysis, the mean or maximum demand for the considered records. The seismic code approach is still, however, essentially deterministic (Bradley 2011), and does not allow direct evaluation of the probability of exceedance of the engineering demand parameters (EDPs) of interest for the performance assessment. This is mainly a consequence of the dispersion in the EDP-IM relationship and in the system capacity (Cornell 2005). The consequence of this dispersion is that hazard levels corresponding to a probability of exceedance other than that of the UHS need to be considered (e.g. Cornell 1996; Iervolino et al. 2017; Tubaldi et al. 2015). Moreover, for design purposes, seismic codes prescribe the use of a UHS divided by a behaviour factor (or response modification factor) relevant to the structural system under study. This approach has been shown to result in inconsistent values of the risk of failure, which differ for systems with different vibration periods, and also for the same structure located in areas characterized by different hazard. Again, this inconsistency is the result of the record-to-record variability effects (i.e. the variability of the frequency content and other characteristics of the ground motion for a given IM level) that generally result in dispersion in the EDP-IM relationship for multi-degree of freedom (MDOF) or nonlinear structural systems. The many safety margins introduced by seismic codes (e.g. material design values, capacity design and minimum member sizes) are also responsible for the uncontrollable risk levels that are generally different from the hazard levels (e.g. Collins et al. 1996; Silva et al. 2016; Iervolino et al. 2017).

Given these limitations, more advanced approaches have been developed to achieve an explicit control of the seismic structural performance in the assessment and design stage (Fragiadakis and Papadrakakis 2008; Barbato and Tubaldi 2013; Gidaris and Taflanidis 2015; Castaldo et al. 2017; Altieri et al. 2018; Franchin et al. 2018). Parallel to these reliability-based assessment and design approaches, simplified methods have been proposed, fostering a gradual introduction of probability concepts into practice. Most of these methods are based on the probabilistic framework outlined in Kennedy and Short (1994) and Cornell (1996), which led to the development of the SAC-FEMA framework (Cornell et al. 2002) for structural design of steel moment resisting frames under seismic action, later enhanced by others (e.g. Lupoi et al. 2002; Vamvatsikos 2013). This framework introduces some simplifying assumptions to allow for a closed-form approximation of the mean annual frequency (MAF) of limit state exceedance. Based on the concepts and procedures developed by these methods, Fajfar and Dolšek (2012) introduced a practice-oriented approach for seismic risk assessment. This method employs pushover analysis instead of more time consuming dynamic analyses for response assessment and considers a default value of the dispersion to account for the record-to-record variability effects. Moreover, Žižmond and Dolšek (2017) developed the concept of risk-targeted behaviour factors, as a means to control the risk of exceedance of different limit states by the structure during the design procedure. Vamvatsikos and Aschheim (2016) introduced the concept of yield frequency spectra, enabling the direct design of a structure subject to a set of performance objectives. Such spectra can be used to provide the risk-targeted yield strength of a system that satisfies an acceptable ductility response level.

In the United States, following the work of Luco et al. (2007), the concept of risk-targeting has emerged, aiming to define ground motion maps adopting a “uniform risk” rather than a “uniform hazard” concept. With this approach, the seismic uniform-hazard ground motion maps are modified to obtain more consistent levels of the collapse probability across the country. While risk targeted design maps have been already implemented in American seismic design codes (see Luco et al. 2015), they have not yet been introduced in practice in Europe (Douglas and Gkimprixis 2018), where the implementation of probabilistic behaviour factor concepts in Eurocode 8 is still under consideration (Fajfar 2018).

Finally, since the work of Sewell (1989), seismologists have produced ground motion prediction equations (GMPEs) for predicting inelastic ductility demands of structural systems (e.g. Sewell 1989; Tothong and Cornell 2006; Rupakhety and Sigbjörnsson 2009; Bozorgnia et al. 2010a, b). Such GMPEs depend on the actual yield strength of the system and are more structure specific than typical GMPEs for PGA or Sa. Thus, they have been developed for elasto-plastic single degree of freedom (SDOF) systems only, since it is not feasible to derive them for every type of MDOF system. Nevertheless, they could be used within PSHA to construct uniform-risk inelastic spectra, ensuring consistent probabilities of exceeding different ductility demand levels. In this way, it is possible to avoid over- or under-design associated with the use of displacement reduction factors, at least for structures behaving as SDOF systems.

This article aims to review and compare the abovementioned approaches for the implementation of uniform-risk concepts in the performance-based design of structures. In the first part of the paper, the risk-targeted behaviour factor (RTBF) approach, Luco’s risk-targeting approach and the inelastic GMPEs approach are introduced together with their simplifying assumptions. A unified notation is adopted by changing, when necessary, the symbols used in the original papers and providing slightly different but equivalent derivations of the relevant equations. In the second part of the article, the effect of the linearization of the hazard curve, which underlies the framework developed by Kennedy and Short (1994) and Cornell (1996), is investigated. Subsequently, an elastic-perfectly plastic SDOF system is used to validate the RTBF approach for generating risk-targeted design spectra. Then, the choices made when applying risk-targeting in practice are examined, and suggestions for future revisions are given. In the final part of the article, risk-targeted design maps for Europe are generated using the RTBF approach, showing how existing design maps may change if this approach were adopted.

2 Critical review of various risk-targeting approaches

The aim of any risk-targeting approach is to control the risk of exceeding a limit state related to an unsatisfactory performance of the structure. This risk can be expressed in terms of the MAF of exceedance of the limit state, \(\lambda_{LS}\). Obviously, the event of limit state exceedance may result from the occurrence of earthquakes of different intensities (Cornell 2005). Herein, we consider the spectral acceleration \(S_{a} \left( {T,\xi } \right)\) at the fundamental period of vibration of the structure and for the damping ratio \(\xi\) as the IM. The MAF of limit state exceedance \(\lambda_{LS}\) can be expressed through the total probability theorem (e.g. Benjamin and Cornell 1970) as:

\[\lambda_{LS} = \int\limits_{0}^{\infty } {P\left( {\left. C \right|S_{a} } \right) \cdot \left| {dH\left( {S_{a} } \right)} \right|} \tag{1}\]

where the symbol “\(d\)” denotes the differentiation operator, \(H(S_{a} )\) is the hazard curve, providing the MAF of exceeding \(S_{a}\), from PSHA (McGuire 2008; Baker 2015), and \(P\left( {\left. C \right|S_{a} } \right)\) corresponds to the conditional probability of exceeding the limit state under an earthquake with intensity \(S_{a}\). This probability is given by:

\[P\left( {\left. C \right|S_{a} } \right) = P\left( {S_{a}^{c} < S_{a} } \right) \tag{2}\]

where \(S_{a}^{c}\) is the limit state capacity, i.e., the value of the spectral acceleration causing the exceedance of the limit state. It is noteworthy that this probability must account for the so called record-to-record variability effects (reflected in the variability of \(S_{a}^{c}\), which assumes different values for different records) and the effect of the uncertainty in the structural capacity, as done in Cornell (1996).
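As an illustration of how the convolution of hazard and conditional failure probability can be evaluated numerically, the sketch below sums \(P\left( {\left. C \right|S_{a} } \right)\) times the hazard increment over the bins of a discretized hazard curve. It assumes a lognormally distributed capacity; the function name, the power-law hazard and all numerical values are hypothetical and serve only as an example.

```python
import math
from statistics import NormalDist

def maf_limit_state(sa, hazard, median_capacity, beta):
    """Approximate the MAF of limit state exceedance on a discretized hazard curve.

    sa              : increasing spectral accelerations
    hazard          : MAF of exceeding each sa value (decreasing)
    median_capacity : median of the lognormal capacity (assumption)
    beta            : lognormal standard deviation of the capacity
    """
    std_normal = NormalDist()
    total = 0.0
    for i in range(len(sa) - 1):
        sa_mid = math.sqrt(sa[i] * sa[i + 1])            # geometric midpoint of the bin
        p_fail = std_normal.cdf(math.log(sa_mid / median_capacity) / beta)
        total += p_fail * (hazard[i] - hazard[i + 1])    # |dH| over the bin
    return total

# Hypothetical hazard curve H(Sa) = k0 * Sa^(-k1) on a log-spaced grid (Sa in g).
k0, k1 = 1.0e-4, 3.0
n = 2000
sa = [10.0 ** (-3.0 + 5.0 * i / n) for i in range(n + 1)]
hazard = [k0 * s ** (-k1) for s in sa]

lam = maf_limit_state(sa, hazard, median_capacity=1.0, beta=0.6)
print(f"MAF of limit state exceedance: {lam:.3e} per year")
```

The same function accepts any tabulated hazard curve, so it does not rely on a particular hazard curve shape.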

In the following subsections, alternative approaches for risk-targeting are reviewed.

2.1 The risk-targeted behaviour factor (RTBF) approach

This approach is based on the work of Kennedy and Short (1994) and Cornell (1996), who developed a simple and practice-oriented way for estimating the seismic risk of a structural system and for designing the system’s strength corresponding to a target reliability level. In particular, a closed-form expression of the MAF of failure of the system \(\lambda_{LS}\) can be obtained by introducing a series of simplifying assumptions, reviewed below. In the following, reference is made to the formulation of Cornell (1996), and the limit state definition is based on a measure of the global ductility of the system, \(\mu\). This entails defining explicitly a yield condition and a “failure” or “collapse” condition, which can be kinematically related to each other. Different choices can be made when defining these conditions, which may require identifying an elasto-plastic SDOF system equivalent to the structure under investigation (Cornell 1996; Aschheim 2002). Hereinafter, the condition of “failure” corresponds to the ductility demand \(\mu_{d}\) imposed by the earthquake exceeding the ductility capacity \(\mu_{c}\). The corresponding MAF of limit state exceedance is denoted hereinafter as \(\lambda_{c}\), to highlight the fact that failure corresponds to exceedance of the ductility capacity. Obviously, other engineering demand parameters can be employed to describe the system performance, as done e.g. in Cornell et al. (2002) and Lupoi et al. (2002).

An important assumption concerns the seismic hazard, \(H\left( {S_{a} } \right)\), which is represented by a linear equation in log–log space:

\[H\left( {S_{a} } \right) = k_{0} \cdot S_{a}^{{ - k_{1} }} \tag{3}\]

where \(k_{0}\) and \(k_{1}\) are the parameters of the linear fit, \(k_{1}\) being the slope of the hazard curve in log–log space.

According to Cornell (1996), the limit state capacity \(S_{a}^{c}\), can be expressed in terms of the following product:

\[S_{a}^{c} = S_{a}^{y} \cdot q_{{\mu_{c} }} \cdot \varepsilon_{{\mu_{c} }} \tag{4}\]

where \(S_{a}^{y}\) is the spectral acceleration inducing yielding of the system, \(q_{{\mu_{c} }}\) is the ductility-dependent contribution of the behaviour factor, denoting the factor by which a specific acceleration time history capable of causing incipient first yield must be scaled up to produce a ductility demand equal to the median capacity \(\hat{\mu }_{c}\), and \(\varepsilon_{{\mu_{c} }}\) is a lognormal random variable with unit median and lognormal standard deviation \(\beta_{{\mu_{c} }}\) that captures the variability of the ductility capacity in spectral acceleration terms.

It is noteworthy that \(S_{a}^{y}\) and \(q_{{\mu_{c} }}\) are also generally random variables, due to record-to-record variability effects. In fact, the seismic intensity corresponding to the yield limit state or other more severe limit states for a MDOF system is different for different records due to higher mode effects. Cornell (1996) assumes that these two random variables follow a lognormal distribution, with median values equal to \(\hat{S}_{a}^{y}\) and \(\hat{q}_{{\mu_{c} }}\) respectively, and lognormal standard deviations, or dispersions, \(\beta_{{S_{a}^{y} }}\) and \(\beta_{{q_{{\mu_{c} }} }}\). Moreover, in the case of a deterministic SDOF system, if the pseudo-spectral acceleration is used as the IM, then the yield acceleration has zero dispersion, i.e., \(\beta_{{S_{a}^{y} }}\) = 0, because it is directly related to the yield displacement \(u_{y}\) through the relation \(S_{a}^{y} = \omega^{2} \cdot u_{y}\). This is generally not true in the more general case of MDOF systems, due to the influence of higher modes of vibration (Luco and Cornell 2007).

The product of lognormal random variables is also a lognormal random variable. Thus, based on the previous assumptions, the limit state capacity \(S_{a}^{c}\) follows a lognormal distribution with median \(\hat{S}_{a}^{c} = \hat{q}_{{\mu_{c} }} \cdot \hat{S}_{a}^{y}\) and lognormal standard deviation \(\beta = \sqrt {\beta_{{S_{a}^{y} }}^{2} + \beta_{{q_{{\mu_{c} }} }}^{2} + \beta_{{\mu_{c} }}^{2} }\). Under the assumptions discussed above, the MAF of limit state exceedance, can be expressed as (Kennedy and Short 1994; Cornell 1996):

\[\lambda_{c} = H\left( {\hat{S}_{a}^{c} } \right) \cdot e^{{0.5 \cdot \left( {k_{1} \cdot \beta } \right)^{2} }} = k_{0} \cdot \left( {\hat{q}_{{\mu_{c} }} \cdot \hat{S}_{a}^{y} } \right)^{{ - k_{1} }} \cdot e^{{0.5 \cdot \left( {k_{1} \cdot \beta } \right)^{2} }} \tag{5}\]

This equation can be inverted to find the median value of \(\hat{S}_{a}^{y}\) corresponding to a prefixed value of the MAF of failure. However, in order to exploit this formulation for design purposes, it is better to introduce the overstrength of the system \(q_{s}\), similarly to Žižmond and Dolšek (2017). This overstrength is defined as the ratio between the spectral acceleration at yield of the system and the design spectral acceleration \(S_{a}^{d}\) (Kappos 1999):

\[q_{s} = \frac{{\hat{S}_{a}^{y} }}{{S_{a}^{d} }} \tag{6}\]

Substituting Eq. (6) into Eq. (5) gives the following expression of the MAF of failure, where now the dependence on the design spectral acceleration is made explicit:

\[\lambda_{c} = f_{hc} \cdot H\left( {S_{a}^{d} } \right) = f_{hc} \cdot k_{0} \cdot \left( {S_{a}^{d} } \right)^{{ - k_{1} }} \tag{7}\]

where \(f_{hc} = \hat{q}_{{\mu_{c} }}^{{ - k_{1} }} \cdot q_{s}^{{ - k_{1} }} \cdot e^{{0.5 \cdot \left( {k_{1} \cdot \beta } \right)^{2} }}\).

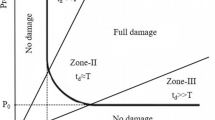

A risk curve \(\lambda_{c} \left( {S_{a}^{d} } \right)\) can be built by plotting the values of \(\lambda_{c}\) against the values of the design spectral acceleration \(S_{a}^{d}\). Figure 1a plots and compares the relation between the hazard curve \(H\left( {S_{a}^{d} } \right)\) and the risk curve \(\lambda_{c} \left( {S_{a}^{d} } \right)\). The hazard curve for \(S_{a}^{d}\) is obtained by linearizing the site hazard curve (more insight into the effect of the linearization is given in Sect. 3.1). If the hazard curve is linear, then so is the risk curve by virtue of Eq. (7). Figure 1 also plots the yield curve \(\lambda_{y} \left( {S_{a}^{d} } \right)\) corresponding to the MAF of yielding for a system designed with a spectral acceleration \(S_{a}^{d}\). This can be obtained by setting \(\hat{\mu }_{c}\) = 1, which also corresponds to \(\hat{q}_{{\mu_{c} }}\) = 1 in Eq. (7):

\[\lambda_{y} = f_{hy} \cdot H\left( {S_{a}^{d} } \right) \tag{8}\]

where \(f_{hy} = q_{s}^{{ - k_{1} }} \cdot e^{{0.5 \cdot \left( {k_{1} \cdot \beta } \right)^{2} }}\).

Again, if the hazard curve is linear, then so are the risk and yield curves by virtue of Eqs. (7) and (8). While the analytical equation for risk calculation, Eq. (5), is provided in the literature, it has been rearranged here into Eqs. (7) and (8) to make the relation between hazard and risk and their dependence on the design spectral acceleration more explicit.

The design pseudo-spectral acceleration corresponding to a target value of the MAF of collapse \(\lambda_{c}\) for a system with median ductility capacity \(\hat{\mu }_{c}\), can be obtained from Eq. (7) as:

\[S_{a}^{d} = \left( {\frac{{k_{0} \cdot f_{hc} }}{{\lambda_{c} }}} \right)^{{1/k_{1} }} \tag{9}\]

By plotting the values of \(S_{a}^{d}\) against T for a given ductility capacity and MAF of collapse, the uniform-risk design spectrum for a site can be obtained. In contrast to the inelastic spectrum in design codes, this spectrum provides a consistent level of the risk of failure for systems with different vibration periods. Figure 1b shows the uniform hazard spectrum (UHS), the corresponding uniform risk spectrum (URS) and the yield spectrum (YS), derived for the same target MAF of exceedance (i.e. 1/2500), assuming \(q_{s}\) = 2 and a ductility level of 4, for an example site (see following section). The values of these spectral ordinates for T = 1 s can be obtained by intersecting the hazard and risk curves with a horizontal line at target MAF of 1/2500 in Fig. 1a.
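The closed-form relations between hazard, risk and design spectral acceleration lend themselves to a compact numerical check. The following sketch assumes the power-law hazard \(H = k_{0} \cdot S_{a}^{{ - k_{1} }}\) implied by the expression for \(S_{a}^{ref}\) and uses the factors \(f_{hc}\) and \(f_{hy}\) as defined in the text; the function names and the parameter values are hypothetical and do not correspond to the example site.

```python
import math

def hazard(sa, k0, k1):
    """Power-law hazard curve: MAF of exceeding sa."""
    return k0 * sa ** (-k1)

def f_hc(k1, beta, q_mu, q_s):
    """Factor relating the MAF of collapse to the hazard at the design acceleration."""
    return q_mu ** (-k1) * q_s ** (-k1) * math.exp(0.5 * (k1 * beta) ** 2)

def maf_collapse(sa_d, k0, k1, beta, q_mu, q_s):
    """Risk curve: MAF of collapse for a system designed for sa_d."""
    return f_hc(k1, beta, q_mu, q_s) * hazard(sa_d, k0, k1)

def maf_yield(sa_d, k0, k1, beta, q_s):
    """Yield curve: same expression with the median ductility factor set to 1."""
    return maf_collapse(sa_d, k0, k1, beta, 1.0, q_s)

def design_sa(lambda_c, k0, k1, beta, q_mu, q_s):
    """Design acceleration giving the target MAF of collapse lambda_c."""
    return (k0 * f_hc(k1, beta, q_mu, q_s) / lambda_c) ** (1.0 / k1)

# Hypothetical hazard and system parameters (Sa in g).
k0, k1, beta = 1.0e-4, 3.0, 0.4
q_mu, q_s = 4.0, 2.0
lambda_c = 1.0 / 2500.0

sa_d = design_sa(lambda_c, k0, k1, beta, q_mu, q_s)
print(f"design Sa = {sa_d:.4f} g")
print(f"MAF of collapse at design Sa = {maf_collapse(sa_d, k0, k1, beta, q_mu, q_s):.3e}")
print(f"MAF of yielding at design Sa = {maf_yield(sa_d, k0, k1, beta, q_s):.3e}")
```

Repeating the call to `design_sa` with the hazard parameters fitted at each vibration period would give the ordinates of a uniform-risk design spectrum.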

In seismic codes, the design seismic input is often expressed in terms of a UHS for a given reference MAF of its exceedance, \(\lambda_{ref}\), which does not necessarily coincide with the target MAF of limit state exceedance \(\lambda_{c}\). Let \(S_{a}^{ref} = \left( {\frac{{k_{0} }}{{\lambda_{ref} }}} \right)^{{1/k_{1} }}\) denote the spectral ordinate of the system with period T, obtained by inverting the hazard curve of \(S_{a}\) for the MAF of exceedance \(\lambda_{ref}\). After dividing \(S_{a}^{ref}\) by \(S_{a}^{d}\), the expression for the risk-targeted behaviour factor (Žižmond and Dolšek 2017; Fajfar 2018) is obtained:

\[q = \frac{{S_{a}^{ref} }}{{S_{a}^{d} }} = \frac{{q_{s} \cdot \hat{q}_{{\mu_{c} }} }}{{\gamma_{{_{IM} }} }} \tag{10}\]

where \(\gamma_{{_{IM} }} = \frac{{\hat{S}_{a}^{c} }}{{S_{a}^{ref} }} = \left( {\frac{{\lambda_{ref} }}{{\lambda_{c} }}} \right)^{{1/k_{1} }} \cdot e^{{0.5 \cdot k_{1} \cdot \beta_{{}}^{2} }}\) is a factor accounting for the difference between the MAF of the seismic design input and the target MAF of collapse.

To summarize, the spectral ordinate \(S_{a}^{ref}\), corresponding to the elastic response spectrum and the MAF of exceedance \(\lambda_{ref}\), should be divided by q to design a system reaching the target performance, i.e., a MAF of collapse equal to \(\lambda_{c}\). This factor combines three components: \(q_{s}\), accounting for the system’s overstrength; \(\hat{q}_{{\mu_{c} }}\), accounting for the system’s ductility capacity; and \(\gamma_{{_{IM} }} = \frac{{\hat{S}_{a}^{c} }}{{S_{a}^{ref} }}\), accounting for the difference between the MAF of exceedance of the input and the MAF of collapse. Since \(S_{a}^{d} = \hat{S}_{a}^{c} /\left( {q_{s} \cdot \hat{q}_{{\mu_{c} }} } \right)\), the first two components multiply q whereas \(\gamma_{{_{IM} }}\) divides it, i.e. \(q = q_{s} \cdot \hat{q}_{{\mu_{c} }} /\gamma_{{_{IM} }}\). Figure 2 illustrates the relation between the spectral ordinates and the various components of q in the acceleration-displacement response spectrum plane.
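A short numerical sketch may help to see how the three components combine. It assumes a power-law hazard curve and the definition of \(\gamma_{{_{IM} }}\) given above, with \(\gamma_{{_{IM} }}\) dividing the product of the other two components because \(S_{a}^{d} = \hat{S}_{a}^{c} /\left( {q_{s} \cdot \hat{q}_{{\mu_{c} }} } \right)\). Function names and numerical values are hypothetical.

```python
import math

def gamma_im(lambda_ref, lambda_c, k1, beta):
    """Ratio between the median collapse capacity and the reference spectral ordinate."""
    return (lambda_ref / lambda_c) ** (1.0 / k1) * math.exp(0.5 * k1 * beta ** 2)

def behaviour_factor(q_s, q_mu, lambda_ref, lambda_c, k1, beta):
    """Risk-targeted behaviour factor q = Sa_ref / Sa_d."""
    return q_s * q_mu / gamma_im(lambda_ref, lambda_c, k1, beta)

# Hypothetical hazard and system parameters (Sa in g).
k0, k1, beta = 1.0e-4, 3.0, 0.4
q_s, q_mu = 2.0, 4.0
lambda_ref, lambda_c = 1.0 / 475.0, 1.0 / 2500.0

q = behaviour_factor(q_s, q_mu, lambda_ref, lambda_c, k1, beta)
sa_ref = (k0 / lambda_ref) ** (1.0 / k1)   # ordinate of the reference UHS
sa_d = sa_ref / q                          # risk-targeted design ordinate
print(f"gamma_IM = {gamma_im(lambda_ref, lambda_c, k1, beta):.3f}")
print(f"q = {q:.3f}, Sa_ref = {sa_ref:.4f} g, Sa_d = {sa_d:.4f} g")
```

With these inputs, a larger gap between \(\lambda_{ref}\) and \(\lambda_{c}\) or a larger dispersion increases \(\gamma_{{_{IM} }}\) and therefore reduces the admissible behaviour factor.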

2.2 Luco’s approach

The risk-targeting approach of Luco et al. (2007) was introduced to ensure a uniform collapse probability for structures located in regions across the United States characterized by different shapes of the hazard curve. The approach [see e.g. Douglas and Gkimprixis (2018) for an overview] was developed from the seminal work of Kennedy and Short (1994) and is based on the assumption that the structural capacity follows a lognormal distribution with median \(\hat{S}_{a}^{c}\) and dispersion \(\beta\). The value of \(\hat{S}_{a}^{c}\) corresponding to the target MAF of collapse for the structure can be evaluated through an iterative procedure, having made an assumption on the value of β. For example, β = 0.8 and 0.6 are used in FEMA P-750 (2009a) and ASCE 7-16 (2017), respectively. These values are quite high because they also account for epistemic uncertainties and the uncertainty in the capacity. The risk-targeted spectral acceleration, to be considered for design purposes, is the value of the spectral acceleration \(S_{a}^{c,X}\) corresponding to a probability of failure X (Fig. 3).
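The iterative procedure can be sketched as a bisection on the median capacity: the MAF of collapse is computed by numerical convolution for a trial median, and the median is adjusted until the target is met. This is only one possible implementation, not necessarily the algorithm used in the cited works; the hazard function, the integration limits and the numerical values are assumptions made for illustration.

```python
import math
from statistics import NormalDist

def collapse_maf(hazard_fn, median, beta, n=4000, z_lo=-8.0, z_hi=12.0):
    """Convolve a hazard curve with a lognormal fragility (median, beta).

    The capacity axis is discretized in terms of z = ln(sa / median) / beta.
    """
    std_normal = NormalDist()
    dz = (z_hi - z_lo) / n
    total = 0.0
    h_left = hazard_fn(median * math.exp(beta * z_lo))
    for i in range(n):
        z_right = z_lo + (i + 1) * dz
        h_right = hazard_fn(median * math.exp(beta * z_right))
        z_mid = z_right - 0.5 * dz
        total += std_normal.cdf(z_mid) * (h_left - h_right)  # P(C|Sa) * |dH|
        h_left = h_right
    return total

def median_for_target(hazard_fn, beta, target, lo=1.0e-3, hi=1.0e2, tol=1.0e-6):
    """Bisection in log space; the collapse MAF decreases as the median increases."""
    for _ in range(200):
        mid = math.sqrt(lo * hi)
        if collapse_maf(hazard_fn, mid, beta) > target:
            lo = mid   # risk too high: a larger median capacity is needed
        else:
            hi = mid
        if hi / lo - 1.0 < tol:
            break
    return math.sqrt(lo * hi)

# Hypothetical hazard curve (Sa in g), dispersion and target MAF of collapse.
k0, k1, beta, target = 1.0e-4, 3.0, 0.6, 2.0e-4

def power_law_hazard(sa):
    return k0 * sa ** (-k1)

median = median_for_target(power_law_hazard, beta, target)
sa_c_x = median * math.exp(NormalDist().inv_cdf(0.1) * beta)  # X = 0.1
print(f"median capacity = {median:.3f} g, Sa at X = 0.1: {sa_c_x:.3f} g")
```

Any monotonically decreasing hazard function, for instance an interpolation of a tabulated PSHA curve, can be passed in place of `power_law_hazard`.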

Under the assumption of a lognormally-distributed capacity curve, the relation between the median capacity \(\hat{S}_{a}^{c}\) and the risk-targeted spectral acceleration \(S_{a}^{c,X}\) can be expressed as follows (see Kennedy and Short 1994):

\[S_{a}^{c,X} = \hat{S}_{a}^{c} \cdot e^{{\varPhi^{ - 1} (X) \cdot \beta }} \tag{11}\]

where \(\varPhi^{ - 1} (X)\) is the inverse of the standard normal cumulative distribution function (also called the probit function) for a probability X, such that \(\varPhi^{ - 1} (0.5) = 0\) and \(\varPhi^{ - 1} (0.1) = - 1.2816\).
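The lognormal quantile relation can be evaluated with the standard library alone. The sketch below uses `statistics.NormalDist` for the probit function; the function name and the input values are illustrative assumptions.

```python
import math
from statistics import NormalDist

def sa_at_failure_probability(median_capacity, beta, x):
    """Spectral acceleration at which a lognormal capacity (median, beta)
    gives a conditional probability of failure equal to x."""
    return median_capacity * math.exp(NormalDist().inv_cdf(x) * beta)

print(round(NormalDist().inv_cdf(0.1), 4))  # -1.2816

# Hypothetical median capacity of 1.5 g with the two dispersions quoted in the text.
for beta in (0.6, 0.8):
    sa_10 = sa_at_failure_probability(1.5, beta, 0.1)
    print(f"beta = {beta}: Sa at X = 0.1 is {sa_10:.3f} g")
```

For X = 0.5 the function returns the median capacity itself, since the probit of 0.5 is zero.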

Both Luco et al. (2007) and the aforementioned American regulations prescribe the use of X = 0.1 when implementing risk-targeting. This value was based on the results of previous studies where different structural systems were analysed (e.g. NIST 2012; FEMA 2009b; NIST 2010; Kircher et al. 2014). However, this assumption was questioned by some studies. For example, the review by Douglas and Gkimprixis (2018) presents a summary of the literature suggesting lower values of X (between \(10^{-5}\) and \(10^{-1}\)). It is noteworthy that \(S_{a}^{c,X}\) cannot be compared directly with the risk-targeted design acceleration \(S_{a}^{d}\) introduced in the previous section, since it needs to be reduced further for design purposes. For example, according to the ASCE 7-16 (2017), the risk-targeted acceleration values should be multiplied by 2/3 and then divided by a response modification coefficient, which accounts for the ductility and overstrength of the system (see ASCE 7-16-C12.1.1, 2017). Application of these coefficients may again result in an uncontrolled level of the risk of failure. This issue is investigated in more detail in Sect. 3.

2.2.1 Analytical solution

Under the assumption of a linear hazard curve in the log–log plane, a closed-form expression of \(S_{a}^{c,X}\) can be obtained. Recalling the definition of \(\gamma_{{_{IM} }}\) given in Sect. 2.1, \(\hat{S}_{a}^{c}\) can be expressed as:

\[\hat{S}_{a}^{c} = \gamma_{{_{IM} }} \cdot S_{a}^{ref} \tag{12}\]

After substituting this into Eq. (11), the following expression of \(S_{a}^{c,X}\) is obtained:

\[S_{a}^{c,X} = S_{a}^{ref} \cdot \left( {\frac{{\lambda_{ref} }}{{\lambda_{c} }}} \right)^{{1/k_{1} }} \cdot e^{{0.5 \cdot k_{1} \cdot \beta^{2} + \varPhi^{ - 1} (X) \cdot \beta }} \tag{13}\]

Dividing \(S_{a}^{c,X}\) by \(S_{a}^{ref}\), the following expression for the risk coefficient \(C_{R}\) can be obtained:

\[C_{R} = \frac{{S_{a}^{c,X} }}{{S_{a}^{ref} }} = \left( {\frac{{\lambda_{ref} }}{{\lambda_{c} }}} \right)^{{1/k_{1} }} \cdot e^{{0.5 \cdot k_{1} \cdot \beta^{2} + \varPhi^{ - 1} (X) \cdot \beta }} \tag{14}\]

From Eq. (14), it is found that for \(X = 0.5\), \(C_{R} = \gamma_{{_{IM} }}\) and \(S_{a}^{c,X} = \hat{S}_{a}^{c}\). In other words, under the assumption of a linear hazard curve in log–log space, Luco’s approach can be seen as the first step of the RTBF approach to design: it provides the risk-targeted spectral acceleration \(\hat{S}_{a}^{c}\) by starting from \(S_{a}^{ref}\) and taking \(X = 0.5\).

Equation (14) can also be rearranged to provide an expression for the MAF of failure:

\[\lambda_{c} = \lambda_{ref} \cdot e^{{0.5 \cdot k_{1}^{2} \cdot \beta^{2} + \varPhi^{ - 1} (X) \cdot \beta \cdot k_{1} - \ln \left( {C_{R} } \right) \cdot k_{1} }} \tag{15}\]

Setting \(C_{R}\) = 1 in Eq. (15), which corresponds to assuming \(S_{a}^{c,X} = S_{a}^{ref}\) and \(\lambda_{c} = \alpha \cdot \lambda_{ref}\), one obtains:

\[\lambda_{c} = \alpha \cdot H\left( {S_{a}^{c,X} } \right) \tag{16}\]

where \(\alpha = e^{{0.5 \cdot k_{1}^{2} \cdot \beta^{2} + \varPhi^{ - 1} (X) \cdot \beta \cdot k_{1} - \ln \left( {C_{R} } \right) \cdot k_{1} }}\).

This equation underlies the “Simplified Hybrid Method” of Kennedy (1999), which provides an estimate of the seismic risk directly from the hazard level of the spectral acceleration corresponding to a failure probability X. According to Kennedy (1999), \(\alpha\) can always be taken equal to 0.5 for \(X\) = 0.1, given its low sensitivity with respect to \(k_{1}\) and \(\beta\). Later on, Hirata et al. (2012) provided a similar expression, with the same aim of obtaining a simple risk estimate without recourse to the convolution between the hazard and the conditional probability of failure. According to that study, \(\alpha\) ranges between 0.5 and 1, depending on the value of X and the desired degree of conservativeness.

Similarly to Kennedy (1999) and Hirata et al. (2012), it is possible to find the value of X such that \(\alpha\) = 1, i.e., \(\lambda_{c} = H\left( {S_{a}^{c,X} } \right)\). This value of X corresponds to:

\[X = \varPhi \left( { - 0.5 \cdot k_{1} \cdot \beta } \right) \tag{17}\]

where \(\varPhi ( \cdot )\) is the standard normal cumulative distribution function.
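The factor \(\alpha\) and the value of X for which it equals one can be explored numerically. The sketch below implements the expression for \(\alpha\) given above and the condition obtained by setting its exponent to zero with \(C_{R}\) = 1; the function names and the combinations of \(k_{1}\) and \(\beta\) are hypothetical.

```python
import math
from statistics import NormalDist

STD_NORMAL = NormalDist()

def alpha(k1, beta, x, c_r=1.0):
    """Ratio between the MAF of failure and the reference MAF of the hazard."""
    z = STD_NORMAL.inv_cdf(x)
    return math.exp(0.5 * k1 ** 2 * beta ** 2 + z * beta * k1 - math.log(c_r) * k1)

def x_for_unit_alpha(k1, beta):
    """Failure probability X for which alpha = 1 when C_R = 1."""
    return STD_NORMAL.cdf(-0.5 * k1 * beta)

# Hypothetical combinations of hazard slope and dispersion.
for k1, beta in [(2.0, 0.4), (3.0, 0.4), (3.0, 0.6)]:
    a = alpha(k1, beta, 0.1)
    x_unit = x_for_unit_alpha(k1, beta)
    print(f"k1 = {k1}, beta = {beta}: alpha(X = 0.1) = {a:.2f}, X for alpha = 1: {x_unit:.3f}")
```

Running the loop for other combinations gives a quick impression of how sensitive \(\alpha\) is to the hazard slope and to the dispersion.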

2.3 Inelastic GMPEs approach

In engineering seismology, research efforts often focus on estimating the ground motion at the site of interest, often defined in terms of IMs, resulting from a future earthquake. Usually this is achieved by application of GMPEs. GMPEs provide, via a relatively simple closed-form function, the distribution of an IM given the magnitude, the source-to-site distance and other parameters such as the local site conditions and the faulting mechanism. IMs are generally assumed to be lognormally distributed given magnitude, distance and the other independent parameters of the GMPEs. Therefore, a GMPE provides an estimate of the median IM and its standard deviation, which is often roughly 0.7 in terms of natural logarithms for response spectral IMs [see e.g. Fig. 10 of Gregor et al. (2014) for elastic spectral accelerations and Fig. 11 of Bozorgnia et al. (2010a) for inelastic spectral accelerations].

PSHA provides the MAF of exceeding different levels of a given IM by combining models of the probability of different earthquake scenarios (defined in terms of magnitude, geographical location and other source parameters, e.g. faulting mechanism) with GMPEs providing estimates of the probabilities of different levels of the IM at the considered site given these scenarios (e.g. McGuire 2008; Baker 2015). The probabilities of different earthquake scenarios are often estimated using Gutenberg-Richter power laws expressing the annual chances of different size earthquakes (i.e. \(\ln N = a - b \cdot M\), where N is the number of earthquakes of magnitude M or larger per year and a and b are empirical coefficients derived from analysis of the seismicity of the region surrounding the site) coupled with polygonal area sources where the chance of an earthquake occurring anywhere within the polygon is uniform.

Many hundreds of GMPEs are available in the literature (Douglas 2019), but most of them predict PGA or Sa (T, ξ). In contrast, Sewell (1989), Bozorgnia et al. (2010a) and Rupakhety and Sigbjörnsson (2009), for example, have developed GMPEs for the capacity demand of SDOF systems with elastic-perfectly plastic behaviour and constant ductility, using the same functional form as for the elastic demand in terms of Sa (T, ξ). These GMPEs depend on the actual yield strength of the SDOF systems and hence are more structure-specific than GMPEs for PGA or Sa (T, ξ). PSHA can be conducted for a given site using these GMPEs, as in Bozorgnia et al. (2010b), to obtain uniform-risk spectra directly.

3 Investigation of the assumptions in the various approaches

Each of the methods presented in the preceding sections is based on different assumptions, which affect to some extent the estimates of the seismic risk of structural systems. In this section, the effect of the hazard curve linearization, which underlies the RTBF approach, is investigated first, by considering two example sites. Subsequently, one of these sites is considered to calculate uniform-risk design spectral accelerations for SDOF systems with elastic-perfectly plastic behaviour via the inelastic GMPEs approach. The obtained results are compared to those obtained via the RTBF approach, in order to evaluate the accuracy of the latter. Subsequently, the problem of the choice of X when applying Luco’s risk targeting approach is investigated, together with the consequences of the choice of X on the value of the response modification factor to be employed for the simplified design. Finally, risk-targeted design maps for Europe are developed using the analytical RTBF approach.

3.1 Effect of hazard curve linearization

Even though higher-order models have been proposed in the literature for approximating the hazard curve (Bradley et al. 2007; Vamvatsikos 2013), the power law model (Sewell et al. 1991) is still widely employed because of its simplicity. Several methods have been proposed for fitting this model to a hazard curve. For example, in Jalayer and Cornell (2003) it is suggested to fit the curve through seismic hazard estimates at the American codes’ Design Basis Earthquake (DBE) and Maximum Considered Earthquake (MCE) intensity levels, with 10% and 2% probabilities of exceedance in 50 years, respectively. Cornell (1996) suggests fitting between two points: one equal to the targeted MAF and one with a MAF ten times higher.
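Fitting the power-law model through two points of a hazard curve amounts to solving for a slope and an intercept in log–log space. The sketch below illustrates this with a fit between the target MAF and a MAF ten times higher; the tabulated hazard curve is invented for the example, and the function names are hypothetical.

```python
import math

def sa_at_maf(sa, hazard, target):
    """Log-log interpolation of the Sa value whose MAF of exceedance equals target.

    Assumes sa is increasing and hazard is strictly decreasing.
    """
    for i in range(len(sa) - 1):
        h_hi, h_lo = hazard[i], hazard[i + 1]
        if h_lo <= target <= h_hi:
            w = math.log(h_hi / target) / math.log(h_hi / h_lo)
            return math.exp(math.log(sa[i]) + w * math.log(sa[i + 1] / sa[i]))
    raise ValueError("target MAF outside the tabulated hazard curve")

def fit_power_law(sa_1, h_1, sa_2, h_2):
    """Parameters (k0, k1) of H = k0 * Sa^(-k1) passing through two points."""
    k1 = math.log(h_1 / h_2) / math.log(sa_2 / sa_1)
    k0 = h_1 * sa_1 ** k1
    return k0, k1

# Invented hazard curve (Sa in g, MAF in 1/year), curved in log-log space.
sa_tab = [0.05, 0.1, 0.2, 0.4, 0.8, 1.6]
h_tab = [2.0e-2, 6.0e-3, 1.5e-3, 2.5e-4, 2.5e-5, 1.0e-6]

target = 2.0e-4
sa_hi_maf = sa_at_maf(sa_tab, h_tab, 10.0 * target)  # point at ten times the target MAF
sa_target = sa_at_maf(sa_tab, h_tab, target)         # point at the target MAF
k0_fit, k1_fit = fit_power_law(sa_hi_maf, 10.0 * target, sa_target, target)
print(f"k0 = {k0_fit:.3e}, k1 = {k1_fit:.3f}")
```

Fitting through other pairs of MAF levels only requires changing the two MAF values passed to `sa_at_maf` and `fit_power_law`.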

This subsection investigates the impact of the linearization of the hazard curve of Rhodes (Fig. 4a) and of Lourdes (France) (Fig. 4b) on seismic risk estimates. The hazard curve of Rhodes refers to the spectral acceleration Sa (1 s, 5%) (further information regarding its derivation is given in the next subsection), whereas the hazard curve of Lourdes is for the PGA (more details about its derivation are given in Douglas et al. 2013).

Risk targeting is carried out using the exact hazard curve [see e.g. Figure 1 of Douglas and Gkimprixis (2018)] for \(\lambda_{c}\) = \(2 \times 10^{-4}\) years\(^{-1}\) and assuming different values of X: \(10^{-5}\) according to Douglas et al. (2013), 0.1 according to the American codes (ASCE 7-16, 2017), and 0.5 following the discussion of Sect. 2.2. Figure 5 illustrates the resulting risk-targeted spectral acceleration values corresponding to different values of \(\beta\). In the same figure, the acceleration values obtained by using Eq. (13) and the linearized hazard curves fitted with different criteria are illustrated and compared. In particular, following Jalayer’s (Jalayer and Cornell 2003) and Cornell’s (1996) approaches, the fitting is carried out between the MAF levels 1/475–1/2475 and 1/475–1/4975, respectively.

It is observed that Cornell’s linearization approach provides in general the best approximation for the different cases considered, with a solution very close to that obtained without making any assumption on the hazard curve shape. Other values of \(X\) (in the range between \(10^{-5}\) and 0.1) and \(\lambda_{c}\) (\(2 \times 10^{-3}\) and \(2 \times 10^{-5}\)) have also been investigated for various sites across Europe using the 2013 European seismic hazard model (ESHM) (Giardini et al. 2013; Woessner et al. 2015), showing similarly good results when using Cornell’s recommendation for the fitting. These results are not reported here due to space constraints. It is worth noting that, depending on the hazard curve shape, there are cases where the linearized curve is above the exact one and others where it is below. In general, if the part of the linearized curve that contributes most to the convolution is above the exact one, the MAF is overestimated.

It is also interesting to observe in Fig. 5 that the risk-targeted accelerations exhibit very different trends of variation with \(\beta\). These can be better understood by looking at Eq. (13) and noting that the argument of the exponent consists of two terms: a first-order term in \(\beta\), which is negative for \(X\) lower than 0.5 and zero for X = 0.5, and a second-order term in \(\beta\), which can affect the concavity of the curve. When the first-order term is zero (i.e. for X = 0.5, Fig. 5c, f), only the second-order term remains and the curve’s sensitivity to \(\beta\) increases with k1. Therefore, this sensitivity is higher for the case of Lourdes because it has a steeper hazard curve (higher k1), compared to Rhodes.
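The trends described above can be explored with a short script. It assumes that the closed-form solution for a power-law hazard curve and a lognormal capacity takes the form \(S_{a}^{c,X} = (k_{0}/\lambda_{c})^{1/k_{1}} \exp(0.5 k_{1}\beta^{2} + \varPhi^{-1}(X)\beta)\), which matches the description of the two terms in the exponent but is written here as an assumption rather than as a transcription of Eq. (13). The value of \(k_{0}\) is hypothetical.

```python
import math
from statistics import NormalDist


def risk_targeted_sa(k0, k1, lambda_c, beta, x):
    """Assumed closed form: (k0/lambda_c)^(1/k1) * exp(0.5*k1*beta^2 + Phi^-1(x)*beta)."""
    z = NormalDist().inv_cdf(x)  # negative for x < 0.5, zero for x = 0.5
    return (k0 / lambda_c) ** (1.0 / k1) * math.exp(0.5 * k1 * beta ** 2 + z * beta)


# Hypothetical k0; k1 = 2 and k1 = 3 stand for a flatter and a steeper hazard curve.
for k1 in (2.0, 3.0):
    for beta in (0.4, 0.6, 0.8):
        values = [risk_targeted_sa(1e-4, k1, 2e-4, beta, x) for x in (1e-5, 0.1, 0.5)]
        print(k1, beta, [round(v, 3) for v in values])
```

For X = 0.5 the first-order term vanishes, so the printed values grow with \(\beta\) and do so faster for the larger \(k_{1}\); for small X the negative first-order term dominates and the trend reverses.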

3.2 Validation of the RTBF approach through comparison with the inelastic GMPEs approach

In this section, the RTBF and the inelastic GMPEs approaches are compared using PSHA results for the Greek island of Rhodes as an example. In order to make the comparison, a deterministic SDOF system with vibration period T and ductility capacity \(\mu_{c}\) is considered. Other structural systems cannot be considered since inelastic GMPEs have been developed only for SDOF models.

3.2.1 Application of the inelastic GMPEs approach

The seismic source model (geometries of the source zones, activity rates and maximum magnitudes) for the calculations presented in this section was provided in November 2011 by Dr Laurentiu Danciu (ETH, Zurich, Switzerland). This model was an extract, at that date, of the ESHM developed for the wider Europe region during the European Commission’s Framework 7 SHARE project (Woessner et al. 2015). The seismic source model used for the definitive calculations of SHARE is likely slightly different from the one used here but this is not important for the purposes of our study. The model consists of the nine source zones closest to the Greek island of Rhodes (36.445°N–28.225°E), an area of active shallow crustal seismicity close to a subduction zone (Hellenic Arc). The seismicity of this region is roughly typical of areas of moderate to high seismic hazard in Europe and hence it is used as an example here.

In order to apply risk-targeting with the inelastic GMPEs approach, the selected seismic source model is coupled with the GMPEs of Rupakhety and Sigbjörnsson (2009). These GMPEs are chosen because: they were derived for Europe and the Middle East (and hence are consistent with our seismic source model), they have a simple functional form (which is computationally convenient), and, finally, the data used to derive these GMPEs are freely available and can be used also for computing the uniform-hazard elastic spectrum which is used as input for the RTBF approach (see the following subsection). The GMPEs of Rupakhety and Sigbjörnsson (2009) are for structural periods between 0 (equivalent to PGA) and 2.5 s, and for ductility levels of 1 (elastic), 1.5, 2 and 4. The software CRISIS 2015 (Ordaz et al. 2015) is used for the PSHA. For comparison purposes, the results are presented together with the results of the RTBF approach.

3.2.2 Application of the RTBF approach

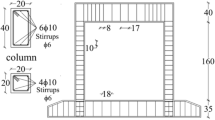

The application of the RTBF approach for risk targeting requires performing a series of time-history analyses under a set of records representative of the most likely seismic scenarios. A disaggregation of the seismic hazard for a ductility level of 1, carried out for the MAFs of interest, shows that the hazard is dominated by moderate earthquakes (moment magnitudes between 5.0 and 6.5) close to Rhodes (source-to-site distances less than 15 km), which is common in areas of high seismicity. For consistency with the results of the disaggregation and the strong-motion data used by Rupakhety and Sigbjörnsson (2009) to derive their GMPEs, the database of Ambraseys et al. (2004) is used to select 25 strong-motion records from earthquakes with 5.0 ≤ Mw ≤ 6.5 and RJB ≤ 15 km from Europe and the Middle East.

The software OpenSees (McKenna et al. 2006) is employed to run time-history analyses of inelastic SDOF systems with different properties in terms of period T and deterministic ductility capacity \(\mu_{c}\), using the selected records. In particular, 21 different values of T in the range between 0.01 s and 2 s, and values of \(\mu_{c}\) equal to 1, 2, 4 and 6 are considered. For each combination of these parameters, 25 analyses (one for each of the strong-motion records) are performed, leading to a total of 2100 time-history analyses. The median \(\hat{q}_{{\mu_{c} }}\) and lognormal standard deviation \(\beta = \beta_{{q_{{\mu_{c} }} }}\) are evaluated for each value of T and \(\mu_{c}\), and the results are illustrated in Fig. 6. As expected, \(\hat{q}_{{\mu_{c} }}\) is equal to 1 for very stiff systems and then increases and approaches the ductility capacity of the system for long periods (T > 1 s), for which the equal displacement rule holds. The dispersion \(\beta\) is equal to zero for T = 0 s, it increases and attains a maximum for periods in the range between 0.25 s and 0.5 s and then it decreases for higher values of T.
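The post-processing step described above (median and lognormal standard deviation of the behaviour factor for each pair of T and \(\mu_{c}\)) can be sketched as follows. The estimator is an assumption: the median is taken as the exponential of the mean of the logarithms, which is the natural choice under lognormality but is not stated in the text. The sample values are hypothetical, not results of the time-history analyses.

```python
import math
import statistics


def lognormal_stats(samples):
    """Return (median, beta) of a sample assumed to be lognormally distributed."""
    logs = [math.log(v) for v in samples]
    median = math.exp(statistics.fmean(logs))  # geometric mean of the sample
    beta = statistics.stdev(logs)              # sample standard deviation of the logs
    return median, beta


# Hypothetical behaviour factors from a handful of records for one (T, mu_c) pair.
q_samples = [3.1, 4.4, 3.8, 5.0, 2.9, 4.1]
q_median, beta = lognormal_stats(q_samples)
print(f"median q = {q_median:.2f}, beta = {beta:.2f}")

# Number of analyses: 21 periods x 4 ductility levels x 25 records.
n_runs = 21 * 4 * 25
print(n_runs)
```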

FEMA P695 (2009b) provides a good introduction to different sources of uncertainty in the assessment of structural capacity, namely those of the ground motion records (\(\beta_{RTR} =\) 0.20–0.40), those of the design requirements (\(\beta_{DR} =\) 0.10–0.50), those inherent to the test data (\(\beta_{TD} =\) 0.10–0.50) and to the modelling issues (\(\beta_{MDL} =\) 0.10–0.50). The square root of the sum of the squares results in a global dispersion in the range 0.275–0.950. In our example, only the uncertainty due to record-to-record variability is considered, and the values of \(\beta_{{q_{{\mu_{c} }} }}\), within the range 0–0.50 (Fig. 6), are similar to those suggested by FEMA P695 (2009b) for \(\beta_{RTR}\).
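The combination of the four dispersions can be checked with a few lines of code. The bounds computed directly from the ranges listed above are not identical to the quoted 0.275–0.950; the sketch assumes that the difference comes from rounding the total dispersion to the nearest 0.025, which is an assumption made here for illustration.

```python
import math


def srss(*betas):
    """Square root of the sum of the squares of the individual dispersions."""
    return math.sqrt(sum(b * b for b in betas))


def round_to_step(value, step=0.025):
    """Round to the nearest multiple of step (assumed rounding convention)."""
    return round(value / step) * step


# Lower and upper bounds of beta_RTR, beta_DR, beta_TD and beta_MDL.
lower = srss(0.20, 0.10, 0.10, 0.10)
upper = srss(0.40, 0.50, 0.50, 0.50)
print(f"unrounded: {lower:.3f} to {upper:.3f}")
print(f"rounded:   {round_to_step(lower):.3f} to {round_to_step(upper):.3f}")
```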

Figure 7 illustrates in log–log space the hazard curve of Rhodes for a system with natural period T = 1 s and 5% damping ratio, evaluated via PSHA, and the linearized approximation, which is fitted through the points corresponding to MAFs of exceedance of 1/250 and 1/2500. Using Eq. (7) and the results of the time-history analyses, the risk curves \(\lambda_{c} \left( {S_{a}^{d} } \right)\) can be built for different target ductility levels through the RTBF approach. For the purpose of comparison, the overstrength \(q_{s}\) is assumed equal to 1. As explained in Sect. 2.1, the risk curves for the different target ductility levels are parallel.

The curves in Fig. 7a refer to a system with period T = 1 s. The same procedure can be repeated for systems with different vibration periods T to generate the design spectra of Fig. 7b for a target \(\lambda_{c}\) = 1/2500 years\(^{-1}\). As expected, increasing the ductility capacity reduces the design spectral acceleration. Moreover, unlike for low values of μc, the risk-targeted spectrum for high values of μc does not exhibit a peak. The reduction is also much larger when passing from μc = 1 to μc = 2 than from μc = 4 to μc = 6.

Figure 8 compares the risk curves for two systems with periods T = 0.4 s and T = 1 s and ductility capacity μc = 4, evaluated according to the RTBF and the inelastic GMPEs approaches. The RTBF approach provides a very good approximation of the risk curve in the range of MAFs of interest. It is also worth observing that the risk curve according to the inelastic GMPEs approach is almost parallel to the hazard curve, at least in the range of MAFs of interest. The discrepancy between the results of the two methods is due to the assumptions inherent to the RTBF approach, namely the linearity of the hazard curve and the lognormality of the distribution of \(S_{a}\).

To further demonstrate the accuracy of the RTBF method, in Fig. 9 the uniform risk spectra according to the two approaches are compared. These spectra are generated by considering three different levels of the MAF of collapse, namely 1/250, 1/1000, and 1/2500, and two different levels of the ductility capacity (μc= 2 and μc= 4). It can be observed that the RTBF approach provides estimates of the risk-targeted spectral accelerations that are quite close to the estimates of the inelastic GMPEs approach. The agreement between the two approaches is better for high values of the target MAF of exceedance.

3.3 Effect of the assumptions in Luco’s approach for risk targeting

As stated before, Luco’s approach has been implemented in many design codes, including ASCE 7-16 (2017). In this section, a study is performed to evaluate under which conditions the method can ensure a uniform-risk design. In particular, the choice of the value of X to be used for risk-targeting is examined, together with the implications of this choice on the values of the response modification factor to be employed when a simplified analysis/design procedure is implemented.

3.3.1 Which value of X?

As mentioned in Sect. 2.2, many researchers have tried to investigate the value of X to be used when applying Luco’s approach for risk targeting in practice. For example, Kennedy and Short (1994) performed a sensitivity analysis for values of k1 between 1.66 and 3.32 and found that the variation of the risk-targeted acceleration with \(\beta\) is minimized when X = 0.1. This can also be observed in Fig. 5 of Sect. 3.1, showing reduced variations of the risk-targeted acceleration with \(\beta\) varying from 0.5 to 0.8 when X = 0.1 (Fig. 5b, e), and higher variations when X = \(10^{-5}\) (Fig. 5a, d) and X = 0.5 (Fig. 5c, f). It is noteworthy that this value of X also ensures reduced deviations of the risk-targeted IM levels from the IM levels corresponding to TR = 2475 years (\(\lambda_{ref}\) = \(4 \times 10^{-4}\) years\(^{-1}\)), which were employed for hazard maps in previous versions of the United States codes. In fact, assuming X = 0.1 and \(\beta\) = 0.6, and substituting the target risk level λc = \(2 \times 10^{-4}\) years\(^{-1}\) set by ASCE 7-16 (2017) and \(\lambda_{ref}\) = \(4 \times 10^{-4}\) years\(^{-1}\) in Eq. (14), the value of \(C_{R}\) is close to unity for k1 varying between 1 and 4. In other words, when using X = 0.1, the risk-targeted acceleration values do not deviate significantly from the reference ones obtained via hazard analysis.
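The statement that \(C_{R}\) stays close to unity can be checked numerically. The sketch assumes that, for a power-law hazard curve, the risk coefficient takes the form \(C_{R} = (\lambda_{ref}/\lambda_{c})^{1/k_{1}} \exp(0.5 k_{1}\beta^{2} + \varPhi^{-1}(X)\beta)\); this follows from dividing the closed-form \(S_{a}^{c,X}\) by \(S_{a}^{ref}\) and is presented as an assumed form of Eq. (14), not a transcription of it.

```python
import math
from statistics import NormalDist


def risk_coefficient(k1, ref_to_target, beta, x):
    """Assumed form of C_R = S_a^{c,X} / S_a^{ref} for a power-law hazard curve."""
    z = NormalDist().inv_cdf(x)
    return ref_to_target ** (1.0 / k1) * math.exp(0.5 * k1 * beta ** 2 + z * beta)


# lambda_ref / lambda_c = 4e-4 / 2e-4 = 2, X = 0.1 and beta = 0.6, as in the text.
for k1 in (1.0, 2.0, 3.0, 4.0):
    print(k1, round(risk_coefficient(k1, 2.0, 0.6, 0.1), 3))
```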

Figure 10 plots the values of \(\lambda_{ref} /\lambda_{c}\) against X obtained by solving Eq. (15) for different values of k1, \(C_{R}\) = 1 and \(\beta\) = 0.6. As already discussed, when targeting \(\lambda_{ref} /\lambda_{c}\) close to 2, then X is in the order of 0.1, irrespective of the slope of the hazard curve.

In order to display the combined effects of \(\beta\) and k1 on risk targeting, the nomogram shown in Fig. 11 can be drawn. This nomogram is built by setting \(C_{R}\) = 1 in Eq. (14) and repeatedly solving for X, using different values of \(\beta\) and k1. In the nomogram, the choices of Douglas et al. (2013) for France are compared to the ones of Luco et al. (2007). The line passing through \(\lambda_{ref} /\lambda_{c}\) = 2 and X = 0.1 is almost parallel to the portion of the nomogram curve corresponding to \(\beta\) = 0.6, confirming that the sensitivity of X to k1 in this case is low. This is not the case for the values of Douglas et al. (2013), with the results changing significantly by varying k1 and \(\beta\). Moreover, the X values of Douglas et al. (2013) are very low. This is the consequence of the choice of targeting \(\lambda_{c}\) = \(10^{-5}\) years\(^{-1}\) (value of the MAF of collapse consistent with Eurocode 0, CEN 2002) with \(\lambda_{ref}\) = \(2.1 \times 10^{-3}\) years\(^{-1}\). Overall, considering that the exact value of \(\beta\) is not known with precision, it would be preferable to choose low ratios of \(\lambda_{ref} /\lambda_{c}\), in the range between 1 and 5. Thus, if very low values of \(\lambda_{c}\) need to be achieved, reference intensity levels higher than the ones currently suggested in the European codes would need to be considered, corresponding to MAF of exceedance \(\lambda_{ref}\) lower than \(2.1 \times 10^{-3}\) years\(^{-1}\) (Jack Baker, written communication, 2018).
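The construction of the nomogram can be sketched by solving for X in closed form. The expression below assumes the same form of the risk coefficient as for a power-law hazard curve, \(C_{R} = (\lambda_{ref}/\lambda_{c})^{1/k_{1}} \exp(0.5 k_{1}\beta^{2} + \varPhi^{-1}(X)\beta)\), set equal to 1; it is an assumed form and the function name is hypothetical. The value \(\beta\) = 0.5 used for the second case is also an assumption made only for illustration.

```python
import math
from statistics import NormalDist


def x_for_unit_risk_coefficient(k1, ref_to_target, beta):
    """Solve C_R = 1 for X, given k1, lambda_ref / lambda_c and beta (> 0)."""
    z = -(math.log(ref_to_target) / k1 + 0.5 * k1 * beta ** 2) / beta
    return NormalDist().cdf(z)


# lambda_ref / lambda_c = 2 with beta = 0.6.
for k1 in (1.0, 2.0, 3.0, 4.0):
    print("ratio 2:", k1, round(x_for_unit_risk_coefficient(k1, 2.0, 0.6), 3))

# lambda_ref / lambda_c = 2.1e-3 / 1e-5 = 210, with an assumed beta = 0.5.
for k1 in (2.0, 3.0, 4.0):
    print("ratio 210:", k1, f"{x_for_unit_risk_coefficient(k1, 210.0, 0.5):.1e}")
```

With a ratio of 2, the solved X varies little with \(k_{1}\), whereas with a ratio of 210 it spans orders of magnitude, which is the contrast between the two sets of choices drawn in the nomogram.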

Nevertheless, it should be noted here that ductility and overstrength factors are not considered in the development of risk-targeted acceleration maps of the United States. Thus, it is in design calculations that the risk-targeted acceleration is translated to a seismic load if some sort of simplified analysis is performed. For example, ASCE 7-16 prescribes multiplying \(S_{a}^{c,X}\) by 2/3 (ASCE 7-16-21.3, 2017) and then dividing it by the response modification coefficient R (ASCE 7-16-C12.1.1, 2017). The risk levels achieved through this approach are investigated in the following subsection, by considering the linearized hazard curve of Rhodes.

3.3.2 Effect of the choice of X on the response modification factors

This subsection aims to evaluate the risk of failure that is obtained if the simplified approach of ASCE 7-16 (2017), based on the use of response modification factors, is applied together with risk targeting for structural design. For this purpose, the case of a simplified SDOF system with T = 1 s and the hazard curve of Rhodes for Sa (1 s, 5%) linearized according to Cornell’s recommendations (Fig. 4) are considered. To define the design spectral acceleration of the system, \(S_{a}^{c,X}\) must be evaluated first via Eq. (13). The design spectral acceleration for the SDOF system under consideration is then set equal to \(S_{a}^{d} = \frac{{S_{a}^{c,X} }}{R} \cdot \frac{2}{3}\), where R is the code’s reduction factor (response modification coefficient). In general, the proposed values of R given by the code will differ from \(\hat{q}_{{\mu_{c} }} \cdot q_{s}\). The corresponding MAF of failure of the system \(\lambda_{{c,{\text{calc}}}}\) can be evaluated based on the median failure capacity \(S_{a}^{d} \cdot \hat{q}_{{\mu_{c} }} \cdot q_{s}\). It can be shown that:

where \(q^{*} = \frac{3}{2} \cdot R\) is the factor by which \(S_{a}^{c,X}\) is reduced according to ASCE 7-16 (2017), so that \(S_{a}^{d} = S_{a}^{c,X} /q^{*}\).
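A relation of this kind can be sketched as follows, assuming a power-law hazard curve \(H(S_{a}) = k_{0} S_{a}^{-k_{1}}\), a lognormal capacity with dispersion \(\beta\), and a design acceleration \(S_{a}^{d} = S_{a}^{c,X}/q^{*}\). The steps below are a reconstruction under these assumptions, written with the symbols of this section, and should not be read as a transcription of the original equation.

```latex
\begin{aligned}
S_{a}^{c,X} &= \left( \frac{k_{0}}{\lambda_{c,\text{target}}} \right)^{1/k_{1}}
               \exp\left( \tfrac{1}{2} k_{1} \beta^{2} + \varPhi^{-1}(X)\,\beta \right) \\
\hat{S}_{a}^{c} &= S_{a}^{d} \cdot \hat{q}_{\mu_{c}} \cdot q_{s}
                 = \frac{S_{a}^{c,X}}{q^{*}} \cdot \hat{q}_{\mu_{c}} \cdot q_{s} \\
\lambda_{c,\text{calc}} &= k_{0} \left( \hat{S}_{a}^{c} \right)^{-k_{1}}
                           \exp\left( \tfrac{1}{2} k_{1}^{2} \beta^{2} \right)
 = \lambda_{c,\text{target}}
   \left( \frac{q^{*}}{\hat{q}_{\mu_{c}} \cdot q_{s}} \right)^{k_{1}}
   \exp\left( -k_{1}\,\varPhi^{-1}(X)\,\beta \right)
\end{aligned}
```

In this form the calculated and targeted MAFs coincide only when \(q^{*}/(\hat{q}_{\mu_{c}} \cdot q_{s}) = \exp(\varPhi^{-1}(X)\,\beta)\), which reduces to \(q^{*} = \hat{q}_{\mu_{c}} \cdot q_{s}\) for X = 0.5.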

Figure 12 shows the variation of the ratio between the calculated and the targeted MAF of failure, for different values of \(q^{*}\) and different values of \(\beta\). It can be observed that the calculated risk is very different from the targeted one, even when the actual value of the reduction factor \(\hat{q}_{{\mu_{c} }} \cdot q_{s}\) is employed.

These results show that the application of the code may lead to inconsistent levels of risk, which change for different locations and are sensitive to the choice of the reduction factor R and of \(\beta\). Again, this confirms that the development of risk-targeted maps must also involve a revision of the reduction factors if a simplified analysis or design approach using these factors is to be employed in conjunction with risk-targeted hazard maps. It is noteworthy that Kircher et al. (2014) have already acknowledged this issue. To clarify this, for risk targeting to be effective one must have \(\lambda_{{c,{\text{calc}}}} = \lambda_{{c,{\text{target}}}}\). Based on Eq. (18), this is only possible if:

Figure 13 provides the relation between the value of X assumed in risk targeting, and the corresponding value of the reduction factor to be considered in order to achieve the targeted MAF of failure. As expected, if X = 0.5, then the SDOF system should be designed with a reduction factor \(q^{*} = \hat{q}_{{\mu_{c} }} \cdot q_{s}\). This is equivalent to carrying out the design according to the procedure outlined in Sect. 2.1. If X = 0.1 and \(\beta\) = 0.6, as suggested in the American codes, then the reduction factor should be \(q^{*} = 0.46 \cdot \hat{q}_{{\mu_{c} }} \cdot q_{s}\).
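The factor of 0.46 quoted above can be reproduced with a short calculation. The sketch assumes that, for a power-law hazard curve and a lognormal capacity, the condition \(\lambda_{c,\text{calc}} = \lambda_{c,\text{target}}\) reduces to \(q^{*}/(\hat{q}_{\mu_{c}} \cdot q_{s}) = \exp(\varPhi^{-1}(X)\beta)\), and that the ratio of calculated to targeted MAF is \((q^{*}/(\hat{q}_{\mu_{c}} \cdot q_{s}))^{k_{1}} \exp(-k_{1}\varPhi^{-1}(X)\beta)\). Both expressions are assumed forms, and the function names are hypothetical.

```python
import math
from statistics import NormalDist


def required_reduction_ratio(x, beta):
    """q* / (q_mu * q_s) needed for the calculated MAF to match the target."""
    return math.exp(NormalDist().inv_cdf(x) * beta)


def maf_ratio(q_star_over_q, k1, x, beta):
    """Ratio of calculated to targeted MAF of failure (assumed closed form)."""
    z = NormalDist().inv_cdf(x)
    return q_star_over_q ** k1 * math.exp(-k1 * z * beta)


print(round(required_reduction_ratio(0.1, 0.6), 2))   # X = 0.1, beta = 0.6
print(round(required_reduction_ratio(0.5, 0.6), 2))   # X = 0.5: no correction needed

# Risk ratio when the actual reduction factor is used (q* = q_mu * q_s), k1 = 3.
print(round(maf_ratio(1.0, 3.0, 0.1, 0.6), 2))
```

The last line illustrates why the calculated risk can differ markedly from the targeted one even when the actual value of the reduction factor is employed: for X below 0.5 the exponential term is larger than one.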

It is noteworthy that the values of the behaviour factors suggested in current design codes are usually lower than the actual values of \(\hat{q}_{{\mu_{c} }} \cdot q_{s}\), due to extra requirements and safety factors that usually serve to increase the strength and, therefore, reduce further the probability of collapse. For example, Žižmond and Dolšek (2013) designed an 11-storey and an 8-storey structure by gradually applying different criteria of compliance with the Eurocodes and reporting how each safety measure affects the final design. Assuming \(q^{*}\) = 3.9, they found \(q^{*}/(\hat{q}_{{\mu_{c} }} \cdot q_{s} )\) to range between 0.4 and 1, depending on the code requirements and factors of safety taken into account.

4 Risk-targeted design maps for Europe

This section employs the analytical equations of the RTBF method to show some example risk-targeted maps for Europe. The 2013 ESHM (Giardini et al. 2013; Woessner et al. 2015) provides hazard information across Europe. The target risk level is set equal to \(2 \times 10^{-4}\) years\(^{-1}\), a value proposed in ASCE 7-16 (2017), roughly corresponding to a 1% probability of exceedance in 50 years. The power law hazard model is fitted through two points (in accordance with the suggestions of Sect. 3.1): one corresponding to a MAF of exceedance equal to the target risk level, and one to a MAF of exceedance ten times higher, i.e., \(2 \times 10^{-3}\) years\(^{-1}\) (roughly corresponding to a 10% probability of exceedance in 50 years).
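The correspondence between MAFs and probabilities in 50 years quoted above can be verified with the usual Poisson relation, \(P = 1 - \exp(-\lambda t)\). The Poisson occurrence model is an assumption stated here for the purpose of the check, and the function names are hypothetical.

```python
import math


def probability_in_years(maf, years=50.0):
    """Probability of at least one exceedance in the given time window (Poisson)."""
    return 1.0 - math.exp(-maf * years)


def maf_from_probability(prob, years=50.0):
    """MAF corresponding to a given probability of exceedance in the window."""
    return -math.log(1.0 - prob) / years


print(f"{probability_in_years(2e-4):.4f}")            # target risk level
print(f"{probability_in_years(2e-3):.4f}")            # ten times higher
print(f"{1.0 / maf_from_probability(0.10):.0f} years")  # return period for 10% in 50 years
```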

Figures 14a, 15a and 16a show the values of \(PGA^{ref}\) and \(S_{a}^{ref} (T)\) according to PSHA and a reference return period of 475 years, whereas the values of k1 corresponding to the slope of the fitted curve are plotted in Figs. 14b, 15b and 16b. A high variation of the slope of the hazard curve is observed for different locations, even within the same country. There are cases where the curve is quite steep, with k1 > 3, whilst other locations have hazard curves with very low slopes, for instance 0.7.

Figures 14c, 15c and 16c show the risk-targeted values of the design acceleration, evaluated via Eq. (9). For the case of the PGA, \(\beta\) = 0 and \(\hat{q}_{{\mu_{c} }} = 1\), whereas for \(S_{a}\)(T = 0.5 s) and \(S_{a}\)(T = 1 s), \(\hat{q}_{{\mu_{c} }}\) is assumed equal to 4 and \(\beta\) = 0.6, as per ASCE 7-16 (2017). In all cases the contribution of overstrength was considered as well, by assuming \(q_{s} = 2\).

The values of the risk-targeted behaviour factor, evaluated according to Eq. (10), are given in the remaining figures. It is recalled that the factor q is the ratio of the reference design acceleration (MAF of exceedance of \(2 \times 10^{-3}\) years\(^{-1}\)) and the risk-targeted design acceleration. A value higher than one means that the reference design acceleration should be decreased in order to satisfy the risk acceptance criteria.

In general, low values of q are obtained. However, it should be pointed out that this result is significantly affected by the assumed risk target (\(\lambda_{c}\) = \(2 \times 10^{-4}\) years\(^{-1}\)), leading to values of \(\gamma_{{_{IM} }}\) generally higher than 4 that tend to balance out the effect of \(\hat{q}_{{\mu_{c} }} \cdot q_{s}\), which would yield design accelerations lower than the reference one. Of course, this conclusion is sensitive to the assumptions made for the ductility and overstrength of the system. A significant variation of the factor q in areas of low seismicity is noticed, but in any case the values of the accelerations remain low. This is discussed also by Silva et al. (2016), who considered only areas with acceleration values higher than 0.05 g.

Focusing on the areas of high seismicity, for instance Italy, Greece, Romania and Turkey, for the case of \(S_{a} (T = 0.5\,s)\), q is in the range between 1.31 and 2.16, and a similar range is noticed for T = 1 s as well. For the case of the PGA, though, the factor q is lower than one at all locations. This means that the reference PGA should be increased rather than decreased to achieve the target risk level.
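The dependence of q on the slope of the hazard curve can be illustrated with a minimal sketch. It assumes that, for a power-law hazard curve, \(q = \hat{q}_{\mu_{c}} \cdot q_{s} / \gamma_{IM}\) with \(\gamma_{IM} = (\lambda_{ref}/\lambda_{c})^{1/k_{1}} \exp(0.5 k_{1}\beta^{2})\); this is an assumed form consistent with the definitions of q and \(\gamma_{IM}\) in the list of symbols, not a transcription of Eq. (10). The values of \(k_{1}\) are hypothetical, and \(\lambda_{ref}\) is taken as \(2 \times 10^{-3}\) years\(^{-1}\) for simplicity.

```python
import math


def risk_targeted_behaviour_factor(k1, lambda_ref, lambda_c, beta, q_mu, q_s):
    """Assumed form of q = S_a^{ref} / S_a^{d} for a power-law hazard curve."""
    gamma_im = (lambda_ref / lambda_c) ** (1.0 / k1) * math.exp(0.5 * k1 * beta ** 2)
    return q_mu * q_s / gamma_im


for k1 in (1.5, 2.0, 2.5, 3.0):
    # Spectral acceleration case: q_mu = 4, q_s = 2, beta = 0.6.
    q_sa = risk_targeted_behaviour_factor(k1, 2e-3, 2e-4, 0.6, 4.0, 2.0)
    # PGA case: q_mu = 1, q_s = 2, beta = 0.
    q_pga = risk_targeted_behaviour_factor(k1, 2e-3, 2e-4, 0.0, 1.0, 2.0)
    print(k1, round(q_sa, 2), round(q_pga, 2))
```

Under these assumptions the PGA values stay below one for the slopes considered, while the spectral acceleration values are of the same order as those reported for the areas of high seismicity.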

5 Conclusions

The basic philosophy of current seismic design codes relies on the concept of ‘uniform hazard spectrum’. This leads to structures exposed to inconsistent levels of risk, even when they are designed according to the same regulation. Acknowledging this, research efforts have proposed alternative design approaches aiming at controlling the risk of failure of structures. It can, however, be quite hard to follow the literature, due to inconsistent nomenclature amongst researchers and the lack of a single resource comparing the different approaches. The main goal of this article is to present, using a consistent terminology, and to compare three widespread approaches for risk targeting, highlighting the assumptions they are based on and their effect on the risk-targeting results. To the authors’ knowledge, this is the first time that approaches employed in different fields (structural engineering and engineering seismology) and different countries (US and those covered by Eurocode 8) are compared.

The probabilistic framework developed by Kennedy and Short (1994) and Cornell (1996), leading to the definition of risk-targeted behaviour factors (RTBFs), is discussed first, followed by Luco’s approach, which is implemented in recent American design codes, and by the inelastic GMPEs approach, based on the use of ground motion prediction equations (GMPEs) for inelastic single-degree-of-freedom systems. It is shown that one of the main assumptions at the base of the RTBF approach, concerning the linearization of the hazard curve, does not significantly affect the accuracy of the risk-targeting results in most cases, if the fitting is carried out for mean annual frequencies of exceedance equal to the targeted level and ten times higher than this level. The inelastic GMPEs approach is innovatively used to validate the RTBF approach, considering the case of a single-degree-of-freedom system. For the case study considered, it is shown that the RTBF approach provides accurate risk-targeted design results when compared with the results obtained with the inelastic GMPEs approach. Luco’s risk-targeting approach, if coupled with the response modification factors proposed in design codes, could lead to inconsistent risk levels for different system properties. This is because the response modification factors of design codes (e.g. ASCE 7-16, 2017) are generally not based on probabilistic analyses. Thus, a revision of the reduction factors to be used for design purposes should be carried out, if a simplified analysis or design approach using reduction factors is to be employed in conjunction with risk-targeted hazard maps.

In the last part of the article, it is shown how seismic design spectra of Europe may change when moving from uniform-hazard to uniform-risk concepts. In the case studies, an overstrength factor equal to 2 is considered. The ductility-dependent component of the behaviour factor for the PGA is equal to 1, while for systems with period 0.5 s and 1 s it is considered equal to 4. It is found that to satisfy the commonly proposed risk-target of mean annual frequency of exceedance of \(2 \times 10^{-4}\) years\(^{-1}\), the design PGA should be increased compared to the uniform-hazard value corresponding to a return period TR of 475 years. On the other hand, the values of the design spectral acceleration can be significantly lower compared to the reference values corresponding to TR = 475 years.

Given the importance that force-based seismic design still has in current design codes, it is anticipated that any of the approaches discussed in this article could be employed to revise current values of behaviour factors based on risk-control criteria, helping to promote the use of probabilistic concepts in design practice.

Abbreviations

- \(\beta\): Standard deviation of \(S_{a}^{c}\)
- \(\gamma_{{_{IM} }}\): Component of q accounting for the difference between \(\lambda_{c}\) and \(\lambda_{ref}\)
- \(\lambda_{ref}\): MAF of exceedance of \(S_{a}^{ref}\)
- \(\lambda_{y}\), \(\lambda_{c}\): MAF of yielding and collapse, respectively
- \(\mu\): Ductility of the system
- \(\varPhi ( \cdot )\): Standard normal cumulative distribution function
- \(\varPhi^{ - 1} (X)\): Inverse of the standard normal cumulative distribution function for probability X
- \(C_{R}\): Risk coefficient, ratio between \(S_{a}^{c,X}\) and \(S_{a}^{ref}\)
- \(H\left( {S_{a} } \right)\): MAF of exceedance of \(S_{a}\)
- \(k_{0}\), \(k_{1}\): Parameters of the linear-in-log–log-space approximation of the hazard curve
- \(PGA^{ref}\): Peak ground acceleration corresponding to \(\lambda_{ref}\)
- \(P(\left. C \right|S_{a} )\): Probability of collapse, conditional to \(S_{a}\)
- \(q\): Risk-targeted behaviour factor
- \(q^{*}\): Reduction factor according to seismic design codes
- \(q_{{\mu_{c} }}\): Ductility-dependent component of the behaviour factor
- \(\hat{q}_{{\mu_{c} }}\): Median of the lognormally distributed \(q_{{\mu_{c} }}\)
- \(q_{s}\): Component of \(q\) accounting for overstrength
- R: Response modification coefficient (ASCE 7-16, 2017)
- \(S_{a}\): Pseudo-spectral acceleration
- \(S_{a}^{c}\): Pseudo-spectral acceleration at collapse
- \(\hat{S}_{a}^{c}\): Median value of the lognormally distributed \(S_{a}^{c}\)
- \(S_{a}^{c,X}\): Pseudo-spectral acceleration corresponding to a probability of failure X
- \(S_{a}^{d}\): Risk-targeted design pseudo-spectral acceleration
- \(S_{a}^{ref}\): Pseudo-spectral acceleration corresponding to \(\lambda_{ref}\)
- \(S_{a}^{y}\): Pseudo-spectral acceleration causing yielding of the system
- \(\hat{S}_{a}^{y}\): Median value of the lognormally distributed \(S_{a}^{y}\)
- \(S_{d}\): Spectral displacement

References

Altieri D, Tubaldi E, De Angelis M, Patelli E, Dall’Asta A (2018) Reliability-based optimal design of nonlinear viscous dampers for the seismic protection of structural systems. Bull Earthq Eng 16(2):963–982

Ambraseys NN, Douglas J, Sigbjörnsson R, Berge-Thierry C, Suhadolc P, Costa G, Smit PM (2004) Dissemination of European strong-motion data. In: Proceedings of 13th world conference on earthquake engineering, vol 2, paper no. 32

ASCE (2017) Minimum design loads and associated criteria for buildings and other structures, ASCE/SEI 7-16. American Society of Civil Engineers, Reston

Aschheim M (2002) Seismic design based on the yield displacement. Earthq Spectra 18(4):581–600. https://doi.org/10.1193/1.1516754

Baker JW (2015) Introduction to probabilistic seismic hazard analysis. White paper version 2.1, 77 pp

Barbato M, Tubaldi E (2013) A probabilistic performance-based approach for mitigating the seismic pounding risk between adjacent buildings. Earthq Eng Struct Dyn 42(8):1203–1219

Benjamin JR, Cornell CA (1970) Probability, statistics, and decision for civil engineers. McGraw-Hill, New York

Bozorgnia Y, Hachem MM, Campbell KW (2010a) Ground motion prediction equation (“attenuation relationship”) for inelastic response spectra. Earthq Spectra 26(1):1–23. https://doi.org/10.1193/1.3281182

Bozorgnia Y, Hachem MM, Campbell KW (2010b) Deterministic and probabilistic predictions of yield strength and inelastic displacement spectra. Earthq Spectra 26(1):25–40

Bradley BA (2011) Design seismic demands from seismic response analyses: a probability-based approach. Earthq Spectra 27(1):213–224

Bradley BA, Dhakal RP, Cubrinovski M, Mander JB, MacRae GA (2007) Improved seismic hazard model with application to probabilistic seismic demand analysis. Earthq Eng Struct Dyn 36(14):2211–2225

Castaldo P, Amendola G, Palazzo B (2017) Seismic fragility and reliability of structures isolated by friction pendulum devices: seismic reliability-based design (SRBD). Earthq Eng Struct Dyn 46(3):425–446

CEN (2002) EN 1990:2002 + A1 Eurocode—basis of structural design. European Committee for Standardization, Brussels

CEN (2004) EN 1998-1:2004 Eurocode 8: design of structures for earthquake resistance—part 1: general rules, seismic actions and rules for buildings. European Committee for Standardization, Brussels

Collins KR, Wen YK, Foutch DA (1996) Dual-level seismic design: a reliability-based methodology. Earthq Eng Struct Dyn 25(12):1433–1467

Cornell CA (1996) Calculating building seismic performance reliability: a basis for multi-level design norms. In: Proceedings of 11th world conference on earthquake engineering, Acapulco

Cornell CA (2005) On earthquake record selection for nonlinear dynamic analysis. In: Proceedings of the Luis Esteva symposium, Mexico

Cornell CA, Jalayer F, Hamburger RO, Foutch DA (2002) Probabilistic basis for 2000 SAC Federal Emergency Management Agency steel moment frame guidelines. J Struct Eng 128(4):526–533

Dolšek M (2009) Incremental dynamic analysis with consideration of modeling uncertainties. Earthq Eng Struct Dyn 38(6):805–825

Douglas J (2019) Ground motion prediction equations 1964–2018. http://www.gmpe.org.uk

Douglas J, Gkimprixis A (2018) Risk targeting in seismic design codes: the state of the art, outstanding issues and possible paths forward. In: Vacareanu R, Ionescu C (eds) Seismic hazard and risk assessment—updated overview with emphasis on Romania. Springer, Berlin. https://doi.org/10.1007/978-3-319-74724-8_14

Douglas J, Ulrich T, Negulescu C (2013) Risk-targeted seismic design maps for mainland France. Nat Hazards 65(3):1999–2013

Fajfar P (2018) Analysis in seismic provisions for buildings: past, present and future. In: European conference on earthquake engineering, Thessaloniki, Greece. Springer, Cham, pp 1–49

Fajfar P, Dolšek M (2012) A practice-oriented estimation of the failure probability of building structures. Earthq Eng Struct Dyn 41(3):531–547

FEMA (2009a) NEHRP recommended seismic provisions for new buildings and other structures (FEMA P750). Federal Emergency Management Agency

FEMA (2009b) Quantification of building seismic performance factors (FEMA P695). Federal Emergency Management Agency

Fib (2012) Probabilistic performance-based seismic design, bulletin 68, International federation of structural concrete, Lausanne, CH

Fragiadakis M, Papadrakakis M (2008) Performance-based optimum seismic design of reinforced concrete structures. Earthq Eng Struct Dyn 37(6):825–844

Franchin P, Petrini F, Mollaioli F (2018) Improved risk-targeted performance-based seismic design of reinforced concrete frame structures. Earthq Eng Struct Dyn 47(1):49–67

Giardini D et al (2013) Seismic hazard harmonization in Europe (SHARE): online data resource. https://doi.org/10.12686/sed-00000001-share

Gidaris I, Taflanidis AA (2015) Performance assessment and optimization of fluid viscous dampers through life-cycle cost criteria and comparison to alternative design approaches. Bull Earthq Eng 13(4):1003–1028

Gregor N, Abrahamson NA, Atkinson GM, Boore DM, Bozorgnia Y, Campbell KW, Chiou BS-J, Idriss IM, Kamai R, Seyhan E, Silva W, Stewart JP, Youngs R (2014) Comparison of NGA-West2 GMPEs. Earthq Spectra 30(3):1179–1197. https://doi.org/10.1193/070113EQS186M

Hirata K, Nakajima M, Ootori Y (2012) Proposal of a simplified method for estimating evaluation of structures seismic risk of structures. In: Proceedings of 15th world conference on earthquake engineering, Lisboa

Iervolino I, Spillatura A, Bazzurro P (2017) RINTC project-assessing the (implicit) seismic risk of code-conforming structures in Italy. In: COMPDYN 2017-6th ECCOMAS thematic conference on computational methods in structural dynamics and earthquake engineering, Rhodes Island, Greece, pp 15–17

Jalayer F, Cornell CA (2003) A technical framework for probability-based demand and capacity factor design (DCFD) seismic formats. PEER Report 2003/08, Pacific Earthquake Engineering Research Center, College of Engineering, University of California Berkeley, November

Kappos AJ (1999) Evaluation of behavior factors on the basis of ductility and overstrength studies. Eng Struct 21(9):823–835

Kennedy RP (1999) Overview of methods for seismic PRA and margin analysis including recent innovations. In: Proceedings of the OECD-NEA workshop on seismic risk, Tokyo, Japan

Kennedy RP, Short SA (1994) Basis for seismic provisions of DOE-STD-1020. Rep. No. UCRL-CR-111478, Lawrence Livermore National Laboratory, Livermore, Calif., and Rep. No. BNL-52418, Brookhaven National Laboratory, Upton, N.Y

Kircher CA, Harris JL, Heintz JA, Hortacsu A (2014) ATC-84 project: improved seismic performance factors for design of new buildings. In: Proceedings of 10th U.S. national conference on earthquake engineering, Anchorage, Alaska

Liel AB, Haselton CB, Deierlein GG, Baker JW (2009) Incorporating modeling uncertainties in the assessment of seismic collapse risk of buildings. Struct Saf 31(2):197–211. https://doi.org/10.1016/j.strusafe.2008.06.002

Luco N, Cornell CA (2007) Structure-specific scalar intensity measures for near-source and ordinary earthquake ground motions. Earthq Spectra 23(2):357–392

Luco N, Ellingwood BR, Hamburger RO, Hooper JD, Kimball JK, Kircher CA (2007) Risk-targeted versus current seismic design maps for the conterminous United States. In: SEAOC 2007 convention proceedings

Luco N, Bachman RE, Crouse CB, Harris JR, Hooper JD, Kircher CA, Caldwell PJ, Rukstales KS (2015) Updates to building-code maps for the 2015 NEHRP recommended seismic provisions. Earthq Spectra 31(S1):S245–S271

Lupoi G, Lupoi A, Pinto PE (2002) Seismic risk assessment of RC structures with the “2000 SAC/FEMA” method. J Earthq Eng 6(04):499–512

McGuire RK (2008) Probabilistic seismic hazard analysis: early history. Earthq Eng Struct Dyn 37:329–338. https://doi.org/10.1002/eqe.765

McKenna F, Fenves GL, Scott MH (2006) OpenSees: open system for earthquake engineering simulation. Pacific Earthquake Engineering Research Center, University of California, Berkeley, CA

NIST (2010) Evaluation of the FEMA P-695 methodology for quantification of building seismic performance factors, NIST GCR 10-917-8. Prepared by the NEHRP consultants joint venture, a partnership of the Applied Technology Council and the Consortium of Universities for Research in Earthquake Engineering for the National Institute of Standards and Technology, Gaithersburg, Maryland

NIST (2012) Tentative framework for development of advanced seismic design criteria for new buildings, NIST GCR 12-917-20. Prepared by the NEHRP Consultants Joint Venture, a partnership of the Applied Technology Council and the Consortium of Universities for Research in Earthquake Engineering for the National Institute of Standards and Technology, Gaithersburg, Maryland

Ordaz M, Faccioli E, Martinelli F, Aguilar A, Arboleda J, Meletti C, D’Amico V (2015) CRISIS 2015 software. https://sites.google.com/site/codecrisis2015/home

Porter KA (2003) An overview of PEER’s performance-based earthquake engineering methodology. In: Proceedings of ninth international conference on applications of statistics and probability in civil engineering

Rupakhety R, Sigbjörnsson R (2009) Ground-motion prediction equations (GMPEs) for inelastic response and structural behaviour factors. Bull Earthq Eng 7:637–659

Sewell RT (1989) Damage effectiveness of earthquake ground motion: characterizations based on the performance of structures and equipment. PhD thesis, Stanford University, California, USA

Sewell RT, Toro GR, McGuire RK (1991) Impact of ground motion characterisation on conservatism and variability in seismic risk estimates. NUREG/CR-6467. U.S. Nuclear Regulatory Commission, Washington, DC

Silva V, Crowley H, Bazzurro P (2016) Exploring risk-targeted hazard maps for Europe. Earthq Spectra 32(2):1165–1186

Tothong P, Cornell CA (2006) An empirical ground-motion attenuation relation for inelastic spectral displacement. Bull Seismol Soc Am 96:2146–2164. https://doi.org/10.1785/0120060018

Tubaldi E, Barbato M, Dall’Asta A (2011) Influence of model parameter uncertainty on seismic transverse response and vulnerability of steel–concrete composite bridges with dual load path. J Struct Eng 138(3):363–374

Tubaldi E, Ragni L, Dall’Asta A (2015) Probabilistic seismic response assessment of linear systems equipped with nonlinear viscous dampers. Earthq Eng Struct Dyn 44(1):101–120

Vamvatsikos D (2013) Derivation of new SAC/FEMA performance evaluation solutions with second-order hazard approximation. Earthq Eng Struct Dyn 42(8):1171–1188

Vamvatsikos D, Aschheim MA (2016) Performance-based seismic design via yield frequency spectra. Earthq Eng Struct Dyn 45(11):1759–1778

Vamvatsikos D, Kazantzi AK, Aschheim MA (2015) Performance-based seismic design: avant-garde and code-compatible approaches. ASCE-ASME J Risk Uncertain Eng Syst Part A Civ Eng 2(2):C4015008

Wen YK (2001) Reliability and performance-based design. Struct Saf 23(4):407–428

Woessner J, Danciu L, Giardini D, Crowley H, Cotton F, Grunthal G, Valensise G, Arvidsson R, Basili R, Demircioglu MB, Hiemer S, Meletti C, Musson RW, Rovida AN, Sesetyan K, Stucchi M, The SHARE Consortium (2015) The 2013 European seismic hazard model: key components and results. Bull Earthq Eng 13(12):3553–3596. https://doi.org/10.1007/s10518-015-9795-1

Žižmond J, Dolšek M (2013) Deaggregation of seismic safety in the design of reinforced concrete buildings using Eurocode 8. In: Proceedings of 4th ECCOMAS thematic conference on computational methods in structural dynamics and earthquake engineering

Žižmond J, Dolšek M (2017) The formulation of risk-targeted behaviour factor and its application to reinforced concrete buildings. In: Proceedings of 16th world conference on earthquake engineering, paper no. 1659

Acknowledgements

The authors would like to thank Prof. Jack Baker for his useful comments on aspects of this study. We also want to thank Dr. Graeme Weatherill for discussion of k1 from the SHARE data and Dr. Laurentiu Danciu for providing the hazard model for Rhodes. The comments of the two anonymous reviewers were useful in revising this article. The first author is undertaking a PhD funded by the University of Strathclyde “Engineering The Future” studentship, for which we are grateful.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Gkimprixis, A., Tubaldi, E. & Douglas, J. Comparison of methods to develop risk-targeted seismic design maps. Bull Earthquake Eng 17, 3727–3752 (2019). https://doi.org/10.1007/s10518-019-00629-w

DOI: https://doi.org/10.1007/s10518-019-00629-w