Abstract

This paper explores the question of whether or not the law is a computable number in the sense described by Alan Turing in his 1937 paper ‘On computable numbers with an application to the Entscheidungsproblem.’ Drawing upon the legal, social, and political context of Alan Turing’s own involvement with the law following his arrest in 1952 for the criminal offence of gross indecency, the article explores the parameters of computability within the law and analyses the applicability of Turing’s computability thesis within the context of legal decision-making.

In a recent article, ‘Command Theory, Control and Computing: A Playwright’s Perspective on Alan Turing and the Law’ (Huws 2014), Huws posed the question of whether the legal system could be compared to a Turing-compliant machine as described by Turing in ‘On Computable Numbers with an Application to the Entscheidungsproblem’ (hereafter ‘On Computable Numbers’) (Turing 1937). Instinctively, the law is something that ought to be computable, in that it ought to be possible to convert the processes of legal decision-making into an algorithm—a set of simple instructions and decisions—and thus render it computable.

In essence, the notion that law should be computable is the appeal to legal certainty that forms the basis of Hohfeld’s conception of law as a formal system (Hohfeld 1913), and that Liebwald (2015) emphasises as a justification for devising laws that are sufficiently precise and unambiguous to enable a more extensive use of machine lawyers.

There is indeed much within the legal process that may be undertaken by machines, and this ranges from identifying whether the process-based elements of litigation have been completed within the required timescale, to identifying potentially relevant precedents (Prakken and Sartor 1996), to calculating the probabilities of different possible causes of a crime or a tort (Vlek et al. 2016).

Furthermore, a machine may learn to identify relevant factors from a fact scenario, and predict the probability of a particular outcome, by identifying precedents and weighting them based on relevance and currency (Berman and Hafner 1987; Hafner and Berman 2002) then applying those precedents to a new case using case-based reasoning, a well-known machine learning technique (Aamodt and Plaza 1994). More recent variants have been able to create a hierarchy of relevant factors, including weighting recent cases more heavily than earlier cases to take account of changing social mores, taking account of the objective to be achieved by the legislation and also taking into account the procedural context of the decision—such as whether it was heard before a first instance court or before an appellate court (Aleven and Ashley 1997; Ashley and Brüninghaus 2009). Within these parameters, it is possible to conclude that some areas of the law are computable according to Turing’s conception of computability. However, the success of machine learning used in this way presupposes that legal decision-making occurs solely with reference to factors internal to the law—the relevant legislation and the applicable precedents, and the policy that informed those precedents (Loui 2016).

In this article therefore, by combining the methodology of ‘On Computable Numbers,’ and Alan Turing’s own involvement with the law as a result of being prosecuted for the offence of gross indecency in 1952, we intend to demonstrate that there are limitations on the computability of the law and therefore on the scope of machine lawyers to replace human lawyers:

- (a) firstly, because the systems of the law, as equivalents of the m-configurations of Turing machines, are constant over neither time nor space;
- (b) and secondly, because the information upon which the ‘machine’ of the law works (analogous to the input of a Turing machine) consists of considerably more than the bare facts of the case, incorporating societal and indeed personal aspects that vary across time and place, and because these cannot be known to a putative decision machine.

Therefore, although the law has the capacity to be a formal system where algorithms can be used to predict (reasonably accurately in many cases) issues of culpability and liability, as in the examples explored by Hafner and Berman (2002) using the HYPO system, these do not, and cannot, take into account the abundance of factors that exist outside the law.

1 On computable numbers and the law

In the early twentieth century, mathematics was in the throes of a ‘foundational crisis’. Mathematicians of the ‘formalist’ school (led by David Hilbert) believed that mathematics could ultimately be reduced to a set of mechanical rules by developing a ‘formal system’ within which all mathematical statements could be stated. Such a system consists of a set of symbols (letters, numbers, arithmetical signs etc.) which can be organised into sequences called ‘strings’. The system also has an initial set of strings called ‘axioms’, and a set of simple typographical rules for transforming strings into new strings. If the symbols, axioms and rules have interpretations consistent with mathematics, then any strings thus derived (‘theorems’) are also true statements of mathematics. Hilbert referred to this as a ‘formula game’, with strict, game-like moves (Hilbert 1967).
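The idea of a ‘formula game’ can be made concrete with a toy example. The sketch below is not taken from Hilbert or from any of the works cited here: it assumes a hypothetical system with one axiom, `|+|=||`, and two typographical rules that each add a stroke to one side of the sum and to the total. Read as unary addition, every string the rules can derive is a true statement, although the rules themselves only shuffle symbols.

```python
# Hypothetical toy formal system (an illustration, not Hilbert's own):
# strings have the form "x+y=z", where x, y and z are runs of strokes.
AXIOMS = {"|+|=||"}

def successors(string):
    """Apply both typographical rules to one string."""
    left, rest = string.split("+")
    right, total = rest.split("=")
    yield f"{left}|+{right}={total}|"   # rule 1: add a stroke to x and to z
    yield f"{left}+{right}|={total}|"   # rule 2: add a stroke to y and to z

def theorems(depth):
    """Collect every string derivable in at most `depth` rule applications."""
    known = set(AXIOMS)
    frontier = set(AXIOMS)
    for _ in range(depth):
        frontier = {t for s in frontier for t in successors(s)} - known
        known |= frontier
    return known

def is_true(string):
    """Interpret a string as unary addition and check the arithmetic."""
    left, rest = string.split("+")
    right, total = rest.split("=")
    return len(left) + len(right) == len(total)

if __name__ == "__main__":
    derived = theorems(3)
    print(sorted(derived, key=len))
    print(all(is_true(t) for t in derived))
```

The false string `|+|=|||` is well formed but can never be derived, which is the sense in which derivability in a well-chosen system tracks truth.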

Attempts to find such a foundation of mathematics had run into difficulties, one of which, providing a flavour of such problems, is Russell’s paradox: does the set of all sets which do not contain themselves contain itself? If it does contain itself, then it is one of the sets which do not contain themselves and so should not contain itself; and if it does not contain itself, then it meets the condition for membership and so should contain itself. Hilbert’s programme (Zach 2016) was a proposed pathway to a solution of this crisis. The key outcomes would be:

- a formalisation for all mathematics: the set of axioms and rules described above;
- a completeness proof that all true mathematical statements can be proved using the formalisation: starting from the axioms, one can use the simple rules provided to arrive at any true statement;
- a consistency proof that no contradiction can be similarly derived;
- a decidability proof that an algorithm (a ‘mechanical’ method with well-defined steps and decisions) exists for determining the truth or falsity of any mathematical statement.

Gödel’s first incompleteness theorem (Gödel 1931) showed that no consistent (i.e. contradiction-free) formalisation whose theorems can be listed by an algorithm can prove all true statements about the arithmetic of the natural numbers (0, 1, 2 and so on). Crudely speaking, Gödel achieved this by showing that it was possible to produce an analogue of the paradoxical statement ‘this statement is not derivable in this system’ in any formal system sufficiently powerful to encompass arithmetic. If the statement is true, then it is not derivable (thus the system is incomplete); if it is false, then it is derivable, which it should not be, since it is false. Thus, such systems must be incomplete: they contain statements whose truth cannot be established within the system. The second incompleteness theorem built on this by showing that such a system cannot prove its own consistency.

Thus, two planks of Hilbert’s programme were removed: there can be no formal system (in the sense defined above, made up of simple rules applied to axioms) sufficiently powerful to perform even basic arithmetic that is both complete (able to generate all true statements) and provably consistent.

In 1936, two independent papers by Alan Turing and the American mathematician Alonzo Church removed the last plank of Hilbert’s programme by demonstrating that within a formal system as defined above, there can be no general algorithm for the determination of truth (i.e. derivability) of a statement. Although Church’s proof was published slightly earlier than Turing’s (Church 1936), Turing’s methods, using the analogy of a simple machine, were the more intuitive and almost immediately had wide-ranging consequences, showing that even a very simple machine could compute anything that was ‘effectively computable’ (the ‘Church–Turing Thesis’). ‘Effectively computable’ here is a term of art in computability theory: a process M is effectively computable if and only if:

1. ‘M is set out in terms of a finite number of exact instructions (each instruction being expressed by means of a finite number of symbols);
2. M will, if carried out without error, produce the desired result in a finite number of steps;
3. M can (in practice or in principle) be carried out by a human being unaided by any machinery save paper and pencil;
4. M demands no insight or ingenuity on the part of the human being carrying it out’ (Copeland 1996).

The computing machine Turing hypothesised about provided a boost for early computer designs, demonstrating that while such machines might have limitations, a very simple machine could perform any task which could be performed by a far more advanced machine, given enough storage space (although it might require far more time).

There are interesting parallels between Gödel’s and Turing’s approaches to their respective problems: both approaches involve encoding an entity as a number (a statement in Gödel’s case, a ‘machine’ or algorithm in Turing’s), so that statements can be made about encoded statements, or machines designed to operate on encoded machines—or their own encoded descriptions. Gödel uses this encoding to create an (indirectly) self-referential statement, while Turing uses it to describe algorithms which operate on descriptions of themselves.

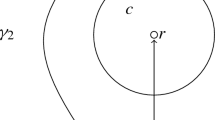

Much of Turing’s paper ‘On computable numbers’ (Turing 1937) is taken up with the definition of the ‘Turing machine’, his chosen formal system. A Turing machine operates on symbols printed on a finite, but unbounded, length of tape. It can read, print and erase these symbols, but can only operate on the current symbol, and it can move one step left or right on the tape. The machine has a finite table of what are now called states, but which Turing termed ‘m-configurations’. The machine can only be in one state at a time, and one of these is the initial state. There may also be final or ‘accepting’ states, at which the machine will stop, its calculation being complete. This state table, together with the initial state, constitutes the machine’s description or ‘program’.

Each state contains actions to perform after a particular symbol is read: print (or erase) the symbol; then move along the tape left, right or not at all; then change to a new state (or remain in the current state). The operation of the machine consists of writing the starting symbols on the tape (which constitute the input of the machine), setting the initial tape position, setting the initial state, then repeatedly reading the current symbol and performing the appropriate action given the current state.

A simple example of a state table is given below. This table adds one to the binary number given on the tape. Thus, if the number on the tape is ‘1011’ and the machine is pointing at the first 1, then the result will be ‘1100’ (i.e. denary 11 becomes denary 12). State a moves the machine right until it passes the last digit and then steps back onto it; state b moves left, changing each 1 to 0 until it reaches a 0 (or a blank), which it changes to 1; state c moves right past the last digit, steps back onto it and stops.

| State | Symbol read | Write | Move | New state |
|---|---|---|---|---|
| a (initial) | Blank | Blank | L | b |
| a | 0 | 0 | R | a |
| a | 1 | 1 | R | a |
| b | Blank | 1 | R | c |
| b | 0 | 1 | L | c |
| b | 1 | 0 | L | b |
| c | Blank | Blank | L | STOP |
| c | 0 | 0 | R | c |
| c | 1 | 1 | R | c |
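The state table above can be checked by simulating it. The following is a minimal sketch in Python rather than anything drawn from Turing’s paper: the representation of the tape as a dictionary, the use of a space for a blank cell, the function name `run` and the step limit are all assumptions made for illustration.

```python
# Transition table from the text: (state, symbol read) -> (write, move, new state).
# Representing a blank cell by a space is an assumption of this sketch.
BLANK = " "
TABLE = {
    ("a", BLANK): (BLANK, "L", "b"),
    ("a", "0"): ("0", "R", "a"),
    ("a", "1"): ("1", "R", "a"),
    ("b", BLANK): ("1", "R", "c"),
    ("b", "0"): ("1", "L", "c"),
    ("b", "1"): ("0", "L", "b"),
    ("c", BLANK): (BLANK, "L", "STOP"),
    ("c", "0"): ("0", "R", "c"),
    ("c", "1"): ("1", "R", "c"),
}

def run(tape_input, max_steps=10_000):
    """Run the machine from state 'a' on the first symbol of the input."""
    tape = dict(enumerate(tape_input))  # sparse tape: position -> symbol
    position, state, steps = 0, "a", 0
    while state != "STOP":
        if steps >= max_steps:
            raise RuntimeError("step limit reached without stopping")
        symbol = tape.get(position, BLANK)
        write, move, state = TABLE[(state, symbol)]
        tape[position] = write
        position += 1 if move == "R" else -1
        steps += 1
    # Read the visited cells back from left to right, dropping outer blanks.
    cells = [tape.get(i, BLANK) for i in range(min(tape), max(tape) + 1)]
    return "".join(cells).strip()

if __name__ == "__main__":
    print(run("1011"))  # the example from the text
    print(run("111"))   # a carry that extends the number by one digit
```

Running the sketch on ‘1011’ gives ‘1100’, as described in the text; on ‘111’ the carry runs off the left-hand end of the number and the blank cell there is overwritten, giving ‘1000’.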

This is an extremely simple model of computation, but given an appropriate state table and set of symbols it can perform any algorithm. One important feature is that a Turing machine’s description (the state table) and its input can be encoded as symbols and provided as input to another Turing machine, called the Universal Turing Machine (UTM). The UTM is programmed in such a way that it can ‘emulate’ the Turing machine whose description it has been given, and produce the output that machine would have produced. Turing provides a description of a UTM as part of his proof.

Turing’s actual proof is famously complex and nothing more than the barest outline will be given here. An extensively annotated version by Petzold (2008) allows the proof to be followed and provides a great deal of background detail on the ideas Turing uses. He begins by describing and demonstrating the Turing machine, and discusses the concept of ‘computable numbers’—those numbers which can be output by an appropriately programmed Turing machine. Because each machine producing such a number can itself be described by a ‘description number’ (for reading and emulation by a UTM), and such numbers are finite, they can be enumerated. Since each description number produces one computable number, these must also be enumerable. However, if there were a process actually to find the nth computable number, Cantor’s diagonal argument (Cantor 1891) could be applied to make this sequence non-enumerable (by producing a new number not in the enumeration) (Simmons 1993). Therefore, the computable numbers, and the description numbers with which they are associated, cannot be enumerated.
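The diagonal construction referred to here can be illustrated on a finite scale. The sketch below is an assumption-laden simplification: it works on a short, hypothetical list of finite binary strings, whereas the argument proper concerns an infinite enumeration of infinite sequences. It shows only the mechanism, namely that changing the nth digit of the nth listed sequence yields a sequence that differs from every entry in the list.

```python
def diagonal(sequences):
    """Return a binary string differing from the n-th sequence at digit n."""
    return "".join(
        "1" if sequence[n] == "0" else "0"
        for n, sequence in enumerate(sequences)
    )

if __name__ == "__main__":
    # A hypothetical finite "enumeration", chosen only for illustration.
    listed = ["0000", "0101", "1111", "1010"]
    new = diagonal(listed)
    print(new)            # 1001
    print(new in listed)  # False
```

In Turing’s setting the interest lies in the step this sketch takes for granted: having a mechanical process that supplies the nth sequence in the first place.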

Dissatisfied with this proof (‘…it may leave the reader with the feeling that “there must be something wrong”…’ (Turing 1937)), Turing turns his attention to enumerating the ‘satisfactory’ machines (those which produce infinite streams of numbers), and shows that a machine which could enumerate the satisfactory machines is impossible. Simply put, such a machine would fall into an infinite regression when asked to test its own satisfactoriness (as it must at some point do). Thus, while the satisfactory machines are enumerable, they cannot be enumerated by finite means. Additionally, no program can tell whether another program is ‘satisfactory’, a very similar finding to that of the later Halting Problem: no program can tell whether another program will run forever or eventually stop.

Turing uses this result to show that there can be no program which can infallibly tell whether another program will print a particular symbol—if there were, we could combine these to show whether the machine could print any symbol infinitely, and this would be logically equivalent to telling if it were ‘satisfactory’ and so must be impossible.

Finally, he constructs a statement in mathematical logic equivalent to determining whether a machine ever prints a given symbol. Given that this determination is not possible, he has now produced a mathematical statement whose result cannot be determined, and thus has his counterexample: he has shown that ‘the Entscheidungsproblem cannot be solved’ (Turing 1937).

Turing, in showing that there could be no solution to the Entscheidungsproblem, demonstrated that it was impossible to devise an algorithm that would indicate whether a mathematical statement was provable from a set of axioms given a set of rules (in essence, whether it was true or false), given total knowledge within its domain. If the law is a formal system, that is, if a legal decision can be arrived at by processing a given set of axioms (the facts of a case) with a set of rules for their manipulation, then the law is subject to the Entscheidungsproblem. If the law is not a formal system (because the information required or the processing rules cannot be enumerated), then there can also be no decision process, and again we cannot predict the decision by algorithmic means. The legal system is partially formal in that some of the processing rules can be enumerated. Examples include whether eligibility requirements based on age, duration of employment, submission of relevant documents within the required timeframe etc. have been fulfilled. However, other areas of law cannot be enumerated so precisely. Questions such as whether a person is a trespasser for the purposes of the offence of burglary (Theft Act 1968, s.9) may require more nuanced arguments, because the delineation between where a person is legitimately entitled to be and where they exceed the bounds of the permission granted and become a trespasser may be subject to equally compelling arguments from both advocates. As Scrutton LJ explained in The Calgarth [1926] P. 93:

When you invite a person into your house to use the staircase you do not invite him to slide down the banisters.

Our contention in this article is that such a question is not one whose answer is computable in Turing’s terms, because the immediate context of the instant case cannot be predicted. Consider an individual who slides down the staircase on a tin tray. Is this conduct more analogous to the use of the staircase (because the mischief that the property owner seeks to prevent is injury caused by undue reliance on a rickety balustrade), or to the use of the banisters (because the mischief the property owner seeks to prevent is unruly and indecorous behaviour)? And is the quality of the staircase material to the decision? For a Turing machine to emulate another machine precisely, it must have identical configurations (i.e. legal rules) and input (i.e. facts, both ponderable and imponderable). If the facts of one case are even slightly different from the facts of another case, the output of the machine could be wildly different from that which was predicted: legal rules are such that ‘even slight differences in the facts of cases result in wildly disparate judicial outcomes’ (Scott 1993).

2 The Criminal Law Amendment Act 1885

Let us explain this with reference to a specific law. Given that the legislation under which Alan Turing was prosecuted for gross indecency in 1952 was s.11 of the Criminal Law Amendment Act (1885), this is the example we shall use. The m-configurations of the legal system can therefore be identified as incorporating the following:

- (a) The enactment of legislation by an authorised legislature.
- (b) Investigation by an authorised investigative authority.
- (c) Prosecution by an authorised prosecutor.
- (d) Hearing before a properly constituted court.
- (e) Verdict.
- (f) Sentence authorised by law.
- (g) Implementation of appeal process.

The operation of these states causes the legal system to operate according to certain behaviours whose results may be expressed as symbols: for example, Y and N can be used to express the result of each test. The legal system thus computes whether a person’s actions constitute a criminal offence, whether they should be prosecuted, whether they are found guilty and how they should be punished. Accordingly, in principle a person would only be found guilty of gross indecency if the elements of the offence are fulfilled, namely

- (a) that the defendant is a male person;
- (b) that the act is committed in public or private;
- (c) that an act of gross indecency is committed;
- (d) or the defendant is a party to an act of gross indecency;
- (e) or the defendant attempts to procure the commission of an act of gross indecency;
- (f) that the other party is a male person.

Thus, the legal process in this context contains many features of computability such as the finite range of possible decisions on evidence (admissible/inadmissible), a finite range of possible decisions on outcome (proved on the balance of probability or beyond reasonable doubt, or not thus proved) and the finite range of the possible means of redress (a fine or a period of imprisonment for example may be possible outcomes, but being placed in stocks for the purposes of public humiliation may not).
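Read purely as binary tests, the statutory elements listed above reduce to a short Boolean function. The sketch below is an illustration of that reduction only, and every name in it (`Facts`, `elements_fulfilled` and the field names) is hypothetical. It assumes that each element has already been answered Y or N, and so says nothing about how a court decides whether conduct is ‘grossly indecent’ in the first place.

```python
from dataclasses import dataclass

@dataclass
class Facts:
    """Y/N answers to the statutory tests; all field names are hypothetical."""
    defendant_is_male: bool
    other_party_is_male: bool
    committed_act: bool = False          # element (c)
    party_to_act: bool = False           # element (d)
    attempted_to_procure: bool = False   # element (e)

def elements_fulfilled(facts):
    """Combine the elements as the list does: (a) and (f), plus any of (c) to (e)."""
    conduct = (
        facts.committed_act
        or facts.party_to_act
        or facts.attempted_to_procure
    )
    # Element (b), "in public or private", is met by every location,
    # so it contributes no test of its own.
    return facts.defendant_is_male and facts.other_party_is_male and conduct

if __name__ == "__main__":
    print(elements_fulfilled(Facts(True, True, party_to_act=True)))   # True
    print(elements_fulfilled(Facts(True, False, committed_act=True))) # False
```

The function is trivially computable, but only because the difficult judgements have been supplied to it as ready-made inputs.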

If the binary configurations of the legislation were the whole of the law, it would be possible to conclude that the law is computable in accordance with Turing’s analysis. What this analysis overlooks however is twofold. Firstly, the m-configurations of the law cannot be explained in a sufficiently unambiguous way to allow it to be computable, much as McCulloch (1955) concluded in relation to the human brain and Turing-equivalent machines. Many of the decisions which make up legal ‘computation’ are hidden processes within the minds of human beings who are members of society, and these societal and personal decisions cannot be analysed with accuracy. A decision on criminal liability is attributable to far more than the statutory elements of an offence, as will be demonstrated later in this article.

Secondly, the outcome of litigation is influenced by so many factors outside the legislation that it is impossible to predict whether earlier conditions will be replicated in later cases. In essence, in order for the law to be computable, the tape must contain the whole of society. By using the analogy of an individual case as an input to a putative predictive legal Turing machine it becomes apparent that it is impossible to predict the outcome of a specific case: it is impossible to generate effectively the data required as the input to permit accurate prediction, and it is impossible to generate the description of the machine because of the ‘unknowable’ human factors involved in the processing.

In order to illustrate this proposition, we will explain how Alan Turing’s own experience of the law was determined with reference to a wide range of factors beyond the legislation, and that the timing of his arrest and prosecution came about as a result of a combination of factors that were not replicated either earlier or later. If R v Turing were to be encoded as a Turing machine, its outcome could not be predicted from the outcomes of similar machines encoded for previous cases, because no previous case precisely matched the circumstances of R v Turing in every respect.

These external influences may be categorised as

- (a) a wider conception of the legal system beyond the statutory rules of the legislation,
- (b) the political environment,
- (c) the social structure, and
- (d) Alan Turing’s personal circumstances.

The combination of all of these factors underpins the fact, as Weeks (1990) explains, that capture and conviction for criminal conduct are not solely attributable to the wording of statutes, and that the law, unlike a computable number, is not calculable by finite means. Thus, although the law aims to be an internally complete system, decidable only with reference to its own materials, external factors operate on its decision-making processes that obstruct the predictability of a legal outcome because it is not possible to know all the factors which should be added to the input. It is also not possible to know how these factors will affect the system’s decisions: thus, the m-configurations of the law in the previous cases may be subtly different from those in the later cases, in ways not reflected in statute or precedent, nor indeed in ways that are possible to predict.

3 Alan Turing and the law

In 1952, Alan Turing was convicted of six counts of gross indecency contrary to s.11 of the Criminal Law Amendment Act (1885), and was ordered to be given a course of hormone therapy as a condition of his probation order. Although s.11 had been in force for 67 years before Alan Turing’s conviction, and remained in force for some fifteen years afterwards, the period between 1948 and 1953 represents a high-water mark in terms of the political, legal and social factors that opposed homosexual behaviour. It was in 1885 that homosexuality was first criminalised. Earlier common law and legislation had criminalised buggery (Buggery Act 1533) and attempted buggery (Offences Against the Person Act 1861, s.62), but these had not been specifically homosexual offences, as both the pre-1533 common law and the statutes of 1533 and 1861 prohibited all anal sexual intercourse irrespective of the gender of the participants. On the other hand, the Criminal Law Amendment Act (1885), by virtue of s.11, specifically prohibited acts of gross indecency between men.

Despite this law having been in force for 82 years, the conviction statistics (HM Government 2015) show interesting patterns for the crimes of gross indecency, sexual assault, and buggery. Annual convictions for gross indecency did not exceed 300 until 1936. However, there was a significant spike (over 1000 convictions) between 1951 and 1955, and a second spike is seen between 1971 and 1984. Convictions for buggery follow a similar pattern. Only in 1929 do we see more than 100 convictions for buggery, but again this escalates from 1941 onwards, and again the high point is in 1954 followed by a subsequent diminution. The third crime is that of indecent assault against a male, and here again, there is a rapid increase in the rate of convictions seen from 1943 onwards and a high point is reached in 1951.

Alan Turing’s arrest in 1952 therefore occurred just at the point when convictions for homosexual offences reached their zenith. What this demonstrates is that the legislation (the configurations that compute whether a person has been grossly indecent) remains constant, yet the calculations in respect of liability vary. The variation, we submit, arises from the effects of politics, and the effects of societal and personal factors on the decision-making powers of the courts. Although the law remained constant for nearly 100 years, the irregularity in the pattern of conviction statistics demonstrates that the likelihood of being pursued and thereafter found guilty is not possible to predict. As such, while the configurations of the legislation remain constant, the data upon which they act is subtly different due to the wide-ranging external effects described above, and thus it is impossible to predict whether a later case would follow an earlier one. What follows therefore is an exploration of the range of factors that caused the early 1950s to be a period where the risk of being convicted of a homosexual offence was much greater than it had been earlier, and also much greater than it was to be later in the decade, and why these factors inhibit the capacity of the law to be computable.

4 The legal influences that led to Alan Turing’s conviction

Three legal factors beyond the legislation may be identified as creating a situation in the early 1950s where homosexuality was more likely to lead to a criminal conviction. The first of these was the police, the second was the courts and the third was the expansion of probation as an alternative to imprisonment.

During the early 1950s the police were investigating homosexual offences more frequently (Report of the Departmental Committee on Homosexual Offences and Prostitution para. 33), and s.11 of the Criminal Law Amendment Act (1885) provided the police with an opportunity to pursue an offence that, unlike buggery, was easy to prosecute but difficult to defend. Buggery was difficult to prove because, in many cases, only the parties involved could provide the necessary evidence of the offence having taken place, and where the activity was consensual, such evidence was unlikely to be forthcoming. Furthermore, the prosecution had to prove that a specific activity and specific conduct (i.e. anal sexual intercourse) had taken place.

Gross indecency on the other hand was a much more nebulous offence. Any type of conduct could be construed as being grossly indecent and this included acts undertaken in private. Furthermore, gross indecency did not even require physical contact to have taken place between the participants, as the case of R v Hunt [1950] 2 All ER 291 confirms, where all that was required was that the conduct was such that it could be regarded as indecent in the perception of a hypothetical beholder, as Lord Goddard CJ further explains in Hunt:

If a third person had walked into this shed, he would have seen the most shocking indecency between the appellants.

This meant that the courts could hypothesise about the sort of person who could find the defendants’ conduct to be grossly indecent, and it would be very difficult for the defendants to argue that such a person did not exist.

The availability of a range of similar offences also meant that the police had the scope to charge a defendant with multiple offences (buggery, indecent assault and gross indecency, as well as their inchoate equivalents of attempt and procurement), and then to persuade the defendant to plead guilty to the lesser charge of gross indecency if the more serious charge were to be withdrawn (Westwood 1960). This type of bargaining was particularly attractive to the police in the late 1940s and early 1950s because arrest rates by the police began to be monitored and austerity meant that police forces were keen to justify their continued existence (Higgins 1996). Accordingly, behaviours that could constitute multiple criminal offences were investigated more extensively than had been the case in previous years, and offences that did not require any specific conduct were particularly attractive to pursue. Gross indecency was one such offence, particularly as each grossly indecent act was the subject of four separate charges. Accordingly, when Alan Turing reported the burglary of his home to the police, the police were able to press charges for 12 counts of gross indecency on the basis of 3 encounters between Turing and his lover, Arnold Murray. The pressure on the police to increase arrest rates introduced an additional configuration into the legal process that could not have been predicted by the Criminal Law Amendment Act (1885).

The second influential factor outside the legislation, but within the parameters of the legal process, was the courts. Within the court process, there was a high degree of circularity to the prosecution of homosexual offences. More prosecutions meant a greater number of guilty pleas, partly because the police encouraged defendants to plead guilty to a lesser charge (gross indecency rather than buggery), and partly because legal representatives sought to minimise the impact of a conviction by using the guilty plea as a basis for arguing in favour of a lower sentence. Once medical treatment was permitted as an alternative to a criminal conviction (as a result of the Criminal Justice Act 1948), more men were advised to plead guilty at the earliest opportunity, and to accept treatment as an alternative to imprisonment. This then justified the continued reliance on gross indecency as the preferred charge by prosecutors, because there was a greater likelihood of securing a conviction.

The attitudes of the courts towards the punishment of offenders were also influential. The expansion of probation (Green 2014) as an alternative to imprisonment had significant consequences for homosexual defendants, although these may be viewed as both positive and negative in their effects. Although a probation order had the positive effects of allowing homosexual men to avoid imprisonment, and thus to maintain their connection with their family and to remain in employment, it also meant that the courts were more willing to return a guilty verdict (Arnot and Usborne 2002) because the consequences of a guilty verdict were less severe.

Also, because homosexuality was perceived at the time as having a medical cause, a guilty verdict could be justified more easily because it enabled those who were, by the nature of the offence they had committed, classified as ‘ill’ to have access to medical treatment. From 1948 onwards, the combination of the existence of the criminal offence of gross indecency and the availability of a probation order introduced new configurations to the legal system: there was a viable and attractive alternative to imprisonment that made a guilty verdict seem less harsh and therefore more willingly contemplated than might have been the case earlier in the 1940s, when imprisonment was the only possible sentence. This means that the experiences of homosexual defendants after 1948 cannot be predicted with reference to what occurred previously. The availability of alternative sentences dilutes the predictability of the courts’ decision-making process, as harsher punishments may encourage more robust defences, while more lenient outcomes may encourage a greater willingness to plead guilty but thereafter to present pleas in mitigation. Again, these inputs into the legal process change the behaviour of the law machine and cause it, potentially at least, to behave differently from that which has been encountered earlier.

This lack of predictability presumes that, at the very least, the courts are applying the law correctly and consistently within these new parameters. However, even within the context of a legal system where homosexual men were more likely to be caught and thereafter more likely to be found guilty of gross indecency, Alan Turing’s experiences do not appear to be something that could have been predicted from the behaviour of Knutsford Assizes at the time. Turing (2015) explains, for example, that others convicted of the same offence on the same day were treated far less harshly than Alan Turing, with sentences ranging from a £15 fine to a 3-year period of probation. Significantly, Alan Turing was sentenced far more harshly than his co-defendant Arnold Murray, who was given a conditional discharge, despite the fact that Murray was also being sentenced for the offence of theft.

Dermot Turing’s view on this (Turing 2015) is that the Knutsford Assizes erred on the law applicable in relation to the orders available to the court. In Alan Turing’s case, a probation order was imposed under s.3 of the Criminal Justice Act (1948), a provision which specified that the order could also require the defendant to comply with any specific conditions considered necessary in order to ensure the defendant’s good conduct. However, s.3 makes no specific reference to medical treatment as being one of those conditions. On the other hand, s.4 of the 1948 Act does refer to a requirement to undergo treatment, but specifies that medical treatment may only be ordered for a mental condition, and it was as a result of s.4, not s.3 that oestrogen injections were administered to Alan Turing. However, no evidence had been put to the court that Alan Turing had any form of mental disorder. Dermot Turing concludes that the court should not have made an order for treatment because it had not established that a mental condition that would justify such a treatment existed. It is doubtful that this argument would have succeeded if Turing’s conviction had been the subject of an appeal because it is possible that no medical evidence needed to be adduced regarding any mental condition because the World Health Organisation had classified homosexuality as a mental illness in 1948 (World Health Organisation 1968) and therefore there was no need for this to be proved specifically in individual cases. Nevertheless, the principle that the predictability of the law’s configurations may be undermined by a misunderstanding of the law is a further significant restriction on the computability of the law. A computer cannot compute the possibility that the law may, legitimately or otherwise, be interpreted in a different manner from that which is expected, or from that which occurred in another case, decided by a different judge.

5 The political influences that led to Alan Turing’s conviction

In addition to the decision-making processes (the m-configurations) of the law, however, Alan Turing’s capture and conviction were attributable as much to the influences of politics as to the legal system, and the political landscape of the early 1950s affected both the processes by which guilt or innocence were established and the hidden societal and personal data upon which those processes acted, just as much as the law did.

The early 1950s saw the birth of the welfare state, but with it came the rhetoric of selflessness, of making sacrifices for the greater good, and of working together to build a better world (Higgins 1996). The Wildean stereotype of the flamboyantly dressed homosexual (Hornsey 2010) was demonised as being a frivolous spendthrift who was more concerned with his own appearance than with contributing to the common purse (David 1997). Not all homosexuals were aesthetes of course, and beauty does not preclude generosity, but the popular logic was that all homosexuals are aesthetes, and all aesthetes are selfish, therefore all homosexuals are selfish (Higgins 1996).

Society was also more restrictive than it had been 10 years earlier or than it would be 10 years later. This was compounded by the fact that marriage and family values were social priorities and the unmarried man was seen as a threat to this ideal (David 1997), especially as political rhetoric also sought to connect homosexuality and paedophilia in people’s minds (Higgins 1996). In truth, homosexuality was probably no more or less prevalent in the 1950s than it had been at any other time, but presenting it as a threat to a social order that had been fragmented by wartime separations and deaths meant that the first half of the 1950s was a period when the association of homosexuality and ‘badness’ played on people’s fears about fragmented families and moral collapse. Politicians therefore felt increasingly justified in being seen to be doing something about a perceived problem.

The personalities in politics at the time were also relevant. Sir David Maxwell Fife was the Home Secretary, Sir John Nott-Bower was the Commissioner of the Metropolitan Police, and Theobald Mathew was the Director of Public Prosecutions. Maxwell-Fife is on record as stating that he was greatly concerned there had been a ‘serious increase’ in homosexual offences since the war (Maxwell-Fife 1954), while Nott-Bower was Commissioner at a time when the Metropolitan Police were using agents provocateurs in order to entrap men into soliciting (Hyde 1970). Mathew, meanwhile, was very vocal in his objection to homosexuality, and Higgins (1996) explains that there was a sharp increase in the number of prosecutions instigated by the office of the Director of Public Prosecutions for offences involving homosexuality during Mathew’s tenure in that role. This meant that there was a very powerful homophobic vein in the very sections of the administration that could implement harsh policies to pursue and punish homosexuals (McGhee 2001). According to David (1997), Maxwell-Fife, for example, was responsible for bringing about the resignation of William Field MP after he had been found guilty of importuning, and is also suspected to have been instrumental in encouraging the police to prosecute both the writer Rupert Croft-Cooke and the actor John Gielgud (Vincent 2014). Yet personalities in power at a given time are like shifting sands: those in power during one administration are on the opposition benches in the next, and therefore the attitudes of one tranche of politicians may have very little influence once there has been a change of Government or even a Cabinet reshuffle. The consequence is that it is impossible to measure whose influence is stamped on policing and judicial policy at any given time.

Transgressions of the law that may have been dismissed as unimportant during the tenure of one Government are magnified and problematized in another, and in 1952, one such problem was homosexuality. One specific reason for the characterisation of homosexuality as a societal problem was that, by 1952, being a homosexual was perceived as a risk to state security, and therefore a homosexual man working for the secret services, as Alan Turing did, was particularly vulnerable to being removed from a position where he might be privy to State secrets.

The Criminal Law Amendment Act (1885) had long been regarded as a blackmailer’s charter (Higgins 1996), but the events of 1951 had compounded this. After the spies Guy Burgess and Donald Maclean defected to the Soviet Union, their lifestyles, including Burgess’s homosexuality, were luridly described in the press (David 1997), and as a result the term ‘homosexual’ came to be perceived as a euphemism for traitor. It was suggested at the time that the Burgess and Maclean scandal caused the British Government to institute a purge of homosexuals from strategically significant roles, which were therefore vulnerable to attempts by enemy organisations to expose weaknesses that could lead to the disclosure of classified information (David 1997). Weeks (1990) further comments that the US State Department had ‘already conducted a purge on homosexuals in its own echelons’ and argues that it is conceivable that this may have influenced the UK Government. If this perception is accurate, then Alan Turing would have been a particular target, not only because of his work with the British secret services, but also because of his work with the American civil service, and there would have been a very strong incentive to remove him from Government work if his sexuality (which Turing was not reticent about disclosing to those around him) was a perceived threat.

Although Turing (2015) considers the possibility of a threat to state security to have been unlikely to have influenced the Manchester police at the time of his arrest, it is significant nevertheless that there was a volte-face in the court’s attitude between Turing’s committal on February 27 and the trial on March 31. At the committal proceedings, Turing was bailed, while his co-defendant Arnold Murray was remanded in custody. However, at trial, it was Turing who was more harshly treated, while Murray was released. Furthermore, the circumstances of his capture and arrest also have some curious features that suggest that it is at least possible that his capture is attributable to something more than misfortune. His office at the University of Manchester had been broken into a short time before the burglary of his home (Turing 1952), suggesting that this was not the work of an opportunistic thief. The very personal character of the items stolen from Turing’s home (2 medals, 3 clocks, 2 shavers, 2 pairs of shoes, 1 compass, 1 watch, 1 suitcase, 1 part bottle of sherry, 1 shirt, 1 pullover and 1 case of fish knives and forks) (Turing 2015) also suggests that the burglary was undertaken in order to intimidate its victim rather than for financial gain. There is of course no way of knowing what either the Manchester police or the Knutsford Assizes knew of such sensitive information concerning Alan Turing’s work, and therefore all this article is able to claim is that the possibility of their having been influenced by fears about State security cannot be entirely discounted.

In the political landscape of the early 1950s, therefore, the homosexual man who undertook confidential work for the Government was at a far greater risk than he would have been five years earlier or even five years later. By 1957, the Wolfenden Committee’s recommendations had made it acceptable to express the view that the private conduct of two individuals was not the business of the criminal courts, and that homosexuality should be decriminalised (Report of the Departmental Committee on Homosexual Offences and Prostitution 1957). The other factors that had caused the early 1950s to be a perfect storm, such as the defections of Burgess and Maclean and the influence of personalities such as Maxwell-Fife and Nott-Bower, had diminished in importance. Thus, although the legislation remained constant, the role of politics and political personalities introduces significant elements of inconsistency into the legal process, making it incapable of being computed and incomparable with other similarly configured instances of legal intervention. In a Turing machine, this is the equivalent of introducing changes to the tape.

6 The societal influences that led to Alan Turing’s conviction

Outside the spheres of law and politics, a number of societal influences were also significant in terms of increasing the likelihood that a homosexual man would be caught and punished for his homosexuality. Firstly, the medicalisation of homosexuality meant that from the 1940s onwards several different medical and therapeutic approaches were developed which purportedly cured homosexuality—or promised at least to enable patients better to cope with (i.e. to conceal) their sexuality. As the belief that homosexuality had a medical cause became more prevalent, further research, including the work of Glass et al. (1940), sought to demonstrate that it could also be cured by medicine. The perception of homosexuality as having an underlying medical cause is likely to have resulted in more guilty pleas by those who believed that being cured would improve their quality of life, more pleas in mitigation by legal representatives expressing the willingness of the defendant to undergo medical treatment (as in Alan Turing’s case) and possibly a greater willingness on the part of the courts to convict because it would facilitate access to treatment. The medicalisation of homosexuality in the 1950s was something that the legislation of 1885 could not have predicted, and therefore the behaviour of the legal system in 1952 cannot be predicted with reference to its behaviour prior to this date, again demonstrating that the configurations of the legal rules do not represent the totality of the factors in judicial decision-making.

This unpredictability is further manifested by the fact that, by 1955, the medical profession was beginning to doubt its assertions that homosexuality could be cured (Davidson 2009). Doctors became concerned about the consequences of hormone injections and began to view their use as extremely damaging (Evidence of Drs Inch and Boyd to the Wolfenden Committee PRO HO345/15 HP Trans 4). Furthermore, many argued that psychoanalysis was less successful than had been claimed because those who were treated had been very carefully selected to create a positive feedback loop. It was also possible, indeed probable, that some of those who had been treated claimed that the treatment had been successful in order to ensure that the treatments were discontinued (Westwood 1960).

The second societal factor was the press. The press fuelled public antipathy towards homosexuality, and its reporting practices at the time comprised a curious combination of silence and scurrility. Higgins (1996) comments that some newspapers did not report trials for gross indecency, claiming that their readers would find them distasteful. Others were more willing to portray homosexuality as a growing problem (HC Deb 03 November 1949, vol. 469 cc577–9) and were using the criminal convictions statistics for gross indecency to substantiate those claims. At the same time, the more scandal-focused sections of the press provided more lurid accounts of prosecutions (Mort 1988) for gross indecency, with the result that some readers were being presented with a narrative of homosexuality as vice-ridden immorality while others were being influenced by a more middle-class sense of distaste that was fuelled by the suspicion that vice was taking place (because it was being reported in ‘other’ newspapers) but that it must be extremely sordid because it was not being reported in ‘the sort of newspaper they read’ (Lewis 2013).

An appropriate analogy here may be the case of R v Penguin Books [1961] Crim LR 176 where the concern of the prosecution centred around the impact of D.H. Lawrence’s novel Lady Chatterley’s Lover on more impressionable members of society—the question was not whether the book would deprave and corrupt the jurors themselves, but rather whether it would deprave or corrupt one’s servants.

That homosexuality was a problem (Altman 1972) that needed to be solved was a strong element of public and political rhetoric, with the perceptions of the one influencing the behaviour of the other. A strong emphasis was placed on identifying instances of homosexuality among those who worked with children, thus fostering the perception that homosexuality and paedophilia were synonymous (Higgins 1996: 176), and the nomenclature of the Wolfenden Committee (Report of the Departmental Committee on Homosexual Offences and Prostitution) also caused the public to conflate homosexuality and prostitution. Public opinion, despite being diverse, also has a tendency to converge on the same conclusion despite applying different processes. Those who opposed homosexuality argued that those practising homosexuality should be punished pour decourager les autres. On the other hand, those who had no objection to homosexuality per se concluded, reluctantly, that perhaps it should be discouraged because of the impact that the prejudices of others were likely to have upon a person’s social status and employment prospects.

The symbiosis of these factors in the early 1950s is something whose impact on legal decision-making cannot be quantified. It is possible that these factors may not have influenced some courts at all, but their impact on other judges in other cases may have been highly significant. Furthermore, the individuals within the legal process may be unaware of the extent to which they are influenced by social mores, whose influence may be something that judges and juries do not consciously recognise. Again, this points to the law being something that does not have identifiable patterns of behaviour, and to legal decision-making not having precisely identifiable boundaries, thus rendering its outcomes less predictable than might be anticipated.

7 The individual influences on Alan Turing and the law

The viewpoints held by those who are liable to influence a defendant are also indicative factors, as is the character and presentation of the defendant himself. In Alan Turing’s case, his friends had advised him to plead not guilty, on the basis that a court would be unlikely to convict (Turing 2015). However, his family’s advice favoured pleading guilty on the basis that the sentence would be lower and that the court would be more likely to accept a plea in mitigation.

The differences in age and social class between Turing and his co-defendant, Arnold Murray, are also likely to have been persuasive factors in the case. The former was a 39-year-old university reader, while the latter was 19 years old (and therefore below the age of majority) and worked as a photo-printer when the offence took place. Turing attempted to protect Murray and therefore attempted to mislead the police in order to conceal his suspicions regarding Murray’s involvement (Hodges 1983). A defendant with different influences and a different set of aggravating or mitigating factors may have been treated very differently by the courts, as the extent to which the defendant elicits a court’s understanding and sympathy may also be a stronger influence on culpability than is imagined by the configurations of legislation. Again, we see how the law’s configurations, while they may be static, are often markedly affected by external factors beyond the bare facts of the case, and how the application of the same law may lead to very different outcomes.

8 Is the law computable?

Alan Turing’s experiences of the legal system in the early 1950s came about because of a complex multiplicity of factors, coming together at one period in time. That period can be pinpointed even more narrowly to the early spring of 1952, in that at no other period in the entire history of England would an indictment for the offence of gross indecency have had to be hastily amended to indicate that the prosecuting authority was no longer The King, but was rather The Queen. This is particularly unfortunate because ‘Queen’ or ‘Quean’ was used at the time as a derogatory term for a homosexual man. This misfortune would only have befallen a person accused of gross indecency from the date of Elizabeth II’s accession until a time, shortly afterwards, when the standard forms would have been reprinted. Elizabeth II acceded to the throne on February 6th 1952. On that very day, Alan Turing was arrested for the criminal offence of gross indecency, and his indictment does indeed have the words ‘The King’ crossed out, and the words ‘The Queen’ handwritten in their place. The social, legal and political factors that made the early 1950s a period when the risk of capture and punishment for homosexuality was particularly great are therefore compounded further in the spring of 1952.

What this demonstrates about the computability of the law is that a decision regarding liability or otherwise cannot be predicted solely with reference to the formulae of legislation. Both the data and the processing—the input to the machine and the description of the machine itself—are constantly changing and largely unknowable, incorporating the societal and personal backgrounds of every individual involved in each case. Accordingly, the law is a machine that is touted as being axiomatic, in that a person can only be punished for conduct that is contrary to the law, but the law’s processes involve the operation of factors outside the “formal system” of the legislation itself. Therefore, during some periods in history, that which is contrary to statute may have little impact on whether a person is caught and punished, in that if there is no political, legal or social imperative to prosecute, conduct that is criminal according to the legislation goes undetected and unpunished. During other periods, efforts to bring an individual’s conduct within the sphere of criminality are intensified. Accordingly, after the Sexual Offences Act (1967) decriminalised homosexual acts committed in private, the conviction rate increased as the police and the courts defined ‘in private’ very narrowly with any acts committed where third parties were likely to be present being regarded as contrary to the law. Even though the intention of the 1967 Act therefore was to legitimise homosexuality, the narrow interpretation of the statute meant that homosexual men were in even greater danger of being prosecuted for gross indecency. For example, in the case of R v Knuller (Publishing, Printing and Promotions) [1972] QB 179, Fenton-Atkinson LJ concluded (p. 187) that:

Even if those services included what the defendants refer to as flag and perv, and the fact that Parliament has now said that acts of this kind between adults in private shall not be a crime does not carry with it, in our view, the consequence that such conduct may not be calculated to corrupt public morals.

We conclude therefore by asking, with reference to Turing’s own experience, whether the law is computable. Is it possible to predict whether the legal system will answer YES, and thus criminalise the individual, or will it answer NO? What is demonstrated by Alan Turing’s experience of the law is that the law’s m-configurations and the facts of the case do not predict whether a person will be pursued in respect of a criminal offence or not. Although we may predict that law, politics and social mores may influence the outcome of certain legal processes, what cannot be predicted is the extent and character of these influences at any one time. Despite the appearance of certainty and consistency, even if the configurations of the law were to remain constant, the actions of those configurations would differ due to the multitude of factors which need to be considered as part of the law’s “input” beyond the bare facts of the case, and it is not therefore possible to conclude that like cases will behave in a like manner, just as we cannot predict the output of a given Turing machine. The core reason for this is that the law is not contained solely within its written parameters. It is influenced by a myriad of shifting external factors. Thus, a written law may be relied upon very extensively in some contexts in order to deter particular conduct or to demonstrate that particular conduct is a growing problem, or, conversely, in other contexts, it may fall into disuse. It may also be subject to shifting patterns in terms of the extent to which it is regarded as an accurate barometer of public morality, or a catalyst for change by being used to emphasise that the law is outdated and unjust. Furthermore, it is impossible to predict whether, given similar conditions, a court will arrive at the same conclusion.

This lack of consistency, as demonstrated by Alan Turing’s experience of the law, leads us to two important conclusions: one pertaining to the nature of the law and the other relating to the limitations of artificial intelligence in legal reasoning.

The hypothesis of law is that it should be certain: certainty is identified as one of the principles of European Law (Case C-158/07 Förster v Hoofddirectie van de Informatie Beheer Groep [2008] ECR I-8507), while the three certainties are essential elements in the creation of a valid trust (Knight v Knight 1840, 3 Beav 148). The prohibition under Article 7 of the European Convention on Human Rights and Fundamental Freedoms (1950) against retrospective criminality would also appear to be a central tenet of the rule of law. However, Alan Turing’s experience of the legal process demonstrates that although the law under which he was prosecuted predicted his act of criminality, the question of whether that law would be used was decided retrospectively. In essence, although the possibility of being prosecuted for acts of gross indecency is prospective, the circumstances whereby actually being prosecuted becomes a likelihood are decided after the legislation has come into force and after the transgressive act has been committed. Our first conclusion then is that law is not computable because its outcomes cannot be calculated by finite means. The reason is that the inputs change in a manner that cannot be predicted, and these configurations (these decision processes) may rely on data which cannot be finitely encoded.

The second important point that Alan Turing’s experience of the law shows us is the limitations of artificial intelligence in relation to legal reasoning, which arise partly because there is a tendency to conflate ‘artificial intelligence’ with ‘the use of computers.’ In the latter situation, although electronic processes may be implemented to undertake a task that would be painstaking and time-consuming for an individual human, the computer is not displaying intelligent behaviour. Much work has been done on the use of computers to identify patterns (Walton 2010) in terms of similar facts and on predicting outcomes of cases through the use of artificial intelligence (Unwin 2008). Work has also been undertaken on designing robot lawyers that will undertake legal research and identify salient case precedents (Carey 2013) and give legal advice based on the information given (Al Abdulkarim 2016). However, the machine is not ‘thinking’ in the sense that Alan Turing conceives of in his other most famous work, ‘Computing Machinery and Intelligence’ (Turing 1950); in other words, it is not emulating the thinking behaviour of humans. Computerised legal advice operates either by responding to its programming (El Jelali et al. 2015; Dalke 2013) or by learning to identify patterns (Le et al. 2015) in terms of word usage and frequency, identifying factual similarities (Dalke 2013) and explaining probabilities (Franklin 2012).

Accordingly, a computer may be programmed to prompt a user to respond to a series of questions, and can give advice on liability, or the availability of a claim, based on the responses given. It was soon discovered, however, that although this works well with straightforward binary tests, where a yes/no answer may be given (Sergot et al. 1986), it is less capable of dealing with more complex issues where several pieces of legislation must be referred to (Bench-Capon et al. 1987). Despite significant advances having been made in the scope for computers to apply legislation through the use of ontologies (Van Kralingen et al. 1999), Al Abdulkarim (2016) comments that much of the work on artificial intelligence and the law has focused on ‘rule based systems, based on formalisations of legislation of some kind’ and explains that computers have been less adept at recognising less direct connections, such as inferential knowledge. However, more “advanced” forms of artificial intelligence with more powerful inferential techniques may be able to identify trends in legal decision-making and changing patterns in terms of offences that come before the courts. Such artificial intelligence may be able to track sentencing patterns and to identify common factors in offences (Aleven 1997) that receive punishments at the upper boundaries of the courts’ sentencing powers.

Alan Turing’s experience of the law demonstrates to us that the law is influenced far more extensively by what is not known and by what is not predictable than lawyers imagine. If we return therefore to the requirements under s.11 of the Criminal Law Amendment Act (1885), a computer could, on the basis of Alan Turing having answered all the questions pertaining to the elements of the offence in the affirmative, predict that Alan Turing would be guilty of gross indecency. The computational process could be configured along the following pattern:

COMPUTER: Are you a male person?

ALAN: Yes. I am.

COMPUTER: Was the act conducted in public or in private?

ALAN: Yes it was.

COMPUTER: Was an act of gross indecency committed?

ALAN: Yes.

COMPUTER: Were you party to an act of gross indecency?

ALAN: Yes.

COMPUTER: Did you attempt to procure the commission of an act of gross indecency?

ALAN: Yes.

COMPUTER: Was the other party a male person?

ALAN: Yes.

COMPUTER: Then you are guilty of an offence under s.11 of the Criminal Law Amendment Act (1885).
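
The question-and-answer pattern above can be sketched in a few lines of Python. This is an illustrative sketch only, not a model of any system discussed in the literature. The names QUESTIONS, ALAN_ANSWERS, is_affirmative and predict_liability are hypothetical, and the sketch assumes, following the dialogue rather than the full wording of the statute, that every question is a required element and that any answer beginning with "yes" counts as affirmative.

```python
from typing import Iterable

# Questions copied from the dialogue above. Treating all of them as
# required elements is an assumption made for illustration.
QUESTIONS = [
    "Are you a male person?",
    "Was the act conducted in public or in private?",
    "Was an act of gross indecency committed?",
    "Were you party to an act of gross indecency?",
    "Did you attempt to procure the commission of an act of gross indecency?",
    "Was the other party a male person?",
]

# The answers given in the dialogue, in the same order as QUESTIONS.
ALAN_ANSWERS = ["Yes. I am.", "Yes it was.", "Yes.", "Yes.", "Yes.", "Yes."]


def is_affirmative(answer: str) -> bool:
    """Treat any answer that begins with 'yes' (ignoring case) as affirmative."""
    return answer.strip().lower().startswith("yes")


def predict_liability(answers: Iterable[str]) -> bool:
    """Return True only if every question receives an affirmative answer."""
    answers = list(answers)
    if len(answers) != len(QUESTIONS):
        raise ValueError("one answer is required per question")
    return all(is_affirmative(a) for a in answers)


if __name__ == "__main__":
    print(predict_liability(ALAN_ANSWERS))  # prints True
```

Run on the answers given above, predict_liability returns True, which corresponds to the computer's final statement in the dialogue.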

However, as this article has demonstrated, this was not the problematic issue in the case of R. v Turing and Murray. That Alan Turing was factually guilty of the offence was never greatly in dispute. What was not predictable was the fact that this offence would be pursued so extensively, and that Turing would be found guilty so readily because a non-custodial sentence and the opportunity to provide treatment were available to the courts. Thus, we conclude that the law machine cannot learn by the law’s examples because what the law is does not emanate solely from the law’s texts. Even when the law has a vast number of examples to draw upon, its behaviour will not be consistent. We conclude then that the law machine cannot be programmed because ultimately it is never the same machine. We cannot build a machine to emulate it, because we cannot know the machine and the data upon which it acts.

9 Conclusion

This article was inspired by a visit to Bletchley Park in 2011, and by the juxtaposition within that museum of Alan Turing’s life and his work. In his life, his sexuality, and the response of the legal system in giving female hormones to a male, problematised the question of ‘what is the difference between a man and a woman?’ In his work, the question of whether a machine can think is also framed in terms of asking whether one is able to differentiate between a man and a woman. In this respect, Turing’s life is in his work and Turing’s work is in his life. What this article demonstrates is that the same interconnectedness of life and work is encountered when we juxtapose Alan Turing’s experience of the law with his investigations into what is computable. For the lawyer, Alan Turing’s experiences of the law demonstrate to us that what the law states is not the whole of what the law does. For the computer scientist, analysing Alan Turing the mathematician alongside Alan Turing the homosexual shows us that artificial intelligence cannot necessarily solve the problems of the law. Law is not a computable number. The law’s Entscheidungsproblem cannot be solved. The Alan Turing Problem explains it.

References

Aamodt A, Plaza E (1994) Case-based reasoning: foundational issues, methodological variations, and system approaches. AI Commun 7(1):39–59

Al Abdulkarim L (2016) A methodology for designing systems to reason with legal cases using abstract dialectical frameworks. Artif Intell Law 24(1):1

Aleven V (1997) Teaching case-based argumentation through a model and examples. Ph.D. thesis, University of Pittsburgh

Aleven V, Ashley KD (1997) Teaching case-based argumentation through a model and examples empirical evaluation of an intelligent learning environment. Artif Intell Educ 39:87–94

Altman D (1972) Homosexual: oppression and liberation. Angus and Robertson, London

Arnot M, Usborne C (2002) Gender and crime in modern Europe. Routledge, Abingdon

Ashley KD, Brüninghaus S (2009) Automatically classifying case texts and predicting outcomes. Artif Intell Law 17(2):125–165

Bench-Capon TJM, Robinson GO, Routen T, Sergot MJ (1987) Logic programming for large scale applications in law: a formalisation of supplementary benefit legislation. In: Proceedings of the first international conference on artificial intelligence and law, pp 190–198

Berman D, Hafner C (1987) Indeterminacy: a challenge to logic-based models of legal reasoning. Yearbook of Law Comput Technol 3:1–35

Buggery Act 1533 (25 Hen. 8 c. 6)

Cantor G (1891) Ueber eine elementare Frage der Mannigfaltigkeitslehre. Jahresbericht der Deutschen Mathematiker-Vereinigung 1890–1891

Carey M (2013) Holdings about holdings: modelling contradictions in judicial precedent. Artif Intell Law 21(3):341

Case C-158/07 Förster v Hoofddirectie van de Informatie Beheer Groep [2008] ECR I-8507

Church A (1936) An unsolvable problem of elementary number theory. Am J Math 58:345

Copeland B (1996) The Church–Turing Thesis. In: Zalta E (ed) Stanford encyclopedia of philosophy. Stanford University, Stanford

Criminal Justice Act 1948 (1948 c.58)

Criminal Law Amendment Act 1885 (48 & 49 Vict c.69)

Dalke DL (2013) Can computers replace lawyers, mediators and judges? The Advocate 71(5):703

David H (1997) On Queer Street. Harper Collins, London

Davidson R (2009) Law, medicine and the treatment of homosexual offenders in Scotland 1950–1980. In: Goold WE, Kelly C (eds) Lawyer’s medicine: the legislature, the courts and medical practice 1760–2000. Hart Publishing, Oxford, p 125

El Jelali S, Fersini E, Messina E (2015) Legal retrieval as support to eMediation: matching disputant’s case and court decisions. Artif Intell Law 23:1

European Convention on Human Rights and Fundamental Freedoms 1950

Franklin J (2012) How much of common sense and legal reasoning is formalizable: a review of conceptual obstacles. Law Probab Risk 11:225

Glass SJ, Deuel HJ, Wright CA (1940) Sex hormone studies in male homosexuality. Endocrinology 26(4):590

Green S (2014) Crime, community and morality. Routledge, Abingdon

Gödel K (1931) Über formal unentscheidbare Sätze der Principia Mathematica und verwandter Systeme I. Monatshefte Math Phys 38(1):173

HM Government (2015) A summary of recorded crime data from 1898 to 2001/02. https://www.gov.uk/government/statistics/historical-crime-data. Accessed 23 Sept 2015

Hafner C, Berman D (2002) The role of context in case-based legal reasoning: teleological, temporal, and procedural. Artif Intell Law 10(1):19

Hansard HC Deb 03 November 1949 vol. 469 cc577–9

Higgins P (1996) Heterosexual dictatorship. Fourth Estate, London, p 159

Hilbert D (1967) The foundations of mathematics. In: Heijenoort V (ed) From Frege to Gödel: a sourcebook on mathematical logic 1879–1931. Harvard University Press, Cumberland, p 475

Hodges A (1983) Alan Turing: The Enigma. Vintage, London

Hohfeld W (1913) Fundamental legal conceptions as applied in judicial reasoning. Yale Law J 23:16

Hornsey R (2010) The Spiv and The Architect: Unruly life in Post-war London. Regents University of Minnesota, Minneapolis

Huws CF (2014) Command Theory, Control and Computing: A Playwright’s Perspective on Alan Turing and the Law. Liverpool Law Rev 35(1):7–23

Hyde HM (1970) The other love: an historical and contemporary survey of homosexuality in Britain. Heinemann, London

Knight v Knight (1840) 3 Beav 148

Le TTN, Shirai K, Nguyen ML, Shimazu A (2015) Extracting indices from Japanese legal documents. Artif Intell Law 23(4):315–344

Lewis B (2013) British Queer history: new approaches and perspectives. Manchester University Press, Manchester

Liebwald D (2015) On transparent law; good legislation and accessibility to legal information: towards an integrated information system. Artif Intell Law 23(3):301

Loui RP (2016) From Berman and Hafner’s teleological context to Baude and Sachs’ interpretive defaults: an ontological challenge for the next decades of AI and Law. Artif Intell Law 24(4):371

Maxwell-Fyfe D (1954) Sexual Offences, Cabinet Office Record, 17 February 1954, PRO: CAB 129/66

McCulloch WS (1955) Mysterium Iniquitatis of sinful man aspiring into the place of God. Sci Mon 80(1):36

McGhee D (2001) Homosexuality, law and resistance. Routledge, Abingdon

Mort F (1988) Cityscapes: consumption, masculinities, and the mapping of London. Urban Stud 35:5–6

Offences Against the Person Act 1861 (24 & 25 Vict. c.100)

Petzold C (2008) The annotated Turing: a guided tour through Alan Turing’s historic paper on computability and the Turing machine. Wiley, Indianapolis

Prakken H, Sartor G (1996) Modelling reasoning with precedents in a formal dialogue game. Artif Intell Law 6(2):127

R v Hunt [1950] 2 All ER 291

R v Knuller (Publishing, Printing and Promotions) [1972] QB 179

R v Penguin Books [1961] Crim LR 176

Report of the Departmental Committee on Homosexual Offences and Prostitution. London; HMSO, 1957

Scott RE (1993) Chaos theory and the justice paradox. William Mary Law Rev 35:329

Sergot MJ, Sadri F, Kowalski RA, Kriwaczek F, Hammond P, Cory HT (1986) The British nationality act as a logic program. Commun ACM 29(5):370–386

Sexual Offences Act 1967 (1967 c.60)

Simmons K (1993) Universality and the liar: an essay on truth and the diagonal argument. Cambridge University Press, Cambridge

The Calgarth [1926] P. 93

Theft Act 1968 (1968 c.60)

Turing AM (1937) On computable numbers with an application to the Entscheidungsproblem. Proc Lond Math Soc 42(2):230

Turing AM (1950) Computing Machinery and Intelligence. Mind 59:433–460

Turing AM (1952) Letter to Fred Clayton, quoted in Hodges A (2012) Alan Turing: The Enigma. Princeton University Press, Princeton

Turing D (2015) Prof: Alan Turing decoded. The History Press, Stroud

Unwin C (2008) An object model for use in oral and written advocacy. Artif Intell Law 16(4):384

Van Kralingen RW, Visser PR, Bench-Capon TJ, Van Den Herik HJ (1999) A principled approach to developing legal knowledge systems. Int J Hum Comput Stud 51(6):1127–1154

Vincent J (2014) LGBT people and the UK cultural sector: the response of libraries, museums, archives and heritage since 1950. Routledge, Abingdon

Vlek C, Prakken H, Renooij S, Verheij B (2016) A method for explaining Bayesian networks for legal evidence with scenarios. Artif Intell Law 24(3):285–324

Walton D (2010) Similarity, precedent and argument from analogy. Artif Intell Law 18(3):217

Weeks J (1990) Coming out: homosexual politics in Britain from the 19th century to the present. Quartet Books, London, p 12

Westwood G (1960) A minority. Longmans Green, London

World Health Organisation (1968) International Statistical Classification of Diseases and Related Health Problems International Classification of Diseases 6. World Health Organisation, Geneva

Zach R (2016) Hilbert’s Program. In: Zalta E (ed) The Stanford encyclopedia of philosophy. Metaphysics Research Lab, Stanford University, Stanford

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Huws, C.F., Finnis, J.C. On computable numbers with an application to the AlanTuringproblem. Artif Intell Law 25, 181–203 (2017). https://doi.org/10.1007/s10506-017-9200-2