Abstract

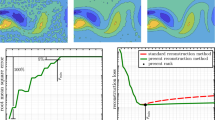

Surrogate models are commonly used for global sensitivity analysis (GSA) because they avoid the large ensemble of deterministic simulations that the Monte Carlo method needs to estimate GSA indices reliably. However, most surrogate models, such as polynomial chaos (PC) expansions, suffer from the curse of dimensionality when the input space is high-dimensional. Sparse surrogate models have therefore been proposed to alleviate this problem. In this paper, three sparse reconstruction techniques are used to construct sparse PC expansions from which variance-based sensitivity indices (Sobol indices) are readily computed: orthogonal matching pursuit (OMP), spectral projected gradient for L1 minimization (SPGL1), and Bayesian compressive sensing with Laplace priors. Sobol indices are computed for several benchmark response models, including the Sobol function, the Morris function, and the Sod shock tube problem, to demonstrate effective implementations of high-dimensional sparse surrogate construction for GSA.
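As a rough illustration of the workflow summarized above, the following Python sketch fits a sparse PC expansion by OMP from fewer samples than basis terms and then reads the Sobol indices off the coefficients. It is not the authors' implementation. The test function, the uniform inputs on [-1, 1], the orthonormal Legendre basis, the sample size, and all function names (`multi_indices`, `design_matrix`, `omp`, `sobol_from_pc`) are assumptions chosen for this example.

```python
import itertools
import numpy as np
from numpy.polynomial import legendre


def multi_indices(dim, degree):
    """All multi-indices alpha with total degree |alpha| <= degree."""
    return np.array([a for a in itertools.product(range(degree + 1), repeat=dim)
                     if sum(a) <= degree])


def design_matrix(x, alphas):
    """Orthonormal Legendre PC basis evaluated at samples x in [-1, 1]^dim."""
    n, dim = x.shape
    max_deg = int(alphas.max())
    # uni[k] = sqrt(2k + 1) * P_k(x), orthonormal w.r.t. the uniform density
    uni = np.stack([np.sqrt(2 * k + 1) * legendre.legval(x, np.eye(max_deg + 1)[k])
                    for k in range(max_deg + 1)])
    psi = np.ones((n, len(alphas)))
    for j in range(dim):
        psi *= uni[:, :, j][alphas[:, j]].T
    return psi


def omp(psi, y, max_terms, tol=1e-10):
    """Greedy OMP: add the best-correlated column, then refit by least squares."""
    norms = np.linalg.norm(psi, axis=0)
    selected = np.zeros(psi.shape[1], dtype=bool)
    support, coef = [], np.zeros(psi.shape[1])
    residual = y.copy()
    for _ in range(max_terms):
        if np.linalg.norm(residual) <= tol * np.linalg.norm(y):
            break
        corr = np.abs(psi.T @ residual) / norms
        corr[selected] = 0.0                      # never pick a column twice
        best = int(np.argmax(corr))
        selected[best] = True
        support.append(best)
        sol, *_ = np.linalg.lstsq(psi[:, support], y, rcond=None)
        coef[support] = sol
        residual = y - psi[:, support] @ sol
    return coef


def sobol_from_pc(coef, alphas):
    """First-order and total Sobol indices from orthonormal PC coefficients."""
    sq = coef ** 2
    variance = sq[alphas.sum(axis=1) > 0].sum()
    dim = alphas.shape[1]
    first, total = np.zeros(dim), np.zeros(dim)
    for i in range(dim):
        active = alphas[:, i] > 0
        only_i = active & (alphas.sum(axis=1) == alphas[:, i])
        first[i] = sq[only_i].sum() / variance
        total[i] = sq[active].sum() / variance
    return first, total


# Hypothetical test problem: 3 uniform inputs, degree-5 basis (56 terms), 45 samples.
rng = np.random.default_rng(0)
dim, degree, n_samples = 3, 5, 45
alphas = multi_indices(dim, degree)
x = rng.uniform(-1.0, 1.0, size=(n_samples, dim))
y = 1.0 + 2.0 * x[:, 0] + x[:, 1] * x[:, 2]       # assumed toy response model
coef = omp(design_matrix(x, alphas), y, max_terms=15)
first, total = sobol_from_pc(coef, alphas)
print("first-order:", first)
print("total:      ", total)
```

For this toy response the analytic values are first-order indices (12/13, 0, 0) and total indices (12/13, 1/13, 1/13), so the printed estimates can be checked against them. The response has only three nonzero PC coefficients, which is the sparsity that lets OMP succeed with fewer samples than basis terms. SPGL1 or Bayesian compressive sensing could replace the `omp` step without changing the Sobol post-processing.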

Additional information

Project supported by the National Natural Science Foundation of China (Nos. 11172049 and 11472060) and the Science Foundation of China Academy of Engineering Physics (Nos. 2015B0201037 and 2013A0101004)

About this article

Cite this article

Hu, J., Zhang, S. Global sensitivity analysis based on high-dimensional sparse surrogate construction. Appl. Math. Mech.-Engl. Ed. 38, 797–814 (2017). https://doi.org/10.1007/s10483-017-2208-8

DOI: https://doi.org/10.1007/s10483-017-2208-8

Key words

- global sensitivity analysis (GSA)

- curse of dimensionality

- sparse surrogate construction

- polynomial chaos (PC)

- compressive sensing