Abstract

This paper first establishes consistency of the exponential series density estimator when nuisance parameters are estimated as a preliminary step. Convergence in relative entropy of the density estimator is preserved, which in turn implies that the quantiles of the population density can be consistently estimated. The density estimator can then be employed to provide a test for the specification of fitted density functions. Commonly, this testing problem has utilized statistics based upon the empirical distribution function (edf), such as those of the Kolmogorov-Smirnov or Cramér-von Mises type. However, the tests of this paper are shown to be asymptotically pivotal, having a limiting standard normal distribution, unlike those based on the edf. For comparison with those tests, the numerical properties of both the density estimator and test are explored in a series of experiments. Some general superiority over commonly used edf based tests is evident, whether standard or bootstrap critical values are used.


1 Introduction

Testing whether a sample of data has been generated from a hypothesized distribution is one of the fundamental problems in statistics and econometrics. Traditionally such tests have been constructed from the empirical distribution function (edf). Even under the simplest of sampling schemes such tests are known to be not asymptotically pivotal, e.g. see Stephens (1976), Conover (1999) and Babu and Rao (2004). Moreover, under more sophisticated sampling schemes such tests can become prohibitively complex, see Bai (2003) and Corradi and Swanson (2006).

Instead, this paper provides tests based on a generalization of the consistent series density estimator of Crain (1974) and Barron and Sheu (1991). Consistency is maintained when nuisance parameters are estimated as a preliminary step. This result, when applied to the infinite dimensional likelihood ratio test of Portnoy (1988), generalizes the tests of Claeskens and Hjort (2004) and Marsh (2007) to tests for specification.

The proposed procedure offers three advantages over those tests based on the edf. First they are asymptotically pivotal, and numerical experiments are designed and reported in support of this. This also implies automatic validity, including second-order as in Beran (1988), of bootstrap critical values. Valid bootstrap critical values for the non-pivotal edf based tests, e.g. as in Kojadinovic and Yan (2012), do not benefit from this. Second, they are generally more powerful than the most commonly used edf based tests. Again numerical evidence is presented to support this. Lastly, because they are based on a consistent density estimator, in the event of rejection the density estimator itself can be used to, for instance, consistently estimate the quantiles of the underlying variable.

The plan for the paper is as follows. The next section presents the density estimator and demonstrates that it converges in relative entropy to the population density. A corollary provides consistent quantile estimation, with accuracy demonstrated in numerical experiments. Section 3 provides the nonparametric test, establishes that it is asymptotically pivotal and consistent against fixed alternatives. A corollary establishes validity of bootstrap critical values. Numerical experiments are presented in support of these results as well as demonstrating some superiority over edf based tests. Section 4 concludes while two appendices present the proofs of two theorems and tables containing the outcome of the experiments, respectively.

2 Consistent nonparametric estimation of possibly misspecified densities

2.1 Theoretical results

Suppose that our sample \(\underline{y}=\left\{ Y_{i}\right\} _{i=1}^{n}\) consists of independent copies of a random variable Y having distribution, \(G\left( y\right) =\Pr [Y\le y]\) and density \(g\left( y\right) =dG\left( y\right) /dy.\) For this sample we fit the parametric likelihood, \( L=\prod _{i=1}^{n}f\left( Y_{i};\beta \right) \) for some chosen density function \(f\left( y;\beta \right) ,\) where \(\beta \) is an unknown \(k\times 1\) parameter. Denote the (quasi) maximum likelihood estimator for \(\beta \) by \( {\hat{\beta }}_{n}.\)

In this context the hypothesis to be tested is:

$$\begin{aligned} H_{0}:G\left( y\right) =F\left( y;\beta _{0}\right) ,\qquad (1) \end{aligned}$$

where \(F\left( y;\beta \right) =\int _{-\infty }^{y}f\left( z;\beta \right) dz \) and for some (unknown) value \(\beta _{0}.\) Tests for \(H_{0}\) will be detailed in the next Section. First, however, we assume the following, whether or not \(H_{0}\) holds:

Assumption 1

-

(i)

The density \(f\left( y;\beta \right) \) is measurable in y for every \(\beta \in {\varvec{B}}\), a compact subset of \(k\)-dimensional Euclidean space, and is continuous in \( \beta \) for every y.

-

(ii)

\(G\left( y\right) \) is an absolutely continuous distribution function, \(E\left[ \log g\left( y\right) \right] \) exists and \( \left| \log f\left( y,\beta \right) \right| <v\left( y\right) \) for all \(\beta \) where \(v\left( .\right) \) is integrable with respect to \(G\left( .\right) .\)

-

(iii)

Let

$$\begin{aligned} I\left( \beta \right) =E\left[ \ln \left[ \frac{g\left( y\right) }{f\left( y,\beta \right) }\right] \right] =\int _{y}\ln \left[ \frac{g\left( y\right) }{f\left( y,\beta \right) }\right] g\left( y\right) dy, \end{aligned}$$

then \(I\left( \beta \right) \) has a unique minimum at some \(\beta _{*}\in {\varvec{B}}.\)

-

(iv)

\(F\left( Y;\beta \right) \) is continuously differentiable with respect to \(\beta \), such that \(H\left( \beta \right) =\partial F\left( Y_{i},\beta \right) /\partial \beta \) is finite, for all \(\beta \) in a closed ball of radius \(\epsilon >0\) around \(\beta _{*}.\)

-

(v)

Both \(\log \left[ g\left( y\right) \right] \) and \(\log \left[ f\left( y;\beta \right) \right] \) have \(r\ge 2\) derivatives in y which are absolutely continuous and square integrable.

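Immediate from White (1982, Theorems 2.1, 2.2 and 3.2) is that under Assumption 1(i–iii) \({\hat{\beta }}_{n}\) exists and

$$\begin{aligned} \sqrt{n}\left( {\hat{\beta }}_{n}-\beta _{*}\right) =O_{p}\left( 1\right) . \end{aligned}$$

That is, \({\hat{\beta }}_{n}\) is a \(\sqrt{n}\) consistent quasi-maximum likelihood estimator for the pseudo-true value \(\beta _{*}.\) Note that under \(H_{0}\) we have \(\beta _{*}=\beta _{0}.\) To proceed, denote \({\hat{X}} _{i}=F\left( Y_{i},{\hat{\beta }}_{n}\right) \) having mean value expansion,

$$\begin{aligned} {\hat{X}}_{i}=F\left( Y_{i},\beta _{*}\right) +\left( {\hat{\beta }}_{n}-\beta _{*}\right) ^{\prime }H\left( \beta ^{+}\right) , \end{aligned}$$

where \(\beta ^{+}\) lies on a line segment joining \({\hat{\beta }}_{n}\) and \( \beta _{*}\). As a consequence we can write

$$\begin{aligned} {\hat{X}}_{i}={\bar{X}}_{i}+e_{i},\qquad (2) \end{aligned}$$

where \({\bar{X}}_{i}=F\left( Y_{i},\beta _{*}\right) \) and, by construction and as a consequence of Assumption 1 (iv),

$$\begin{aligned} e_{i}=O_{p}\left( n^{-1/2}\right) \quad \text {and}\quad e_{i}\in \left( -1,1\right) ,\qquad (3) \end{aligned}$$

that is, \(e_{i}\) is both bounded and degenerate.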

Since the \({\bar{X}}_{i}\) are IID, denote their common distribution and density function by \(U\left( x\right) =\Pr \left[ {\bar{X}}<x\right] \) and \(u\left( x\right) =dU\left( x\right) /dx,\) respectively. Here we will apply the series density estimator of Crain (1974) and Barron and Sheu (1991) to consistently estimate \(u\left( x\right) \) and thus the quantiles of \(U\left( x\right) \), from which the quantiles of \(G\left( y\right) \) can be consistently recovered. Application of the density estimator requires a choice of approximating basis; here we choose the simplest polynomial basis, similar to Marsh (2007).

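We will approximate \(u\left( x\right) \) via the exponential family,

$$\begin{aligned} p_{x}\left( \theta \right) =\exp \left\{ \sum _{k=1}^{m}\theta _{k}x^{k}-\psi _{m}\left( \theta \right) \right\} ,\quad x\in \left( 0,1\right) ,\qquad (4) \end{aligned}$$

where \(\psi _{m}\left( \theta \right) =\log \int _{0}^{1}\exp \left\{ \sum _{k=1}^{m}\theta _{k}x^{k}\right\} dx\) is the cumulant function, defined so that \(\int _{0}^{1}p_{x}(\theta )dx=1.\)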
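As an illustration of the exponential family with a polynomial basis, the following minimal sketch evaluates the cumulant function and the density numerically. It assumes the form \(p_{x}(\theta )=\exp \{\sum _{k}\theta _{k}x^{k}-\psi _{m}(\theta )\}\) on \((0,1)\); the function names and the midpoint-rule grid size are illustrative choices, not taken from the paper.

```python
import math

def log_kernel(x, theta):
    """Polynomial exponent sum_k theta_k * x**k for k = 1..m."""
    return sum(t * x ** (k + 1) for k, t in enumerate(theta))

def cumulant(theta, grid=2000):
    """psi_m(theta): log of the integral of exp(log_kernel) over (0, 1).

    Uses the midpoint rule on `grid` cells (an illustrative tuning choice).
    """
    h = 1.0 / grid
    total = sum(math.exp(log_kernel((j + 0.5) * h, theta)) for j in range(grid))
    return math.log(total * h)

def density(x, theta, grid=2000):
    """Exponential series density p_x(theta) on (0, 1)."""
    return math.exp(log_kernel(x, theta) - cumulant(theta, grid))
```

With \(\theta =0_{(m)}\) the cumulant is zero and the density reduces to the uniform density on \((0,1)\), which is the case relevant under a correctly specified model.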

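From Assumption 1 \(\log \left[ u\left( x\right) \right] \) has, at least, \( r-1 \) absolutely continuous derivatives and its \(r^{th}\) derivative is square integrable. According to Barron and Sheu (1991) there exists a unique \(\theta _{\left( m\right) }=\left( \theta _{1},\ldots ,\theta _{m}\right) ^{\prime }\) satisfying

$$\begin{aligned} \int _{0}^{1}x^{k}p_{x}\left( \theta _{\left( m\right) }\right) dx=\mu _{k}=\int _{0}^{1}x^{k}u\left( x\right) dx,\quad k=1,\ldots ,m,\qquad (5) \end{aligned}$$

and, as \(m\rightarrow \infty ,\) \(p_{x}\left( \theta _{\left( m\right) }\right) \) converges, in relative entropy, to \(u\left( x\right) \) at rate \( m^{-2r},\) meaning that

$$\begin{aligned} \int _{0}^{1}u\left( x\right) \log \left[ \frac{u\left( x\right) }{p_{x}\left( \theta _{\left( m\right) }\right) }\right] dx=O\left( m^{-2r}\right) , \end{aligned}$$

as \(m\rightarrow \infty .\) Moreover, if a sample \(\left\{ {\bar{X}} _{i}\right\} _{1}^{n}\) were available then, if \(m^{3}/n\rightarrow 0\) and letting \({\bar{\theta }}_{\left( m\right) }\) be the unique solution to

$$\begin{aligned} \int _{0}^{1}x^{k}p_{x}\left( {\bar{\theta }}_{\left( m\right) }\right) dx=n^{-1}\sum _{i=1}^{n}{\bar{X}}_{i}^{k},\quad k=1,\ldots ,m,\qquad (6) \end{aligned}$$

then \(p_{x}\left( {\bar{\theta }}_{\left( m\right) }\right) \) converges in relative entropy to \(u\left( x\right) ,\)

$$\begin{aligned} \int _{0}^{1}u\left( x\right) \log \left[ \frac{u\left( x\right) }{p_{x}\left( {\bar{\theta }}_{\left( m\right) }\right) }\right] dx=O_{p}\left( m^{-2r}+\frac{m}{n}\right) , \end{aligned}$$

see Theorem 1 of Barron and Sheu (1991).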

Here, however, the sample \(\left\{ {\bar{X}}_{i}\right\} _{1}^{n}\) is not available. Instead we only observe \(\left\{ {\hat{X}}_{i}\right\} _{1}^{n}\) and consequently have \({\hat{\theta }}_{\left( m\right) }\) as the unique solution to

$$\begin{aligned} \int _{0}^{1}x^{k}p_{x}\left( {\hat{\theta }}_{\left( m\right) }\right) dx=n^{-1}\sum _{i=1}^{n}{\hat{X}}_{i}^{k},\quad k=1,\ldots ,m.\qquad (7) \end{aligned}$$

Note that Eqs (5), (6) and (7) define one-to-one mappings between the sample space \(\varOmega _{\left( m\right) }\subset {\mathbb {R}}^{m}\) and the parameter space \(\varTheta _{\left( m\right) }\subset {\mathbb {R}}^{m}\) in the exponential family, see Barndorff-Nielsen (1978). We can therefore define three pairs of m dimensional parameters and statistics, respectively, as \(\left\{ \theta _{\left( m\right) }:\mu _{\left( m\right) }\right\} ,\) \(\left\{ {\bar{\theta }} _{\left( m\right) }:{\bar{X}}_{\left( m\right) }\right\} \) and \(\left\{ \hat{ \theta }_{\left( m\right) }:{\hat{X}}_{\left( m\right) }\right\} ,\) where \(\mu _{\left( m\right) }=\left\{ \mu _{k}\right\} _{k=1}^{m}\), \({\bar{X}}_{\left( m\right) }=\left\{ n^{-1}\sum _{i=1}^{n}{\bar{X}}_{i}^{k}\right\} _{k=1}^{m}\) and \({\hat{X}}_{\left( m\right) }=\left\{ n^{-1}\sum _{i=1}^{n}{\hat{X}} _{i}^{k}\right\} _{k=1}^{m}.\) Generically these mappings can be expressed via
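The mapping from sample moments to the exponential parameter can be made concrete with a short numerical sketch. The code below assumes the moment-matching characterization \(E_{\theta }[x^{k}]=n^{-1}\sum _{i}x_{i}^{k}\), \(k=1,\ldots ,m\), and solves it by damped Newton steps on the concave log-likelihood. The solver, grid size and tolerances are illustrative assumptions and not the numerical method used in the paper.

```python
import math

def model_moments(theta, order, grid=400):
    """Return ([E x^1, ..., E x^order], psi(theta)) under p_x(theta), midpoint rule."""
    h = 1.0 / grid
    xs = [(j + 0.5) * h for j in range(grid)]
    w = [math.exp(sum(t * x ** (k + 1) for k, t in enumerate(theta))) for x in xs]
    z = sum(w)
    moments = [sum(wi * x ** k for wi, x in zip(w, xs)) / z for k in range(1, order + 1)]
    return moments, math.log(z * h)

def solve(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    aug = [row[:] + [b[i]] for i, row in enumerate(a)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(aug[r][c]))
        aug[c], aug[p] = aug[p], aug[c]
        for r in range(c + 1, n):
            f = aug[r][c] / aug[c][c]
            for j in range(c, n + 1):
                aug[r][j] -= f * aug[c][j]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (aug[r][n] - sum(aug[r][j] * x[j] for j in range(r + 1, n))) / aug[r][r]
    return x

def fit_theta(sample, m, iters=100, tol=1e-10):
    """Solve E_theta[x^k] = n^{-1} sum_i x_i^k, k = 1..m, by damped Newton steps."""
    n = len(sample)
    target = [sum(x ** k for x in sample) / n for k in range(1, m + 1)]

    def loglik(th):  # average log-likelihood theta'target - psi(theta), concave in theta
        return sum(t * s for t, s in zip(th, target)) - model_moments(th, 1)[1]

    theta = [0.0] * m
    for _ in range(iters):
        mom, _ = model_moments(theta, 2 * m)
        grad = [target[k] - mom[k] for k in range(m)]
        if max(abs(g) for g in grad) < tol:
            break
        # Hessian of psi: covariance of (x, x^2, ..., x^m) under p_x(theta)
        hess = [[mom[j + k + 1] - mom[j] * mom[k] for k in range(m)] for j in range(m)]
        step = solve(hess, grad)
        base, scale = loglik(theta), 1.0
        while scale > 1e-8 and loglik([t + scale * s for t, s in zip(theta, step)]) < base - 1e-12:
            scale /= 2.0  # halve the step until the likelihood does not decrease
        theta = [t + scale * s for t, s in zip(theta, step)]
    return theta
```

In the setting of the text, `sample` would hold the transformed observations \({\hat{X}}_{i}\). Because the log-likelihood is concave in \(\theta \), the solution of the moment equations is unique whenever it exists, which is what makes the mapping one-to-one.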

The uniqueness of these mappings can be exploited in the following Theorem, proved in Appendix A, to show that the density estimator \(p_{x}\left( {\hat{\theta }}_{\left( m\right) }\right) \) converges in relative entropy at the same rate as \(p_{x}\left( {\bar{\theta }}_{\left( m\right) }\right) .\)

Theorem 1

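Let \({\hat{\theta }}_{(m)}\) denote the estimated exponential parameter determined by (7) then under Assumption 1 and for \(m,n\rightarrow \infty \) with \(m^{3}/n\rightarrow 0,\)

$$\begin{aligned} \int _{0}^{1}u\left( x\right) \log \left[ \frac{u\left( x\right) }{p_{x}\left( {\hat{\theta }}_{\left( m\right) }\right) }\right] dx=O_{p}\left( m^{-2r}+\frac{m}{n}\right) . \end{aligned}$$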

According to Theorem 1, in terms of the density estimator, at least, the effect of observing \(\left\{ {\hat{X}}_{1},\ldots ,{\hat{X}}_{n}\right\} \) rather than \(\left\{ {\bar{X}}_{1},\ldots ,{\bar{X}}_{n}\right\} \) is asymptotically negligible under Assumption 1 and for either choice of basis. Moreover, if the goal were only nonparametric estimation of the density, then the optimal choice of the dimension m is the same as when no parameters are estimated, i.e. \( m_{opt}\propto n^{\frac{1}{1+2r}}\) (with a mini-max rate of \(m_{n}^{*}=O\left( n^{1/5}\right) ,\) since \(r\ge 2\) by assumption). At this optimal choice the rate of convergence of the estimator remains of order \(O_{p}\left( n^{-\frac{2r}{1+2r}}\right) .\) It should not be surprising that the rate of convergence is unaffected when parameters are replaced by \(\sqrt{n}\) consistent estimators. Theorem 1 thus generalises the results of Crain (1974) and Barron and Sheu (1991), as summarized in Lemma 1 of Marsh (2007), by permitting estimation of nuisance parameters as a preliminary step.
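The optimal dimension quoted here can be recovered by a short balancing argument. The sketch below assumes that the relative entropy bound has the two-term form \(O_{p}\left( m^{-2r}+m/n\right) \), as in Barron and Sheu (1991, Theorem 1), with constants normalized to one for illustration.

$$\begin{aligned} \frac{d}{dm}\left( m^{-2r}+\frac{m}{n}\right)&=-2rm^{-2r-1}+\frac{1}{n}=0\quad \Longrightarrow \quad m_{opt}=\left( 2rn\right) ^{\frac{1}{1+2r}}\propto n^{\frac{1}{1+2r}}, \\ m_{opt}^{-2r}&\propto n^{-\frac{2r}{1+2r}},\qquad \frac{m_{opt}}{n}\propto n^{\frac{1}{1+2r}-1}=n^{-\frac{2r}{1+2r}}. \end{aligned}$$

Both terms are then of the same order. For \(r=2\) this gives \(m_{opt}\propto n^{1/5}\) and a rate of \(n^{-4/5}\), and the requirement \(m^{3}/n\rightarrow 0\) is satisfied since \(n^{3/5}/n\rightarrow 0.\)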

Additionally, we may recover the quantiles of Y from those implied by the approximating series density estimator. This is captured in the following Corollary, which follows immediately since convergence in relative entropy implies convergence in law.

Corollary 1

Let \({\hat{T}}_{n,m}\in \left( 0,1\right) \) be a random variable having density function \(p_{t}\left( {\hat{\theta }} _{\left( m\right) }\right) \) where \({\hat{\theta }}_{\left( m\right) } \) is defined by (7), then

$$\begin{aligned} {\hat{T}}_{n,m}\rightarrow _{d}{\bar{X}}, \end{aligned}$$

as \(n,m\rightarrow \infty ,m^{3}/n\rightarrow 0.\) That is, \({\hat{T}}_{n,m}\) converges in law to the random variable \({\bar{X}}. \)

2.2 Numerical application of a quantile estimator

The consequence of Corollary 1 is that the quantiles associated with \( {\hat{T}}_{n,m} \) converge to those of Y, i.e. letting \(q_{A}\left( \pi \right) ,\) for \(0<\pi <1,\) denote the quantile function of the random variable A, we have

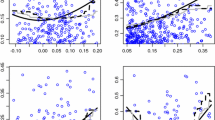

The following set of experiments compares the Mean Square Errors (MSE) of estimators for the quantiles of Y based on those of \({\hat{T}}_{n,m}\) for \( m=3,9\) and for quantiles calculated at the probabilities \(\pi =.05,.25,.50,.75,.95\). We also compare the accuracy of estimated quantiles when unknown parameters are estimated against cases where they are not.

First suppose that \(Y_{i}\sim IID\,Y:=t_{\left( 4\right) }\) but we estimate the Gaussian likelihood implied by \(N\left( \mu ,\sigma ^{2}\right) \). Define

the first obtained from the (misspecified) Gaussian model imposing zero mean and unit variance, and the second from the Gaussian model with estimated mean and variance.

Following the development above, as well as that of Barron and Sheu (1991), let \(\theta _{\left( m\right) }^{*}\) and \({\hat{\theta }}_{\left( m\right) } \) denote the estimated parameters for the exponential series density estimators for the samples \(\left\{ X_{i}^{*}\right\} _{1}^{n}\) and \( \left\{ {\hat{X}}_{i}\right\} _{1}^{n}\), respectively. Let \(T_{n,m}^{*}\) have density \(p_{t}\left( \theta _{\left( m\right) }^{*}\right) \) (note that this is just a straightforward application of the original set-up of Barron and Sheu 1991) and let \({\hat{T}}_{n,m}\) have density \(p_{t}\left( {\hat{\theta }}_{\left( m\right) }\right) ,\) as in Corollary 1. The pairs of estimated quantiles for Y are then constructed as in

The MSE of these quantiles, for each probability \(\pi ,\) are presented in Appendix B, Tables 1a for \(m=3\) and 1b for \(m=9.\)
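A minimal sketch of how such a quantile estimate could be computed is given below. It assumes that a quantile of Y is recovered by inverting the fitted distribution function at the corresponding quantile of the series density, and it uses a Gaussian fitted model as in the first experiment. The function names, the grid size and the use of the fitted Gaussian inverse are illustrative assumptions rather than the paper's own implementation.

```python
import math
from statistics import NormalDist

def series_quantile(theta, prob, grid=4000):
    """Quantile of the series density p_t(theta) on (0, 1), for 0 < prob < 1.

    The density is treated as piecewise constant on `grid` cells and the
    resulting piecewise-linear distribution function is inverted.
    """
    h = 1.0 / grid
    w = [math.exp(sum(t * ((j + 0.5) * h) ** (k + 1) for k, t in enumerate(theta)))
         for j in range(grid)]
    total = sum(w)
    cum = 0.0
    for j, wj in enumerate(w):
        if cum + wj >= prob * total:
            # linear interpolation inside cell j
            return (j + (prob * total - cum) / wj) * h
        cum += wj
    return 1.0

def quantile_of_y(theta, prob, mu, sigma):
    """Map the series quantile back to the scale of Y through a fitted N(mu, sigma^2)."""
    return NormalDist(mu, sigma).inv_cdf(series_quantile(theta, prob))
```

Here `theta` would be the fitted exponential parameter, and `mu` and `sigma` would be either the imposed values (0 and 1) or the estimated mean and standard deviation, giving the two competing quantile estimators compared in the tables.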

Next suppose that \(Y_{i}\sim IID\,Y:=\varGamma \left( 1.2,1\right) \) and define

Analogous to above let \(T_{n,m}^{*}\) and \({\hat{T}}_{n,m}\) have densities \( p_{t}\left( \theta _{\left( m\right) }^{*}\right) \) and \(p_{t}\left( {\hat{\theta }}_{\left( m\right) }\right) \) and so pairs of estimated quantiles for Y are constructed via,

The MSE of these quantiles, for each probability \(\pi ,\) are presented in Table 1c \((m=3)\) and 1d \((m=9).\)

The consistency of the quantiles obtained from, in particular, \({\hat{T}} _{n,m} \) is illustrated clearly in Table 1. More relevant, however, is that estimating the parameters of the fitted model as a preliminary step produces quantile estimators that can be superior, as the sample size becomes large, to those obtained by simply imposing parameter values, as can be clearly seen by comparing the right and left panels in Table 1. Note also that although the larger value of m yields more accurate quantile estimates in these cases, this is at some computational cost and, in other cases, potential numerical instability, although this latter possibility is greatly mitigated since the \(\left\{ {\hat{X}}_{i}\right\} _{i=1}^{n}\) are bounded.

3 Consistent, asymptotically pivotal tests for goodness of fit

3.1 Main results

Here we provide a test of the null hypothesis that the fitted likelihood is correctly specified as in (1). The previous section generalized the Barron and Sheu (1991) series density estimator, and the resulting nonparametric likelihood ratio test then generalizes the test of Marsh (2007).

To proceed note that when \(H_{0}\) is true then in Assumption 1, \(\beta _{*}=\beta _{0}\) and in (2) \({\bar{X}}_{i}=F\left( Y_{i},\beta _{0}\right) \sim IID\,U\left[ 0,1\right] .\) Direct generalization of the principle in Marsh (2007) means that (1) can be tested via,

$$\begin{aligned} H_{0}:\theta _{\left( m\right) }=0_{(m)},\qquad (10) \end{aligned}$$

in the exponential family (4), where \(\theta _{\left( m\right) }\) is the solution to (5) and \(0_{(m)}\) is an \(m\times 1\) vector of zeros.

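The likelihood ratio test of Portnoy (1988) applied via the density estimator of Crain (1974) and Barron and Sheu (1991) obtained from the sample \(\left\{ {\hat{X}}_{1},\ldots ,{\hat{X}}_{n}\right\} \) is

$$\begin{aligned} {\hat{\lambda }}_{m}=2\sum _{i=1}^{n}\log \left[ \frac{p_{{\hat{X}}_{i}}\left( {\hat{\theta }}_{\left( m\right) }\right) }{p_{{\hat{X}}_{i}}\left( 0_{(m)}\right) }\right] =2n\left( {\hat{\theta }}_{\left( m\right) }^{\prime }{\hat{X}}_{\left( m\right) }-\psi _{m}\left( {\hat{\theta }}_{\left( m\right) }\right) \right) , \end{aligned}$$

where the second equality uses \(p_{x}\left( 0_{(m)}\right) =1.\) The null hypothesis is rejected for large values of \({\hat{\lambda }}_{m}.\)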

Under any fixed alternative \(H_{1}:G\left( y\right) \ne F\left( y;\beta _{0}\right) \) the distribution of \({\bar{X}}_{i}=F\left( Y_{i};\beta _{*}\right) \) will not be uniform, i.e. \(\theta _{\left( m\right) }\ne 0_{(m)}.\) For every fixed alternative distribution for Y there is a unique alternative distribution for X on (0, 1) and associated with that distribution will be another consistent density estimator given by, say, \( p_{x}(\theta _{\left( m\right) }^{1}).\) In practice, of course, \(\theta _{\left( m\right) }^{1}\) will be neither specified nor known. The following Theorem, again proved in Appendix A, gives the asymptotic distribution of the likelihood ratio test statistic under the null hypothesis (10) and also demonstrates consistency against any such fixed alternative.

Theorem 2

Suppose that Assumption 1 holds, we construct \(\left\{ {\hat{X}}_{i}\right\} _{i=1}^{n}\) as described in (2), and we let \(m,n\rightarrow \infty \) with \(m^{3}/n\rightarrow 0,\) then:

-

(i)

Under the null hypothesis, \(H_{0}:G\left( y\right) =F\left( y;\beta _{0}\right) ,\)

$$\begin{aligned} \,{\hat{\varLambda }}_{m}=\,\frac{{\hat{\lambda }}_{m}-m}{\sqrt{2m}}\rightarrow _{d}N(0,1). \end{aligned}$$

-

(ii)

Under any fixed alternative \(H_{1}:G\left( y\right) \ne F\left( y;\beta \right) ,\) for any \(\beta ,\) and for any finite \(\kappa ,\)

$$\begin{aligned} \,\Pr \left[ {\hat{\varLambda }}_{m}\ge \kappa \right] \rightarrow 1.\quad \end{aligned}$$
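To make the standardization in Theorem 2 concrete, the following sketch computes a likelihood ratio statistic and its standardized version for a given fitted parameter. It assumes that the statistic is twice the log-likelihood ratio against the uniform density, \(2n(\theta ^{\prime }{\hat{X}}_{(m)}-\psi _{m}(\theta ))\), which is a reading of the text rather than a formula quoted from it. The function names and the default 5% standard normal critical value are illustrative.

```python
import math

def cumulant(theta, grid=2000):
    """psi_m(theta) for the polynomial exponential family on (0, 1), midpoint rule."""
    h = 1.0 / grid
    total = sum(
        math.exp(sum(t * ((j + 0.5) * h) ** (k + 1) for k, t in enumerate(theta)))
        for j in range(grid)
    )
    return math.log(total * h)

def lr_statistic(x_hat, theta_hat):
    """Return (lambda_m, Lambda_m) for transformed data x_hat and fitted theta_hat.

    lambda_m = 2n (theta' xbar_(m) - psi_m(theta)); under theta = 0 the density
    is uniform and psi_m(0) = 0, so no null term appears.
    Lambda_m = (lambda_m - m) / sqrt(2m).
    """
    n, m = len(x_hat), len(theta_hat)
    moments = [sum(x ** (k + 1) for x in x_hat) / n for k in range(m)]
    lam = 2.0 * n * (sum(t * s for t, s in zip(theta_hat, moments)) - cumulant(theta_hat))
    return lam, (lam - m) / math.sqrt(2.0 * m)

def reject(x_hat, theta_hat, critical_value=1.6449):
    """One-sided decision: reject for large standardized values."""
    return lr_statistic(x_hat, theta_hat)[1] > critical_value
```

The parameter `theta_hat` is taken as given here; in practice it would come from solving the moment equations for the transformed sample.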

Theorem 2 generalizes the test of Marsh (2007), establishing asymptotic normality and consistency against fixed alternatives when \(\beta \) has to be estimated. Via Claeskens and Hjort (2004) it is demonstrated that as \( n\rightarrow \infty \) with \(m^{3}/n\rightarrow 0\), the test \({\bar{\varLambda }}_{m}\) (i.e. the, here, unfeasible test based on the notional sample \(\left\{ {\bar{X}}_{i}\right\} _{1}^{n}\)) has power against local alternatives parametrized by \(\theta _{\left( m\right) }-0_{(m)}=c\sqrt{\frac{\sqrt{m}}{n} }\) with \(c^{\prime }c=1\). Heuristically, and implicit in the proof of Theorem 2, the properties of the test follow from \({\hat{\varLambda }}_{m}-{\bar{\varLambda }} _{m}=O_{p}\left( \sqrt{\frac{m}{n}}\right) ,\) and so \({\hat{\varLambda }}_{m}\) has power against that same rate of local alternatives.

3.2 Testing for normality or exponentiality

The likelihood ratio test \({\hat{\varLambda }}_{m}\) is asymptotically pivotal, specifically standard normal. Competitor tests, such as KS, CM and AD (these tests are mathematically detailed in Stephens 1976 or Conover 1999), are not pivotal, although asymptotic critical values are readily available for all cases of testing for Exponentiality and Normality.

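First we will demonstrate that asymptotic critical values for the nonparametric likelihood tests do indeed have close to nominal size for large values of n and m. We are interested in testing the null hypotheses

$$\begin{aligned} H_{0}^{E}:G\left( y\right) =1-\exp \left( -y/\beta \right) \quad \text {and}\quad H_{0}^{N}:G\left( y\right) =\varPhi \left( \frac{y-\mu }{\sigma }\right) , \end{aligned}$$

where \(\varPhi \left( .\right) \) denotes the standard normal distribution function, with nominal significance levels 10, 5 and \(1\%\) and based on sample sizes \(n=25,50,100\) and 200. Letting \({\bar{y}}_{n}\) and \({\hat{\sigma }} _{n}^{2}\) be the estimated mean and variance (i.e. \({\hat{\beta }}_{n}={\bar{y}} _{n}\) for \(H_{0}^{E}\) and \({\hat{\beta }}_{n}=\left( {\bar{y}}_{n},{\hat{\sigma }} _{n}^{2}\right) ^{\prime }\) for \(H_{0}^{N}\)) then the tests are constructed from the mapping to \(\left( 0,1\right) ;\)

$$\begin{aligned} {\hat{X}}_{i}=1-\exp \left( -Y_{i}/{\bar{y}}_{n}\right) ,\qquad (11) \end{aligned}$$

to test \(H_{0}^{E},\) and

$$\begin{aligned} {\hat{X}}_{i}=\varPhi \left( \frac{Y_{i}-{\bar{y}}_{n}}{{\hat{\sigma }}_{n}}\right) ,\qquad (12) \end{aligned}$$

to test \(H_{0}^{N}.\)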
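The two mappings to \((0,1)\) can be sketched as follows, assuming the exponential model is parameterized by its mean and that the variance is estimated by maximum likelihood (dividing by n). The function names are hypothetical.

```python
import math
from statistics import NormalDist, fmean

def pit_exponential(y):
    """Transform data through the fitted exponential distribution function.

    The mean is estimated by the sample mean (the ML estimator).
    """
    ybar = fmean(y)
    return [1.0 - math.exp(-v / ybar) for v in y]

def pit_normal(y):
    """Transform data through the fitted normal distribution function.

    Mean and variance are the ML estimators; the variance divides by n.
    """
    ybar = fmean(y)
    var = sum((v - ybar) ** 2 for v in y) / len(y)
    fitted = NormalDist(ybar, math.sqrt(var))
    return [fitted.cdf(v) for v in y]
```

Under the respective null hypothesis the transformed values behave approximately like a uniform sample, which is what the series density estimator and the likelihood ratio test then examine.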

Table 2 in Appendix B provides rejection frequencies for the tests constructed for values of \(m=3,5,7,9,11,17.\) The left hand panel of numbers corresponds to testing \(H_{0}^{E}\) and the right to \(H_{0}^{N}.\) Critical values at the 1, 5 and 10% significance levels from the standard normal distribution are used throughout.

The purpose of these experiments is only to demonstrate that the finite sample performance of the tests clearly improves as both n and m increase, as predicted by Theorem 2(i). Note the use of three significance levels to better illustrate convergence for large values of both m and n.

Competitor tests are not asymptotically pivotal, and therefore no comparisons under the null are made. Instead, Table 3 compares the \(5\%\) size corrected powers of two variants of the tests, with \(m=3\) and \(m=9,\) with the three direct competitors for a single sample size of \(n=100\). Table 3a and b present rejection frequencies for these tests and the KS, CM and AD tests for testing \(H_{0}^{N}\) under alternatives that the data are instead drawn from,

Table 3c, d and e, consider alternatives where the moments of the data are not correctly specified, i.e.

where \(1\left( .\right) \) denotes the indicator function. These latter three alternatives represent simplistic variants of common types of misspecification in econometric or financial data, i.e. misspecification of a conditional mean, variance or the possibility of a break in the mean (here half way through the sample). Note that these models imply that (2) will not be IID on \(\left( 0,1\right) \), but ergodicity implies the sample moments will still converge. Finally, Table 3f considers instead testing \( H_{0}^{E}\) against the alternative

In each table the left hand panel corresponds to the case where we construct the test imposing the parameter values specified in the null rather than estimating them (i.e. using the, unfeasible, test of Marsh 2007). The right hand panel has the rejection frequencies for tests based on estimated values, i.e. using (11) and (12), respectively.

The outcomes in Table 3 imply the following broad conclusions. The nonparametric likelihood test based on \({\hat{\varLambda }}_{3}\) is the most powerful almost uniformly, across all alternatives and whether parameters are estimated or not. The observed lack of power of the most commonly used test, KS, is particularly evident; it is consistently the poorest performing test. The other edf based tests and \({\hat{\varLambda }}_{9}\) are broadly comparable in terms of their rejection frequencies, although AD is perhaps on average slightly more powerful and CM less powerful.

3.3 Bootstrap critical values

The proposed tests require a choice of dimension, m. The results presented in Tables 2 and 3 suggest an inevitable compromise: larger values of m imply tests having size closer to nominal, while smaller values of m imply tests having greater power. To overcome this compromise we can consider the properties of these tests when bootstrap critical values are employed.

For these tests the bootstrap procedure is as follows: On obtaining the MLE \( {\hat{\beta }}_{n}\) and calculating \({\hat{\varLambda }}_{3},\) as described above;

-

1.

Generate bootstrap samples \(Y_{i}^{b}\sim IID\,F\left( y;{\hat{\beta }} _{n}\right) \) for \(i=1,\ldots ,n.\)

-

2.

Estimate, via ML, \({\hat{\beta }}_{n}^{b}\) and construct \({\hat{X}} _{i}^{b}=F\left( Y_{i}^{b};{\hat{\beta }}_{n}^{b}\right) \) for \(i=1,\ldots ,n.\)

-

3.

Repeat steps 1 and 2 \(B\) times, obtaining bootstrap versions of the test \(\hat{ \varLambda }_{3}^{b}.\)

-

4.

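Order the \({\hat{\varLambda }}_{3}^{b}\) so that the bootstrap critical value at size \(\alpha \) is \(\kappa _{\alpha }^{B}={\hat{\varLambda }}_{3}^{\left( \left\lfloor (1-\alpha )B\right\rfloor \right) },\) the \(\left\lfloor (1-\alpha )B\right\rfloor \)th ordered value, with \(\alpha \) expressed as a proportion.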

-

5.

Denote the indicator function \({\hat{I}}_{B}^{\varLambda }=\left\{ \begin{array}{c} 1\quad \text { if }\quad {\hat{\varLambda }}_{3}>\kappa _{\alpha }^{B} \\ 0\quad \text { if }\quad {\hat{\varLambda }}_{3}\le \kappa _{\alpha }^{B} \end{array} \right\} .\)

We then reject \(H_{0}\) if \({\hat{I}}_{B}^{\varLambda }=1.\) First, however, the required asymptotic justification for the bootstrap is automatic given that \( {\hat{\varLambda }}_{m}\rightarrow _{d}N\left( 0,1\right) \) giving the following corollary to Theorem 2.

Corollary 2

Under Assumption 1 and if \(n,m\rightarrow \infty \) with \(m^{3}/n\rightarrow 0,\) then

Here we will compare the performance of bootstrap critical values for \({\hat{\varLambda }}_{3}\) with those of CM and AD by repeating many of the experiments of Kojadinovic and Yan (2012). In this sub-section all experiments are performed on the basis of \(B=200\) bootstrap replications. All nuisance parameters were estimated via maximum likelihood using Mathematica 8’s own numerical optimization algorithm.
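The parametric bootstrap steps listed in this sub-section can be sketched generically as below. The helper names are hypothetical, the critical value is taken as the \(\lfloor (1-\alpha )B\rfloor \)th ordered bootstrap statistic with \(\alpha \) as a proportion, and `placeholder_statistic` is only a stand-in so that the example runs: it is not the likelihood ratio statistic of the paper, which would be supplied in its place.

```python
import math
import random
from statistics import fmean

def bootstrap_critical_value(y, fit, draw, transform, statistic, B=200, alpha=0.05, seed=0):
    """Parametric bootstrap critical value for a statistic of the transformed data."""
    rng = random.Random(seed)
    beta_hat = fit(y)
    boot = []
    for _ in range(B):
        y_b = draw(beta_hat, len(y), rng)   # step 1: sample from F(.; beta_hat)
        x_b = transform(y_b, fit(y_b))      # step 2: re-estimate, map to (0, 1)
        boot.append(statistic(x_b))         # step 3: bootstrap statistic
    boot.sort()                             # step 4: order the replications
    # small offset guards against floating point error in (1 - alpha) * B
    k = min(B, max(1, math.floor((1.0 - alpha) * B + 1e-9)))
    return boot[k - 1]

# Illustrative ingredients for an exponential null (all names hypothetical).
def fit_exponential(y):
    return fmean(y)

def draw_exponential(beta, n, rng):
    return [rng.expovariate(1.0 / beta) for _ in range(n)]

def pit_exponential(y, beta):
    return [1.0 - math.exp(-v / beta) for v in y]

def placeholder_statistic(x):
    """Stand-in only: standardized mean of the transformed sample, NOT the LR statistic."""
    return abs(fmean(x) - 0.5) * math.sqrt(12.0 * len(x))
```

Step 5 then amounts to comparing the statistic computed from the original sample with the returned value and rejecting when it is larger. Because the same estimation and transformation are applied to every bootstrap sample, the effect of estimating the nuisance parameters is reproduced in each replication.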

The first set of experiments mimics those presented in Kojadinovic and Yan (2012, Table 1). Specifically we define the following Normal, Logistic, Gamma and Weibull distributions;

The specific parameter values for \(L^{*},\varGamma ^{*}\) and \(W^{*}\) are chosen to minimize relative entropy (\(I\left( \beta \right) \) in Assumption 1(iii)) for each family to the distribution of \(N^{*}.\) Sample sizes of \( n=25,50,100,200\) are used in the experiments described below.

Table 4a contains the finite sample size of each test. It is clear that, under \(H_{0},\) the parametric bootstrap provides highly accurate critical values for all of the tests. On size alone there is nothing to choose between them. It is, however, worth reporting the computational time of each bootstrap critical value. For the \({\hat{\varLambda }}_{3}\) test critical values were obtained after 2.0 and 3.2 seconds for sample sizes \(n=100\) and 200, respectively. The times for the other tests were similar to each other, taking around 0.9 and 2.9 seconds, respectively.

Table 4b and c contain the finite sample rejection frequencies under various alternative hypotheses, covering all pairwise permutations of the distributions in (13). As with the finite sample sizes it is not possible to pick a clear winner; moreover, where they overlap the results are in line with those of Kojadinovic and Yan (2012). There is, of course, no uniformly most powerful test of goodness-of-fit so it is not surprising that the power of \({\hat{\varLambda }}_{3}\) is not always the largest. However, its performance over this range of nulls and alternatives is far less volatile and in no circumstance is the test dominated by either of the other two.

4 Conclusions

This paper has generalized the series density estimator of Barron and Sheu (1991) to cover the case where parameters are estimated in the context of misspecified models. The nonparametric likelihood ratio tests of Marsh (2007) can be thus extended to cover the case of estimated parameters. The general aim has been to provide a testing procedure which overcomes the three main criticisms of edf based tests, i.e. that they are not pivotal, have low power, and offer no direction in case of rejection.

Instead the tests of this paper are shown to be asymptotically standard normal and they have power advantages over edf tests, whether critical values are size corrected or obtained by a consistent bootstrap. This suggests the proposed tests will be much simpler to generalize to the settings of Bai (2003) or Corradi and Swanson (2006). Finally, in the event of rejection, the series density estimator upon which the tests are built may be employed to consistently estimate the quantiles of the density from which the sample is taken.

Change history

11 September 2018

The article ‘Nonparametric series density estimation and testing’, written by Patrick Marsh, was originally published electronically on the publisher’s internet portal (currently SpringerLink) on 1 August 2018 with an incorrect copyright line.

References

Babu G, Rao CR (2004) Goodness of fit tests when parameters are estimated. Sankhya 66:63–74

Bai J (2003) Conditional distributions of dynamic models. Rev Econ Stat 85:531–549

Barron AR, Sheu C-H (1991) Approximation of density functions by sequences of exponential families. Ann Stat 19:1347–1369

Barndorff-Nielsen O (1978) Information and exponential families in statistical theory. Wiley, New York

Beran R (1988) Prepivoting test statistics: a bootstrap view of asymptotic refinements. J Am Stat Assoc 83:687–697

Claeskens G, Hjort NL (2004) Goodness of fit via nonparametric likelihood ratios. Scand J Stat 31:487–513

Conover WJ (1999) Practical nonparametric statistics. Wiley, New York

Corradi V, Swanson NR (2006) Predictive Density Evaluation. In: G. Elliott, C. Granger and A. Timmermann (eds.) Handbook of Economic Forecasting, Vol. 1, Elsevier, pp 197–284

Crain BR (1974) Estimation of distributions using orthogonal expansions. Ann Stat 2:454–463

Kojadinovic I, Yan J (2012) Goodness-of-fit testing based on a weighted bootstrap: a fast large-sample alternative to the parametric bootstrap. Can J Stat 40:480–500

Marsh P (2007) Goodness of fit tests via exponential series density estimation. Comput Stat Data Anal 51:2428–2441

Portnoy S (1988) Asymptotic behavior of likelihood methods for exponential families when the number of parameters tends to infinity. Ann Stat 16:356–366

Stephens MA (1976) Asymptotic results for goodness of fit statistics with unknown parameters. Ann Stat 4:357–369

White H (1982) Maximum likelihood estimation of misspecified models. Econometrica 50:1–25

Acknowledgements

This article was funded by University of Nottingham.

Additional information

The original version of this article was revised. The article ‘Nonparametric series density estimation and testing’, written by Patrick Marsh, was originally published electronically on the publisher’s internet portal (currently SpringerLink) on 1 August 2018 with an incorrect copyright line. The copyright of the article changed on 28 August 2018 to © The Author(s) 2018 and the article is forthwith distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0), which permits use, duplication, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

Appendices

A Appendix A: Proofs

In order to avoid any ambiguity throughout this appendix the order of magnitude symbol O(.) is defined by,

and analogously for the probabilistic versions \(O_{p}(.)\) and \(o_{p}(.).\) If the quantity under scrutiny does not depend upon the dimension m then the condition \(m^{3}/n\rightarrow 0\) becomes redundant.

Proof of Theorem 1:

First recall the definitions,

The Euclidean distance between the two polynomial sufficient statistics satisfies,

Taking the \(j^{th}\) element and noting \({\hat{X}}_{i}={\bar{X}}_{i}+e_{i},\) then

Since \({\bar{X}}_{i}\in (0,1)\) while, as in (3), \(e_{i}=O_{p}(n^{-1/2})\) and \(e_{i}\in (-1,1)\) then,

where \(c_{1}<1.\) For finite j (14) is \(O_{p}\left( n^{-s/2}\right) \) while as \(j\rightarrow \infty \) (14) is \( o\left( 1\right) O_{p}\left( n^{-s/2}\right) \) and so,

implying that

uniformly in j, and hence,

Consequently, and also from the definition of Euclidean distance, we have,

Consider now \(\mu _{(m)},\) then from the triangle inequality,

which follows from (15) and noting the same order of magnitude applies for the first distance, as in Barron and Sheu (1991, eq. 6.5), which represents the distance in the case that the sequence \(\left( {\bar{X}}_{i}^{j}\right) _{1}^{n}\) were observed directly.

We thus have \(\left| {\bar{X}}_{(m)}-\mu _{(m)}\right| =O_{p}\left( \sqrt{\frac{m}{n}}\right) \) and \(\left| {\hat{X}}_{(m)}-\mu _{(m)}\right| =O_{p}\left( \sqrt{\frac{m}{n}}\right) ,\) so that utilizing the respective MLEs and extending the decomposition of the Kullback-Leibler divergence of Barron and Sheu (1991, eq. 6.9) we obtain,

Given that Assumption 1 assures the required conditions of Barron and Sheu (1991, Theorem 1) are met then the first two terms in (17) are, respectively, \(O(m^{-2r})\) and \(O_{p}(m/n),\) noting that under Assumption 1, \(\log [u(x)]\in W_{2}^{r}\). Application of Barron and Sheu (1991, Lemma 5), which holds for any two values in \(\varOmega _{m}\subset {\mathbb {R}}^{m},\) here uniquely defined by Eqs (6) and (7), implies that

and hence

as required. \(\square \)

Proof of Theorem 2:

Consider the problem of testing \(H_{0}:\theta _{(m)}=0_{\left( m\right) }\) against the alternative \(H_{1}:\theta _{(m)}\ne 0_{\left( m\right) }\) when \( n,m\rightarrow \infty ,\) but \(m^{3}/n\rightarrow 0.\) For notational convenience and comparisons with Portnoy (1988) and Barron and Sheu (1991), expressions involving \(\theta _{\left( m\right) }\) will not be immediately resolved.

Part (i): To proceed we have defined,

where \({\hat{\theta }}_{(m)}\) solves (7), or equivalently,

Similarly the value \(0_{\left( m\right) }\) defines,

The exponential log-likelihood is strictly convex so that the mapping, \(\psi _{m}^{\prime }\left( \theta _{\left( m\right) }\right) =\mu _{(m)}\) is one-to-one between the parameter space \(\varTheta _{m}\subset {\mathbb {R}}^{m}\) and sample space \(\varOmega _{m}\subset {\mathbb {R}}^{m}\), similar to (8). Application of Barron and Sheu (1991, eq. 5.6) and also (16) thus gives,

As a consequence of both (18) and (16) we have that,

and note that the expansions provided in the proofs of Theorems 3.1 and 3.2 of Portnoy (1988) apply for any two pairs of values, here \(\left( {\bar{\theta }}_{\left( m\right) },0_{\left( m\right) }\right) \) and \(\left( {\bar{X}}_{(m)},\mu _{(m)}\right) .\)

To continue, noting expectations under the null hypothesis can be written here as \(E_{U}\left[ .\right] \) since \({\bar{X}}\sim U:=U\left[ 0,1\right] \), the uniform distribution with density \(p_{0_{\left( m\right) }}\left( x\right) =1,\) we then have expansions analogous to Portnoy (1988, eq. 3.5 and 3.6),

Subtracting (20) from (19) and applying arguments identical to those given below Portnoy (1988, Theorem 3.1, eq. 3.7) yields,

From the definition of the likelihood ratio test we therefore have,

as in Portnoy (1988, eq. 3.12). Let \({\bar{e}}={\hat{X}}_{(m)}-{\bar{X}}_{(m)}\) then from the proof of Theorem 1, we have

Now define the \(m\times 1\) random variable \(V_{m}=\psi _{m}^{\prime \prime }\left( 0_{\left( m\right) }\right) ^{-1/2}\left( {\bar{x}}-\psi _{m}^{\prime }\left( 0_{\left( m\right) }\right) \right) ,\) having density \(p_{V}\left( \theta _{(m)}^{V}\right) ,\) so that \(E\left[ V_{m}\right] =0_{\left( m\right) }\) and \(Var[V_{m}]=I_{m}.\) Since the likelihood ratio statistic is parameterization invariant, the likelihood ratio test based on observations on \(V_{m}\) would be identical to that based on \({\bar{X}}_{(m)}.\) Rather than defining a new triple of values, analogous to those in (5), (6) and (7), in both the parameter space \(\varTheta _{m}\) (note that in particular the hypothesized value would no longer satisfy \( \theta _{\left( m\right) }=0_{\left( m\right) }\)) and sample space \(\varOmega _{m},\) we will instead, and without any loss of generality, assume a parameterization in which both \(E\left[ {\bar{X}}_{(m)}\right] =0\) and \(Var\left[ {\bar{X}}_{(m)}\right] =I_{m}.\) Note, however, that it is the unobserved \({\bar{X}}\) which is assumed to be standardized, not the observed \({\hat{X}}_{(m)}.\)
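To make the standardization concrete, the sketch below assumes a power basis \(\phi _{j}(x)=x^{j}\) (an assumption here, suggested by the moments \(\frac{1}{n}\sum _{i}{\hat{X}}_{i}^{k}\) used later in the proof). Under \(U[0,1]\) the mean of \((X,\ldots ,X^{m})\) then has entries \(1/(j+1)\) and the covariance has entries \(1/(j+k+1)-1/((j+1)(k+1))\); these play the roles of \(\psi _{m}^{\prime }(0_{(m)})\) and \(\psi _{m}^{\prime \prime }(0_{(m)})\). The function names are illustrative only.

```python
import numpy as np


def uniform_moment_mean(m):
    """E_U[X**j] = 1/(j+1) for j = 1..m."""
    j = np.arange(1, m + 1)
    return 1.0 / (j + 1.0)


def uniform_moment_cov(m):
    """Cov_U[X**j, X**k] = 1/(j+k+1) - 1/((j+1)(k+1))."""
    j = np.arange(1, m + 1)
    second = 1.0 / (j[:, None] + j[None, :] + 1.0)  # E_U[X**(j+k)]
    mean = uniform_moment_mean(m)
    return second - np.outer(mean, mean)


def inv_sqrt_psd(cov):
    """Symmetric inverse square root via an eigen-decomposition."""
    w, v = np.linalg.eigh(cov)
    return (v / np.sqrt(w)) @ v.T


def standardize(moments, m):
    """Map a vector of power moments to the standardized scale."""
    return inv_sqrt_psd(uniform_moment_cov(m)) @ (moments - uniform_moment_mean(m))


m = 3
cov = uniform_moment_cov(m)
S = inv_sqrt_psd(cov)
print(np.round(S @ cov @ S, 8))  # numerically the identity matrix
```

The covariance matrix of a power basis becomes ill-conditioned quickly as \(m\) grows, so in practice an orthogonal polynomial basis spanning the same space may be numerically preferable; by the invariance argument above this would not change the likelihood ratio.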

In this parameterization the asymptotic distribution of first \(|{\bar{X}} _{(m)}|^{2}\) and hence \(|{\hat{X}}_{(m)}|^{2}\) (via (22)) and then via (21) for \({\hat{\varLambda }}_{m}=\frac{{\hat{\lambda }}_{m}-m}{\sqrt{2m} }\) follows exactly as in Portnoy (1988, Theorem 4.1). \(\square \)
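The construction of \({\hat{\varLambda }}_{m}\) can be sketched as follows. This is an illustration under assumptions rather than the paper's implementation: the basis is taken to be \(\phi _{j}(x)=x^{j}\), the cumulant function is \(\psi _{m}(\theta )=\log \int _{0}^{1}\exp \left( \sum _{j}\theta _{j}x^{j}\right) dx\) evaluated by Gauss-Legendre quadrature, the MLE is found by a damped Newton iteration on the moment equations, and \({\hat{\lambda }}_{m}\) is taken to be twice the maximized log-likelihood ratio against \(\theta _{(m)}=0_{(m)}\). All function names are hypothetical.

```python
import numpy as np

# Gauss-Legendre nodes and weights mapped from [-1, 1] to [0, 1].
_nodes, _weights = np.polynomial.legendre.leggauss(64)
NODES = 0.5 * (_nodes + 1.0)
WEIGHTS = 0.5 * _weights


def basis(x, m):
    """Power basis (x, x**2, ..., x**m), one row per observation."""
    x = np.asarray(x, dtype=float)
    return x[:, None] ** np.arange(1, m + 1)[None, :]


def cumulant(theta):
    """Return psi_m(theta) with the mean and covariance of the basis under p_theta."""
    phi = basis(NODES, theta.size)
    expo = phi @ theta
    shift = expo.max()                      # guards against overflow
    w = WEIGHTS * np.exp(expo - shift)
    total = w.sum()
    p = w / total                           # quadrature probabilities
    mean = p @ phi
    centred = phi - mean
    cov = (centred * p[:, None]).T @ centred
    return shift + np.log(total), mean, cov


def fit_theta(moments, tol=1e-10, max_iter=100):
    """Solve psi_m'(theta) = moments by Newton steps with step halving."""
    theta = np.zeros_like(moments)
    psi, mean, cov = cumulant(theta)
    obj = theta @ moments - psi             # average log-likelihood
    for _ in range(max_iter):
        grad = moments - mean
        if np.linalg.norm(grad) < tol:
            break
        step = np.linalg.solve(cov, grad)
        t = 1.0
        while True:
            cand = theta + t * step
            psi_c, mean_c, cov_c = cumulant(cand)
            obj_c = cand @ moments - psi_c
            if obj_c >= obj or t < 1e-8:
                break
            t *= 0.5
        theta, psi, mean, cov, obj = cand, psi_c, mean_c, cov_c, obj_c
    return theta, psi


def lr_statistic(x_hat, m):
    """Return (lambda_m, Lambda_m) for testing theta = 0 with m basis terms."""
    x_hat = np.asarray(x_hat, dtype=float)
    moments = basis(x_hat, m).mean(axis=0)
    theta, psi = fit_theta(moments)
    lam = 2.0 * x_hat.size * (theta @ moments - psi)  # psi_m(0) = 0
    return lam, (lam - m) / np.sqrt(2.0 * m)


rng = np.random.default_rng(1)
lam0, Lam0 = lr_statistic(rng.uniform(size=1000), m=3)         # null holds
lam1, Lam1 = lr_statistic(rng.beta(2.0, 2.0, size=1000), m=3)  # null fails
print(Lam0, Lam1)
```

In this usage example the inputs are drawn directly on \((0,1)\); in the setting of the paper they would be the transforms \({\hat{X}}_{i}\) computed from estimated nuisance parameters. The centring by \(m\) and scaling by \(\sqrt{2m}\) match the mean and variance of a \(\chi _{m}^{2}\) variable.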

Part (ii): Under any fixed alternative the density of \({\bar{X}} _{i}=F\left( Y_{i};\beta _{*}\right) \) is

and so let \(\theta _{(m)}^{1}\) be the unique solution to,

The uniqueness of solutions to (23) implies \(\theta _{(m)}^{1}\ne 0_{(m)}.\)

To take the least favorable case, define

and suppose that \(\theta _{k}^{1}\ne 0\) for some finite k but that \( \theta _{j}^{1}=0\) for all \(j\ne k.\) The series density estimator is consistent for \(\theta _{\left( m\right) }^{1},\) under \(H_{1},\) in that \( \left| {\hat{\theta }}_{(m)}-\theta _{\left( m\right) }^{1}\right| =O_{p}\left( \sqrt{\frac{m}{n}}\right) ,\) analogous to (18) above, and so we can write,

We can therefore write the likelihood ratio as

where \({\hat{\lambda }}_{m}^{1}\) is the likelihood ratio for testing \( H_{1}:\theta _{(m)}=\theta _{\left( m\right) }^{1}.\)

Thus, under \(H_{1},\) we can write

Immediate from Part (i) of this theorem is that as \(m,n\rightarrow \infty ,\) with \(m^{3}/n\rightarrow 0,\)

i.e. \(\left( {\hat{\lambda }}_{m}^{1}-m\right) /\sqrt{2m}\) is \(O_{p}\left( 1\right) .\) However, since \(\psi _{m}\left( .\right) \) is a uniquely defined cumulant function then

and since \(0<{\hat{X}}_{i}<1\) then \(\frac{1}{n}\sum _{i=1}^{n}{\hat{X}} _{i}^{k}=O_{p}\left( 1\right) \) and positive. Consequently,

since \(m^{3}/n\rightarrow 0\) and hence \(\Pr \left[ {\hat{\varLambda }}_{m}>\kappa \right] \rightarrow 1,\) as required. \(\square \)

B Appendix B: Tables

Rights and permissions

OpenAccess This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Marsh, P. Nonparametric series density estimation and testing. Stat Methods Appl 28, 77–99 (2019). https://doi.org/10.1007/s10260-018-00432-y
