Abstract

Adaptive designs were originally developed for independent and uniformly distributed \(p\)-values. In general, however, the type I error rate of a given adaptive design depends on the true dependence structure between the stage-wise \(p\)-values. Since there are settings where the \(p\)-values of the stages may be dependent, possibly with an unknown dependence structure, it is of interest to consider the most adverse dependence structure, i.e. the one maximizing the type I error rate of a given adaptive design (worst case). In this paper, we explicitly study the type I error rate in the worst case for adaptive designs without futility stop based on Fisher’s combination test. We study the potential inflation of the type I error rate if the dependence structure between the \(p\)-values of the stages is not taken into account adequately. It turns out that considerable inflation of the type I error rate can occur. This emphasizes that examining the true dependence structure between the stage-wise \(p\)-values and choosing an adequate conditional error function are crucial when adaptive designs are used.


References

Bauer P (1989) Multistage testing with adaptive design. Biom Inf Med Biol 20(4):130–136

Bauer P, Köhne K (1994) Evaluation of experiments with adaptive interim analyses. Biometrics 50:1029–1041

Bauer P, Posch M (2004) Letter to the editor: modification of the sample size and the schedule of interim analyses in survival trials based on data inspections. Stat Med 23:1333–1335

Brannath W, Posch M, Bauer P (2002) Recursive combination tests. J Am Stat Assoc 97:236–244

Frank M, Nelsen R, Schweizer B (1987) Best-possible bounds for the distribution of a sum—a problem of Kolmogorov. Probab Theory Relat Fields 74:199–211

Götte H, Hommel G, Faldum A (2009) Adaptive designs with correlated test statistics. Stat Med 28:1429–1444

Hommel G (2001) Adaptive modifications of hypotheses after an interim analysis. Biom J 43(5):581–589

Hommel G, Lindig V, Faldum A (2005) Two-stage adaptive designs with correlated test statistics. J Biopharm Stat 15:613–623

Jennison C, Turnbull B (2000) Group sequential methods with applications to clinical trials. Chapman and Hall, London

Lehmacher W, Wassmer G (1999) Adaptive sample size calculations in group sequential trials. Biometrics 55:1286–1290

Nelsen R (2006) An introduction to copulas, 2nd edn. Springer Science, New York

Schmidt R, Faldum A, Witt O, Gerß J (2014) Adaptive designs with arbitrary dependence structure. Biom J 56:1–21

Sklar A (1959) Fonctions de répartition à \(n\) dimensions et leurs marges. Publ Inst Stat Univ Paris 8:229–231

Wassmer G (2001) Statistische Testverfahren für gruppensequentielle und adaptive Pläne in klinischen Studien, 2nd edn. Verlag Alexander Mönch, München

Wassmer G (2006) Planning and analysing adaptive group sequential survival trials. Biom J 48:714–729

Acknowledgments

We would like to thank two anonymous reviewers, and the editors for constructive suggestions and comments that resulted in an improved version of this manuscript.


Appendix


1.1 Equivalence of Eqs. (8) and (9) for admissible \(c\) and \(\gamma \)

As a consequence of Lemma 1 below, the following statements are equivalent:

Likewise, as a consequence of Lemma 2 below, the following statements are equivalent:

A pair of parameters \(c\) and \(\gamma \) is admissible if \(0 < \alpha _{w}(c,\gamma ) < 1\) with \(\alpha _{w}(c,\gamma )\) from (8). For admissible \(c\) and \(\gamma \), we have

Thus the above shows that Eqs. (8) and (9) are equivalent for admissible \(c\) and \(\gamma \).
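The equivalence can also be checked numerically. The sketch below assumes, since this excerpt does not define \(F\), that \(F(x)=1-e^{-x}\) is the standard exponential distribution function, so that \(Z_i=F^{-1}(1-P_i)=-\log (P_i)\) as in Fisher’s combination test. The helper names `alpha_w_from_design` and `c_from_alpha_w` are hypothetical: the first evaluates the two branches of Eq. (8) in the form used in Lemmas 1 and 2 below, the second inverts them (our reading of Eq. (9), which is not reproduced in this excerpt), choosing the branch via the condition \(\alpha _{w} \ge 2\alpha _1\) from those lemmas.

```python
import math

SQRT2 = math.sqrt(2.0)

# Assumption: F is the standard exponential distribution function.
def F(x: float) -> float:
    return 1.0 - math.exp(-x)

def F_inv(u: float) -> float:
    return -math.log(1.0 - u)

def alpha_w_from_design(c: float, alpha1: float) -> float:
    """Worst-case type I error for design parameters (c, gamma), cf. Eq. (8)."""
    gamma = F_inv(1.0 - alpha1)
    if c <= SQRT2 * gamma:
        return 2.0 - 2.0 * F(c / SQRT2)
    return 2.0 - F(gamma) - F(SQRT2 * c - gamma)

def c_from_alpha_w(alpha_w: float, alpha1: float) -> float:
    """Inverse of alpha_w_from_design (assumed form of Eq. (9)); needs alpha1 < alpha_w."""
    gamma = F_inv(1.0 - alpha1)
    if alpha_w >= 2.0 * alpha1:                      # branch c <= sqrt(2) * gamma (Lemma 1)
        return SQRT2 * F_inv(1.0 - alpha_w / 2.0)
    return (gamma + F_inv(1.0 + alpha1 - alpha_w)) / SQRT2   # branch of Lemma 2

for alpha1, alpha_w in [(0.01, 0.05), (0.04, 0.05)]:
    c = c_from_alpha_w(alpha_w, alpha1)
    # round trip and the implied critical value exp(-sqrt(2) c) for the product p1 * p2
    print(alpha1, alpha_w, c, alpha_w_from_design(c, alpha1), math.exp(-SQRT2 * c))
```

Under this assumption, \(Z_1+Z_2 \ge \sqrt{2}c\) is equivalent to \(p_1p_2 \le e^{-\sqrt{2}c}\), so the last printed column should be approximately 0.000625 and 0.0004, i.e. \(\alpha _{w}^2/4\) and \(\alpha _1(\alpha _{w}-\alpha _1)\) as in Eq. (13). This is a consistency check, not a proof.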

Lemma 1

For \(0 \le \alpha _1 \le \alpha _{w} \le 1\), let \(\gamma := F^{-1}(1-\alpha _1)\), and let \(c \in \mathbb {R}\) such that \(\alpha _{w}=2-2 F \left( \frac{c}{\sqrt{2}}\right) \). Then: \(c \le \sqrt{2} \gamma \) \(\Leftrightarrow \) \(\alpha _{w} \ge 2 \alpha _1\).

Proof

\(c \le \sqrt{2} \gamma \) \(\Leftrightarrow \) \(F\left( \frac{c}{\sqrt{2}}\right) \le F(\gamma )\) \(\Leftrightarrow \) \(1-\frac{\alpha _{w}}{2} \le 1 - \alpha _1\) \(\Leftrightarrow \) \(\alpha _{w} \ge 2 \alpha _1\).\(\square \)

Lemma 2

For \(0 \le \alpha _1 \le \alpha _{w} \le 1\), let \(\gamma := F^{-1}(1-\alpha _1)\), and let \(c \in \mathbb {R}\) such that \(\alpha _{w}= 2- F(\gamma ) - F(\sqrt{2} c -\gamma )\). Then: \(c \le \sqrt{2} \gamma \) \(\Leftrightarrow \) \(\alpha _{w} \ge 2 \alpha _1\).

Proof

First notice that by assumption \(\sqrt{2} c= \gamma + F^{-1}(1 + \alpha _1 - \alpha _{w})\). Thus, \(c \le \sqrt{2} \gamma \) \(\Leftrightarrow \) \(F^{-1}(1 + \alpha _1 - \alpha _{w}) \le \gamma \) \(\Leftrightarrow \) \(1 + \alpha _1 - \alpha _{w} \le 1- \alpha _1\) \(\Leftrightarrow \) \(2 \alpha _1 \le \alpha _{w}\).\(\square \)
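Lemmas 1 and 2 only use that \(F\) is continuous and strictly increasing. The following sketch checks both equivalences on a grid of pairs \(0<\alpha _1<\alpha _{w}<1\), again assuming the exponential choice \(F(x)=1-e^{-x}\) purely for concreteness; `lemma1_holds` and `lemma2_holds` are hypothetical names. The grid deliberately avoids the boundary \(\alpha _{w}=2\alpha _1\), where floating-point rounding could decide the comparison.

```python
import math

# Assumption: F(x) = 1 - exp(-x); any continuous, strictly increasing F would do here.
def F_inv(u: float) -> float:
    return -math.log(1.0 - u)

def lemma1_holds(alpha1: float, alpha_w: float) -> bool:
    gamma = F_inv(1.0 - alpha1)
    c = math.sqrt(2.0) * F_inv(1.0 - alpha_w / 2.0)    # solves alpha_w = 2 - 2F(c/sqrt(2))
    return (c <= math.sqrt(2.0) * gamma) == (alpha_w >= 2.0 * alpha1)

def lemma2_holds(alpha1: float, alpha_w: float) -> bool:
    gamma = F_inv(1.0 - alpha1)
    # sqrt(2) c = gamma + F^{-1}(1 + alpha1 - alpha_w), as in the proof of Lemma 2
    c = (gamma + F_inv(1.0 + alpha1 - alpha_w)) / math.sqrt(2.0)
    return (c <= math.sqrt(2.0) * gamma) == (alpha_w >= 2.0 * alpha1)

# Pairs with 0 < alpha1 < alpha_w < 1; 202k = 97j never holds here, so alpha_w != 2*alpha1.
grid = [(k / 97.0, j / 101.0) for k in range(1, 45) for j in range(1, 101) if j / 101.0 > k / 97.0]
print(all(lemma1_holds(a1, aw) for a1, aw in grid),
      all(lemma2_holds(a1, aw) for a1, aw in grid))   # True True
```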

1.2 Proof of Eq. (14)

In order to prove Eq. (14), we distinguish three cases: (i) \(0 < \alpha _1 \le \frac{\alpha _{w}^2}{4}\), (ii) \(\frac{\alpha _{w}^2}{4} \le \alpha _1 \le \frac{\alpha _{w}}{2}\), (iii) \(\alpha _1 \ge \frac{\alpha _{w}}{2}\). In situation (i), \(\alpha _1 \le \frac{\alpha _{w}}{2}\) since \(0 < \alpha _{w} < 1\). We find from (13) that \(c_{ BK}= \frac{\alpha _{w}^2}{4}\). So, \(\alpha _1 \le c_{ BK}\) and \(\alpha _{ind} = c_{ BK} - \log (c_{ BK}) c_{ BK} = \frac{\alpha _{w}^2}{4} \left( 1- \log \left( \frac{\alpha _{w}^2}{4}\right) \right) \) according to (12). In situation (ii), \(c_{ BK}= \frac{\alpha _{w}^2}{4}\) by (13). This implies \(\alpha _1 \ge c_{ BK}\) and consequently \(\alpha _{ind} = \alpha _1 - \log (\alpha _1) \frac{\alpha _{w}^2}{4}\). Finally, in situation (iii), we find from (13) that \(c_{ BK}= \alpha _1(\alpha _{w}-\alpha _1)\). Notice that we necessarily have \(\alpha _{w} > \alpha _1\), since both \(c_{ BK} > 0\) and \(\alpha _1 > 0\). So, \(0 < \alpha _1 < \alpha _{w} < 1\), which yields \(c_{ BK}=\alpha _1(\alpha _{w}-\alpha _1) \le \alpha _1\). This yields \(\alpha _{ind} = \alpha _1 - \log (\alpha _1) \alpha _1(\alpha _{w}-\alpha _1)\) and the proof is finished.
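The case analysis can be cross-checked numerically. The sketch below evaluates \(\alpha _{ind}\) in two ways: via \(c_{ BK}\) from Eq. (13) together with \(\psi =\max (\alpha _1, c_{ BK})\) in Eq. (12), and via the three closed-form cases (i), (ii) and (iii) just derived. It also estimates \(\alpha _{ind}\) by simulation, assuming (our reading of Eq. (12), not stated in this excerpt) that the design rejects if \(p_1 \le \alpha _1\) or, otherwise, if \(p_1 p_2 \le c_{ BK}\), with independent uniform \(p\)-values. All function names are hypothetical.

```python
import math
import random

def c_bk(alpha1: float, alpha_w: float) -> float:
    """Eq. (13): critical value of the product p1 * p2 for worst-case level alpha_w."""
    if alpha1 <= alpha_w / 2.0:
        return alpha_w ** 2 / 4.0
    return alpha1 * (alpha_w - alpha1)

def alpha_ind(alpha1: float, alpha_w: float) -> float:
    """Eq. (12): level under independence, with psi = max(alpha1, c_BK)."""
    c = c_bk(alpha1, alpha_w)
    psi = max(alpha1, c)
    return psi - math.log(psi) * c

def alpha_ind_case_wise(alpha1: float, alpha_w: float) -> float:
    """Cases (i)-(iii) of Eq. (14) as derived above."""
    if alpha1 <= alpha_w ** 2 / 4.0:                         # case (i)
        c = alpha_w ** 2 / 4.0
        return c * (1.0 - math.log(c))
    if alpha1 <= alpha_w / 2.0:                              # case (ii)
        return alpha1 - math.log(alpha1) * alpha_w ** 2 / 4.0
    return alpha1 - math.log(alpha1) * alpha1 * (alpha_w - alpha1)   # case (iii)

def simulate_alpha_ind(alpha1: float, alpha_w: float, n: int = 100_000, seed: int = 1) -> float:
    """Monte Carlo with independent U(0,1) p-values under the assumed decision rule."""
    rng = random.Random(seed)
    c = c_bk(alpha1, alpha_w)
    hits = 0
    for _ in range(n):
        p1, p2 = rng.random(), rng.random()
        if p1 <= alpha1 or p1 * p2 <= c:
            hits += 1
    return hits / n

print(alpha_ind(0.01, 0.1), alpha_ind_case_wise(0.01, 0.1), simulate_alpha_ind(0.01, 0.1))
```

For \(\alpha _1=0.01\) and \(\alpha _{w}=0.1\), case (ii) applies and both formulas give \(\alpha _{ind} \approx 0.0215\); the simulated rate should agree up to Monte Carlo error.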

1.3 Proof of Eq. (15)

For the choice \(\alpha _1 = c_{ BK}\), the assertion follows immediately from (14), since \(\alpha _1 = c_{ BK} \ne 0\) implies \(\alpha _1 = \frac{\alpha _{w}^2}{4}\) (in case (iii), \(c_{ BK}=\alpha _1\) would force \(\alpha _{w}-\alpha _1 = 1\), which is impossible). It remains to consider the case \(\alpha _1 = \frac{\alpha _{ind}}{2}\) and to show that any solution of (14) for given \(0 < \alpha _{w} < 1\) with \(\alpha _{ind}=2 \alpha _1\) has the property \(\frac{\alpha _{w}^2}{4} \le \alpha _1 \le \frac{\alpha _{w}}{2}\). So, let us first suppose that there were a solution of (14) with \(\alpha _1 \ge \frac{\alpha _{w}}{2}\) and \(2 \alpha _1 = \alpha _{ind}\). Then \(\alpha _{ind} = \alpha _1 - \log (\alpha _1) \alpha _1(\alpha _{w}-\alpha _1)\), and it follows that \(-\log (\alpha _1) (\alpha _{w}- \alpha _1) = 1\). Since \(-\log (x) < 1/x\) for all \(x > 0\), we obtain \(\alpha _{w}= \alpha _1 - \frac{1}{\log (\alpha _1)} > 2 \alpha _1\), in contradiction to \(\alpha _{w} \le 2 \alpha _1\). If we suppose that there were a solution of (14) with \(\alpha _1 \le \frac{\alpha _{w}^2}{4}\) and \(2 \alpha _1 = \alpha _{ind}\), then \(\alpha _1 = \frac{\alpha _{ind}}{2} = \frac{\alpha _{w}^2}{4} \frac{1- \log \left( \frac{\alpha _{w}^2}{4}\right) }{2}\). Since \(\alpha _1 \le \frac{\alpha _{w}^2}{4}\), this gives \(-\log \left( \frac{\alpha _{w}^2}{4}\right) \le 1\), i.e. \(\alpha _{w}^2 \ge \frac{4}{e}\) with \(e\) denoting Euler’s number. This contradicts \(\alpha _{w} \in (0,1)\). It remains to show that there is an \(\alpha _1\) with \(\frac{\alpha _{w}^2}{4} \le \alpha _1 \le \frac{\alpha _{w}}{2}\) which solves the equation \(2 \alpha _1 = \alpha _{ind} = \alpha _1 - \log (\alpha _1) \frac{\alpha _{w}^2}{4}\). To prove this, let \(\vartheta :(0,1) \rightarrow (0,\infty )\) be defined by \(\vartheta (x):=2\sqrt{\frac{x}{-\log (x)}}\).

We claim that \((0,1] \subset \vartheta ((0, \frac{1}{e}])\). This follows from the intermediate value theorem, since \(\vartheta \) is continuous, \(\vartheta (\frac{1}{e})= \frac{2}{\sqrt{e}} > 1\) and \(\lim _{x \rightarrow 0} \frac{x}{- \log (x)} = 0\). Thus, for all \(\alpha _{w} \in (0,1)\) there is an \(\alpha _1 \in (0,\frac{1}{e})\) with \(\vartheta (\alpha _1) = \alpha _{w}\), and this \(\alpha _1\) is unique, because \(\vartheta \) is strictly increasing on \((0,1)\). Therefore, let us choose \(\alpha _1 \in (0, \frac{1}{e})\) such that \(\vartheta (\alpha _1) = \alpha _{w}\). Then \(\alpha _1 \ge \frac{\alpha _{w}^2}{4}\), because \(\alpha _1 \le \frac{1}{e} \Leftrightarrow -\frac{1}{\log (\alpha _1)} \le 1\) and thus \(\alpha _{w}^2=\frac{4 \alpha _1}{ - \log (\alpha _1)} \le 4 \alpha _1\). Moreover, \(\alpha _1 \le \frac{\alpha _{w}}{2}\), because \(\alpha _1^2 \le \frac{\alpha _1}{-\log (\alpha _1)} = \frac{\alpha _{w}^2}{4}\) in view of \(-\log (x) \le 1/x\) for all \(x > 0\). Finally, notice that \(\vartheta (\alpha _1)= \alpha _{w} \Leftrightarrow \alpha _{ind}= \alpha _1 - \log (\alpha _1) \frac{\alpha _{w}^2}{4}\) if \(\alpha _{ind}= 2 \alpha _1\). All in all, we have proven the following result: Let \(\alpha _{ind}=2 \alpha _1\). Then for all \(\alpha _{w} \in (0,1)\) there is a unique \(\alpha _1 \in (0,\frac{1}{e})\) such that \(\alpha _{ind}= \alpha _1 - \log (\alpha _1) \frac{\alpha _{w}^2}{4}\) and \(\frac{\alpha _{w}^2}{4} \le \alpha _1 \le \frac{\alpha _{w}}{2}\). So, if \(\alpha _1= \alpha _{ind}/2\) and if the significance level \(\alpha _{ind}\) is exactly preserved for independent and uniformly distributed \(p\)-values, then the type I error rate in the worst case is \(\alpha _{w} = \sqrt{{2 \alpha _{ind}}/\left( {- \log \left( \frac{\alpha _{ind}}{2}\right) }\right) }\).
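For a concrete feel of the two choices of \(\alpha _1\) in Eq. (15), the sketch below evaluates the closed form \(\alpha _{w} = \sqrt{2 \alpha _{ind}/(-\log (\alpha _{ind}/2))}\) for \(\alpha _1=\alpha _{ind}/2\), and, for \(\alpha _1=c_{ BK}\), solves \(\alpha _1(1-\log (\alpha _1))=\alpha _{ind}\) by bisection and sets \(\alpha _{w}=2\sqrt{\alpha _1}\) (case (i) of Eq. (14) with \(\alpha _1=\alpha _{w}^2/4\)). The function names are hypothetical; bisection is valid because \(x \mapsto x(1-\log x)\) is strictly increasing on \((0,1)\).

```python
import math

def worst_case_half_split(alpha_ind: float) -> float:
    """alpha_w for alpha1 = alpha_ind / 2 (closed form derived above)."""
    return math.sqrt(2.0 * alpha_ind / (-math.log(alpha_ind / 2.0)))

def worst_case_alpha1_equals_cbk(alpha_ind: float):
    """alpha1 = c_BK: solve alpha1 * (1 - log(alpha1)) = alpha_ind, then alpha_w = 2*sqrt(alpha1)."""
    lo, hi = 1e-12, 1.0 / math.e            # x * (1 - log x) is increasing on (0, 1)
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if mid * (1.0 - math.log(mid)) < alpha_ind:
            lo = mid
        else:
            hi = mid
    alpha1 = 0.5 * (lo + hi)
    return alpha1, 2.0 * math.sqrt(alpha1)

alpha_ind = 0.025
alpha_w = worst_case_half_split(alpha_ind)        # about 0.107
alpha1 = alpha_ind / 2.0
# alpha1 lies in the range of case (ii) of Eq. (14), and case (ii) reproduces alpha_ind:
print(alpha_w ** 2 / 4.0 <= alpha1 <= alpha_w / 2.0,
      alpha1 - math.log(alpha1) * alpha_w ** 2 / 4.0)
print(worst_case_alpha1_equals_cbk(alpha_ind))
```

With \(\alpha _{ind}=0.025\), splitting \(\alpha _1=\alpha _{ind}/2\) thus allows a worst-case type I error rate of about 0.107, more than four times the nominal level.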

1.4 The choice \(\alpha _1= \alpha _{ind}/n\) in the limit \(n \rightarrow 1\)

Let \(0 < \alpha _{ind} < 1\) be fixed, and for \(n > 1\) let \(\alpha _1= \frac{\alpha _{ind}}{n}\) and let \(\alpha _{w}\) be such that \(\alpha _1, \alpha _{w}\) and \(\alpha _{ind}\) solve (14) with \(0 < \alpha _1 < \alpha _{w} < 1\). We show that \(\alpha _{w} \rightarrow \alpha _{ind}\) as \(n \rightarrow 1\). To see this, notice that according to (12) and by definition of \(\psi \) we have \(n \alpha _1 = \alpha _{ind} \ge \alpha _1 - \log (\psi ) c_{ BK}\), i.e. \(- \log (\psi ) c_{ BK} \le (n-1) \alpha _1\). Since \(- \log (\psi ) c_{ BK} \ge 0\) and \(0 \le \alpha _1 \le 1\), we thus obtain \(- \log (\psi ) c_{ BK} \rightarrow 0\) in the limit \(n \rightarrow 1\). So, \(- \log (\psi ) \rightarrow 0\) or \(c_{ BK} \rightarrow 0\). We claim that \(- \log (\psi ) \rightarrow 0\), i.e. \(\psi \rightarrow 1\), is impossible. To see this, assume that \(\psi =\max (\alpha _1,c_{ BK}) \rightarrow 1\) as \(n \rightarrow 1\). Then \(c_{ BK} \rightarrow 1\), because \(\alpha _1\) converges to the fixed constant \(\alpha _{ind} < 1\) as \(n \rightarrow 1\). Now, notice that \(\alpha _1(\alpha _{w}-\alpha _1) \le \frac{\alpha _{w}^2}{4}\), because the function \(x \mapsto x (\alpha _{w}-x)\) attains its global maximum at \(x=\frac{\alpha _{w}}{2}\). Thus we conclude from (13) that \(\frac{\alpha _{w}^2}{4} \ge c_{ BK} \rightarrow 1\) as \(n \rightarrow 1\), in contradiction to \(\alpha _{w} < 1\). Hence \(c_{ BK} \rightarrow 0\). Together with (13), this implies \(\alpha _{1}(\alpha _{w}-\alpha _1) \rightarrow 0\). Indeed, if \(2 \alpha _1 > \alpha _{w}\), then \(\alpha _{1}(\alpha _{w}-\alpha _1) = c_{ BK}\), and if \(2 \alpha _1 \le \alpha _{w}\), we obtain \(0 \le \alpha _1 (\alpha _{w} - \alpha _1) \le \frac{\alpha _{w}^2}{4} = c_{ BK} \rightarrow 0\). Since \(\alpha _1 \rightarrow \alpha _{ind} > 0\), we obtain \(\alpha _{w} - \alpha _1 \rightarrow 0\) and thus \(\alpha _{w} \rightarrow \alpha _{ind}\) as claimed.
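The limit can be illustrated numerically. For fixed \(\alpha _{ind}\) and \(\alpha _1=\alpha _{ind}/n\), the sketch below solves Eqs. (12) and (13) for \(\alpha _{w}\) by bisection and prints the gap \(\alpha _{w}-\alpha _{ind}\) as \(n\) decreases towards 1. Bisection is justified because, for fixed \(\alpha _1\), \(c_{ BK}\) increases with \(\alpha _{w}\) and \(\psi -c_{ BK}\log (\psi )\) increases with \(c_{ BK}\). The function names are hypothetical.

```python
import math

def alpha_ind_from(alpha1: float, alpha_w: float) -> float:
    """Eqs. (12)-(13): level under independence for given alpha1 and worst-case level alpha_w."""
    if alpha1 <= alpha_w / 2.0:
        c_bk = alpha_w ** 2 / 4.0
    else:
        c_bk = alpha1 * (alpha_w - alpha1)
    psi = max(alpha1, c_bk)
    return psi - math.log(psi) * c_bk

def solve_alpha_w(alpha1: float, alpha_ind: float, tol: float = 1e-14) -> float:
    """Bisection for alpha_w in (alpha1, 1) with alpha_ind_from(alpha1, alpha_w) = alpha_ind."""
    lo, hi = alpha1, 1.0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if alpha_ind_from(alpha1, mid) < alpha_ind:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

alpha_ind = 0.025
for n in (2.0, 1.5, 1.1, 1.01, 1.001):
    alpha_w = solve_alpha_w(alpha_ind / n, alpha_ind)
    print(n, alpha_w, alpha_w - alpha_ind)     # the gap shrinks as n -> 1
```

For \(n=2\) the result coincides with the closed form of Sect. 1.3, which serves as a check of the solver.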

1.5 Proof of Eq. (8)

Consider the adaptive design defined by (5). Unless otherwise specified, let \(z:= \sqrt{2} c\) and \(\gamma :=F^{-1}(1-\alpha _1)\) below. To determine the type I error rate in the worst case for design (5), we see from (7) that it suffices to derive \(\inf _C \mathbb {P}_{H_0}(Z_1 + Z_2 < z, Z_1 < \gamma )\), where the infimum is taken over all copulas \(C\). For this purpose, we first collect some essential mathematical results in Sect. 1.5.1 of the Appendix. The strategy is to derive an upper and a lower bound for \(\inf _C \mathbb {P}_{H_0}(Z_1 + Z_2 < z, Z_1 < \gamma )\) and to show that the two bounds coincide. A lower bound is derived in Sect. 1.5.2. A suitable upper bound is derived in Sect. 1.5.3 and is shown to coincide with the lower bound. On this basis, the type I error rate \(\alpha _{w}\) for uniformly distributed but arbitrarily dependent \(p\)-values stated in Eq. (8) is finally derived in Sect. 1.5.4.
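To make the target of this section concrete, the following Monte Carlo sketch compares the rejection rate under independence with that under one particular dependence structure. It assumes (not stated in this excerpt) that design (5) rejects at stage 1 if \(p_1 \le \alpha _1\) and otherwise rejects if \(p_1 p_2 \le c_{ BK}\), which is the rule behind Eq. (12), and it takes \(\alpha _{w} \ge 2\alpha _1\) so that \(c_{ BK}=\alpha _{w}^2/4\) by Eq. (13). The coupling `worst` is a hypothetical construction of ours, not taken from the paper: it keeps \(P_2\) uniform but sets \(P_2=\alpha _{w}-P_1\) whenever \(P_1 \le \alpha _{w}\), so that \(P_1P_2 \le \alpha _{w}^2/4\) on that event and the rejection probability equals \(\alpha _{w}\), in line with the worst case derived below.

```python
import random

def rejection_rate(alpha1, c_bk, coupling, n=100_000, seed=7):
    """Monte Carlo type I error of the assumed rule:
    reject if p1 <= alpha1, otherwise reject if p1 * p2 <= c_bk."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n):
        p1 = rng.random()
        p2 = coupling(p1, rng)
        if p1 <= alpha1 or p1 * p2 <= c_bk:
            hits += 1
    return hits / n

alpha1, alpha_w = 0.01, 0.05       # alpha_w >= 2 * alpha1, i.e. the branch z <= 2 * gamma
c_bk = alpha_w ** 2 / 4.0          # Eq. (13), first branch

def independent(p1, rng):
    return rng.random()

def worst(p1, rng):
    # Hypothetical coupling: P2 is still U(0, 1); on {P1 <= alpha_w} we have
    # P1 * P2 = P1 * (alpha_w - P1) <= alpha_w**2 / 4 = c_bk, so every such P1 rejects.
    return alpha_w - p1 if p1 <= alpha_w else p1

print(rejection_rate(alpha1, c_bk, independent))   # near alpha_ind = 0.0129 from Eq. (12)
print(rejection_rate(alpha1, c_bk, worst))         # near alpha_w = 0.05
```

With these parameters the constructed dependence roughly quadruples the type I error rate compared with independent \(p\)-values.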

1.5.1 Bounds in the worst case scenario

Let \(X\) and \(Y\) be real-valued random variables. Then according to Schmidt et al. (2014), Corollary A.1, we have

with \(W(u,v):= \max \{ u+v-1,0 \}\) denoting the Fréchet–Hoeffding lower bound. In particular, the left side of Eq. (19) is a lower bound for \(\inf _C \mathbb {P}_{}^C(X + Y < z, X < \gamma )\), and thus yields an upper bound for the type I error rate in the worst case. Here, recall that \(\mathbb {P}^C(A)\) denotes the probability of an event \(A \subset \mathbb {R}^2\) given that \(C\) is the copula of \(X\) and \(Y\). To find an upper bound for \(\inf _C \mathbb {P}_{}^C(X + Y < z, X < \gamma )\), we will make use of the following result. Let \(\Delta \) denote the set of all functions \(G:[- \infty , \infty ] \rightarrow [0,1]\) such that \(G\) is left-continuous and non-decreasing on \(\mathbb {R}\), \(G(+\infty )=1\) and \(G(- \infty )=0= \lim _{x \rightarrow -\infty } G(x)\). Then, according to Frank et al. (1987), Theorem 3.1 and Theorem 3.2, for any pair \(X\), \(Y\) of random variables such that the functions \(x \mapsto \mathbb {P}(X<x)\) and \(y \mapsto \mathbb {P}(Y<y)\) belong to \(\Delta \), we have:

Notice that Eqs. (19) and (20) are not restricted to continuous random variables \(X\) and \(Y\), but actually hold for any pair \(X\), \(Y\) of random variables such that \(\mathbb {P}(X<x)\), \(\mathbb {P}(Y<y) \in \Delta \). Equation (20) will be used to derive the desired upper bound.

1.5.2 Derivation of the lower bound

Given the two-stage adaptive design (5) with stage-wise \(p\)-values \(P_1\) and \(P_2\), let \(z:= \sqrt{2} c\) and \(\gamma :=F^{-1}(1-\alpha _1)\). Moreover, let \(Z_i:=F^{-1}(1-P_i)\), \(i=1,2\). Since \(F(0)=0\), \(Z_i\) is a random variable with distribution function

under \(H_0\). In particular, the density of \(Z_i\) vanishes on \((-\infty ,0)\) and is decreasing on \((0,\infty )\). The lower bound (19) for \(\inf _C \mathbb {P}_{}^C(Z_1 + Z_2 < z, Z_1 < \gamma )\) is given by the following theorem.

Theorem 1

Let \(\gamma , z \ge 0\), and let \(Z_1\), \(Z_2\) be two random variables with distribution functions \(F_{Z_1}, F_{Z_2}\) as stated in (21). Then

where \(W(u,v):= \max \{ u+v-1,0 \}\) denotes the Fréchet–Hoeffding lower bound.

Proof

Let \(\phi :=\sup _{x < \gamma } \left( F_{Z_1}(x)+ F_{Z_2}(z-x) \right) \). It suffices to show that \(\phi = \max \{2 F\left( \frac{z}{2}\right) ,1\}\) if \(z \le 2 \gamma \) and \(\phi = \max \{ F(\gamma ) + F(z - \gamma ), 1 \}\) for \(z \ge 2 \gamma \). By (21), \(F_{Z_1}(x)=0\) for \(x \le 0\) and \(F_{Z_1}(x)=F(x)\) for \(x \ge 0\). Similarly, \(F_{Z_2}(z-x)=0\) for \(x \ge z\) and \(F_{Z_2}(z-x)=F(z-x)\) for \(x \le z\). We now distinguish two cases: (i) \(z \le 2 \gamma \), (ii) \(z \ge 2 \gamma \).

(i) If \(z \le 2 \gamma \), we find that

$$\begin{aligned} F_{Z_1}(x)+F_{Z_2}(z-x) = {\left\{ \begin{array}{ll} F(z-x), &{} \text { if } \quad x \le 0 \le z \le 2 \gamma , \\ F(x) + F(z-x), &{} \text { if } \quad 0 \le x \le z \le 2 \gamma , \\ F(x), &{} \text { if } \quad 0 \le z \le x \le 2 \gamma . \end{array}\right. } \end{aligned}$$(23)

Consequently, \(\phi \) is the maximum of \(\sup _{x \le 0} F(z-x) = 1\), \(\sup _{0 \le x < \min (z, \gamma )} ( F(x) + F(z-x) )\) and \(\sup _{\min (z, \gamma ) \le x < \gamma } F(x)\). If the interval \([\min (z, \gamma ), \gamma )\) is non-empty, then \(\sup _{\min (z, \gamma ) \le x < \gamma } F(x) = F(\gamma ) \le 1\). According to Lemma 3, the supremum of \(F(x)+ F(z-x)\) over \(x\) is attained at \(x_{max}=\frac{z}{2} \in [0, \min (z, \gamma )]\). So, \(\phi = \max \{2 F\left( \frac{z}{2}\right) ,1 \}\).

(ii) If \(z \ge 2 \gamma \), we find that

$$\begin{aligned} F_{Z_1}(x)+F_{Z_2}(z-x) = {\left\{ \begin{array}{ll} F(z-x), &{} \text { if } \quad x \le 0 \le 2 \gamma \le z, \\ F(x) + F(z-x), &{} \text { if } \quad 0 \le x \le 2 \gamma \le z, \\ F(x) + F(z-x), &{} \text { if } \quad 0 \le 2 \gamma \le x \le z, \\ F(x), &{} \text { if } \quad 0 \le 2 \gamma \le z \le x. \end{array}\right. } \end{aligned}$$(24)

Consequently, \(\phi \) is the maximum of \(\sup _{x \le 0} F(z-x) = 1\) and \(\sup _{0 \le x < \gamma } (F(x) + F(z-x))\). The maximum of \(F(x)+F(z-x)\) is located at \(\frac{z}{2}\) and \(F(x)+F(z-x)\) is increasing on \((-\infty , \frac{z}{2})\). By assumption on \(z\), we have \(\frac{z}{2} \ge \gamma \). Thus \(\sup _{0 \le x < \gamma } (F(x)+F(z-x)) = F(\gamma )+F(z- \gamma )\), i.e. \(\phi \) is the maximum of \(1\) and \(F(\gamma )+F(z- \gamma )\). \(\square \)
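The case distinction in this proof can be checked on a grid. The sketch below approximates \(\phi =\sup _{x<\gamma }(F_{Z_1}(x)+F_{Z_2}(z-x))\), the quantity to which the proof reduces Theorem 1, by brute force and compares it with the closed form just derived. It assumes the exponential choice \(F(x)=1-e^{-x}\) together with the distribution functions (21). The grid starts at \(x=-50\), where \(F_{Z_2}(z-x)\) already equals 1 in double precision, so the supremum 1 approached as \(x \rightarrow -\infty \) is captured; names and grid size are hypothetical choices.

```python
import math

def F(x: float) -> float:
    return 1.0 - math.exp(-x)            # assumed F (standard exponential)

def F_Z(x: float) -> float:
    return F(x) if x >= 0.0 else 0.0     # distribution function (21) of Z_i

def phi_numeric(z: float, gamma: float, steps: int = 100_000) -> float:
    """Grid approximation of phi = sup_{x < gamma} F_Z1(x) + F_Z2(z - x)."""
    lo = -50.0                           # F_Z2(z - lo) is 1.0 in double precision
    best = 0.0
    for k in range(steps):               # k < steps keeps every grid point below gamma
        x = lo + (gamma - lo) * k / steps
        best = max(best, F_Z(x) + F_Z(z - x))
    return best

def phi_closed_form(z: float, gamma: float) -> float:
    """phi as derived in the proof of Theorem 1."""
    if z <= 2.0 * gamma:
        return max(2.0 * F(z / 2.0), 1.0)
    return max(F(gamma) + F(z - gamma), 1.0)

for z, gamma in [(2.0, 3.0), (8.0, 3.0), (5.0, 1.0)]:
    print(z, gamma, phi_numeric(z, gamma), phi_closed_form(z, gamma))
```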

Lemma 3

Let \(z \ge 0\). Let \(F:\mathbb {R} \rightarrow \mathbb {R}\) be a twice continuously differentiable function with \(F''(x)<0\) for all \(x \in \mathbb {R}\). Then \(F(x) + F(z-x)\) has a unique maximum in \(x=\frac{z}{2}\).

Proof

Let \(\varPsi (x):=F(x) + F(z-x)\) and \(f(x):=F'(x)\). Then \(\varPsi '(x)=f(x)-f(z-x)\). Since \(f' = F'' < 0\), \(f\) is strictly decreasing and hence injective, so \(\varPsi '(x)=0\) implies \(x=z-x\), i.e. \(x=\frac{z}{2}\). Since \(\varPsi ''(x) = f'(x)+f'(z-x) < 0\) for all \(x \in \mathbb {R}\), \(x=\frac{z}{2}\) is the unique maximum. \(\square \)

Lemma 4

Let \(z, \gamma \ge 0\). Let \(F:\mathbb {R} \rightarrow \mathbb {R}\) be a twice continuously differentiable function with \(F''(x)<0\) for all \(x \in \mathbb {R}\). Then \(2 F\left( \frac{z}{2}\right) \ge F(\gamma ) + F(z-\gamma )\).

Proof

Let \(\varPsi _{\gamma }(z):= F(\gamma ) + F(z-\gamma ) - 2 F\left( \frac{z}{2}\right) \), and let \(f:=F'\); primes on \(\varPsi _{\gamma }\) denote differentiation with respect to \(z\). Then \(\varPsi '_{\gamma }(z) = f(z-\gamma ) - f\left( \frac{z}{2}\right) \). Since \(f\) is strictly decreasing, \(\varPsi '_{\gamma }(z) = 0\) implies \(z-\gamma =\frac{z}{2}\), i.e. \(z=2 \gamma \). Moreover, \(\varPsi ''_{\gamma }(z) = f'(z-\gamma ) - \frac{1}{2} f'\left( \frac{z}{2}\right) \), so \(\varPsi ''_{\gamma }(2\gamma ) = \frac{1}{2} f'(\gamma ) < 0\). All in all, \(\varPsi _{\gamma }\) has a unique critical point at \(z= 2\gamma \), which is therefore its global maximum. Since \(\varPsi _{\gamma }(2\gamma ) = 0\), we obtain \(\varPsi _{\gamma }(z) \le 0\) for all \(z\), which is the assertion. \(\square \)
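Both lemmas are easy to probe numerically for a concrete concave \(F\). The sketch below assumes \(F(x)=1-e^{-x}\), for which Lemma 4 reduces to \(e^{-\gamma }+e^{-(z-\gamma )} \ge 2e^{-z/2}\), an instance of the inequality between arithmetic and geometric means. It locates the maximizer of \(F(x)+F(z-x)\) by golden-section search (a standard method for unimodal functions; `argmax_golden` is a hypothetical helper) and evaluates the gap in Lemma 4 on a grid.

```python
import math

def F(x: float) -> float:
    return 1.0 - math.exp(-x)             # assumed F with F'' < 0 on R

def argmax_golden(g, a: float, b: float, iters: int = 80) -> float:
    """Golden-section search for the maximizer of a unimodal function g on [a, b]."""
    inv_phi = (math.sqrt(5.0) - 1.0) / 2.0
    c, d = b - inv_phi * (b - a), a + inv_phi * (b - a)
    for _ in range(iters):
        if g(c) > g(d):                   # maximizer lies in [a, d]
            b, d = d, c
            c = b - inv_phi * (b - a)
        else:                             # maximizer lies in [c, b]
            a, c = c, d
            d = a + inv_phi * (b - a)
    return 0.5 * (a + b)

# Lemma 3: F(x) + F(z - x) is maximized at x = z / 2.
for z in (0.5, 2.0, 7.0):
    print(z / 2.0, argmax_golden(lambda x: F(x) + F(z - x), -10.0, 20.0))

# Lemma 4: 2F(z/2) - F(gamma) - F(z - gamma) >= 0, with equality at z = 2 * gamma.
gaps = [2.0 * F(0.1 * i / 2.0) - F(0.1 * j) - F(0.1 * i - 0.1 * j)
        for i in range(101) for j in range(101)]
print(min(gaps))                          # zero up to rounding
```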

1.5.3 Derivation of the upper bound

Given the two-stage adaptive design (5), let \(z:= \sqrt{2} c\) and \(\gamma :=F^{-1}(1-\alpha _1)\). For \(r \ge 1\), let \(f_r:\mathbb {R} \rightarrow \mathbb {R}\) denote the continuous, decreasing function

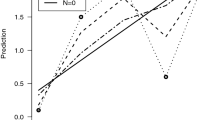

For \(z_1 \in (-\infty ,\gamma ]\), \(f_r\) is a straight line through the origin with slope \(-1\). At \(z_1 = \gamma \), the slope switches to \(-r \le -1\). Notice that \(\{ z_1 + z_2 < z, z_1 < \gamma \} \subset \{z_2-f_r(z_1) < z \} \subset \mathbb {R}^2\) for all \(r \ge 1\) (see Fig. 2), since \(f_r(z_1) = -z_1\) for \(z_1 < \gamma \). Moreover, \(-f_r\) is strictly increasing, so \(X:=-f_r(Z_1)\) and \(Y:=Z_2\) have the same copula as \(Z_1\) and \(Z_2\). In particular, \(\inf _C \mathbb {P}_{H_0}^{\, C}(Z_1 + Z_2 < z, Z_1 < \gamma ) \le \inf _C \mathbb {P}_{H_0}^{\, C}(X + Y < z)\) for all \(r \ge 1\). The left side of this inequality does not depend on \(r\). Consequently, we conclude

We derive the right side of Eq. (26) as follows. According to (20),

By (21), \(F_Y(y)=0\) for \(y \le 0\), and \(F_Y(y)=F(y)\) for \(y \ge 0\). The distribution function \(F_X(x)\) of \(X\) is given as follows:

Lemma 5

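Let \(Z\) be a random variable with distribution function \(F_Z\) of type (21), i.e. \(F_Z(z)=0\) for \(z \le 0\) and \(F_Z(z)=F(z)\) for all \(z \ge 0\). Let \(X:=-f_r(Z)\) for some \(r \ge 1\) with \(f_r\) as defined in (25) with \(\gamma \ge 0\). Then

$$\begin{aligned} F_X(x)= {\left\{ \begin{array}{ll} 0, &{} \text { if } \quad x \le 0,\\ F(x), &{} \text { if } \quad 0 \le x \le \gamma ,\\ F\left( \frac{x+(r-1)\gamma }{r} \right) , &{} \text { if } \quad x \ge \gamma . \end{array}\right. } \end{aligned}$$(28)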

Proof

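Let \(h_r(x):=-f_r(x)\). We claim that the inverse of \(h_r\) is given by

$$\begin{aligned} g_r(y)= {\left\{ \begin{array}{ll} y, &{} \text { if } \quad y \le \gamma ,\\ \frac{y-\gamma }{r} + \gamma , &{} \text { if } \quad y \ge \gamma . \end{array}\right. } \end{aligned}$$(29)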

Indeed, let \(x \le \gamma \). Then \(g_r(h_r(x))=g_r(x)=x\). Now let \(x \ge \gamma \). Then \(g_r(h_r(x)) = g_r(r(x-\gamma )+\gamma ) = \frac{\left( r(x-\gamma )+\gamma \right) - \gamma }{r} + \gamma =x\), since \(r(x-\gamma )+\gamma \ge \gamma \). Hence \(g_r \circ h_r =\) id. Similarly, \(h_r \circ g_r =\) id: for \(y \le \gamma \), \(h_r(g_r(y))=h_r(y)=y\), and for \(y \ge \gamma \), \(h_r(g_r(y)) = h_r\left( \frac{y - \gamma }{r} + \gamma \right) = r\left( \left( \frac{y - \gamma }{r} + \gamma \right) -\gamma \right) +\gamma =y\), because \(\frac{y - \gamma }{r} + \gamma \ge \gamma \). Since \(h_r\) is strictly increasing, \(\mathbb {P}(-f_r(Z) \le x) = \mathbb {P}(Z \le g_r(x)) = F_Z(g_r(x))\). By (29) and (21), we are finished. \(\square \)
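The objects \(f_r\), \(g_r\) and \(F_X\) of Eq. (25), Eq. (29) and Lemma 5 are straightforward to code. The sketch below implements them (the function names mirror the notation but are our own), assumes \(F(x)=1-e^{-x}\) so that \(Z=-\log (U)\) for a uniform \(U\), and compares \(F_X\) with a Monte Carlo estimate of \(\mathbb {P}(-f_r(Z) \le x)\).

```python
import math
import random

def F(x: float) -> float:
    return 1.0 - math.exp(-x)          # assumed F; Z then has distribution (21)

def f_r(z1: float, gamma: float, r: float) -> float:
    """Eq. (25): slope -1 up to gamma, slope -r beyond it (continuous at gamma)."""
    return -z1 if z1 <= gamma else -gamma - r * (z1 - gamma)

def g_r(y: float, gamma: float, r: float) -> float:
    """Eq. (29): inverse of h_r = -f_r."""
    return y if y <= gamma else (y - gamma) / r + gamma

def F_X(x: float, gamma: float, r: float) -> float:
    """Lemma 5: distribution function of X = -f_r(Z), i.e. F_Z(g_r(x))."""
    return 0.0 if x <= 0.0 else F(g_r(x, gamma, r))

def empirical_F_X(x: float, gamma: float, r: float, n: int = 100_000, seed: int = 3) -> float:
    """Monte Carlo estimate of P(-f_r(Z) <= x) with Z = -log(1 - U), U ~ U[0, 1)."""
    rng = random.Random(seed)
    hits = sum(-f_r(-math.log(1.0 - rng.random()), gamma, r) <= x for _ in range(n))
    return hits / n

gamma, r = 1.5, 4.0
for x in (0.5, 1.5, 3.0, 6.0):
    print(x, F_X(x, gamma, r), empirical_F_X(x, gamma, r))
```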

To maximize \(F_X(x)+F_Y(z-x)\), we consider three scenarios: (i) \(z \le \gamma \), (ii) \(\gamma \le z \le 2 \gamma \), (iii) \(z \ge 2 \gamma \).

(i) If \(z \le \gamma \), we find that

$$\begin{aligned} F_X(x)+F_Y(z-x)= {\left\{ \begin{array}{ll} F(z-x) , &{} \text { if } \quad x \le 0 \le z \le \gamma ,\\ F(x) + F(z-x), &{} \text { if } \quad 0 \le x \le z \le \gamma ,\\ F(x), &{} \text { if } \quad 0 \le z \le x \le \gamma ,\\ F\left( \frac{x+(r-1)\gamma }{r} \right) , &{} \text { if } \quad 0 \le z \le \gamma \le x. \end{array}\right. } \end{aligned}$$(30)

Thus \(\psi := \sup _{x+y=z} (F_X(x)+F_Y(y)) = \sup _{x \in \mathbb {R}} (F_X(x)+F_Y(z-x))\) is the maximum of \(\sup _{x \le 0} F(z-x) = 1\), \(\sup _{0 \le x \le z} (F(x)+F(z-x))\), \(\sup _{z \le x \le \gamma } F(x) = F(\gamma ) \le 1\) and \(\sup _{\gamma \le x} F \left( \frac{x+(r-1)\gamma }{r} \right) = 1\). According to Lemma 3, the maximum of \(F(x)+F(z-x)\) is attained at \(x=\frac{z}{2}\). Thus we find that \(\psi = \max \{ 1 , 2F \left( \frac{z}{2} \right) \}\).

(ii) If \(\gamma \le z \le 2 \gamma \), we find that

$$\begin{aligned} F_X(x)+F_Y(z-x)= {\left\{ \begin{array}{ll} F(z-x), &{} \text { if } \quad x \le 0 \le \gamma \le z \le 2\gamma ,\\ F(x) + F(z-x), &{} \text { if } \quad 0 \le x \le \gamma \le z \le 2\gamma ,\\ F \left( \frac{x+(r-1)\gamma }{r} \right) + F(z-x), &{} \text { if } \quad 0 \le \gamma \le x \le z \le 2\gamma ,\\ F \left( \frac{x+(r-1)\gamma }{r} \right) , &{} \text { if } \quad 0 \le \gamma \le z \le x \le 2\gamma ,\\ F \left( \frac{x+(r-1)\gamma }{r} \right) , &{} \text { if } \quad 0 \le \gamma \le z \le 2\gamma \le x. \end{array}\right. } \end{aligned}$$(31)

Thus \(\psi = \sup _{x \in \mathbb {R}} (F_X(x)+F_Y(z-x))\) is the maximum of \(\sup _{x \le 0} F(z-x) = 1\), \(\sup _{0 \le x \le \gamma } (F(x)+F(z-x))\), \(\sup _{\gamma \le x \le z} \left( F\left( \frac{x+(r-1)\gamma }{r} \right) + F(z-x) \right) \) and \(\sup _{z \le x} F \left( \frac{x+(r-1)\gamma }{r} \right) = 1\). The maximum of \(F(x)+F(z-x)\) is attained at \(\frac{z}{2}\). By assumption, \(z \le 2 \gamma \). Thus \(\sup _{0 \le x \le \gamma } (F(x)+F(z-x)) = 2F \left( \frac{z}{2} \right) \). All in all, \(\psi \) is the maximum of \(1\), \(2F \left( \frac{z}{2} \right) \) and \(\sup _{\gamma \le x \le z} \left( F\left( \frac{x+(r-1)\gamma }{r} \right) + F(z-x) \right) \).

(iii) If \(z \ge 2 \gamma \), we find that

$$\begin{aligned} F_X(x)+F_Y(z-x)= {\left\{ \begin{array}{ll} F(z-x), &{} \text { if } \quad x \le 0 \le \gamma \le 2 \gamma \le z,\\ F(x) + F(z-x), &{} \text { if } \quad 0 \le x \le \gamma \le 2\gamma \le z,\\ F \left( \frac{x+(r-1)\gamma }{r} \right) + F(z-x), &{} \text { if } \quad 0 \le \gamma \le x \le 2 \gamma \le z,\\ F \left( \frac{x+(r-1)\gamma }{r} \right) + F(z-x), &{} \text { if } \quad 0 \le \gamma \le 2\gamma \le x \le z,\\ F \left( \frac{x+(r-1)\gamma }{r} \right) , &{} \text { if } \quad 0 \le \gamma \le 2\gamma \le z \le x. \end{array}\right. } \end{aligned}$$(32)

Thus \(\psi = \sup _{x \in \mathbb {R}} (F_X(x)+F_Y(z-x))\) is the maximum of \(\sup _{x \le 0} F(z-x) = 1\), \(\sup _{0 \le x \le \gamma } (F(x)+F(z-x))\), \(\sup _{\gamma \le x \le z} \left( F\left( \frac{x+(r-1)\gamma }{r}\right) + F(z-x)\right) \) and \(\sup _{z \le x} F \left( \frac{x+(r-1)\gamma }{r} \right) = 1\). The maximum of \(F(x)+F(z-x)\) is attained at \(\frac{z}{2}\) and \(F(x)+F(z-x)\) is increasing on \((-\infty , \frac{z}{2})\). By assumption on \(z\), we have \(\frac{z}{2} \ge \gamma \). Thus \(\sup _{0 \le x \le \gamma } (F(x)+F(z-x)) = F(\gamma )+F(z- \gamma )\). All in all, \(\psi \) is the maximum of \(1\), \(F(\gamma )+F(z- \gamma )\) and \(\sup _{\gamma \le x \le z} \left( F \left( \frac{x+(r-1)\gamma }{r} \right) + F(z-x) \right) \).

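So, it remains to determine \(\sup _{\gamma \le x \le z} \left( F \left( \frac{x+(r-1)\gamma }{r} \right) + F(z-x) \right) \). For this purpose, we study the extrema of the function

$$\begin{aligned} \varPsi _z(x):= F\left( \frac{x+(r-1)\gamma }{r}\right) + F(z-x). \end{aligned}$$(33)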

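We claim that \(\varPsi _z(x)\) has a unique extremum in \(x=x_e\), which is actually a maximum. The condition for an extremum \(x_{e}\) of (33) is \(G:=\frac{\partial }{\partial x} \varPsi _z(x)=0\), i.e.:

$$\begin{aligned} \frac{1}{r} f\left( \frac{x_{e}+(r-1)\gamma }{r}\right) - f(z-x_{e}) = 0, \end{aligned}$$(34)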

with \(f(x):=F'(x)=\frac{\partial F(x)}{\partial x}\). To prove the existence of an extremum, we have to show that for all \(z, \gamma \ge 0\), \(r \ge 1\) there is an \(x_e \in \mathbb {R}\) such that (34) holds. To see this, notice that by (34) any such \(x_{e}\) is connected with \(z\) as follows:

$$\begin{aligned} z = z(x_{e}) = x_{e} + f^{-1}\left( \frac{1}{r} f\left( \frac{x_{e}+(r-1)\gamma }{r}\right) \right) . \end{aligned}$$(35)

By assumption on \(F\), \(\lim _{y \rightarrow 0 +} f^{-1}(y) = \infty \) and \(\lim _{y \rightarrow \infty } f^{-1}(y) = -\infty \). Since \(\lim _{x_e \rightarrow \infty } \frac{1}{r} f \left( \frac{x_e + (r-1) \gamma }{r} \right) = 0\) and \(\lim _{x_e \rightarrow -\infty } \frac{1}{r} f \left( \frac{x_e + (r-1) \gamma }{r} \right) = \infty \), we find that \(\lim _{x_e \rightarrow \pm \infty } z(x_e) = \pm \infty \). Thus, by continuity of \(z(x_e)\) in \(x_e\) there exists an \(x_e\) such that \(z(x_e)=z\) for any given \(z, \gamma \) and \(r \ge 1\). To prove uniqueness, differentiate the function \(F(ax+b) + F(z-x)\), \(a, b \in \mathbb {R}\), twice with respect to \(x\). This yields \(a^2 f'(a x+b)+f'(z-x) < 0\) for all \(x \in \mathbb {R}\). In particular, each extremum is a maximum. Thus, since \(F\) is continuously differentiable, there can be at most one \(x \in \mathbb {R}\) which maximizes \(F(ax+b) + F(z-x)\).

We now study the location of the unique maximum \(x_{e}\) of \(F\left( \frac{x+(r-1)\gamma }{r}\right) + F(z-x)\) as a function of \(z\) and compare it to the cutoff value \(\gamma \), at which the branch of \(F_X(x)+F_Y(z-x)\) switches. Recall that \(z, \gamma \ge 0\) and \(r \ge 1\). By (35), the maximum \(x_{e}\) depends on \(z\), i.e. \(x_{e}=x_{e}(z)\). We claim that \(x_{e}(z)\) is a strictly increasing function of \(z\). This follows from implicit differentiation of (34):

$$\begin{aligned} \frac{\mathrm {d} x_{e}}{\mathrm {d} z} = \frac{f'(z-x_{e})}{f'(z-x_{e}) + \frac{1}{r^2} f'\left( \frac{x_{e}+(r-1)\gamma }{r}\right) } > 0, \end{aligned}$$(36)

because \(f'(x)<0\) for all \(x \in \mathbb {R}\). We claim that \(x_e \le \gamma \) if and only if \(z \le \gamma + f^{-1}(f(\gamma )/r)\). To see this, we first determine the value \(z_{\gamma }\) for \(z\) such that \(x_{e}(z_{\gamma })=\gamma \). From (35), we find with \(x_e=x_{e}(z_\gamma )=\gamma \) that \(z_{\gamma } = \gamma + f^{-1}(f(\gamma )/r)\). Now, according to (36) \(x_{e}(z)\) is an increasing function in \(z\). In particular, \(x_{e} \le \gamma \) if and only if \(z \le \gamma + f^{-1}(f(\gamma )/r)\). All in all, we may summarize as follows:

Proposition 1

Let \(z, \gamma \ge 0\) and \(r \ge 1\). Let \(x_{e}\) denote the unique maximum of \(F \left( \frac{x+(r-1)\gamma }{r} \right) + F(z-x)\). Then the following statements hold:

- \(x_{e} \le \gamma \) \(\Leftrightarrow \) \(z \le \gamma + f^{-1}(f(\gamma )/r)\).
- \(F \left( \frac{x+(r-1)\gamma }{r} \right) + F(z-x)\) is strictly increasing on \((-\infty , x_{e})\) and strictly decreasing on \((x_{e},\infty )\).
- As \(x \rightarrow \pm \infty \), \(F \left( \frac{x+(r-1)\gamma }{r} \right) + F(z-x) \rightarrow - \infty \).
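For the exponential choice \(F(x)=1-e^{-x}\) (an assumption made only for this sketch), \(f(x)=e^{-x}\) and \(f^{-1}(y)=-\log (y)\), so Eq. (35) becomes linear in \(x_{e}\): \(z = x_{e} + \frac{x_{e}+(r-1)\gamma }{r} + \log (r)\). Hence \(x_{e}=\frac{rz-r\log (r)-(r-1)\gamma }{r+1}\), whose slope \(\frac{r}{r+1}>0\) in \(z\) agrees with (36), and the threshold in Proposition 1 is \(2\gamma +\log (r)\). The sketch compares this closed form with a bisection on the first-order condition (34) and checks the equivalence in the first statement of Proposition 1; the function names are hypothetical.

```python
import math

def f(x: float) -> float:
    return math.exp(-x)            # f = F' for the assumed F(x) = 1 - exp(-x)

def x_e_closed(z: float, gamma: float, r: float) -> float:
    """Solution of Eq. (35) for this F: z = x_e + (x_e + (r-1)*gamma)/r + log(r)."""
    return (r * z - r * math.log(r) - (r - 1.0) * gamma) / (r + 1.0)

def x_e_bisect(z: float, gamma: float, r: float, lo: float = -50.0, hi: float = 50.0) -> float:
    """Root of the first-order condition (34); its left side is decreasing in x."""
    def G(x: float) -> float:
        return f((x + (r - 1.0) * gamma) / r) / r - f(z - x)
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if G(mid) > 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

def z_threshold(gamma: float, r: float) -> float:
    """gamma + f^{-1}(f(gamma)/r) from Proposition 1 (= 2*gamma + log(r) here)."""
    return gamma - math.log(f(gamma) / r)

for z, gamma, r in [(5.0, 2.0, 3.0), (9.0, 1.0, 10.0)]:
    xe = x_e_closed(z, gamma, r)
    print(xe, x_e_bisect(z, gamma, r), xe <= gamma, z <= z_threshold(gamma, r))
```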

Now we are in a position to determine \(\sup _{\gamma \le x \le z} \left( F \left( \frac{x+(r-1)\gamma }{r} \right) + F(z-x) \right) \).

Proposition 2

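Let \(z \ge \gamma \ge 0\). Then there exists an \(r_0 \ge 1\) such that for all \(r \ge r_0\)

$$\begin{aligned} \sup _{\gamma \le x \le z} \left( F \left( \frac{x+(r-1)\gamma }{r} \right) + F(z-x) \right) = F(\gamma ) + F(z-\gamma ). \end{aligned}$$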

Proof

According to the characterization given in Proposition 1, the function \(F \left( \frac{x+(r-1)\gamma }{r} \right) + F(z-x)\) has a unique maximum \(x_e\) and is decreasing on \([x_e, \infty )\). Thus it suffices to show that there is an \(r_0\) such that \(x_e \le \gamma \) for all \(r \ge r_0\): then the supremum over \([\gamma ,z]\) is attained at \(x=\gamma \), where the function equals \(F(\gamma )+F(z-\gamma )\). By Proposition 1, \(x_e \le \gamma \) is equivalent to \(z \le \gamma + f^{-1}(f(\gamma )/r)\). Thus, it suffices to show that \(\chi (r):=f^{-1}(f(\gamma )/r)\) is strictly increasing in \(r\) with \(\lim _{r \rightarrow \infty } \chi (r) = \infty \). This holds true, because \(g(r):=f(\gamma )/r\) is strictly decreasing in \(r\) with \(\lim _{r \rightarrow \infty } g(r)=0\) since \(f(\gamma )>0\), and because \(f^{-1}(y)\) is strictly decreasing in \(y\) with \(\lim _{y \rightarrow 0 +} f^{-1}(y)= \infty \). \(\square \)
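Under the exponential assumption \(F(x)=1-e^{-x}\), the condition \(z \le \gamma + f^{-1}(f(\gamma )/r) = 2\gamma + \log (r)\) gives the explicit choice \(r_0=\max \{1, e^{z-2\gamma }\}\). The sketch below (hypothetical helper names) computes this \(r_0\) and checks on a grid that the supremum over \([\gamma ,z]\) equals \(F(\gamma )+F(z-\gamma )\) for \(r \ge r_0\), while it is strictly larger for smaller \(r\) when \(z>2\gamma \).

```python
import math

def F(x: float) -> float:
    return 1.0 - math.exp(-x)      # assumed F; then f^{-1}(f(gamma)/r) = gamma + log(r)

def r0(z: float, gamma: float) -> float:
    """Smallest r >= 1 with z <= gamma + f^{-1}(f(gamma)/r) = 2*gamma + log(r)."""
    return max(1.0, math.exp(z - 2.0 * gamma))

def sup_on_segment(z: float, gamma: float, r: float, steps: int = 20_000) -> float:
    """Grid value of sup over gamma <= x <= z of F((x + (r-1)*gamma)/r) + F(z - x)."""
    xs = (gamma + (z - gamma) * k / steps for k in range(steps + 1))
    return max(F((x + (r - 1.0) * gamma) / r) + F(z - x) for x in xs)

z, gamma = 7.0, 2.0
target = F(gamma) + F(z - gamma)
for r in (1.0, r0(z, gamma) / 2.0, r0(z, gamma), 100.0):
    print(r, sup_on_segment(z, gamma, r) - target)   # > 0 below r0, zero up to rounding from r0 on
```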

Theorem 2

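Let \(z, \gamma \ge 0\), and let \(X:=-f_r(Z_1)\) and \(Y:=Z_2\) with \(f_r\) defined in (25). Then there is an \(r_0\) such that for all \(r \ge r_0\):

$$\begin{aligned} \sup _{x \in \mathbb {R}} \left( F_X(x)+F_Y(z-x)\right) = {\left\{ \begin{array}{ll} \max \left\{ 1, 2F\left( \frac{z}{2}\right) \right\} , &{} \text { if } \quad z \le 2\gamma ,\\ \max \left\{ 1, F(\gamma ) + F(z-\gamma )\right\} , &{} \text { if } \quad z \ge 2\gamma . \end{array}\right. } \end{aligned}$$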

Proof

Let \(\psi := \sup _{x \in \mathbb {R}} (F_X(x)+F_Y(z-x))\). If \(z \le \gamma \), we showed immediately after Eq. (30) that \(\psi = \max \{ 1 , 2F\left( \frac{z}{2} \right) \}\). If \(\gamma \le z \le 2 \gamma \), we showed immediately after Eq. (31) that \(\psi \) is the maximum of \(1\), \(2F\left( \frac{z}{2} \right) \) and \(\sup _{\gamma \le x \le z} \left( F \left( \frac{x+(r-1)\gamma }{r}\right) + F(z-x) \right) \). According to Proposition 2, there is an \(r_0\) such that the latter is equal to \(F(\gamma ) + F(z - \gamma )\) for all \(r \ge r_0\). So choose \(r \ge r_0\). Then, in view of Lemma 4, \(\psi \) is the maximum of \(1\) and \(2F\left( \frac{z}{2}\right) \) if \(\gamma \le z \le 2 \gamma \). Finally assume that \(z \ge 2 \gamma \). Then we showed immediately after Eq. (32) that \(\psi \) is the maximum of \(1\), \(F(\gamma )+F(z- \gamma )\) and \(\sup _{\gamma \le x \le z} \left( F\left( \frac{x+(r-1)\gamma }{r} \right) + F(z-x)\right) \). Again, by Proposition 2, there is an \(r_0\) such that \(\sup _{\gamma \le x \le z} \left( F \left( \frac{x+(r-1)\gamma }{r}\right) + F(z-x) \right) \) is equal to \(F(\gamma ) + F(z - \gamma )\) for all \(r \ge r_0\). So, \(\psi \) is the maximum of \(1\) and \(F(\gamma )+F(z- \gamma )\) in this situation. \(\square \)
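Theorem 2 can be sanity-checked by brute force. The sketch below maximizes \(F_X(x)+F_Y(z-x)\) over a grid, augmented by the candidate points \(\gamma \) and \(z/2\), for some \(r \ge r_0\). It assumes \(F(x)=1-e^{-x}\); for this \(F\), \(f^{-1}(f(\gamma )/r)=\gamma +\log (r)\), so Proposition 2 holds with \(r_0=\max \{1,e^{z-2\gamma }\}\). It also shows that for \(r=1\), i.e. without the kink in \(f_r\), the supremum can exceed the value in Theorem 2 when \(z>2\gamma \). The names are hypothetical.

```python
import math

def F(x: float) -> float:
    return 1.0 - math.exp(-x)                        # assumed F (standard exponential)

def F_Y(y: float) -> float:
    return F(y) if y >= 0.0 else 0.0                 # Eq. (21)

def F_X(x: float, gamma: float, r: float) -> float:  # Lemma 5
    if x <= 0.0:
        return 0.0
    return F(x) if x <= gamma else F((x + (r - 1.0) * gamma) / r)

def psi_numeric(z: float, gamma: float, r: float, steps: int = 50_000) -> float:
    """sup_x F_X(x) + F_Y(z - x) over a grid on [-60, z + 5], plus the points gamma and z/2."""
    lo, hi = -60.0, z + 5.0
    xs = [lo + (hi - lo) * k / steps for k in range(steps + 1)] + [gamma, z / 2.0]
    return max(F_X(x, gamma, r) + F_Y(z - x) for x in xs)

def psi_theorem2(z: float, gamma: float) -> float:
    """Closed form of Theorem 2 (valid for r >= r0)."""
    if z <= 2.0 * gamma:
        return max(1.0, 2.0 * F(z / 2.0))
    return max(1.0, F(gamma) + F(z - gamma))

z, gamma = 7.0, 2.0
r = 2.0 * max(1.0, math.exp(z - 2.0 * gamma))       # some r >= r0 for this F
print(psi_numeric(z, gamma, r), psi_theorem2(z, gamma), psi_numeric(z, gamma, 1.0))
```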

Thus, for any fixed \(z, \gamma \ge 0\), Theorem 2 yields in view of Eq. (27)

1.5.4 Worst case designs without futility stop

Let \(0 < \alpha _1 < \alpha _{w} < 1\), and let \(c\) denote the design parameter of a two-stage adaptive design with conditional error function given by (5). The type I error rate \(\alpha _{w}\) for uniformly distributed but arbitrarily dependent \(p\)-values is \( \alpha _{w} = 1- \inf _C \mathbb {P}_{H_0}^C(Z_1 + Z_2 < z, Z_1 < \gamma ) \) with \(z = \sqrt{2} c\), \(\gamma = F^{-1}(1- \alpha _1)\), \(Z_i:=F^{-1}(1-P_i)\), and with \(P_i\) denoting the \(p\)-value of stage \(i\), \(i=1,2\) (see also Eq. (7)). According to Eqs. (26) and (19), we have

under \(H_0\) with \(X:=-f_r(Z_1)\), \(f_r\) as defined in (25) and \(Y:=Z_2\). Thus it follows from (39) and (22) that

Thus, in view of (7), we have

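Since \(0< \alpha _{w} < 1\) in situations of practical relevance, we restrict attention to choices of \(z\) and \(\gamma \) that are admissible in the sense that \(0 < 2 \left( 1- F\left( \frac{z}{2}\right) \right) < 1\) if \(z \le 2 \gamma \), and \(0 < 2- F(\gamma ) - F( z - \gamma ) < 1\) if \(z > 2 \gamma \). For such admissible \(z\) and \(\gamma \), we find that

$$\begin{aligned} \alpha _{w} = {\left\{ \begin{array}{ll} 2 - 2F\left( \frac{z}{2}\right) , &{} \text { if } \quad z \le 2 \gamma , \\ 2 - F(\gamma ) - F(z-\gamma ), &{} \text { if } \quad z > 2 \gamma , \end{array}\right. } \end{aligned}$$

which, with \(z = \sqrt{2} c\) and \(\gamma = F^{-1}(1-\alpha _1)\), is Eq. (8).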


About this article

Cite this article

Schmidt, R., Faldum, A. & Gerß, J. Adaptive designs with arbitrary dependence structure based on Fisher’s combination test. Stat Methods Appl 24, 427–447 (2015). https://doi.org/10.1007/s10260-014-0291-6
