Abstract

This paper considers nonconvex distributed constrained optimization over networks, modeled as directed (possibly time-varying) graphs. We introduce the first algorithmic framework for the minimization of the sum of a smooth nonconvex (nonseparable) function, the agents’ sum-utility, plus a difference-of-convex function with a nonsmooth convex part. This general formulation arises in many applications, from statistical machine learning to engineering. The proposed distributed method combines successive convex approximation techniques with a judiciously designed perturbed push-sum consensus mechanism that aims to locally track the gradient of the (smooth part of the) sum-utility. A sublinear convergence rate is proved when a fixed step-size (possibly different among the agents) is employed, whereas asymptotic convergence to stationary solutions is proved using a diminishing step-size. Numerical results show that our algorithms compare favorably with current schemes on both convex and nonconvex problems.
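The perturbed push-sum mechanism mentioned above builds on push-sum (ratio) consensus: each agent propagates both a value and a weight along directed edges using column-stochastic weights, and estimates the network average as the ratio of the two. The sketch below shows only this unperturbed building block, in the spirit of Kempe et al. (listed in the references). It assumes a fixed, strongly connected digraph with self-loops in which each node splits its mass equally among itself and its out-neighbors; it omits the gradient-tracking perturbation and the convex-approximation step, and the function names are hypothetical.

```python
# Minimal push-sum (ratio) consensus over a fixed directed graph.
# Assumption: each node j splits its mass equally among itself and its
# out-neighbors, giving a column-stochastic (not doubly stochastic) matrix A.

def column_stochastic_weights(out_neighbors):
    """A[i][j] = 1/(outdeg(j) + 1) if j -> i or i == j, else 0."""
    n = len(out_neighbors)
    A = [[0.0] * n for _ in range(n)]
    for j, outs in enumerate(out_neighbors):
        targets = [j] + list(outs)
        for i in targets:
            A[i][j] = 1.0 / len(targets)
    return A

def push_sum_average(values, out_neighbors, iters=200):
    """Return each node's estimate z_i / phi_i of the network average."""
    A = column_stochastic_weights(out_neighbors)
    n = len(values)
    z = [float(v) for v in values]  # pushed mass of the values
    phi = [1.0] * n                 # pushed mass of the weights, phi^0 = 1
    for _ in range(iters):
        z = [sum(A[i][j] * z[j] for j in range(n)) for i in range(n)]
        phi = [sum(A[i][j] * phi[j] for j in range(n)) for i in range(n)]
    return [z[i] / phi[i] for i in range(n)]

if __name__ == "__main__":
    # Directed ring 0->1->2->3->0 plus the extra edge 0->2, so in-degrees differ
    # and A is column-stochastic but not row-stochastic.
    graph = [[1, 2], [2], [3], [0]]
    print(push_sum_average([1.0, 2.0, 3.0, 10.0], graph))  # each entry is close to 4.0
```

Dividing by the weight corrects for A not being doubly stochastic: on its own, z converges to the right Perron vector of A scaled by the sum of the initial values, which is in general not a multiple of the all-ones vector.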


References

Ahn, M., Pang, J., Xin, J.: Difference-of-convex learning: directional stationarity, optimality, and sparsity. SIAM J. Optim. 27(3), 1637–1665 (2017). https://doi.org/10.1137/16M1084754

Bertsekas, D.P.: Nonlinear Programming, 2nd edn. Athena Scientific, Belmont (1999)

Bertsekas, D.P., Tsitsiklis, J.N.: Gradient convergence in gradient methods with errors. SIAM J. Optim. 10(3), 627–642 (2000)

Bianchi, P., Jakubowicz, J.: Convergence of a multi-agent projected stochastic gradient algorithm for non-convex optimization. IEEE Trans. Autom. Control 58(2), 391–405 (2013)

Bild, A.H., et al.: Oncogenic pathway signatures in human cancers as a guide to targeted therapies. Nature 439(7074), 353 (2006)

Bottou, L., Curtis, F.E., Nocedal, J.: Optimization methods for large-scale machine learning. SIAM Rev. 60(2), 223–311 (2018)

Bradley, P.S., Mangasarian, O.L.: Feature selection via concave minimization and support vector machines. In: Proceedings of the Fifteenth International Conference on Machine Learning (ICML 1998), vol. 98, pp. 82–90 (1998)

Cattivelli, F.S., Sayed, A.H.: Diffusion LMS strategies for distributed estimation. IEEE Trans. Signal Process. 58(3), 1035–1048 (2010)

Chang, T.H.: A proximal dual consensus ADMM method for multi-agent constrained optimization. IEEE Trans. Signal Process. 64(14), 3719–3734 (2016)

Chang, T.H., Hong, M., Wang, X.: Multi-agent distributed optimization via inexact consensus ADMM. IEEE Trans. Signal Process. 63(2), 482–497 (2015)

Chen, J., Sayed, A.H.: Diffusion adaptation strategies for distributed optimization and learning over networks. IEEE Trans. Signal Process. 60(8), 4289–4305 (2012)

Di Lorenzo, P., Scutari, G.: NEXT: in-network nonconvex optimization. IEEE Trans. Signal Inf. Process. Netw. 2(2), 120–136 (2016)

Di Lorenzo, P., Scutari, G.: Distributed nonconvex optimization over networks. In: Proceedings of the IEEE 6th International Workshop on Computational Advances in Multi-sensor Adaptive Processing (CAMSAP 2015), Cancun, Mexico (2015)

Di Lorenzo, P., Scutari, G.: Distributed nonconvex optimization over time-varying networks. In: Proceedings of the IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP 2016), Shanghai (2016)

Facchinei, F., Lampariello, L., Scutari, G.: Feasible methods for nonconvex nonsmooth problems with applications in green communications. Math. Program. 164(1–2), 55–90 (2017)

Facchinei, F., Scutari, G., Sagratella, S.: Parallel selective algorithms for nonconvex big data optimization. IEEE Trans. Signal Process. 63(7), 1874–1889 (2015)

Fan, J., Li, R.: Variable selection via nonconcave penalized likelihood and its oracle properties. J. Am. Stat. Assoc. 96(456), 1348–1360 (2001)

Friedman, J., Hastie, T., Tibshirani, R.: The Elements of Statistical Learning: Data Mining, Inference, and Prediction. Springer Series in Statistics, vol. 1. Springer, New York (2009)

Fu, W.J.: Penalized regressions: the bridge versus the lasso. J. Comput. Graph. Stat. 7(3), 397–416 (1998)

Gharesifard, B., Cortés, J.: When does a digraph admit a doubly stochastic adjacency matrix? In: Proceedings of the 2010 American Control Conference, pp. 2440–2445 (2010)

Hong, M., Hajinezhad, D., Zhao, M.: Prox-PDA: the proximal primal–dual algorithm for fast distributed nonconvex optimization and learning over networks. In: Proceedings of the 34th International Conference on Machine Learning (ICML 2017), vol. 70, pp. 1529–1538 (2017)

Jakovetić, D., Xavier, J., Moura, J.M.: Cooperative convex optimization in networked systems: augmented Lagrangian algorithms with directed gossip communication. IEEE Trans. Signal Process. 59(8), 3889–3902 (2011)

Jakovetić, D., Xavier, J., Moura, J.M.: Fast distributed gradient methods. IEEE Trans. Autom. Control 59(5), 1131–1146 (2014)

Kempe, D., Dobra, A., Gehrke, J.: Gossip-based computation of aggregate information. In: Proceedings of the 44th Annual IEEE Symposium on Foundations of Computer Science, Cambridge, MA, USA, pp. 482–491 (2003)

Mokhtari, A., Shi, W., Ling, Q., Ribeiro, A.: DQM: decentralized quadratically approximated alternating direction method of multipliers. arXiv:1508.02073 (2015)

Mokhtari, A., Shi, W., Ling, Q., Ribeiro, A.: A decentralized second-order method with exact linear convergence rate for consensus optimization. IEEE Trans. Signal Inf. Process. Netw. 2(4), 507–522 (2016)

Nedic, A., Olshevsky, A.: Distributed optimization over time-varying directed graphs. IEEE Trans. Autom. Control 60(3), 601–615 (2015)

Nedić, A., Ozdaglar, A., Parrilo, P.A.: Constrained consensus and optimization in multi-agent networks. IEEE Trans. Autom. Control 55(4), 922–938 (2010)

Nedich, A., Olshevsky, A., Ozdaglar, A., Tsitsiklis, J.N.: On distributed averaging algorithms and quantization effects. IEEE Trans. Autom. Control 54(11), 2506–2517 (2009)

Nedich, A., Olshevsky, A., Shi, W.: Achieving geometric convergence for distributed optimization over time-varying graphs. SIAM J. Optim. 27(4), 2597–2633 (2017)

Nedich, A., Ozdaglar, A.: Distributed subgradient methods for multi-agent optimization. IEEE Trans. Autom. Control 54(1), 48–61 (2009)

Palomar, D.P., Chiang, M.: Alternative distributed algorithms for network utility maximization: framework and applications. IEEE Trans. Autom. Control 52(12), 2254–2269 (2007)

Qu, G., Li, N.: Harnessing smoothness to accelerate distributed optimization. arXiv:1605.07112 (2016)

Rao, B.D., Kreutz-Delgado, K.: An affine scaling methodology for best basis selection. IEEE Trans. Signal Process. 47(1), 187–200 (1999)

Sayed, A.H., et al.: Adaptation, learning, and optimization over networks. Found. Trends Mach. Learn. 7(4–5), 311–801 (2014)

Scutari, G., Facchinei, F., Lampariello, L.: Parallel and distributed methods for constrained nonconvex optimization. Part I: theory. IEEE Trans. Signal Process. 65(8), 1929–1944 (2017)

Scutari, G., Facchinei, F., Song, P., Palomar, D.P., Pang, J.S.: Decomposition by partial linearization: parallel optimization of multi-agent systems. IEEE Trans. Signal Process. 62(3), 641–656 (2014)

Shi, W., Ling, Q., Wu, G., Yin, W.: EXTRA: an exact first-order algorithm for decentralized consensus optimization. SIAM J. Optim. 25(2), 944–966 (2015)

Shi, W., Ling, Q., Wu, G., Yin, W.: A proximal gradient algorithm for decentralized composite optimization. IEEE Trans. Signal Process. 63(22), 6013–6023 (2015)

Sun, Y., Daneshmand, A., Scutari, G.: Convergence rate of distributed convex and nonconvex optimization methods based on gradient tracking. Technical report, Purdue University (2018)

Sun, Y., Scutari, G.: Distributed nonconvex optimization for sparse representation. In: Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 4044–4048 (2017)

Sun, Y., Scutari, G., Palomar, D.: Distributed nonconvex multiagent optimization over time-varying networks. In: Proceedings of the Asilomar Conference on Signals, Systems, and Computers (2016). Appeared on arXiv on July 1, 2016

Tatarenko, T., Touri, B.: Non-convex distributed optimization. arXiv:1512.00895 (2016)

Thi, H.L., Dinh, T.P., Le, H., Vo, X.: DC approximation approaches for sparse optimization. Eur. J. Oper. Res. 244(1), 26–46 (2015)

Wai, H.T., Lafond, J., Scaglione, A., Moulines, E.: Decentralized Frank–Wolfe algorithm for convex and non-convex problems. arXiv:1612.01216 (2017)

Wei, E., Ozdaglar, A.: On the \(o(1/k)\) convergence of asynchronous distributed alternating direction method of multipliers. In: Proceedings of the IEEE Global Conference on Signal and Information Processing (GlobalSIP 2013), Austin, TX, USA, pp. 551–554 (2013)

Weston, J., Elisseeff, A., Schölkopf, B., Tipping, M.: Use of the zero-norm with linear models and kernel methods. J. Mach. Learn. Res. 3, 1439–1461 (2003)

Wright, S.J.: Coordinate descent algorithms. Math. Program. 151(1), 3–34 (2015)

Xi, C., Khan, U.A.: On the linear convergence of distributed optimization over directed graphs. arXiv:1510.02149 (2015)

Xi, C., Khan, U.A.: ADD-OPT: accelerated distributed directed optimization. arXiv:1607.04757 (2016). Appeared on arXiv on July 16, 2016

Xiao, L., Boyd, S., Lall, S.: A scheme for robust distributed sensor fusion based on average consensus. In: Proceedings of the 4th International Symposium on Information Processing in Sensor Networks, Los Angeles, CA, pp. 63–70 (2005)

Xu, J., Zhu, S., Soh, Y.C., Xie, L.: Augmented distributed gradient methods for multi-agent optimization under uncoordinated constant stepsizes. In: Proceedings of the 54th IEEE Conference on Decision and Control (CDC 2015), Osaka, Japan, pp. 2055–2060 (2015)

Zhang, S., Xin, J.: Minimization of transformed \({L}_1\) penalty: theory, difference of convex function algorithm, and robust application in compressed sensing. arXiv:1411.5735 (2014)

Zhu, M., Martínez, S.: An approximate dual subgradient algorithm for multi-agent non-convex optimization. IEEE Trans. Autom. Control 58(6), 1534–1539 (2013)


Additional information


Part of this work was presented at the 2016 Asilomar Conference on Signals, Systems, and Computers [42] and at the 2017 IEEE ICASSP Conference [41].

This work was supported by the USA National Science Foundation, Grants CIF 1564044 and CIF 1719205; the Office of Naval Research, Grant N00014-16-1-2244; and the Army Research Office, Grant W911NF1810238.

Appendices

Appendix

Proof of Lemma 3

We begin by introducing the following intermediate result.

Lemma 15

In the setting of Lemma 3, the following hold:

(i) The entries of \({\mathbf {A}}^{n:0}{\mathbf {1}}\), \(n\in {\mathbb {N}}_+\), can be bounded as

$$\inf _{t\in {\mathbb {N}}_+} \left( \min _{1\le i\le I} \left( {\mathbf {A}}^{t:0}{\mathbf {1}}\right) _{i}\right) \ge \phi _{lb}, \tag{108}$$

$$\sup _{t\in {\mathbb {N}}_+} \left( \max _{1\le i\le I} \left( {\mathbf {A}}^{t:0}{\mathbf {1}}\right) _{i}\right) \le \phi _{ub}, \tag{109}$$

where \(\phi _{lb}\) and \(\phi _{ub}\) are defined in (8);

(ii) For any given \(k\in {\mathbb {N}}_+\), there exists a stochastic vector \(\varvec{\xi }^{k}\triangleq [\xi _1^{k},\ldots ,\xi _I^{k}]^\top \) (i.e., \(\varvec{\xi }^{k}> {\mathbf {0}}\) and \({\mathbf {1}}^\top \varvec{\xi }^{k}=1\)) such that, for all \(n\in {\mathbb {N}}_+\) with \(n\ge k\),

$$\left| {\mathbf {W}}^{n:k}_{ij} - \xi _{j}^{k}\right| \le c_{0}\,\rho ^{\left\lfloor \frac{n-k+1}{(I-1)B}\right\rfloor },\qquad \forall \, i,j\in [I], \tag{110}$$

where \(c_{0}\) and \(\rho \) are defined in (10).

The proof of Lemma 15 follows steps similar to those in [31, Lemmas 2 and 4] and is therefore omitted; note, however, that the results in [31] are established under a stronger condition on \({\mathcal {G}}^n\) than Assumption B.
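For intuition, both parts of Lemma 15 can be observed numerically. The sketch below assumes ingredients that are not restated in this section: column-stochastic \({\mathbf {A}}^t\), \(\varvec{\phi }^{t+1}={\mathbf {A}}^t\varvec{\phi }^t\) with \(\varvec{\phi }^0={\mathbf {1}}\) (so that \(\varvec{\phi }^{t+1}={\mathbf {A}}^{t:0}{\mathbf {1}}\)), and \({\mathbf {W}}^t=\mathrm{diag}(\varvec{\phi }^{t+1})^{-1}{\mathbf {A}}^t\,\mathrm{diag}(\varvec{\phi }^t)\), which is row-stochastic by construction. Two hypothetical digraphs on \(I=4\) nodes are used alternately; neither is strongly connected, but their union is, in the spirit of the connectivity requirement of Assumption B with \(B=2\). The limit row \(\varvec{\xi }^k\) is approximated by a row of a long backward product.

```python
# Numerical illustration of Lemma 15 under assumed (hypothetical) ingredients:
# alternating digraphs, equal-split column-stochastic A^t, and
# W^t = diag(phi^{t+1})^{-1} A^t diag(phi^t) with phi^{t+1} = A^t phi^t, phi^0 = 1.

def column_stochastic(out_neighbors):
    """A[i][j] = 1/(outdeg(j) + 1) if j -> i or i == j, else 0."""
    n = len(out_neighbors)
    A = [[0.0] * n for _ in range(n)]
    for j, outs in enumerate(out_neighbors):
        targets = [j] + list(outs)
        for i in targets:
            A[i][j] = 1.0 / len(targets)
    return A

def matmul(X, Y):
    return [[sum(X[i][r] * Y[r][j] for r in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]

def push_sum_sequence(graphs, T):
    """Return [phi^0, ..., phi^T] and [W^0, ..., W^{T-1}], cycling through graphs."""
    n = len(graphs[0])
    phis, Ws = [[1.0] * n], []
    for t in range(T):
        A = column_stochastic(graphs[t % len(graphs)])
        phi = phis[-1]
        phi_next = [sum(A[i][j] * phi[j] for j in range(n)) for i in range(n)]
        Ws.append([[A[i][j] * phi[j] / phi_next[i] for j in range(n)] for i in range(n)])
        phis.append(phi_next)
    return phis, Ws

def backward_product(Ws, n_end, k):
    """W^{n_end:k} = W^{n_end} W^{n_end - 1} ... W^k."""
    P = Ws[k]
    for t in range(k + 1, n_end + 1):
        P = matmul(Ws[t], P)
    return P

def max_deviation(P, xi):
    """Largest entry of |P - 1 xi^T|, i.e. max_{i,j} |P_ij - xi_j|."""
    return max(abs(P[i][j] - xi[j]) for i in range(len(P)) for j in range(len(xi)))

if __name__ == "__main__":
    graphs = [[[1], [], [3], []],   # even t: edges 0->1 and 2->3 only
              [[], [2], [], [0]]]   # odd t:  edges 1->2 and 3->0 only
    T, k = 200, 3
    phis, Ws = push_sum_sequence(graphs, T)
    print("min/max entry of A^{t:0} 1:", min(map(min, phis)), max(map(max, phis)))
    xi = backward_product(Ws, T - 1, k)[0]  # proxy for the limit row xi^k
    for n in (k + 5, k + 20, k + 50):
        print(n, max_deviation(backward_product(Ws, n, k), xi))
```

The printed deviations should shrink geometrically in \(n-k\), as (110) predicts, while the entries of \({\mathbf {A}}^{t:0}{\mathbf {1}}\) stay strictly positive and below \(I\), consistent with (108)–(109).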

We now prove Lemma 3. Let \({\mathbf {z}}\in {\mathbb {R}}^{I\cdot m}\) be an arbitrary vector. For each \(\ell =1,\ldots ,m\), define \({\mathbf {z}}_{\ell }\triangleq ({\mathbf {I}}_I \otimes {\mathbf {e}}_{\ell }^\top )\,{\mathbf {z}}\), where \({\mathbf {e}}_{\ell }\in {\mathbb {R}}^m\) is the \(\ell \)-th canonical vector; we denote by \({z}_{\ell ,j}\) the j-th component of \({\mathbf {z}}_{\ell }\), with \(j\in [I]\). We have

We next bound the above term. Given \(\varvec{\xi }^k\) as in Lemma 15 [cf. (110)], define \({\mathbf {E}}^{n:k}\triangleq {\mathbf {W}}^{n:k} - {\mathbf {1}}(\varvec{\xi }^{k})^\top \), whose (i, j)-th entry is denoted by \({E}^{n:k}_{ij}\). We have

Combining (111) and (112), we obtain

Moreover, the matrix difference above can also be bounded uniformly as follows:

where (a) follows from (25) and \( \Vert \widehat{{\mathbf {W}}}^{n:k}\Vert \le \sqrt{I}\). This completes the proof. \(\square \)
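The bound \(\Vert \widehat{{\mathbf {W}}}^{n:k}\Vert \le \sqrt{I}\) used in step (a) follows in one line. The derivation below is a sketch that assumes \(\Vert \cdot \Vert \) is the spectral norm, \(\widehat{{\mathbf {W}}}^{n:k}={\mathbf {W}}^{n:k}\otimes {\mathbf {I}}_m\), and \({\mathbf {W}}^{n:k}\) is nonnegative and row-stochastic; none of these is restated in this section. It uses that the singular values of \({\mathbf {W}}\otimes {\mathbf {I}}_m\) are those of \({\mathbf {W}}\), that \(\Vert {\mathbf {W}}\Vert _2^2\le \Vert {\mathbf {W}}\Vert _1\Vert {\mathbf {W}}\Vert _\infty \), that \(\Vert {\mathbf {W}}\Vert _\infty \) (the maximum row sum) equals 1, and that every entry is at most 1, so every column sum is at most \(I\).

```latex
\|\widehat{\mathbf W}^{n:k}\|
  = \|\mathbf W^{n:k}\otimes\mathbf I_m\|
  = \|\mathbf W^{n:k}\|
  \le \sqrt{\|\mathbf W^{n:k}\|_1\,\|\mathbf W^{n:k}\|_\infty}
  = \sqrt{\max_{j\in[I]}\sum_{i=1}^{I} W^{n:k}_{ij}}
  \le \sqrt{I}.
```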

Proof of Lemma 11

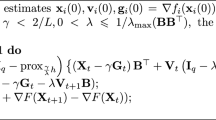

Recall the SONATA update written in vector–matrix form in (43)–(45). Note that the x-update therein is a special case of the perturbed condensed push-sum algorithm (16), with perturbation \(\varvec{\delta }^{n+1} = \alpha ^n \widehat{{\mathbf {W}}}^n {\mathbf {x}}^n\). We can then apply Proposition 1 and readily obtain (71).
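A property that makes perturbed push-sum schemes convenient to analyze is that the \(\varvec{\phi }\)-weighted sum of the iterates changes only through the perturbation. The check below assumes that (16) has the form \({\mathbf {x}}^{n+1}={\mathbf {W}}^n{\mathbf {x}}^n+\varvec{\delta }^{n+1}\) with \({\mathbf {W}}^n=\mathrm{diag}(\varvec{\phi }^{n+1})^{-1}{\mathbf {A}}^n\mathrm{diag}(\varvec{\phi }^n)\), \(\varvec{\phi }^{n+1}={\mathbf {A}}^n\varvec{\phi }^n\), and column-stochastic \({\mathbf {A}}^n\); it uses scalar states and hypothetical random weights. Under these assumptions, \(\sum _i\phi _i^{n+1}x_i^{n+1}=\sum _i\phi _i^{n}x_i^{n}+\sum _i\phi _i^{n+1}\delta _i^{n+1}\), which the code verifies up to round-off.

```python
import random

def random_column_stochastic(n, rng):
    """Hypothetical test weights: a positive n x n matrix whose columns sum to 1."""
    cols = []
    for _ in range(n):
        w = [rng.random() + 0.1 for _ in range(n)]
        s = sum(w)
        cols.append([v / s for v in w])
    return [[cols[j][i] for j in range(n)] for i in range(n)]

def perturbed_push_sum_step(A, phi, x, delta):
    """Assumed form: phi+ = A phi, x+ = W x + delta, W = diag(phi+)^{-1} A diag(phi)."""
    n = len(phi)
    phi_next = [sum(A[i][j] * phi[j] for j in range(n)) for i in range(n)]
    x_next = [sum(A[i][j] * phi[j] * x[j] for j in range(n)) / phi_next[i] + delta[i]
              for i in range(n)]
    return phi_next, x_next

def weighted_sum(phi, x):
    return sum(p * v for p, v in zip(phi, x))

def max_invariant_violation(n=5, steps=20, seed=0):
    """Largest |sum phi+ x+ - (sum phi x + sum phi+ delta)| over a random run."""
    rng = random.Random(seed)
    phi = [1.0] * n
    x = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    worst = 0.0
    for _ in range(steps):
        A = random_column_stochastic(n, rng)
        delta = [rng.uniform(-0.1, 0.1) for _ in range(n)]
        before = weighted_sum(phi, x)
        phi, x = perturbed_push_sum_step(A, phi, x, delta)  # phi is now phi^{n+1}
        worst = max(worst, abs(weighted_sum(phi, x) - before - weighted_sum(phi, delta)))
    return worst

if __name__ == "__main__":
    print(max_invariant_violation())  # prints a value at floating-point round-off level
```

In the scalar case, a perturbation of the form \((g_i^{n+1}-g_i^n)/\phi _i^{n+1}\), as in the y-update, makes the weighted sum change by exactly \(\sum _i(g_i^{n+1}-g_i^n)\); this is the mechanism that lets the y-variables track the sum of the local gradients.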

To prove (72), we follow a similar approach. Since the y-update in (45) is a special case of (16) with perturbation \(\varvec{\delta }^{n+1} = (\widehat{{\mathbf {D}}}_{\varvec{\phi }^{n+1}})^{-1}\left( {\mathbf {g}}^{n+1}-{\mathbf {g}}^{n}\right) \), we can write

This completes the proof. \(\square \)
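To see how the two perturbed push-sum updates fit together, the following toy loop runs a SONATA-style iteration on the scalar problem \(\min _x\sum _{i=1}^I (x-b_i)^2/2\) over a fixed digraph. It is a sketch under simplifying assumptions that are not taken from this section: no constraint set and no difference-of-convex term; a linearized local surrogate with proximal weight \(\tau \), in which the other agents' gradients are replaced by \(I y_i-\nabla f_i(x_i)\), so that the surrogate minimizer is \(x_i-(I/\tau )\,y_i\); a fixed step \(\alpha \); and hypothetical names and data. The x-update is push-sum averaging of \(x_i+\alpha (\tilde{x}_i-x_i)\), and the y-update adds the perturbation \((g_i^{n+1}-g_i^n)/\phi _i^{n+1}\).

```python
# Toy SONATA-style loop (hypothetical simplification, not the paper's code):
# minimize sum_i f_i(x) with f_i(x) = (x - b_i)^2 / 2 over a fixed digraph.

def column_stochastic(out_neighbors):
    """A[i][j] = 1/(outdeg(j) + 1) if j -> i or i == j, else 0."""
    n = len(out_neighbors)
    A = [[0.0] * n for _ in range(n)]
    for j, outs in enumerate(out_neighbors):
        targets = [j] + list(outs)
        for i in targets:
            A[i][j] = 1.0 / len(targets)
    return A

def sonata_like(b, out_neighbors, alpha=0.05, tau=16.0, iters=4000):
    n_agents = len(b)  # I in the text
    A = column_stochastic(out_neighbors)

    def grad(i, xi):
        return xi - b[i]  # gradient of f_i

    x = [0.0] * n_agents
    phi = [1.0] * n_agents
    g = [grad(i, x[i]) for i in range(n_agents)]
    y = g[:]  # y_i^0 = grad f_i(x_i^0)
    for _ in range(iters):
        # Local step: minimizer of the assumed linearized surrogate.
        x_tilde = [x[i] - (n_agents / tau) * y[i] for i in range(n_agents)]
        v = [x[i] + alpha * (x_tilde[i] - x[i]) for i in range(n_agents)]
        # Push-sum consensus on v and update of the weights phi.
        phi_next = [sum(A[i][j] * phi[j] for j in range(n_agents)) for i in range(n_agents)]
        x_next = [sum(A[i][j] * phi[j] * v[j] for j in range(n_agents)) / phi_next[i]
                  for i in range(n_agents)]
        # Perturbed push-sum on y with perturbation (g^{n+1} - g^n) / phi^{n+1}.
        g_next = [grad(i, x_next[i]) for i in range(n_agents)]
        y = [(sum(A[i][j] * phi[j] * y[j] for j in range(n_agents)) + g_next[i] - g[i])
             / phi_next[i] for i in range(n_agents)]
        x, g, phi = x_next, g_next, phi_next
    return x

if __name__ == "__main__":
    b = [1.0, 2.0, 3.0, 10.0]
    print(sonata_like(b, [[1, 2], [2], [3], [0]]))  # each entry is close to mean(b) = 4.0
```

With these parameters every agent's iterate approaches the minimizer \(\mathrm{mean}(b)=4\). The small step is a conservative choice for this toy example rather than a tuned value.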


Cite this article

Scutari, G., Sun, Y. Distributed nonconvex constrained optimization over time-varying digraphs. Math. Program. 176, 497–544 (2019). https://doi.org/10.1007/s10107-018-01357-w
