Abstract

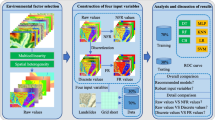

In this study, the cluster analysis (CA), probabilistic methods, and artificial neural networks (ANNs) are used to predict landslide susceptibility. The Geographic Information System (GIS) is used as the basic tool for spatial data management. CA is applied to select non-landslide dataset for later analysis. A probabilistic method is suggested to calculate the rating of the relative importance of each class belonging to each conditional factor. ANN is applied to calculate the weight (i.e., relative importance) of each factor. Using the ratings and the weights, it is proposed to calculate the landslide susceptibility index (LSI) for each pixel in the study area. The obtained LSI values can then be used to construct the landslide susceptibility map. The aforementioned proposed method was applied to the Longfeng town, a landslide-prone area in Hubei province, China. The following eight conditional factors were selected: lithology, slope angle, distance to stream/reservoir, distance to road, stream power index (SPI), altitude, curvature, and slope aspect. To assess the conditional factor effects, the weights were calculated for four cases, using 8 factors, 6 factors, 5 factors, and 4 factors, respectively. Then, the results of the landslide susceptibility analysis for these four cases, with and without weighting, were obtained. To validate the process, the receiver operating characteristics (ROC) curve and the area under the curve (AUC) were applied. In addition, the results were compared with the existing landslide locations. The validation results showed good agreement between the existing landslides and the computed susceptibility maps. The results with weighting were found to be better than that without weighting. The best accuracy was obtained for the case with 5 conditional factors with weighting.

Similar content being viewed by others

References

Akgun A (2012) A comparison of landslide susceptibility maps produced by logistic regression, multi-criteria decision, and likelihood ratio methods: a case study at İzmir, Turkey. Landslides 9:93–106. https://doi.org/10.1007/s10346-011-0283-7

Althuwaynee OF, Pradhan B, Ahmad N (2014) Landslide susceptibility mapping using decision-tree based CHi-squared automatic interaction detection (CHAID) and logistic regression (LR) integration. IOP Conf Ser Earth Environ Sci 20:012032. https://doi.org/10.1088/1755-1315/20/1/012032

Anbalagan R (1992) Landslide hazard evaluation and zonation mapping in mountainous terrain. Eng Geol 32:269–277. https://doi.org/10.1016/0013-7952(92)90053-2

Anbalagan R, Singh B (1996) Landslide hazard and risk assessment mapping of mountainous terrains - a case study from Kumaun Himalaya, India. Eng Geol 43:237–246. https://doi.org/10.1016/S0013-7952(96)00033-6

Ayalew L, Yamagishi H, Marui H, Kanno T (2005) Landslides in Sado Island of Japan: part II. GIS-based susceptibility mapping with comparisons of results from two methods and verifications. Eng Geol 81:432–445. https://doi.org/10.1016/j.enggeo.2005.08.004

Babic V, Vancetovic J, Prodanovic S, Andjelkovic V, Babic M, Kravic N (2012) The identification of drought tolerant maize accessions by two-step cluster analysis. Rom Agric Res 53–61

Baum EB, Haussler D (1989) What size net gives valid generalization? Neural Comput 1:151–160. https://doi.org/10.1162/neco.1989.1.1.151

Beguería S (2006) Validation and evaluation of predictive models in hazard assessment and risk management. Nat Hazards 37:315–329. https://doi.org/10.1007/s11069-005-5182-6

Catani F, Casagli N, Ermini L, Righini G, Menduni G (2005) Landslide hazard and risk mapping at catchment scale in the Arno River basin. Landslides 2:329–342. https://doi.org/10.1007/s10346-005-0021-0

Chiu T, Fang D, Chen J, Wang Y, Jeris C (2001) A robust and scalable clustering algorithm for mixed type attributes in large database environment. Proc. seventh ACM SIGKDD Int. Conf. Knowl. Discov. data Min. pp 263–268. http://doi.acm.org/10.1145/502512.502549

Chung C-JF, Fabbri AG (2003) Validation of spatial prediction models for landslide hazard mapping. Nat Hazards 30:451–472. https://doi.org/10.1023/B:NHAZ.0000007172.62651.2b

Cybenko G (1989) Correction: Approximation by Superpositions of a Sigmoidal Function. Math Control Signals Syst 2:303–314. https://doi.org/10.1007/BF02134016

Ding M, Hu K (2014) Susceptibility mapping of landslides in Beichuan County using cluster and MLC methods. Nat Hazards 70:755–766. https://doi.org/10.1007/s11069-013-0854-0

Ercanoglu M, Gokceoglu C (2004) Landslide susceptibility zoning north of Yenice ( NW Turkey ) by multivariate statistical techniques 32, 1–23. doi:https://doi.org/10.1023/B:NHAZ.0000026786.85589.4a

Fawcett T (2006) An introduction to ROC analysis. Pattern Recogn 27:861–874. https://doi.org/10.1016/j.patrec.2005.10.010

García-Rodríguez MJ, Malpica JA, Benito B et al (2007) Susceptibility assessment of earthquake-triggered landslides in El Salvador using logistic regression. Geomorphology 95(3):172–191. https://doi.org/10.1016/j.geomorph.2007.06.001

Gemitzi A, Falalakis G, Eskioglou P, Petalas C (2011) Evaluating landslide susceptibilty using enviromental factors, fuzzy membership functions and GIS. Glob NEST J 13:28–40

Guzzetti F, Carrara A, Cardinali M, Reichenbach P (1999) Landslide hazard evaluation: a review of current techniques and their application in a multi-scale study, Central Italy. Geomorphology 31:181–216. https://doi.org/10.1016/S0169-555X(99)00078-1

Hecht-Nielsen R (1987) Kolmogorov’s mapping neural network existence theorem, in: Proceedings of the IEEE First International Conference on Neural Networks , San Diego, CA, USA pp 11–13

Jakob M (2000) The impacts of logging on landslide activity at Clayoquot Sound, British Columbia. Catena 38:279–300. https://doi.org/10.1016/S0341-8162(99)00078-8

Kanungo DP, Arora MK, Sarkar S, Gupta RP (2006) A comparative study of conventional, ANN black box, fuzzy and combined neural and fuzzy weighting procedures for landslide susceptibility zonation in Darjeeling Himalayas. Eng Geol 85:347–366. https://doi.org/10.1016/j.enggeo.2006.03.004

Kaufman L, Rousseeuw PJ (1990) Finding groups in data: an introduction to cluster analysis. Wiley, New York. https://doi.org/10.1002/9780470316801

Kulatilake PHSW, Qiong W, Hudaverdi T, Kuzu C (2010) Mean particle size prediction in rock blast fragmentation using neural networks. Eng Geol 114:298–311. https://doi.org/10.1016/j.enggeo.2010.05.008

Lai T, Dragićević S, Schmidt M (2013) Integration of multicriteria evaluation and cellular automata methods for landslide simulation modelling. Geomatics, Nat Hazards Risk 4:355–375. https://doi.org/10.1080/19475705.2012.746243

Lawrence J, Fredrickson J (1998) Brainmaker user’s guide and reference manual, California scientific software. Nevada City, CA

Lee S, Min K (2001) Statistical analyses of landslide susceptibility at Yongin, Korea. Environ Geol 40:1095–1113. https://doi.org/10.1007/s002540100310

Lee S, Pradhan B (2007) Landslide hazard mapping at Selangor, Malaysia using frequency ratio and logistic regression models, in: Landslides, pp 33–41. https://doi.org/10.1007/s10346-006-0047-y

Lee S, Talib JA (2005) Probabilistic landslide susceptibility and factor effect analysis. Environ Geol 47:982–990. https://doi.org/10.1007/s00254-005-1228-z

Lee S, Ryu J-H, Won J-S, Park H-J (2004) Determination and application of the weights for landslide susceptibility mapping using an artificial neural network. Eng Geol 71:289–302. https://doi.org/10.1016/S0013-7952(03)00142-X

Melchiorre C, Matteucci M, Azzoni A, Zanchi A (2008) Artificial neural networks and cluster analysis in landslide susceptibility zonation. Geomorphology 94:379–400. https://doi.org/10.1016/j.geomorph.2006.10.035

Melchiorre C, Castellanos Abella EA, van Westen CJ, Matteucci M (2011) Evaluation of prediction capability, robustness, and sensitivity in non-linear landslide susceptibility models, Guant??namo, Cuba. Comput Geosci 37:410–425. https://doi.org/10.1016/j.cageo.2010.10.004

Michailidou C, Maheras P, Arseni-Papadimititriou A, Kolyva-Machera F, Anagnostopoulou C (2009) A study of weather types at Athens and Thessaloniki and their relationship to circulation types for the cold-wet period, part I: two-step cluster analysis. Theor Appl Climatol 97:163–177. https://doi.org/10.1007/s00704-008-0057-x

Moore ID, Grayson RB (1991) Terrain-based catchment partitionning and runoff prediction usingvector elevation data. Water Resour Res 27:1177–1191. https://doi.org/10.1029/91WR00090

Motamedi M, Liang RY (2014) Probabilistic landslide hazard assessment using Copula modeling technique. Landslides 11:565–573. https://doi.org/10.1007/s10346-013-0399-z

Myronidis D, Papageorgiou C, Theophanous S (2016) Landslide susceptibility mapping based on landslide history and analytic hierarchy process (AHP). Nat Hazards 81:245–263. https://doi.org/10.1007/s11069-015-2075-1

Nefeslioglu HA, Gokceoglu C, Sonmez H (2008) An assessment on the use of logistic regression and artificial neural networks with different sampling strategies for the preparation of landslide susceptibility maps. Eng Geol 97:171–191. https://doi.org/10.1016/j.enggeo.2008.01.004

Pachauri AK, Pant M (1992) Landslide hazard mapping based on geological attributes. Eng Geol 32:81–100. https://doi.org/10.1016/0013-7952(92)90020-Y

Peng L, Niu R, Huang B, Wu X, Zhao Y, Ye R (2014) Landslide susceptibility mapping based on rough set theory and support vector machines: a case of the Three Gorges area, China. Geomorphology 204:287–301

Pérez-Peña JV, Azañón JM, Azor A, Delgado J, González-Lodeiro F (2009) Spatial analysis of stream power using GIS: SLk anomaly maps. Earth Surf Process Landf 34:16–25. https://doi.org/10.1002/esp.1684

Popescu M (2001) A suggested method for reporting landslide remedial measures. Bull Eng Geol Environ 60:69–74. https://doi.org/10.1007/s100640000084

Poudyal CP, Chang C, Oh HJ, Lee S (2010) Landslide susceptibility maps comparing frequency ratio and artificial neural networks: a case study from the Nepal Himalaya. Environ Earth Sci 61:1049–1064. https://doi.org/10.1007/s12665-009-0426-5

Pourghasemi HR, Pradhan B, Gokceoglu C (2012) Application of fuzzy logic and analytical hierarchy process (AHP) to landslide susceptibility mapping at Haraz watershed, Iran. Nat Hazards 63:965–996. https://doi.org/10.1007/s11069-012-0217-2

Pradhan B, Lee S (2010) Landslide susceptibility assessment and factor effect analysis: backpropagation artificial neural networks and their comparison with frequency ratio and bivariate logistic regression modelling. Environ Model Softw 25:747–759. https://doi.org/10.1016/j.envsoft.2009.10.016

Rumelhart DE, Widrow B, Lehr MA (1994a) The basic ideas in neural networks. Commun ACM 37:87–92

Rumelhart DE, Widrow B, Lehr MA (1994b) Neural networks: applications in industry, business and science. Commun ACM 37:93–105

Shahabi H, Hashim M (2015) Landslide susceptibility mapping using GIS-based statistical models and remote sensing data in tropical environment. Sci Rep 5:9899. https://doi.org/10.1038/srep09899

Van Westen CJ (2000) The modeling of landslide hazards using GIS. Surv Geophys 21:241–255. https://doi.org/10.1023/A:1006794127521

Wang X, Zhang L, Wang S, Lari S (2014) Regional landslide susceptibility zoning with considering the aggregation of landslide points and the weights of factors. Landslides 11:399–409. https://doi.org/10.1007/s10346-013-0392-6

Widrow B (1987) Adaline and madaline - 1963, plenary speech, in: IEEE 1st Int. Conf. on Neural Networks. San Diego, CA, p. vol 1, pp 143–158

Wu XL, Niu RQ, Ren F, Peng L (2013) Landslide susceptibility mapping using rough sets and back-propagation neural networks in the Three Gorges, China. Environ Earth Sci 70:1307–1318. https://doi.org/10.1007/s12665-013-2217-2

Wu X, Benjamin Zhan F, Zhang K, Deng Q (2016) Application of a two-step cluster analysis and the Apriori algorithm to classify the deformation states of two typical colluvial landslides in the Three Gorges, China. Environ Earth Sci 75:1–16. https://doi.org/10.1007/s12665-015-5022-2

Xu K, Guo Q, Li Z, Xiao J, Qin Y, Chen D (2015) Landslide susceptibility evaluation based on BPNN and GIS : a case of Guojiaba in the Three Gorges Reservoir Area 8816. https://doi.org/10.1080/13658816.2014.992436

Youssef AM, Pradhan B, Jebur MN, El-Harbi HM (2015) Landslide susceptibility mapping using ensemble bivariate and multivariate statistical models in Fayfa area, Saudi Arabia. Environ Earth Sci 73:3745–3761. https://doi.org/10.1007/s12665-014-3661-3

Zhou W (1999) Verification of the nonparametric characteristics of backpropagation neural networks for image classification. IEEE Trans Geosci Remote Sens 37:771–779. https://doi.org/10.1109/36.752193

Funding

The first author of the paper is grateful to the Chinese Scholarship Council (CSC) for providing a scholarship (Grant No. 201506410043) to conduct a part of the research described in this paper as a Visiting Research Student at the University of Arizona, USA. This work was supported by the National Natural Science Foundation of China (Grant Nos. 41807264 and 41972289), the Fundamental Research Funds for the Central Universities, China University of Geosciences (Wuhan) (Grant No. CUG170686), and the Science and Technology Research Project of the Education Department of Hubei Province (Grant No. B2019452).

Author information

Authors and Affiliations

Corresponding author

Appendices

Appendix 1. A brief introduction to two-step cluster analysis

The cluster analysis is a widely used unsupervised learning technique for identifying different patterns in datasets. The goal of the cluster analysis is to allocate objects into groups whose members are similar in some way (Kaufman and Rousseeuw 1990). The k-means, hierarchical, non-hierarchical, expectation-maximization and two-step are some of the techniques used in cluster analysis. The two-step cluster analysis (TSCA) is an algorithm primarily designed to analyze large datasets (Chiu et al. 2001). It has the following desirable features that differentiate it from traditional cluster techniques: (1.) TSCA is able to analyze large datasets efficiently; (2.) TSCA is capable to automatically select the number of clusters; (3.) It has the ability to deal with both quantitative and qualitative variables. Because of these features, TSCA has been applied to different fields, such as biomedical studies (Babic et al. 2012), classification of synoptic systems (Michailidou et al. 2009), landslides deformation states identification (Wu et al. 2016), and so on to study pattern recognition.

As the name implies, the process has two steps termed “pre-clustering” and “clustering.”. In step one, the data are pre-clustered into many small sub-clusters based on a sequential cluster approach. In step two, the sub-clusters are used as inputs and clustered into the final number of clusters. To deal with both continuous and categorical variables, the log-likelihood function is used to derive the distance measurement in both steps. In calculating log-likelihood function, it is assumed that continuous variables are normally distributed, categorical variables are multinomial, and all variables are independent of each other.

Appendix 2. Determination of the relative weights of the conditional factors using BPNN

Assume the input vector, hidden vector, and output vector of the BPNN as X = (x1, x2, ..., xi, ..., xI), Y = (y1, y2, ...yj, ..., yJ), and Z = (z1, z2, ..., zk, ..., zK), respectively. The expected output vector is labeled as O = (o1, o2, ..., ok, ..., oK). The expressions for the jth node in the hidden layer, yj and the kth node in the output layer, zk are respectively given below in Eqs. (9) and (10).

In Eq. (9), wij and aj are the weights and thresholds respectively between the input layer and the hidden layer. Similarly, in Eq. (10), wjk and bk are the weights and thresholds between the hidden layer and the output layer. The main purpose of the BPNN is to adjust the weights and thresholds to minimize the mean square error (MSE) between the expected output values and network output values, as shown in Eq. (11).

The importance of node i with respect to node k is defined as STik and is shown in Eq. (12). The overall importance of node i with respect to the output layer can be calculated as STi and is shown in Eq. (13).

In Eqs. (12) and (13), sij is the normalized importance of node i in the input layer with respect to node j in the hidden layer, tjk is the normalized importance of node j in the hidden layer with respect to node k in the output layer, and tj is the overall importance of node j, and they can be calculated by Eqs. (14), (15), and (16) as given below:

Appendix 3. Rating calculation procedure

After data digitization and non-landslide set determination, the number of cells where landslides occurred and not occurred can be obtained along with the corresponding values for each factor. Then the rating of each class of every factor can be determined as the ratio of landslide occurrence (aim) divided by the ratio of landslide non-occurrence (bim), as expressed by Eq. (17).

In Eq. (17), Rim is the rating of mth class of ith factor; lim is the number of landslide cells falls in mth class of ith factor; nim is the number of non-landslide cells falls in mth class of ith factor; L and N are respectively the total number of cells of landslide occurrence and non-landslide occurrence.

Appendix 4. Model validation

In the present study, for landslide susceptibility index model validation, firstly, a series of testing data consisting of LSI values that have been normalized to the range 0 to 1 are considered. Furthermore, these data should be categorized either under the existing landslide pixels, i.e., actual positive state, or under the non-landslide pixels, i.e., actual negative state. Secondly, a threshold level must be set to estimate the LSI values; above the level is called positive, i.e., landslide will occur, and below the level is called negative, i.e., landslide will not occur. Obviously, the setting threshold can affect the sensitivity and specificity (SPC). The sensitivity (TPR) is also called the proportion of true positive state. It can be calculated by Eq. (18) as the number of true positive pixels (TP) divided by the total number of actual positive pixels (P). The true positive state represents those pixels that are in the actual landslide area which get the positive test result, i.e. the red area in Fig. 8. The specificity (SPC) is the proportion of the actual non-landslide pixels that were identified correctly as negative, i.e. the blue area in Fig. 8. It can be calculated by Eq. (19). In Eq. (19), the false positive rate (FPR) can be calculated by Eq. (20) as the number of false positive pixels (FP) divided by the total number of actual negative pixels (N). The false positive state represents those pixels that are in the actual non-landslide area which get the positive test result, i.e. the marked area with diagonals to the right of 0.5 in Fig. 8. Therefore, when you set a series of thresholds to increase the sensitivity, it will lead to decrease of specificity, and vice versa. Thirdly, by varying the threshold values, a series of points having (TPR, FPR) values can be obtained. By connecting those points, the ROC curve and the area under the ROC curve can be obtained. The SPSS software was used to get the ROC curves and the AUC values.

Rights and permissions

About this article

Cite this article

Tang, RX., Kulatilake, P.H.S.W., Yan, EC. et al. Evaluating landslide susceptibility based on cluster analysis, probabilistic methods, and artificial neural networks. Bull Eng Geol Environ 79, 2235–2254 (2020). https://doi.org/10.1007/s10064-019-01684-y

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10064-019-01684-y