Abstract

We present an extension of FDR, the model checker for the process algebra CSP, that exploits symmetry to reduce the size of the state space searched. We define what it means for a process to be symmetric with respect to a group of permutations on the transition labels. We factor the state space of the search by symmetry equivalence, mapping each state to a representative of its equivalence class, thereby considering all symmetric states together. We prove a powerful syntactic result, identifying conditions under which a process will be symmetric in a particular type. We show how to implement such a search using the powerful technique of supercombinators used in the implementation of FDR: we identify conditions on a supercombinator for it to be symmetric and explain how to apply a permutation to a state. Finally, we present a novel efficient technique for calculating representatives of equivalence classes, which normally finds unique representatives; our experiments suggest that this technique typically works faster than other techniques and in particular scales better.


1 Introduction

FDR [14] is a powerful model checker for the process algebra CSP [35]. FDR takes a list of CSP processes, written in machine-readable CSP (henceforth \(\hbox {CSP}_M\)); it can check whether one process refines another according to the CSP denotational models (e.g. the traces, failures and failures–divergences models), or it can check other properties, including deadlock freedom, livelock freedom and determinism. FDR has been widely used both within industry and in academia for verifying systems [13, 24, 33]. It is also used as a verification back end for several other tools including: Casper [27] which verifies security protocols; SVA [36] which can verify simple shared-variable programs; and several industrial tools (e.g. ModelWorks and ASD). The last few years have seen significant advances in FDR, leading to FDR3 [14] and FDR4, exploiting multi-core algorithms and using more efficient internal representations of processes, and also supporting large compute clusters; these advances have made a step change in the class of systems that can be analysed.

Many systems that one might want to model check contain symmetries. In this paper, we present an extension of FDR4 that exploits these symmetries: this gives considerable speed-ups in model checking (see Table 1); more importantly, we can now check much larger systems, including systems that, without symmetry reduction, would have well over \(10^{26}\) states and so would be too large to check (on the same architecture) by a factor of more than \(10^{16}\). Symmetry reduction has been applied previously in other model checkers: we give a review in Sect. 1.3.

Our main interest in symmetry reduction arises from our analysis of concurrent datatypes, particularly those based on linked lists [28]. Here, each node in the linked list is modelled by a CSP process, say of the form Node(me, datum, next), where me is the node’s identity, datum is some piece of data, and next is the identity of the next node in the list or a special value Null. Threads that operate on these nodes are also modelled as CSP processes. One can then analyse a system with some number n of nodes and some number t of threads. Clearly, a list of a particular length l can be formed in \(n!/(n-l)!\) different ways by using different nodes, but all such states that correspond to the same sequence of datum values are symmetric. Further, different states can be symmetric in the type of the datums: for example, a list holding the sequence \(\langle A,B,A\rangle \) is symmetric to one holding \(\langle B,C,B\rangle \), say. Finally, the system is symmetric in the type of the thread identities.
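The counting argument above can be checked on a small instance. The following Python sketch is only illustrative: the node names and the helper functions `list_shapes` and `shape` are hypothetical and are not part of the CSP model. It enumerates the ways of forming a list from distinct nodes, and shows that two datum sequences are symmetric exactly when they agree after renaming values by order of first occurrence (which assumes full symmetry in the type of data).

```python
from itertools import permutations
from math import perm

def list_shapes(nodes, length):
    """All ways to form a linked list of `length` distinct nodes (order matters)."""
    return list(permutations(nodes, length))

def shape(data):
    """Rename datum values by order of first occurrence: 'ABA' -> (0, 1, 0)."""
    seen = {}
    return tuple(seen.setdefault(d, len(seen)) for d in data)

nodes = ["N0", "N1", "N2", "N3", "N4", "N5"]  # hypothetical node identities
# n!/(n-l)! with n = 6 and l = 3
assert len(list_shapes(nodes, 3)) == perm(6, 3) == 120
# <A,B,A> and <B,C,B> are related by a permutation of the data values ...
assert shape("ABA") == shape("BCB")
# ... but <A,B,A> and <A,B,C> are not.
assert shape("ABA") != shape("ABC")
```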

Our approach is also applicable to network communication protocols in a dynamic network, where links may be broken or re-made, or where hosts may choose to use a particular sub-network for communication. Here the system is symmetric in the identities of hosts: different states of the network may be symmetric under permutations of these identities.

In general, our technique applies to systems that are fully symmetric in a particular type; however, we sketch (in Sect. 8) an example based on a ring of processes to show that, nevertheless, it can be applied to systems with a restricted form of symmetry.

We describe relevant background and formalise our notion of symmetry in Sect. 2: we define what it means for a labelled transition system (LTS) to be symmetric with respect to a group G of permutations on the labels of transitions and for a pair of states to be related under a permutation \(\pi \in G\) (\(\pi \)-bisimilar).

By verifying the behaviour of the system from one state, we can deduce its correctness in all symmetric states. In Sect. 3 we present the idea behind the symmetry reduction. We map each state to a representative member of its (G-bisimilarity) equivalence class. FDR performs model checking by searching in the product automaton formed from the LTSs for the specification and implementation processes. We show how to perform a symmetry reduction on this product automaton and how to exploit this in a model checking algorithm. Our approach assumes only that the initial states of the specification and implementation processes are symmetric with respect to some group G of permutations (Definition 11); this contrasts with several other approaches which assume that every state of the specification is G-symmetric.
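To make the idea of searching over representatives concrete, here is a minimal Python sketch on a toy system. It is not the algorithm of this paper: the toy mutual-exclusion system, the function names, and the brute-force choice of representative (the least image under all permutations of thread indices, which costs n! work per state) are all assumptions made for illustration. The efficient technique for choosing representatives is the subject of Sect. 7.

```python
from collections import deque
from itertools import permutations

def apply(pi, state):
    """Apply a permutation of thread indices: the local state of thread i moves to pi[i]."""
    out = [None] * len(state)
    for i, local in enumerate(state):
        out[pi[i]] = local
    return tuple(out)

def representative(state):
    """Least image of `state` under all permutations (brute force)."""
    return min(apply(pi, state) for pi in permutations(range(len(state))))

def successors(state):
    """Toy system: a thread may enter 'crit' only if no thread is in 'crit'."""
    for i, local in enumerate(state):
        if local == "idle" and "crit" not in state:
            yield state[:i] + ("crit",) + state[i + 1:]
        elif local == "crit":
            yield state[:i] + ("idle",) + state[i + 1:]

def explore(init, reduce_symmetry):
    """Breadth-first search, optionally storing only representatives."""
    canon = representative if reduce_symmetry else (lambda s: s)
    seen = {canon(init)}
    queue = deque(seen)
    while queue:
        s = queue.popleft()
        for t in successors(s):
            r = canon(t)
            if r not in seen:
                seen.add(r)
                queue.append(r)
    return seen

init = ("idle",) * 4
full, reduced = explore(init, False), explore(init, True)
```

With four threads, the unreduced search visits the all-idle state and one state per thread in its critical section, whereas the reduced search keeps a single representative for the latter four.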

In Sect. 4 we consider how to identify syntactically that a system is symmetric in particular types \(T_1,\ldots ,T_N\). We make certain assumptions about the CSP script, principally that the script uses no constants of the relevant types. We show that the set of values associated with each channel or datatype constructor is invariant under permutations on each of \(T_1,\ldots ,T_N\). Further, we show that, for any \(\hbox {CSP}_M\) expression e, any environment \(\rho \) giving values to free variables, and any permutation \(\pi \), evaluating e in \(\rho \) and then applying \(\pi \) gives the same result as first applying \(\pi \) to the values in \(\rho \) and then evaluating e: we denote this \(\pi (\mathsf{eval}\rho ~e) = \mathsf{eval}(\pi \mathbin {\scriptstyle \circ }\rho )~e\). In particular, this means that in the initial environment \(\rho _1\), \(\pi (\mathsf{eval}\rho _1~e) = \mathsf{eval}\rho _1~e\) (since \(\pi \mathbin {\scriptstyle \circ }\rho _1 = \rho _1\)), and hence that the semantics of each process is symmetric under \(\pi \). \(\hbox {CSP}_M\) includes, as a sub-language, a lazy functional language, roughly equivalent to Haskell without type classes, but with the addition of sets, mappings and associative concatenation (“dot”). This sub-language is very convenient for modelling complex data, but considerably complicates reasoning about the full language.

Internally, FDR represents an LTS by a supercombinator, consisting of LTSs for component processes, with rules describing how component transitions are combined. Supercombinators are a powerful and efficient technique for modelling LTSs. They are generally applicable for modelling systems built from a number of components. In Sect. 5 we describe supercombinators and identify conditions on a supercombinator under which the corresponding LTS is symmetric.

In Sect. 6 we build on the syntactic result of Sect. 4. We show how to identify symmetries within a supercombinator. We then show how to apply a particular permutation to a state of the supercombinator.

In Sect. 7 we describe a way to calculate representative members of equivalence classes. This is believed to be a difficult problem, in general [6]. Our technique does not always give unique representatives (although it nearly always does), but it allows representatives to be calculated efficiently. Our approach works well in practice.

In Sect. 8 we report the results of experiments using our extension. The experiments show that the symmetry reduction provides considerable speed-ups in model checking; further, it allows us to analyse much larger systems than would otherwise have been possible. We also compare experimentally our technique for finding representative members of equivalence classes with two existing techniques; our results suggest that our approach is typically faster and in particular scales better.

We conclude in Sect. 9.

In the interests of exposition, we slightly simplify some aspects in the body of the paper and concentrate on the main ideas. In particular, in the body we restrict to the traces model of CSP; the stable failures and failures–divergences models (which require a different automaton for the specification) are dealt with in “Appendix B”; these require a generalisation of LTSs, which we present in “Appendix A”. Further, in the body we give a simplified version of supercombinators; full supercombinators are described in “Appendix C”, and symmetry techniques over them are described in “Appendix D”. In the interest of space, we omit some straightforward proofs; these can be found in [15].

Our main contributions, then, are:

-

The identification of general syntactic conditions under which a system will be symmetric, based on a powerful language supporting complex datatypes;

-

A general technique for finding representative members of equivalence classes for systems built from components, which seems to perform better than previous techniques;

-

The adaptation of symmetry reduction to model checking based upon the powerful technique of supercombinators, in a way that makes fewer assumptions about the specification than some previous approaches; and

-

The implementation of these techniques in an easy-to-use way, within an industrial-strength model checker, giving informative counterexamples when refinements do not hold.

1.1 A brief overview of CSP

In this section we give a brief overview of the fragment of \(\hbox {CSP}_M\) that we will use in this paper. (Our technique applies to the whole of \(\hbox {CSP}_M\), but we omit here operators that we will not use in the paper.) For more details on CSP, see [35, 39].

CSP is a process algebra for describing programs or processes that interact with their environment by communication. A CSP script contains definitions of datatypes, values, functions, channels and processes and also contains assertions to be checked by FDR; we explain these in more detail below. Figure 1 contains a full script; we explain this in detail in the next section, but use it to illustrate particular points here. (Scripts are written in ASCII; the script in Fig. 1 has been pretty printed.)

User-defined types may be introduced using the keyword datatype. For example, line 2 of the script introduces a type NodeIDType that contains seven atomic values. Such types may contain nonatomic values. For example, the declaration

creates a type that contains all values of the form Just.x where x is an Int, and the distinguished value Nothing; note how values are constructed using the dot operator “.”. Such datatype declarations may be recursive. For example, the declaration

defines a type that is isomorphic to finite lists containing Ints.

\(\hbox {CSP}_M\) contains, as a sub-language, a strongly typed functional language, similar to Haskell but without type classes, and with the addition of sets and mappings. Thus, a script may contain definitions of values and of functions. Line 3 of the script defines a value NodeID, which is a set containing six values. (diff is the set difference function.) The following function returns the length of an element of the above IntList type.

Built-in functions of the functional sub-language are described in [39].

Processes communicate via atomic events. Events often involve passing values over channels; for example, the event c.3 represents the value 3 being passed on channel c. Channels may be declared using the keyword channel. For example, line 11 contains a declaration of a channel freeNode whose events are of the form freeNode.t.n for each \(t \in \textsf {ThreadID}\) and \(n \in \textsf {NodeID}\) (so 18 events in total). Each channel has a fixed type, but channels can be declared to pass arbitrary values (excluding processes).

The simplest process is STOP, which represents a deadlocked process that cannot communicate with its environment. The process \(a \rightarrow P\) offers its environment the event a; if the event is performed, the process then acts like P. The process \(c?x \rightarrow P\) is initially willing to input an arbitrary value v on channel c, i.e. it is willing to perform any event of the form c.v; it binds the variable x to the value v received and then acts like P (which may use x). For example, line 26 defines a simple process Lock that repeatedly will perform any event of the form lock.t for \(t \in {\textsf {ThreadID}}\) and then performs the corresponding unlock.t event. The process \(c!v \rightarrow P\) outputs value v on channel c. Inputs and outputs may be mixed within the same communication, for example, the construct getDatum?t!me!datum (line 16) indicates that the process is willing to perform any event of the form getDatum.t.me.datum for \(t \in {\textsf {ThreadID}}\), but using the current values of me and datum (from the process’s parameters).

The process \(P \Box Q\) can act like either P or Q, the choice being made by the environment: the environment is offered the choice between the initial events of P and Q. For example, the Top process (line 22 of Fig. 1) is willing to communicate on either the getTop or setTop channel. By contrast, \(P \sqcap Q\) may act like either P or Q, with the choice being made internally (i.e. nondeterministically), not under the control of the environment. The process \({\mathbf {\mathsf{{if}}}}\, b\, {\mathbf {\mathsf{{then}}}}\, P\, {\mathbf {\mathsf{{else}}}}\, Q\) represents a conditional. \( b \& P\) is a guarded process that makes P available only if b is true; it is equivalent to \({\mathbf {\mathsf{{if}}}}\, b\, {\mathbf {\mathsf{{then}}}}\, P\, {\mathbf {\mathsf{{else}}}}\, STOP\).

The process SKIP terminates immediately, represented by the special event \(\surd \). P; Q represents the sequential composition of P and Q: P is run, but when it terminates, Q is run.

The process P [|A|] Q runs P and Q in parallel, synchronising on events from A. The process \(P \mathbin {|||} Q\) interleaves P and Q, i.e. runs them in parallel with no synchronisation. The process \(P \setminus A\) acts like P, except that the events from A are hidden, i.e. turned into internal \(\tau \) events.

Each of the binary operators has a corresponding indexed operator. For example, \(\sqcap _{x \in X} P(x)\) (sometimes written as \(\sqcap \, x \in X \bullet P(x)\)) is an indexed nondeterministic choice, with the choice being made over the processes P(x) for x in X, and \(\Vert _{t \in T} [A(t)]\, P(t)\) (sometimes written as \(\Vert \, t \in T \bullet [A(t)]\, P(t)\)) is a parallel composition of the processes P(t) for \(t \in T\), where each P(t) is given alphabet A(t), and processes synchronise on events in the intersection of their alphabets.

CSP can be given an operational semantics in terms of labelled transition systems. From this, a denotational semantics can be defined. (Alternatively, the denotational semantics can be defined directly over the syntax, compositionally [35].) We describe these formally in Sect. 2 and Appendix A.

The simplest denotational model is the traces model. A trace of a process is a sequence of (visible) events that a process can perform. We say that P is refined by Q in the traces model, written \(P \sqsubseteq _T Q\), if every trace of Q is also a trace of P. FDR can test such refinements automatically, for finite-state processes. Typically, P is a specification process, describing what traces are acceptable; this test checks whether Q has only such acceptable traces. Refinement assertions are written in \(\hbox {CSP}_M\) scripts using the assert keyword (e.g. line 49).

FDR supports various compression functions that can be applied to processes. Most of these transform the labelled transition system in a way that preserves the denotational semantics, but normally gives a transition system with fewer states, so as to make model checking faster. (Some compression functions do not preserve the denotational semantics and are designed for special-purpose checks.)

1.2 A running example

We introduce here a running example, which we use to illustrate some of our techniques. (Our main interest is in more sophisticated concurrent datatypes than this that aim to be lock-free and linearisable [17]; however, we choose a simpler example here.) The example is of a concurrent lock-based stack that uses a linked list of nodes. The CSP model is presented in Fig. 1. This particular model includes six nodes, four possible data values that can be stored, and three threads (lines 2–5), but these parameters can easily be changed.

Each node is represented by a process that is initially free (FreeNode(me)), but may be initialised by a thread to hold a datum and a reference to another node (Node(me, datum, next)); subsequently, the datum or next reference may be read, or the node freed.

A variable holding the top of the stack is also represented by a process (Top(top)), where the top may be read or set. Likewise, the lock is represented by a process (Lock) which may be alternately locked and unlocked.

Finally, each thread is represented by a process (Thread(me)). A thread may perform a push by obtaining the lock, reading the top, initialising a node appropriately to reference the previous top, setting the top to reference the new node, signalling completion and releasing the lock. It may perform a pop by obtaining the lock and reading the top; if the top is Null, then it signals that the pop failed because the stack is empty, and releases the lock; otherwise, it obtains the node referenced by the top node, updates the top to reference it, reads the datum from the previous top, signals completion, frees the node and releases the lock.

The processes are combined in parallel (lines 41–45), with all events hidden except those signalling completion of operations (process System). Figure 2 gives an illustration of a state of the system.

Fig. 2 An illustration of a state of the system. Thread T1 is pushing node N2 onto the stack and is about to set the top variable to point to N2 (in the syntactic state \( \rightarrow \ldots \)). Each box in the figure represents a process. Each edge illustrates where a variable of one process holds the identity of another.

The specification is that of a stack. This is captured by the process Spec(s) (lines 47–48); the sequence s represents the contents of the stack. In the state illustrated in Fig. 2, we would expect the specification process to be in state Spec(\({\mathsf {<}}\)C,B\({\mathsf {>}}\)).Footnote 1 If the stack is nonempty, the top element can be popped, and otherwise a pop may fail; if the stack is not full, an element can be pushed on. The refinement check (line 49) tests whether the system refines the specification, i.e. whether every trace of the system is allowed by the specification, meaning that each sequence of push and pop operations on the system satisfies the normal properties of a stack.

FDR can verify the refinement, but the check is slow: it takes about thirty minutes on a 16-core machine and explores 7.8 billion states and 21.4 billion transitions. However, there is a lot of symmetry in the system: it is symmetric in the types Data of data and ThreadID of thread identities and the subtype NodeID of real node identities. (Note that it is not symmetric in the type NodeIDType, which includes NodeID, because Null is treated as a distinguished value.) In Fig. 2, applying any permutation to each of these types gives a state that is equivalent, in a sense that we will make formal later. Applying the symmetry reduction technique of this paper to this system, for these three types, reduces the number of states to 99 thousand and reduces the checking time to less than a second.

Note, in particular, that while the initial state of the specification is symmetric, it can evolve into a state where it is holding data, where it is not symmetric with respect to the type Data. This is in contrast to several other approaches to symmetry reduction which require every state of the specification to be symmetric.

1.3 Related work

There have been several previous works applying symmetry reduction to model checking. Excellent surveys appear in [31, 41].

Clarke et al. [6, 7] consider symmetry in the context of symbolic temporal logic model checking. They consider symmetries that permute components within system states; however, the permutations do not affect the values in shared variables, and so they do not capture all symmetries present when one process can hold the identity of another, as in our motivating example. They use the representative technique: they adapt the transition relation so as to produce representative members of each equivalence class of states, thereby factoring the transition system with respect to equivalence. They then show that, subject to certain restrictions, the specification \(\phi \) (in \(\hbox {CTL}^*\)) is satisfied in the reduced transition system iff it is satisfied in the original system. More precisely, they require that equivalent states have the same set of propositional labels from \(\phi \): this means that the specification cannot talk directly about symmetric values, which makes it more restrictive than our approach. They show that finding unique representatives, in general, is at least as hard as the graph isomorphism problem, which is widely accepted as being difficult (although not known to be NP-complete). They therefore adapt their technique to use representatives that might not be unique. Their approach requires the user to define how to choose representatives.

Emerson and Sistla [11] also consider symmetry in the context of temporal logic model checking. Their focus is on systems containing many identical or isomorphic components. They show how symmetry of the model can be deduced from symmetry of the system’s structure. As with [7], they factor the transition system with respect to equivalence and prove that the specification (in \(\hbox {CTL}^*\) or Mu-Calculus) is satisfied in the reduced transition system iff it is satisfied in the original system. They allow the group actions to also operate on the labels of the state; however, they require that the group actions preserve certain significant sub-formulas of the specification, which makes their approach more restrictive than ours. In [12], the same authors extend their approach, so as to model check against a specification written in propositional linear temporal logic, relaxing the above condition, using an approach similar to ours.

Sistla et al. [38] describe a model checker, SMC, that builds on the ideas of [12]. In addition to factoring the transition system with respect to symmetric equivalence, they employ a second reduction strategy known as state symmetry: if there are several symmetric transitions from a particular state (so the successor states are symmetric), then only one such transition is expanded. (We leave the investigation of this reduction strategy within FDR as future work: it seems somewhat harder in our setting, because multiple processes synchronise on each transition.) They store previously seen states in a hash table using a hash function that respects symmetries. (So symmetric states are placed in the same bucket.) When a new state s is encountered, for every state \(s'\) that hashes to the same value, the algorithm tries to test whether s and \(s'\) are indeed symmetric (using an approximating algorithm that sometimes fails to identify symmetries).

Ip and Dill [19] investigate symmetry using the Mur\(\phi \) model checker. They introduce the notion of a scalarset: effectively a type where all elements are treated equivalently: this is analogous to our symmetric types. They show that any Mur\(\phi \) program using such scalarsets is symmetric in each scalarset. They then factor the transition system with respect to the symmetry relation, as with the previous papers. They restrict to certain simple correctness properties: error freedom and deadlock freedom.

Bošnački et al. [3] describe an extension to the Spin model checker to support symmetry, building on the techniques of [19]. They present several strategies for defining representative functions; we compare these with our own approach in Sects. 7 and 8.

The work closest to ours is that by Moffat et al. [32], who investigate the use of symmetry in CSP model checking. They introduce the notion of permutation bisimulations—informally, renaming transitions of an LTS according to some permutation on the events—which we adapt. They then factor the LTS according to the induced equivalence. They present structured machines—a restricted form of supercombinators—to represent CSP systems and present some algebraic rules that can be used to deduce symmetries between components. They then present a model checking algorithm based on these ideas, restricting the specification process to one such that every state is symmetric (in contrast to our approach). Our advances over this work are: a much more general technique for identifying symmetry; a more general form of representing processes (i.e. supercombinators), which scales far better; fewer restrictions on the type of specification process that can be used; an efficient, general representative choosing algorithm; and the incorporation of these techniques within an industrial-strength model checker.

Jensen [21] applies symmetry reduction to the state space of coloured Petri nets; correctness conditions are rather limited, in particular considering only properties that are fully symmetric. Chiola et al. [5] perform a similar reduction, but also factor the firings of the net according to symmetry. Schmidt [37] also studies symmetry reduction in the context of Petri nets. Junttila [22] studies the complexity of this problem.

Leuschel et al. [25] give an extension for ProB, a model checker for B, that uses symmetry reduction. This uses a different technique called permutation flooding where every symmetric permutation of a state is added to the set of visited states.

TopSPIN [9, 10] is an extension to SPIN that enables symmetry reduction on a wider variety of scripts than other approaches. Specifically, it allows processes to hold references to other processes (as we allow in this paper, but unlike [6], as discussed above). The authors show how to extend any algorithm used to find representatives when no process holds such references to an algorithm for when processes do hold references. The result is an exact algorithm that always finds a unique representative, but may take exponential time to do so.

2 Background

In this section we describe relevant background material concerning model checking. We describe how FDR represents CSP processes in terms of labelled transition systems (LTSs) and present some operations over those LTSs. We give here a slightly simplified description, in the interests of exposition. In particular, in the body of the paper we restrict to the traces model, which will mean that we can represent processes by labelled transition systems (Definition 1). In the appendices, we will generalise, so as to be able to consider the other semantic models; this will require a generalisation of labelled transition systems.

We also briefly review permutations and permutation groups and then formalise the notion of symmetry over LTSs.

2.1 Labelled transition systems

We assume a set of events \(\varSigma \) with \(\tau ,\surd \notin \varSigma \). Let \({\varSigma ^{\surd }}= \varSigma \cup \{\surd \}\) and \({\varSigma ^{\tau \surd }}= \varSigma \cup \{\surd ,\tau \}\). Let \({\varSigma ^{\surd }}^*\) denote all sequences of events from \({\varSigma ^{\surd }}\) such that \(\surd \) occurs only as the last event (if at all); we call such a sequence a trace.

Definition 1

A labelled transition system (LTS) is a tuple \(L = (S, \varDelta , init)\) where:

-

S is a set of states;

-

\(\varDelta \subseteq S \times {\varSigma ^{\tau \surd }}\times S\) is a transition relation;

-

\(init\in S\) is the initial state.

In the remainder of this paper we restrict to connected LTSs, i.e. where every state is reachable from the initial state by zero or more transitions.
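As an illustration of Definition 1 and of the connectedness restriction, the following Python sketch represents an LTS as a tuple and checks that every state is reachable from the initial state. The class name `LTS`, the string stand-ins for \(\tau \) and \(\surd \), and the helper functions are assumptions made for this sketch; they do not describe FDR's internal representation.

```python
from collections import deque
from typing import NamedTuple

TAU, TICK = "tau", "tick"  # stand-ins for the tau and tick events

class LTS(NamedTuple):
    states: frozenset   # S
    delta: frozenset    # transition relation: a set of (s, a, s') triples
    init: object        # initial state, a member of S

def reachable(lts):
    """States reachable from the initial state by zero or more transitions."""
    seen, queue = {lts.init}, deque([lts.init])
    while queue:
        s = queue.popleft()
        for (p, _a, q) in lts.delta:
            if p == s and q not in seen:
                seen.add(q)
                queue.append(q)
    return seen

def is_connected(lts):
    """True iff every state of the LTS is reachable from its initial state."""
    return reachable(lts) == set(lts.states)

# A one-place buffer with data abstracted away, plus an unreachable state 2.
delta = frozenset({(0, "in", 1), (1, "out", 0)})
with_junk = LTS(frozenset({0, 1, 2}), delta, 0)
# Restricting to the reachable states gives a connected LTS.
trimmed = LTS(frozenset(reachable(with_junk)), delta, 0)
```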

If \((s,a,s') \in \varDelta \), we write \(s \xrightarrow {a} s'\) (we decorate the arrow with “L” if this is not implicit from the context); this indicates that the process from state s can perform the event a and move into state \(s'\). We write \(s \xrightarrow {a}\) iff \(\exists s' \cdot s \xrightarrow {a} s'\). For \(tr = a_1 \ldots a_n \in ({\varSigma ^{\tau \surd }})^*\), we write \(s \overset{tr}{\longmapsto } s'\) iff there exist \(s_0, \ldots , s_n\) such that \(s = s_0 \xrightarrow {a_1} s_1 \cdots s_{n-1} \xrightarrow {a_n} s_n = s'\). We write \(s \Longrightarrow s'\) iff s can perform zero or more \(\tau \)-events to become \(s'\). We sometimes write \(s \in L\) to mean \(s \in S\).

We can then define the traces of a state s of an LTS:Footnote 2

\[traces(s) = \{tr \setminus \tau \mid \exists s' \cdot s \overset{tr}{\longmapsto } s'\},\]

where \(tr \setminus \tau \) denotes tr with all \(\tau \) events removed.

If L is an LTS, we will write traces(L) for the traces of the initial state of L.

Let S and I be LTSs, representing a specification and implementation, respectively. We define refinement between S and I in the traces model of CSP as follows.

\[S \sqsubseteq _T I \iff traces(I) \subseteq traces(S).\]

FDR translates CSP processes into LTSs and then tests for the above refinement.
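The following Python sketch illustrates traces and traces refinement on explicit transition relations. It is a bounded check written for exposition, not FDR's algorithm (which explores a product automaton, as described below): it enumerates all traces with at most n visible events and tests set inclusion. The function names and the example processes are hypothetical.

```python
TAU = "tau"  # stand-in for the internal event

def tau_closure(delta, states):
    """All states reachable from `states` by zero or more tau-transitions."""
    closure, stack = set(states), list(states)
    while stack:
        s = stack.pop()
        for (p, a, q) in delta:
            if p == s and a == TAU and q not in closure:
                closure.add(q)
                stack.append(q)
    return closure

def traces_upto(delta, s, n):
    """All traces of state s that contain at most n visible events."""
    result = {()}
    frontier = {(): tau_closure(delta, {s})}  # trace -> states reachable after it
    for _ in range(n):
        nxt = {}
        for tr, states in frontier.items():
            for (p, a, q) in delta:
                if p in states and a != TAU:
                    nxt.setdefault(tr + (a,), set()).add(q)
        frontier = {tr: tau_closure(delta, qs) for tr, qs in nxt.items()}
        result.update(frontier)  # adds the new traces (the dictionary keys)
    return result

def trace_refines(spec, spec_init, impl, impl_init, n):
    """Bounded check: every trace of impl of length <= n is a trace of spec."""
    return traces_upto(impl, impl_init, n) <= traces_upto(spec, spec_init, n)

spec = {("S0", "a", "S1"), ("S1", "b", "S0")}
impl = {("I0", "a", "I1"), ("I1", TAU, "I2"), ("I2", "b", "I0")}
bad = {("J0", "b", "J0")}
```

Here `impl` refines `spec` because its internal step is invisible in the traces, whereas `bad` can perform b initially, which `spec` does not allow.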

When FDR performs a refinement check of the form \(Spec \sqsubseteq Impl\), it starts by normalising the specification Spec [35, Section 16.1]. We remind the reader of the definition of bisimilarity.

Definition 2

(Bisimilarity) Let \(L_1 = (S_1, \varDelta _1, init_1)\), and \(L_2 = (S_2, \varDelta _2, init_2)\) be LTSs. We say that \(\mathord {\sim } \subseteq S_1 \times S_2\) is a bisimulation between \(L_1\) and \(L_2\) iff whenever \((s_1, s_2) \in \mathord {\sim }\) and \(a \in {\varSigma ^{\tau \surd }}\):

-

If \(s_1\xrightarrow {a} s_1'\) then \(\exists s_2' \in S_2 \cdot s_2 \xrightarrow {a} s_2' \wedge s_1' \sim s_2'\);

-

If \(s_2 \xrightarrow {a} s_2'\) then \(\exists s_1' \in S_1 \cdot s_1 \xrightarrow {a} s_1' \wedge s_1' \sim s_2'\).

We say that \(s_1 \in S_1\) and \(s_2 \in S_2\) are bisimilar iff there exists a bisimulation relation \(\sim \) such that \(s_1 \sim s_2\).
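Definition 2 suggests a simple way to decide bisimilarity for finite LTSs: start from the relation containing all pairs of states and repeatedly delete pairs that violate one of the two conditions, until nothing changes. The Python sketch below does this naively; it is an illustration under our own choice of representation (sets of transition triples) and is not how FDR computes bisimulations.

```python
def succ(delta, s):
    """The set of (event, target) pairs for the transitions leaving state s."""
    return {(a, q) for (p, a, q) in delta if p == s}

def states_of(delta, init):
    """All states mentioned in the transition relation, plus the given state."""
    return {x for (p, _a, q) in delta for x in (p, q)} | {init}

def bisimilar(d1, s1, d2, s2):
    """Decide whether s1 (in d1) and s2 (in d2) are strongly bisimilar."""
    rel = {(p, q) for p in states_of(d1, s1) for q in states_of(d2, s2)}
    changed = True
    while changed:
        changed = False
        for (p, q) in list(rel):
            # First condition: every move of p is matched by some move of q.
            forth = all(any(b == a and (p2, q2) in rel for (b, q2) in succ(d2, q))
                        for (a, p2) in succ(d1, p))
            # Second condition: every move of q is matched by some move of p.
            back = all(any(b == a and (p2, q2) in rel for (b, p2) in succ(d1, p))
                       for (a, q2) in succ(d2, q))
            if not (forth and back):
                rel.discard((p, q))
                changed = True
    return (s1, s2) in rel

# a -> STOP [] a -> STOP is bisimilar to a -> STOP ...
two_as = {(0, "a", 1), (0, "a", 2)}
one_a = {("x", "a", "y")}
# ... but a -> (b [] c) is not bisimilar to (a -> b) [] (a -> c).
late = {(0, "a", 1), (1, "b", 2), (1, "c", 3)}
early = {(0, "a", 1), (0, "a", 2), (1, "b", 3), (2, "c", 4)}
```

Each deleted pair fails a condition with respect to a relation that contains every bisimulation, so it cannot belong to any bisimulation; the relation that remains at the fixed point is therefore the largest bisimulation.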

In many cases (e.g. the Spec process from Fig. 1), normalisation leaves the specification unchanged. However, normalisation has an effect, in particular, when the specification contains nondeterminism: the critical property of the normalised process (Lemma 4) is that it reaches a unique state after each trace.

Definition 3

Given an LTS \(L = (S, \varDelta , init)\), its prenormal form is an LTSFootnote 3 \(N = ({\mathbf{P }S - \{\{\}\}}, \varDelta _N, init_N)\) defined as follows. Each state is a nonempty element of \(\mathbf{P }S\). The initial state is \(init_N = \{s \in S \mid init \Longrightarrow s\}\), i.e. all states reachable (in L) from \(init\) by zero or more \(\tau \)-transitions. For each state \(\hat{s} \in \mathbf{P }S - \{\{\}\}\), and for each non-\(\tau \) event a that can be performed by a member of \({\hat{s}}\), we include in \(\varDelta _N\) an a-transition from \({\hat{s}}\) to \(\{s' \in S \mid \exists s \in \hat{s} \cdot \exists s'' \in S \cdot s \xrightarrow {a} s'' \Longrightarrow s'\}\), i.e. all states reached (in L) from some \(s \in \hat{s}\) by an a-transition followed by zero or more \(\tau \)-transitions.

The normal form for L, denoted norm(L), is calculated by taking the prenormal form for L, restricting to reachable states and then factoring by strong bisimulation (i.e. compressing the LTS, combining bisimilar states). We say that an LTS is normalised if it is the normal form of some LTS.
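As an illustration only, the prenormal form restricted to reachable states can be sketched as a subset construction in Python. The dictionary representation of an LTS and the names `TAU`, `tau_closure` and `prenormalise` are assumptions made for this sketch and are not taken from FDR; the final step of factoring by strong bisimulation is omitted.

```python
from collections import deque

TAU = "tau"  # assumed name for the internal event


def tau_closure(trans, states):
    """All states reachable from `states` by zero or more tau-transitions."""
    closure, stack = set(states), list(states)
    while stack:
        s = stack.pop()
        for a, t in trans.get(s, ()):
            if a == TAU and t not in closure:
                closure.add(t)
                stack.append(t)
    return frozenset(closure)


def prenormalise(trans, init):
    """Reachable part of the prenormal form; each state is a nonempty frozenset."""
    init_n = tau_closure(trans, {init})
    delta_n = {}
    pending = deque([init_n])
    while pending:
        s_hat = pending.popleft()
        if s_hat in delta_n:
            continue  # already expanded
        targets = {}
        for s in s_hat:
            for a, t in trans.get(s, ()):
                if a != TAU:
                    targets.setdefault(a, set()).add(t)
        # One a-transition per visible event a, to the tau-closure of all a-successors.
        delta_n[s_hat] = {a: tau_closure(trans, ts) for a, ts in targets.items()}
        pending.extend(delta_n[s_hat].values())
    return init_n, delta_n


# A nondeterministic process: an internal choice, then a, then either b or c.
example = {0: [(TAU, 1), (TAU, 2)], 1: [("a", 3)], 2: [("a", 4)],
           3: [("b", 5)], 4: [("c", 6)]}
init_n, delta_n = prenormalise(example, 0)
```

In this small example the two a-successors are merged into the single state `frozenset({3, 4})`, so the resulting LTS has at most one transition per label from each state and no tau-transitions.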

Figure 3 gives an example.

Note that the normal form for a process has no \(\tau \) transitions, no pair of transitions from the same state with the same label and no strongly bisimilar states. The following lemma shows that in a normalised LTS, the state reached after a particular trace is unique.

Lemma 4

If LTS \(P = (S, \varDelta , init)\) is normalised, and states p and \(p'\) are both reached from \(init\) after the same trace tr, then \(p = p'\).

Lemma 5

Let \(N = norm(P)\). Then the traces of P and N are equal.

In order to check whether \(P \sqsubseteq _T Q\), FDR explores the product automaton of P and Q.

Definition 6

Let \(P = (S_P, \varDelta _P, init_P)\) be a normalised LTS, and \(Q = (S_Q, \varDelta _Q, init_Q)\) be an LTS. The product automaton of P and Q is a tuple \((S, \varDelta , init)\) such that

-

\(S = S_P \times S_Q\);

-

\(((p, q), a, (p', q')) \in \varDelta \) iff \(q \xrightarrow {a}_Q q'\), and if \(a \ne \tau \) then \(p \xrightarrow {a} p'\) else \(p = p'\);

-

\(init= (init_P, init_Q)\).

Note that P contains no \(\tau \) transitions, hence the asymmetry in the definition.
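The transition relation of Definition 6 can be sketched as a successor function, which is how an on-the-fly exploration would use it. This is a minimal sketch under our own assumptions: the normalised specification is a dictionary from states to a dictionary from events to the unique successor, the implementation is a dictionary from states to lists of (event, successor) pairs, and the names are hypothetical.

```python
TAU = "tau"  # assumed name for the internal event


def product_successors(spec, impl, state):
    """Yield (event, successor) pairs of the product state (p, q).

    spec: p -> {event: p'}      (normalised: tau-free, one successor per event)
    impl: q -> [(event, q')]
    """
    p, q = state
    for a, q2 in impl.get(q, ()):
        if a == TAU:
            yield a, (p, q2)            # the specification stays where it is
        elif a in spec.get(p, {}):
            yield a, (spec[p][a], q2)   # both components perform a
        # otherwise q can perform an event that p cannot: no product transition


spec = {"P0": {"in": "P1"}, "P1": {"out": "P0"}}
impl = {"Q0": [("in", "Q1")], "Q1": [(TAU, "Q2")], "Q2": [("out", "Q0")]}
```

The two branches mirror the asymmetry of the definition: a tau-transition of the implementation leaves the specification state unchanged.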

Example 7

Let channels l, m and r pass data from type \(T = \{A,B\}\), and consider the processes

This represents two one-place buffers chained together. Its specification is a two-place buffer:

The LTSs for Q, P (which is already normalised) and the product automaton are shown in Fig. 4.

The LTSs for the processes from Example 7 and their product automaton. We write, for example, \(L \parallel R\) as shorthand for

The following lemma relates sequences of transitions of the product automaton to sequences of transitions of the components.

Lemma 8

Suppose M is the product automaton of P and Q. Then

Further, \(p_1\) is unique (for a given choice of tr).

2.2 Permutations

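Let X be a set. A permutation on X is a bijection \(\pi : X \rightarrow X\). We denote the inverse of a permutation \(\pi \) by \(\pi ^{-1}\). We write \(\pi ;\pi '\) for the forward composition of \(\pi \) and \(\pi '\), and \(\pi \mathbin {\scriptstyle \circ }\pi '\) for the backwards composition:

$$\begin{aligned} (\pi ;\pi ')(x) = \pi '(\pi (x)), \qquad (\pi \mathbin {\scriptstyle \circ }\pi ')(x) = \pi (\pi '(x)), \qquad \text{for all } x \in X. \end{aligned}$$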

We let \(id_X\) denote the identity permutation on X; we write this simply as id when the underlying set is clear from the context.

The set of all permutations of a set X forms a group under backwards composition, which we denote \( Sym (X)\). In the Introduction, we mentioned permutations of the (sub-)types NodeID, Data and ThreadID from Fig. 1. If G is a group, then \(G' \le G\) denotes that \(G'\) is a subgroup of G.

Our main focus is on event permutations. However, we do not want to change the semantic events, \(\surd \) and \(\tau \). Thus, we let \( EvSym \le Sym ({\varSigma ^{\tau \surd }})\) denote the largest symmetry subgroup such that for all permutations \(\pi \in EvSym \), \(\pi (\tau ) = \tau \) and \(\pi (\surd ) = \surd \). \(\pi \) is an event permutation iff \(\pi \in EvSym \).

We will often consider systems that are symmetric in one or more disjoint datatypes, say \(T_1,\ldots ,T_n\); the system in the running example is symmetric in NodeID, Data and ThreadID. In this case, let \(\pi _i \in Sym(T_i)\), for \(i = 1,\ldots ,n\); then consider \(\pi = \bigcup _{i=1}^n \pi _i\). We say that \(\pi \) is type-preserving, since it maps elements of each \(T_i\) onto \(T_i\). Note that \(\pi \in Sym(\bigcup _{i=1}^n T_i)\); further, the group of all such type-preserving permutations is isomorphic to the direct product of \(Sym(T_1)\), ..., \(Sym(T_n)\).

Given a type-preserving permutation \(\pi \) on atomic values, \(\pi \) can be lifted to events in \({\varSigma ^{\tau \surd }}\) by point-wise application; for example, \(\pi (getDatum.t.n.d) = getDatum.\pi (t).\pi (n).\pi (d)\).
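The following Python sketch illustrates forward composition, inverses and the point-wise lifting of a type-preserving permutation to dotted events. Permutations are represented as dictionaries and events as dotted strings; this representation, the concrete values `T0`, `N0`, etc., and the function names are assumptions made for the example. It also assumes that channel names never coincide with atomic values.

```python
def compose_forward(pi, pi2):
    """Forward composition pi;pi2: apply pi first, then pi2."""
    return {x: pi2[pi[x]] for x in pi}


def inverse(pi):
    """Inverse permutation."""
    return {y: x for x, y in pi.items()}


def lift_to_event(pi, event):
    """Apply pi to every field of a dotted event; other fields are left fixed."""
    return ".".join(pi.get(field, field) for field in event.split("."))


# One permutation per (hypothetical) distinguished type; their union is type-preserving.
pi_thread = {"T0": "T1", "T1": "T0"}
pi_node = {"N0": "N1", "N1": "N2", "N2": "N0"}
pi_data = {"A": "B", "B": "A"}
pi = {**pi_thread, **pi_node, **pi_data}

image = lift_to_event(pi, "getDatum.T0.N2.A")
```

Because the channel name and the semantic events are not in the domain of `pi`, they are left unchanged, in line with the requirement that event permutations fix \(\tau \) and \(\surd \).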

2.3 Symmetric LTSs

We now define what it means for an LTS to be symmetric. For this section and Sects. 3 and 5, we consider symmetries from an arbitrary event permutation group \(G \le EvSym \). In later sections, we specialise the group to be formed from permutations on datatypes.

The following definition is adapted from [32].

Definition 9

(Permutation bisimilarity) Let \(L_1 = (S_1, \varDelta _1, init_1)\), and \(L_2 = (S_2, \varDelta _2, init_2)\) be LTSs, and let \(\pi \in G\) be an event permutation. We say that \(\mathord {\sim } \subseteq S_1 \times S_2\) is a \(\pi \)-bisimulation between \(L_1\) and \(L_2\) iff whenever \((s_1, s_2) \in \mathord {\sim }\) and \(a \in {\varSigma ^{\tau \surd }}\):

-

If \(s_1\xrightarrow {a} s_1'\) then \(\exists s_2' \in S_2 \cdot s_2 \xrightarrow {\pi (a)} s_2' \wedge s_1' \sim s_2'\);

-

If \(s_2 \xrightarrow {a} s_2'\) then \(\exists s_1' \in S_1 \cdot s_1 \xrightarrow {\pi ^{-1}(a)} s_1' \wedge s_1' \sim s_2'\).

We say that \(s_1, s_2 \in S\) are \(\pi \)-bisimilar, denoted \(s_1 \sim _\pi s_2\) iff there exists a \(\pi \)-bisimulation relation \(\sim \) such that \(s_1 \sim s_2\). We say that \(L_1\) and \(L_2\) are \(\pi \)-bisimilar, denoted \(L_1 \sim _\pi L_2\), iff \(init_1 \sim _\pi init_2\).

Note that the case \(\pi = id\) corresponds to strong bisimulation.
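For finite LTSs, the largest \(\pi \)-bisimulation can be computed as a greatest fixed point: start from all pairs of states and repeatedly discard pairs that violate one of the two clauses of Definition 9. The sketch below is a naive illustration of this idea, not the algorithm used by FDR; the LTS representation and all names are assumptions.

```python
def pi_bisimilar_pairs(trans1, trans2, pi):
    """Largest pi-bisimulation between two finite LTSs.

    trans1, trans2: state -> set of (event, target); every state must be a key.
    pi: dict on events; events not mentioned are left fixed.
    """
    inv = {b: a for a, b in pi.items()}
    rel = {(s1, s2) for s1 in trans1 for s2 in trans2}

    def ok(s1, s2):
        # Clause 1: each a-transition of s1 is matched by a pi(a)-transition of s2.
        forth = all(any(b == pi.get(a, a) and (t1, t2) in rel
                        for b, t2 in trans2[s2])
                    for a, t1 in trans1[s1])
        # Clause 2: each b-transition of s2 is matched by a pi^{-1}(b)-transition of s1.
        back = all(any(a == inv.get(b, b) and (t1, t2) in rel
                       for a, t1 in trans1[s1])
                   for b, t2 in trans2[s2])
        return forth and back

    changed = True
    while changed:
        changed = False
        for pair in list(rel):
            if not ok(*pair):
                rel.discard(pair)
                changed = True
    return rel


# A one-place buffer over the data values A and B.
buf = {"empty": {("in.A", "full_A"), ("in.B", "full_B")},
       "full_A": {("out.A", "empty")},
       "full_B": {("out.B", "empty")}}
swap = {"in.A": "in.B", "in.B": "in.A", "out.A": "out.B", "out.B": "out.A"}

swap_rel = pi_bisimilar_pairs(buf, buf, swap)
id_rel = pi_bisimilar_pairs(buf, buf, {})
```

With the identity permutation the function computes strong bisimilarity, as noted above; with `swap` it relates the two full states to each other rather than to themselves.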

For example, in Fig. 4, the states \(P'(A)\) and \(P'(B)\) (second column of the second LTS) are \(\pi \)-bisimilar, where \(\pi (A) = B\) and \(\pi (B) = A\). Further, the initial state of each LTS is \(\pi \)-bisimilar to itself, for every \(\pi \in Sym(\{A,B\})\).

The following lemma follows immediately from the above definition.

Lemma 10

Let \(\pi \) and \(\pi '\) be event permutations.

-

1.

If \(s_1 \sim _\pi s_2\) then \(s_2 \sim _{\pi ^{-1}} s_1\);

-

2.

If \(s_1 \sim _\pi s_2\) and \(s_2 \sim _{\pi '} s_3\) then \(s_1 \sim _{\pi ;\pi '} s_3\).

The techniques we present in the following sections will require the specification and implementation LTSs to be symmetric in the following sense.

Definition 11

Let L be an LTS and \(\pi \in EvSym\) be an event permutation. We say that L is \(\pi \)-symmetric iff \(L \sim _\pi L\). Let \(G \le EvSym \). We say that L is G-symmetric iff for all \(\pi \in G\), L is \(\pi \)-symmetric.

For example, each LTS in Fig. 4 is \(Sym(\{A,B\})\)-symmetric. Note that every LTS is \(\{id\}\)-symmetric.

Lemma 12

If L is \(\pi \)-symmetric then for every state s of L, there exists a state \(s'\) in L such that \(s \sim _\pi s'\).

3 Refinement checking on symmetric LTSs

In this section we present, at a fairly high level of abstraction, our refinement checking algorithms for the traces model. In Sect. 3.1 we consider relevant properties of the specification, in particular that normalising a symmetric specification LTS preserves symmetry. As noted in the Introduction, our basic approach is to map each state encountered in the search to a representative member of its G-equivalence class; we define such representatives in Sect. 3.2. In Sect. 3.3 we present the reduced product automaton, which is explored by the model checking algorithm, created by replacing each state by its representative; we then present relevant results about the reduced automaton. In Sect. 3.4, we translate the refinement relationship into a property of the product automaton and so present the model checking algorithm itself.

Fix an event permutation group \(G \le EvSym \).

3.1 Symmetric normalised specifications

Recall that FDR normalises the specification before exploring the product automaton. We prove that symmetry is preserved by normalisation.

Lemma 13

If \(P = (S_P,\varDelta _P,init_P)\) is a G-symmetric LTS, then norm(P) is a G-symmetric normalised LTS.

Proof

(sketch). Let \(N = (S_N,\varDelta _N,init_N)\) be the prenormal form for P. Let \(\pi \in G\), and suppose \(\sim \) is a \(\pi \)-bisimulation over \(S_P\). Define a corresponding relation \(\approx \) over \(S_N\) as follows:

It is then straightforward to show that \(\approx \) is a \(\pi \)-bisimulation [15]. Hence N is G-symmetric.

Clearly neither factoring by strong bisimulation nor removing unreachable states breaks G-symmetry. Hence norm(P) is G-symmetric. \(\square \)

We will need to apply permutations to states of the specification. The following lemma justifies the soundness of this.

Lemma 14

Let P be a G-symmetric normalised LTS. Then, for each \(s\in P\) and \(\pi \in G\), there exists a unique \(s'\in P\) such that \(s\sim _\pi s'\).

Proof

The existence of \(s'\) follows directly from Lemma 12. In order to show \(s'\) is unique, suppose \(s \sim _\pi s''\). Then, by Lemma 10, \(s' \sim _{\pi ^{-1};\pi } s''\), i.e. \(s' \sim _{id} s''\), that is, \(s'\) and \(s''\) are strongly bisimilar, so \(s' = s''\) by definition of normalisation. \(\square \)

Definition 15

Let P be a G-symmetric normalised LTS, and let \(s\in P\) and \(\pi \in G\). Then, we write \(\pi (s)\) for the unique \(s'\), implied by the above lemma, such that \(s\sim _\pi s'\).

3.2 Representative members

The following definition formalises the notion of a representative member of an equivalence class.

Definition 16

Let \(L = (S, \varDelta , init)\) be a G-symmetric LTS. Write \(s_1 \sim _G s_2\) iff there exists \(\pi \in G\) such that \(s_1 \sim _\pi s_2\). Note that \(\sim _G\) is an equivalence relation.

We say that \(rep : S \rightarrow S\) is a G-representative function for L if \({s \sim _G rep(s)}\) for every \(s \in S\). We define \(rep(S) = \{rep(s) \mid s \in S\}\), the set of representative states; we abuse notation and write rep(L) for the same set.

We say that rep gives unique representatives if \(\forall s, s' \in S \cdot s \sim _G s' \implies rep(s) = rep(s')\), i.e. rep selects a unique representative of each equivalence class.

For the rest of this section, we assume the existence of a G-representative function rep for Q.

Ideally, we would like our representative functions to produce unique representatives, because this will give the greatest reduction in the state space. However, finding unique representatives is hard, in general [6]. Therefore, our approach will not assume this. Section 7 considers how to define a suitable efficient representative function that produces unique representatives in most cases.
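To make the notion concrete, the following Python sketch gives a naive representative function that does produce unique representatives, at the cost of enumerating the whole group. It is not the technique of Sect. 7. It assumes, purely for illustration, that a state is a tuple of atomic values, that applying \(\pi \) point-wise to a state s yields a state \(\pi \)-bisimilar to s, and that G is the full symmetric group on a small type.

```python
from itertools import permutations


def all_permutations(values):
    """Every permutation of `values`, each as a dict (the group Sym(values))."""
    values = sorted(values)
    return [dict(zip(values, image)) for image in permutations(values)]


def rep(state, group):
    """Return (representative, pi), where representative = pi(state) is the
    lexicographically least image of `state` under the permutations in `group`."""
    best = None
    for pi in group:
        image = tuple(pi.get(v, v) for v in state)
        if best is None or image < best[0]:
            best = (image, pi)
    return best


G = all_permutations({"A", "B", "C"})
r1, pi1 = rep(("C", "A", "C"), G)
r2, pi2 = rep(("B", "C", "B"), G)
```

The two example states lie in the same equivalence class and so receive the same representative. The loop visits every element of the group, which for \(Sym(T)\) is \(|T|!\) permutations; this is the cost that a practical representative function needs to avoid.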

3.3 The reduced product automata

We now show how to factor the product automaton using a G-representative function. Our subsequent model checking algorithm will search in this reduced product automaton.

Throughout this section, let \(P = (S_P, \varDelta _P, init_P)\) be a normalised G-symmetric LTS, \(Q = (S_Q, \varDelta _Q, init_Q)\) be a G-symmetric LTS, and rep be a G-representative function on Q.

Definition 17

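We lift rep to pairs of states from \(S_P \times S_Q\) by defining

$$\begin{aligned} rep(p, q) = (\pi (p), rep(q)), \quad \text{where } \pi \in G \text{ is such that } q \sim _\pi rep(q). \end{aligned}$$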

That is: we map q to its representative rep(q), and we map p according to a corresponding permutation \(\pi \). (There may be several such \(\pi \), in which case we choose an arbitrary one.) Below we use names like \((\hat{p}, \hat{q})\) for such representative pairs of states.

The rep-reduced product automaton of P and Q is a product automaton \((S_P \times rep(Q), \varDelta , init)\), such that

-

\((\hat{p}, \hat{q}) \xrightarrow {a} rep(p',q')\) in \(\varDelta \) if \(\hat{q}\in rep(Q)\), and \((\hat{p}, \hat{q}) \xrightarrow {a} (p', q')\) in the standard product automaton of P and Q (i.e. \(\hat{q}\xrightarrow {a}_Q q'\), and if \(a \ne \tau \) then \(\hat{p}\xrightarrow {a}_P p'\) else \(\hat{p}= p'\)).

-

\(init= rep(init_P, init_Q)\).

Example 18

Recall the processes from Example 7. The product automaton there has five equivalence classes for states. Figure 5 gives the reduced product automaton, based on a function rep that gives unique representatives.

Reduced product automaton for the processes from Example 7

Throughout the rest of this section, let S be the standard product automaton of P and Q, and R the reduced product automaton of P and Q.

The following lemma, illustrated below, shows how steps of the standard product automaton are matched by steps of the reduced product automaton, and vice versa.

Lemma 19

-

1.

If \((p_1, q_1) \xrightarrow {a}_S (p_2, q_2)\) and \(q_1 \sim _\pi {\hat{q}}_1\) with \({\hat{q}}_1 \in rep(Q)\), then

$$\begin{aligned} \begin{aligned}&\exists {\hat{q}}_2 \in rep(Q), \pi ' \in G \cdot \\&\qquad (\pi (p_1), \hat{q}_1) \xrightarrow {\pi (a)}_R (\pi '(p_2), \hat{q}_2) \wedge q_2 \sim _{\pi '} \hat{q}_2. \end{aligned} \end{aligned}$$

-

2.

If \(({\hat{p}}_1, \hat{q}_1) \xrightarrow {a}_R (\hat{p}_2, \hat{q}_2)\), \(q_1 \sim _\pi \hat{q}_1\) and \(\hat{p}_1 = \pi (p_1)\), then

$$\begin{aligned} \begin{aligned}&\exists p_2, q_2, \pi ' \cdot (p_1, q_1) \xrightarrow {\pi ^{-1}(a)}_S (p_2, q_2) \\&\qquad \wedge q_2 \sim _{\pi '} \hat{q}_2 \wedge \hat{p}_2 = \pi '(p_2). \end{aligned} \end{aligned}$$

Proof

-

1.

Since \((p_1, q_1) \xrightarrow {a}_S (p_2, q_2)\) we have that \(q_1 \xrightarrow {a}_Q q_2\). Then since \({q_1 \sim _\pi {\hat{q}}_1}\), there exists \(q' \in S_Q\) such that \( \hat{q}_1 \xrightarrow {\pi (a)}_Q q' \wedge {q_2 \sim _\pi q'} \). Let \(\hat{q}_2 = rep(q')\) and \(\pi ''\) be such that \(q' \sim _{\pi ''} \hat{q}_2\). Then, \(q_2 \sim _{\pi '} \hat{q}_2\) where \(\pi ' = \pi ;\pi ''\).

If \(a \ne \tau \), then \(p_1 \xrightarrow {a}_P p_2\) by the definition of S. Hence, \(\pi (p_1) \xrightarrow {\pi (a)}_P \pi (p_2)\). So by the definition of R, \((\pi (p_1), \hat{q}_1) \xrightarrow {\pi (a)}_R (\pi ''(\pi (p_2)), \hat{q}_2)\). But \(\pi ''(\pi (p_2)) = \pi '(p_2)\), as required.

The case \(a = \tau \) is similar, except \(p_1 = p_2\) and \(\pi (p_1) = \pi (p_2)\).

-

2.

From the definition of R, there exists \(q_2'\) such that \(\hat{q}_1 \xrightarrow {a}_Q q_2'\) and \(\hat{q}_2 = rep(q_2')\). Then by the definition of \(\sim _\pi \), there exists \(q_2\) such that \(q_1 \xrightarrow {\pi ^{-1}(a)}_Q q_2\) and \(q_2 \sim _\pi q_2'\). Let \(\pi ''\) be such that \(q_2' \sim _{\pi ''} \hat{q}_2\). Then \(q_2 \sim _{\pi '} \hat{q}_2\) where \(\pi ' = \pi ;\pi ''\).

If \(a \ne \tau \), then by the definition of R, there exists \(p_2'\) such that \(\hat{p}_1 \xrightarrow {a}_P p_2'\) and \(\hat{p}_2 = \pi ''(p_2')\). Then, since \(\hat{p}_1 = \pi (p_1)\), there exists \(p_2\) such that \(p_1 \xrightarrow {\pi ^{-1}(a)}_P p_2\) and \(p_2' = \pi (p_2)\). Hence \(\hat{p}_2 = \pi '(p_2)\). Then from the definition of S, \( (p_1, q_1) \xrightarrow {\pi ^{-1}(a)}_S (p_2, q_2) \).

The case \(a = \tau \) is similar, except \(\hat{p}_1 = p_2'\) and \(p_1 = p_2\).

\(\square \)

The following lemma shows that for every trace of the standard product automaton, there is a corresponding trace of the reduced product automaton, and vice versa.

Lemma 20

Let tr be a trace in \({\varSigma ^{\tau \surd }}^*\).

-

1.

Suppose in the standard product automaton:

Then in the reduced product automaton:

-

2.

Suppose in the reduced product automaton:

Then in the standard product automaton:

Proof

Both parts follow by a straightforward induction, making use of Lemma 19. More precisely, we can show the following.

-

1.

If the standard product automaton has transitions as follows:

$$\begin{aligned} \begin{aligned}&(p_0, q_0) \xrightarrow {a_0}_S (p_1, q_1) \xrightarrow {a_1}_S \ldots \xrightarrow {a_{n-1}}_S (p_n, q_n) \\&\quad \wedge q_0 \sim _{\pi _0} {\hat{q}}_0 \in rep(Q) . \end{aligned} \end{aligned}$$

Then the reduced product automaton has transitions as follows:

$$\begin{aligned} \begin{aligned}&(\pi _0(p_0), \hat{q}_0) \xrightarrow {\pi _0(a_0)}_R (\pi _1(p_1), \hat{q}_1) \xrightarrow {\pi _1(a_1)}_R \ldots \\&\qquad \qquad \xrightarrow {\pi _{n-1}(a_{n-1})}_R (\pi _n(p_n), \hat{q}_n)\wedge \\&\qquad \qquad \forall i \in \{0,\ldots ,n\} \cdot q_i \sim _{\pi _i} \hat{q}_i , \end{aligned} \end{aligned}$$

for some \({\hat{q}}_1, \ldots , {\hat{q}}_n \in rep(Q)\), \(\pi _1, \ldots , \pi _n \in G\).

-

2.

If the reduced product automaton has transitions as follows:

$$\begin{aligned} \begin{aligned}&({\hat{p}}_0, \hat{q}_0) \xrightarrow {a_0}_R (\hat{p}_1, \hat{q}_1) \xrightarrow {a_1}_R \ldots \xrightarrow {a_{n-1}}_R (\hat{p}_n, \hat{q}_n) \\&\qquad \wedge q_0 \sim _{\pi _0} \hat{q}_0 \wedge \hat{p}_0 = \pi _0(p_0) . \end{aligned} \end{aligned}$$

Then the standard product automaton has transitions as follows:

$$\begin{aligned} \begin{aligned}&(p_0, q_0) \xrightarrow {\pi _0^{-1}(a_0)}_S (p_1, q_1) \xrightarrow {\pi _1^{-1}(a_1)}_S \ldots \\&\qquad \xrightarrow {\pi _{n-1}^{-1}(a_{n-1})}_S (p_n, q_n)\wedge \\&\qquad \qquad \forall i \in \{0,\ldots ,n\} \cdot q_i \sim _{\pi _i} \hat{q}_i \wedge \hat{p}_i = \pi _i(p_i), \end{aligned} \end{aligned}$$

for some \(p_1, \ldots , p_n \in S_P\), \(q_1, \ldots , q_n \in S_Q\), \(\pi _1, \ldots , \pi _n \in G\). \(\square \)

3.4 Refinement checking algorithm for the traces model

We now present the model checking algorithm for the traces model. Throughout this section, let \(P = (S_P, \varDelta _P, init_P)\) be a normalised G-symmetric LTS, \(Q = (S_Q, \varDelta _Q, init_Q)\) be a G-symmetric LTS, rep be a G-representative function on Q, S be the standard product automaton of P and Q, and R be the reduced product automaton of P and Q.

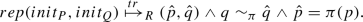

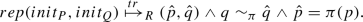

The following proposition shows how trace refinements are exhibited in the reduced product automaton.

Proposition 21

\(P \sqsubseteq _T Q\) iff

Proof

(\(\Rightarrow \)) We prove the contrapositive. Suppose

Then by Lemma 20, there exist a trace \(tr'\), states p and q, and \(\pi \in G\) such that

Also, since \(\hat{q}\xrightarrow {a}_Q\) and \(q \sim _\pi \hat{q}\), we have \(q \xrightarrow {\pi ^{-1}(a)}_Q\). Hence \((tr' \setminus \tau ) \frown \langle \pi ^{-1}(a)\rangle \in traces(init_Q)\), where \(tr' \setminus \tau \) denotes \(tr'\) with the \(\tau \) events removed, but (by the uniqueness of the state p reached after \(tr'\), Lemma 4) \((tr' \setminus \tau ) \frown \langle \pi ^{-1}(a)\rangle \notin traces(init_P)\). Hence \(P \not \sqsubseteq _T Q\).

(\(\Leftarrow \)) We prove the contrapositive. Suppose \(P \not \sqsubseteq _T Q\). Then there exist states p and q, \(tr' \in {\varSigma ^{\tau \surd }}^*\), \(b \in {\varSigma ^{\surd }}\) such that

Then by Lemma 20, there exist a trace \(tr \in {\varSigma ^{\tau \surd }}^*\), states \(\hat{p}\) and \(\hat{q}\), and \(\pi \in G\) such that

Further,

Taking \(a = \pi (b)\) we have the result. \(\square \)

For example, in the reduced product automaton of Example 18, the refinement holds, and each visible transition of a state of Q is matched by a transition of the corresponding state of P.

The above proposition justifies the model checking algorithm in Fig. 6. The algorithm searches the reduced product automaton for a state \((\hat{p}, \hat{q})\) such that \(\hat{q}\) can perform some visible event a that \(\hat{p}\) cannot, i.e. \(\hat{q}\xrightarrow {a}_Q\) but \(\hat{p}\) has no a-transition in P. We do not explicitly build the product automaton: instead we explore it on the fly, based on the specification and implementation LTSs. The algorithm maintains a set seen of all states seen so far and a set pending of states that still need to be expanded; FDR implements pending as a queue, so as to perform a breadth-first search. Note that the algorithm is identical to the standard algorithm [14, 34], except for the application of rep to obtain the representative member. Hence, the highly optimised refinement algorithms of [14] can be used.
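The search just described can be sketched in Python as follows. This is a simplified illustration written for this section, not a transcription of Fig. 6 or of FDR's implementation: the LTS encoding, the function names and the example `rep_pair` (a representative function for a single type \(\{A,B\}\) that renames the first data value held in q to A) are all assumptions.

```python
from collections import deque

TAU = "tau"  # assumed name for the internal event


def check_trace_refinement(spec, init_p, impl, init_q, rep_pair=lambda p, q: (p, q)):
    """Breadth-first search of the (reduced) product automaton.

    spec: p -> {event: p'} (normalised); impl: q -> [(event, q')].
    Returns (violation, seen): violation is None if the refinement holds,
    else a triple (p, q, a) where q can perform a but p cannot.
    """
    init = rep_pair(init_p, init_q)
    seen, pending = {init}, deque([init])
    while pending:
        p, q = pending.popleft()
        for a, q2 in impl.get(q, ()):
            if a == TAU:
                p2 = p
            elif a in spec.get(p, {}):
                p2 = spec[p][a]
            else:
                return (p, q, a), seen
            nxt = rep_pair(p2, q2)  # the only change from the standard search
            if nxt not in seen:
                seen.add(nxt)
                pending.append(nxt)
    return None, seen


def rep_pair(p, q):
    """Hypothetical representative function for states that are tuples over {A, B}."""
    swap = {"A": "B", "B": "A"}
    data = [v for v in q if v in swap]
    pi = swap if data and data[0] == "B" else {}
    apply = lambda s: tuple(pi.get(v, v) for v in s)
    return apply(p), apply(q)


# Specification and implementation: a one-place buffer over {A, B}.
spec = {("E",): {"in.A": ("F", "A"), "in.B": ("F", "B")},
        ("F", "A"): {"out.A": ("E",)}, ("F", "B"): {"out.B": ("E",)}}
impl = {("empty",): [("in.A", ("full", "A")), ("in.B", ("full", "B"))],
        ("full", "A"): [("out.A", ("empty",))],
        ("full", "B"): [("out.B", ("empty",))]}
# A symmetric but faulty implementation that may output either value.
faulty = dict(impl)
faulty[("full", "A")] = [("out.A", ("empty",)), ("out.B", ("empty",))]
faulty[("full", "B")] = [("out.B", ("empty",)), ("out.A", ("empty",))]

plain_result, plain_seen = check_trace_refinement(spec, ("E",), impl, ("empty",))
sym_result, sym_seen = check_trace_refinement(spec, ("E",), impl, ("empty",), rep_pair)
bad_result, _ = check_trace_refinement(spec, ("E",), faulty, ("empty",), rep_pair)
```

On this example both searches confirm the refinement, but the reduced search visits two states instead of three, since the two full states are mapped to a single representative.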

3.5 Counterexample generation

When FDR detects that a refinement assertion does not hold, it presents the user with an informative counterexample that explains why it does not hold. For example, if \(P \sqsubseteq _T Q\) fails, then the counterexample is of the form \(tr\frown \langle a\rangle \) where \(tr \in traces(P) \cap traces(Q)\), but \(tr\frown \langle a\rangle \in traces(Q)\setminus traces(P)\). FDR also allows the user to discover the contribution that each component makes to the counterexample.

Whenever FDR finds a new state, it records its predecessor state in the exploration. This allows FDR to construct the path followed through the reduced product automaton, i.e. a sequence of states \(({\hat{p}}_0, \hat{q}_0), (\hat{p}_1, \hat{q}_1), \ldots (\hat{p}_n, \hat{q}_n)\). However, it does not record the labels of the transitions, nor the permutations used to produce representatives, in order to reduce memory usage. In order to produce the counterexample, it needs to find the corresponding path through the standard product automaton, together with the labels of transitions. The construction is illustrated in Fig. 7. Below we write \((p,q) \sim _\pi (p',q')\) for \(\pi (p) = p' \wedge q \sim _\pi q'\).

The path in the standard product construction starts at the initial state \((p_0,q_0) = (init_P, init_Q)\). FDR can calculate the permutation \(\pi _0\) such that \((p_0,q_0) \sim _{\pi _0} (\hat{p}_0, \hat{q}_0) = rep(p_0,q_0)\).

Suppose, inductively, we have a state \((p_i,q_i)\) of the standard product automaton and a permutation \(\pi _i\) such that \((p_i,q_i) \sim _{\pi _i} (\hat{p}_i, \hat{q}_i)\). FDR needs to find b, \((p_{i+1}, q_{i+1})\) and \(\pi _{i+1}\) such that \((p_{i}, q_{i}) \xrightarrow {b}_S (p_{i+1}, q_{i+1})\) and \((p_{i+1}, q_{i+1}) \sim _{\pi _{i+1}} (\hat{p}_{i+1}, \hat{q}_{i+1})\).

Consider the transition \((\hat{p}_i, \hat{q}_i) \xrightarrow { }_R (\hat{p}_{i+1}, \hat{q}_{i+1})\) in the reduced product automaton. FDR searches over the transitions of \((\hat{p}_i,\hat{q}_i)\) in the standard product automaton to find a transition \((\hat{p}_i,\hat{q}_i) \xrightarrow {a_i}_S (p_{i+1}',q_{i+1}')\) such that \(rep(p_{i+1}',q_{i+1}') = (\hat{p}_{i+1}, \hat{q}_{i+1})\). Then \((\hat{p}_i,\hat{q}_i) \xrightarrow {a_i}_R (\hat{p}_{i+1},\hat{q}_{i+1})\) by construction of the reduced automaton. Also, since \((p_i,q_i) \sim _{\pi _i} (\hat{p}_i, \hat{q}_i)\), we have \((p_i,q_i) \xrightarrow {\pi _i^{-1}(a_i)}_S (p_{i+1}, q_{i+1})\) for some \((p_{i+1}, q_{i+1})\) such that \((p_{i+1}, q_{i+1})\sim _{\pi _i} (p_{i+1}', q_{i+1}')\). Let \(\pi _i'\) be such that \((p_{i+1}',q_{i+1}') \sim _{\pi _i'} (\hat{p}_{i+1},\hat{q}_{i+1})\). (The permutation \(\pi _i'\) is the one used by the representative function rep, so is easily found.) Then letting \(\pi _{i+1} = \pi _i ; \pi _i'\), we have \((p_{i+1},q_{i+1}) \sim _{\pi _{i+1}} (\hat{p}_{i+1}, \hat{q}_{i+1})\), as required.

Continuing in this way, we can construct the path corresponding to the counterexample through the standard product automaton. This algorithm works efficiently in practice.

4 Symmetric datatypes

\(\hbox {CSP}_M\) scripts are often defined using symbolic datatypes and are symmetric in subtypes of one or more such datatypes. The linked list-based example of the Introduction makes use of a datatype definition of the form

The resulting system is symmetric in the subtype excluding the special value Null.

We consider disjoint sets \(T_1\),...,\(T_N\), where each \(T_i\) is a subset of a datatype \({{\hat{T}}}_i\) and contains only atomic values. We call the \(T_i\) distinguished subtypes and the \({{\hat{T}}}_i\) distinguished supertypes. In the running example, the system is symmetric in the subtype NodeID of “real” node identities, but not in the containing supertype NodeIDType, which includes the special value Null. Likewise it is symmetric in the type Data and the type ThreadID. Let \({\mathcal {T}}= \bigcup _{i=1}^N T_i\). For the rest of this section, let \(\pi \in Sym({\mathcal {T}})\) be a type-preserving permutation on \({\mathcal {T}}\), i.e. for each i, \(\pi \) maps values of type \(T_i\) to \(T_i\).

We assume a well-typed script. Below we will show that, subject to certain assumptions—mainly that the CSP script contains no constants from \({\mathcal {T}}\)—the value of every expression is symmetric in those types.

We sketch the semantics of \(\hbox {CSP}_M\). As described in Sect. 1.1, \(\hbox {CSP}_M\) is a large language, with a powerful functional sub-language. We omit the full details here and refer the interested reader to [15]. We use an environment mapping identifiers (variables) to values. We write \(\rho \), \(\rho '\), etc., for environments. The type Expr represents expressions, including those that correspond to both processes and nonprocess values. The semantics of expressions is defined using a function \(\mathsf{eval}\) such that \(\mathsf{eval}\rho ~e\) gives the value of expression e in environment \(\rho \). In particular, when \(\mathsf{eval}\) is applied to an expression that represents a process, it will return the corresponding LTS, augmented as follows. For later convenience, we label each state with the corresponding syntactic expression (or control state) and environment. We define a label to be a pair \((P,\rho )\) where P is a syntactic expression and \(\rho \) is an environment.

Definition 22

An augmented LTS is an LTS where each state is given a label, as above.

Thus \(\mathsf{eval}\rho ~P\) will give an LTS whose root node has label \((P,\rho )\); equivalently, a node with label \((P,\rho )\) has semantics equal to \(\mathsf{eval}\rho ~P\). For brevity, we will sometimes identify a state with its label.

Let \(\pi \) be a type-preserving permutation on \({\mathcal {T}}\). We extend \(\pi \) to other values in the obvious way; for example:

-

For values x not depending on \({\mathcal {T}}\) we have \(\pi (x) = x\).

-

We lift \(\pi \) to dotted values, including events, by \(\pi (v_1.\ldots .v_n) = \pi (v_1).\ldots .\pi (v_n)\).

-

We lift \(\pi \) to tuples, sets, sequences, maps and values from datatypes by point-wise application.

-

We lift \(\pi \) to functions, considered as sets of maplets, by \(\pi (f) = \{ \pi (x) \mapsto \pi (y) \mid x \mapsto y \in f \}\); equivalently, in terms of a lambda abstraction, \(\pi (f) = \lambda z \cdot \pi (f(\pi ^{-1}(z)))\).

-

We lift \(\pi \) to labels by \(\pi (P,\rho ) = (P,\pi \mathbin {\scriptstyle \circ }\rho )\).

-

We lift \(\pi \) to augmented LTSs by application of \(\pi \) to the events of the transitions and to the labels of states.

Note that if L is an augmented LTS, then \(L \sim _\pi \pi (L)\).

Note that this lifting of \(\pi \) forms a bijection on Value.
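The lifting can be illustrated by a short recursive function in Python. The encoding is an assumption made for this sketch: tuples stand for tuples and dotted values, frozensets for sets, and dictionaries for maps, finite functions and environments; atomic values outside \({\mathcal {T}}\) are simply absent from the permutation and so are left fixed.

```python
def lift(pi, value):
    """Apply the atomic permutation `pi` (a dict) point-wise to a nested value."""
    if isinstance(value, tuple):
        return tuple(lift(pi, v) for v in value)
    if isinstance(value, frozenset):
        return frozenset(lift(pi, v) for v in value)
    if isinstance(value, dict):
        # A finite function as a set of maplets: permute both sides of each maplet.
        return {lift(pi, k): lift(pi, v) for k, v in value.items()}
    return pi.get(value, value)  # atomic value


pi = {"N0": "N1", "N1": "N0"}
inv = {y: x for x, y in pi.items()}

# A hypothetical function from node identities to (next node, datum) pairs.
f = {"N0": ("N1", "A"), "N1": ("Null", "B")}
pi_f = lift(pi, f)

# An environment rho is lifted in the same way, giving pi composed with rho.
rho = {"top": "N0", "nodes": frozenset({"N0", "N1"})}
pi_rho = lift(pi, rho)
```

The example function can be used to check, on this small instance, that the maplet view and the lambda-abstraction view \(\lambda z \cdot \pi (f(\pi ^{-1}(z)))\) agree. In `pi_rho` the identifiers themselves are unchanged, because they are not values from \({\mathcal {T}}\).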

The following definition captures our main assumption about the CSP script.

Definition 23

A CSP script is constant-free for \({\mathcal {T}}\) if

-

1.

The only constants from \({\mathcal {T}}\) that appear are within the definition of the distinguished types constituting \({\mathcal {T}}\) itself.

-

2.

The script makes no use of the built-in functions seq, mapToList, mtransclose or show, or the compression functions deter, chase or chase_nocache [39].

Clearly, processes that use constants from \({\mathcal {T}}\) might not be symmetric: for example, \(c!A \rightarrow STOP\), where \(A \in {\mathcal {T}}\), is not symmetric in \({\mathcal {T}}\).

The built-in functions listed in item 2 can be used to introduce constants from \({\mathcal {T}}\) and so can break symmetry. For example, seq(s) converts the set s into a sequence (in an implementation-dependent way), so x = head(seq(T)) (where T is a distinguished subtype) effectively sets x to be a constant from T.

The compression functions in item 2 prune an LTS by removing transitions according to certain rules (but in an implementation-dependent way). Thus they can also break symmetry. For example, each of them could convert the LTS corresponding to  into the LTS corresponding to \(c!A \rightarrow STOP\), for an arbitrary \(A \in T\).

The following is the main result of this section.

Proposition 24

Suppose a script is constant-free for \({\mathcal {T}}\), and let \(\pi \) be a type-preserving permutation on \({\mathcal {T}}\).

-

1.

The set of events is closed under \(\pi \): \(\pi ({\varSigma ^{\tau \surd }}) = {\varSigma ^{\tau \surd }}\). And likewise the set of values in each datatype is closed under \(\pi \).

-

2.

For every expression e in the script (which could correspond to a process or a nonprocess value), and every environment \(\rho \),

$$\begin{aligned} \pi (\mathsf{eval}\rho ~ e)= & {} \mathsf{eval}(\pi \mathbin {\scriptstyle \circ }\rho ) e . \end{aligned}$$

Proof

(sketch). The proof proceeds by a large structural induction over the syntax of \(\hbox {CSP}_M\); it includes subsidiary results concerning other aspects of \(\hbox {CSP}_M\), namely pattern matching, binding of variables, declarations and generators and qualifiers of set or sequence comprehensions. The proof is in [15]. \(\square \)

Corollary 25

Suppose a script is constant-free for \({\mathcal {T}}\), and let \(\pi \) be a type-preserving permutation on \({\mathcal {T}}\). Let \(\rho _1\) be the environment formed from the top-level declarations in the script. Then for every expression e in the script,

$$\begin{aligned} \pi (\mathsf{eval}\rho _1~ e) = \mathsf{eval}\rho _1~ e . \end{aligned}$$

Proof

This follows immediately from Proposition 24, since \(\pi \mathbin {\scriptstyle \circ }\rho _1 = \rho _1\). \(\square \)

For example, this corollary shows that the LTSs representing the specification and implementation processes of each refinement check are \(\pi \)-symmetric.

The above results show that structural symmetry induces operational symmetry: when a system is constructed in a way that treats a family of processes symmetrically—as is required by the constant-free condition—then the induced LTS is symmetric in the identities of those processes. Further, when a system uses data with no distinguished values, then the induced LTS is symmetric in the type of that data.

We have extended FDR4 based on the above proposition. FDR can identify the largest subtype of a type for which the script is constant-free. Alternatively it can check that the script is indeed constant-free for a particular type, giving an informative error if not.

Related work. Previous approaches to symmetry reduction have been based on a much smaller language, with less support for complex datatypes, often performing symmetry reduction only on atomic values held in shared variables. Leuschel et al. [25] consider symmetry within the context of B, which includes deferred sets (similar to our distinguished types) together with tuples and sets; they prove a result similar to ours, in particular that the values of all invariants (predicates) are preserved by permutations of deferred types. Ip and Dill [19] and Bošnački et al. [3] also provide support for shared variables that store records and arrays; Ip and Dill give restrictions that ensure that elements of their symmetric types (called scalarsets) are treated symmetrically. Junttila provides support for lists, records, sets, multisets, associative arrays and union types. Each of these approaches is equivalent to a sub-language of the functional sub-language of \(\hbox {CSP}_M\).

Donaldson and Miller [10] consider a language that allows processes to hold the identities of other processes, but not more complex datatypes.

To our knowledge, no other approach to symmetry reduction supports such a powerful and convenient language, supporting both a wide range of datatypes and the ability for processes to hold values based on symmetric types.

5 Symmetry reduction on supercombinators

The algorithm in Sect. 3 was at a fairly high level of abstraction. We now consider how to implement it within the context of FDR.

Internally, FDR uses a powerful and efficient implicit representation of an LTS, called a supercombinator [14]. It consists of some component LTSs, along with rules that describe how transitions of the components should be combined to give transitions of the whole system: FDR automatically determines which processes should be modelled as component LTSs, and automatically builds corresponding rules. This allows the process to be model checked on the fly, without explicitly constructing the whole state space. Supercombinators are generally applicable for modelling systems built from a number of components.

In the running example, FDR would create an LTS for each of the threads, each of the nodes, the lock and the top variable. FDR would then automatically build rules for the supercombinator that would combine the transitions of these LTSs, corresponding to the way the processes are combined using parallel composition and hiding. Strictly speaking, this is just one possible choice, since FDR uses various heuristics to optimise supercombinators, but it is the most natural choice. Each rule combines transitions of a subset of the components and determines the event the supercombinator performs.

In this section, in the interest of exposition, we give a slightly simplified version of supercombinators, which is adequate to deal with the vast majority of examples, in particular systems built from a number of sequential components, combined using a combination of parallel composition, hiding and renaming. In the appendix, we generalise and give the full definition of supercombinators, which can represent arbitrary CSP combinations of processes.

Below, we formally define supercombinators and the induced LTS. We then prove a result that identifies circumstances under which two supercombinators induce \(\pi \)-bisimilar LTSs, where \(\pi \) is an event permutation.

Let − be a value, not in \({\varSigma ^{\tau \surd }}\); we use it to denote a process performing no event. Let \(\varSigma ^- = {\varSigma ^{\tau \surd }}\cup \{-\}\).

Definition 26

A simplified supercombinator is a pair \(({\mathcal {L}}, {\mathcal {R}})\) where

- \({\mathcal {L}} = \langle L_1, \ldots , L_n\rangle \) is a sequence of component LTSs;

- \({\mathcal {R}}\) is a set of supercombinator rules \((e, a)\) where \(e \in (\varSigma ^-)^n\) specifies the action each component must perform, where − indicates that it performs none; and \(a \in {\varSigma ^{\tau \surd }}\) is the event the supercombinator performs.

Given a supercombinator, a corresponding LTS can be constructed.

Definition 27

Let \({\mathcal {S}} = (\langle L_1, \ldots , L_n\rangle , {\mathcal {R}})\) be a supercombinator where \(L_i = (S_i, \varDelta _i, init_i)\). The LTS induced by \({\mathcal {S}}\) is the LTS \((S, \varDelta , init)\) such that:

- States are tuples consisting of the state of each component: \(S \subseteq S_1 \times \cdots \times S_n\).

- The initial state is the tuple containing the initial states of each of the components:

$$\begin{aligned} init= & {} (init_1, \ldots , init_n). \end{aligned}$$

- The transitions correspond to the supercombinator rules firing. Let

$$\begin{aligned} \sigma = (s_1, \ldots , s_n), \qquad \sigma ' = (s'_1, \ldots , s'_n). \end{aligned}$$

Then \((\sigma , a, \sigma ') \in \varDelta \) iff there exists \(((b_1, \ldots , b_n),a) \in \mathcal {R}\) such that for each component i: if \(b_i \ne -\) then \(s_i \xrightarrow {b_i}_i s_i'\); and if \(b_i = -\) then \(s_i' = s_i\); i.e. component i performs \(b_i\), or does nothing if \(b_i = \mathord -\).
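The transition relation of Definition 27 can be sketched as a successor function. This is a minimal illustration only, not FDR's internal representation: the names `successors` and `NONE`, the use of strings for events, and the encoding of each component LTS as a dictionary from local states to sets of (event, next state) pairs are all assumptions made for the example.

```python
from itertools import product

NONE = "-"  # stands for the value "−": the component performs no event


def successors(components, rules, state):
    """Yield (event, next_state) pairs of the induced LTS from `state`.

    components[i] maps a local state of component i to a set of
    (event, next_local_state) pairs (hypothetical encoding).
    rules is a collection of pairs (e, a), where e is an n-tuple over
    events and NONE, and a is the event the supercombinator performs.
    """
    for e, a in rules:
        options = []
        for i, b in enumerate(e):
            if b == NONE:
                # Component i does nothing, so its local state is unchanged.
                options.append([state[i]])
            else:
                # Component i must perform b from its current local state.
                moves = components[i].get(state[i], ())
                options.append([t for (ev, t) in moves if ev == b])
        # The rule fires once for each combination of component moves;
        # if some component cannot perform its event, nothing is produced.
        for nxt in product(*options):
            yield a, nxt


# Two components that synchronise on "a", with the result hidden as "tau".
comps = [{"p0": {("a", "p1")}}, {"q0": {("a", "q1"), ("tau", "q0")}}]
rules = [(("a", "a"), "tau"), ((NONE, "tau"), "tau")]
reachable_in_one_step = set(successors(comps, rules, ("p0", "q0")))
```

Because successors are generated from the rules and the current tuple of local states, the product state space never needs to be built in advance, which matches the on-the-fly exploration described above.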

It is straightforward to define supercombinators corresponding to most CSP operators, including parallel composition, hiding and renaming, and to compose supercombinators hierarchically so as to define a single supercombinator for a system. The following example illustrates the ideas.

Example 28

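Consider the system \(\bigl (\parallel _{t \in T}\, [A(t)]\; P(t)\bigr ) \setminus X\). Let \(T = \{t_1,\ldots ,t_n\}\) and let the LTS for \(P(t_i)\) be \(L_i\), for each i. Then a possible supercombinator for this system would be

$$\begin{aligned} (\langle L_1, \ldots , L_n\rangle , {\mathcal {R}}) \end{aligned}$$

where

$$\begin{aligned} {\mathcal {R}}= & {} \{(e_a, \hbox {if } a \in X \hbox { then } \tau \hbox { else } a) \mid a \in \textstyle \bigcup _{i} A(t_i)\} \\&\cup \; \{(e, \tau ) \mid \exists j \cdot e(j) = \tau \wedge \forall i \ne j \cdot e(i) = -\}, \\ e_a(i)= & {} \hbox {if } a \in A(t_i) \hbox { then } a \hbox { else } -. \end{aligned}$$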

Each rule in the first set corresponds to a synchronisation on event a between all processes \(P(t_i)\) such that \(a \in A(t_i)\); that event a is then replaced by \(\tau \) if \(a \in X\). Each rule in the second set corresponds to the system performing \(\tau \) as a result of \(P(t_j)\) performing \(\tau \).
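The two rule sets described above can be generated mechanically. The following is a small sketch under stated assumptions: events are plain strings, `"tau"` stands for \(\tau \), `NONE` stands for −, and the function name `parallel_hide_rules` is hypothetical. It follows the prose description of the rules, and is not taken from FDR.

```python
NONE = "-"   # the component performs no event
TAU = "tau"  # the internal event


def parallel_hide_rules(alphabets, hidden):
    """Build the rule set for Example 28.

    alphabets[i] is the synchronisation alphabet A(t_i) of component i;
    hidden is the hidden set X.
    """
    n = len(alphabets)
    rules = set()
    # First set: every component whose alphabet contains a synchronises
    # on a; the resulting event is tau if a is hidden, and a otherwise.
    for a in set().union(*alphabets):
        e = tuple(a if a in alphabet else NONE for alphabet in alphabets)
        rules.add((e, TAU if a in hidden else a))
    # Second set: a single component performs tau on its own.
    for j in range(n):
        e = tuple(TAU if i == j else NONE for i in range(n))
        rules.add((e, TAU))
    return rules


example_rules = parallel_hide_rules([{"a", "b"}, {"b", "c"}], hidden={"b"})
```

Here the shared event `"b"` yields a rule in which both components move and the system performs `"tau"`, while `"a"` and `"c"` each involve only one component.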

5.1 Symmetries between supercombinators

We now consider symmetries between supercombinators, and how these correspond to symmetries between the corresponding LTSs. We are mainly interested in showing that the supercombinator corresponding to the implementation process in a refinement check is symmetric, i.e. \(\pi \)-bisimilar to itself for every event permutation \(\pi \) in some group G. However, we want to do this without creating that complete LTS: instead we just perform checks on the components and rules. The following definition captures the relevant properties.

For the remainder of this section, fix an event permutation group \(G \le EvSym \). Given a permutation \(\pi \in G\), we extend it to \(\varSigma ^-\) by defining \(\pi (-) = \mathord {-}\).

Definition 29

Consider a supercombinator \({\mathcal {S}} = ({\mathcal {L}}, {\mathcal {R}})\) with \(\mathcal {L} = \langle L_1, \ldots , L_n\rangle \). Let \(\pi \) be an event permutation, and let \(\alpha \) be a bijection from \(\{1,\ldots ,n\}\) to itself; we call \(\alpha \) a component bijection. We say that \({\mathcal {S}}\) is \(\pi \)-mappable to itself using \(\alpha \) if:

- for every i, \(L_i \sim _\pi L_{\alpha (i)}\);

- if \((e,a) \in {\mathcal {R}}\) then there is a rule \((e',\pi (a)) \in {\mathcal {R}}\) such that \(\forall i \in \{1,\ldots ,n\} \cdot e'(\alpha (i)) = \pi (e(i))\);

- if \((e',a) \in {\mathcal {R}}\) then there is a rule \((e,\pi ^{-1}(a)) \in {\mathcal {R}}\) such that \(\forall i \in \{1,\ldots ,n\} \cdot e'(\alpha (i)) = \pi (e(i))\).

The first item shows how to map \(L_i\) onto a \(\pi \)-bisimilar LTS \(L_{\alpha (i)}\). The latter two items say that \({\mathcal {R}}\) acts on each LTS \(L_i\) in the same way as it acts on \(L_{\alpha (i)}\), but with the latter’s events renamed under \(\pi \).

We sometimes write \(\alpha \) as \(\alpha _\pi \), to emphasise the event permutation \(\pi \).
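The two conditions on rules in Definition 29 amount to saying that the rule set is closed under the map that sends \((e,a)\) to \((e',\pi (a))\) with \(e'(\alpha (i)) = \pi (e(i))\), and that every rule arises as such an image. A minimal sketch of this check follows; it does not check the first condition (\(\pi \)-bisimilarity of the components). The names `image_rule` and `rules_mappable`, the 0-based component indices, and the dictionary encoding of \(\pi \) (events not listed are taken to be fixed) are assumptions of the example.

```python
NONE = "-"  # the component performs no event


def image_rule(rule, pi, alpha):
    """Return the rule (e', pi(a)) with e'(alpha(i)) = pi(e(i)).

    pi is a dict on events; events it does not mention are left fixed.
    alpha is a list giving the component bijection on indices 0..n-1.
    """
    e, a = rule
    image = [None] * len(e)
    for i, b in enumerate(e):
        image[alpha[i]] = NONE if b == NONE else pi.get(b, b)
    return tuple(image), pi.get(a, a)


def rules_mappable(rules, pi, alpha):
    """Check the second and third conditions of Definition 29."""
    rules = set(rules)
    # Second condition: every image is a rule. Third condition: every
    # rule is the image of some rule. Together: the two sets coincide.
    return {image_rule(r, pi, alpha) for r in rules} == rules


rules = {
    (("go.t0", NONE), "go.t0"),
    ((NONE, "go.t1"), "go.t1"),
    (("sync", "sync"), "sync"),
    (("tau", NONE), "tau"),
    ((NONE, "tau"), "tau"),
}
swap = {"go.t0": "go.t1", "go.t1": "go.t0"}
ok_with_swap = rules_mappable(rules, swap, [1, 0])
ok_with_identity = rules_mappable(rules, swap, [0, 1])
```

In this toy rule set, swapping the two components together with their events maps the rules onto themselves, whereas applying the event swap with the identity component bijection does not.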

Example 30

Consider, again, the process \(\bigl (\parallel _{t \in T}\, [A(t)]\; P(t)\bigr ) \setminus X\) and its supercombinator from Example 28. Suppose, also, that the processes P(t) use data from some polymorphic type V, and that the script is constant-free for the types T and V.

Let \(\pi \) be an event permutation formed by lifting permutations on T and V. For each \(i \in \{1,\ldots ,n\}\), define \(\alpha _{\pi }(i) = j\) such that \(\pi (t_i) = t_j\); note that such a j exists, and that \(\alpha _\pi \) is a bijection. Proposition 24 then tells us that

- the LTSs for \(P(t_i)\) and \(P(\pi (t_i))\) are \(\pi \)-bisimilar: \(L_i \sim _\pi L_{\alpha (i)}\);

- \(\pi (A(t_i)) = A(t_{\alpha (i)})\);

- \(\pi (X) = X\).

The second and third items can be used to show that the rule set satisfies the conditions of Definition 29. For example, given the rule \((e_a, \hbox {if } a \in X \hbox { then } \tau \hbox { else } a)\), we can consider the rule \((e_{\pi (a)}, \hbox {if } \pi (a) \in X \hbox { then } \tau \hbox { else } \pi (a))\), and show that, for each i,

$$\begin{aligned} e_{\pi (a)}(\alpha (i)) = \pi (e_a(i)), \end{aligned}$$

as required. Hence the supercombinator is \(\pi \)-mappable to itself using \(\alpha \).
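The component bijection \(\alpha _\pi \) of this example can be read off directly from the permutation on T. The sketch below assumes that component i (0-based) is the process for the i-th identity in a list, and that the permutation on T is given as a dictionary; the name `component_bijection` is hypothetical.

```python
def component_bijection(identities, type_perm):
    """Return alpha as a list with alpha[i] = j where pi(t_i) = t_j.

    identities lists t_1, ..., t_n in component order; type_perm is a
    permutation of those identities, given as a dict.
    """
    index = {t: i for i, t in enumerate(identities)}
    return [index[type_perm[t]] for t in identities]


identities = ["t1", "t2", "t3"]
rotate = {"t1": "t2", "t2": "t3", "t3": "t1"}
alpha = component_bijection(identities, rotate)
```

Since `type_perm` is a permutation of the identities, each index appears exactly once in the result, which reflects the observation above that \(\alpha _\pi \) is a bijection.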

We now show that \(\pi \)-mappable supercombinators induce \(\pi \)-bisimilar LTSs.

Lemma 31

Let \(\pi \) be an event permutation, and let \(\mathcal {S} = (\langle L_1, \ldots , L_n\rangle , {\mathcal {R}})\) be \(\pi \)-mappable to itself using component bijection \(\alpha \). Consider the relation \(\approx _\pi \) defined over states of the induced LTSs by

$$\begin{aligned} (s_1, \ldots , s_n) \approx _\pi (s'_1, \ldots , s'_n) \;\Leftrightarrow \; \forall i \in \{1,\ldots ,n\} \cdot s_i \sim _\pi s'_{\alpha (i)}. \end{aligned}$$

Then \(\approx _\pi \) is a \(\pi \)-bisimulation. Further, the initial states of the two LTSs are related by \(\approx _\pi \).

The proposition below follows easily from the above lemma.

Proposition 32

Suppose \({\mathcal {S}}\) is a supercombinator that is \(\pi \)-mappable to itself for every \(\pi \in G\). Then the induced LTS is G-symmetric.

We now show that the property of supercombinators being mappable is compositional in the obvious way.

Lemma 33

Consider a supercombinator \({\mathcal {S}} = ({\mathcal {L}}, {\mathcal {R}})\). Suppose \({\mathcal {S}}\) is \(\pi \)-mappable to itself using \(\alpha _\pi \) and is \(\pi '\)-mappable to itself using \(\alpha _{\pi '}\). Then \({\mathcal {S}}\) is \((\pi ;\pi ')\)-mappable to itself using \(\alpha _{\pi } ; \alpha _{\pi '}\).

Proof

Suppose \({\mathcal {L}}= \langle L_1,\ldots ,L_n\rangle \). Consider \(L_i\), \(L_{\alpha _\pi (i)}\) and \(L_{\alpha _{\pi '}(\alpha _\pi (i))}\). Then \(L_i \sim _\pi L_{\alpha _\pi (i)}\), and \(L_{\alpha _\pi (i)} \sim _{\pi '} L_{\alpha _{\pi '}(\alpha _\pi (i))}\). Hence \(L_i \sim _{\pi ; \pi '} L_{\alpha _{\pi '}(\alpha _\pi (i))}\).

Now consider the rules. Suppose \((e,a) \in {\mathcal {R}}\). Then there is a rule \((e',\pi (a)) \in {\mathcal {R}}\) such that \(e'(\alpha _\pi (i)) = \pi (e(i))\) for each \(i \in \{1,\ldots ,n\}\). But then there is a rule \((e'', (\pi ;\pi ')(a)) \in {\mathcal {R}}\) such that \(e''(\alpha _{\pi '}(i)) = \pi '(e'(i))\) for each i. Hence, for each i, \(e''((\alpha _\pi ;\alpha _{\pi '})(i)) = e''(\alpha _{\pi '}(\alpha _{\pi }(i))) = \pi '(e'(\alpha _\pi (i))) = (\pi ;\pi ')(e(i))\). The reverse condition is very similar. \(\square \)
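The calculation on rules in this proof can be checked on a concrete instance: mapping a rule under \(\pi \) and \(\alpha _\pi \), and then under \(\pi '\) and \(\alpha _{\pi '}\), gives the same rule as mapping it once under the forward compositions. The sketch below is illustrative only; `seq`, `map_rule`, the 0-based indices and the dictionary encodings are assumptions of the example.

```python
NONE = "-"  # the component performs no event


def seq(first, second):
    """Forward composition `first ; second`: apply first, then second."""
    return {x: second[first[x]] for x in first}


def map_rule(rule, pi, alpha):
    """Return (e', pi(a)) with e'(alpha(i)) = pi(e(i)).

    pi is a dict that is total on the events used; alpha is a dict on
    the component indices 0..n-1.
    """
    e, a = rule
    image = [None] * len(e)
    for i, b in enumerate(e):
        image[alpha[i]] = b if b == NONE else pi[b]
    return tuple(image), pi[a]


rule = (("a0", NONE, NONE), "a0")

# pi rotates the three events and components; pi2 swaps the first two.
pi = {"a0": "a1", "a1": "a2", "a2": "a0"}
alpha = {0: 1, 1: 2, 2: 0}
pi2 = {"a0": "a1", "a1": "a0", "a2": "a2"}
alpha2 = {0: 1, 1: 0, 2: 2}

two_steps = map_rule(map_rule(rule, pi, alpha), pi2, alpha2)
one_step = map_rule(rule, seq(pi, pi2), seq(alpha, alpha2))
```

The agreement of `two_steps` and `one_step` is the identity \(e''((\alpha _\pi ;\alpha _{\pi '})(i)) = (\pi ;\pi ')(e(i))\) from the proof, instantiated on one rule.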

6 Identifying symmetries and applying permutations in supercombinators

In the last section we described sufficient conditions (\(\pi \)-mappability) for the LTS induced by a supercombinator to be \(\pi \)-symmetric. We now build on this for systems with symmetric datatypes. Let \({\mathcal {T}}\) be a collection of datatypes. Throughout this section we assume a constant-free script for \({\mathcal {T}}\). Let \(\pi \) be an event permutation formed from lifting a type-preserving permutation on \({\mathcal {T}}\) to events; we write \(EvSym({\mathcal {T}})\) for the set of all such event permutations. Let \({\mathcal {S}}_{impl}\) be the supercombinator for the implementation. In Sect. 6.1, we explain how we verify algorithmically that \({\mathcal {S}}_{impl}\) is \(\pi \)-mappable to itself, for every \(\pi \in EvSym({\mathcal {T}})\), and describe pre-calculations that help in subsequent calculations of component bijections. We explain how to actually apply an event permutation to a state of a supercombinator in Sect. 6.2.

6.1 Checking mappability

In this section we explain how we verify that the supercombinator for the implementation process is \(\pi \)-mappable to itself for every \(\pi \in EvSym({\mathcal {T}})\); by Proposition 32, this will mean that it is \(EvSym({\mathcal {T}})\)-symmetric. Assuming a constant-free script, FDR will nearly always produce such a supercombinator. (Recall that Corollary 25 talks about the LTS corresponding to the implementation process, rather than the supercombinator that represents that LTS.) However, it is not feasible to prove that FDR will always produce such a supercombinator: FDR uses various heuristics to decide how to construct supercombinators, which makes it too complex to reason about directly. We are also aware of a corner case where FDR does not (currently) produce a mappable supercombinator. We therefore verify mappability for each supercombinator generated. If the supercombinator turns out not to be mappable, our approach fails, and we give up (but we have never known this to happen on a non-contrived example).

We also describe some pre-calculations we make concerning component bijections, for use when exploring the product automaton. Note that we want to avoid pre-calculating and storing all such component bijections, since there are simply too many; instead, we calculate enough information for them to be calculated efficiently subsequently.