Abstract

We develop a family of efficient plane-sweeping interval join algorithms for evaluating a wide range of interval predicates such as Allen’s relationships and parameterized relationships. Our technique is based on a framework, components of which can be flexibly combined in different manners to support the required interval relation. In temporal databases, our algorithms can exploit a well-known and flexible access method, the Timeline Index, thus expanding the set of operations it supports even further. Additionally, employing a compact data structure, the gapless hash map, we utilize the CPU cache efficiently. In an experimental evaluation, we show that our approach is several times faster and scales better than state-of-the-art techniques, while being much better suited for real-time event processing.

1 Introduction

Temporal data are found in many financial, business, and scientific applications running on top of database management systems (DBMSs), so supporting these applications through efficient temporal operator implementations is crucial. For example, Kaufmann states that there are several temporal queries among the hundred most expensive queries executed on SAP ERP [23], many of which have to be implemented in the application layer, as the underlying infrastructure does not directly support the processing of temporal data. According to [23], customers of SAP desperately need (advanced) temporal operators for efficiently running queries pertaining to legal, compliance, and auditing processes.

Although the introduction of temporal operators into the SQL standard has started with SQL:2011 [29], current implementations are far from complete or lacking in performance. There is a renewed interest in temporal data processing, and researchers and developers are busy filling the gaps. An example is join operators involving temporal predicates: there are several recent publications on overlap interval joins [8, 14, 36]. However, this is not the only possible join predicate for matching (temporal) intervals. Allen defined a set of binary relations between intervals originally designed for reasoning about intervals and interval-based temporal descriptions of events [2]. These relations have been extended for event detection by parameterizing them [20]. Strictly speaking, all these relationships could be formulated in regular SQL WHERE clauses (see also the right-hand column of Table 1 for a formal definition of Allen’s relations and extensions). The evaluation of these predicates using the implementation present in contemporary relational database management systems (RDBMSs) would be very inefficient, though, as a lot of inequality predicates are involved [26]. We also note that the predicates in the aforementioned overlap interval joins ([8, 14, 36]) only check for any form of overlap between intervals. Basically, they do not distinguish between many of the relationships defined by Allen and do not cover the before and meets relations at all. Additionally, many of the approaches so far lack parameterized versions, in which further range-based constraints can be formulated directly in the join predicate.

In Fig. 1 we see an example relation showing which employees (\(r_1\), \(r_2\), \(r_3\), and \(r_4\)) were working on a certain project during which month. The tuple validity intervals (visualized as line segments) are as follows: tuple \(r_1\) is valid from time 0 to time 2 (exclusive) with a starting time stamp \(T_s = 0\) and an ending time stamp \(T_e = 2\), tuple \(r_2\) is valid on interval [0, 5) with \(T_s = 0\) and \(T_e = 5\), and so on. With a simple overlap interval join we can merely detect that \(r_1\) and \(r_2\) worked together on the project for some time (as did \(r_2\) and \(r_3\)). However, we may also be interested in who started working at the same time (\(r_1\) and \(r_2\)), who started working after someone had already left (\(r_3\) coming in after \(r_1\) had left and \(r_4\) starting after everyone else had left), or even who took over from someone else, i.e., the ending of one interval coincides with the beginning of another one (\(r_3\) and \(r_4\)). For even more sophisticated queries, we may want to add thresholds: who worked together with someone else and then left the project within two months of the other person (\(r_3\) and \(r_2\)).

Allen’s relationships are not only used in temporal databases, but also in event detection systems for temporal pattern matching [20, 27, 28]. In this context, it is also important to be able to specify concrete time frames within which certain patterns are encountered, introducing the need for parameterized versions of Allen’s relationships. For instance, Körber et al. use their TPStream framework for analyzing real-time traffic data [27, 28], while we previously employed a language called ISEQL to specify events in a video surveillance context [20]. Event detection motivated us to develop an approach that is also applicable for event stream processing environments, meaning that our join operators are non-blocking and produce output tuples as early as logically possible, without necessarily waiting for the intervals to finish. Moreover, we demonstrate how these joins can be processed efficiently in temporal databases using a sweeping-based framework that is supported by cache-efficient data structures and by a Timeline Index—a flexible and general temporal index—supporting a wide range of temporal operators, used in a prototype implementation of SAP HANA [25]. We therefore extend the set of operations Timeline Index supports, increasing its usefulness even further.

In particular, we make the following contributions:

- We develop a family of plane-sweeping interval join algorithms that can evaluate a wide range of interval relationship predicates going even beyond Allen’s relations.

- At the core of this framework sits one base algorithm, called interval-time stamp join, that can be parameterized using a set of iterators traversing a Timeline Index. This offers an elegant way of configuring and adapting the base algorithm for processing different interval join predicates, improving code maintainability.

- Additionally, our algorithm utilizes the CPU cache efficiently, relying on a compact hash map data structure for managing data during the processing of the join operation. Together with the index, in many cases we can achieve linear run time.

- In an experimental evaluation, we show that our approach is faster than state-of-the-art methods: an order of magnitude faster than a direct competitor and several orders of magnitude faster than an inequality join.

2 Related work

There is a renewed interest in employing Allen’s interval relations in different areas, e.g., for describing complex temporal events in event detection frameworks [20, 27, 28] as well as for querying temporal relationships in knowledge graphs via SPARQL [11]. One reason is that it is more natural for humans to work with chunks of information, such as labeled intervals, rather than individual values [21].

2.1 Allen’s interval relations joins

Leung and Muntz worked on efficiently implementing joins with predicates based on Allen’s relations in the 1990s [30, 31], and it turns out that their solution is still competitive today. In fact, they also apply a plane-sweeping strategy, but impose a total order on the tuples of a relation. Theoretically, there are four different orders tuples can be sorted in for this algorithm: \(T_s\) ascending, \(T_s\) descending, \(T_e\) ascending, and \(T_e\) descending. When joining two relations, they can be sorted in different orders independently of each other.

The actual algorithm is similar to a sort-merge join. A tuple is read from one of the relations (outer or inner) and placed into the corresponding set of active tuples for that relation. Each tuple in the active set of the other relation is then checked against the tuple that was just read. When a matching pair is found, it is transferred to the result set. While searching for matching tuples, the algorithm also performs a garbage collection, removing tuples that will no longer be able to find a matching partner. (Not all join predicates and sort orders allow for a garbage collection, though.) A heuristic, based on tuple distribution and garbage collection statistics, decides from which relation to read the next tuple. In a follow-up paper, further strategies for parallelization and temporal query processing are discussed [32].

In contrast to our approach, in which we handle tuple starting and ending events separately (an idea also covered more generally in [15, 33, 39]), the algorithm of Leung and Muntz requires streams of whole tuples. A tuple is not complete until its ending endpoint \(T_e\) is encountered. This has a major impact for applications such as real-time event detection. Waiting for a tuple to finish can delay the whole joining process, as tuples following it in the sort order cannot be reported yet.

Chekol et al. claim to cover the complete set of Allen’s relations in their join algorithm for intervals in the context of SPARQL, but the description for some relations is missing [11]. It seems they are using our algorithm from [36] as a basis. They are not able to handle parameterized versions and have to create different indexes for different relations, though.

There is also research on integrating Allen’s predicate interval joins in a MapReduce framework [10, 37]. However, these approaches focus on the effective distribution of the data over MapReduce workers rather than on effective joins.

2.2 Overlap interval joins

One of the earliest publications to look at performance issues of temporal joins is by Segev and Gunadhi [18, 38], who compare different sort-merge and nested-loop implementations of their event join. They refined existing algorithms by applying an auxiliary access method called an append-only tree, assuming that temporal data are only appended to relations and never updated or deleted.

Some of the work on spatial joins can also be applied to interval joins. Arge et al. [4] used a sweeping-based interval join algorithm as a building block for a two-dimensional spatial rectangle join, but did not investigate it as a standalone interval join. It was picked up again by Gao et al. [16], who give a taxonomy of temporal join operators and provide a survey and an empirical study of a considerable number of non-index-based temporal join algorithms, such as variants of nested-loop, sort-merge, and partitioning-based methods.

The fastest partitioning join, the overlap interval partitioning (OIP) join, was developed by Dignös et al. [14]. The (temporal) domain is divided into equally-sized granules and adjacent granules can be combined to form containers of different sizes. Intervals are assigned to the smallest container that covers them and the join algorithm then matches intervals in overlapping containers.

The Timeline Index, introduced by Kaufmann et al. [25], and the supported temporal operators have also received renewed attention recently. Kaufmann et al. showed that a single index per temporal relation supports such operations as time travel, temporal joins, overlap interval joins and temporal aggregation on constant intervals (temporal grouping by instant). The work was done in the context of developing a prototype for a commercial temporal database and later extended to support bitemporal data [24].

In earlier work [36], we defined a simplified, but functionally equivalent version of the Timeline Index, called Endpoint Index (from now on we will use both terms interchangeably). We introduced a cache-optimized algorithm for the overlap interval join, based on the Endpoint Index, and showed that the Timeline Index is not only a universal index that supports many operations, but that it can also outperform the state-of-the-art specialized indexes (including [14]). The technique of sweeping-based algorithms has recently been applied to temporal aggregation as well [9, 35], extending the set of operations supported by the Timeline Index even further.

The basic idea of Bouros and Mamoulis is to do a forward scan on the input collection to determine the join partners; hence, their algorithm is called forward scan (FS) join [8]. In contrast, our approach does a backward scan by traversing already encountered intervals, which have to be stored in a hash table. In FS, both input relations, \(\varvec{r}\) and \(\varvec{s}\), are sorted on the starting endpoint of each interval and then the algorithm sweeps through the endpoints of \(\varvec{r}\) and \(\varvec{s}\) in order. Every time it encounters an endpoint of an interval, it scans the other relation and joins the interval with all matching intervals. Bouros and Mamoulis introduce a couple of optimizations to improve the performance. First, consecutively swept intervals are grouped and processed in batch (this is called gFS). Second, the (temporal) domain is split into tiles and intervals starting in such a tile are stored in a bucket associated with this tile. While scanning for join partners for a tuple r, all intervals in buckets corresponding to tiles that are completely covered by the interval of r can be joined without further comparisons. Combined with the previous technique, this results in a variant called bgFS. Doing a forward scan or a backward scan has certain implications. Introducing their optimizations to FS, Bouros and Mamoulis showed that forward scanning is on a par with backward scanning, without the need for a data structure to store already encountered tuples. However, there is also a downside: forward scanning needs to have access to the complete relations to work, while backward scanning considers only already encountered endpoints, i.e., backward scanning can be utilized in a streaming context (for forward scanning this is not possible). We take a closer look at parallelization in Sect. 7.6.

2.3 Generic inequality joins

As we will see in Table 1, most of the interval joins can be broken down into inequality joins, which becomes very inefficient as soon as more than one inequality predicate is involved: Khayyat et al. [26] point out that these joins are handled via naive nested-loop joins in contemporary RDBMSs. They develop a more efficient inequality join (IEJoin), which first sorts the relations according to the join attributes. For the sake of simplicity, we just consider two inequality predicates here, i.e., for every relation \(\varvec{r}\), we have two versions, \(\varvec{r^1}\) and \(\varvec{r^2}\), sorted by the two join attributes, which helps us to find the values satisfying an inequality predicate more efficiently. (The connections between tuples from \(\varvec{r^1}\) and \(\varvec{r^2}\) are made using a permutation array.) Some additional data structures, offset arrays and bit arrays, help the algorithm to take shortcuts, but essentially the basic join algorithm still consists of two nested loops, leading to a quadratic run-time complexity (albeit with a performance that is an order of magnitude better than a naive nested-loop join).

3 Background

Interval Data

We define a temporal tuple as a relational tuple containing two additional attributes, \(T_s\) and \(T_e\), denoting the start and end of the half-open tuple validity interval \(T = [T_s, T_e)\). We will use a period (.) to denote an attribute of a tuple, e.g., \(r.T_s\) or s.T. The length of the tuple validity interval, \(|r|\), is therefore \(r.T_e - r.T_s\). We use the terms interval and tuple interchangeably. With r and s we denote the left-hand-side and the right-hand-side tuples in a join, respectively. We use integers for the time stamps to simplify the explanations. Our approach would work with any discrete time domain: we require a total order on the time stamps and a fixed granularity, i.e., given a time stamp we have to be able to unambiguously determine the following one.

Interval Relations

As intervals accommodate the human perception of time-based patterns much better than individual values [21], intervals and their relationships are a well-known and widespread approach to handle temporal data [22]. Here we look at two different ways to define binary relationships between intervals: Allen’s relations [2] and the Interval-based Surveillance Event Query Language (ISEQL) [6, 20].

Allen designed his framework to support reasoning about intervals and it comprises thirteen relations in total. The seven basic Allen’s relations are shown in the top half of Table 1. For example, interval r meets interval s when r finishes immediately before s starts. This is illustrated by the doodle in the table. We will also use smaller versions of the doodles in the text. The first six relations in the table also have an inverse counterpart (hence thirteen relations). For example, relation ‘r inverse meets s’ describes s finishing immediately before r starts.

ISEQL originated in the context of complex event detection and covers a different set of requirements. The list of the five basic ISEQL relations is presented in the bottom half of Table 1; each of them has an inverse counterpart. Additionally, ISEQL relations are parameterized. The parameters control additional constraints and allow a much more fine-grained definition of join predicates. This is similar to the simple temporal problem (STP) formalism, which defines an interval that restricts the temporal distance between two events [3, 13]. Let us consider the before ISEQL relation. It has one parameter \(\delta \), which controls the maximum allowed distance between the intervals (events). When \(\delta = 0\), this relation is equivalent to Allen’s meets. When \(\delta > 0\), it is a disjunction of Allen’s meets and before, and the maximum allowed distance between the events is \(\delta \) time points. Any ISEQL relation parameter can be relaxed (set to infinity), which removes the corresponding constraint.

Joins on Interval Relations

For each binary interval relation (Allen’s or ISEQL) we define a predicate P(r, s) as its indicator function: its value is ‘true’ if the argument tuples satisfy the relation and ‘false’ otherwise. From now on we will use the terms ‘predicate’ and ‘binary interval relation’ interchangeably. We perceive ISEQL relation parameters, if not relaxed, as part of the definition of P (e.g., \(P_{\delta }\)). We also define a temporal relation (not to be confused with binary relations described before) as a set of temporal tuples: \(\varvec{r} = \{r_1, r_2, \dots , r_n\}\).

Let us take a generic relational predicate P(r, s). We define the P-join of two relations \(\varvec{r}\) and \(\varvec{s}\) as an operator that returns all pairs of \(\varvec{r}\) and \(\varvec{s}\) tuples that satisfy predicate P. We can express this in pseudo-SQL as ‘SELECT * FROM \(\varvec{r}\), \(\varvec{s}\) WHERE P(r, s)’. For example, we define the ISEQL left overlap join as ‘SELECT * FROM \(\varvec{r}\), \(\varvec{s}\) WHERE r LEFT OVERLAP s’. If the predicate is parameterized, the join operator will also be parameterized.

Example 1

As an example (see Fig. 2), let us assume that we have two temporal relations: \(\varvec{r} = \{ r_1 = [0, 1), r_2 = [1, 3), r_3 = [2, 5)\}\) and \(\varvec{s} = \{ s_1 = [1, 3), s_2 = [3, 4)\}\).

Let us now take the ISEQL before join with the parameter \(\delta = 1\). Its result consists of two pairs, \(\langle r_1, s_1\rangle \) and \(\langle r_2, s_2\rangle \), because only they satisfy this particular join predicate. If we relax the parameter (set \(\delta \) to \(\infty \)), then an additional third pair \(\langle r_1, s_2\rangle \) would be added to the result of the join.
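The result of Example 1 can be reproduced with a naive nested-loop evaluation of the P-join. The following Python sketch is purely illustrative: the names `before` and `p_join` are hypothetical, and the predicate \(r.T_e \leqslant s.T_s \leqslant r.T_e + \delta \) is an assumption derived from the textual description of the ISEQL before relation (Table 1 holds the formal definition). Passing `math.inf` relaxes \(\delta \).

```python
import math

# Temporal relations from Example 1; each tuple is a half-open interval [Ts, Te).
r = {"r1": (0, 1), "r2": (1, 3), "r3": (2, 5)}
s = {"s1": (1, 3), "s2": (3, 4)}


def before(r_iv, s_iv, delta=math.inf):
    """ISEQL before (assumed form): s starts at most delta time points after r ends."""
    r_end, s_start = r_iv[1], s_iv[0]
    return r_end <= s_start <= r_end + delta


def p_join(rel_r, rel_s, pred):
    """Naive nested-loop P-join: ids of all pairs of tuples that satisfy pred."""
    return [(i, j) for i, a in rel_r.items() for j, b in rel_s.items() if pred(a, b)]


bounded = p_join(r, s, lambda a, b: before(a, b, delta=1))
relaxed = p_join(r, s, before)
print(bounded)  # [('r1', 's1'), ('r2', 's2')]
print(relaxed)  # [('r1', 's1'), ('r1', 's2'), ('r2', 's2')]
```

The nested loop only pins down the semantics of the join; it is exactly the quadratic evaluation strategy that the remainder of the paper sets out to avoid.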

By replacing the relational predicate by its formal definition (Table 1), we can implement an interval join in any relational database. However, such an implementation results in a relational join with inequality predicates, which is not efficiently supported by RDBMSs: they have to fall back on the nested-loop implementation in this case [26].

4 Formalizing our approach

We opt for a relational algebra representation to be able to make formal statements (e.g., proofs) about the different operators. Before fully formalizing our approach, we first introduce a map operator (\(\chi \)) as an addition to the standard selection, projection, and join operators in traditional relational algebra. This operator is used for materializing values as described in [7]. We also introduce our new interval-time stamp join, allowing us to replace costly non-equi-join predicates with an operator that, as we show later, can be implemented much more efficiently.

Definition 1

The map operator \(\chi _{a:e}(\varvec{r})\) evaluates the expression e on each tuple of \(\varvec{r}\) and concatenates the result to the tuple as attribute a:

If the attribute a already exists in a tuple, we instead overwrite its value.
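The behavior of the map operator, including the overwrite rule, can be illustrated with a small Python sketch. Modeling tuples as dictionaries and the name `chi` are assumptions made for this illustration only.

```python
def chi(attr, expr, relation):
    """Map operator: evaluate expr on each tuple and store the result as attribute attr.

    If attr already exists in a tuple, its value is overwritten. The input
    relation is left untouched; new tuples are returned.
    """
    return [{**t, attr: expr(t)} for t in relation]


r = [{"id": 1, "Ts": 0, "Te": 2}, {"id": 2, "Ts": 0, "Te": 5}]

# Materialize the interval length as a new attribute.
with_len = chi("len", lambda t: t["Te"] - t["Ts"], r)

# Overwrite an existing attribute: shift every ending time stamp by one.
shifted = chi("Te", lambda t: t["Te"] + 1, r)
```

Overwriting \(T_s\) or \(T_e\) in this way is what allows a tuple's interval to be reshaped before it is handed to a join.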

Definition 2

The interval-time stamp join matches the intervals in the tuples of relation \(\varvec{r}\) with the time stamps of the tuples in \(\varvec{s}\). It comes in two flavors, depending on the time stamp chosen for s, i.e., \(T_s\) or \(T_e\). So, the interval-starting-time stamp join is defined as

with \(\theta \in \{<, \leqslant \}\), whereas the interval-ending-time stamp join boils down to

with \(\theta \in \{<, \leqslant \}\).
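To make the semantics of the two flavors concrete, the following Python sketch evaluates them with nested loops. It rests on an assumption: the starting flavor is taken to test \(r.T_s \mathrel {\theta } s.T_s < r.T_e\) and the ending flavor \(r.T_s < s.T_e \mathrel {\theta } r.T_e\), which is the reading consistent with the way the joins are used in Sect. 4.1. The names `join_start` and `join_end` and the representation of tuples as `(Ts, Te)` pairs are hypothetical.

```python
import operator

THETA = {"<": operator.lt, "<=": operator.le}


def join_start(r, s, theta="<="):
    """Interval-starting-time stamp join (assumed form): r.Ts theta s.Ts < r.Te."""
    cmp = THETA[theta]
    return [(a, b) for a in r for b in s if cmp(a[0], b[0]) and b[0] < a[1]]


def join_end(r, s, theta="<="):
    """Interval-ending-time stamp join (assumed form): r.Ts < s.Te theta r.Te."""
    cmp = THETA[theta]
    return [(a, b) for a in r for b in s if a[0] < b[1] and cmp(b[1], a[1])]


r = [(0, 2), (0, 5), (3, 5)]
s = [(0, 3), (4, 7)]

start_le = join_start(r, s, "<=")
start_lt = join_start(r, s, "<")
end_le = join_end(r, s, "<=")
```

With \(\theta \) set to ‘<’, the pairs in which r and s start at the same time drop out of `start_lt`. The sketch fixes the meaning of the operator only; it says nothing about the sweeping-based evaluation.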

Now we are ready to formulate the joins on interval relations shown in Table 1 in relational algebra extended by our new join operator. We first cover the non-parameterized versions (i.e., setting \(\delta \) and \(\varepsilon \) to infinity) and then move on to the parameterized ones. Table 2 gives an overview of the relational algebra formulations. Proofs for the correctness of our rewrites can be found in “Appendix A” of our technical report (see Footnote 1).

4.1 Non-parameterized joins

The non-parameterized joins include all the Allen’s relations and the ISEQL joins with relaxed parameters. The equals, starts, finishes, and meets predicates could be evaluated using a regular equi-join. Nevertheless, we formulate them via interval-time stamp joins, which are better suited to streaming environments and can be processed more quickly for low numbers of matching tuples. We present the joins roughly in the order of their complexity, i.e., how many other different operators we need to define them.

ISEQL Start Preceding Join

The start preceding predicate joins two tuples r and s if they overlap and r does not start after s (we relax the parameter \(\delta \) here). This can be expressed in our extended relational algebra in the following way:

ISEQL End Following Join

As before, we first consider the ISEQL end following predicate with a relaxed \(\varepsilon \) parameter, meaning that tuples r and s should overlap and r is not allowed to end before s. In relational algebra this boils down to

Overlap Join

If we look at the left overlap join, we notice that it looks very similar to a start preceding join. The main difference is that it has one additional constraint: the \(\varvec{r}\) tuple has to end before the \(\varvec{s}\) tuple. Formulated in relational algebra this is equal to

For the right overlap join, we could just swap the roles of \(\varvec{r}\) and \(\varvec{s}\), or we could use an end following join combined with a selection predicate stating that the \(\varvec{s}\) tuple has to start before the \(\varvec{r}\) tuple:

For the more strict Allen’s left overlap and inverse overlap joins we use ‘<’ for the \(\theta \) of the join and the selection predicate (or, alternatively, the parameterized version of the overlap join, which is introduced later).

During Join

For the during join, we have to swap the roles of \(\varvec{r}\) and \(\varvec{s}\). Formulated with the help of a start preceding join, it becomes

or, alternatively, with an end following join we get

A reverse during join maps more naturally to a start preceding or end following join, i.e., we do not have to swap the roles of \(\varvec{r}\) and \(\varvec{s}\). For the Allen relation we use ‘<’ for the \(\theta \) of the join and the selection predicate (or the parameterized version of the during join).

Before Join

In the case of the before predicate, the tuples should not overlap at all. We achieve this by converting the ending events of \(\varvec{r}\) into starting ones and setting the ending events to infinity (see Fig. 3, the dashed lines are the original tuples and the solid lines the newly created ones). Formulated in relational algebra we get for the ISEQL version of before:

For the Allen relation we use \(\theta = \)‘<’ for the join.
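The rewrite for before can be sketched in a few lines of Python. This is an illustration under assumptions: tuples are modeled as `(id, Ts, Te)` triples, the helper names `join_start` and `before_join` are hypothetical, and the interval-starting-time stamp join is assumed to test \(r.T_s \mathrel {\theta } s.T_s < r.T_e\). The data are the relations of Example 1.

```python
import math

# Relations from Example 1 as (id, Ts, Te) triples with half-open intervals [Ts, Te).
r = [("r1", 0, 1), ("r2", 1, 3), ("r3", 2, 5)]
s = [("s1", 1, 3), ("s2", 3, 4)]


def join_start(r, s, strict=False):
    """Assumed interval-starting-time stamp join: r.Ts theta s.Ts < r.Te."""
    out = []
    for r_id, r_ts, r_te in r:
        for s_id, s_ts, _ in s:
            left_ok = r_ts < s_ts if strict else r_ts <= s_ts
            if left_ok and s_ts < r_te:
                out.append((r_id, s_id))
    return out


def before_join(r, s, strict=False):
    """Before join: replace every r tuple by [r.Te, infinity), then join on s.Ts."""
    shifted = [(r_id, r_te, math.inf) for r_id, _, r_te in r]
    return join_start(shifted, s, strict)


iseql_before = before_join(r, s)               # theta is '<=' (relaxed delta)
allen_before = before_join(r, s, strict=True)  # theta is '<'
```

On this input the ISEQL version returns the three pairs listed in Example 1 for a relaxed \(\delta \), whereas the strict comparison leaves only \(\langle r_1, s_2\rangle \), because the other two pairs touch without a gap.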

Meets Join

For the meets predicate, each tuple of relation \(\varvec{r}\) should only be active for a short interval of length one when it ends. We achieve this by converting the end events of tuples in \(\varvec{r}\) into start events and adding a new end event that shifts the old end event by one. Expressed in relational algebra this looks as follows:
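As an illustrative Python sketch of this transformation (the name `meets_join` and the `(id, Ts, Te)` tuple layout are assumptions, as is the use of ‘\(\leqslant \)’ for the comparison on the starting side), each \(\varvec{r}\) tuple is replaced by the unit-length interval \([r.T_e, r.T_e + 1)\) and matched against the starting time stamps of \(\varvec{s}\):

```python
# Relations from Example 1 as (id, Ts, Te) triples with half-open intervals [Ts, Te).
r = [("r1", 0, 1), ("r2", 1, 3), ("r3", 2, 5)]
s = [("s1", 1, 3), ("s2", 3, 4)]


def meets_join(r, s):
    """Meets join: each r tuple becomes [r.Te, r.Te + 1); match s tuples starting inside it."""
    result = []
    for r_id, _, r_te in r:
        lo, hi = r_te, r_te + 1  # unit-length interval that starts where r ends
        for s_id, s_ts, _ in s:
            if lo <= s_ts < hi:  # over integer time stamps this means s.Ts == r.Te
                result.append((r_id, s_id))
    return result


pairs = meets_join(r, s)
print(pairs)  # [('r1', 's1'), ('r2', 's2')]
```

Because the time domain is discrete, the unit-length interval contains exactly one time stamp, so the inequality test in the join degenerates to the equality \(s.T_s = r.T_e\).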

Equals Join

For the equals predicate, we check that starting events match via an interval-time stamp join and then add a selection to check the ending events:

Starts Join

For a starts predicate, we first check that the starting events are the same; the ending events are again compared in a selection afterward. Although one of the predicates uses a comparison based on inequality, this happens in the selection, not the join:

Finishes Join

The finishes predicate works similarly to a starts predicate. We use an interval-ending-time stamp join and swap the roles of the starting and ending events (due to the different definition of this join, we also have to shift the time stamps by one):

4.2 Parameterized joins

We now move on to the parameterized versions of the ISEQL join operators found in Table 1.

Start Preceding Join with \(\delta \)

Defining a value for the parameter \(\delta \) for the ISEQL start preceding join means that the tuple from \(\varvec{s}\) has to start between the start of the \(\varvec{r}\) tuple and within \(\delta \) time units of the \(\varvec{r}\) tuple starting or the end of the \(\varvec{r}\) tuple (whichever happens first). Basically, we shorten the long tuples. Expressed in relational algebra, this becomes
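The idea of shortening the long tuples can be sketched in Python as follows. The sketch makes several assumptions that are not taken from Table 1: the \(\delta \) constraint is read as \(s.T_s - r.T_s \leqslant \delta \), time stamps are integers, and the shortened interval is therefore \([r.T_s, \min (r.T_e, r.T_s + \delta + 1))\). The function names and the `(id, Ts, Te)` tuple layout are hypothetical.

```python
def start_preceding_delta(r, s, delta):
    """Parameterized start preceding: shorten r, then match s.Ts against the shortened interval."""
    result = []
    for r_id, r_ts, r_te in r:
        # +1 because intervals are half-open and time stamps are integers
        cut = min(r_te, r_ts + delta + 1)
        for s_id, s_ts, _ in s:
            if r_ts <= s_ts < cut:
                result.append((r_id, s_id))
    return result


def start_preceding_direct(r, s, delta):
    """Reference predicate (assumed): r.Ts <= s.Ts < r.Te and s.Ts - r.Ts <= delta."""
    return [
        (r_id, s_id)
        for r_id, r_ts, r_te in r
        for s_id, s_ts, _ in s
        if r_ts <= s_ts < r_te and s_ts - r_ts <= delta
    ]


r = [("r1", 0, 10), ("r2", 4, 6)]
s = [("s1", 2, 3), ("s2", 5, 9), ("s3", 0, 1)]

rewritten = start_preceding_delta(r, s, delta=2)
reference = start_preceding_direct(r, s, delta=2)
```

Here `r1` is cut to \([0, 3)\) and thus no longer matches `s2`, while `r2` is already shorter than the window and keeps its original end.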

End Following Join with \(\varepsilon \)

For the parameterized end following join, we have to make sure that the \(\varvec{s}\) tuple ends within \(\varepsilon \) time units distance from the end of the \(\varvec{r}\) tuple (but after the start of the \(\varvec{r}\) tuple). The formal definition in relational algebra is

The Before Join with \(\delta \)

For the parameterized before join we have to make sure that the \(\varvec{s}\) tuple starts within a time window of length \(\delta \) after the \(\varvec{r}\) tuple ends:

Overlap Join with \(\delta \) and \(\varepsilon \)

Similar to the non-parameterized version we use the start preceding join to define the parameterized left overlap join:

For the parameterized right overlap join we can either swap the roles of \(\varvec{r}\) and \(\varvec{s}\) or use the end following join:

During Join with \(\delta \) and \(\varepsilon \)

We can use a parameterized start preceding or end following join as a building block for a parameterized during join, swapping the roles of \(\varvec{r}\) and \(\varvec{s}\). With a start preceding join we get

whereas with an end following join it boils down to

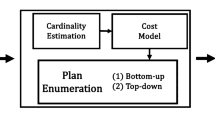

5 Our framework

After introducing the interval joins formally, we now turn to their efficient implementation. We develop a framework to express the different interval joins with the help of just one core join algorithm. The framework also includes an index and several iterators for scanning through sets of intervals to increase the performance and flexibility.

5.1 The endpoint index

We can gain a lot of speedup by sweeping through the interval endpoints in chronological order using an Endpoint Index, which is a simplified version of the Timeline Index [25]. The idea of the Endpoint Index is that intervals, which can be seen as points in a two-dimensional space, are mapped onto one-dimensional endpoints or events.

Let \({\mathbf {r}}\) be an interval relation with tuples \(r_i\), where \(1\leqslant i\leqslant n\). A tuple \(r_i\) in an Endpoint Index is represented by two events of the form \(e = \langle timestamp , type ,tuple\_id\rangle \), where \( timestamp \) is the \(T_s\) or \(T_e\) of the tuple, \( type \) is either a \(\mathrm {start}\) or \(\mathrm {end}\) flag, and \( tuple\_id \) is the tuple identifier, i.e., the two events for a tuple \(r_i\) are \(\langle r_i.T_s,\mathrm {start},i\rangle \) and \(\langle r_i.T_e,\mathrm {end},i\rangle \). For instance, for \(r_3.T = [3,5)\), the two events are \(\langle 3,\mathrm {start},3\rangle \) and \(\langle 5,\mathrm {end},3\rangle \), which can be seen as ‘at time 3 tuple 3 started’ and ‘at time 5 tuple 3 ended’.

Since events represent time stamps, we can impose a total order among events, where the order is according to \( timestamp \) and ties are broken by \( type \). In our case of half-open intervals, the order of \( type \) values is: \(\mathrm {end} < \mathrm {start}\). Endpoints with equal time stamps and types but different tuple identifiers are considered equal. An Endpoint Index for interval relation \({\mathbf {r}}\) is built by first extracting the interval endpoints from the relation and then creating the ordered list of events \(\left[ e_1, e_2, \dots , e_{2n}\right] \) sorted in ascending order. In case of event detection, the endpoints (events) can be taken directly from the event stream and we do not even have to construct an index.

Consider exemplary interval relation \(\varvec{r}\) from Fig. 2. The Endpoint Index for it is \([\langle 0,\mathrm {start},1\rangle \), \( \langle 1,\mathrm {end}, 1\rangle \), \( \langle 1,\mathrm {start},2\rangle \), \( \langle 2,\mathrm {start},3\rangle \), \( \langle 3,\mathrm {end}, 2\rangle \), \( \langle 5,\mathrm {end}, 3\rangle ]\).
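The construction can be sketched in a few lines of Python. This is only an illustration: the function name `build_endpoint_index`, the tuple layout `(timestamp, type, tuple_id)`, the integer encoding of the types, and the concrete intervals of \(\varvec{r}\) (chosen so that they reproduce the index listed above) are assumptions, not part of the original text.

```python
END, START = 0, 1  # assumed encoding: END < START, so end events come first on equal time stamps

def build_endpoint_index(relation):
    """relation: dict mapping tuple_id -> (t_s, t_e) for half-open intervals [t_s, t_e)."""
    events = []
    for tuple_id, (t_s, t_e) in relation.items():
        events.append((t_s, START, tuple_id))
        events.append((t_e, END, tuple_id))
    # Order by time stamp, then by type; tuple identifiers take no part in the order.
    events.sort(key=lambda e: (e[0], e[1]))
    return events

# Assumed intervals that yield the index given in the text.
r = {1: (0, 1), 2: (1, 3), 3: (2, 5)}
index = build_endpoint_index(r)
for event in index:
    print(event)
```

Sorting on the pair (time stamp, type) rather than on the full triple keeps endpoints that differ only in their tuple identifier equal with respect to the order, as required above.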

5.2 Endpoint iterators

Before continuing with the join algorithms, we introduce the concept of the Endpoint Iterator, upon which our family of algorithms is based. An Endpoint Iterator represents a cursor, which allows forward traversing a list of endpoints (e.g., an Endpoint Index). More formally, it is an abstract data type (an interface), which supports three operations:

- getEndpoint: returns the endpoint, which the iterator is currently pointing to (initially returns the first endpoint in the list);

- moveToNextEndpoint: advances the cursor to the next endpoint;

- isFinished: returns true if the cursor is pointing beyond the last endpoint of the list, false otherwise.

More details on the implementation of Endpoint Iterators can be found in “Appendix A”.

The basic implementation of the Endpoint Iterator is the Index Iterator, which provides an Endpoint Iterator interface to a physical Endpoint Index. Given an instance of the index, such an iterator traverses all Endpoint Index elements using the native method applicable to the Endpoint Index. In the text and in the algorithm descriptions we use the terms ‘Endpoint Index’ and ‘Index Iterator’ interchangeably, i.e., we create an Index Iterator for an Endpoint Index implicitly if needed.

There are also wrapping iterators. Such iterators do not have direct access to an Endpoint Index, but modify, filter and/or combine the output of one or several source Endpoint Iterators. In software design pattern terminology such iterators are called decorators. We conclude this subsection by introducing one such wrapping iterator. We introduce more of them later, as needed. The simplest wrapping Endpoint Iterator is the Filtering Iterator. It receives, upon construction, a source Endpoint Iterator and an endpoint type (\(\mathrm {start}\) or \(\mathrm {end}\)). It then traverses only endpoints having the specified type.
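As an illustration of the interface and of the decorator idea, the following Python sketch implements an Index Iterator over an in-memory list and a Filtering Iterator that wraps it. The class and method names, the helper `drain`, and the endpoint encoding are assumptions made for this example; the actual implementation is described in the appendix and may differ.

```python
END, START = 0, 1  # assumed encoding of endpoint types

class IndexIterator:
    """Endpoint Iterator over a physical, sorted list of (timestamp, type, tuple_id)."""
    def __init__(self, index):
        self._index = index
        self._pos = 0

    def get_endpoint(self):
        return self._index[self._pos]

    def move_to_next_endpoint(self):
        self._pos += 1

    def is_finished(self):
        return self._pos >= len(self._index)

class FilteringIterator:
    """Wrapping iterator that only exposes endpoints of the requested type."""
    def __init__(self, source, endpoint_type):
        self._source = source
        self._type = endpoint_type
        self._skip()

    def _skip(self):
        # Advance the source until it points to a matching endpoint (or is finished).
        while (not self._source.is_finished()
               and self._source.get_endpoint()[1] != self._type):
            self._source.move_to_next_endpoint()

    def get_endpoint(self):
        return self._source.get_endpoint()

    def move_to_next_endpoint(self):
        self._source.move_to_next_endpoint()
        self._skip()

    def is_finished(self):
        return self._source.is_finished()

def drain(it):
    """Hypothetical helper: collect all endpoints an iterator yields."""
    out = []
    while not it.is_finished():
        out.append(it.get_endpoint())
        it.move_to_next_endpoint()
    return out

index = [(0, START, 1), (1, END, 1), (1, START, 2), (2, START, 3), (3, END, 2), (5, END, 3)]
starts = drain(FilteringIterator(IndexIterator(index), START))
```

Because the Filtering Iterator exposes the same three operations as its source, it can be passed wherever an Endpoint Iterator is expected.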

5.3 The core algorithm JoinByS

We are now ready to define the core algorithm, which forms the basis of all our joins. This algorithm receives the relations to be joined \(\varvec{r}\) and \(\varvec{s}\), Endpoint Iterators for them, a comparison predicate, and a callback function that will be called for each result pair. The algorithm performs the interleaved scan of the endpoint iterators. While doing so, it maintains the set of active \(\varvec{r}\) tuples. Every endpoint for relation \(\varvec{s}\) triggers the output—the Cartesian product of the corresponding tuple s and the set of active \(\varvec{r}\) tuples. The comparison predicate is used to define the order in which equal endpoints of different relations are handled (‘equal’ meaning endpoints having the same time stamp and type).

The pseudocode for the core algorithm JoinByS is presented in Algorithm 1. The algorithm starts by initializing an active \(\varvec{r}\) tuple set implemented via a map (an associative array) of tuple identifiers to tuples.

The main loop (line 2) and the main ‘if’ (line 3) implement the interleaved scan of the endpoint indices (like in a sort-merge join). The tricky part here is that instead of a hardwired comparison operator (‘<’ or ‘\(\leqslant \)’), we use the function \(\textsf {comp}\), which we pass as an argument to the algorithm. In case of the start preceding join, for instance, if both current endpoints of \(\varvec{r}\) and \(\varvec{s}\) are equal, we have to handle the \(\varvec{r}\) endpoint first (Sect. 4.1), and thus we have to use the ‘\(\leqslant \)’ predicate. In case of the end following join, on the other hand, if both current endpoints of \(\varvec{r}\) and \(\varvec{s}\) are equal, we have to handle the \(\varvec{s}\) endpoint first (Sect. 4.1), and thus we have to use the ‘<’ predicate. Having the predicate as an argument of the algorithm allows us to choose the needed predicate upon using the algorithm, which prevents code duplication (Footnote 2).

The rest of the algorithm consists of two parts. The first part (lines 4–10) handles an \(\varvec{r}\) endpoint and manages the active \(\varvec{r}\) tuple set. When a tuple starts, the algorithm loads it from the relation by the tuple identifier stored in the endpoint and puts the tuple in the map using the identifier as the key. When a tuple ends, the algorithm removes it from the active tuple map, again using the tuple identifier as the key.

The second part (lines 12–15) handles an \(\varvec{s}\) endpoint. It first loads the corresponding tuple s from the relation. Then it iterates through all elements in the active \(\varvec{r}\) tuple map. For every active r tuple the algorithm outputs the pair \(\langle r, s\rangle \) by passing it into the \( consumer \) function, which is another function-type argument of the algorithm. In some cases, the consumer has to do additional work, e.g., evaluating a selection predicate. We call these consumers \( filteringConsumers \). If they have access to the full tuple, they can check the predicate and immediately output the result. In a streaming environment, we do not have access to the end events immediately, which means that a filteringConsumer also needs to buffer data until these events become available, but as soon as this happens, the output can be generated.
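The following Python sketch mirrors the description of JoinByS given in this subsection. It is not a transcription of Algorithm 1: plain lists and positions stand in for the Endpoint Iterators, the loop is assumed to stop once the \(\varvec{s}\) endpoints are exhausted, and the example relations are made up for illustration.

```python
import operator

END, START = 0, 1  # assumed encoding: END < START

def join_by_s(r, s, r_events, s_events, comp, consumer):
    """r, s: dict tuple_id -> tuple; r_events, s_events: sorted endpoint lists.
    comp(r_endpoint, s_endpoint) decides whether the r endpoint is handled first."""
    active_r = {}                      # tuple_id -> tuple for all currently active r tuples
    i = j = 0
    while j < len(s_events):
        s_ep = s_events[j]
        # Compare on (timestamp, type) only; tuple identifiers are ignored.
        if i < len(r_events) and comp(r_events[i][:2], s_ep[:2]):
            _, kind, r_id = r_events[i]
            if kind == START:
                active_r[r_id] = r[r_id]      # load the tuple and activate it
            else:
                active_r.pop(r_id, None)      # deactivate the tuple
            i += 1
        else:
            s_tuple = s[s_ep[2]]
            for r_tuple in active_r.values():
                consumer(r_tuple, s_tuple)    # output the pair <r, s>
            j += 1

# Example: only the starting endpoints of s are passed in, with '<=' as comparison.
r = {1: (0, 1), 2: (1, 3), 3: (2, 5)}
s = {1: (1, 2), 2: (4, 6)}
r_events = [(0, START, 1), (1, END, 1), (1, START, 2), (2, START, 3), (3, END, 2), (5, END, 3)]
s_starts = [(1, START, 1), (4, START, 2)]
pairs = []
join_by_s(r, s, r_events, s_starts, operator.le, lambda a, b: pairs.append((a, b)))
```

In this example the tuple \([1,3)\) of \(\varvec{r}\) is activated before the \(\varvec{s}\) tuple starting at time 1 is processed, because ‘\(\leqslant \)’ gives the \(\varvec{r}\) endpoint priority on ties.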

6 Assembling the parts

We now show how to construct the different interval relations using our JoinByS operator and iterators. We start with the expressions from Sect. 4 that do not include map operators, followed by those that do.

6.1 Expressions without map operators

Start Preceding and End Following Joins

These two join predicates are the easiest to implement, as they can be mapped directly to the JoinByS operator. For the start preceding join we have to keep track of the active \(\varvec{r}\) tuples, and trigger the output by the start of an \(\varvec{s}\) tuple. If two tuples start at the same time, we have to handle the \(\varvec{r}\) tuple first. Therefore, we call the JoinByS function, passing to it only the starting \(\varvec{s}\) endpoints. This is achieved by using a Filtering Iterator (Sect. 5.2). We also have to pass the ‘\(\leqslant \)’ predicate as the comparison function. A start preceding join then boils down to a single call of JoinByS (see Algorithm 2).

The algorithm StartPrecedingJoin receives iterators to the Endpoint Indexes. When using this algorithm with Endpoint Indexes, we simply wrap each index in an Index Iterator, an operation that, as noted before, we consider implicit.

We define the algorithm for the end following join similarly, but filter the ending endpoints of \(\varvec{s}\), and pass ‘<’ as the comparison function. The pseudocode of the EndFollowingJoin is presented in Algorithm 3.

Overlap Joins

The left overlap join can be implemented using the StartPrecedingJoin algorithm with an additional constraint \(r.T_e \leqslant s.T_e\). The pseudocode is shown in Algorithm 4. The right overlap join is implemented along similar lines using the EndFollowingJoin algorithm and the selection predicate \(s.T_s \leqslant r.T_s\).

For the Allen versions of the overlap joins, we use strict versions of Algorithms 2 and 3, StartPrecedingStrictJoin and EndFollowingStrictJoin, which do not allow a tuple r to start with a tuple s or a tuple s to end with a tuple r, respectively. They are just simple variations: StartPrecedingStrictJoin merely replaces the ‘\(\leqslant \)’ in Algorithm 2 with ‘<’ and EndFollowingStrictJoin replaces the ‘<’ in Algorithm 3 with ‘\(\leqslant \)’. Additionally, we change the ‘\(\leqslant \)’ in the selection predicates in the filteringConsumer functions to ‘<’.
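A filteringConsumer can be pictured as a small wrapper around the actual consumer. The Python sketch below is an illustration under assumptions: the helper name `filtering_consumer`, the tuple layout `(t_s, t_e)`, and the candidate pairs (standing in for the output of a start preceding join) are made up for this example.

```python
def filtering_consumer(predicate, consumer):
    """Wrap a consumer so that it only receives pairs satisfying the predicate."""
    def consume(r_tuple, s_tuple):
        if predicate(r_tuple, s_tuple):
            consumer(r_tuple, s_tuple)
    return consume

# Tuples are (t_s, t_e). Additional constraint of the left overlap join: r.T_e <= s.T_e.
left_overlap = lambda r, s: r[1] <= s[1]
# Allen version: the selection predicate becomes strict.
left_overlap_strict = lambda r, s: r[1] < s[1]

# Candidate pairs as a start preceding join might emit them (assumed data).
candidates = [((1, 3), (1, 2)), ((2, 5), (4, 6)), ((2, 5), (3, 5))]

non_strict, strict = [], []
keep = filtering_consumer(left_overlap, lambda r, s: non_strict.append((r, s)))
keep_strict = filtering_consumer(left_overlap_strict, lambda r, s: strict.append((r, s)))
for r_tuple, s_tuple in candidates:
    keep(r_tuple, s_tuple)
    keep_strict(r_tuple, s_tuple)
```

The pair with equal ending time stamps survives the non-strict filter but is dropped by the strict one, which is the only difference between the two selection predicates.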

During Joins

Implementing the during join is similar to Algorithm 4: we just have to swap the arguments for \(\varvec{r}\) and \(\varvec{s}\) (alternatively, we could also use the end following variant). For the Allen version of during joins, we replace the StartPrecedingJoin, EndFollowingJoin, and selection predicates with their strict counterparts.

If we simply call an algorithm with swapped arguments, the elements of the result pairs appear in a different order, i.e., \(\langle s, r\rangle \) instead of the expected \(\langle r, s\rangle \). If this is an issue, we can swap them back using a lambda function as the consumer. Putting everything together, we get Algorithm 5.

6.2 Expressions with map operators

In order to avoid physically changing tuple values or even the Endpoint Index, we apply the changes made by the map operators virtually with an iterator. While performing an interleaved scan of two Endpoint Indexes, instead of simply comparing the two endpoints \(r^e\) and \(s^e\) (as in \(r^e < s^e\)), we shift the time stamp of one of them when comparing: \(r^e + \delta < s^e\). In this way the algorithm performs an interleaved scan of the indexes as if we had shifted all \(\varvec{r}\) tuples in time by \(+\delta \).

During an interleaved scan, instead of forcing the iterators of the two Endpoint Indexes (for the relations \(\varvec{r}\) and \(\varvec{s}\)) to move synchronously as in all the operators so far, now one of the iterators lags behind by a constant offset. This behavior can be easily incorporated into our framework by using a special Endpoint Iterator that shifts the time stamp of every endpoint it returns on-the-fly.

There is a second issue: the new starting endpoint often is actually a shifted ending endpoint or vice versa. Consequently, we have to change the endpoint type as well. With the help of our Shifting Iterator, we can shift time stamps and also change endpoint types. As input parameters a shifting iterator receives a source Endpoint Iterator, the shifting distance, and an endpoint type (start or end).

The final issue is separately shifting the starting and ending endpoints by different amounts. We solve this by having independent iterators for both starting and ending endpoints and merging them on the fly in an interleaved fashion. The input parameters of the Merging Iterator are two other iterators, the events of which it merges. See “Appendix A” for more details.
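The interplay of filtering, shifting, and merging can be sketched with Python generators. This is a simplified stand-in for the iterators described above, not their actual implementation: the function names, the endpoint encoding, and the example (original starting endpoints combined with virtual ending endpoints one time unit later) are assumptions.

```python
import heapq

END, START = 0, 1  # assumed encoding: END < START

def filtering(events, kind):
    """Yield only endpoints of the given type."""
    return (e for e in events if e[1] == kind)

def shifting(events, delta, new_kind):
    """Shift every time stamp by delta and relabel the endpoint type on the fly."""
    return ((ts + delta, new_kind, tid) for ts, _, tid in events)

def merging(a, b):
    """Merge two ordered endpoint streams, ordered by (timestamp, type)."""
    return heapq.merge(a, b, key=lambda e: (e[0], e[1]))

r_index = [(0, START, 1), (1, END, 1), (1, START, 2), (2, START, 3), (3, END, 2), (5, END, 3)]

# Keep the original starting endpoints and derive virtual ending endpoints
# from the starting endpoints shifted by one time unit.
virtual = list(merging(
    filtering(r_index, START),
    shifting(filtering(r_index, START), 1, END),
))
```

Nothing is materialized in the underlying index: the shifted endpoints exist only while they pass through the generator, and a constant shift keeps each stream sorted, so a simple two-way merge suffices.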

Before and Meets Joins

We are now ready to create a GeneralBeforeJoin (see Algorithm 6 and Fig. 4 for a schematic representation); we already handle the parameterized version here as well. This algorithm performs a virtual three-way sort-merge join of the two Endpoint Indexes. One pointer will traverse the Endpoint Index for relation \(\varvec{s}\), and two pointers will traverse the Endpoint Index for relation \(\varvec{r}\), all three pointers moving synchronously, but at different positions. This is why we had to (implicitly) create two Index Iterators for the same index (lines 4 and 6)—each of them represents a physical pointer to the same Endpoint Index; therefore we need two of them.

We express Allen’s before join by substituting 1 and \(+\infty \) for \(\beta \) and \(\delta \), respectively; Allen’s meets join by substituting 0 and 0, respectively; and the ISEQL before join by substituting 0 for \(\beta \) and only using the \(\delta \) for the parameterized version. The parameter \(\beta \) distinguishes between the strict (Allen) and non-strict (ISEQL) versions of the operator.

Equals and Starts Joins

For the equals join we keep the original starting endpoints of \(\varvec{r}\) and use as ending endpoints the starting endpoints shifted by one and then execute a StartPrecedingJoin. This matches tuples from \(\varvec{r}\) and \(\varvec{s}\) with the same starting endpoints. We check that we have matching ending endpoints in the filteringConsumer function, which receives the actual tuples as input and thus has access to the time stamp attributes of the original tuples (see Algorithm 7 for the pseudocode).

For a starts join we just have to change the predicate in the filteringConsumer function from ‘\(=\)’ to ‘<’.

Finishes Join

For the tuples in \(\varvec{r}\) we turn the ending events into starting events and shift the ending events by one before joining them to the tuples in \(\varvec{s}\) via an EndFollowingJoin (Algorithm 3). Finally, we check that the tuple from \(\varvec{s}\) started before the one from \(\varvec{r}\). For the pseudocode of the finishes join, see Algorithm 8.

Parameterized Start Preceding Join

We now turn to the parameterized variant of the start preceding join, which has the parameter \(\delta \) constraining the maximum distance between tuple starting endpoints. The basic idea is to take the starting endpoints of relation \(\varvec{r}\), shift them by \(\delta + 1\), change their type to ending endpoints, and add these virtual endpoints to the original endpoints of \(\varvec{r}\). This way each r tuple will be represented by three endpoints: the original starting and ending endpoints and the virtual ending endpoint. Then the parameterless StartPrecedingJoin algorithm (Algorithm 2) is applied to both streams of \(\varvec{r}\) and \(\varvec{s}\) endpoints. When the second ending endpoint of a tuple is encountered in the merged iterator, it can simply be ignored, because its corresponding tuple can no longer be found in the active tuple set (see “Appendix A”). Algorithm 9 depicts the pseudocode.
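To make the three-endpoint idea concrete, here is a compact Python sketch. It is an illustration under assumptions rather than Algorithm 9 itself: the function name, the use of lists instead of Endpoint Iterators, integer time stamps, and the example data are all made up for this purpose.

```python
import heapq

END, START = 0, 1  # assumed encoding: END < START

def p_start_preceding_join(r, s, delta):
    """r, s: dict tuple_id -> (t_s, t_e); integer time stamps are assumed."""
    key = lambda e: (e[0], e[1])
    r_events = sorted(
        [(ts, START, i) for i, (ts, _) in r.items()]
        + [(te, END, i) for i, (_, te) in r.items()], key=key)
    # Virtual ending endpoints: starts of r shifted by delta + 1, relabelled as END.
    virtual_ends = sorted(((ts + delta + 1, END, i) for i, (ts, _) in r.items()), key=key)
    r_stream = list(heapq.merge(r_events, virtual_ends, key=key))
    s_starts = sorted(((ts, START, i) for i, (ts, _) in s.items()), key=key)

    active, result, pos = {}, [], 0
    for s_ts, s_kind, s_id in s_starts:
        # '<=' comparison: handle every r endpoint not greater than the s endpoint first.
        while pos < len(r_stream) and key(r_stream[pos]) <= (s_ts, s_kind):
            _, kind, r_id = r_stream[pos]
            if kind == START:
                active[r_id] = r[r_id]
            else:
                active.pop(r_id, None)  # the second END of a tuple finds nothing and is ignored
            pos += 1
        result.extend((r_id, s_id) for r_id in active)
    return result

r = {1: (0, 10), 2: (3, 5)}
s = {1: (2, 4), 2: (4, 12), 3: (0, 1)}
matches = p_start_preceding_join(r, s, delta=2)
```

With \(\delta = 2\), the long tuple \([0,10)\) behaves as if it ended at time 3, so it no longer matches the \(\varvec{s}\) tuple that starts at time 4.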

Parameterized End Following Join

A similar parameterized end following join is more complicated. The problem here is that each r tuple will have to be represented by two starting endpoints. The algorithm must consider a tuple activated only if both starting endpoints (and no ending endpoint) have been encountered.

We achieve this by introducing an iterator, called Second Start Iterator, that stores the tuple identifiers of events for which we have only encountered one starting endpoint in a hash set (see “Appendix A”). Only the second starting endpoint of this tuple will return the starting event. The pseudocode for the parameterized end following join is shown in Algorithm 10.
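The behavior of the Second Start Iterator can be sketched as a Python generator. The sketch is a guess at the mechanics based on the description above; in particular, the treatment of an ending endpoint that arrives between the two starting endpoints (the pending entry is dropped, so the tuple is never activated) is an assumption, as are the names and the example data.

```python
END, START = 0, 1  # assumed encoding of endpoint types

def second_start(events):
    """Pass ending endpoints through; emit a starting endpoint only the second
    time one is seen for the same tuple identifier."""
    pending = set()  # tuple ids for which exactly one START has been seen so far
    for ts, kind, tid in events:
        if kind == END:
            pending.discard(tid)      # assumed: an END cancels a half-finished activation
            yield (ts, kind, tid)
        elif tid in pending:
            pending.remove(tid)
            yield (ts, kind, tid)     # second START: the tuple becomes active now
        else:
            pending.add(tid)          # first START: remember it, emit nothing

stream = [(0, START, 1), (2, START, 2), (4, START, 1), (5, START, 2), (6, END, 1), (8, END, 2)]
activated = list(second_start(stream))
```

Downstream, the join algorithm only sees one starting endpoint per tuple, so it needs no knowledge of the doubled starting endpoints.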

Parameterized Overlap Join

Now that we have an algorithm for the parameterized StartPrecedingJoin, we can define the parameterized left overlap join by combining PStartPrecedingJoin with a filteringConsumer function, similarly to what we have done for the non-parameterized overlap join. Algorithm 11 shows the pseudocode. Alternatively, we can use a PEndFollowingJoin and then check the predicate for the starting endpoint of the s tuple in the filteringConsumer function.

The right overlap join uses a PEndFollowingJoin with the corresponding predicate in the filteringConsumer function.

Parameterized During Join

The parameterized during join looks similar to Algorithm 11; we apply changes along the lines of those shown in the paragraph for the non-parameterized during join. (There is also an alternative version using a PEndFollowingJoin.)

6.3 Correctness of algorithms

Showing the correctness of our algorithms boils down to illustrating that we handle the map operators correctly and demonstrating the correctness of the StartPreceding and EndFollowing joins, as our algorithms are either StartPreceding or EndFollowing joins or are built on top of them.

Iterators and Map Operators

Here we show how to implement map operators with the help of iterators. Instead of materializing the result (e.g., on disk), we make the corresponding changes in a tuple as it passes through an iterator. If we still need a copy of the old event later on, we feed this event through another iterator and merge the two tuple streams using a merge iterator.

StartPreceding Join

We have to show that all tuples created by Algorithm 2 satisfy the predicate \(r.T_s \leqslant s.T_s < r.T_e\). A Filtering Iterator removes all the ending events from \(\varvec{s}\), so we only have to deal with starting events from \(\varvec{s}\) and with both types of events from \(\varvec{r}\). As comparison operator we use ‘\(\leqslant \)’. This determines the order in which events are dealt with.

First, let us look at the case that both upcoming events in itR and itS are starting events. If \(r.T_s \leqslant s.T_s\), then r will be inserted into the active tuple set before s is processed, meaning that the (later) arrival of s will trigger the join with r. If \(r.T_s > s.T_s\), then s will be processed first, not encountering r in the active tuple set, meaning that the two will not join.

Second, if the next event in \(\varvec{r}\) is an ending event and the next event in \(\varvec{s}\) a starting event, then the two events can never be equal. Even if they have the same time stamp, the ending endpoint of r will always be considered less than the starting endpoint of s. Therefore, if \(r.T_e \leqslant s.T_s\), r will be removed first, so r and s will not join, and if \(r.T_e > s.T_s\), s will still join with r.

So, in summary, all the tuples generated by Algorithm 2 satisfy the predicate \(r.T_s \leqslant s.T_s < r.T_e\).

For a StrictStartPreceding join we run Algorithm 2 with the comparison operator ‘<’, yielding output tuples that satisfy the predicate \(r.T_s< s.T_s < r.T_e\). If both upcoming events in itR and itS are starting events, we get the correct behavior: \(r.T_s < s.T_s\) will lead to a join, \(r.T_s \geqslant s.T_s\) will not. If the r event is an ending event and the s event is a starting one, we also get the correct behavior: \(r.T_e \leqslant s.T_s\) will not join the r and s tuple, \(r.T_e > s.T_s\) will (the ending event of r is always less than the starting event of s).

EndFollowing Join

We show that all tuples created by Algorithm 3 satisfy the predicate \(r.T_s < s.T_e \leqslant r.T_e\). This time a Filtering Iterator removes all the starting events from \(\varvec{s}\), so we only have to deal with ending events from \(\varvec{s}\) and with both types of events from \(\varvec{r}\). The comparison operator used for the non-strict version is ‘<’.

First, assume that the next event in itR is a starting event and the next event in itS is an ending event. As an ending event takes precedence over a starting event, if \(r.T_s = s.T_e\), the s event will come first. In turn this means that if \(r.T_s < s.T_e\), r is added to the active set first, resulting in a join, and if \(r.T_s \geqslant s.T_e\), s is processed first, meaning there is no join.

Second, we now look at the case that both events are ending events. Due to the comparison operator ‘<’, the events are handled in the right way: if \(r.T_e < s.T_e\), we remove r first, so there is no join, and if \(r.T_e \geqslant s.T_e\) we handle s first, resulting in a join.

For a StrictEndFollowing join we run Algorithm 3 with ‘\(\leqslant \)’ as comparison operator to obtain tuples that satisfy the predicate \(r.T_s< s.T_e < r.T_e\). Let us first look at a starting event for \(\varvec{r}\) and an ending event for \(\varvec{s}\). As ending events are processed before starting events with the same time stamp, we get: if \(r.T_s < s.T_e\), then r is added first, resulting in a join, and if \(r.T_s \geqslant s.T_e\), then s is processed first, meaning there is no join. Finally, we investigate the case that both events are ending events: if \(r.T_e \leqslant s.T_e\), then r is removed first, i.e., no join, and if \(r.T_e > s.T_e\), then s is processed first, joining r and s.

7 Implementation considerations

In this section we look at techniques to implement our framework efficiently, in particular how to represent an active tuple set, utilizing contemporary hardware. We also investigate the overhead caused by our heavy use of abstractions (such as iterators).

7.1 Managing the active tuple set

For managing the active tuple set we need a data structure into which we can insert key-value pairs, remove them, and quickly enumerate (scan) one by one all the values contained in the data structure via the operation getnext. In our case, the keys are tuple identifiers and the values are the tuples themselves. The data structure of choice here is a map or associative array.

The most efficient implementation of a map optimizing the insert and remove operations is a hash table (with O(1) time complexities for these operations). However, hash tables are not well suited for scanning. The std::unordered_map class in the C++ Standard Template Library and the java.util.HashMap in the Java Class Library, for instance, scan through all the buckets of a hash table, making the performance of a scan operation linear with respect to the capacity of the hash table and not to the actual amount of elements in it.

In order to achieve an O(1) complexity for getnext, the elements in the hash table can be connected via a doubly linked list (see Fig. 5). The hash table stores pointers to elements, which in turn contain a key, a value, two pointers for the doubly linked list (list prev and list next) and a pointer for chaining elements of the same bucket for collision resolution (pointer bucket next). This approach is employed in the java.util.LinkedHashMap in the Java Class Library.

While this data structure offers a constant complexity for getnext, the execution times of different calls of getnext can vary widely in practice, depending on the memory footprint of the map. After a series of insertions and deletions the elements of the linked list become randomly scattered in memory, which has an impact on caching: sometimes the next list element is still in the cache (resulting in fast retrieval), sometimes it is not (resulting in slow random memory accesses). Additionally, the pointer structure makes it hard for a prefetcher to determine where the next elements are located. However, for our approach it is crucial that getnext can be executed very efficiently, as it is typically called much more often than insert and remove. We will see in Sect. 7.4 how to implement a hash map more efficiently.

7.2 Lazy joining of the active tuple set

The fastest getnext operations are actually those that are not executed. We modify our algorithm to boost its performance by significantly reducing the number of getnext operations needed to generate the output.

We illustrate our point using the example setting in Fig. 6. Assume we have just encountered the left endpoint of \(s_1\), which means that our algorithm now scans the tuple set \(active^r\), which contains \(r_1\) and \(r_2\). After that we scan it again and again when encountering the left endpoints of \(s_2\), \(s_3\), and \(s_4\). However, since no endpoints of \({\mathbf {r}}\) were encountered during that time, we scan the same version of \(active^r\) four times. We can reduce this to one scan if we keep track of the tuples \(s_1\), \(s_2\), \(s_3\), and \(s_4\) in a (contiguous) buffer, delaying the scan until there is about to be a change in \(active^r\).

To remedy this situation, we collect all consecutively encountered \(\varvec{s}\) tuples in a small buffer that fits into the L1 cache. Scanning the active tuple set when producing the output now requires only one traversal. Thanks to the design of our join algorithms we can incorporate this optimization into the whole framework by modifying JoinByS. The optimized version is shown in Algorithm 12. This technique has been introduced for overlap joins in [36]; here we generalize it to the JoinByS algorithm. For this method to be effective, we recommend a buffer size c that is smaller than the size of the L1d CPU cache (usually 32 KB).
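The buffering idea can be sketched in Python as follows. This is an illustration of the technique, not a rendering of Algorithm 12: the function name, the `capacity` parameter (counted in tuples rather than bytes), the returned scan counter, and the example data modelled on the scenario described above are assumptions.

```python
import operator

END, START = 0, 1  # assumed encoding: END < START

def lazy_join_by_s(r, s, r_events, s_events, comp, consumer, capacity=64):
    """Like a plain interleaved scan, but s tuples are buffered and joined in bulk.
    Returns the number of scans of the active set (for illustration only)."""
    active_r, buffer = {}, []
    scans = 0

    def flush():
        nonlocal scans
        if buffer:
            scans += 1
            for r_tuple in active_r.values():  # one scan of the active set ...
                for s_tuple in buffer:         # ... serves all buffered s tuples
                    consumer(r_tuple, s_tuple)
            buffer.clear()

    i = j = 0
    while j < len(s_events):
        if i < len(r_events) and comp(r_events[i][:2], s_events[j][:2]):
            flush()                            # the active set is about to change
            _, kind, r_id = r_events[i]
            if kind == START:
                active_r[r_id] = r[r_id]
            else:
                active_r.pop(r_id, None)
            i += 1
        else:
            buffer.append(s[s_events[j][2]])
            if len(buffer) == capacity:        # keep the buffer small
                flush()
            j += 1
    flush()
    return scans

r = {1: (0, 10), 2: (1, 10)}
s = {1: (2, 3), 2: (3, 4), 3: (4, 5), 4: (5, 6)}
r_events = [(0, START, 1), (1, START, 2), (10, END, 1), (10, END, 2)]
s_starts = [(2, START, 1), (3, START, 2), (4, START, 3), (5, START, 4)]
pairs = []
scans = lazy_join_by_s(r, s, r_events, s_starts, operator.le,
                       lambda a, b: pairs.append((a, b)))
```

The set of result pairs is the same as without buffering, but the pairs are emitted in a different order, since all buffered \(\varvec{s}\) tuples are combined with one active \(\varvec{r}\) tuple before moving on to the next.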

For the sake of simplicity, we only refer to the JoinByS algorithm in the following section. It can be replaced by the LazyJoinByS algorithm without any change in functionality.

7.3 Features of contemporary hardware

Before describing further optimizations, we briefly review mechanisms employed by contemporary hardware to decrease main memory latency. This latency can have a huge impact, as fetching data from main memory may easily use up more than a hundred CPU cycles.

Mechanisms

Usually, there is a hierarchy of caches, with smaller, faster ones closer to CPU registers. Cache memory has a far lower latency than main memory, so a CPU first checks whether the requested data are already in one of the caches (starting with the L1 cache, working down the hierarchy). Not finding data in a cache is called a cache miss, and main memory is accessed only if there are cache misses on all levels. In practice an algorithm with a small memory footprint runs much quicker, because in the ideal case, when an algorithm’s data (and code) fit into the cache, the main memory only has to be accessed once at the very beginning, loading the data (and code) into the cache.

Besides the size of a memory footprint, the access pattern also plays a crucial role, as contemporary hardware contains prefetchers that speculate on which blocks of memory will be needed next and preemptively load them into the cache. The easier the access pattern can be recognized by a prefetcher, the more effective it becomes. Sequential access is a pattern that can be picked up by prefetchers very easily, while random access effectively renders them useless.

Also, programs do not access physical memory directly, but through a virtual memory manager, i.e., virtual addresses have to be mapped to physical ones. Part of the mapping table is cached in a so-called translation lookaside buffer (TLB). As the size of the TLB is limited, a program with a high level of locality will run faster, as all lookups can be served by the TLB.

Out-of-order execution (also called dynamic execution) allows a CPU to deviate from the original order of the instructions and run them as the data they process become available. Clearly, this can only be done when the instructions are independent of each other and can be run concurrently without changing the program logic.

Finally, certain properties of DRAM (dynamic random access memory) chips also influence latency. Accessing memory using fast page or a similar mode means accessing data stored within the same page or bank without incurring the overhead of selecting it. This mechanism favors memory accesses with a high level of locality.

Performance Numbers

We provide some numbers to give an impression of the performance of currently used hardware. For contemporary processors, such as ‘Core’ and ‘Xeon’ by Intel (see Footnote 3), one random memory access within the L1 data (L1d) cache (32 KB per core) takes 4 CPU cycles. Within the L2 cache (256 KB per core) one random memory access takes 11–12 cycles. Within the L3 cache (3–45 MB) one random memory access takes 30–40 CPU cycles. Finally, one random physical RAM access takes around 70–100 ns (200–300 processor cycles). It follows that the performance gap between an L1 cache access and a main memory access is huge: roughly two orders of magnitude.
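As a rough consistency check on these figures (plain arithmetic on the numbers quoted above, not an additional measurement), the RAM latency implies a clock rate of about 3 GHz, and the ratio between a main memory access and an L1d access lies between 50 and 75:

\[
\frac{200\ \text{cycles}}{70\ \text{ns}} \approx 2.9\ \text{GHz}, \qquad
\frac{300\ \text{cycles}}{100\ \text{ns}} = 3.0\ \text{GHz}, \qquad
\frac{200}{4} = 50 \;\le\; \frac{t_{\mathrm{RAM}}}{t_{\mathrm{L1d}}} \;\le\; \frac{300}{4} = 75 .
\]

Here \(t_{\mathrm{RAM}}\) and \(t_{\mathrm{L1d}}\) are shorthand introduced only for this check; they denote the latencies in cycles of a random RAM access and a random L1d access, respectively.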

7.4 Implementation of the active tuple set

As we will see later in an experimental evaluation, managing the active tuple set efficiently in terms of memory accesses is crucial for the performance of the join algorithm. Otherwise we run the risk of starving the CPU while processing a join. Our goals have to be to store the active tuple set as compactly as possible and to access it sequentially, allowing the hardware to get the data to the CPU in an efficient manner.

We store the elements of our hash map in a contiguous memory area. For the insert operation this means that we always append a new element at the end of the storage area. Removing the last element from the storage area is straightforward. If the element to be removed is not the last in the storage area, we swap it with the last element and then remove it. When doing so, we have to update all the references to the swapped elements. Scanning involves stepping through the contiguous storage area sequentially. We call our data structure a gapless hash map (see Fig. 7).

We also separate the tuples from the elements, storing them in a different contiguous memory area in corresponding locations. Assuming fixed-size records, all basic element operations (append and move) are mirrored for the corresponding tuples. This slightly increases the costs for insertions and removal of tuples. However, scanning the tuples is as fast as it can become, because we do not need to read any metadata, only tuple information.

The hash table stores pointers to elements, which contain a key, a pointer for chaining elements of the same bucket when resolving collisions (the bucket-next pointer, solid arrows), and a bucket-prev pointer to a hash table entry or an element (whichever holds the forward pointer to this element, dashed arrows). The latter is used for updating the reference to an element when the element changes position. The main difference from the random memory access of a linked hash map (Fig. 5) is the allocation of all elements in a contiguous memory area, allowing for fast sequential memory access when enumerating the values.

Example 2

Assume we want to remove tuple 7 from the structure depicted in Fig. 7. First of all, the bucket-next pointer of the element with key 5 is set to NULL. Next, the last element in the storage area (tuple 2) is moved to the position of the element with key 7. Following the bucket-prev pointer of the element just moved, we find the reference to this element in the hash table and update it. Finally, the variable tail is decremented to point to the element with key 9.
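The operations described in this section can be sketched as follows. This is a minimal illustration under stated assumptions, not the implementation evaluated in the paper: the class name GaplessHashMap, its method names, and the constant kNone are hypothetical, indices into the storage area stand in for the pointers of Fig. 7, keys are assumed to be unique, and the bucket of a key is simply the key modulo the number of buckets.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

constexpr std::int64_t kNone = -1;  // plays the role of a NULL pointer

// Gapless hash map sketch: elements and tuples live in two parallel,
// contiguous vectors. Indices stand in for the pointers described above.
template <typename Tuple>
class GaplessHashMap {
    struct Element {
        std::uint64_t key;
        std::int64_t next;  // bucket-next: next element of the same bucket
        std::int64_t prev;  // bucket-prev: previous element, or kNone if the
                            // hash table entry itself refers to this element
    };
    std::vector<std::int64_t> buckets_;  // first element of each bucket
    std::vector<Element> elements_;      // metadata, stored without gaps
    std::vector<Tuple> tuples_;          // payload at corresponding positions

    std::size_t bucketOf(std::uint64_t key) const { return key % buckets_.size(); }

    // Make whatever holds the forward reference to e refer to position `to`.
    void setForwardRef(const Element& e, std::int64_t to) {
        if (e.prev == kNone) buckets_[bucketOf(e.key)] = to;
        else elements_[e.prev].next = to;
    }

    // Walk the bucket chain of key; returns kNone if the key is absent.
    std::int64_t locate(std::uint64_t key) const {
        std::int64_t i = buckets_[bucketOf(key)];
        while (i != kNone && elements_[i].key != key) i = elements_[i].next;
        return i;
    }

public:
    explicit GaplessHashMap(std::size_t bucketCount) : buckets_(bucketCount, kNone) {
        assert(bucketCount > 0);
    }

    // Append at the end of the storage area and link as head of the bucket.
    void insert(std::uint64_t key, const Tuple& tuple) {
        const std::int64_t pos = static_cast<std::int64_t>(elements_.size());
        const std::int64_t head = buckets_[bucketOf(key)];
        elements_.push_back(Element{key, head, kNone});
        tuples_.push_back(tuple);
        if (head != kNone) elements_[head].prev = pos;
        buckets_[bucketOf(key)] = pos;
    }

    // Unlink the element, then move the last element into the freed slot.
    bool remove(std::uint64_t key) {
        const std::int64_t pos = locate(key);
        if (pos == kNone) return false;
        const Element victim = elements_[pos];
        setForwardRef(victim, victim.next);
        if (victim.next != kNone) elements_[victim.next].prev = victim.prev;
        const std::int64_t last = static_cast<std::int64_t>(elements_.size()) - 1;
        if (pos != last) {
            elements_[pos] = elements_[last];
            tuples_[pos] = tuples_[last];  // mirror the move for the tuple
            const Element moved = elements_[pos];
            setForwardRef(moved, pos);     // update references to the moved element
            if (moved.next != kNone) elements_[moved.next].prev = pos;
        }
        elements_.pop_back();
        tuples_.pop_back();
        return true;
    }

    const Tuple* find(std::uint64_t key) const {
        const std::int64_t pos = locate(key);
        return pos == kNone ? nullptr : &tuples_[pos];
    }

    // Scanning reads only tuple data, sequentially and without metadata.
    const std::vector<Tuple>& tuples() const { return tuples_; }
};
```

Using indices instead of raw pointers is a choice made for this sketch only: it keeps the references valid when the vectors reallocate on growth, at the price of one extra addition per dereference.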

7.5 Overhead for abstractions

All the abstractions we use (iterators, predicates passed as function arguments, and lambda functions) allow us to express all joins by means of a single function, which is extremely practical due to the huge simplification of implementation and subsequent maintenance of the code. In this section we explain why the impact of this architecture on the performance is minimal for C++ and not significant for Java.

We compare our implementation empirically to a manual rewrite without abstractions of a selected join algorithm. Here, we show the results for our most complicated implementation, Algorithm 6. We compare its performance to a version that was fully inlined manually into a single leaf function. We did so for C++ and also for Java. We then launched each of the four versions separately using the synthetic dataset of \(10^6\) tuples with an average number of active tuples equal to 10 (see Sect. 9.1 for the dataset). Each version executed the join several times sequentially to allow the JVM to perform all necessary optimizations. The results are shown in Fig. 8. We see that the C++ version is several times faster than the Java version. Moreover, we see that the C++ compiler was able to optimize our abstracted code so well that its performance is indistinguishable from the manually optimized version. The situation with Java is more complicated: in the end, the manually optimized version was \({\sim }10\%\) faster.

C++

This language was designed to support penalty-free abstractions. Not all abstractions in C++ are penalty free, though. We first implemented the family of Endpoint Iterators as a hierarchy of virtual classes and found that the compilers we used (GCC and Clang) were not able to inline virtual method calls (even though they had all the required information to do so). We then rewrote the code using templates and functors, each iterator becoming a non-virtual class, passed into the join algorithm as a template argument. The comparator used by the core algorithm was a functor, std::less or std::less_equal. The consumers were defined as C++11 lambda functions, also passed as a template parameter. This time both compilers were able to inline all method calls and generate highly optimized code with all variables (including iterator fields) kept in CPU registers.
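The template-based design can be illustrated with a small sketch. It is not the paper's join algorithm: VectorEndpointIterator, scanPreceding, and precedingCounts are hypothetical names, and the scan merely counts, for every endpoint of one sorted sequence, how many endpoints of the other sequence precede it. What it shares with the design described above is that the iterator is a non-virtual class, the comparator is std::less or std::less_equal, and the consumer is a lambda, all passed as template arguments so that the compiler sees concrete types.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <vector>

// Hypothetical non-virtual iterator over a sorted vector of endpoints.
class VectorEndpointIterator {
    const std::vector<long>* data_;
    std::size_t pos_ = 0;

public:
    explicit VectorEndpointIterator(const std::vector<long>& data) : data_(&data) {}
    bool isFinished() const { return pos_ >= data_->size(); }
    long endpoint() const {
        assert(!isFinished());
        return (*data_)[pos_];
    }
    void moveNext() { ++pos_; }
};

// Merge-style scan: for every endpoint of s, report how many endpoints of r
// precede it according to comp. Iterators, comparator, and consumer are all
// template parameters, so no call goes through a virtual table.
template <typename IteratorR, typename IteratorS, typename Compare, typename Consumer>
void scanPreceding(IteratorR r, IteratorS s, Compare comp, Consumer consume) {
    std::size_t seen = 0;
    while (!s.isFinished()) {
        while (!r.isFinished() && comp(r.endpoint(), s.endpoint())) {
            ++seen;
            r.moveNext();
        }
        consume(s.endpoint(), seen);
        s.moveNext();
    }
}

// Convenience wrapper that collects the counts via a lambda consumer.
template <typename Compare>
std::vector<std::size_t> precedingCounts(const std::vector<long>& r,
                                         const std::vector<long>& s,
                                         Compare comp) {
    std::vector<std::size_t> out;
    scanPreceding(VectorEndpointIterator(r), VectorEndpointIterator(s), comp,
                  [&out](long /*endpoint*/, std::size_t count) { out.push_back(count); });
    return out;
}
```

Swapping std::less<long>() for std::less_equal<long>() changes only how ties between endpoints are treated; the scan itself is unchanged.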

Java

We face a different situation with Java, as the optimization is not performed by the compiler, but by the Java virtual machine (JVM) during runtime. The JVM (in particular, the standard Oracle HotSpot implementation) compiles, optimizes, and recompiles the code while executing it. It can potentially apply a wider range of optimizations (e.g., speculative optimization) than a C++ compiler can, as it actively learns about the actual workload, but in the case of Java we have limited control over this process. As we show in Fig. 8, Java does in fact optimize the code with abstractions. It does not do so as well as C++, but the performance difference is very small compared to a manually rewritten join.

7.6 Parallel execution

While parallelization is not a main focus of this paper, we know how to parallelize our scheme and have implemented a parallel version of our earlier EBI-Join operator [36]. We give a brief description here: the tuples in both input relations, \(\varvec{r}\) and \(\varvec{s}\), are sorted by their starting time and then partitioned in a round-robin fashion, i.e., the ith tuple of a relation is assigned to partition \((i \bmod k)\) of that relation, where k is the number of partitions. By assigning close neighbors to different partitions, we lower the size of the active tuple sets, which is a crucial parameter for the performance of our algorithm. We then do a pairwise join between all partitions of \(\varvec{r}\) with all partitions of \(\varvec{s}\). As all partitions are disjoint, the joins can run in parallel independently of each other. A downside of this approach is that we need \(k^2\) processes. Nevertheless, we achieved an average speedup of 2.7, 4.3, and 5.3 for \(k = \) 2, 3, and 4, respectively, on a machine with two CPUs (eight cores each). Bouros and Mamoulis utilize domain-based rather than hash-based partitioning, which gives FS a slight edge over EBI-Join during parallel execution [8]. However, as also shown in [8], domain-based partitioning can be applied to EBI-Join as well, resulting in comparable performance.
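The round-robin scheme can be sketched as follows. The struct Interval and the functions roundRobinPartition and joinTasks are hypothetical names used only for this illustration; the sketch shows how tuples are assigned to partitions and which partition pairs have to be joined, and leaves out the join itself and the process management.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

struct Interval {  // hypothetical tuple layout
    long start;
    long end;
};

// Sort by starting time, then assign the ith tuple to partition i mod k.
std::vector<std::vector<Interval>> roundRobinPartition(std::vector<Interval> rel,
                                                       std::size_t k) {
    assert(k > 0);
    std::sort(rel.begin(), rel.end(),
              [](const Interval& a, const Interval& b) { return a.start < b.start; });
    std::vector<std::vector<Interval>> parts(k);
    for (std::size_t i = 0; i < rel.size(); ++i) {
        parts[i % k].push_back(rel[i]);
    }
    return parts;
}

// Every partition of r is joined with every partition of s, which yields
// k * k independent join tasks (i, j).
std::vector<std::pair<std::size_t, std::size_t>> joinTasks(std::size_t k) {
    std::vector<std::pair<std::size_t, std::size_t>> tasks;
    for (std::size_t i = 0; i < k; ++i) {
        for (std::size_t j = 0; j < k; ++j) {
            tasks.emplace_back(i, j);
        }
    }
    return tasks;
}
```

Because neighboring tuples in start order end up in different partitions, each partition keeps its sort order and holds roughly every kth tuple, so each task sees a correspondingly smaller active tuple set.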

Furthermore, one major difference between JoinByS and EBI-Join is that JoinByS maintains only one active tuple set (for \(\varvec{r}\)), whereas EBI-Join maintains two (one for \(\varvec{r}\) and one for \(\varvec{s}\)). So, in order to keep the active tuple set small, for JoinByS we only need to partition \(\varvec{r}\), resulting in one process for each of the k partitions, while still being able to do this on the fly. The tuples in \(\varvec{s}\) are then fed to each of these processes.

8 Theoretical analysis

One-dimensional Overlap

Our approach is related to finding all the intersecting line segments, or intervals, given a set of n segments in one-dimensional space. The optimal (plane-sweeping) algorithm for doing so has complexity \(O(n \log n + k)\), where k is the number of intersecting pairs of segments [12]. In the worst case, when every segment intersects every other one, k is quadratic in n and the complexity becomes \(O(n \log n + n^2) = O(n^2)\). In this case, the run time of the algorithm is dominated by the output cardinality.

Each segment \(s_i\) is split up into a left starting event \(\langle l_i, \mathrm {start} \rangle \) and a right ending event \(\langle r_i, \mathrm {end} \rangle \). Afterward the events of all segments are sorted, which takes \(O(n \log n)\) time. We then traverse the sorted list of events. When encountering a left endpoint, we insert the corresponding segment into a data structure D, which keeps track of the currently active segments. When encountering a right endpoint, we remove the corresponding segment from D and join it with all the segments currently stored in D. If we use a balanced search tree for D (e.g., a red-black tree), then each insertion and removal will cost us \(O(\log n)\). As we have 2n endpoints, we arrive at a total of \(O(2n \log 2n) = O(n \log n)\). Generating all the output will take \(O(k)\). If we use a hash table, each insertion and removal can be done in \(O(1)\), for a total of \(O(n)\). As long as we make sure that the entries in the hash table are linked or packed compactly (as in our gapless hash map), so that enumerating the active segments takes time linear in their number, generating the output still has an overall complexity of \(O(k)\).
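The sweep just described can be sketched as follows, under a few assumptions that are not fixed by the text: segments are closed intervals, so a start event is processed before an end event at the same coordinate; std::unordered_set stands in for the data structure D (it offers expected \(O(1)\) insertion and removal, but not the compact layout of a gapless hash map); and Segment and intersectingPairs are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <tuple>
#include <unordered_set>
#include <utility>
#include <vector>

struct Segment {  // closed interval [left, right]
    long left;
    long right;
};

// Reports every intersecting pair of segments exactly once, as a pair of
// indices (smaller index first). The order of the pairs is unspecified.
std::vector<std::pair<std::size_t, std::size_t>>
intersectingPairs(const std::vector<Segment>& segs) {
    struct Event {
        long coord;
        bool isEnd;
        std::size_t id;
    };
    std::vector<Event> events;
    events.reserve(2 * segs.size());
    for (std::size_t i = 0; i < segs.size(); ++i) {
        assert(segs[i].left <= segs[i].right);
        events.push_back({segs[i].left, false, i});
        events.push_back({segs[i].right, true, i});
    }
    // Sort by coordinate; at equal coordinates start events come first.
    std::sort(events.begin(), events.end(), [](const Event& a, const Event& b) {
        return std::tie(a.coord, a.isEnd) < std::tie(b.coord, b.isEnd);
    });

    std::unordered_set<std::size_t> active;  // the data structure D
    std::vector<std::pair<std::size_t, std::size_t>> result;
    for (const Event& e : events) {
        if (!e.isEnd) {
            active.insert(e.id);
        } else {
            // Remove the ending segment, then join it with all active ones.
            active.erase(e.id);
            for (std::size_t other : active) {
                result.emplace_back(std::min(e.id, other), std::max(e.id, other));
            }
        }
    }
    return result;
}
```

A pair is emitted when the first of its two segments ends, at which point the other one is still in the active set; this is why no pair is reported twice.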

Generalization

Joins with predicates involving Allen or ISEQL relations are not exactly the same as the one-dimensional line segment intersection. Nevertheless, the joins can be mapped onto orthogonal line segment intersection, which is a special case of two-dimensional line segment intersection that can also be done in \(O(n \log n + k)\), with \(k = n^2\) in the worst case, using a plane-sweeping algorithm that traverses the segments sorted by one dimension [12]. This also explains why there were no further developments for interval joins recently, as the state-of-the-art algorithms achieve this complexity. However, when generating the output, we cannot just join a segment with all active ones; we need to check additional constraints: two segments can overlap on the x-axis, but may or may not do so on the y-axis. As we will see shortly, this has implications for the data structure D.

Complexity of Different Join Predicates

Let us now have a closer look at the different join predicates. For all of them, we need the relations \(\varvec{r}\) and \(\varvec{s}\) to be sorted. Either we keep them in a Timeline Index or operate in a streaming environment, in which they are already sorted, or we need to sort them in \(O(n \log n)\). The non-parameterized and parameterized versions of start preceding, end following, and before (which includes meets in its parameterized version) are not hard to analyze. They all have a complexity of \(O(n \log n + k)\). For start preceding, we maintain the active tuple set of \(\varvec{r}\) in a gapless hash map, which means \(O(1)\) for the insertion and removal of a single tuple, or \(O(2n) = O(n)\) in total. Additionally, whenever we encounter a starting event of \(\varvec{s}\), we generate result tuples, resulting in a total of \(O(k)\) for generating all the output. For the parameterized version, we merely shift the endpoints of the tuples in \(\varvec{r}\). end following is very similar; the only differences are that we generate output when encountering ending events of \(\varvec{s}\) and that, for the parameterized variant, we shift the starting points of \(\varvec{r}\). before is not much different: we shift both events of tuples in \(\varvec{r}\) and generate the output whenever we encounter starting events of \(\varvec{s}\).
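To make the argument for start preceding concrete, the following sketch sweeps over the events of both relations. Several points are assumptions of the sketch and should be checked against Table 1: intervals are taken to be half-open, the predicate is taken to be r.start ≤ s.start < r.end with the additional constraint s.start − r.start ≤ delta in the parameterized version, and the shifted endpoint is computed as the smaller of r.end and r.start + delta + 1. The names Tuple and startPrecedingJoin are hypothetical, both relations are sorted inside the function rather than read from a Timeline Index, and std::unordered_set stands in for the gapless hash map.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <tuple>
#include <unordered_set>
#include <utility>
#include <vector>

struct Tuple {  // half-open interval [start, end), an assumption of this sketch
    long start;
    long end;
};

// Returns pairs (index in r, index in s) with
//   r.start <= s.start < r.end  and  s.start - r.start <= delta.
// The order of the result pairs is unspecified.
std::vector<std::pair<std::size_t, std::size_t>>
startPrecedingJoin(const std::vector<Tuple>& r, const std::vector<Tuple>& s, long delta) {
    assert(delta >= 0);
    enum Kind { REnd = 0, RStart = 1, SStart = 2 };  // tie-breaking order
    struct Event {
        long time;
        int kind;
        std::size_t id;
    };
    std::vector<Event> events;
    events.reserve(2 * r.size() + s.size());
    for (std::size_t i = 0; i < r.size(); ++i) {
        assert(r[i].start < r[i].end);
        // Parameterized version: shift the endpoint of the tuple in r.
        const long shiftedEnd =
            (r[i].end - r[i].start > delta) ? r[i].start + delta + 1 : r[i].end;
        events.push_back({r[i].start, RStart, i});
        events.push_back({shiftedEnd, REnd, i});
    }
    for (std::size_t j = 0; j < s.size(); ++j) {
        events.push_back({s[j].start, SStart, j});
    }
    // At equal timestamps, events of r are processed before starts of s.
    std::sort(events.begin(), events.end(), [](const Event& a, const Event& b) {
        return std::tie(a.time, a.kind) < std::tie(b.time, b.kind);
    });

    std::unordered_set<std::size_t> activeR;  // active tuple set of r
    std::vector<std::pair<std::size_t, std::size_t>> result;
    for (const Event& e : events) {
        if (e.kind == RStart) {
            activeR.insert(e.id);
        } else if (e.kind == REnd) {
            activeR.erase(e.id);
        } else {
            // Starting event of s: join with all active tuples of r.
            for (std::size_t rid : activeR) result.emplace_back(rid, e.id);
        }
    }
    return result;
}
```

Each tuple of r is inserted and removed once, and every visit to an active tuple produces one result pair, which matches the \(O(n)\) maintenance cost and \(O(k)\) output cost stated above; the sort accounts for the \(O(n \log n)\) term.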