Abstract

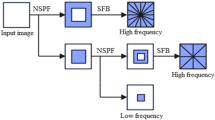

Recently, deep learning has proven effective in multimodal image fusion. In this paper, we propose a fusion method for CT and MR medical images based on a convolutional neural network (CNN) in the shearlet domain. We initialize a Siamese fully convolutional neural network with a pre-trained architecture learned from natural images; then, we train it on medical images in a transfer learning fashion. The training dataset is made of positive and negative pairs of shearlet-coefficient patches. Examples are fed into a two-stream deep CNN to extract feature maps; then, similarity metric learning based on cross-correlation is performed to learn a mapping between features. The logistic loss objective function is minimized with stochastic gradient descent. The fusion process then starts by decomposing the source CT and MR images with the non-subsampled shearlet transform (NSST) into several subimages. High-frequency subbands are fused based on a weighted normalized cross-correlation between the feature maps produced by the feature-extraction part of the CNN, while low-frequency coefficients are combined using local energy. Training and test datasets include pairs of pre-registered CT and MR images taken from the Harvard Medical School database. Visual analysis and objective metrics show that the proposed deep architecture achieves state-of-the-art performance in both subjective and objective assessment. The experiments also demonstrate the potential of the proposed CNN for multi-focus image fusion.
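The fusion stage can be illustrated with a minimal, pure-Python sketch. It assumes the NSST decomposition and the CNN feature extraction have already been run, so each subband and each feature map arrives as an equal-sized 2-D list of floats. The helper names (`window`, `fuse_low`, `ncc`, `fuse_high`), the 3x3 neighbourhood, the choose-max-energy low-frequency rule, and the Burt-style weighting with match threshold `alpha = 0.75` are illustrative assumptions, not the paper's published rule or parameters.

```python
import math
from typing import List

Matrix = List[List[float]]


def window(m: Matrix, i: int, j: int, r: int) -> List[float]:
    """Values in the (2r+1)x(2r+1) neighbourhood of (i, j), clipped at the borders."""
    h, w = len(m), len(m[0])
    return [m[y][x]
            for y in range(max(0, i - r), min(h, i + r + 1))
            for x in range(max(0, j - r), min(w, j + r + 1))]


def fuse_low(a: Matrix, b: Matrix, r: int = 1) -> Matrix:
    """Low-frequency rule (assumed choose-max form): keep the coefficient
    whose neighbourhood has the larger local energy (sum of squares)."""
    out = []
    for i in range(len(a)):
        row = []
        for j in range(len(a[0])):
            ea = sum(v * v for v in window(a, i, j, r))
            eb = sum(v * v for v in window(b, i, j, r))
            row.append(a[i][j] if ea >= eb else b[i][j])
        out.append(row)
    return out


def ncc(p: List[float], q: List[float]) -> float:
    """Normalized cross-correlation of two equal-length vectors, in [-1, 1].
    Returns 0.0 when either vector is constant (zero variance)."""
    n = len(p)
    mp, mq = sum(p) / n, sum(q) / n
    dp = [v - mp for v in p]
    dq = [v - mq for v in q]
    den = math.sqrt(sum(x * x for x in dp) * sum(y * y for y in dq))
    return sum(x * y for x, y in zip(dp, dq)) / den if den > 0 else 0.0


def fuse_high(ca: Matrix, cb: Matrix, fa: Matrix, fb: Matrix,
              r: int = 1, alpha: float = 0.75) -> Matrix:
    """High-frequency rule driven by NCC between feature maps fa and fb.

    Hypothetical Burt-style weighting: below the match threshold alpha,
    select the coefficient whose feature neighbourhood is more active;
    at or above it, blend the two, moving from pure selection (NCC = alpha)
    to a plain average (NCC = 1).
    """
    out = []
    for i in range(len(ca)):
        row = []
        for j in range(len(ca[0])):
            wa, wb = window(fa, i, j, r), window(fb, i, j, r)
            # Feature activity (sum of absolute responses) decides the salient side.
            act_a = sum(abs(v) for v in wa)
            act_b = sum(abs(v) for v in wb)
            if act_a >= act_b:
                strong, weak = ca[i][j], cb[i][j]
            else:
                strong, weak = cb[i][j], ca[i][j]
            match = ncc(wa, wb)
            if match < alpha:
                row.append(strong)
            else:
                w_weak = 0.5 - 0.5 * (1 - match) / (1 - alpha)
                row.append((1 - w_weak) * strong + w_weak * weak)
        out.append(row)
    return out
```

The weighting averages only where the two modalities' features agree (high NCC); where they disagree, it keeps the coefficient from the more active feature neighbourhood, which is one plausible way to preserve complementary CT and MR detail.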

Acknowledgements

The authors would like to thank Dr. Yu Liu, School of Instrument Science and Opto-electronics Engineering, Hefei University of Technology, China, for making his code and trained CNN model available online. The authors would also like to thank the reviewers for their invaluable and constructive comments.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

About this article

Cite this article

Hermessi, H., Mourali, O. & Zagrouba, E. Convolutional neural network-based multimodal image fusion via similarity learning in the shearlet domain. Neural Comput & Applic 30, 2029–2045 (2018). https://doi.org/10.1007/s00521-018-3441-1

DOI: https://doi.org/10.1007/s00521-018-3441-1