Abstract

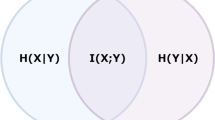

Recent studies have demonstrated the potential of termsets to enrich the conventional bag-of-words representation of electronic documents by forming composite feature vectors. In this approach, some member terms may become redundant because they are strongly correlated with the corresponding termsets. Moreover, the co-occurrence of terms may be more informative than their individual appearance. In such cases, the member terms should be removed to avoid the curse of dimensionality during model generation. This study first addresses the elimination of member terms that become redundant when 2-termsets are employed, and two novel algorithms are developed for this purpose. The proposed algorithms evaluate the relative discriminative powers and correlations of member terms and the corresponding 2-termsets. As a third approach, the redundancy of all terms is evaluated when 2-termsets are used, and the terms most correlated with the 2-termsets are discarded. Simulations conducted on five benchmark datasets verify the importance of eliminating redundant terms and the effectiveness of the proposed algorithms.
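The idea of a member term becoming redundant once its 2-termset is added can be illustrated with a minimal sketch. It does not reproduce the proposed algorithms. It assumes that a 2-termset feature is 1 when both member terms occur in a document, that redundancy is measured with the Pearson correlation between binary occurrence vectors, and that a hypothetical threshold of 0.9 marks a member term as redundant. The toy documents and all function names are illustrative only.

```python
from math import sqrt

def term_column(doc_terms, term):
    """Binary occurrence vector of a single term over all documents."""
    return [int(term in terms) for terms in doc_terms]

def termset_column(doc_terms, pair):
    """Binary vector of a 2-termset: 1 when both member terms occur
    (assumed definition, chosen for illustration)."""
    a, b = pair
    return [int(a in terms and b in terms) for terms in doc_terms]

def pearson(x, y):
    """Pearson correlation of two equal-length vectors; 0.0 if either is constant."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        return 0.0
    return cov / sqrt(var_x * var_y)

def redundant_members(doc_terms, pair, threshold=0.9):
    """Member terms whose correlation with the 2-termset reaches the
    (hypothetical) threshold."""
    ts = termset_column(doc_terms, pair)
    return [t for t in pair
            if pearson(term_column(doc_terms, t), ts) >= threshold]

# Toy corpus (illustrative only).
docs = [
    "stock market rises",
    "stock market falls",
    "market garden opens",
    "garden show opens",
]
doc_terms = [set(d.split()) for d in docs]

print(redundant_members(doc_terms, ("stock", "market")))
```

In this toy corpus, "stock" occurs only together with "market", so its occurrence vector coincides with that of the 2-termset and it is flagged, whereas "market" also appears on its own and is kept.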

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Cite this article

Badawi, D., Altınçay, H. Effective use of 2-termsets by discarding redundant member terms in bag-of-words representation. Neural Comput & Applic 31, 5401–5418 (2019). https://doi.org/10.1007/s00521-018-3371-y

DOI: https://doi.org/10.1007/s00521-018-3371-y