Abstract

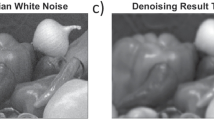

This paper presents a modified Polak–Ribière–Polyak (PRP) conjugate gradient method for unconstrained optimization problems with the following properties. (i) The search direction uses both gradient and function value information. (ii) A non-descent backtracking-type line search technique is proposed to obtain the step size \(\alpha _k\) and construct a point. (iii) The method inherits an important property of the classical PRP method: the tendency to turn towards the steepest descent direction if a small step is generated away from the solution, which prevents a sequence of tiny steps from occurring. (iv) Strong global convergence and R-linear convergence of the modified PRP method for nonconvex optimization are established under suitable assumptions. (v) Numerical results show that the modified PRP method is of practical interest and performs better than the standard PRP method in estimating the parameters of the nonlinear Muskingum model and in image restoration.
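For orientation, the following is a minimal sketch of the classical PRP iteration that the abstract takes as its baseline, not the modified method or the non-descent line search proposed in the paper. It assumes a plain Armijo backtracking line search and a steepest-descent restart safeguard; the names `prp_beta`, `prp_minimize`, `demo_f`, and `demo_grad`, and all constants, are hypothetical choices made for illustration.

```python
from typing import Callable, List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def prp_beta(g_new: Vector, g_old: Vector) -> float:
    """Classical PRP coefficient: g_new^T (g_new - g_old) / ||g_old||^2."""
    y = [a - b for a, b in zip(g_new, g_old)]
    return dot(g_new, y) / dot(g_old, g_old)


def prp_minimize(
    f: Callable[[Vector], float],
    grad: Callable[[Vector], Vector],
    x0: Vector,
    tol: float = 1e-8,
    max_iter: int = 2000,
) -> Vector:
    """Classical PRP with Armijo backtracking (illustrative assumption,
    not the line search proposed in the paper)."""
    x = list(x0)
    g = grad(x)
    d = [-gi for gi in g]
    for _ in range(max_iter):
        if dot(g, g) ** 0.5 < tol:
            break
        # Safeguard (assumption): restart with steepest descent if d is
        # not a descent direction, so the Armijo test below is meaningful.
        if dot(g, d) >= 0.0:
            d = [-gi for gi in g]
        alpha, fx, slope = 1.0, f(x), dot(g, d)
        # Halve the step until the sufficient-decrease condition holds.
        while (
            f([xi + alpha * di for xi, di in zip(x, d)])
            > fx + 1e-4 * alpha * slope
            and alpha > 1e-16
        ):
            alpha *= 0.5
        x = [xi + alpha * di for xi, di in zip(x, d)]
        g_new = grad(x)
        # If the step was tiny, g_new is close to g, beta is close to 0,
        # and the new direction is close to -g_new (steepest descent).
        beta = prp_beta(g_new, g)
        d = [-gn + beta * di for gn, di in zip(g_new, d)]
        g = g_new
    return x


def demo_f(x: Vector) -> float:
    """Hypothetical test problem: a convex quadratic with minimizer (1, -2)."""
    return (x[0] - 1.0) ** 2 + 10.0 * (x[1] + 2.0) ** 2


def demo_grad(x: Vector) -> Vector:
    return [2.0 * (x[0] - 1.0), 20.0 * (x[1] + 2.0)]
```

Calling `prp_minimize(demo_f, demo_grad, [0.0, 0.0])` runs the sketch on the toy quadratic. Property (iii) is visible in `prp_beta`: when two consecutive gradients coincide, the coefficient is zero and the next direction reduces to the negative gradient.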

Acknowledgements

This work was supported by the National Natural Science Foundation of China (Grant No. 11661009), the High Level Innovation Teams and Excellent Scholars Program in Guangxi institutions of higher education (Grant No. [2019]52), the Guangxi Natural Science Key Fund (No. 2017GXNSFDA198046), and the Special Funds for Local Science and Technology Development Guided by the Central Government (No. ZY20198003).

Conflict of interest

The authors declare that there are no conflicts of interest regarding the publication of this paper.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

This work was supported by the National Natural Science Foundation of China (Grant No. 11661009), the High Level Innovation Teams and Excellent Scholars Program in Guangxi institutions of higher education (Grant No. [2019]52), the Guangxi Natural Science Foundation (2020GXNSFAA159069) and the Guangxi Natural Science Key Fund (No. 2017GXNSFDA198046).

About this article

Cite this article

Yuan, G., Lu, J. & Wang, Z. The modified PRP conjugate gradient algorithm under a non-descent line search and its application in the Muskingum model and image restoration problems. Soft Comput 25, 5867–5879 (2021). https://doi.org/10.1007/s00500-021-05580-0

DOI: https://doi.org/10.1007/s00500-021-05580-0