Abstract

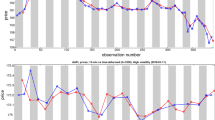

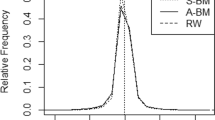

In this paper, we propose an innovative algorithm for modelling the news impact curve. The news impact curve provides a nonlinear relation between past returns and current volatility and thus makes it possible to forecast volatility. Our news impact curve is the solution of a dynamic optimization problem based on variational calculus. Consequently, it is a non-parametric and smooth curve. The technique we propose is directly inspired by noise removal techniques in signal theory. To our knowledge, this is the first time that such a method has been used for volatility modelling. Applications to simulated heteroskedastic processes as well as to financial data show that this approach is more accurate in estimation and forecasting than standard parametric (symmetric or asymmetric ARCH) or non-parametric (Kernel-ARCH) econometric techniques.

Notes

That is the part of \(y_t\) decomposed on a particular frequency at a particular date.

Our method is iterative: we alternate the estimation of \(x_t\) given g and of g given \(x_t\). We initiate the iteration by the estimation of \(x_t\) for a basic constant volatility term g.

Our model is written in Eq. (1) in discrete time, with the time t in a subset of integers, as in most of the literature about ARCH models. However, some papers deal with the continuous limit of ARCH models (Nelson 1991) or even of GARCH models (Badescu et al. 2015; Corradi 2000). In such a continuous framework, our model would be the limit, when \(\tau \rightarrow 0\), of

$$\begin{aligned} \left\{ \begin{array}{lll} y_{t \tau } & = & x_{t \tau }+\varepsilon _{t \tau } \\ \varepsilon _{t \tau } & = & \sqrt{h_{t \tau }}\, z_{t \tau } \\ \sqrt{h_{t \tau }} & = & g(\varepsilon _{(t-1)\tau },\ldots ,\varepsilon _{(t-l)\tau }), \end{array}\right. \end{aligned}$$

for t still an integer. We thus write interchangeably a discrete sum, as in Eq. (3), which is consistent with the classical ARCH models, or a continuous integral, as in Eq. (4), which is consistent with the variational framework. In particular, when we write a derivative \(d{\mathcal {G}}(t)/dt\) in this continuous setting, as in Eq. (5), it must be interpreted, in the basic discrete framework, as \(({\mathcal {G}}(t)-{\mathcal {G}}(t-\tau ))/\tau \) with \(\tau =1\).

These lines of pseudocode only present the main architecture of the algorithm. They refer to functions with explicit names, which also exist in many programming languages under other names. For instance, in the R package “wavelets,” GetWaveletCoefficients is the modwt function and GetWaveletReconstruction is the imodwt function. Specifically, GetWaveletCoefficients creates a vector of wavelet coefficients, Median calculates a median, GetFilteredCoefficients applies a threshold filter to wavelet coefficients, GetWaveletReconstruction computes an inverse wavelet transform, and GetOrderingIndexes provides the permutation that sorts the coordinates of a vector in ascending order. The loops iterate until NumberIteration1 and NumberIteration2. A convergence criterion can be added so as to break the loop as soon as the likelihood of the model reaches a steady state.

The Euler-Lagrange equation is numerically solved in C++ while other parts of the paper are done using the R software. Notably, the wavelet part is done using the wavelets package.

An estimate of the realized volatility for the S&P 500, FTSE 100 and DAX is available in the Oxford-Man Institute realized library. The realized volatility is estimated using the kernel method. This estimate is a consistent estimate of the daily volatility (Barndorff-Nielsen et al. 2008).

In practical use, more iterations can provide a slightly higher accuracy in the estimation of the WV-ARCH model. However, by doing so, the drift incorporates iterated estimations of the news impact curve. Therefore, in order to make a fair comparison of all the models, we restrict ourselves to only one iteration so that the drift does not depend on the estimated non-parametric news impact curve. It can thus be used as a common drift for all the models.

More precisely, for any time t and for \(i\ge 1\), \({\mathcal {E}}_{i-1}(t)=(y(t-1)-x_{i-1}(t-1),\ldots ,y(t-l)-x_{i-1}(t-l))\). For the estimate \(x_i\), we use the threshold \(\varLambda _{i,j,k}\), which thus depends on the previous estimate \(x_{i-1}\). However, for \(i=0\), \(x_{i-1}\) is not defined and thus cannot be used in estimating \({\mathcal {E}}\). But since \(g_0\) is a constant function, \(\varLambda _{0,j,k}\) will be the same, whatever the choice made for \({\mathcal {E}}_{-1}\). As a consequence, \(\varLambda _{0,j,k}\) is not level-dependent.

More details about choosing \(\theta _i\) are provided in “Appendix A.1.2.”

\({\mathcal {G}}_{i+1}\) will be more clearly defined if we write its domain and codomain: \({\mathcal {G}}_{i+1}:\{0,\ldots ,T\}\rightarrow {\mathbb {R}}\), since it is obtained by the composition of \(\theta _i:\{0,\ldots ,T\}\rightarrow \{0,\ldots ,T\}\) with \({\mathcal {E}}_i:\{0,\ldots ,T\}\rightarrow {\mathbb {R}} ^l\) and \(g_{i+1}:{\mathbb {R}} ^l\rightarrow {\mathbb {R}}\).

References

Anatolyev S, Petukhov A (2016) Uncovering the skewness news impact curve. J Financ Econom 14(4):746–771

Aubert G, Aujol J-F (2008) A variational approach to removing multiplicative noise. SIAM J Appl Math 68(4):925–946

Badescu A, Elliott RJ, Ortega J-P (2015) Non-Gaussian GARCH option pricing models and their diffusion limits. Eur J Oper Res 247(3):820–830

Barndorff-Nielsen OE, Hansen PR, Lunde A, Shephard N (2008) Designing realized kernels to measure the ex post variation of equity prices in the presence of noise. Econometrica 76(6):1481–1536

Bollerslev T (1986) Generalized autoregressive conditional heteroskedasticity. J Econom 31(3):307–327

Bühlmann P, McNeil AJ (2002) An algorithm for nonparametric GARCH modelling. Comput Stat Data Anal 40(4):665–683

Cai TT, Brown LD (1998) Wavelet shrinkage for nonequispaced samples. Ann Stat 26(5):1783–1799

Caporin M, Costola M (2019) Asymmetry and leverage in GARCH models: a news impact curve perspective. Appl Econ 51(31):3345–3364

Chambolle A, Lions P-L (1997) Image recovery via total variation minimization and related problems. Numer Math 76(2):167–188

Chen WY, Gerlach RH (2019) Semiparametric GARCH via Bayesian model averaging. J Bus Econ Stat to appear

Cont R (2001) Empirical properties of asset returns: stylized facts and statistical issues. Taylor & Francis, Abingdon

Corradi V (2000) Reconsidering the continuous time limit of the GARCH(1, 1) process. J Econ 96(1):145–153

Deelstra G, Grasselli M, Koehl PF (2004) Optimal design of the guarantee for defined contribution funds. J Econ Dyn Control 28(11):2239–2260

Diebold FX, Mariano RS (2002) Comparing predictive accuracy. J Bus Econ Stat 20(1):134–144

Donoho D, Johnstone I (1994) Ideal spatial adaptation by wavelet shrinkage. Biometrika 81(3):425–455

Donoho D, Johnstone I (1995) Adapting to unknown smoothness via wavelet shrinkage. J Am Stat Assoc 90(432):1200–1244

Engle RF (1982) Autoregressive conditional heteroscedasticity with estimates of variance of United Kingdom inflation. Econometrica 50(4):987–1008

Engle RF, Ng VK (1993) Measuring and testing the impact of news on volatility. J Finance 48(5):1749–1778

Fan J, Yao Q (1998) Efficient estimation of conditional variance functions in stochastic regression. Biometrika 85(3):645–660

Franke J, Diagne M (2006) Estimating market risk with neural networks. Stat Decis 24(2):233–253

Fu Y, Zheng Z (2019) Volatility modeling and the asymmetric effect for China’s carbon trading pilot market. Phys A Stat Mech Appl to appear

Garcin M (2015) Empirical wavelet coefficients and denoising of chaotic data in the phase space. In: Skiadas C (ed) Handbook of applications of chaos theory. CRC/Taylor & Francis, Abingdon

Garcin M (2016) Estimation of time-dependent Hurst exponents with variational smoothing and application to forecasting foreign exchange rates. Phys A 483:462–479

Garcin M (2019) Fractal analysis of the multifractality of foreign exchange rates. Working paper

Garcin M, Guégan D (2014) Probability density of the empirical wavelet coefficients of a noisy chaos. Phys D 276:28–47

Garcin M, Guégan D (2016) Wavelet shrinkage of a noisy dynamical system with non-linear noise impact. Phys D 325:126–145

Giaquinta M, Hildebrandt S (2013) Calculus of variations II. Springer, Berlin

Glosten LR, Jagannathan R, Runkle D (1993) On the relationship between the expected value and the volatility of the nominal excess return on stocks. J Finance 48(5):1779–1801

Gouriéroux C, Monfort A (1992) Qualitative threshold ARCH models. J Econ 52(1):159–199

Hafner CM, Linton O (2010) Efficient estimation of a multivariate multiplicative volatility model. J Econ 159(1):55–73

Han H, Kristensen D (2014) Asymptotic theory for the QMLE in GARCH-X models with stationary and nonstationary covariates. J Bus Econ Stat 32(3):416–429

Han H, Kristensen D (2015) Semiparametric multiplicative GARCH-X model: adopting economic variables to explain volatility. Working Paper

Härdle W, Tsybakov A (1997) Local polynomial estimators of the volatility function in nonparametric autoregression. J Econom 81(1):223–242

Klaassen F (2002) Improving GARCH volatility forecasts with regime-switching GARCH. Advances in Markov-switching models. Springer, Berlin, pp 223–254

Lahmiri S (2015) Long memory in international financial markets trends and short movements during 2008 financial crisis based on variational mode decomposition and detrended fluctuation analysis. Phys A 437:130–138

Linton O, Mammen E (2005) Estimating semiparametric ARCH(\(\infty \)) models by kernel smoothing methods. Econometrica 73(3):771–836

Liu LY, Patton AJ, Sheppard K (2015) Does anything beat 5-minute RV? A comparison of realized measures across multiple asset classes. J Econom 187(1):293–311

Mallat S (2000) Une exploration des signaux en ondelettes. Éditions de l’École Polytechnique

Malliavin P, Thalmaier A (2006) Stochastic calculus of variations in mathematical finance. Springer, Berlin

Mandelbrot B (1963) The variation of certain speculative prices. J Bus 36(1):392–417

Nelson DB (1991) Conditional heteroskedasticity in asset returns: a new approach. Econometrica 59(2):347–370

Newey WK, West KD (1986) A simple, positive semi-definite, heteroskedasticity and autocorrelation consistent covariance matrix. Econometrica 55(3):703–708

Nigmatullin RR, Agarwal P (2019) Direct evaluation of the desired correlations: verification on real data. Phys A 534:121558

Pagan AR, Schwert GW (1990) Alternative models for conditional stock volatility. J Econom 45(1):267–290

Patton AJ (2011) Volatility forecast comparison using imperfect volatility proxies. J Econom 160(1):246–256

Patton AJ, Sheppard K (2009) Evaluating volatility and correlation forecasts. Handbook of financial time series. Springer, Heidelberg, pp 801–838

Poon S, Granger CW (2005) Practical issues in forecasting volatility. Financ Anal J 61(1):45–56

Rudin L, Osher S, Fatemi E (1992) Nonlinear total variation based noise removal algorithms. Phys D 60(1):259–268

Rudin L, Lions P-L, Osher S (2003) Multiplicative denoising and deblurring: theory and algorithms. Geometric level set methods in imaging, vision, and graphics. Springer, New York, pp 103–119

Stein C (1981) Estimation of the mean of a multivariate normal distribution. Ann Stat 9(6):1135–1151

Wang L, Feng C, Song Q, Yang L (2012) Efficient semiparametric GARCH modeling of financial volatility. Stat Sin 22:249–270

Weitzman ML (2009) Income, wealth, and the maximum principle. Harvard University Press, Cambridge

West KD (1996) Asymptotic inference about predictive ability. Econometrica 64(5):1067–1084

Xu K-L, Phillips PCB (2011) Tilted nonparametric estimation of volatility functions with empirical applications. J Bus Econ Stat 29(4):518–528

Zheng Z, Qiao Z, Takaishi T, Stanley HE, Li B (2014) Realized volatility and absolute return volatility: a comparison indicating market risk. PLoS ONE 9(7):e102940

Acknowledgements

This work was achieved through the Laboratory of Excellence on Financial Regulation (Labex ReFi) supported by PRES heSam under the reference ANR10LABX0095. It benefited from a French government support managed by the National Research Agency (ANR) within the project Investissements d’Avenir Paris Nouveaux Mondes (investments for the future Paris New Worlds) under the reference ANR11IDEX000602.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Human and animal rights

This article does not contain any studies with human participants or animals performed by any of the authors.

Additional information

Communicated by M. Squillante.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

We thank Christophe Boucher, Éric Gautier, Dominique Guégan, Florian Ielpo, Philippe de Peretti and Todd Prono for their valuable comments. This acknowledgement does not make them accountable for any remaining mistake.

Appendices

A Algorithm

For this section, we no longer use the econometric subscript notation: y(t) replaces \(y_t\). This gives greater clarity, since subscripts are used here for other purposes, such as indexing the iterations of the estimation. The parenthetical notation is also consistent with usage in functional analysis, where wavelets and variational problems come from. Note that, in this paper, we try to separate signal from noise using wavelets and variational calculus, but many other techniques are possible, depending on the nature of the signal to denoise. Among them, we can cite some techniques dedicated to a particular kind of signal, for example correlation (Nigmatullin and Agarwal 2019).

A.1 Overview of the algorithm

The estimation of \(x_t\) and g is based on an iterative algorithm, since the two estimations require distinct techniques. However, the same transformation of the data is used in each iteration. Therefore, it can be extracted from the iterative loop and executed only once. It must be considered as a preliminary step of the algorithm. This step relates to the wavelet approach for estimating \(x_t\). Besides, the estimation of g is based on a variational approach, which requires the computation of a line integral over ranked innovations. Since the innovations change after each estimate of \(x_t\), their ranking also changes at each iteration. It is thus not a preliminary step of the algorithm. However, we present this ranking technique separately so as not to overload the global presentation of the iteration with such a technical detail.

A.1.1 Preliminary step of the algorithm

The preliminary step of our estimation algorithm is devoted to the decomposition of the signal y in a wavelet basis. This basis \((\psi _{j,k})\) of functions is obtained by dilations and translations from a unique real mother wavelet, \(\varPsi \in {\mathcal {L}}^2({\mathbb {R}})\):

where \(j\in {\mathbb {Z}}\) is the scale parameter and \(k\in {\mathbb {Z}}\) is the translation parameter. As the observations are equispaced, we define the empirical wavelet coefficient \(\langle y,\psi _{j,k}\rangle \) of y, for the parameters j and k, by:

In fact, we decompose the signal in gross structure and details. Details are given by wavelet coefficients for a unique scale parameter j. In our examples, j is set to 4. The gross structure is given by scaling coefficients at the same scale j. They are given by:

where \(\phi \) is the scaling function related to the wavelet function. Further details on wavelets and their use to denoise time series can be found in Mallat (2000). More precisely, the rationale behind thresholding wavelet coefficients is the following. The decomposition of a smooth signal with wavelets is sparse, that is to say, many wavelet coefficients are equal to zero. When noise is added, the signal loses its smoothness and its wavelet decomposition is not sparse anymore: indeed, many small but nonzero wavelet coefficients appear. To get rid of this noise and recover a smooth signal, a well-known and efficient technique consists in shrinking these small coefficients towards zero. An illustration of soft and hard thresholds is presented in Fig. 3. For the applied part of the paper, we used a Daubechies wavelet with four vanishing moments.
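The two thresholding rules mentioned above can be sketched as follows. This is a minimal illustration in Python rather than the authors' implementation; the function names and the sample coefficients are hypothetical, and the threshold `lam` is taken as given.

```python
def soft_threshold(c, lam):
    """Shrink c towards zero by lam; coefficients with |c| <= lam become 0."""
    if c > lam:
        return c - lam
    if c < -lam:
        return c + lam
    return 0.0


def hard_threshold(c, lam):
    """Keep c unchanged when |c| > lam, otherwise set it to 0."""
    return c if abs(c) > lam else 0.0


# Hypothetical wavelet coefficients: two large ones (signal), three small ones (noise)
coeffs = [2.5, -0.3, 0.1, -1.8, 0.05]
lam = 0.5
soft = [soft_threshold(c, lam) for c in coeffs]
hard = [hard_threshold(c, lam) for c in coeffs]
```

Both rules set the small coefficients to zero; the soft rule additionally shrinks the surviving coefficients by `lam`, which keeps the filter continuous in `c`.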

A.1.2 Permutation of the innovations

This step of the algorithm relates to the variational approach and is repeated just before each iteration of the estimation of g. In the variational method, we will minimize an integral of a function in which g appears. When g is multidimensional, that is when \(l>1\), we can face an empirical multidimensional integral with an irregular grid.

Due to its simplicity, we choose a distortion of the grid (Cai and Brown 1998; Garcin 2015) and more precisely we use a line integral. We thus select a bijective function \(\theta :\{0,\ldots ,T\}\rightarrow \{0,\ldots ,T\}\). \(\theta \) links the new time variable t to the natural observation time \(\theta (t)\). It leads to the path \({\mathcal {E}}\circ \theta \) along which the integral of g is empirically calculated. The idea is to minimize the Euclidean distance between \({\mathcal {E}}(\theta (t))\) and \({\mathcal {E}}(\theta (t+1))\) for all \(t\in \{0,\ldots ,T-1\}\). For example, if \(l=1\), we choose \(\theta \) so that the innovations are sorted: \({\mathcal {E}}(\theta (0))\le {\mathcal {E}}(\theta (1))\le \cdots \le {\mathcal {E}}(\theta (T))\). For higher l, the choice of \(\theta \) may be related to the travelling salesman problem, for which an approximation algorithm may be used. Whatever the choice made for \(\theta \), there will be an impact on the estimate of g when \(l>1\). Indeed, in our variational problem, we aim to minimize the squared derivative of g over all the observations. But this derivative is a derivative in only one direction while using the line integral, instead of a derivative thought as a gradient. Therefore, when we choose a particular \(\theta \), we may incidentally favour the smoothness of g at each observation point in one direction and not necessarily in all the directions. However, this limitation does not appear in dimension \(l=1\).
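The choice of \(\theta \) can be sketched as follows, in Python and under stated assumptions. For \(l=1\) the path is simply the sorting permutation. For \(l>1\) the sketch uses a greedy nearest-neighbour rule, which is only one possible heuristic for the travelling salesman problem and is not claimed to be the one used in the paper. The function names and the starting point of the greedy path are hypothetical.

```python
import math


def sort_path(innovations):
    """Case l = 1: indexes that sort scalar innovations in ascending order."""
    return sorted(range(len(innovations)), key=lambda t: innovations[t])


def greedy_path(points):
    """Case l > 1: greedy nearest-neighbour ordering (a heuristic, not an optimal tour)."""
    remaining = set(range(len(points)))
    # Assumption: start from the lexicographically smallest point
    current = min(remaining, key=lambda t: points[t])
    path = [current]
    remaining.remove(current)
    while remaining:
        # Next point: closest in Euclidean distance, ties broken by index
        current = min(remaining,
                      key=lambda t: (math.dist(points[current], points[t]), t))
        path.append(current)
        remaining.remove(current)
    return path


theta_1d = sort_path([0.3, -1.2, 0.8, -0.1])
theta_2d = greedy_path([(0.0, 0.0), (5.0, 5.0), (0.1, 0.2), (5.1, 4.9)])
```

In both cases the returned list plays the role of \(\theta \): its element at position t is the natural observation time visited at step t of the path.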

A.2 The algorithm for estimating x and g

We achieve the estimation of x and g iteratively:

1. We begin by initializing the series of estimators: \(g_0=M/0.6745\), where M is the median of the absolute value of the wavelet coefficients of y at the finest scale, as is usually done in wavelet denoising techniques with a homogeneous noise variance (Donoho and Johnstone 1994). Indeed, M/0.6745 is a robust estimator of the standard deviation of a Gaussian noise.

2. We assume that we already have an estimate \(g_i\) of g, where \(i\in {\mathbb {N}}\). Then, estimating x matches the quite classical problem of estimating a variable linearly disrupted by an inhomogeneous Gaussian noise. We can achieve it using wavelet filtering, like SureShrink, for example. More precisely, we have decomposed the signal y in a basis of wavelet functions. The coefficients of this decomposition are a noisy version of the pure coefficients \(\langle x,\psi _{j,k}\rangle \). In order to get rid of this additive noise, we filter the coefficients and we build an estimate of x thanks to the inverse wavelet transform. Since the noise is Gaussian, we propose to use a soft-threshold filter. It means that the filtered wavelet coefficients are \(F_{i,j,k}(\langle y,\psi _{j,k}\rangle )\), where:

$$\begin{aligned}&F_{i,j,k}:c\in {\mathbb {R}}\mapsto (c-\varLambda _{i,j,k})\mathbf 1 _{c\ge \varLambda _{i,j,k}} \\&\quad +\, (c+\varLambda _{i,j,k})\mathbf 1 _{c\le -\varLambda _{i,j,k}}, \end{aligned}$$

for a level-dependent threshold \(\varLambda _{i,j,k}=\lambda _i\sqrt{\langle (g_i\circ {\mathcal {E}}_{i-1})^2,\psi _{j,k}^2\rangle }\) where \(\lambda _i\) is a parameter and \({\mathcal {E}}_{i-1}\) is the \((i-1)\)th estimate of \({\mathcal {E}}\) (footnote 9). Examples indeed show that a level-dependent threshold performs much better than a constant threshold (Garcin and Guégan 2016). The choice for \(\lambda _i\) may be arbitrary, but we prefer to optimize it, that is to choose the value of \(\lambda _i\) which minimizes an estimate of the reconstruction error. This is the aim of SureShrink (Stein 1981; Donoho and Johnstone 1995; Mallat 2000). The estimate of the reconstruction error is

$$\begin{aligned} \bar{{\mathcal {S}}}_i=\sum _{k}{{\mathcal {S}}_{i,j,k}(\langle y,\psi _{j,k}\rangle )}, \end{aligned}$$

where

$$\begin{aligned}&{\mathcal {S}}_{i,j,k}:c\in {\mathbb {R}}\mapsto \left\{ \begin{array}{ll} (\lambda _i^2+1)\langle (g_i\circ {\mathcal {E}}_{i-1})^2,\psi _{j,k}^2\rangle & \text {if } |c|\ge \lambda _i\sqrt{\langle (g_i\circ {\mathcal {E}}_{i-1})^2,\psi _{j,k}^2\rangle } \\ c^2-\langle (g_i\circ {\mathcal {E}}_{i-1})^2,\psi _{j,k}^2\rangle & \text {else,} \end{array}\right. \end{aligned}$$

because \(\langle (g_i\circ {\mathcal {E}}_{i-1})^2,\psi _{j,k}^2\rangle \) is the estimated variance of the empirical wavelet coefficient \(\langle y,\psi _{j,k}\rangle \) (Garcin and Guégan 2014). Conditionally on y, \(\bar{{\mathcal {S}}}_i\) is an unbiased estimate of the reconstruction error. Any basic optimization algorithm then yields the \(\lambda _i\) minimizing \(\bar{{\mathcal {S}}}_i\). Thus, \(\varLambda _{i,j,k}\) and \(F_{i,j,k}\) for all j and k are now defined. We can hence write the estimate \(x_i\) of the function x as:

$$\begin{aligned} x_i(t)&= \sum _{k}{\langle y,\phi _{j,k}\rangle \phi _{j,k}(t)}\\&\quad +\,\sum _{k}{F_{i,j,k}(\langle y,\psi _{j,k}\rangle )\psi _{j,k}(t)}, \end{aligned}$$

for each \(t\in \{0,\ldots ,T\}\).

3. We now use the ith estimate of x to estimate g. This is similar to estimating a signal disrupted by a multiplicative noise. We can then use a variational approach to estimate g. In the literature devoted to multiplicative noise, the case of a Gaussian variable is often excluded since the noisy signal is positive. However, in our case, the estimate of g, which stems from the estimate \(x_i\), is evaluated from the noisy signal \(y-x_i\), which is not expected to be positive at each t. The idea of the variational method is to find a function \(g_{i+1}\) which will be the solution of an optimization problem. This optimization problem consists, for each observation time, in maximizing the likelihood of \(y-x_i\) conditionally on \(g_{i+1}\) given that the noise is a Gaussian noise. In addition to that local criterion, we add a global constraint. This constraint is a penalty term which favours the smoothness of \(g_{i+1}\) along a given path \(\theta _i\), which is re-estimated at each iteration (footnote 10). Our method is partially inspired by the one proposed by Aubert and Aujol (2008) for removing Gamma multiplicative noise. It leads to the following equation for the estimate \(g_{i+1}\circ {\mathcal {E}}_i\) of \(g\circ {\mathcal {E}}\), where we introduce \({\mathcal {G}}_{i+1}\), which we define (footnote 11) by \({\mathcal {G}}_{i+1} = g_{i+1} \circ {\mathcal {E}}_i \circ \theta _i\):

$$\begin{aligned} \mu \frac{({\mathcal {G}}_{i+1})^2-(y\circ \theta _i-x_i\circ \theta _i)^2}{({\mathcal {G}}_{i+1})^3} - \frac{\mathrm{d}^2}{\mathrm{d}t^2} {\mathcal {G}}_{i+1}=0, \end{aligned} \tag{8}$$

where \(\mu >0\) is a parameter which tunes the trade-off between the smoothness of \(g_{i+1}\) and the accuracy of the model in the maximum-likelihood sense. More precisely, the smoothness of \(g_{i+1}\) increases when \(\mu \) decreases. Details about how this equation is obtained are given in “Appendix B.2.” Then, in order to solve this equation numerically, we use a dynamical version of it which is expected, as in Aubert and Aujol (2008), to lead to a steady state after some iterations of the series of estimators \(({\mathcal {G}}_{i+1,n})_n\) of \({\mathcal {G}}_{i+1}\):

$$\begin{aligned}&\frac{{\mathcal {G}}_{i+1,n+1}-{\mathcal {G}}_{i+1,n}}{\delta } = \frac{\mathrm{d}^2}{\mathrm{d}t^2} {\mathcal {G}}_{i+1,n} \\&\quad -\, \mu \frac{({\mathcal {G}}_{i+1,n})^2-(y\circ \theta _i-x_i\circ \theta _i)^2}{({\mathcal {G}}_{i+1,n})^3}, \end{aligned}$$

where \(\delta \) is a parameter controlling the speed at which \(({\mathcal {G}}_{i+1,n})_n\) evolves. More precisely, for each \(t\in \{0,\ldots ,T\}\), the series \(({\mathcal {G}}_{i+1,n}(t))_n\) is iteratively defined by:

$$\begin{aligned} \left\{ \begin{array}{l} {\mathcal {G}}_{i+1,0}(t) = \mathrm{Median}\{|y(s)-x_i(s)|\} /0.6745 \\ {\mathcal {G}}_{i+1,n+1}(t)= {\mathcal {G}}_{i+1,n}(t) +\delta \left[ {\mathcal {G}}_{i+1,n}(t+1)-2{\mathcal {G}}_{i+1,n}(t)\right. \\ \qquad \qquad \qquad \left. +{\mathcal {G}}_{i+1,n}(t-1) -\mu \frac{{\mathcal {G}}_{i+1,n}(t)^2-(y(\theta _i(t)) -x_i(\theta _i(t)))^2}{{\mathcal {G}}_{i+1,n}(t)^3}\right] . \end{array}\right. \end{aligned}$$

\({\mathcal {G}}_{i+1,n}\) is expected to converge towards \({\mathcal {G}}_{i+1}\) when n tends towards infinity. The convergence is sensitive to the choice of parameters. In particular, the higher \(\delta \), the faster the initial estimator \({\mathcal {G}}_{i+1,0}\) of \({\mathcal {G}}_{i+1}\) will be distorted. However, if \(\delta \) is too large, fine adjustments from \({\mathcal {G}}_{i+1,n}\) to \({\mathcal {G}}_{i+1,n+1}\) will often be excluded and the convergence towards a steady state will be compromised.
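The explicit scheme for \({\mathcal {G}}_{i+1,n}\) can be sketched in a few lines of Python. This is an illustrative sketch only, not the authors' C++ solver. It assumes that the residuals \(y-x_i\) are already ordered along the path \(\theta _i\), and it replicates the end values at the two boundaries, a choice the text does not specify. The function name and the toy data are hypothetical.

```python
import statistics


def estimate_G(residuals, mu, delta, n_iter):
    """Iterate the explicit scheme on residuals ordered along the path theta_i."""
    T = len(residuals)
    # Initial condition: constant robust scale, Median|y - x_i| / 0.6745
    g0 = statistics.median(abs(r) for r in residuals) / 0.6745
    G = [g0] * T
    for _ in range(n_iter):
        new_G = []
        for t in range(T):
            # Assumption: end values are replicated at the boundaries
            left = G[t - 1] if t > 0 else G[t]
            right = G[t + 1] if t < T - 1 else G[t]
            second_diff = right - 2.0 * G[t] + left
            fidelity = mu * (G[t] ** 2 - residuals[t] ** 2) / G[t] ** 3
            new_G.append(G[t] + delta * (second_diff - fidelity))
        G = new_G
    return G


# Toy check: residuals of constant absolute value 1
residuals = [1.0, -1.0, 1.0, -1.0, 1.0]
G = estimate_G(residuals, mu=1.0, delta=0.1, n_iter=2000)
```

With residuals of constant absolute value, the second difference vanishes and the steady state of Eq. (8) is that absolute value, so `G` should settle close to 1 everywhere. The sketch has no safeguard against \({\mathcal {G}}\) approaching zero, which can happen when \(\delta \) is too large.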

B Improving the algorithm

Some refinements, concerning the initial condition \({\mathcal {G}}_{i+1,0}\) given i or the number of iterations N used to lead to the estimate \({\mathcal {G}}_{i+1}\), can be made in order to improve the algorithm, even though the standard conditions provided in the previous paragraph generally lead to satisfactory results.

The main motivation for modifying these conditions is the fact that the choice of \(\delta \) has an impact on the way the series of estimators \(({\mathcal {G}}_{i+1,n}(t))_n\) evolves and finally on the accuracy of the estimated news impact curve. The choice of \(\delta \) or of N can hence be optimized so that the innovations \((z_t)_t\) better fit a unit Gaussian distribution.

Besides, some specific financial conditions may require a particular treatment. For example, when different volatility regimes appear, one may prefer to initiate the series \(({\mathcal {G}}_{i+1,n}(t))_n\) with the constant function \({\mathcal {G}}_{i+1,0}\) estimated on some quantile of the residuals rather than on their median.
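A quantile-based initial condition could look like the following Python sketch. The normalization is an assumption on our side: the q-quantile of the absolute residuals is divided by the q-quantile of the absolute value of a unit Gaussian variable, so that q = 0.5 recovers the median rule with the constant 0.6745. The text does not say how the quantile should be rescaled, and the function name and data are hypothetical.

```python
import math
from statistics import NormalDist


def initial_scale(residuals, q=0.5):
    """Constant initial estimate of G from the q-quantile of |residuals|."""
    abs_res = sorted(abs(r) for r in residuals)
    # Empirical quantile with linear interpolation between order statistics
    pos = q * (len(abs_res) - 1)
    lo = int(math.floor(pos))
    hi = min(lo + 1, len(abs_res) - 1)
    frac = pos - lo
    quantile = abs_res[lo] * (1.0 - frac) + abs_res[hi] * frac
    # Assumption: Gaussian normalization, q-quantile of |Z| is Phi^{-1}((1 + q) / 2)
    return quantile / NormalDist().inv_cdf((1.0 + q) / 2.0)


res = [0.2, -0.5, 1.1, -0.3, 0.7, -2.4, 0.1]
scale_median = initial_scale(res)             # q = 0.5: median-based rule
scale_low = initial_scale(res, q=0.25)        # lower quantile, less sensitive to a turbulent regime
```

A lower quantile gives less weight to the largest residuals, which may be preferable when a high-volatility regime covers a large part of the sample.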

B.1 Proof of Proposition 1

We consider the estimation problem of g, from the model:

Let \(\delta _{z}\) be the probability density function of a unit Gaussian random variable:

Since g is assumed to be positive, we can apply the standard relation (Aubert and Aujol 2008):

Thus, in a time \(\theta (t)\), we get:

B.2 Justification of Eq. (8)

The maximum-likelihood problem consists in maximizing the right-hand side of Eq. (9). It is therefore equivalent to minimizing the opposite of the logarithm of the left-hand side of the same equation, that is excluding constant terms, for each time t:

Summing that function over all the observations by the path \(\theta \) leads to the following continuous form of the minimization problem:

where \({\mathcal {G}}=g\circ {\mathcal {E}}\circ \theta \). Moreover, we impose a condition of smoothness for g, as an additional objective of minimizing its quadratic variations over the path \(\theta \). Therefore, we now aim to minimize, for each time t:

where

where \(\mu >0\) is a given parameter. \({\mathcal {G}}\) is therefore the solution of the corresponding Euler–Lagrange equation:
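As a consistency check with Eq. (8), the derivation can be worked out under an assumed normalization of the objective. The symbols J and L and the factor 1/2 in front of the smoothness term are our own choices, made so that \(\mu \) plays the same role as in Eq. (8). Up to constants, the Gaussian negative log-likelihood of a residual \(y\circ \theta -x\circ \theta \) with standard deviation \({\mathcal {G}}\) is \(\log {\mathcal {G}}+(y\circ \theta -x\circ \theta )^2/(2{\mathcal {G}}^2)\), which suggests:

$$\begin{aligned} J({\mathcal {G}})&= \int _0^T L\left( t,{\mathcal {G}}(t),{\mathcal {G}}'(t)\right) \mathrm{d}t, \\ L&= \mu \left[ \log {\mathcal {G}} + \frac{(y\circ \theta -x\circ \theta )^2}{2{\mathcal {G}}^2}\right] + \frac{1}{2}\left( {\mathcal {G}}'\right) ^2. \end{aligned}$$

The Euler–Lagrange equation \(\partial L/\partial {\mathcal {G}} - \frac{\mathrm{d}}{\mathrm{d}t}\,\partial L/\partial {\mathcal {G}}' = 0\) then gives:

$$\begin{aligned} \frac{\partial L}{\partial {\mathcal {G}}}&= \mu \left[ \frac{1}{{\mathcal {G}}} - \frac{(y\circ \theta -x\circ \theta )^2}{{\mathcal {G}}^3}\right] = \mu \frac{{\mathcal {G}}^2-(y\circ \theta -x\circ \theta )^2}{{\mathcal {G}}^3}, \qquad \frac{\mathrm{d}}{\mathrm{d}t}\frac{\partial L}{\partial {\mathcal {G}}'} = \frac{\mathrm{d}^2}{\mathrm{d}t^2}{\mathcal {G}}, \\&\mu \frac{{\mathcal {G}}^2-(y\circ \theta -x\circ \theta )^2}{{\mathcal {G}}^3} - \frac{\mathrm{d}^2}{\mathrm{d}t^2}{\mathcal {G}} = 0, \end{aligned}$$

which has the same form as Eq. (8) once the iteration indices are restored.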

C Statistical tests

Student t test

We consider the following vector of random variables: \((x_1,\ldots ,x_n)\) of mean \(\mu \). To test whether \(\mu \) equals \(\mu _0\), we implement the following Student t test:

In this article, we set \(\mu _0=0\). The test statistic follows a Student t distribution with \(n-1\) degrees of freedom and it is defined as:

$$\begin{aligned} t=\frac{{\overline{x}}-\mu _0}{s/\sqrt{n}}, \end{aligned}$$

where \({\overline{x}} = \frac{1}{n}\sum _{i=1}^{n}x_i \) and \(s = \sqrt{\frac{1}{n-1}\sum _{i=1}^{n}(x_i-{\overline{x}})^{2}}\).
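For illustration, the statistic can be computed as in the Python sketch below, which assumes the standard one-sample form \(t=({\overline{x}}-\mu _0)/(s/\sqrt{n})\) with the definitions of \({\overline{x}}\) and s given above. The function name and the sample are hypothetical.

```python
import math


def student_t_statistic(x, mu0=0.0):
    """One-sample Student t statistic for H0: mean equals mu0."""
    n = len(x)
    mean = sum(x) / n
    # Sample standard deviation with the n - 1 denominator
    s = math.sqrt(sum((xi - mean) ** 2 for xi in x) / (n - 1))
    return (mean - mu0) / (s / math.sqrt(n))


t_stat = student_t_statistic([1.0, 2.0, 3.0, 4.0, 5.0])
```

The value is then compared with the quantiles of a Student t distribution with \(n-1\) degrees of freedom.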

Fisher test (F test)

We consider the following vector of random variables: \((x_1,\ldots ,x_n)\) of mean \(\mu \). To test whether the array variance equals \(\sigma _0^2\), we implement the following Fisher test:

In this article, we set \(\sigma _0^2=1\).

The test statistic follows a Fisher distribution, and it is defined as:

In the case of variance equality, t has an F-distribution with \(n-1\) degrees of freedom.

D’Agostino test

We consider the following vector of random variables: \((x_1,\ldots ,x_n)\) of mean 0. To test whether the array skewness equals 0, we implement the following D’Agostino test:

The test statistic is defined as follows:

where \(s.e.= \sqrt{\frac{6n(n-1)}{(n-2)(n+1)(n+3)} }\).

Notably, when the data are normally distributed, t is also normally distributed.

Anscombe–Glynn test

We consider the following vector of random variables: \((x_1,\ldots ,x_n)\) of mean 0. To test whether the array kurtosis minus 3 equals 0, we implement the following Anscombe–Glynn test:

The test statistic is given by:

with:

- \(c_1= 6+ \frac{8}{c_2}\left( \frac{2}{c_2} + \sqrt{1+\frac{4}{c_2^2} } \right) ,\)
- \(c_2= \frac{6\left( n^2-5n+2 \right) }{(n+7)(n+9)} \left( \frac{6(n+3)(n+5)}{n(n-2)(n-3)} \right) ^{1/2},\)
- \(c_3= \left( \kappa -3 \frac{n-1}{n+1}\right) / \left( \frac{24n(n-2)(n-3)}{(n+1)^2 (n+3)(n+5)} \right) ^{1/2},\) where \(\kappa \) is the sample kurtosis.

This test statistic is approximately normal.
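The following Python sketch computes the statistic in the usual Anscombe–Glynn formulation, which is an assumption on our side since the displayed formula is not reproduced above. It uses the standard expressions for the mean, variance and third standardized moment of the sample kurtosis under normality, and it centres the data on the sample mean. The function name and the test samples are hypothetical.

```python
import math


def anscombe_glynn_statistic(x):
    """Approximately N(0, 1) statistic for H0: kurtosis equals 3 (requires n >= 5)."""
    n = len(x)
    mean = sum(x) / n
    m2 = sum((v - mean) ** 2 for v in x) / n
    m4 = sum((v - mean) ** 4 for v in x) / n
    kappa = m4 / m2 ** 2  # sample kurtosis
    c2 = (6.0 * (n * n - 5 * n + 2) / ((n + 7) * (n + 9))
          * math.sqrt(6.0 * (n + 3) * (n + 5) / (n * (n - 2) * (n - 3))))
    c1 = 6.0 + (8.0 / c2) * (2.0 / c2 + math.sqrt(1.0 + 4.0 / c2 ** 2))
    # Standardized kurtosis: (kappa - mean under H0) / standard deviation under H0
    c3 = (kappa - 3.0 * (n - 1) / (n + 1)) / math.sqrt(
        24.0 * n * (n - 2) * (n - 3) / ((n + 1) ** 2 * (n + 3) * (n + 5)))
    ratio = (1.0 - 2.0 / c1) / (1.0 + c3 * math.sqrt(2.0 / (c1 - 4.0)))
    # Real cube root, valid for negative ratios as well
    cube_root = math.copysign(abs(ratio) ** (1.0 / 3.0), ratio)
    return (1.0 - 2.0 / (9.0 * c1) - cube_root) / math.sqrt(2.0 / (9.0 * c1))


flat_tails = anscombe_glynn_statistic([float(v) for v in range(1, 21)])
heavy_tails = anscombe_glynn_statistic([0.0] * 18 + [10.0, -10.0])
```

A sample with thinner tails than a Gaussian (here an evenly spaced grid) gives a negative statistic, while a sample with a few extreme values gives a positive one.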

Garcin, M., Goulet, C. Non-parametric news impact curve: a variational approach. Soft Comput 24, 13797–13812 (2020). https://doi.org/10.1007/s00500-019-04607-x

DOI: https://doi.org/10.1007/s00500-019-04607-x