Abstract

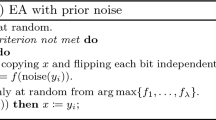

In many real-world optimization problems, the evaluation of the objective function is subject to noise, so the exact objective value cannot be obtained. Evolutionary algorithms (EAs), a type of general-purpose randomized optimization algorithm, have been shown to solve noisy optimization problems well. However, previous theoretical analyses of EAs focused mainly on noise-free optimization, which leaves the theoretical understanding of the noisy case largely insufficient. Moreover, the few existing theoretical studies under noise often considered the one-bit noise model, which flips a randomly chosen bit of a solution before evaluation, whereas in many realistic applications several bits of a solution can be changed simultaneously. In this paper, we study a natural extension of one-bit noise, the bit-wise noise model, which independently flips each bit of a solution with some probability. We analyze, for the first time, the running time of the (\(1+1\))-EA solving OneMax and LeadingOnes under bit-wise noise, and derive the ranges of the noise level that lead to polynomial and to super-polynomial running time bounds. The analysis of LeadingOnes under bit-wise noise can be easily transferred to one-bit noise, and improves the previously known results. Since our analysis shows that the (\(1+1\))-EA can be efficient only under low noise levels, we also study whether the sampling strategy can bring robustness to noise. We prove that using sampling can significantly increase the largest noise level that still allows a polynomial running time, that is, sampling is robust to noise.
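The abstract describes the noise models, the (\(1+1\))-EA and the sampling strategy only in words. The Python sketch below illustrates them under stated assumptions; it is not the authors' code. The helper names and the parameters `q` (per-bit flip probability of bit-wise noise), `p` (probability that one-bit noise is applied at all) and `m` (sample size) are hypothetical. The sketch also assumes that parent and offspring are both re-evaluated in every iteration, that the true fitness is used only to detect when the optimum has been reached, and that running time is counted in noisy fitness evaluations.

```python
import random
from typing import Callable, List, Optional

Bits = List[int]
Noise = Callable[[Bits, random.Random], Bits]  # hypothetical noise-model signature


def onemax(x: Bits) -> int:
    """OneMax: the number of 1-bits in x."""
    return sum(x)


def leading_ones(x: Bits) -> int:
    """LeadingOnes: the number of consecutive 1-bits at the start of x."""
    count = 0
    for bit in x:
        if bit != 1:
            break
        count += 1
    return count


def bitwise_noise(x: Bits, q: float, rng: random.Random) -> Bits:
    """Bit-wise noise: flip every bit independently with probability q."""
    return [1 - b if rng.random() < q else b for b in x]


def one_bit_noise(x: Bits, p: float, rng: random.Random) -> Bits:
    """One-bit noise (assumed form): with probability p, flip one uniformly chosen bit."""
    y = list(x)
    if rng.random() < p:
        i = rng.randrange(len(y))
        y[i] = 1 - y[i]
    return y


def sampled_fitness(f: Callable[[Bits], int], x: Bits, noise: Noise,
                    m: int, rng: random.Random) -> float:
    """Sampling: average m independent noisy evaluations (m = 1 means no sampling)."""
    return sum(f(noise(x, rng)) for _ in range(m)) / m


def one_plus_one_ea(f: Callable[[Bits], int], n: int, noise: Noise, m: int = 1,
                    max_iters: int = 10**6, seed: int = 0) -> Optional[int]:
    """Run the (1+1)-EA on a function whose optimum value is n.

    Returns the number of noisy evaluations used before the true optimum was
    first reached, or None if it was not reached within max_iters iterations.
    """
    rng = random.Random(seed)
    x = [rng.randint(0, 1) for _ in range(n)]
    evals = 0
    for _ in range(max_iters):
        if f(x) == n:  # true fitness, used only to detect the optimum
            return evals
        # Standard bit mutation: flip each bit independently with probability 1/n.
        y = [1 - b if rng.random() < 1 / n else b for b in x]
        # Offspring and parent are both (re-)evaluated under noise each iteration.
        fy = sampled_fitness(f, y, noise, m, rng)
        fx = sampled_fitness(f, x, noise, m, rng)
        evals += 2 * m
        if fy >= fx:  # ties are accepted, as in the usual (1+1)-EA
            x = y
    return evals if f(x) == n else None
```

Passing the noise model as a function lets the same loop run under bit-wise noise, one-bit noise or no noise, for example `noise=lambda x, rng: bitwise_noise(x, 0.05, rng)`. With `m=1` the loop reduces to the plain (\(1+1\))-EA without sampling. Larger `m` reduces the variance of each fitness estimate, but each iteration then costs `2 * m` evaluations.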

References

Aizawa, A.N., Wah, B.W.: Scheduling of genetic algorithms in a noisy environment. Evol. Comput. 2(2), 97–122 (1994)

Akimoto, Y., Astete-Morales, S., Teytaud, O.: Analysis of runtime of optimization algorithms for noisy functions over discrete codomains. Theoret. Comput. Sci. 605, 42–50 (2015)

Arnold, D.V., Beyer, H.G.: A general noise model and its effects on evolution strategy performance. IEEE Trans. Evol. Comput. 10(4), 380–391 (2006)

Auger, A., Doerr, B.: Theory of Randomized Search Heuristics: Foundations and Recent Developments. World Scientific, Singapore (2011)

Bäck, T.: Evolutionary Algorithms in Theory and Practice: Evolution Strategies, Evolutionary Programming, Genetic Algorithms. Oxford University Press, Oxford (1996)

Branke, J., Schmidt, C.: Selection in the presence of noise. In: Proceedings of the 5th ACM Conference on Genetic and Evolutionary Computation (GECCO’03), pp. 766–777. Chicago, IL (2003)

Chang, Y., Chen, S.: A new query reweighting method for document retrieval based on genetic algorithms. IEEE Trans. Evol. Comput. 10(5), 617–622 (2006)

Corus, D., Dang, D.C., Eremeev, A.V., Lehre, P.K.: Level-based analysis of genetic algorithms and other search processes. In: Proceedings of the 13th International Conference on Parallel Problem Solving from Nature (PPSN’14), pp. 912–921. Ljubljana, Slovenia (2014)

Dang, D.C., Lehre, P.K.: Efficient optimisation of noisy fitness functions with population-based evolutionary algorithms. In: Proceedings of the 13th ACM Conference on Foundations of Genetic Algorithms (FOGA’15), pp. 62–68. Aberystwyth, UK (2015)

Doerr, B., Goldberg, L.A.: Adaptive drift analysis. Algorithmica 65(1), 224–250 (2013)

Doerr, B., Hota, A., Kötzing, T.: Ants easily solve stochastic shortest path problems. In: Proceedings of the 14th ACM Conference on Genetic and Evolutionary Computation (GECCO’12), pp. 17–24. Philadelphia, PA (2012)

Doerr, B., Johannsen, D., Winzen, C.: Multiplicative drift analysis. Algorithmica 64(4), 673–697 (2012)

Droste, S.: Analysis of the (1 + 1) EA for a noisy OneMax. In: Proceedings of the 6th ACM Conference on Genetic and Evolutionary Computation (GECCO’04), pp. 1088–1099. Seattle, WA (2004)

Droste, S., Jansen, T., Wegener, I.: On the analysis of the (1 + 1) evolutionary algorithm. Theoret. Comput. Sci. 276(1–2), 51–81 (2002)

Feldmann, M., Kötzing, T.: Optimizing expected path lengths with ant colony optimization using fitness proportional update. In: Proceedings of the 12th ACM Conference on Foundations of Genetic Algorithms (FOGA’13), pp. 65–74. Adelaide, Australia (2013)

Friedrich, T., Kötzing, T., Krejca, M., Sutton, A.: Robustness of ant colony optimization to noise. Evol. Comput. 24(2), 237–254 (2016)

Friedrich, T., Kötzing, T., Krejca, M., Sutton, A.: The compact genetic algorithm is efficient under extreme Gaussian noise. IEEE Trans. Evol. Comput. 21(3), 477–490 (2017)

Friedrich, T., Kötzing, T., Quinzan, F., Sutton, A.: Resampling vs recombination: A statistical run time estimation. In: Proceedings of the 14th ACM Conference on Foundations of Genetic Algorithms (FOGA’17), pp. 25–35. Copenhagen, Denmark (2017)

Gießen, C., Kötzing, T.: Robustness of populations in stochastic environments. Algorithmica 75(3), 462–489 (2016)

He, J., Yao, X.: Drift analysis and average time complexity of evolutionary algorithms. Artif. Intell. 127(1), 57–85 (2001)

Jin, Y., Branke, J.: Evolutionary optimization in uncertain environments—a survey. IEEE Trans. Evol. Comput. 9(3), 303–317 (2005)

Ma, P., Chan, K., Yao, X., Chiu, D.: An evolutionary clustering algorithm for gene expression microarray data analysis. IEEE Trans. Evol. Comput. 10(3), 296–314 (2006)

Neumann, F., Witt, C.: Bioinspired Computation in Combinatorial Optimization: Algorithms and Their Computational Complexity. Springer, Berlin (2010)

Oliveto, P., Witt, C.: Simplified drift analysis for proving lower bounds in evolutionary computation. Algorithmica 59(3), 369–386 (2011)

Oliveto, P., Witt, C.: Erratum: Simplified drift analysis for proving lower bounds in evolutionary computation. CoRR abs/1211.7184 (2012)

Prügel-Bennett, A., Rowe, J., Shapiro, J.: Run-time analysis of population-based evolutionary algorithm in noisy environments. In: Proceedings of the 13th ACM Conference on Foundations of Genetic Algorithms (FOGA’15), pp. 69–75. Aberystwyth, UK (2015)

Qian, C., Bian, C., Jiang, W., Tang, K.: Running time analysis of the (1+1)-EA for OneMax and LeadingOnes under bit-wise noise. In: Proceedings of the 19th ACM Conference on Genetic and Evolutionary Computation (GECCO’17), pp. 1399–1406. Berlin, Germany (2017)

Qian, C., Yu, Y., Tang, K., Jin, Y., Yao, X., Zhou, Z.H.: On the effectiveness of sampling for evolutionary optimization in noisy environments. Evol. Comput. 26(2), 237–267 (2018)

Qian, C., Yu, Y., Zhou, Z.H.: Analyzing evolutionary optimization in noisy environments. Evol. Comput. 26(1), 1–41 (2018)

Rowe, J.E., Sudholt, D.: The choice of the offspring population size in the (1,\(\lambda \)) evolutionary algorithm. Theoret. Comput. Sci. 545, 20–38 (2014)

Shevtsova, I.G.: Sharpening of the upper bound of the absolute constant in the Berry–Esseen inequality. Theory Probab. Appl. 51(3), 549–553 (2007)

Sudholt, D.: A new method for lower bounds on the running time of evolutionary algorithms. IEEE Trans. Evol. Comput. 17(3), 418–435 (2013)

Sudholt, D., Thyssen, C.: A simple ant colony optimizer for stochastic shortest path problems. Algorithmica 64(4), 643–672 (2012)

Yu, Y., Qian, C., Zhou, Z.H.: Switch analysis for running time analysis of evolutionary algorithms. IEEE Trans. Evol. Comput. 19(6), 777–792 (2015)

Acknowledgements

We want to thank the reviewers for their valuable comments. This work was supported by the NSFC (61603367, 61672478), the YESS (2016QNRC001), the Science and Technology Innovation Committee Foundation of Shenzhen (ZDSYS201703031748284), and the Royal Society Newton Advanced Fellowship (NA150123).

Additional information

A preliminary version of this paper appeared at GECCO’17 [27].

About this article

Cite this article

Qian, C., Bian, C., Jiang, W. et al. Running Time Analysis of the (\(1+1\))-EA for OneMax and LeadingOnes Under Bit-Wise Noise. Algorithmica 81, 749–795 (2019). https://doi.org/10.1007/s00453-018-0488-4

DOI: https://doi.org/10.1007/s00453-018-0488-4