Abstract

We prove a conjecture raised by the work of Diaconis and Shahshahani (Z Wahrscheinlichkeitstheorie Verwandte Geb 57(2):159–179, 1981) about the mixing time of random walks on the permutation group induced by a given conjugacy class. To do this we exploit a connection with coalescence and fragmentation processes and control the Kantorovich distance by using a variant of a coupling due to Oded Schramm as well as contractivity of the distance. Recasting our proof in the language of Ricci curvature, our proof establishes the occurrence of a phase transition, which takes the following form in the case of random transpositions: at time cn / 2, the curvature is asymptotically zero for \(c\le 1\) and is strictly positive for \(c>1\).

Similar content being viewed by others

1 Introduction

1.1 Main results

Let \({\mathcal {S}}_n\) denote the multiplicative group of permutations of \(\{1,\dots ,n\}\). Let \(\Gamma \subset {\mathcal {S}}_n\) be a fixed conjugacy class in \({\mathcal {S}}_n\), i.e., \(\Gamma = \{g \gamma g^{-1} : g \in {\mathcal {S}}_n\}\) for some fixed permutation \(\gamma \in {\mathcal {S}}_n\). Alternatively, \(\Gamma \) is the set of permutation in \({\mathcal {S}}_n\) having the same cycle structure as \(\gamma \). Let \(X^\sigma =(X_0, X_1, \ldots )\) be discrete-time random walk on \({\mathcal {S}}_n\) induced by \(\Gamma \), started in the permutation \(\sigma \in {\mathcal {S}}_n\), and let \(Y^\sigma \) be the associated continuous time random walk. These are the processes defined by

where \(\gamma _1,\gamma _2,\dots \) are i.i.d. random variables which are distributed uniformly in \(\Gamma \); and \((N_t, t \ge 0)\) is an independent Poisson process with rate 1. Then Y is a Markov chain which converges to an invariant measure \(\mu \) as \(t \rightarrow \infty \). If \(\Gamma \subset {\mathcal {A}}_n\) (where \({\mathcal {A}}_n\) denotes the alternating group) then \(\mu \) is uniformly distributed on \({\mathcal {A}}_n\) and otherwise \(\mu \) is uniformly distributed on \({\mathcal {S}}_n\). The simplest and most well known example of a conjugacy class is the set T of all transpositions, or more generally of all cyclic permutations of length \(k\ge 2\). This set will play an important role in the rest of the paper. Note that \(\Gamma \) depends on n but we do not indicate this dependence in our notation.

The main goal of this paper is to study the cut-off phenomenon for the random walk X. More precisely, recall that the total variation distance \(\Vert X-Y\Vert _{TV}\) between two random variables X, Y taking values in a set S is given by

For \(0< \delta <1\), the mixing time \({{\mathrm{\textit{t}_{mix}}}}(\delta )\) is by definition given by

where

and \(\mu \) is the invariant measure defined above.

In the case where \(\Gamma = T\) is the set of transpositions, a famous result of Diaconis and Shahshahani [10] is that the cut-off phenomenon takes place at time \((1/2) n \log n\) asymptotically as \(n\rightarrow \infty \). That is, \({{\mathrm{\textit{t}_{mix}}}}(\delta )\) is asymptotic to \((1/2) n \log n\) for any fixed value of \(0< \delta <1\). It has long been conjectured that for a general conjugacy class such that \(|\Gamma | = o(n)\) (where here and in the rest of the paper, \(|\Gamma |\) denotes the number of non fixed points of any permutation \(\gamma \in \Gamma \)), a similar result should hold at a time \((1/|\Gamma |) n \log n\). This has been verified for k-cycles with a fixed \(k\ge 2\) by Berestycki et al. [6]. This is a problem with a substantial history which will be detailed below. The primary purpose of this paper is to verify this conjecture. Hence our main result is as follows.

Theorem 1.1

Let \(\Gamma \subset {\mathcal {S}}_n\) be a conjugacy class and suppose that \(|\Gamma |=o(n)\). Define

Then for any \(\epsilon >0\),

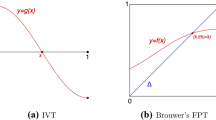

The first limit of (5) is proved in Appendix A. The rest of the paper focuses on the second limit. Our main tool for this result is the notion of discrete Ricci curvature as introduced by Ollivier [18], for which we obtain results of independent interest. We briefly discuss this notion here; however we point out that this turns out to be equivalent to the more well-known path coupling method and transportation metric introduced by Bubley and Dyer [8] and Jerrum [14] (see for instance Chapter 14 of the book [16] for an overview). However we will cast our results in the language of Ricci curvature because we find it more intuitive. Recall first that the definition of the \(L^1\)-Kantorovich distance (sometimes also called Wasserstein or transportation metric) between two random variables X, Y taking values in a metric space (S, d) is given by

where the infimum is taken over all couplings \(({\hat{X}},{\hat{Y}})\) which are distributed marginally as X and Y respectively. Ollivier’s definition of Ricci curvature of a Markov chain \((X_t, t \ge 0)\) on a metric space (S, d) is as follows:

Definition 1.1

Let \(t>0\). The curvature between two points \(x, x'\in S\) with \(x \ne x'\) is given by

where \(X_t^x\) and \(X_t^{x'}\) denote Markov chains started from x and \(x'\) respectively. The curvature of X is by definition equal to

In the terminology of Ollivier [18], this is in fact the curvature of the discrete-time random walk whose transition kernel is given by \(m_x(\cdot ) = \mathbb {P}(X_t = \cdot | X_0 = x)\). We refer the reader to [18] for an account of the elegant theory which can be developed using this notion of curvature, and point out that a number of classical properties of curvature generalise to this discrete setup.

For our results it will turn out to be convenient to view the symmetric group as a metric space equipped with the metric d which is the word metric induced by the set T of transpositions (we will do so even when the random walk is not induced by T but by a general conjugacy class \(\Gamma \)). That is, the distance \(d(\sigma , \sigma ')\) between \(\sigma , \sigma ' \in {\mathcal {S}}_n\) is the minimal number of transpositions one must apply to get from one element to the other (one can check that this number is independent of whether right-multiplications or left-multiplications are used).

For simplicity we focus in this introduction on the case where the random walk is induced by the set of transpositions T. (A more general result will be stated later on the paper). For \(c>0\) and \(\sigma \ne \sigma '\), let

and define \(\kappa _c(\sigma ,\sigma )=1\). That is, \(\kappa _c(\sigma , \sigma ') = \kappa _{\lfloor cn/2\rfloor }(\sigma , \sigma ')\) with our notation from (7). In particular, \(\kappa _c\) depends on n but this dependency does not appear explicitly in the notation. It is not hard to see that \(\kappa _c(\sigma , \sigma ') \ge 0\) (apply the same transpositions to both walks \(X^\sigma \) and \(X^{\sigma '}\)). For parity reasons it is obvious that \(\kappa _c(\sigma , \sigma ')=0\) if \(\sigma \) and \(\sigma '\) do not have the same signature. Thus we only consider the curvature between elements of even distance. For \(c>0\) define

where the infimum is taken over all \(\sigma ,\sigma '\in {\mathcal {S}}_n\) such that \(d(\sigma ,\sigma ')\) is even. Our main result states that \(\kappa _c\) experiences a phase transition at \(c=1\). More precisely, the curvature \(\kappa _c\) is asymptotically zero for \(c \le 1\) but for \(c>1\) the curvature is strictly positive asymptotically. In order to state our result, we introduce the quantity \(\theta (c)\), which is the largest solution in [0, 1] to the equation

It is easy to see that \(\theta (c) = 0\) for \(c\le 1\) and \(\theta (c) >0\) for \(c>1\). In fact, \(\theta (c)\) is nothing else but the survival probability of a Galton–Watson tree with Poisson offspring distribution with mean c.

Theorem 1.2

For any \(c>0\), we have:

In particular, \(\lim _{n \rightarrow \infty } \kappa _c = 0\) if and only if \(c \le 1\), while \(\liminf _{n \rightarrow \infty } \kappa _c >0\) otherwise.

A more general version of this theorem will be presented later on, which gives results for the curvature of a random walk induced by a general conjugacy class \(\Gamma \). This will be stated as Theorem 2.3.

We believe that the upper bound is the sharp one here, and thus make the following conjecture.

Conjecture 1.3

For \(c>0\),

Of course the conjecture is already established for \(c\le 1\) and so is only interesting for \(c>1\).

1.2 Relation to previous works on the geometry of random transpositions

The transition described by Theorem 1.2 says that the discrete Ricci curvature increases abruptly (asymptotically) from zero to a positive quantity as c increases past the critical value \(c=1\), and so as we consider longer portions of the random walk. It is related to a result proved by the first author in [2]. There it was shown that the triangle formed by the identity and two independent samples \(X_t\) and \(X'_t\) from the random walk run for time \(t=cn/4\), is thin (in the sense of Gromov hyperbolicity) if and only if \(c<1\). Note that by reversibility, the path running from \(X_t\) to \(X'_t\) (via the identity) is a random walk run for time cn / 2. In other words, the result from [2] implies that the permutation group appears Gromov hyperbolic from the point of view of a random walker so long as it takes fewer than cn / 2 steps with \(c< 1\).

Hence, in both Theorem 1.2 and [2], there is a change of geometry (as perceived by a random walker) from low to high curvature after running for exactly \(t = cn/2\) steps with \(c=1\). At this point, we do not know of a formal way to relate these two observations, so they simply seem analogous. In a private conversation with the first author in 2005, Gromov had suggested that the hyperbolicity transition of [2] could be translated more canonically into the language of Ricci curvature and was an effect of the global positive curvature of \(\mathcal {S}_n\) rather than a breakdown in hyperbolicity. In a sense, Theorem 1.2 can be seen as a formalisation and justification of his prediction.

1.3 Relation to previous works on mixing times

Mixing times of Markov chains were initiated independently by Aldous [1] and by Diaconis and Shahshahani [10]. In particular, as already mentioned, Diaconis and Shahshahani proved Theorem 1.1 in the case where \(\Gamma \) is the set T of transpositions. Their proof relies on some deep connections with the representation theory of \({\mathcal {S}}_n\) and bounds on so-called character ratios. The conjecture about the general case appears to have first been made formally in print by Roichman [20] but it has no doubt been asked privately before then. We shall see that the lower bound \({{\mathrm{\textit{t}_{mix}}}}(\delta ) \ge (1+ o(1))(1/|\Gamma |)n \log n\) is fairly straightforward (it is carried out in Appendix A and is as usual based on a coupon-collector type argument); the difficult part is the corresponding upper bound.

Flatto et al. [13] built on the earlier work of Vershik and Kerov [25] to obtain that \({{\mathrm{\textit{t}_{mix}}}}(\delta ) \le (1/2 + o(1))n \log n\) when \(|\Gamma |\) is bounded (as is noted in [9, pp. 44–45]). This was done using character ratios and this method was extended further by Roichman [20, 21] to show an upper bound on \({{\mathrm{\textit{t}_{mix}}}}(\delta )\) which is sharp up to a constant when \(|\Gamma | = o(n)\) (and in fact, more generally when \(|\Gamma |\) is allowed to grow to infinity as fast as \((1-\delta )n\) for any \(\delta \in (0,1)\)). Again using character ratios Lulov and Pak [17] showed the cut-off phenomenon as well as \({{\mathrm{\textit{t}_{mix}}}}= (1/|\Gamma |)n \log n\) in the case when \(|\Gamma | \ge n/2\). Roussel [22, 23] obtains the correct value of the mixing time and establishes the cut-off phenomenon for the case \(|\Gamma |\le 6\).

Finally, let us discuss two more recent papers to which this work is most closely related to. Berestycki et al. [6], show using coupling arguments and a connection to coalescence–fragmentation processes that the cutoff phenomenon occurs at \({{\mathrm{\textit{t}_{mix}}}}= (1/k)n \log n\) in the case when \(\Gamma \) consists only of cycles of length k for any \(k\ge 2\) fixed.

Shortly after, Bormashenko [7] devised a path coupling argument for the coagulation-fragmentation process associated to random transpositions to obtain a new proof of a slightly weaker version of the Diaconis–Shahshahani result: her argument implies that the mixing time of random transpositions is \(O( n \log n)\) (unfortunately the implicit multiplicative constant is not sharp, so this is not sufficient to obtain cutoff). See also [19] for another discussion of her results together with a reformulation in the language of coarse Ricci curvature. In a way her approach is very similar to ours, to the point that it can be considered a precursor to our work, since our method is also based on a certain path coupling for the coagulation-fragmentation process which exploits certain remarkable properties of Schramm’s coupling [6, 24].

Comparison with [6] The authors in Berestycki et al. [6] remark that their proof can be extended to cover the case when \(\Gamma \) is a fixed conjugacy class and indicate that their methods can probably be pushed to cover the case when \(|\Gamma |=o(n^{1/2})\), but it is clear that new ideas are needed if \(|\Gamma |\) is larger. Indeed, their argument uses very delicate estimates about the behaviour of small cycles, together with a variant of a coupling due to Schramm [24] to deal with large cycles. The most technical part of their argument is to analyse the distribution of small cycles, using delicate couplings and carefully bounding the error made in these couplings.

However, when \(k = |\Gamma |\) is larger than \(n^{1/2}\), we can no longer think of the points in the conjugacy class as being sampled independently (with replacement) from \(\{1, \ldots , n\}\), by the birthday problem. This introduces many more ways in which errors in the above coupling arguments could occur. These seem quite hard to control, and hence new ideas are required for the general case.

The proof in this paper relies on similar observations as [6], and in particular the connection with coalescence–fragmentation process as well as Schramm’s coupling argument play a crucial role. The key new idea however, is to try to prove mixing not just in the total variation sense but in the stronger sense of the \(L^1\)-Kantorovich distance (Ricci curvature) and to estimate it at a time well before the mixing time, roughly O(n / k) instead of \(O(n(\log n) / k)\). This may seem counterintuitive initially, however studying the random walk at this time scale allows us to make precise comparisons between the random walk and an associated random graph process. It turns out the random graph at these time scales can be described rather precisely. Furthermore, due to the contraction properties of the Kantorovich distance, somehow (and rather miraculously, we find), the estimate we obtain can be bootstrapped with sufficient precision to yield mixing exactly at the time \({{\mathrm{\textit{t}_{mix}}}}= (1/k) n \log n\).

In particular, since the heart of the proof consists in studying the situation at a time well before mixing, and purely to take advantage of the giant component at such times, we never have to study the distribution of small cycles. This is really quite surprising, given that the small cycles (in particular, the fixed points) are responsible for the occurrence of the cutoff at time \({{\mathrm{\textit{t}_{mix}}}}\).

1.4 Organisation of the paper

We stress that compared to [6], the main arguments are quite elementary. The heart of the proof is contained in Sects. 4.2 and 2 . Readers who are familiar with [6] are encouraged to concentrate on these two short sections.

The paper is organised as follows. In Sect. 2 we state and discuss Theorem 2.3, which is a general curvature theorem (of which Theorem 1.2 is the prototype). We also discuss why this implies the main theorem (Theorem 1.1). In Sect. 3.1 we study the associated random hypergraph process. The main result in that section is Theorem 3.1, which proves the existence and uniqueness of the giant component. Curiously this is the most technical aspect of the paper, and really the only place where the myriad of ways in which the conjugacy class \(\Gamma \) might be really big plays a role and needs to be controlled. Section 4 contains a proof of the main curvature theorem (Theorem 2.3), starting with the easy upper bound on curvature (Sect. 4.1) and following up with the slightly more complex lower bound (Sect. 4.2), which really is the heart of the proof. The two appendices contain respectively a proof of the lower bound on the mixing time (certainly known in the folklore, essentially a version of the coupon collector lemma); and an adaptation of Schramm’s argument [24] for the Poisson–Dirichlet structure of cycles inside the giant component, which is needed in the proof.

2 Curvature and mixing

2.1 Curvature theorem

As discussed above, the lower bound (5) is relatively easy and is probably known in the folklore; we give a proof in Appendix A. We now start the proof of the main results of this paper, which is the upper bound (the right hand side) of (5). In this section, we first state the more general version of Theorem 1.2 discussed in the introduction, and we will then show how this implies the desired result for the upper bound on \({{\mathrm{\textit{t}_{mix}}}}(\delta )\). To begin, we define the cycle structure \((k_2,k_3, \dots )\) of \(\Gamma \) to be a vector such that for each \(j \ge 2\), there are \(k_j\) cycles of length j in the cycle decomposition of any \(\gamma \in \Gamma \) (note that this does not depend on \(\tau \in \Gamma \)). Then \(k_j=0\) for all \(j>n\) and we have that \(k :=|\Gamma | = \sum _{j=2}^\infty j k_j\).

In the case for the transposition random walk the quantity \(\theta (c)\) which appears in the bounds is the survival probability of a Galton–Watson process with offspring distribution given by a Poisson random variable with mean c. Our first task is to generalise \(\theta (c)\). We do so via a fixed point equation, which is more complex here. Define

and note that \(\alpha _j \in [0,1]\) (\(\alpha _j\) is the proportion of the mass in cycles of size j for any \(\gamma \in \Gamma \)). Thus \((\alpha _j)_{j \ge 2}\) is compact in the product topology (the topology of pointwise convergence). Suppose that the limit

exists, where the limit is taken to be pointwise. It follows that for each \(j \ge 2\), \({\bar{\alpha }}_j \in [0,1]\) and \(\sum _{j=2}^\infty {\bar{\alpha }}_j \le 1\) by Fatou’s lemma. Note that the sum is strictly less than 1 when a positive fraction of the mass of conjugacy class \(\Gamma \) comes from cycles whose size tends to \(\infty \). This will be an important distinction in what follows. For \(x \in [0,1]\) and \(c>0\) define

Note that for each \(c>0\), \(x \mapsto \psi (x,c)\) is convex on [0, 1]. Moreover, the function \( x \mapsto \psi (1-x,c)\) is the generating function of a random variable whose law depends on c and is degenerate if \(\sum _{j \ge 2} {\bar{\alpha }}_j <1\). Note that in the case \(\Gamma = T\) of transpositions, \(\psi (x,c) = e^{-cx}\) so that random variable is simply Poisson (c).

Lemma 2.1

Define

Then for \(c>c_\Gamma \) there exists a unique \(\theta (c) \in (0,1)\) such that

For \(c>c_\Gamma \), \(c \mapsto \theta (c)\) is increasing, continuous and differentiable. Further \(\lim _{c \downarrow c_\Gamma }\theta (c)=0\) and \(\lim _{c \uparrow \infty }\theta (c)=1\).

Proof

For \(x \in [0,1]\) and \(c>0\) define \(f_c(x):=1-\psi (x,c)-x\). There are two cases to consider. First suppose that \(z=\sum _{j=2}^\infty {\bar{\alpha }}_j<1\). Then we have that

As \(x \mapsto f_c(x)\) is concave on [0, 1] it follows that there exists a unique \(\theta (c) \in (0,1)\) such that \(f_c(\theta (c))=0\).

Next suppose that \(\sum _{j=2}^\infty {\bar{\alpha }}_j=1\), then

Moreover we have that

Hence for \(c> c_\Gamma \) we have that \(\frac{d}{dx}f_c(x)|_{x=0} >0\) and again by concavity it follows that there exists a unique \(\theta (c) \in (0,1)\) such that \(f_c(\theta (c))=0\).

For the rest of the statements suppose that \(c>c_\Gamma \). The fact that \(c \mapsto \theta (c)\) is increasing follows from the definition of \(\psi (x,c)\) and the fact that \(\theta (c)=\psi (\theta (c),c)\). Continuity and differentiability for \(c> c_\Gamma \) is a straightforward application of the inverse function theorem.

Notice that \(\theta (c) \in [0,1]\) and is monotone, hence \(\theta (c)\) converges as \(c \downarrow c_\Gamma \) to a limit L. Then it follows that L solves the equation \(L=1-\psi (L,c_\Gamma )\). This equation has only a zero solution and thus \(L=0\) and hence \(\lim _{c \downarrow c_\Gamma }\theta (c)=0\). The limit as \(c \uparrow \infty \) follows from a similar argument. \(\square \)

Remark 2.2

In the case when \(\Gamma =T\) is the set of transpositions we have that \(k'_2 = 1\) and \({\bar{\alpha }}_j = 0\) for \(j\ge 3\), hence \(\psi (x,c)=e^{-cx}\) and thus the definition of \(\theta (c)\) above agrees with the definition given in the introduction.

Having introduced \(\theta (c)\) we now introduce the notion of Ricci curvature we will use in the general case. For \(c>0\) and \(\sigma \ne \sigma '\), let

where d is the graph distance associated with transpositions (even in the case \(\Gamma \ne T\)). Define \(\kappa _c(\sigma ,\sigma )=1\). Then let

where the infimum is taken over all \(\sigma ,\sigma '\in {\mathcal {S}}_n\) such that \(d(\sigma ,\sigma ')\) is even. That is, \(\kappa _c(\sigma , \sigma ') = \kappa _{\lfloor cn/k\rfloor }(\sigma , \sigma ')\) with our notation from (7). We now state a more general form of Theorem 1.2 which in particular covers the case of Theorem 1.2.

Theorem 2.3

Let \(\Gamma \subset {\mathcal {S}}_n\) be a conjugacy class such that \(k = |\Gamma |=o(n)\) and the convergence in (C) holds. Recall the definition of \(c_\Gamma \) from (12). Then for \(c\le c_\Gamma \),

On the other hand, for \(c>c_\Gamma \)

where \(\theta (c)\) is the unique solution in (0, 1) of

where \(\psi \) is given by (11).

2.2 Curvature implies mixing

We now show how Theorem 2.3 implies the second limit in Theorem 1.1. First suppose that \(\Gamma =\Gamma (n)\) is a sequence of conjugacy classes for which the limit (C) holds and \(|\Gamma |=o(n)\). Again fix \(\epsilon >0\) and define \(t= (1+2\epsilon )(1/k)n \log n\) and let \(t' = \lfloor (1+\epsilon )(1/k)n \log n\rfloor \) where \(k=|\Gamma |\). We are left to prove that \(d_{TV}(t) \rightarrow 0\) as \(n \rightarrow \infty \). For \(s \ge 0\) let

where the sup is taken over all permutations at even distances. We first claim that it suffices to prove that

Indeed, assume that \({\bar{d}}_{TV}(t') \rightarrow 0\) as \(n \rightarrow \infty \). Then there are two cases to consider. Assume that \(\Gamma \subset {\mathcal {A}}_n\). Then \(X_s \in {\mathcal {A}}_n\) for all \(s\ge 1\) and \(\mu \) is uniform on \({\mathcal {A}}_n\). Then by Lemma 4.11 in [16],

Hence Theorem 1.1 (or more precisely the second limit in that theorem) follows from (17) in this case. In the second case, \(\Gamma \subset {\mathcal {A}}_n^c\). In this case \(X_s \in {\mathcal {A}}_n\) for s even, and \(X_s \in {\mathcal {A}}_n^c\) for s odd. Using the same lemma, we deduce that if \(s \ge t'\) is even,

where \(\mu _1\) is uniform on \({\mathcal {A}}_n\). However, if \(s\ge t'\) is odd,

where this time \(\mu _2\) is uniform on \({\mathcal {A}}_n^c\). Let \(N=(N_s:s \ge 0)\) be the Poisson clock of the random walk Y. Then \(\mathbb {P}(N_s \text { even} ) \rightarrow 1/2\) as \(s\rightarrow \infty \), \(\mu = (1/2)(\mu _1+ \mu _2)\), and \(\mathbb {P}(N_t \ge t') \rightarrow 1\) as \(n \rightarrow \infty \). Thus we deduce that

Again, the second limit in Theorem 1.1 follows. Hence it suffices to prove (17).

Note that for any two random variables X, Y on a metric space (S, d) we have the obvious inequality \(\Vert X - Y\Vert _{TV} \le W_1(X,Y)\) provided that \(x\ne y \) implies \(d(x,y) \ge 1\) on S. This is in particular the case when \(S= {\mathcal {S}}_n\) and d is the word metric induced by the set T of transpositions. In other words it suffices to prove mixing in the \(L^1\)-Kantorovich distance.

Note that by definition of \(\kappa _c\), if \(\sigma \), \(\sigma '\) are at an even distance then

so that, iterating as in Corollary 21 of [18] (and noting that the distance between \(X^{\sigma }_{\lfloor cn/k \rfloor }\) and \(X^{\sigma '}_{\lfloor cn/k \rfloor }\) is again even), we have for each \(s \ge 1\),

since the diameter of \({\mathcal {S}}_n\) is equal to \(n-1\). Solving \( n (1-\kappa _c)^s \le \delta \) we get that

Thus if \(u = scn/k \ge s \lfloor cn/k\rfloor \), it suffices that

Now, Theorem 2.3 gives

Lemma 2.4

We have that

Proof

Using L’Hopital’s rule twice we have that

Next we have that \(\lim _{c \rightarrow \infty } \theta (c)=1\) and hence

\(\square \)

Consequently we have that for \(u\ge t'=\lfloor (1+\epsilon )(1/k)n \log n\rfloor \)u satisfies (20) for some sufficiently large \(c>c_\Gamma \). Hence \(\limsup _{n \rightarrow \infty } {\bar{d}}_{TV}(t')\rightarrow 0\) and thus (17) holds, which shows Theorem 1.1 for conjugacy classes such that the limit in (C) exists and \(|\Gamma |=o(n)\).

Now suppose that \(\Gamma \) is a conjugacy class such that \(|\Gamma |=o(n)\). Let \(t' = \lfloor (1+\epsilon )(1/|\Gamma |)n \log n\rfloor \) and notice that \(d_{TV}(t')\) is bounded. Along any subsequence \(\{n_i\}_{i \ge 1}\) such that \(\lim _{n_i\rightarrow \infty }d_{TV}(t')\) exists, we can extract a further sub-sequence \(\{n_{i_j}\}_{j \ge 1}\) such that (C) holds since \((\alpha _j)_{j \ge 2} \in [0,1]^\infty \) which is compact under the product topology. Then we see that \(\lim _{n_{i_j}\rightarrow \infty }d_{TV}(t')=0\) and consequently \(\lim _{n_i\rightarrow \infty }d_{TV}(t')=0\). Since \(d_{TV}(t')\) is bounded and converges to 0 along any convergent subsequence, we conclude that \(\lim _{n\rightarrow \infty }d_{TV}(t')=0\), thus concluding the proof.

2.3 Stochastic commutativity

To conclude this section on curvature, we state a simple but useful lemma. Roughly, this says that the random walk is “stochastically commutative”. This can be used to show that the \(L^1\)-Kantorovich distance is decreasing under the application of the heat kernel. In other words, initial discrepancies for the Kantorovich metric between two permutations are only smoothed out by the application of random walk.

Lemma 2.5

Let \(\sigma \) be a random permutation with distribution invariant by conjugacy. Let \(\sigma _0\) be a fixed permutation. Then \(\sigma _0 \circ \sigma \) has the same distribution as \(\sigma \circ \sigma _0 \).

Proof

Define \(\sigma ' = \sigma _0 \circ \sigma \circ \sigma _0^{-1}\). Then since \(\sigma \) is invariant under conjugacy, the law of \(\sigma '\) is the same as the law of \(\sigma \). Furthermore, we have \( \sigma _0 \circ \sigma = \sigma ' \circ \sigma _0\) so the result is proved. \(\square \)

This lemma will be used repeatedly in our proof, as it allows us to concentrate on events of high probability for our coupling.

3 Preliminaries on random hypergraphs

For the proof of Theorem 1.1 we rely on properties of certain random hypergraph processes. The reader who is only interested in a first instance in the case of random transpositions, and is familiar with Erdős–Renyi random graphs and with the result of Schramm [24] may safely skip this section.

3.1 Hypergraphs

In this section we present some preliminaries which will be used in the proof of Theorem 2.3. Throughout we let \(\Gamma \subset {\mathcal {S}}_n\) be a conjugacy class and let \((k_2,k_3,\dots )\) denote the cycle structure of \(\Gamma \). Thus \(\Gamma \) consists of permutations such that in their cycle decomposition they have \(k_2\) many transpositions, \(k_3\) many 3-cycles and so on. Note that we have suppressed the dependence of \(\Gamma \) and \((k_2,k_3,\dots )\) on n. We assume that (C) is satisfied so that for each \(j\ge 2\), \(j k_j/|\Gamma | \rightarrow {\bar{\alpha }}_j\) as \(n \rightarrow \infty \). We also let \(k=|\Gamma |\) so that \(k=\sum _{j \ge 2} j k_j\), as usual.

Definition 3.1

A hypergraph \(H=(V,E)\) is given by a set V of vertices and \(E \subset \mathcal {P}(V)\) of edges, where \(\mathcal {P}(V)\) denotes the set of all subsets of V. An element \(e \in E\) is called a hyperedge and we call it a j-hyperedge if \(|e|=j\).

Consider the random walk \(X= (X_t: t = 0,1 \ldots )\) on \({\mathcal {S}}_n\) where \(X_t = X^{{{\mathrm{id}}}}_t\) with our notations from the introduction. Hence

where the sequence \((\gamma _i)_{i\ge 1}\) is i.i.d. uniform on \(\Gamma \). A given step of the random walk, say \(\gamma _s\), can be broken down into cycles, say \(\gamma _{s,1} \circ \cdots \gamma _{s,r}\) where \(r = \sum _j k_j\). We will say that a given cyclic permutation \(\gamma \) has been applied to X before time t if \(\gamma = \gamma _{s,i}\) for some \(s\le t\) and \(1 \le i \le r\).

To X we associate a certain hypergraph process \(H=(H_t:t=0,1, \ldots )\) defined as follows. For \(t =0,1 ,\ldots \), \(H_t\) is a hypergraph on \(\{1,\dots ,n\}\) where a hyperedge \(\{x_1,\dots ,x_j\}\) is present if and only if a cyclic permutation consisting of the points \(x_1,\dots ,x_j\) in some arbitrary order has been applied to the random walk X prior to time t as part of one of the \(\gamma _i\)’s for some \(i \le t\). Thus at every step, we add to \(H_t\)\(k_j\) hyperedeges of size j sampled uniformly at random without replacement, and these edges are independent from step to step. However, note that the presence of hyperedges themselves are not in general independent.

3.2 Giant component of the hypergraph

In the case \(\Gamma =T\), the set of transpositions, the hypergraph \(H_s\) is a realisation of an Erdős–Renyi graph. Analogous to Erdős–Renyi graphs, we first present a result about the size of the components of the hypergraph process \(H=(H_t:t= 0,1,\dots )\) (where by size, we mean the number of vertices in this component). For the next result recall the definition of \(\psi (x,c)\) in (11). Recall that for \(c> c_\Gamma \), where \(c_\Gamma \) is given by (12), there exists a unique root \(\theta (c)\in (0,1)\) of the equation \(\theta (c)=1-\psi (\theta (c),c)\).

Theorem 3.1

Consider the random hypergraph \(H_s\) and suppose that \(s=s(n)\) is such that \(sk/n \rightarrow c\) as \(n \rightarrow \infty \) for some \(c> c_\Gamma \). Then there is a universal constant \(D>0\) such that with probability tending to one all components but the largest have size at most \( D n^{2/3} (\log (n))^3\). Furthermore, the size of the largest component, normalised by n, converges to \(\theta (c)\) in probability as \(n\rightarrow \infty \).

Of course, this is the standard Erdős–Renyi theorem in the case where \(\Gamma = T\) is the set of transpositions. See for instance [12], in particular Theorem 2.3.2 for a proof. In the case of k-cycles with k fixed and finite, this is the case of random regular hypergraphs analysed by Karoński and Łuczak [15]. For the slightly more general case of bounded conjugacy classes, this was proved by Berestycki [4].

Discussion Note that the behaviour of \(H_s\) in Theorem 3.1 can deviate markedly from that of Erdős–Renyi graphs. The most obvious difference is that \(H_s\) can contain mesoscopic components, something which has of course negligible probability for Erdős–Renyi graphs. For example, suppose \(\Gamma \) consists of \(n^{1/2}\) transpositions and one cycle of length \(n^{1/3}\). Then the giant component appears at time \(n^{1/2}/2\) with a phase transition (i.e., \(c_\Gamma >0\), because in this case \(\sum {\bar{\alpha }}_j =1\), as most of the mass comes from microscopic cycles). Yet even at the first step there is a component of size \(n^{1/3}\). Nevertheless we will see that once there is a giant component there is a limit to how big can the nongiant component be (we show this is less that \(O(n^{2/3})\) up to logarithmic terms; this is certainly not optimal).

From a technical point of view this has nontrivial consequences, as proofs of the existence of a giant component are usually based on the dichotomy between microscopic components and giant components. Furthermore, when the conjugacy class is large and consists of many small or mesoscopic cycles, the hyperedges have a strong dependence, which makes the proof very delicate.

In effect, perhaps surprisingly this will be the only place of the proof where all the possible ways in which the conjugacy class \(\Gamma \) might be big (potentially of size very close to n), needs to be handled. The difficulty of the proof below is to find an argument which works no matter how \(\Gamma \) is made up, so long as \(k = |\Gamma | = o(n)\). This is of course also the problem in the original question of studying the mixing time of the random walk induced by \(\Gamma \). However, what we have gained here compared to this original question, is the monotonicity of component sizes when hyperedges are added to \(H_s\).

Preliminaries: exploration Suppose that \(s=s(n)\) is such that \(sk/n \rightarrow c\) for some \(c> 0\) as \(n \rightarrow \infty \) for some \(c\ge 0\). We reveal the vertices of the component containing a fixed vertex \(v \in \{1,\dots ,n\}\) using breadth-first search exploration, as follows. There are three states that each vertex can be: unexplored, removed or active. Initially v is active and all the other vertices are unexplored. At each step of the iteration we select an active vertex w according to some prescribed rule among the active vertices at this stage (say with the smallest label). The vertex w becomes removed and every unexplored vertex which is joined to w by a hyperedge becomes active. We repeat this exploration procedure until there are no more active vertices. At stage \(i = 0,1, \ldots \) of this exploration process, we let \(A_i\), \(R_i\) and \(U_i\) denote the set of active, removed and unexplored vertices respectively. Thus initially \(A_0=\{v\}\), \(U_0=\{1,\dots ,n\}\backslash \{v\}\) and \(R_0=\emptyset \). We will let \(a_i = |A_i|, u_i = |U_i|, r_i = |R_i|\).

For \(t =1,\dots ,s\) we call the hyperedges which are associated with the permutation \(\gamma _t\) the t-th packet of hyperedges. Thus note that each packet consists of \(k_j\) hyperedges of size j, \(j \ge 2\), which are sampled uniformly at random without replacement from \(\{1, \ldots ,n\}\). In particular, within a given packet, hyperedges are not independent. However, crucially, hyperedges from different packets are independent. We will need to keep track of the hyperedges we reveal and where they “came from” (i.e., which packet they were part of), in order to deal with these dependencies. More precisely, as we explore the hypergraph \(H_s\), we discover various hyperedges of various sizes in \(H_s\) and this may affect the likelihood of other types of hyperedges in subsequent steps of the exploration process. To account for this, we introduce for \(t=1,\dots ,s\) and for \(j \ge 2\), the random subset of \(\{1, \ldots , n\}\), \(Y^{(t)}_{j} (i)\), which is defined to be the hyperedges of size j in the t-th packet that were revealed in the exploration process prior to step i. We let \(y_j^{(t)}(i) = |Y_j^{(t)}(i)|\) denote the number of such hyperedges.

Additional notations Let \(i \ge 0\) and let \(\mathcal {H}_i\) denote the filtration generated by the exploration process up to stage i, including the information of the number of hyperedges of each size in each packet that were revealed up to step i of the exploration process. That is,

Our first goal will be to give uniform stochastic bounds on the distribution of \(a_{i+1} - a_i\), so long as i is not too large. We will thus fix i (a step in the exploration process) and in order to ease notations we will often suppress the dependence on i, in \(Y^{(t)}_j(i)\): we will thus simply write \(Y^{(t)}_j\) and \(y_j^{(t)}\). Note that by definition, for each \(t=1,\dots ,s\) and \(j \ge 2\), \(Y^{(t)}_j \le k_j\) and

where the right hand side counts the total number of vertices explored by stage i, while the left hand side counts the sum of the sizes of all hyperedges revealed by stage i, so the \(\ge \) sign accounts for possible intersections between the hyperedges.

Let w be the vertex being explored for stage \(i+1\). For \(t = 1, \ldots , s\) let \(M_t\) be the indicator that w is part of an (unrevealed) hyperedge in the t-th packet. Thus, \((M_t)_{1\le t \le s}\) are independent conditionally given \(\mathcal {H}_i\), and

since \(k_j - y_j^{(t)}\) counts the number of hyperedges of size j still unrevealed in the t-th packet. If w is part of a hyperedge in the t-th packet, let \(V_t\) be the size of the (unique) hyperedge of that packet containing it. Then

Note that when \(M_t=1\) it implies that the denominator above is non-zero and thus (23) is well defined. When \(M_t = 0\) we simply put \(V_t = 1\) by convention. Then we have the following almost sure inequality:

This would be an equality if it were not for possible self-intersections, as hyperedges connected to w coming from different packets may share several vertices in common. In order to get a bound in the other direction, we simply truncate the \(a_{i+1} - a_i\) at \(n^{1/4}\). Let \(I_i\) be the indicator that among the first \(n^{1/4}\) vertices to which w is connected, no self-intersection or intersection with the past occurs. Note that \(\mathbb {E}(I_i) \ge p_n = 1- n^{-1/2}\), by straightforward bounds on the birthday problem. We then have

Organisation of proof of Theorem 3.1 We will stop the exploration process once we have discovered enough vertices, or if the active set dies out, whichever comes first. We aim to show that starting from a given vertex v, with probability approximately \(\theta (c)\) the cluster of v contains about order n vertices. However, we proceed in stages as different arguments are needed in order to reach so many vertices. In Step 1, we first show that the cluster contains about \((\log n)^2\) vertices with probability approximately \(\theta (c)\). Then in Step 2, given that the exploration of the cluster has discovered \((\log n)^2\) vertices, we show that with high probability the exploration will in fact discover \(n^{2/3}\) vertices. Finally, in Step 3 we show using the sprinkling technique that any two clusters that reach a size of about \(n^{2/3}\) can be connected using only very few additional edges, which implies the result.

Main quantitative lemma We define

We set \(T = T^\uparrow \wedge T^\downarrow \). Hence our first goal (which we will show at the end of Step 2) will be to show that \(T = T^\uparrow \) with probability \(\theta (c)\): in fact we will show that \(T_\downarrow \) occurs before \(T^\uparrow \) or \(n^{2/3}\) with probability approximately \(1- \theta (c)\). Either way, this means that the component is greater than \(n^{2/3}\) with probability approximately \(\theta (c)\). To do this we need to study the distribution of \(a_{i+1} - a_i\); the next lemma shows that these random variables converge in distribution to a sequence of i.i.d. (possibly degenerate) random variables, uniformly for \(i <T\): the limit is improper if \(\sum _j {\bar{\alpha }}_j < 1\).

Equivalently, the active process \(|A_i|\) converges (at least for finite dimensional marginals) to the exploration process of a Galton–Watson tree whose offspring distribution is given by the limit of \(a_{i+1} - a_i + 1\) and thus has a moment generating function given by \(\psi (1-\cdot , c)\).

It is perhaps surprising that the lemma below is sufficient for the proof of Theorem 3.1: the lemma below essentially only records whether a cycle is microscopic (finite) or “more than microscopic”; in particular, whether the mass of \(\Gamma \) comes from many small mesoscopic or fewer big cycles makes no difference.

Lemma 3.2

For each \(q_0 \in [0,1)\), there exists some deterministic function \(w:\mathbb {N}\rightarrow {\mathbb {R}}\) such that \(w(n) \rightarrow 0\) as \(n \rightarrow \infty \) with the following property:

almost surely.

Proof

Suppose \(T>i\). From (24) we have that

Recall from (23) that

and from (22) that

by definition of \(T^\uparrow \). Therefore, using \( 1- x \ge e^{-x - x^2 }\) for all x sufficiently small,

Hence, since \(sk/n = O(1)\) in the regime we are concerned with,

where the o(1) term is non random and independent of i, and for the last inequality we have used that

which follows from the fact that \(q \le q_0 <1\) and the dominated convergence theorem, as \( jk_j/k\) is uniformly bounded by 1. Note that the above estimate is uniform in \(i \ge 1\).

For the upper bound, we use (25). Let \(\epsilon _n \rightarrow 0\) sufficiently slowly that \(\varepsilon _n n^{1/3}\rightarrow \infty \). For concreteness take \(\varepsilon _n = n^{-1/6}\). Define \(G:=\{t\in \{1,\dots ,s\}: \sum _{m\ge 2} m y^{(t)}_m \le \epsilon _n k\},\) and let \(I = G^c\). Packets \(t \in I\) are the bad packets for which a significant fraction of the mass corresponding to that packet (at least \(\varepsilon _n\)) was already discovered at step i; by contrast packets \(t \in G\) are those for which a fraction at least \((1- \varepsilon _n)\) remains to be discovered in the exploration. In the case where the conjugacy class contains only one type of cycles, say k-cycles, then I coincides with the set of hyperedges already revealed. At the other end of the spectrum, when the conjugacy class \(\Gamma \) is broken down into many small cycles, then I is likely to be empty. But in all cases, |I| satisfies the trivial bound \( |I| \le \tfrac{2n^{2/3}}{\varepsilon _n k} \) by definition of \(T^\uparrow \), and in particular

This turns out to be enough for our purposes.

Note that \(\mathbb {E}( q^{ \sum _{t=1}^s M_t (V_t -1 )} ) \) and \(\mathbb {E}( q^{ n^{1/4} \wedge \sum _{t=1}^s M_t (V_t -1 )} ) \) can only differ by at most \(q^{n^{1/4}}\), which is exponentially small in \(n^{1/4}\) for a fixed \(q\le q_0 <1\), so we can neglect this difference. Then we may write, counting only hyper edges from good packets, using the fact that \(1- x \le e^{-x}\) for all \(x\in \mathbb {R}\), and (30) (recalling that \(I_i\) is the indicator of the event that no self-intersection occurs among the first \(n^{1/4}\) vertices connected to w):

where the o(1) term again is non random and uniform in \(i\ge 1\), but might depend on q (the last inequality again from comes from (29)). The proof is complete. \(\square \)

Lemma 3.2 above tells us that, at the level of generating functions, the distribution of \(a_{i+1} - a_i\) behaves very much like a sequence of i.i.d. random variables with distribution determined by \(\psi \), even if we don’t ignore self-intersections. It is thus easy to build martingales from quantities of the form \(q^{a_i}\), which behave as if the increments of \(a_i\) were i.i.d., at least until we reach size \(n^{2/3}\). Hence this will allow us to reach a size of \(n^{2/3}\) for \(a_i\) almost as if there were no self-intersections, and so with probability approximately \(\theta (c)\). Fundamentally, this is because even if self-intersections do occur, they are rare and do not cause a significant loss of mass. Technically, it is easier to have a separate argument for bringing the cluster to a polylogarithmic size before using this information to show that the cluster reaches size \(n^{2/3}\) with essentially the same probability. This is what we achieve in Step 1, which we are now ready for.

Step 1. We show that the cluster containing a given vertex v is at least logarithmically large with probability approximately \(\theta (c)\), and furthermore the number of vertices for which this occurs is approximately \(n \theta (c)\) in the sense of convergence in probability.

Lemma 3.3

Let \(\mathcal {C}_v\) denote the component containing v. We have that

Proof

We start with the upper bound of (32), for which we simply make a comparison with a Galton–Watson process: to reach size \(\log n\) the exploration process must survive more than a finite number of steps. More precisely, we make the following observation. Let \(m \ge 1\) be some arbitrary fixed large integer, and observe \(\mathbb {P}( |\mathcal {C}_v| > (\log n)^2) \le \mathbb {P}( |\mathcal {C}_v| \ge m)\) trivially. Now, whether \(|\mathcal {C}_v|\) reaches size m is something that can be decided by performing the breadth-first search exploration of the cluster on a finite (at most m) number of steps: i.e., if we let \(X_{i+1} = | A_{i+1} \setminus A_i | \), then a direct and crude consequence of Lemma 3.2 is that \((X_1, \ldots , X_m)\) converge to i.i.d. random variables \(({\bar{X}}_1, \ldots , {\bar{X}}_m)\) (which are possibly improper, if \(\sum {\bar{\alpha }}_j < 1\)) having as generating function \(\mathbb {E}(q^{{\bar{X}}}) = \psi (1- q, c) \). Formally, the \({\bar{X}}_i\) have the same distribution as

where the Poisson random variables are independent. Consequently, if W is the total progeny of a Galton–Watson branching process with offspring distribution \({\bar{X}}_i\) (note in particular that \(W = \infty \) as soon as one nodes in the tree has offspring \({\bar{X}}_i = \infty \)). We conclude that \(\mathbb {P}( |\mathcal {C}_v| \ge m) \rightarrow \mathbb {P}( W\ge m)\), and hence, taking the limsup and letting \(m \rightarrow \infty \),

This proves the upper bound in (32).

We now discuss the lower bound to (32), which is essentially the same argument, together with the observation that self-intersections are unlikely to occur before \((\log n)^2\) vertices have been explored. For this we can assume without loss of generality that \(\theta (c)>0\), otherwise there is nothing to prove. Let

we will prove the slightly stronger result that \(\liminf _{n \rightarrow \infty } \mathbb {P}( T_1 < T_\downarrow ) \ge \theta (c)\). (This is slightly stronger, because \(|\mathcal {C}_v|\) could in principle be greater than \((\log n)^2\) without the active set ever reaching that size). Let \(X_i\) be i.i.d. random variables with generating function given by

so that, by (23), \(a_{1} - a_0 \) has the same distribution as \(X_1\) when \(A_0 = \{v\}\) (see e.g. (28) where a similar calculation is carried). We can use the random variables \(X_i\) to generate the breadth first exploration of \(\mathcal {C}_v\) until we find a self-intersection. Thus let \({\tilde{Y}}_i\) be a collection of randomly chosen vertices of \(\{1, \ldots , n\}\) of size \(X_i\), and at each time step, add to the active set \({\tilde{A}}_{i+1}\) the set \({\tilde{Y}}_i\) and remove the currently explored vertex. Then we can couple \(A_i\) and \({\tilde{A}}_i\) so that \(A_i = {\tilde{A}}_i\) until the first time \(T_{\text {inter}}\) such that \({\tilde{Y}}_i \cap ({\tilde{Y}}_j \cup \{v\}) \ne \emptyset \) for some \(i \ne j \le T_{\text {inter}}\). Furthermore, until \(T_{\text {inter}}\), \({\tilde{A}}_i\) is the breadth-first search exploration of a branching process with offspring distribution (33). It becomes extinct with a probability \(q_n\), and we claim that \(q_n\) satisfies \(q_n \rightarrow 1- \theta (c)\) as \(n \rightarrow \infty \) by (29). Indeed, \(\psi _n\) clearly converges uniformly to \(\psi (\cdot , c)\) on \([0, x_0]\) for \(x_0 <1\) by Lemma 3.2 and this is the regime we are interested in since by assumption \(\theta (c)>0\).

Hence, it is clear that if \(W_n\) is the total progeny of this branching process, then \(\mathbb {P}( W_n \ge (\log n)^2) \ge \mathbb {P}( W_n = \infty ) = 1- q_n \rightarrow \theta (c)\), and combining with the argument in the upper bound on (32) we deduce that \(\mathbb {P}( W_n \ge (\log n)^2) \rightarrow \theta (c)\). On the other hand, \(T_1 < T_{\text {inter}}\) with probability tending to 1 as \(n \rightarrow \infty \) by the birthday problem, and so in fact \(\mathbb {P}( T_1 < T_\downarrow ) = \mathbb {P}( W_n \ge (\log n)^2 ) + o(1)\), so we are done. \(\square \)

It is important to note that self-intersections may occur at the very step that \(a_i\) exceeds \((\log n)^2\) (for instance, think about the case when the conjugacy class has some of its mass coming from cycles larger than \(n^{1/2}\): discovering such a cycle would immediately produce a self-intersection). Even so, the active set reaches size \((\log n)^2\) before such a self-intersection is discovered.

As announced at the beginning of Step 1, we complement this with a law of large numbers:

Lemma 3.4

in probability as \(n \rightarrow \infty \).

Proof

Let \(Z = \sum _{v = 1}^n 1_{\{| \mathcal {C}_v | \ge (\log n)^2 \}}\), so by the previous lemma we know that \(\mathbb {E}(Z) / n \rightarrow \theta \) by (32). Hence if we show that \({{\mathrm{Var}}}(Z) \le \varepsilon n^2\) for any \(\varepsilon >0\) and any n sufficiently large, then (34) follows by Chebyshev’s inequality. In particular, it suffices to show that for \(v \ne w \in \{1, \ldots , n \}\),

or equivalently,

On the other hand, (35) can be proved in exactly the same way as the upper bound of (32) above: for both \(| \mathcal {C}_v |\) and \(|\mathcal {C}_w|\) to be larger than \((\log n)^2 \), both must be greater than m where \(m \ge 1\) is fixed. This is an event which depends on a finite number of steps (at most 2m) in the explorations of \(\mathcal {C}_v\) and \(\mathcal {C}_w\), and so can be approximated by Lemma 3.2 by the same event for two independent branching processes. Letting \(m \rightarrow \infty \) finishes the proof. \(\square \)

For the rest of the proof we now assume that \(c> c_\Gamma \) so that \(\theta (c) >0\). Hence fix \(q \in [0,1)\) such that \(\psi (1-q, c)/ q < 1\), and note that using Lemma 3.2, we can suppose that, for some fixed \(\epsilon >0\), n is large enough so that

almost surely on \(\{T>i\}\).

Step 2. We now extrapolate the information obtained in the previous step to show that, still with probability approximately \(\theta (c)\), the active set of \(\mathcal {C}_v\) can reach a size of at least \(O(n^{2/3})\). To do so we suppose our exploration from Step 1 yields an active set of size at least \((\log n)^2\) (which, as discussed, occurs with probability \(\theta (c) + o(1)\). We will restart the exploration from that point on, calling this time \(i=0\) again. Hence the setup is the same as before, except that at time \(i=0\) we have \(a_0 = \lfloor (\log n)^2 \rfloor \): we only keep the first \((\log n)^2\) of the active vertices discovered at time \(T_1\), and declare all further active vertices at time \(T_1\) to be removed at time \(i=0\) in the exploration of Step 2.

Recall our notations for \(T^\downarrow \) and \(T^\uparrow \) in (26) and (27). Our goal in this step is to show the following control:

Lemma 3.5

Suppose that given \(\mathcal {H}_0\), it is a.s. the case that \(a_0 = \lfloor (\log n)^2 \rfloor \), and \(r_0 \le n^{2/3}\).Then

Proof

Set \(S =n^{2/3} \wedge T^\uparrow \wedge T^\downarrow \) and for \(i \ge 0\), let

so \(M=(M_i:i=0,\dots )\) is a supermartingale in the filtration \((\mathcal {H}_0, \mathcal {H}_{1}, \ldots )\). Observe that \(S \le n^{2/3}\) so M is bounded. Note that on the event \(\{ S = T^\downarrow \}\),

hence by the optional stopping theorem (since M is bounded), given \(\mathcal {H}_0\) and under the assumptions of the lemma on \(\mathcal {H}_0\),

as desired. \(\square \)

Consequently, since the error bound in Eq. (37) is \(o(n^{-1})\), we deduce that if

then \(\mathcal {G}= {\tilde{\mathcal {G}}}\) with high probability, and hence in particular

in probability as \(n \rightarrow \infty \).

Step 3. We now show that if v and \(v'\) are two vertices such that \(\mathcal {C}_v = \mathcal {C}_v(s)\) and \(\mathcal {C}_{v'} = \mathcal {C}_{v'}(s)\) are both larger at time s than \(n^{2/3}\) then they are highly likely to be connected at some slightly later time \(s+s'\). This follows from a so-called “sprinkling” argument, as follows. That is, suppose we add \(s'\) packets, with

for some \(D>0\) to be chosen later on. Note that \(s'k/n \rightarrow 0\) so that \( (s+ s') k /n \rightarrow c\). Since \(s = s(n)\) is an arbitrary sequence such that \(s k / n \rightarrow c\) it suffices to show that v and \(v'\) are then connected at time \(s+ s'\). In fact we will check that the two clusters can be connected using smaller edges that the hyperedges making each packet, as follows. For each hyperedge of size j we will only reveal a subset of \(\lfloor j/2 \rfloor \) edges (of size 2) with disjoint support. Since \(\lfloor j/2 \rfloor \ge j /3\) for any \(j \ge 2\), this gives us at least k / 3 edges for each packet; these are sampled uniformly at random without replacement from \(\{1, \ldots , n\}\). We will check that a connection occurs between the two clusters within these \(s' k /3 \) edges, with high probability.

Call the two clusters A and \(A'\) for simplicity; these are two arbitrary sets of size \(\lfloor n^{2/3}\rfloor \) which we can assume to be disjoint otherwise there is nothing to prove. Call a packet of edges good if their intersections with each of A and \(A'\) contains at most \(\lfloor n^{2/3} /2 \rfloor \) vertices, and call it bad otherwise. We reveal the edges in a given packet one by one, sampling without replacement. Note that so long as packet of edges has not been observed to be bad, the probability that the next edge connects A and \(A'\) is at least \(n^{4/3}/(16(n-k)^2) \ge n^{-2/3}/32\). (Note that if \(k \le n^{2/3} /2\) then every packet is necessarily good). Hence the probability that no connection between A and \(A'\) occurs for a good packet is at most

Now, each packet is bad independently of each other, with probability tending to 0 by Markov’s inequality (since the expected intersection of a pack of edges with A is at most \(|A| k /n = o (|A|)\)) and hence less than 1 / 2 say. So by standard Chernoff bounds on Binomial random variables, with probability at least \(1-\exp ( - h \times s')\) (where \(h>0 = (1/4) \log (1/4) + (3/4) \log (3/4) + \log 2 \) is a universal constant), at least \(s'/4\) packs are good. Putting together these two observations, we deduce that the probability that there are no connections between A and \(A'\) after \(s'\) packs of edges have been added is at most

By choosing \(D = 1201\), this is \(o(n^{-3})\) at least if \(k \ge n^{2/3}/2\) (so that \(s' \ge 2 D \log n\)). However, if \(k \le n^{2/3}\), then every packet is good, and so (40) holds without the second term on the right hand side. Either way,

Proof of Theorem 3.1

We are now ready to conclude that vertices are either in small component at time s or connected at time \(s+ s'\). Recall our notation \(\mathcal {G} = \{ v : |\mathcal {C}_v(s)| > n^{2/3}\}\). Then by (39), we know that \(|\mathcal {G}|/n\rightarrow \theta (c)\) in probability as \(n \rightarrow \infty \). We now aim to show that \(\mathcal {G}\) is connected at time \(s+s'\), with high probability. For \(v, v' \in \{1,\dots ,n\}\), write \(v \leftrightarrow v'\) to indicate that v is connected to \(v'\). Then by Step 3 (more specifically, (41)),

Hence \(\mathcal {G}\) is entirely connected at time \(s+s'\) with probability tending to 1. This proves that \(H_{s+s'}\) contains a component of relative size converging to \(\theta (c)\) in probability. Let us now check that every other component at time \(s+s'\) is small. Note that since \(\mathcal {G}= {\tilde{\mathcal {G}}}\) with probability tending to one (where \({\tilde{\mathcal {G}}}\) is defined in (38)), any component disjoint from \(\mathcal {G}\) at time \(s+s'\) must have been smaller than \((\log n)^2\) at time s. Since at most \(s'k\) connections are added, this means that, on the event \(\mathcal {G}= {\tilde{\mathcal {G}}}\), the maximal size of a component at time \(s+s'\) disjoint from \(\mathcal {G}\) is smaller than \(s' k (\log n)^2 \le D n^{2/3} (\log n)^3\). This shows that every other component is \(O(n^{2/3} ( \log n)^3)\) on an event of high probability.

The proof of Theorem 3.1 is complete, since \(s+s'\) in an arbitrary sequence such that \((s+s')k/n \rightarrow c\). \(\square \)

3.3 Poisson–Dirichlet structure

The renormalised cycle lengths \(\mathfrak {X}(\sigma )\) of a permutation \(\sigma \in {\mathcal {S}}_n\) is the cycle lengths of \(\sigma \) divided by n, written in decreasing order. In particular we have that \(\mathfrak {X}(\sigma )\) takes values in

We equip \(\Omega _\infty \) with the topology of pointwise convergence. If \(\sigma _n\) is uniformly distributed in \({\mathcal {S}}_n\) then \(\mathfrak {X}(\sigma _n) \rightarrow Z\) in distribution as \(n \rightarrow \infty \) where Z is known as a Poisson–Dirichlet random variable. It can be constructed as follows. Let \(U_1,U_2,\dots \) be i.i.d. uniform random variables on [0, 1]. Let \(Z^*_1=U_1\) and inductively for \(i \ge 2\) set \(Z^*_i=U_i(1-\sum _{j=1}^{i-1} Z^*_j)\). Then \((Z^*_1,Z^*_2,\dots )\) can be ordered in decreasing size and the random variable Z has the same law as \((Z^*_1,Z^*_2,\dots )\) ordered by decreasing size.

The next result is a generalisation of Theorem 1.1 in [24] to the case of general conjugacy classes. The proof is a simple adaptation of the proof of Schramm and we provide the details in an appendix.

Theorem 3.6

Suppose \(s=s(n)\) is such that \(sk/n \rightarrow c\) as \(n \rightarrow \infty \) for some \(c>c_\Gamma \). Then we have that for any \(m\in \mathbb {N}\)

in distribution as \(n \rightarrow \infty \) where \(Z=(Z_1,Z_2,\dots )\) is a Poisson–Dirichlet random variable.

4 Proof of curvature theorem

4.1 Proof of the upper bound on curvature

We claim that it is enough to show the upper bound for \(c>c_\Gamma \) in (15). Indeed, notice that \(c \mapsto \kappa _c\) is nondecreasing. Hence let \(c\le c_\Gamma \) and suppose we know that \(\limsup _{n\rightarrow \infty }\kappa _{c'} \le \theta (c')^2\) holds for all \(c' > c_\Gamma \). Then we have that \(\limsup _{n \rightarrow \infty }\kappa _c \le \theta (c')^2\) for each \(c'> c_\Gamma \). Taking \(c' \downarrow c_\Gamma \) and using the fact that \(\lim _{c' \downarrow c_\Gamma } \theta (c')=0\) shows that \(\lim _{n\rightarrow \infty }\kappa _c=0\).

Fix \(c>c_\Gamma \) and let \(t:=\lfloor cn/k\rfloor \). We need to show the upper bound in (15). In other words, we wish to prove that for some \(\sigma , \sigma ' \in {\mathcal {S}}_n\)

We will choose \(\sigma = {{\mathrm{id}}}\) and \(\sigma ' = \tau _1 \circ \tau _2\), where \(\tau _1, \tau _2\) are independent uniformly chosen transpositions. To prove the lower bound on the Kantorovich distance we use the dual representation of the distance \(W_1(X,Y)\) between two random variables X, Y:

Let \(f(\sigma ) = d({{\mathrm{id}}}, \sigma )\) be the distance to the identity (using only transpositions, as usual). Then observe that f is 1-Lipschitz. It suffices to show

We will now show (44) by a coupling argument. Construct the two walks \(X^{\tau _1\circ \tau _2}\) and \(X^{{{\mathrm{id}}}}\) as follows. Let \(\gamma _1,\gamma _2,\dots \) be a sequence of i.i.d. random variables uniformly distributed on \(\Gamma \), independent of \((\tau _1, \tau _2)\). Using Lemma 2.5 with \(\sigma _0 = \tau _1 \circ \tau _2\), which is independent of \(X^{{{\mathrm{id}}}}\), we can construct \(X^{\tau _1\circ \tau _2}_{t}\) as

Next we couple \(X^{{{\mathrm{id}}}}_{t}\) by constructing it as

Thus under this coupling we have that \(X^{\tau _1\circ \tau _2}_{t}=X^{{{\mathrm{id}}}}_{t} \circ \tau _1\circ \tau _2\). Let \(X=X^{{{\mathrm{id}}}}\), then from (44) the problem reduces to showing

We recall that a transposition can either induce a fragmentation or a coalescence of the cycles. Indeed, a transposition involving elements from the same cycle generates a fragmentation of that cycle, and one involving elements from different cycles results in the cycles being merged. (This property is the basic tool used in the probabilistic analysis of random transpositions, see e.g. [5] or [24]). Hence either \(\tau _{1}\) fragments a cycle of \(X_{t}\) or \(\tau _{1}\) coagulates two cycles of \(X_{t}\). In the first case, \(d({{\mathrm{id}}},X_t \circ \tau _1) = d({{\mathrm{id}}}, X_t \circ \tau _1) - 1\), and in the second case we have \(d({{\mathrm{id}}},X_t\circ \tau _1) = d({{\mathrm{id}}},X_t\circ \tau _1) + 1\). Let F denote the event that \(\tau _{1}\) causes a fragmentation. Then

Using the Poisson–Dirichlet structure described in Theorem 3.6 it is not hard to show that \(\mathbb {P}(F) \rightarrow \theta (c)^2/2\) (see, e.g., Lemma 8 in [6]). Applying the same reasoning to \(X_{t} \circ \tau _1\circ \tau _2\) and \(X_{t}\circ \tau _1\) we deduce that

from which the lower bound (45) and in turn the upper bound in (10) follow readily.

4.2 Proof of lower bound on curvature

We now assume that \(c>c_\Gamma \) and turn out attention to the lower bound on the Ricci curvature, which is the heart of the proof. Throughout we let \(k=|\Gamma |\) and \(t=\lfloor cn/k \rfloor \). With this notation in mind we wish to prove that

for some appropriate coupling of \(X^\sigma \) and \(X^{\sigma '}\), where the supremum is taken over all \(\sigma ,\sigma '\) with even distance. Note that we can make several reductions: first, by vertex transitivity we can assume \(\sigma = {{\mathrm{id}}}\) is the identity permutation. Also, by the triangle inequality (since \(W_1\) is a distance), we can assume that \(\sigma '= (i,j )\circ (\ell ,m)\) is the product of two distinct transpositions. There are two cases to consider: either the supports of the transpositions are disjoint, or they overlap on one vertex. We will focus in this proof on the first case where the support of the transpositions are disjoint; that is, i, j, l, m are pairwise distinct. The other case is dealt with very much in the same way (and is in fact a bit easier).

Clearly by symmetry \(\mathbb {E}d(X^{{{\mathrm{id}}}}_{t}, X^{(i,j)\circ (\ell ,m)}_{t} )\) is independent of i, j, \(\ell \) and m, so long as they are pairwise distinct. Hence it is also equal to \(\mathbb {E}d(X^{{{\mathrm{id}}}}_{t}, X^{\tau _1\circ \tau _2}_{t} )\) conditioned on the event A that \(\tau _1,\tau _2\) having disjoint support, where \(\tau _1\) and \(\tau _2\) are independent uniform random transpositions. This event has an overwhelming probability for large n, thus it suffices to construct a coupling between \(X^{{{\mathrm{id}}}}\) and \(X^{\tau _1\circ \tau _2}\) such that

Indeed, it then immediately follows from stochastic commutativity (Lemma 2.5) that the same is true with the expectation replaced by the conditional expectation given A, since the distance is bounded by two.

Next, let X be a random walk on \({\mathcal {S}}_n\) which is the composition of i.i.d. uniform elements of the conjugacy class \(\Gamma \). We decompose the random walk X into a walk \({\tilde{X}}\) which evolves by applying transpositions at each step as follows. For \(t =0,1, \ldots , \) write out

where \(\gamma _1,\gamma _2,\dots \) are i.i.d. uniformly distributed in \(\Gamma \). As before we decompose each step \(\gamma _s\) of the walk into a product of cyclic permutations, say

where \(r = \sum _{j\ge 2} k_j\). The order of this decomposition is irrelevant and can be chosen arbitrarily. For concreteness, we decide that we start from the cycles of smaller sizes and progressively increase to cycles of larger sizes. We will further decompose each of these cyclic permutation into a product of transpositions, as follows: for a cycle \(c = (x_1, \ldots , x_j)\), write

This allows to break any step \(\gamma _s\) of the random walk X into a number

of elementary transpositions, and hence we can write

where \(\tau ^{(j)}_s\) are transpositions. Note that the vectors \(( \tau ^{(i)}_s; 1\le i \le \rho )\) in (48) are independent and identically distributed for \(s = 1,2, \ldots \) and for a fixed s and \(1\le i \le \rho \), \(\tau ^{(i)}_s\) is a uniform transposition, by symmetry. However it is important to observe that the transpositions \(\tau _s^{(i)}\) are not independent. Nevertheless, they obey a crucial conditional uniformity which we explain now. First we have to differentiate between the set of times when a new cycle starts and the set of times when we are continuing an old cycle.

Definition 4.1

(Refreshment Times) We call a time s a refreshment time if s is of the form \(s=\rho \ell + \sum _{j=2}^m (j-1) k_j\) for some \(\ell \in \mathbb {N}\cup \{0\}\) and \(m \in \mathbb {N}\backslash \{1\}\).

We see that s is a refreshment time if the transposition being applied to \({\tilde{X}}\) at time s is the start of a new cycle. Using this we can describe the law of the transpositions being applied to \({\tilde{X}}\).

Proposition 4.1

(Conditional Uniformity) For \(s \in \mathbb {N}\) and \(i \le \rho \), the conditional distribution of \(\tau ^{(i)}_s\) given \(\tau ^{(1)}_s, \ldots , \tau ^{(i-1)}_s\) can be described as follows. We write \(\tau ^{(i)}_s = (x,y)\) and we will distinguish between the first marker x and the second marker y. There are two cases to consider:

-

(i)

\(s\rho + i\) is a refreshment time and thus \(\tau ^{(i)}_s\) corresponds to the start of a new cycle

-

(ii)

\(s\rho + i\) is not a refreshment time and so \(\tau ^{(i)}_s\) is the continuation of a cycle.

In case (i) x is uniformly distributed on \(S_i: = \{1, \ldots , n\} \setminus \text {Supp} (\tau _s^{(1)} \circ \cdots \circ \tau ^{(i-1)}_s)\) and y is uniformly distributed on \(S_i \setminus \{x\}\). In case (ii) x is equal to the second marker of \(\tau ^{(i-1)}_s\) and y is uniformly distributed in \(S_i\).

Note that in either case, the second marker y is conditionally uniformly distributed among the vertices which have not been used so far. This conditional independence property is completely crucial, and allows us to make use of methods (such as that of Schramm [24]) developed initially for random transpositions) for general conjugacy classes, so long as \(|\Gamma | = o(n)\). Indeed in that case the second marker y itself is not very different from a uniform random variable on \(\{1, \ldots , n\}\).

We will study this random walk using this new transposition time scale. We thus define a process \({\tilde{X}}=({\tilde{X}}_u: u = 0,1,\ldots )\) as follows. Let \(u\in \{ 0,1, \ldots \} \) and write \(u=s\rho +i\) where s, i are nonnegative integers and \(i<\rho \). Then define

Thus it follows that for any \(s \ge 0\), \({\tilde{X}}_{s\rho }=X_s\). Notice that \({\tilde{X}}\) evolves by applying successively transpositions with the above mentioned conditional uniformity rules.

Now consider our two random walks, \(X^{{{\mathrm{id}}}}\) and \(X^{\tau _1\circ \tau _2}\) respectively, started respectively from id and \(\tau _1 \circ \tau _2\), and let \({\tilde{X}}^{{{\mathrm{id}}}}\) and \({\tilde{X}}^{\tau _1 \circ \tau _2}\) be the associated processes constructed using (49), on the transposition time scale. Thus to prove (46) it suffices to construct an appropriate coupling between \({\tilde{X}}^{{{\mathrm{id}}}}_{t \rho }\) and \({\tilde{X}}^{\tau _1 \circ \tau _2}_{t\rho }\). Next, recall that for a permutation \(\sigma \in {\mathcal {S}}_n\), \(\mathfrak {X}(\sigma )\) denotes the renormalised cycle lengths of \(\sigma \), taking values in \(\Omega _\infty \) defined in (42). The walks \({\tilde{X}}^{{{\mathrm{id}}}}\) and \({\tilde{X}}^{\tau _1\circ \tau _2}\) are invariant by conjugacy and hence both are distributed uniformly on their conjugacy class. Thus ultimately it will suffice to couple \(\mathfrak {X}({\tilde{X}}^{{{\mathrm{id}}}}_{t \rho })\) and \(\mathfrak {X}({\tilde{X}}^{\tau _1\circ \tau _2}_{t \rho })\).

Fix \(\delta >0\) and let \(\Delta =\lceil \delta ^{-9} \rceil \). Define

Our coupling consists of three intervals \([0,s_1]\), \((s_1,s_2]\) and \((s_2,s_3]\).

Let us informally describe the coupling before we give the details. In what follows we will couple the random walks \({\tilde{X}}^{{{\mathrm{id}}}}\) and \({\tilde{X}}^{\tau _1\circ \tau _2}\) such that they keep their distance constant during the time intervals \([0,s_1]\) and \((s_2,s_3]\). In particular we will see that at time \(s_1\), the walks \({\tilde{X}}^{{{\mathrm{id}}}}\) and \({\tilde{X}}^{\tau _1\circ \tau _2}\) will differ by two independently uniformly chosen transpositions. Thus at time \(s_1\) most of the cycles of \({\tilde{X}}^{{{\mathrm{id}}}}\) and \({\tilde{X}}^{\tau _1\circ \tau _2}\) are identical but some cycles may be different. We will show that given that the cycles that differ at time \(s_1\) are all reasonably large, then we can reduce the distance between the two walks to zero during the time interval \((s_1,s_2]\). Otherwise if one of the differing cycles is not reasonably large, then we couple the two walks to keep their distance constant during the time interval \([0,s_1]\), \((s_1,s_2]\) and \((s_2,s_3]\).

More generally, our coupling has the property that \(d(X^{{{\mathrm{id}}}}_t, X^{\tau _1 \circ \tau _2}_t)\) is uniformly bounded, so that it will suffice to concentrate on events of high probability in order to get a bound on the \(L^1\)-Kantorovich distance \(W(X^{{{\mathrm{id}}}}_t, X^{\tau _1 \circ \tau _2}_t )\).

4.2.1 Coupling for \([0,s_1]\)

First we describe the coupling during the time interval \([0,s_1]\). Let \({\tilde{X}}=({\tilde{X}}_s:s \ge 0)\) be a walk with the same distribution as \({\tilde{X}}^{{{\mathrm{id}}}}\), independent of the two uniform transpositions \(\tau _1\) and \(\tau _2\). Then we have that by Lemma 2.5 for any \(s \ge 0\), \({\tilde{X}}^{\tau _1\circ \tau _2}_s\) has the same distribution as \({\tilde{X}}_s \circ \tau _1 \circ \tau _2\). Thus we can couple \(\mathfrak {X}({\tilde{X}}^{{{\mathrm{id}}}}_{s_1 })\) and \(\mathfrak {X}({\tilde{X}}^{\tau _1\circ \tau _2}_{s_1 })\) such that

4.2.2 Coupling for \((s_1,s_2]\)

For \(s \ge 0\) define \({\bar{X}}_s=\mathfrak {X}({\tilde{X}}^{{{\mathrm{id}}}}_{s+s_1 })\) and \({\bar{Y}}_s = \mathfrak {X}({\tilde{X}}^{\tau _1\circ \tau _2}_{s+s_1 })\). Here we will couple \({\bar{X}}_s\) and \({\bar{Y}}_s\) for \(s =0,\dots , \Delta \). During this time we aim to show that the discrepancies between \({\bar{X}}_0\) and \({\bar{Y}}_0\) resulting from performing the transpositions \(\tau _1\) and \(\tau _2\) at the end of the previous phase can be resolved. Our main tool for doing this will be a variant of a coupling of Schramm [24], which was already used in [6].

At each step s we try to create a matching between \({\bar{X}}_s\) and \({\bar{Y}}_s\) by matching an element of \({\bar{X}}_s\) to at most one element of \({\bar{Y}}_s\) of the same size. At any time s there may be several entries that cannot be matched. By parity the combined number of unmatched entries is an even number, and observe that this number cannot be equal to two. Now \({\tilde{X}}^{{{\mathrm{id}}}}_{s_1}\) and \({\tilde{X}}^{\tau _1\circ \tau _2}_{s_1}\) differ by two transpositions as can be seen from (50). This implies that in particular initially (i.e., at the beginning of \((s_1,s_2]\)), there are four, six or zero unmatched entries between \({\bar{X}}_0\) and \({\bar{Y}}_0\).

Fix \(\delta >0\) and let \(A(\delta )\) denote the event that the smallest unmatched entry between \({\bar{X}}_{0}\) and \({\bar{Y}}_0\) has size greater than \(\delta >0\). We will show that on the event \(A(\delta )\) we can couple the walks such that \({\bar{X}}_{\Delta }={\bar{Y}}_{\Delta }\) with high probability. On the complementary event \(A(\delta )^c\), couple the walks so that their distance remains O(1) during the time interval \((s_1,s_2]\), similar to the coupling during \([0,s_1]\).

It remains to define the coupling during the time interval \((s_1,s_2]\) on the event \(A(\delta )\). We begin by estimating the probability of \(A(\delta )\).

Lemma 4.2

For any \(c>1\) and \(\delta >0\),

where \(p(\delta ) \rightarrow 0\) as \(\delta \rightarrow 0\).

Proof

Recall that by construction \({\bar{X}}_0\) and \({\bar{Y}}_0\) only differ because of the two transpositions \(\tau _{1} \) and \(\tau _{2}\) appearing in (50).

Recall the hypergraph \(H_{s_1/\rho }\) on \(\{1,\dots ,n\}\) defined in the beginning of Sect. 3.1. Since \(c>c_\Gamma \), by Theorem 3.1, \(H_{s_1/\rho }\) has a (unique) giant component with high probability. Let \(A_1\) be the event that the four points composing the transpositions \(\tau _{1}, \tau _{2}\) fall within the largest component of the associated hypergraph \(H_{s_1/\rho }\). Since the relative size of the giant component converges in probability \(\theta (c)\) by Lemma 3.1, note that \(\mathbb {P}(A_1) \rightarrow \theta (c)^4\).

Furthermore, it follows from Theorem 3.6 that conditionally on the event \(A_1\), the asymptotic relative size of the cycles containing the four points making the transpositions \(\tau _1, \tau _2\) can be thought of as the size of four independent samples from a Poisson-Dirichlet distribution, multiplied by \(\theta (c)\). Hence the lemma is proved with \(p(\delta )\) being the probability that one of the four samples has a size smaller than \(\delta /\theta (c)\). Clearly \(p(\delta ) \rightarrow 0\) so the result is proved. \(\square \)

Recall that the transpositions which make up the walks \({\tilde{X}}^{{{\mathrm{id}}}}\) and \({\tilde{X}}^{\tau _1\circ \tau _2}\) obey what we called conditional uniformity in Proposition 4.1. For the duration of \((s_1,s_2]\) we will assume the relaxed conditional uniformity assumption, which we describe now.

Definition 4.2

(Relaxed Conditional Uniformity) For \(s=s_1+1,\dots ,s_2\) suppose we apply the transposition (x, y) at time s. Then

-

(i)

if s is a refreshment time then x is chosen uniformly in \(\{1,\dots ,n\}\),

-

(ii)

if s is not a refreshment time then x is taken to be the second marker of the transposition applied at time \(s-1\).

In both cases we take y to be uniformly distributed on \(\{1,\dots ,n\}\backslash \{x\}\).

In making the relaxed conditional uniformity assumption we are disregarding the constraints on (x, y) given in Proposition 4.1. However the probability we violate this constraint at any point during the interval \((s_1,s_2]\) is at most \(2(s_2-s_1)\rho /n=2\Delta k/n \rightarrow 0 \); and on the event that this constraint is violated the distance between the random walks can increase by at most \((s_2-s_1)=\Delta \). Hence we can without a loss of generality assume that during the interval \((s_1,s_2]\) both \({\tilde{X}}^{{{\mathrm{id}}}}\) and \({\tilde{X}}^{\tau _1\circ \tau _2}\) satisfy the relaxed conditional uniformity assumption.

Now we show that on the event \(A(\delta )\) we can couple the walks such that \({\bar{X}}_{\Delta }={\bar{Y}}_{\Delta }\) with high probability. The argument uses a coupling of Berestycki et al. [6], itself a variant of a beautiful coupling introduced by Schramm [24]. We first introduce some notation. Let

Notice that the walks \({\bar{X}}\) and \({\bar{Y}}\) both take values in \(\Omega _n\).

Marginal evolution Let us describe the evolution of the random walk \({\bar{X}}=({\bar{X}}_s: s = 0,1,\dots )\). Suppose that \(s\ge 0\) and \({\bar{X}}_{s}= (x_1,\dots ,x_n)\). Now imagine the interval (0, 1] tiled using the intervals \((0,x_1],\dots ,(0, x_n]\) (the specific tiling rule does not matter). Initially for \(s=0\), and more generally if s is a refreshment time, we select \(u \in \{1/n,\dots ,n/n\}\) uniformly at random and then call the tile that contains u the marked tile. If \(s \ge 1\) is not a refreshment time then the marked tile is the one containing the second marker y of Proposition 4.1 from the previous step. Either way, we have a distinguished tile (the tile containing the ‘first marker’ at the beginning of each step \(s = 0, 1, \ldots \)

We now describe the marginal evolution of this tiling for one step. In fact this evolution takes as an input a tiling \({\bar{X}}_s\) and a marked tile I. The output will be another tiling \({\bar{X}}_{s+1}\) and a new marked tile for the next step. Let I be the tile containing the first marker at the beginning of the step, and place I first from left. (I represents the cycle containing the first marker u and we imagine that u is the leftmost point of that tile, i.e., in position 1 / n). Select \(v \in \{2/n,\dots ,n/n\}\) uniformly at random and let \(I'\) be the tile that v falls into. Then there are two possibilities:

-

if \(I' \ne I\) then we merge the tiles I and \(I'\). The new tile we created is now marked for the next step.

-

If \(I=I'\) then we split I into two fragments, corresponding to where v falls. Thus, one of size \(v-1/n\) and the other of size \(|I|-(v-1/n)\). The rightmost one of these two tiles, containing v, is now marked for the next step. Now \({\bar{X}}_{s+1}\) is the sizes of the tiles in the new tiling we have created (with additional reordering of tiles in decreasing order). .

This defines a transformation \(T( {\bar{X}}_s, I, v)\). The evolution described above has the law of the projection onto \(\Omega _n\) of \({\bar{X}}\). Indeed, suppose we apply the transposition (x, y) to \({\bar{X}}_s\) in order to obtain \({\bar{X}}_{s+1}\). The marked tile at time s corresponds to the cycle of \({\bar{X}}_{s}\) containing x: if s is a refreshment time then \(x\in \{1,\dots ,n\}\) is chosen uniformly, otherwise x is the second marker from the previous step.

Coupling We now recall the coupling of [6]. Let \(s \ge 0\). Suppose that \({\bar{X}}_{s}={\bar{X}}=(x_1,\dots ,x_n)\) and \({\bar{Y}}_s= {\bar{Y}}=(y_1,\dots ,y_n)\). Then we can differentiate between the entries that are matched and those that are unmatched: we say that two entries from \({\bar{X}}\) and \({\bar{Y}}\) are matched if they are of identical size. Our goal will be to create as many matched parts as possible and as quickly as possible. When putting down the tilings \({\bar{X}}\) and \({\bar{Y}}\), associated with \({\bar{X}}\) and \({\bar{Y}}\) respectively, we will do so in such a way that all matched parts are to the right of the interval (0, 1] and the unmatched parts occupy the left part of the interval.