Abstract

Non-isometric surface registration is an important task in computer graphics and computer vision. It, however, remains challenging to deal with noise from scanned data and distortion from transformation. In this paper, we propose a Huber-\(L_1\)-based non-isometric surface registration and solve it by the alternating direction method of multipliers. With a Huber-\(L_1\)-regularized model constrained on the transformation variation and position difference, our method is robust to noise and produces piecewise smooth results while still preserving fine details on the target. The introduced as-similar-as-possible energy is able to handle different size of shapes with little stretching distortion. Extensive experimental results have demonstrated that our method is more accurate and robust to noise in comparison with the state-of-the-arts.

Similar content being viewed by others

1 Introduction

Surface registration has drawn intensive attention in computer graphics and computer vision with massive applications such as film production and computer games. With the development of geometry acquisition technology, 3D scanning systems allow us to capture high-resolution and highly detailed 3D geometries even for dynamic scenes. However, these scanned data often contain noise, especially from commodity depth sensors such as Microsoft Kinect. To tackle this problem, surface registration is introduced to deform a high quality model (template) so that it aligns with the scanned shape (target). Often, the size and details of the template differ from that of the target. Hence, registration methods which are robust to noise and capable of handling different sizes and details have been investigated.

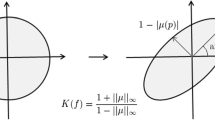

According to the type of deformation mapping [6], the surface registration is generally categorized into two groups: rigid registration and non-rigid registration. The rigid registration [2, 3] aims to find a rigid-body transformation between two shapes, and thus, it cannot handle deformable (non-rigid) shapes. The non-rigid registration, including isometric registration and similar registration, is to find a set of local transformations that align two shapes. The isometric registration which deforms the shape in an as-rigid-as-possible (ARAP) manner has been widely used between isometric shapes [7, 10, 25]. However, it is not capable of handling shapes with different sizes since it tries to preserve the length of edges. To address this limitation, similar registration approaches [8, 29] introduce a scale factor into each local transformation and formulate as an as-similar-as-possible (ASAP) energy, which allows local scalability to handle the size difference.

For the accuracy and robustness of registration, the transformation variation and position difference constraints are usually formulated as a smoothness term and a data term, respectively, to measure the smoothness of the neighboring transformation and the closeness of registration shapes, respectively. Most works [1, 8, 10] use the classic squared \(L_2\)-norm on both constraints (\(L_2\)-\(L_2\)). However, the smoothness term in \(L_2\)-norm tends to penalize large transformation variation. It is not suitable for articulated models where large deformation variations exist at their joints. This could also be seen in image processing where discontinuities are allowed to highlight the sharp edges in image denoising [5]. The classic model in image denoising is ROF model [18], where the total variation (TV) term is an \(L_1\)-norm-based regularization and the data term is in squared \(L_2\)-norm (TV-\(L_2\)). Based on ROF model, Yang et al. [27] propose a sparse non-rigid registration method with an \(L_1\)-norm regularized on the smoothness term. However, ROF tends to produce over-regularized results as the \(L_2\)-norm strives to distribute errors evenly, thus fitting the result evenly on the noisy parts. To tackle this issue, TV-\(L_1\) model [30] is proposed to efficiently remove the outliers while preserving fine details. Based on TV-\(L_1\) model, Yang et al. [11] propose a dual sparsity registration approach on both position and transformation sparsity, allowing the positional error to concentrate on small regions. However, TV-\(L_1\) model tends to produce piecewise constant results as shown in Werlberger et al. [26]. We propose a Huber-\(L_1\)-based non-isometric surface registration to reduce the staircasing artifacts known from TV. A Huber-norm is applied on the transformation variation, which significantly reduces artificial discontinuities and produces piecewise smooth results. Meanwhile, an \(L_1\)-norm is applied on the position difference, solving the over-regularized issue that appeared in \(L_2\)-norm. The ASAP energy is introduced to handle shapes of different sizes and poses. To reduce the risks of surface foldover, we adopt Laplacian energy to smooth the template on both primal and dual domains. We compare Huber-\(L_1\) to other state-of-the-art models in the experiments on clean, noisy and real scanned data, demonstrating the advantage and robustness of our method.

The main contributions of this work are summarized as follows:

-

We propose a Huber-\(L_1\)-based non-isometric registration method regularized on transformation variation and position difference. The Huber-\(L_1\) model is solved by the alternating direction method of multipliers (ADMM) with each energy term being represented in matrix form. The proposed model is robust to noise and produces piecewise smooth results with the target’s fine details being well preserved.

-

We incorporate ASAP energy in the registration method, which is not only able to handle shapes with different sizes but can also reduce local stretch and distortion. Since ASAP energy preserves the overall geometry of the template while allowing local scaling, it demands a few user efforts to provide a good initial shape estimation.

-

We introduce Laplacian energy to relax the template on both primal and dual domains, improving the template mesh quality and decreasing the foldover occurrence.

2 Related work

Over last two decades, surface registration has been deeply researched. A complete survey can be referred in [24]. Here, we briefly review the related works on three categories: rigid registration, isometric registration and non-isometric registration.

Rigid registration The most classic algorithm in rigid registration is iterative closest point (ICP) [2]. It alternates between closest point searching and optimal transformation solving. To improve the converge rate of ICP, Low et al. [13] used a point-to-plane metric in which the energy function is the sum of the squared distance between a point and the tangent plane at its correspondence point. One main issue for ICP and its variants is that they are sensitive to outliers and missing data. To tackle this issue, Bouaziz et al. [3] propose a new formulation of ICP using sparsity inducing norms. However, methods in this category cannot deal with deformable shapes registration.

Isometric registration Huang et al. [7] present a non-rigid registration of a pair of partially overlapping surfaces, constraining transformation locally as-rigid-as-possible (ARAP). Rouhani et al. [17] propose a non-rigid registration between two clouds of points, where the source set is clustered into small patches and then deformed rigidly to align with the target. Sussmuth et al. [23] slide the template mesh along the time–space surface in ARAP manner to reconstruct animated meshes from a series of time-deforming point clouds. Wand et al. [25] take a set of time-varying unstructured sample points as input and reconstruct a single shape and a local deformation field. Li et al. [10] introduce a registration algorithm for partial range scans of deforming shapes. They deform local features as rigidly as possible to avoid shearing and stretching artifacts. Yang et al. [11] propose a dual-sparsity-based non-rigid registration, which adds orthogonality constraints on the local transformation to preserve local rigidity.

Non-isometric registration Based on shape matching [15], Papazov et al. [16] compute shape transitions based on local similarity transforms which allow to model not only as-rigid-as-possible deformations but also local and global scalability. Liao et al. [12] reconstruct complete 3D deformable models over time by a single depth camera. They constrain local transformation to isotropic scales and rotations. Amberg et al. [1] propose an optimal step non-rigid ICP algorithm for surface registration, where an affine transformation is assigned to each vertex and a stiffness term is used to minimize the difference in the transformation of neighboring vertices. Sumner et al. [20] compute the set of affine transformations induced by the deformation of the source mesh, and the smoothness term is added to constrain the transformation matrices for adjacent triangles to be equal. Yeh et al. [28] introduce a template fitting method for 3D surface meshes and optimize the local transformation by applying bi-Laplacian energy. Yoshiyasu et al. [29] present a non-isometric surface registration method that restricts deformations locally as conformal as possible. However, the discretized energy they adopted is not a consistent discretization of a continuous energy and thus may produce foldover and distortion. To tackle this issue, Jiang et al. [8] propose a consistent as-similar-as-possible surface registration method. They apply the \(L_2\)-norm regularization on both the transformation variation and position difference (\(L_2\)–\(L_2\)), which results in consistent discretization for surfaces and improves the quality of the surface deformation and registration. However, the approach tends to produce over-smoothed results given a noisy target. Yang et al. [27] propose a sparse non-rigid registration method with an \(L_1\)-norm regularized on the transformation variation and an \(L_2\)-norm regularized on the position difference (\(L_1\)-\(L_2\)). The \(L_1\)-norm-regularized smoothness term usually results in artificial discontinuities, and the \(L_2\)-norm-regularized data term often loses fine details while smoothing the noise [5]. To tackle these issues, we regularize the smoothness term in Huber-norm, which significantly reduces the artificial discontinuities. Unlike most work using the squared \(L_2\)-norm in position difference constraints, we apply an \(L_1\)-norm-regularized data term, which is not only able to remove noise but also preserve fine details.

Huber-\(L_1\) algorithm: a sampled points (yellow dots); b remeshing from the sampled points as embedded coarse mesh; c input of target surface; d 9 feature points specified by user (red dots for target and green dots for template); e coarse fitting; f mid-scale fitting; g reconstructed through embedded deformation; h fine fitting on the dual domain; i fine fitting on the primal domain; j subdivision; k the final result

3 Surface registration

In this paper, we adopt a coarse-to-fine fitting strategy to implement the whole registration process in three steps: coarse fitting, mid-scale fitting and fine fitting. For each step, different energy terms are used and combined, which will be introduced at their first appearance. The comparison results with and without some energy terms will be illustrated to stress the significance of these energies.

3.1 Notations

Suppose the template mesh is composed of n vertices \({\mathcal {P}} \triangleq \{{\mathbf {p}}_1, \ldots ,{\mathbf {p}}_n\}\), where \({\mathbf {p}}_i \triangleq [x_i, y_i, z_i]^\top \) is a 3D vertex position in Euclidean coordinate. In coarse fitting step, n is the vertex number of coarse template mesh. The vertices of the target are denoted as \({\mathcal {Q}} = \{{\mathbf {q}}_1, \ldots ,{\mathbf {q}}_m\}\). For non-rigid registration, a \(3 \times 4\) affine transformation matrix \({\mathbf {A}}_i \triangleq [{\mathbf {X}}_i, {{\mathbf {t}}}_i]\) is associated with each vertex \({\mathbf {p}}_i\) of the template, where \({\mathbf {X}}_i\) is a \(3 \times 3\) linear transformation matrix and \({{\mathbf {t}}}_i\) is a \(3 \times 1\) translation vector. For simplification, we concatenate \({\mathbf {p}}_i, {\mathbf {q}}_i, {\mathbf {X}}_i, {{\mathbf {t}}}_i\) into a \(n \times 3\) matrix \({\mathbf {P}} \triangleq [{\mathbf {p}}_1 \ldots {\mathbf {p}}_n]^\top \), a \(m \times 3\) matrix \({\mathbf {Q}} \triangleq [{\mathbf {q}}_1 \ldots {\mathbf {q}}_m]^\top \), a \(3n \times 3\) matrix \({\mathbf {X}} \triangleq [{\mathbf {X}}_1 \ldots {\mathbf {X}}_n]^\top \) and a \(n \times 3\) matrix \({\mathbf {T}} \triangleq [{{\mathbf {t}}}_1 \ldots {{\mathbf {t}}}_n]^\top \), respectively. Similarly, the vertices on the template dual mesh \({\mathcal {P}}^*\) are denoted by \({\mathbf {P}}^* \triangleq [{\mathbf {p}}^*_1 \ldots {\mathbf {p}}^*_{n^*}]^\top \), where \(n^*\) is the number of the vertices on the template dual mesh, which is also equal to the number of triangle faces on the template primal mesh. Again, a translation vector will be assigned to each dual vertex, all of which can be concatenated as \({\mathbf {T}}^* \triangleq [{{\mathbf {t}}}^*_1 \ldots {{\mathbf {t}}}^*_{n^*}]^\top \).

3.2 Coarse fitting

Instead of fitting the fine template surface from the beginning, a coarse mesh extracted from the origin template mesh is used to fit for efficiency, as the coarse mesh involves less unknown parameters. We employ the farthest point sampling approach [14] to sample certain number of vertices to approximately represent the shape of the template (Fig. 1a). Note that all the sampled vertices are the subset of the original vertex set. The geodesic remeshing technique [22] is then applied to generate the coarse mesh out of the samples vertices (Fig. 1b). Afterward, the feature points between the coarse template mesh and the target are specified by users (Fig. 1d). The coarse template is then fitted to the specified feature points to approximate the overall size of the target (Fig. 1e). The total energy in coarse fitting step is composed of four energies: \({\textit{E}}_{\mathrm {f}}\) penalizes the distances between the feature points of template and target surface; \({\textit{E}}_{\mathrm {ASAP}}\) constrains deformation ASAP; \({\textit{E}}_{\mathrm {r}}\) penalizes the transformation variation; \({\textit{E}}_{\mathrm {l}}\) penalizes the edge lengths difference locally:

Each energy term will be introduced as follows, and \(w_{\mathrm {f}}\), \(w_{\mathrm {ASAP}}\), \(w_{\mathrm {r}}\) and \(w_{\mathrm {l}}\) are the weights which represent the influence of each energy term.

3.2.1 Feature point constraints

To pull feature points on the template toward their correspondence on the target, we define the feature point constraint energy as:

where \({\mathcal {F}}\) is the template index set of the feature points, \({\mathbf {p}}_i\) is the position of ith feature point on the template, \(\mathrm {idx}(i)\) is the index of the corresponding feature point on the target. To fit this energy (and other energies subsequently) into ADMM [4] for optimization, we need to rewrite the energy (and other energies subsequently introduced) in matrix form. We define two sparse matrices \({\mathbf {C}}_\mathrm {f}\), \({\mathbf {D}}_\mathrm {f}\) which select the feature point pairs between the template and the target. Assuming the rth feature point pair is \({\mathbf {p}}_i\) on the template and \({\mathbf {q}}_{\mathrm {idx}(i)}\) on the target, then

Therefore, we can rewrite \({\mathrm {E}}_{\mathrm {f}}\) as

3.2.2 ASAP energy

The transformation from the template to the target can involve large deformation. To prevent shear distortion (Fig. 2), we constrain the deformation matrix \({\mathbf {X}}_i\) to an orthogonal rotation matrix \({\mathbf {R}}_i\). As we deal with non-isometric registration, the size between the template and the target can be different. Therefore, we add a scalar \(s_i\) to make the deformation scalable. The ASAP energy is defined as

where \(\Vert \cdot \Vert _F\) denotes the Frobenius norm, \({\mathbf {I}}_3\) is a \(3 \times 3\) identity matrix and \({\mathrm {det}}(\cdot )\) is the determinant of a matrix. To write the energy in matrix form, we concatenate \(s_i, {\mathbf {R}}_i\) into a \(n \times 1\) vector \({\mathbf {s}} = [s_1\ldots s_n]^\top \) and a \(3n \times 3\) matrix \({\mathbf {R}} \triangleq [{\mathbf {R}}_1 \ldots {\mathbf {R}}_n]^\top \). The ASAP energy can then be expressed as:

where \({\mathrm {diag}}(\cdot )\) returns the block-wise diagonal matrix of each row vector in the input matrix and \(\otimes \) denotes the operator of Kronecker product.

3.2.3 Regularization

We assign an affine transformation to each vertex. These transformations are not independent with each other, and the nearby transformations should have overlapping influence. Therefore, the computed transformations should be consistent with respect to one another. In practice, articulated animals only have large transformation deviation at joints, so the transformation on the parts between joints should be piecewise smooth with only small deviations. To produce a piecewise smooth result (Fig. 3), we apply a Huber-norm-regularized model constrained on the transformation variation. This metric behaves like an \(L_2\)-norm below a certain threshold \(\varepsilon \) and like an \(L_1\)-norm above. The regularization energy is defined as:

where \({ {\mathbf {e}}}_{ij}\) is a directional edge from vertex i to vertex j, \(\mathbf {{\mathcal {E}}}\) is the half-edge set of the template mesh. Note that an edge has two opposite directional half-edges. \(\Vert \cdot \Vert _\varepsilon \) is the Huber-norm defined as:

\(\varepsilon > 0\) is a threshold, \(\Vert \cdot \Vert _1, \Vert \cdot \Vert _2\) are the \(L_1, L_2\) norm, respectively. In order to express the regularization energy with respective to \({\mathbf {X}}\) and \({\mathbf {T}}\), we introduce a selection matrix \({\mathbf {J}} \in \{1\}^{|\mathbf {{\mathcal {E}}}|\times n}\) and a directional differential matrix \({{\mathbf {K}}} \in \{-1, 1\}^{|\mathbf {{\mathcal {E}}}|\times n}\). Specifically, each row of \({\mathbf {J}}\) and \({{\mathbf {K}}}\) corresponds to a half-edge in \(\mathbf {{\mathcal {E}}} \) and each column of them corresponds to a vertex in \({\mathcal {P}}\). Without loss of generality, we assume the r-th rows in \({\mathbf {J}}\) and \({{\mathbf {K}}}\) are associated with the half-edge \({ {\mathbf {e}}}_{ij}\). Each row in \({\mathbf {J}}\) denoted by \({\mathbf {J}}(r,\cdot )\) only has one nonzero entry, whose column corresponds to the start vertex \({\mathbf {p}}_i\) of the half edge \({ {\mathbf {e}}}_{ij}\), i.e., \({\mathbf {J}}(r,i) = 1\). Each row in \({{\mathbf {K}}}\) denoted by \({{\mathbf {K}}}(r,\cdot )\) contains two nonzero entries. The entry linked to the reference vertex \({\mathbf {p}}_i\) is set at − 1, while the one linked to the neighboring vertex \({\mathbf {p}}_j\) is set at 1, i.e., \({{\mathbf {K}}}(r, i) = -\,1\), \({{\mathbf {K}}}(r, j) = 1\). Then, the regularization term can be rewritten as:

3.2.4 Laplacian energy

To improve the mesh quality, we apply the uniform Laplacian operator on the vertex position, enforcing each vertex to strive to lie in the centroid of its one-ring neighbors and thus the edge lengths strive to be locally equalized. The Laplacian energy is defined as follows:

where \({\mathbf {L}}\) is the uniform Laplacian matrix corresponding to the mesh connectivity:

where \(d_i\) is the valence of vertex i.

3.3 Mid-scale fitting

This fitting step deforms the coarse mesh as close as possible to the target. Apart from those constraints adopted in the previous step, the data constraint is also applied to attract the coarse mesh toward the target gradually (Fig. 1f). The energy in this step is denoted by:

3.4 Data constraint

To pull the template toward the target, we need to determine the reliable correspondences between the template and the target. For each vertex \({\mathbf {p}}_i\) on the template, we project it onto the target along its normal direction. The projection \({\mathbf {c}}_i\) is regarded as a reliable correspondence only if:

-

\({\mathbf {c}}_i\) is inside a triangle of the target.

-

The distance between \({\mathbf {c}}_i\) and \({\mathbf {p}}_i\) is under a threshold \(\alpha \).

-

The angle between the normals at \({\mathbf {c}}_i\) and \({\mathbf {p}}_i\) is under a threshold \(\Theta \).

We apply \(L_1\) norm on the position difference to allow a small fraction of regions with large positional error, which is more robust against outliers and fits to the target’s details better. The data term is defined as:

where \({\mathcal {C}}\) is the template index set of the correspondence. In order to express the data term in regard to \({\mathbf {T}}\), we stack all \({\mathbf {c}}_i\) into a matrix \({\mathbf {C}}\). Similar to \({\mathbf {C}}_\mathrm {f}\), we define a sparse matrix \({\mathbf {C}}_\mathrm {d}\) to indicate the corresponding vertices on the template. Therefore, we can rewrite the data term as:

3.5 Fine fitting

In this step, the dense mesh is first reconstructed from the mid-scale fitting result via embedded deformation method [21] (Fig. 1g). To avoid foldovers and improve the mesh quality, the dual-domain relaxation strategy [28] is applied. We alternatively perform the relaxation algorithm between the primal domain and the dual domain. The reconstructed primal mesh is first transformed into its corresponding dual mesh, and then, the relaxation algorithm is applied on the dual domain (Fig. 1h). The energy in the dual domain is defined as:

After that, the relaxation algorithm is applied in the primary domain (Fig. 1i), whose energy can be expressed as:

where \({\textit{E}}_\mathrm {c}\) is the consistent energy transforming the template from the dual domain to the primary domain.

3.6 Consistency constraint

It is well known that one dual mesh corresponds to a primal mesh and their vertices should be consistent: the ith dual vertex should be equal to the centroid vertex of the ith triangle of the primal mesh (Fig. 4). The consistency energy is defined as:

where \(\{i_1, i_2, i_3\}\) are the indices of the primal vertices participating in the ith triangle of the primal mesh. Again, in matrix form, we could rewrite this energy as:

where \({\mathbf {C}}_{\mathrm {c}}\) is a \(n^* \times n\) matrix:

If the resolution of the template mesh is insufficient to fit the target tightly, a uniform or adaptive subdivision approach can be employed. Here, we adopt 1–4 uniform subdivision method [9] to subdivide the template (Fig. 1j), and then, the dual-domain relaxation algorithm is performed on the subdivided template mesh again.

4 Optimization

The optimization process for each fitting step is quite similar. We take the mid-scale step as an example to explain the optimization process as it includes all kinds of norm appeared in the algorithm. Expanding each term in (6) gives us:

To solve the non-differential Huber-norm and \(L_1\)-norm, we introduce two auxiliary variables \({\mathbf {F}}\), \({\mathbf {G}}\) to change the minimization of (11) into the following form:

where \({\mathbf {H}} = \mathrm {diag}({{\mathbf {K}}} {\mathbf {P}})({\mathbf {J}}\otimes {\mathbf {I}}_3)\) is introduced for conciseness.

To solve the constrained minimization (12), we transform the original problem to iterative minimization of its augmented Lagrangian form:

where \(\rho _1\) and \(\rho _2\) are positive constants, \({\mathbf {Y}}_1\) and \({\mathbf {Y}}_2\) are Lagrangian multipliers and \(\langle \cdot , \cdot \rangle _F\) is the Forbenius inner product. We solve this problem by using the ADMM algorithm, the detailed derivation of which is referred to “Appendix A.”

Now we could summarize the optimization in mid-scale fitting step in Algorithm 1. The optimization consists of two loops: the outer loop adjusts the weights and searches the correspondences to construct \({\mathbf {E}}_\mathrm {d}\), while the inner loop optimizes the translation \({\mathbf {T}}\) for each template vertex. Once the inner loop converges, \({\mathbf {T}}\) will be used to update the template vertex position, and then, a new outer iteration starts again. In this algorithm, l and k represent the indices of the outer and inner iteration, respectively. The optimization problems in coarse fitting and fine fitting steps can be solved in the same way.

5 Experiments

We evaluate the performances of the proposed approach by comparing with state-of-the-art algorithms on clean datasets, noisy datasets and real scans, respectively. The number of feature points, vertices and faces of models used in each example is shown in Table 1. All the algorithms are implemented in MATLAB, and all the statistics are measured on an Intel Xeon E5 3.4 GHz 64-bit workstation with 16GB of RAM.

5.1 Parameters and weights

In the data constraints, we set \(\Theta = 90^\circ \), \(\alpha = 0.05r_{\mathrm {box}}\), where \(r_{\mathrm {box}}\) is the length of the target bounding box diagonal. In the regularization term, \(\epsilon = 0.1\) is used, \(\rho = 1\) for the optimization. As for the weights, we use \(w_{\mathrm {ASAP}} = 1\), \(w_{\mathrm {r}}=10\), \(w_{\mathrm {f}}=1000\), \(w_{\mathrm {l}}=10\) for the whole coarse fitting step to highlight the feature point constraints. In the mid-scale step, \(w_{\mathrm {d}}\) is initialized to 0.1 and then increased by 0.1 for each outer iteration. \(w_{\mathrm {l}}\) is initialized to 100 and then decreased by 10 for each outer iteration until reaching 1. We gradually increase \(w_{\mathrm {d}}\) and reduce \(w_{\mathrm {l}}\) so that the good quality of the template mesh can be maintained during the registration to avoid the foldover occurrence. The other weights are the same as those in the coarse fitting step. In the fine fitting step, we set \(w_{\mathrm {d}}=0.1\), \(w_{\mathrm {r}}=0.1\) for (8); \(w_{\mathrm {c}}=1000\) for (9); \(w_{\mathrm {l}}\) is initialized to 100 and then decreased by 10 for each outer iteration until reaching 1 through the whole fine fitting step; \(w_{\mathrm {d}}\) is increased by 0.1 for each outer iteration as the same reason in the mid-scale step.

5.2 Results on clean data

We compare our method with state-of-the-art non-isometric registration methods (Fig. 5): consistent as-similar-as-possible surface registration (CASAP) [8], as-conformal-as-possible surface registration (ACAP) [29], the shape matching-based registration method that minimizes the as-similar-as-possible energy (SM-ASAP) [16] and the registration method that utilizes the point-based deformation smoothness regularization (PDS) [1]. To evaluate the registration accuracy quantitatively, we follow the criterion used in [8, 11] and measure (1) distance error, which is the average distance from the vertices of the deformed template to the corresponding points on the target relative to the target bounding box diagonal, (2) intersection error, which is the number of self-intersecting faces, (3) Hausdorff error, the largest distance between two shapes with respective to the target bounding box diagonal. The statistics data can be found in Table 2. It is obvious that our method Huber-\(L_1\) produces least errors without any foldover generated. Although CASAP and ACAP can fit the template close to the target, as shown at the left ankle of the bouncing model, they still suffer from the foldover issue when the deformation is dramatic. SM-ASAP does not require feature points, but it is only able to handle surfaces with close initial alignment and similar poses. PDS allows for affine transformation for each vertex, which makes it too weak against shear distortions as shown at both arms of the bouncing model. Table 3 shows the iteration steps and time taken by each method. The total time cost in SM-ASAP and PDS is relatively small; however, their results are undesirable with large errors and many foldovers. The total time used in the rest of three methods is almost on the same level, but our method gets the best result with no foldover occurred.

5.3 Results on noisy data

In this subsection, we set up two different experiments to demonstrate the robustness of our method. In both experiments, the targets are polluted with noise along the normal direction of each vertex by multiplying the standard deviation of the average length of the edges in the target.

First, we compare our method with the start-of-the-arts on the noisy data in Fig. 6. The quantitative evaluation is shown in Table 4. Affected by the noise, CASAP and ACAP get poor initial shape estimation and regard some noise as correspondence, which makes parts of template fitted to noise as shown at the right waist of CASAP and the left arm of ACAP. SM-ASAP and PDS still produce poor results as on the clean data. Thanks to the dual relaxation and Huber-\(L_1\) regularization, our method is robust against noise and achieves more accurate results than other methods without any foldover generated.

Second, instead of comparing with a particular method (e.g., CASAP), we compare our Huber-\(L_1\) with different norms applied on the regularization term and data term, including TV-\(L_1\), TV-\(L_2\) and \(L_2\)–\(L_2\), as shown in Fig. 7. To ensure fair comparison, the correspondences among different approaches are the same and have been given as priors. As the \(L_2\)-norm is easily affected by the outliers, TV-\(L_2\) and \(L_2\)-\(L_2\) produce larger errors than Huber-\(L_1\) and TV-\(L_1\), especially at the places with large deformation (seen at the shoulder and butt of the gorilla in SNR and \(L_2\)–\(L_2\)). Due to the \(L_1\)-norm’s tendency on favoring sparse solution, the effect caused by the regularizer leads to piecewise constant solutions (shown at the tail of the dog in TV-\(L_1\)). This effect can be reduced significantly by using a quadratic penalization for small gradient magnitudes while sticking to linear penalization for larger magnitudes to maintain the discontinuity properties known from TV, which is the Huber-norm we adopted here. The quantitative evaluation is shown in Table 5. As the benchmark models also involve foldovers, we ignore the intersection error in the table. With Huber-\(L_1\) regularization scheme, our method is not only robust against noise but also produces piecewise smooth results with least errors.

5.4 Results on real scans

Finally, we compare our method with the state-of-the-art approaches on real scan in Fig. 8. The quantitative evaluation is shown in Table 6. In terms of distance error and Hausdorff error, CASAP and ACAP have competitive results with our method. However, they still produce foldovers at the canthus and nostril and especially generate poor results around the noisy boundary and the missing parts of the target (seen from the side view). Compared to CASAP and ACAP, SM-ASAP and PDS produce less foldovers; however, they have larger distance errors and Hausdorff errors so that their results even look dissimilar to the target intuitively. On the contrary, Huber-\(L_1\) produces least errors with no foldover generated, which demonstrates our method is more robust to a noisy and incomplete target.

6 Conclusions

We have proposed a novel non-isometric surface registration approach based on the Huber-\(L_1\) model. The Huber-norm regularizes on transformation variation, which is robust to noise and produces piecewise smooth result. The position difference is regularized in \(L_1\)-norm, which preserves the fine details on the target while smoothing the noise. The ASAP energy allows us to handle shapes in different sizes. To improve the efficiency and robustness of registration, a coarse-to-fine strategy is adopted. The Laplacian energy relaxes the template on the primal and dual domain, reducing self-intersection and foldover occurrence and improving the mesh quality. The experiments on various data have shown our method is more robust and accurate than other state-of-the-art approaches.

In the future, we will detect the inversion of the elements to prevent the occurrence of foldover completely during registration. In addition, if the feature points can be detected automatically from deep learning, the whole registration process will be implemented without any user intervention. This would be another interesting research topic.

References

Amberg, B., Romdhani, S., Vetter, T.: Optimal step nonrigid ICP algorithms for surface registration. In: IEEE Conference on Computer Vision and Pattern Recognition, 2007, CVPR’07, pp. 1–8. IEEE (2007)

Besl, P.J., McKay, N.D.: A method for registration of 3-D shapes. IEEE Trans. Pattern Anal. Mach. Intell. 14(2), 239–256 (1992)

Bouaziz, S., Tagliasacchi, A., Pauly, M.: Sparse iterative closest point. In: Computer graphics forum, vol. 32, pp. 113–123. Wiley (2013)

Boyd, S., Parikh, N., Chu, E., Peleato, B., Eckstein, J., et al.: Distributed optimization and statistical learning via the alternating direction method of multipliers. Found. Trends® Mach. Learn. 3(1), 1–122 (2011)

Chambolle, A., Pock, T.: A first-order primal-dual algorithm for convex problems with applications to imaging. J. Math. Imag. Vis. 40(1), 120–145 (2011)

Floater, M.S., Hormann, K.: Surface parameterization: a tutorial and survey. In: Dodgson, N., Floater, M.S., Sabin, M. (eds.) Advances in Multiresolution for Geometric Modelling, pp. 157–186. Springer, Berlin (2005)

Huang, Q.X., Adams, B., Wicke, M., Guibas, L.J.: Non-rigid registration under isometric deformations. In: Computer Graphics Forum, vol. 27, pp. 1449–1457. Wiley (2008)

Jiang, T., Qian, K., Liu, S., Wang, J., Yang, X., Zhang, J.: Consistent as-similar-as-possible non-isometric surface registration. Vis. Comput. 33(6–8), 891–901 (2017)

Lee, A., Moreton, H., Hoppe, H.: Displaced subdivision surfaces. In: Proceedings of the 27th Annual Conference on Computer Graphics and Interactive Techniques, pp. 85–94. ACM Press/Addison-Wesley Publishing Co. (2000)

Li, H., Sumner, R.W., Pauly, M.: Global correspondence optimization for non-rigid registration of depth scans. In: Computer Graphics Forum, vol. 27, pp. 1421–1430. Wiley (2008)

Li, K., Yang, J., Lai, Y., Guo, D.: Robust non-rigid registration with reweighted position and transformation sparsity. IEEE Trans. Vis. Comput. Graphics 25(6), 2255–2269 (2019)

Liao, M., Zhang, Q., Wang, H., Yang, R., Gong, M.: Modeling deformable objects from a single depth camera. In: IEEE 12th International Conference on Computer Vision, 2009, pp. 167–174. IEEE (2009)

Low, K.L.: Linear Least-Squares Optimization for Point-to-Plane ICP Surface Registration, vol. 4. University of North Carolina, Chapel Hill (2004)

Moenning, C., Dodgson, N.A.: Fast marching farthest point sampling. Technical report, University of Cambridge, Computer Laboratory (2003)

Müller, M., Heidelberger, B., Teschner, M., Gross, M.: Meshless deformations based on shape matching. ACM Trans. Gr. 24, 471–478 (2005)

Papazov, C., Burschka, D.: Deformable 3D shape registration based on local similarity transforms. In: Computer Graphics Forum, vol. 30, pp. 1493–1502. Wiley (2011). https://doi.org/10.1111/j.1467-8659.2011.02023.x

Rouhani, M., Boyer, E., Sappa, A.D.: Non-rigid registration meets surface reconstruction. In: 2014 2nd International Conference on 3D Vision (3DV), vol. 1, pp. 617–624. IEEE (2014)

Rudin, L.I., Osher, S., Fatemi, E.: Nonlinear total variation based noise removal algorithms. Phys. D Nonlinear Phenom. 60(1–4), 259–268 (1992)

Sorkine, O., Alexa, M.: As-rigid-as-possible surface modeling. In: Symposium on Geometry Processing, vol. 4, p. 30 (2007)

Sumner, R.W., Popović, J.: Deformation transfer for triangle meshes. ACM Trans. Gr. 23, 399–405 (2004)

Sumner, R.W., Schmid, J., Pauly, M.: Embedded deformation for shape manipulation. ACM Trans. Gr. 26, 80 (2007)

Surazhsky, V., Surazhsky, T., Kirsanov, D., Gortler, S.J., Hoppe, H.: Fast exact and approximate geodesics on meshes. ACM Trans. Gr. 24, 553–560 (2005)

Süßmuth, J., Winter, M., Greiner, G.: Reconstructing animated meshes from time-varying point clouds. In: Computer Graphics Forum, vol. 27, pp. 1469–1476. Wiley (2008)

Tam, G.K., Cheng, Z.Q., Lai, Y.K., Langbein, F.C., Liu, Y., Marshall, D., Martin, R.R., Sun, X.F., Rosin, P.L.: Registration of 3d point clouds and meshes: a survey from rigid to nonrigid. IEEE Trans. Vis. Comput. Gr. 19(7), 1199–1217 (2013)

Wand, M., Adams, B., Ovsjanikov, M., Berner, A., Bokeloh, M., Jenke, P., Guibas, L., Seidel, H.P., Schilling, A.: Efficient reconstruction of nonrigid shape and motion from real-time 3d scanner data. ACM Trans. Gr. 28(2), 15 (2009)

Werlberger, M., Trobin, W., Pock, T., Wedel, A., Cremers, D., Bischof, H.: Anisotropic huber-l1 optical flow. In: BMVC, vol. 1, p. 3 (2009)

Yang, J., Li, K., Li, K., Lai, Y.K.: Sparse non-rigid registration of 3D shapes. In: Computer Graphics Forum, vol. 34, pp. 89–99. Wiley (2015). https://doi.org/10.1111/cgf.12699

Yeh, I.C., Lin, C.H., Sorkine, O., Lee, T.Y.: Template-based 3d model fitting using dual-domain relaxation. IEEE Trans. Vis. Comput. Gr. 17(8), 1178–1190 (2011)

Yoshiyasu, Y., Ma, W.C., Yoshida, E., Kanehiro, F.: As-conformal-as-possible surface registration. In: Computer Graphics Forum, vol. 33, pp. 257–267. Wiley (2014). https://doi.org/10.1111/cgf.12451

Zach, C., Pock, T., Bischof, H.: A duality based approach for realtime TV-l 1 optical flow. In: Joint Pattern Recognition Symposium, pp. 214–223. Springer (2007)

Acknowledgements

We would like to thank Gabriel Peyre and Alec Jacobson for their help in rendering experimental results in MATLAB.

Funding

This study was funded by EU H2020 under the REA Grant Agreement (Grant Number 691215)

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflicts of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix A

Appendix A

The ADMM algorithm is employed to optimize unknown variables in (13) alternatively. The kth iteration can be summarized as follows:

The \({\mathbf {F}}\)-subproblem has the following closed solution:

where S is the soft thresholding operator acting on each element of the given matrix:

The \({\mathbf {G}}\)-subproblem can be solved as:

Following the work of [19], we solve the \({\mathbf {R}}_i\)-subproblem by using singular value decomposition of \({\mathbf {X}}_i\):

If \(\mathrm {det}({\mathbf {R}}_i) < 0\), we change the sign of the column of \({\mathbf {U}}_i\) corresponding to the smallest singular value.

Dividing the \(s_i\)-subproblem by \(s_i\) and setting its derivative to zero yields:

In order to solve the \({\mathbf {X}}\)-subproblem, we first zero its derivative and then write the equation in its equally stacked form:

where

\(\text {sqrt}(\cdot )\) is the square root function.

Similarly, \({\mathbf {T}}\) can be obtained by solving the following equation:

where

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Jiang, T., Yang, X., Zhang, J. et al. Huber-\(L_1\)-based non-isometric surface registration. Vis Comput 35, 935–948 (2019). https://doi.org/10.1007/s00371-019-01670-1

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00371-019-01670-1