Abstract

The power word problem for a group \(\varvec{G}\) asks whether an expression \(\varvec{u_1^{x_1} \cdots u_n^{x_n}}\), where the \(\varvec{u_i}\) are words over a finite set of generators of \(\varvec{G}\) and the \(\varvec{x_i}\) binary encoded integers, is equal to the identity of \(\varvec{G}\). It is a restriction of the compressed word problem, where the input word is represented by a straight-line program (i.e., an algebraic circuit over \(\varvec{G}\)). We start by showing some easy results concerning the power word problem. In particular, the power word problem for a group \(\varvec{G}\) is \(\varvec{\textsf{uNC}^{1}}\)-many-one reducible to the power word problem for a finite-index subgroup of \(\varvec{G}\). For our main result, we consider graph products of groups that do not have elements of order two. We show that the power word problem in a fixed such graph product is \(\varvec{\textsf{AC} ^0}\)-Turing-reducible to the word problem for the free group \(\varvec{F_2}\) and the power word problems of the base groups. Furthermore, we look into the uniform power word problem in a graph product, where the dependence graph and the base groups are part of the input. Given a class of finitely generated groups \(\varvec{\mathcal {C}}\) without order two elements, the uniform power word problem in a graph product can be solved in \(\varvec{\textsf{AC} ^0[\textsf{C}_=\textsf{L} ^{{{\,\textrm{UPowWP}\,}}(\mathcal {C})}]}\), where \(\varvec{{{\,\textrm{UPowWP}\,}}(\mathcal {C})}\) denotes the uniform power word problem for groups from the class \(\varvec{\mathcal {C}}\). As a consequence of our results, the uniform knapsack problem in right-angled Artin groups is \(\varvec{\textsf{NP}}\)-complete. The present paper is a combination of the two conference papers (Lohrey and Weiß 2019b, Stober and Weiß 2022a). 
In Stober and Weiß (2022a) our results on graph products were wrongly stated without the additional assumption that the base groups do not have elements of order two. In the present work we correct this mistake. While we strongly conjecture that the result as stated in Stober and Weiß (2022a) is true, our proof relies on this additional assumption.


1 Introduction

Algorithmic problems in group theory have a long tradition, going back to the work of Dehn from 1911 [13]. One of the fundamental group theoretic decision problems introduced by Dehn is the word problem for a finitely generated group G (with a fixed finite generating set \(\Sigma \)): does a given word \(w \in \Sigma ^*\) evaluate to the group identity? Novikov [55] and Boone [11] independently proved in the 1950’s the existence of finitely presented groups with undecidable word problem. On the positive side, in many important classes of groups the word problem is decidable, and in many cases also the computational complexity is quite low. Famous examples are finitely generated linear groups, where the word problem belongs to deterministic logarithmic space (\(\textsf{L} \) for short) [37] and hyperbolic groups where the word problem can be solved in linear time [30] as well as in \(\textsf{LOGCFL}\) [38].

In recent years, compressed versions of group theoretical decision problems, where input words are represented in a succinct form, have also attracted attention. One such succinct representation is given by so-called straight-line programs, which are context-free grammars that produce exactly one word. The size of such a grammar can be much smaller than the word it produces. For instance, the word \(a^n\) can be produced by a straight-line program of size \(\mathcal {O}(\log n)\). For the compressed word problem for the group G the input consists of a straight-line program that produces a word w over the generators of G and it is asked whether w evaluates to the identity element of G. This problem is a reformulation of the circuit evaluation problem for G. The compressed word problem naturally appears when one tries to solve the word problem in automorphism groups or semidirect products [39, Section 4.2]. For the following classes of groups, the compressed word problem is known to be solvable in polynomial time: finite groups (where the compressed word problem is either P-complete or in \(\textsf{uNC}^{2}\) [9]), finitely generated nilpotent groups [35] (where the complexity is even in \(\textsf{uNC}^{2}\)), hyperbolic groups [31] (in particular, free groups), and virtually special groups (i.e., finite extensions of subgroups of right-angled Artin groups) [39]. The latter class covers for instance Coxeter groups, one-relator groups with torsion, fully residually free groups and fundamental groups of hyperbolic 3-manifolds. For finitely generated linear groups there is a randomized polynomial time algorithm for the compressed word problem [39, 41]. Simple examples of groups where the compressed word problem is intractable are wreath products \(G \wr \mathbb {Z}\) with G finite non-solvable: for every such group the compressed word problem is \(\textsf{PSPACE}\)-complete [8], whereas the (ordinary) word problem for \(G \wr \mathbb {Z}\) is in \(\textsf{uNC}^{1} \) [61].

In this paper, we study a natural restriction of the compressed word problem called the power word problem. An input for the power word problem for the group G is a tuple \((u_1, x_1, u_2, x_2, \ldots , u_n, x_n)\) where every \(u_i\) is a word over the group generators and every \(x_i\) is a binary encoded integer (such a tuple is called a power word); the question is whether \(u_1^{x_1} u_2^{x_2}\cdots u_n^{x_n}\) evaluates to the group identity of G. This problem naturally arises in the context of the so-called knapsack problem; we will explain more about this later.

From a power word \((u_1, x_1, u_2, x_2, \ldots , u_n, x_n)\) one can easily (e. g., by an \(\textsf{uAC}^{0} \)-reduction) compute a straight-line program for the word \(u_1^{x_1} u_2^{x_2}\cdots u_n^{x_n}\). In this sense, the power word problem is at most as difficult as the compressed word problem. On the other hand, both power words and straight-line programs achieve exponential compression in the best case; so the additional difficulty of the compressed word problem does not come from a higher compression rate but rather because straight-line programs can generate more “complex” words.
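The conversion from power words to straight-line programs can be illustrated by the following Python sketch. It is only meant to show why the resulting program has size \(\mathcal {O}(\sum _i (|u_i| + \log x_i))\); it is a sequential procedure and not the \(\textsf{uAC}^{0} \)-reduction itself. The function names, the encoding of variables as tuples, and the restriction to non-negative exponents (a negative exponent would require replacing \(u_i\) by its formal inverse) are assumptions made for this illustration.

```python
def power_word_to_slp(power_word):
    """Build a straight-line program for u_1^{x_1} ... u_n^{x_n}.

    power_word: list of pairs (u, x), u a string of letters, x >= 0 an integer.
    Returns (rules, start). Variables are tuples, terminals are single letters;
    rules maps each variable to the tuple of symbols on its right-hand side.
    """
    rules = {}
    blocks = []
    for i, (u, x) in enumerate(power_word):
        if x < 0:
            raise ValueError("this sketch only handles non-negative exponents")
        square = ("U", i)            # variable producing u^(2^0) = u
        rules[square] = tuple(u)
        factors = []                 # variables u^(2^j) for the bits j set in x
        j = 0
        while x > 0:
            if x & 1:
                factors.append(square)
            x >>= 1
            if x > 0:                # next repeated square u^(2^(j+1))
                j += 1
                nxt = ("S", i, j)
                rules[nxt] = (square, square)
                square = nxt
        block = ("P", i)             # variable producing u^x
        rules[block] = tuple(factors)
        blocks.append(block)
    start = ("START",)
    rules[start] = tuple(blocks)
    return rules, start


def slp_length(rules, symbol, cache=None):
    """Length of the word produced by symbol, computed without expanding it."""
    if cache is None:
        cache = {}
    if symbol not in rules:          # terminal letter
        return 1
    if symbol not in cache:
        cache[symbol] = sum(slp_length(rules, s, cache) for s in rules[symbol])
    return cache[symbol]


def slp_expand(rules, symbol):
    """Fully expand symbol; only sensible for small exponents."""
    if symbol not in rules:
        return symbol
    return "".join(slp_expand(rules, s) for s in rules[symbol])
```

For the power word \((ab, 5, c, 3)\) the program expands to \(ababababab\,ccc\), whereas for \((ab, 10^{30})\) it has roughly one hundred rules although the produced word has length \(2 \cdot 10^{30}\).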

Our main results for the power word problem are the following; in each case we compare our results with the corresponding results for the compressed word problem:Footnote 1

-

The power word problem for every finitely generated nilpotent group is in \(\textsf{uTC}^{0} \) and hence has the same complexity as the word problem (or the problem of multiplying binary encoded integers). The proof is a straightforward adaption of a proof from [52]. There, the special case, where all words \(u_i\) in the input power word are single generators, was shown to be in \(\textsf{uTC}^{0} \). The compressed word problem for every finitely generated nilpotent group belongs to the class \(\textsf{DET} \subseteq \textsf{uNC}^{2} \) and is hard for the counting class \(\textsf{C}_{=}\textsf{L}\) in case of a torsion-free nilpotent group [35].

-

The power word problem for the Grigorchuk group is \(\textsf{uAC} ^0\)-many-one-reducible to its word problem. Since the word problem for the Grigorchuk group is in \(\textsf{L} \) [8, 23], also the power word problem is in \(\textsf{L} \). Moreover, in [8], it is shown that the compressed word problem for the Grigorchuk group is \(\textsf{PSPACE} \)-complete. Hence, the Grigorchuk group is an example of a group for which the compressed word problem is provably more difficult than the power word problem. Note that, while the word problem of the Grigorchuk group can be solved in linear time [50], we do not know whether this also applies to the power word problem.

-

The power word problem for a finitely generated group G is \(\textsf{uNC}^{1}\)-many-one-reducible to the power word problem for any finite index subgroup of G. An analogous result holds for the compressed word problem as well [35].

-

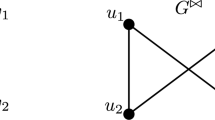

If G is a graph product of finitely generated groups \(G_1, \ldots , G_n\) (the so-called base groups) not containing any elements of order two, then the power word problem in G can be decided in \(\textsf{uAC} ^0\) with oracle gates for (i) the word problem for the free group \(F_2\) and (ii) the power word problems for the base groups \(G_i\). In order to define a graph product of groups \(G_1, \ldots , G_n\), one needs a graph with vertices \(1, \ldots , n\). The corresponding graph product is obtained as the quotient of the free product of \(G_1, \ldots , G_n\) modulo the commutation relations that allow elements of \(G_i\) to commute with elements of \(G_j\) iff i and j are adjacent in the graph. Graph products were introduced by Green in 1990 [25]. The compressed word problem for a graph product is polynomial time Turing-reducible to the compressed word problems for the base groups [28].

-

A right-angled Artin group (RAAG) can be defined as a graph product of copies of \(\mathbb {Z}\). As a corollary of our transfer theorem for graph products, it follows that the power word problem for a RAAG can be decided in \(\textsf{uAC} ^0\) with oracle gates for the word problem for the free group \(F_2\). The same upper complexity bound was shown before by Kausch [33] for the ordinary word problem for a RAAG and in [43] for the power word problem for a finitely generated free group. As a consequence of our new result, the power word problem for a RAAG is in \(\textsf{L}\) (for the ordinary word problem this follows from the well-known fact that RAAGs are linear groups together with the above mentioned result of Lipton and Zalcstein [37]). The compressed word problem for every RAAG is in P (polynomial time) and P-complete if the RAAG is non-abelian [39].

In all the above mentioned results, the group is fixed, i.e., not part of the input. In general, it makes no sense to input an arbitrary finitely generated group, since there are uncountably many such groups. On the other hand, if we restrict to finitely generated groups with a finitary description, one may also consider a uniform version of the word problem/power word problem/compressed word problem, where the group is part of the input. We will consider the uniform power word problem for graph products for a fixed countable class \(\mathcal {C}\) of finitely generated groups. We assume that the groups in \(\mathcal {C}\) have a finitary description.Footnote 2 Then a graph product is given by a list \(G_1, \ldots , G_n\) of base groups from \(\mathcal {C}\) together with an undirected graph on the indices \(1, \ldots , n\). For this setting Kausch [33] proved that the uniform word problem for graph products belongs to \(\textsf{C}_=\textsf{L} ^{{{\,\textrm{UWP}\,}}(\mathcal {C})}\), i.e., the counting logspace class \(\textsf{C}_=\textsf{L} \) with an oracle for the uniform word problem for the class \(\mathcal {C}\) (we write \({{\,\textrm{UWP}\,}}(\mathcal {C})\) for the latter). We extend this result to the power word problem under the additional assumption that no group in \(\mathcal {C}\) contains an element of order two. More precisely, we show that the uniform power word problem for graph products over that class \(\mathcal {C}\) of base groups belongs to the closure of \(\textsf{C}_=\textsf{L} ^{{{\,\textrm{UPowWP}\,}}(\mathcal {C})}\) under \(\textsf{uAC} ^0\)-Turing-reductions, where \({{\,\textrm{UPowWP}\,}}(\mathcal {C})\) denotes the uniform power word problem for the class \(\mathcal {C}\). Analogous results for the uniform compressed word problem are not known. Indeed, whether the uniform compressed word problem for RAAGs is solvable in polynomial time is posed as an open problem in [40].

Our result for the uniform power word problem for graph products implies that the uniform power word problem for RAAGs can be solved in polynomial time. We can apply this result to the knapsack problem for RAAGs. The knapsack problem is a classical optimization problem that originally has been formulated for the integers. Myasnikov et al. introduced the decision variant of the knapsack problem for an arbitrary finitely generated group G: Given \(g_1, \dots , g_n, g \in G\), decide whether there are \(x_1, \dots , x_n \in \mathbb {N}\) such that \(g_1^{x_1} \cdots g_n^{x_n} = g\) holds in the group G [51], see also [19, 22, 34, 44] for further work. For many groups G one can show that, if such \(x_1, \ldots , x_n \in \mathbb {N}\) exist, then there exist such numbers of size \(2^{\text {poly}(N)}\), where N is the total length of all words representing the group elements \(g_1, \dots , g_n, g\). This holds for instance for RAAGs. In this case, one nondeterministically guesses the binary encodings of numbers \(x_1, \ldots , x_n\) and then verifies, using an algorithm for the power word problem, whether \(g_1^{x_1} \cdots g_n^{x_n} g^{-1} = 1\) holds. In this way, it was shown in [44] that for every RAAG the knapsack problem belongs to \(\textsf{NP}\) (using the fact that the compressed word problem and hence the power word problem for a fixed RAAG belongs to P). Moreover, if the commutation graph of the RAAG G contains an induced subgraph \(C_4\) (cycle on 4 nodes) or \(P_4\) (path on 4 nodes), then the knapsack problem for G is \(\textsf{NP} \)-complete [44]. However, membership of the uniform version of the knapsack problem for RAAGs in \(\textsf{NP} \) remained open. Our polynomial time algorithm for the uniform power word problem for RAAGs yields the missing piece: the uniform knapsack problem for RAAGs is indeed \(\textsf{NP} \)-complete.

1.1 Related Work

Implicitly, (variants of) the power word problem have been studied long before. In the commutative setting, Ge [24] has shown that one can verify in polynomial time an identity \(\alpha _1^{x_1} \alpha _2^{x_2}\cdots \alpha _n^{x_n} = 1\), where the \(\alpha _i\) are elements of an algebraic number field and the \(x_i\) are binary encoded integers.

In [27], Gurevich and Schupp present a polynomial time algorithm for a compressed form of the subgroup membership problem for a free group F where group elements are represented in the form \(a_1^{x_1} a_2^{x_2}\cdots a_n^{x_n}\) with binary encoded integers \(x_i\). The \(a_i\) must be, however, standard generators of the free group F. This is the same input representation as in [52] (for nilpotent groups) and is more restrictive than our setting, where we allow powers of the form \(w^x\) for w an arbitrary word over the group generators (on the other hand, Gurevich and Schupp consider the subgroup membership problem, which is more general than the word problem).

Recently, the power word problem has been investigated in [19]. In [19] it is shown that the power word problem for a wreath product of the form \(G \wr \mathbb {Z}\) with G finitely generated nilpotent belongs to \(\textsf{uTC} ^0\). Moreover, the power word problem for iterated wreath products of the form \(\mathbb {Z}^r \wr (\mathbb {Z}^r \wr (\mathbb {Z}^r \cdots ))\) belongs to \(\textsf{uTC} ^0\). By a famous embedding theorem of Magnus [47], it follows that the power word problem for free solvable groups is in \(\textsf{uTC} ^0\). Finally, in [45] Zetzsche and the first author of this work showed that the power word problem for a solvable Baumslag-Solitar group \(\textsf{BS}(1,q)\) belongs to \(\textsf{uTC} ^0\).

The present paper is a combination of the two conference papers [43] (by the first and third author) and [58] (by the second and third author). Here we also correct a mistake that occurred in [58]: there, our results on graph products were stated without the additional assumption that the base groups do not have elements of order two. While we strongly conjecture this result to be true, our proof only works with this additional assumption. The key lies in the proof of Lemma 51 (which corresponds to Lemma 15 in [58, 59]) – indeed, the only place where we need this additional assumption. We give more technical details in Remarks 44 and 52.

2 Preliminaries

For integers \(a \le b\) we write [a, b] for the interval \(\{x \in \mathbb {Z}\mid a \le x \le b\}\). For an integer \(x \in \mathbb {Z}\) let us define \(\llbracket x \rrbracket = [0,x]\) if \(x \ge 0\) and \(\llbracket x \rrbracket = [x,0]\) if \(x < 0\).

2.1 Words

An alphabet is a (finite or infinite) non-empty set \(\Sigma \); an element \(a \in \Sigma \) is called a letter. The free monoid over \(\Sigma \) is denoted by \(\Sigma ^*\); its elements are called words. The multiplication of the free monoid is concatenation of words. The identity element is the empty word 1.

Consider a word \(w = a_1 \cdots a_n\) with \(a_i \in \Sigma \). For \(A \subseteq \Sigma \) we write \(\left|w\right|_{A}\) for the number of \(i \in [1,n]\) with \(a_i \in A\) and we set \(\vert w\vert = \vert w\vert _\Sigma \) (the length of w) and \(|w|_a = |w|_{\{a\}}\) for \(a \in \Sigma \). A word w has period k if \(a_i= a_{i+k}\) for all i with \(i,i+k \in [1,n]\).
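The two notions just introduced translate directly into code. The following Python sketch uses hypothetical helper names and 0-based string indices instead of the 1-based positions used above.

```python
def count_letters(w, A):
    """|w|_A: the number of positions of w carrying a letter from the set A."""
    return sum(1 for a in w if a in A)


def has_period(w, k):
    """True iff w[i] == w[i + k] whenever both positions exist (k >= 1)."""
    return all(w[i] == w[i + k] for i in range(len(w) - k))
```

For example, the word abcabca has period 3 but not period 2.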

2.2 Monoids

Let M be an arbitrary monoid. Later, we will consider finitely generated monoids M, where elements of M are described by words over an alphabet of monoid generators. To distinguish equality as words from equality as elements of M, we also write \(x=_My\) (or \(x=y\) in M) to indicate equality in M (as opposed to equality as words). Let \(x =_M uvw\) for some \(x, u, v, w \in M\). We say u is a prefix of x, v is a factor of x, and w is a suffix of x. We call u a proper prefix if \(u \ne x\). Similarly, v is a proper factor if \(v \ne x\) and w is a proper suffix if \(w \ne x\).

An element \(u\in M\) is primitive if \(u\ne _M v^k\) for any \(v \in M\) and \(k >1\). Two elements \(u, v \in M\) are transposed if there are \(x, y \in M\) such that \(u =_M xy\) and \(v =_M yx\). We also say that v is a transposition of u. We call u and v conjugate if there is an element \(t \in M\) such that \(ut = tv\) (note that this is sometimes called left-conjugate in the literature). For a free monoid \(\Sigma ^*\), two words u, v are transposed if and only if they are conjugate. In this case, we also say that the word u is a cyclic permutation of the word v.
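For the free monoid \(\Sigma ^*\) these notions can be tested with the well-known fact that a non-empty word w is primitive if and only if w occurs in ww only as a prefix and as a suffix. The Python sketch below relies on this fact; the function names are illustrative assumptions.

```python
def is_primitive(w):
    """True iff the word w is not of the form v^k with k > 1."""
    if not w:
        return False                 # the empty word equals 1^k for every k
    # The first occurrence of w in ww after position 0 is at |w| iff w is primitive.
    return (w + w).find(w, 1) == len(w)


def primitive_root(w):
    """Return (p, k) with p primitive and w == p * k, for a non-empty word w."""
    shift = (w + w).find(w, 1)       # smallest positive rotation fixing w
    return w[:shift], len(w) // shift


def are_transposed(u, v):
    """True iff u = xy and v = yx for some words x, y (cyclic permutation)."""
    return len(u) == len(v) and v in u + u
```

For instance, abab has primitive root ab with exponent 2, and cab is a cyclic permutation of abc whereas acb is not.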

2.3 Rewriting Systems over Monoids

A rewriting system over the monoid M is a subset \(S \subseteq M \times M\). We write \(\ell \rightarrow r\) if \((\ell , r) \in S\). The corresponding rewriting relation \(\underset{S}{\Longrightarrow }\) over M is defined by: \(u \underset{S}{\Longrightarrow }\ v\) if and only if there exist \(\ell \rightarrow r\in S\) and \(s,t \in M\) such that \(u =_M s\ell t\) and \(v =_M sr t\). We also say that u can be rewritten to v in one step. Let \(\overset{+}{\underset{S}{\Longrightarrow }}\) be the transitive closure of \(\underset{S}{\Longrightarrow }\) and \(\overset{*}{\underset{S}{\Longrightarrow }}\) the reflexive and transitive closure of \(\underset{S}{\Longrightarrow }\). We write \(u \overset{\le k}{\underset{S}{\Longrightarrow }} v\) (resp. \(u \overset{k}{\underset{S}{\Longrightarrow }} v\)) to denote that u can be rewritten to v using at most (resp. exactly) k steps. We say that \(w \in M\) is irreducible with respect to S if there is no \(v \in M\) with \(w \underset{S}{\Longrightarrow }\ v\). The set of irreducible monoid elements is denoted as \( {{\,\textrm{IRR}\,}}(S) = \left\{ w \in M \mid w \text { is irreducible} \right\} \). A rewriting system S is called confluent if, whenever \(x\overset{*}{\underset{S}{\Longrightarrow }}y\) and \(x\overset{*}{\underset{S}{\Longrightarrow }}z\), then there is some w with \(y\overset{*}{\underset{S}{\Longrightarrow }}w\) and \(z\overset{*}{\underset{S}{\Longrightarrow }}w\). Note that if S is confluent, then for each v there is at most one \(w \in {{\,\textrm{IRR}\,}}(S)\) with \(v\overset{*}{\underset{S}{\Longrightarrow }}w\). A rewriting system S is called terminating if there is no infinite chain \(x_0 \underset{S}{\Longrightarrow } x_1 \underset{S}{\Longrightarrow } x_2 \underset{S}{\Longrightarrow } \cdots \).

We write M/S for the quotient monoid \(M/\equiv _S\), where \(\equiv _S\) is the smallest congruence relation on M that contains S.

The above notion of a rewriting system over a monoid M is a generalization of the notion of a string rewriting system, which is a rewriting system over a free monoid \(\Sigma ^*\). For further details on rewriting systems we refer to [10, 32].
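As a concrete instance of a string rewriting system, free reduction in a free group can be sketched in Python as follows. The encoding (lower-case letters for generators, the corresponding upper-case letters for their inverses), the function names and the step bound are assumptions of this illustration. The rule set used in the example is terminating and confluent, so the computed irreducible word does not depend on the order in which rules are applied.

```python
def rewrite_step(w, rules):
    """Return some v with w ==>_S v, or None if w is irreducible.

    rules: list of pairs (lhs, rhs) of strings with non-empty lhs.
    """
    for lhs, rhs in rules:
        pos = w.find(lhs)
        if pos >= 0:
            return w[:pos] + rhs + w[pos + len(lhs):]
    return None


def normal_form(w, rules, max_steps=10_000):
    """Rewrite w until an irreducible word is reached (at most max_steps steps)."""
    for _ in range(max_steps):
        v = rewrite_step(w, rules)
        if v is None:
            return w
        w = v
    raise RuntimeError("no irreducible word reached; the system may not terminate")


# Free reduction over the generators a, b (A and B denote their inverses).
FREE_REDUCTION = [("aA", ""), ("Aa", ""), ("bB", ""), ("Bb", "")]
```

With these rules, the word abBAab rewrites to ab, and the word abAB is already irreducible.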

2.4 Partially Commutative Monoids

In this subsection, we introduce a few basic notations concerning partially commutative monoids. More information can be found in [14].

Let \(\Sigma \) be an alphabet of symbols. We do not require \(\Sigma \) to be finite. Let \(I \subseteq \Sigma \times \Sigma \) be a symmetric and irreflexive relation. The partially commutative monoid defined by \((\Sigma , I)\) is the quotient monoid \(M(\Sigma , I) = \Sigma ^* / \{(ab, ba) \mid (a,b) \in I\}\).

Thus, the relation I describes which generators commute; it is called the commutation relation or independence relation. The relation \(D = (\Sigma \times \Sigma ) \setminus I\) is called dependence relation and \((\Sigma , D)\) is called a dependence graph. The monoid \(M(\Sigma , I)\) is also called a trace monoid and its elements are called traces or partially commutative words. Note that for words \(u,v \in \Sigma ^*\) with \(u =_{M(\Sigma , I)} v\) we have \(\left| u\right| _a=\left| v\right| _a\) for every \(a \in \Sigma \). Hence, the length \(\left| w\right| \) and \(\left| w\right| _a\) for a trace \(w \in M(\Sigma , I)\) is well-defined and we use this notation henceforth.

A letter a is called a minimal letter of \(w \in M(\Sigma ,I)\) if \(w =_{M(\Sigma , I)} au\) for some \(u\in M(\Sigma ,I)\). Likewise a letter a is called a maximal letter of w if \(w =_{M(\Sigma , I)} ua\) for some \(u\in M(\Sigma ,I)\). When we say that a is minimal (maximal) in \(w \in \Sigma ^*\), we mean that a is minimal (maximal) in the trace represented by w. Note that if both a and \(b \ne a\) are minimal (maximal) letters of w, then \((a,b) \in I\). A trace rewriting system is simply a rewriting system over a trace monoid \(M(\Sigma , I)\) in the sense of Section 2.3. If \(\Delta \subseteq \Sigma \) is a subset, we write \(M(\Delta , I)\) for the submonoid of \(M(\Sigma , I)\) generated by \(\Delta \).

Be aware of the ambiguity between the dependence graph \((\Sigma , D)\) describing which generators commute, and the dependence graph of a trace w as described in the following.

Elements of a partially commutative monoid can be represented by node-labelled directed acyclic graphs: Let \(w = a_1 \cdots a_n\) with \(a_i \in \Sigma \). We define the dependence graph of w as follows: The node set is [1, n], node i is labelled with \(a_i\) and there is an edge \(i \rightarrow j\) if and only if \(i < j\) and \((a_i, a_j) \in D\). Then, for two words \(u,v \in \Sigma ^*\) we have \(u =_{M(\Sigma ,I)} v\) if and only if the dependence graphs of u and v are isomorphic (as node-labelled directed graphs). The dependence graph of a trace \(v \in M(\Sigma , I)\) is the dependence graph of (any) word representing v. The trace v is said to be connected if its dependence graph is weakly connected (i.e., connected if we forget the direction of edges), or, equivalently, if the induced subgraph of \((\Sigma ,D)\) consisting only of the letters occurring in v is connected. The connected components of the trace v are the weakly connected components of the dependence graph of v.
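The dependence graph of a word, its minimal letters and the connectedness test can be sketched in Python as follows. The representation of I as a set of pairs, the helper names and the convention that the empty trace counts as connected are assumptions of this sketch; positions are 0-based.

```python
def make_dependence(independent):
    """Turn a set I of independent pairs into the predicate for D = complement of I."""
    return lambda a, b: (a, b) not in independent and (b, a) not in independent


def dependence_graph(w, dependent):
    """Edges i -> j (i < j) between positions of w carrying dependent letters."""
    n = len(w)
    return {(i, j) for i in range(n) for j in range(i + 1, n)
            if dependent(w[i], w[j])}


def minimal_letters(w, dependent):
    """Letters at positions without incoming edge in the dependence graph of w."""
    return {w[j] for j in range(len(w))
            if not any(dependent(w[i], w[j]) for i in range(j))}


def is_connected(w, dependent):
    """True iff the dependence graph of w is weakly connected."""
    n = len(w)
    if n == 0:
        return True                  # convention of this sketch
    seen, stack = {0}, [0]
    while stack:
        i = stack.pop()
        for j in range(n):
            if j not in seen and dependent(w[i], w[j]):
                seen.add(j)
                stack.append(j)
    return len(seen) == n
```

For \(\Sigma = \{a,b,c\}\) with a and c independent, the word acb has the edges \(0 \rightarrow 2\) and \(1 \rightarrow 2\), its minimal letters are a and c, and it represents a connected trace, whereas ac does not.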

2.4.1 Levi’s Lemma

As a consequence of the representation of traces by dependence graphs, one obtains Levi’s lemma for traces (see e.g. [14, p. 74]), which is one of the fundamental facts in trace theory. The formal statement is as follows.

Lemma 1

(Levi’s lemma) Let \(M = M(\Sigma ,I)\) be a trace monoid and \(u_1, \ldots , u_m, v_1, \ldots , v_n \in M\). Then \(u_1 u_2 \cdots u_m =_M v_1 v_2 \cdots v_n\)

if and only if there exist \(w_{i,j} \in M(\Sigma ,I)\) (for \(i \in [1,m]\), \(j \in [1,n]\)) such that

-

\(u_i =_M w_{i,1}w_{i,2}\cdots w_{i,n}\) for every \(i \in [1,m]\),

-

\(v_j =_M w_{1,j}w_{2,j}\cdots w_{m,j}\) for every \(j \in [1,n]\), and

-

\((w_{i,j}, w_{k,\ell }) \in I\) if \(1 \le i < k \le m\) and \(n \ge j > \ell \ge 1\).

The situation in the lemma will be visualized by a diagram of the following kind. The i–th column corresponds to \(u_i\), the j–th row (read from bottom to top) corresponds to \(v_j\), and the intersection of the i–th column and the j–th row represents \(w_{i,j}\). Furthermore, \(w_{i,j}\) and \(w_{k,\ell }\) are independent if one of them is left-above the other one. So, for instance, all \(w_{i,j}\) in the red part are independent from all \(w_{k,\ell }\) in the blue part.

Usually, Levi’s lemma is formulated for the case that the alphabet \(\Sigma \) is finite. But the case that \(\Sigma \) is finite already implies the general case with \(\Sigma \) possibly infinite: simply replace the trace monoid \(M(\Sigma ,I)\) by \(M(\Sigma ',I')\), where \(\Sigma '\) contains all symbols occurring in one of the traces \(u_i, v_j\) and \(I'\) is the restriction of I to \(\Sigma '\).

A consequence of Levi’s Lemma is that trace monoids are cancellative, i.e., \(usv=utv\) implies \(s=t\) for all traces \(s,t,u,v\in M\).

2.4.2 Projections to Free Monoids

It is a well-known result [17, 18, 62] that every trace monoid can be embedded into a direct product of free monoids. In this section we recall the corresponding results.

Consider a trace monoid \(M = M(\Sigma , I)\) with the property that there exist finitely many sets \(A_i \subseteq \Sigma \) (\(i \in [1,k]\) for some \(k\in \mathbb {N}\)) fulfilling the following property: \((a,b) \in D\) if and only if there is an \(i \in [1,k]\) with \(a, b \in A_i\).

Since D is reflexive this implies that for every \(a\in \Sigma \) there is an i such that \(a\in A_i\). One obtains this property if one takes for the \(A_i\) the maximal cliques in the dependence graph \((\Sigma ,D)\) [18]. If \(\Sigma \) is finite, one can take for the \(A_i\) also all sets \(\{a, b\}\) with \((a, b) \in D\) together with all singletons \(\{a\}\) with a an isolated vertex in \((\Sigma ,D)\) [17].

Let \(\pi _i:\Sigma ^* \rightarrow A_i^*\) be the projection to the free monoid \(A_i^*\) defined by \(\pi _i(a) = a\) for \(a \in A_i\) and \(\pi _i(a) = 1\) otherwise. We define a projection \(\Pi :\Sigma ^* \rightarrow A_1^*\times \cdots \times A_k^*\) to a direct product of free monoids by \(\Pi (w) = (\pi _1(w), \dots , \pi _k(w))\). It is straightforward to see that, if \(u =_M v\), then also \(\Pi (u) = \Pi (v)\). Hence, we can consider \(\Pi \) also as a monoid morphism \(\Pi :M \rightarrow A_1^*\times \cdots \times A_k^*\) (which from now on we denote by the same letter \(\Pi \)). We will make use of the following two lemmata presented in [18].

Lemma 2

([62, Lemma 1], [18, Proposition 1.2]) Let \(M = M(\Sigma , I)\). For \(u, v \in \Sigma ^*\) we have \(u =_M v\) if and only if \(\Pi (u) = \Pi (v)\).

Thus, \(\Pi \) is an injective monoid morphism \(\Pi :M \rightarrow A_1^*\times \cdots \times A_k^*\).
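Lemma 2 yields a simple equality test for traces over a finite alphabet. The Python sketch below takes for the \(A_i\) all sets \(\{a,b\}\) with \((a,b) \in D\), including the case \(a = b\); this choice, the representation of I as a set of pairs and the function names are assumptions of the illustration.

```python
from itertools import combinations_with_replacement


def projections(w, alphabet, independent):
    """Pi(w): the projections of w onto all sets {a, b} with (a, b) in D."""
    result = {}
    for a, b in combinations_with_replacement(sorted(alphabet), 2):
        if (a, b) not in independent and (b, a) not in independent:
            result[(a, b)] = "".join(c for c in w if c in (a, b))
    return result


def equal_traces(u, v, alphabet, independent):
    """True iff the words u and v represent the same trace (by Lemma 2)."""
    return projections(u, alphabet, independent) == projections(v, alphabet, independent)
```

For \(\Sigma = \{a,b,c\}\) with a and c independent, the words acb and cab represent the same trace, while abc and acb differ in the projection onto \(\{b,c\}\).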

Lemma 3

([18, Proposition 1.7]) Let \(M = M(\Sigma , I)\), \(w \in \Sigma ^*\) and \(t > 1\). Then, there is \(u \in \Sigma ^*\) with \(w =_M u^t\) if and only if there is a tuple \(\textbf{v} \in \prod _{i=1}^k A_i^*\) with \(\Pi (w) = \textbf{v}^t\).

In [18] these lemmata are only proved for the case that \(\Sigma \) is finite, but as for Levi’s Lemma one obtains the general case by restricting \((\Sigma ,I)\) to those letters that appear in the traces involved.

Projections onto free monoids were used in [18] in order to show the following lemmata.

Lemma 4

([18, Corollary 3.13]) Let \(M = M(\Sigma , I)\) and \(u,v \in M\). Then there is some \(x \in M\) with \(xu =_M vx\) if and only if u and v are related by a sequence of transpositions, i. e., there are \(y_1, \dots , y_k\) such that \(u = y_1\), \(v = y_k\) and \(y_{i+1}\) is a transposition of \(y_i\).

Lemma 4 gives us a tool for checking conjugacy in \( M(\Sigma , I)\); indeed, from now on, we will most of the time use that conjugate elements are related by a sequence of transpositions.

Lemma 5

([18, Proposition 3.5]) Let \(M = M(\Sigma , I)\) and \(u,v,p,q \in M\) such that \(u = p^k\) and \(v = q^\ell \) with p and q primitive and \(k,\ell \ge 1\). Then u and v are conjugate if and only if \(k = \ell \) and p and q are conjugate.

Note that Lemma 5 implies that if u is conjugate to a primitive trace, then u must be primitive as well.

2.5 Trace Monoids Defined by Finite Graphs

As a first step towards graph products let us introduce the following notation for trace monoids: Let \(\mathcal {L}\) be a finite set of size \(\sigma = |\mathcal {L}|\) and \(I \subseteq \mathcal {L}\times \mathcal {L}\) be irreflexive and symmetric (i. e., \((\mathcal {L},I)\) is a finite undirected simple graph). Moreover, assume that for each \(\zeta \in \mathcal {L}\) we are given a (possibly infinite) alphabet \(\Gamma _\zeta \) such that \(\Gamma _\zeta \cap \Gamma _\xi = \emptyset \) for \(\zeta \ne \xi \). By setting \(\Gamma = \bigcup _{\zeta \in \mathcal {L}}\Gamma _\zeta \) and \(I_\Gamma = \{(a,b) \mid (\zeta ,\xi ) \in I, a\in \Gamma _\zeta , b\in \Gamma _\xi \}\), we obtain a trace monoid \(M = M(\Gamma , I_\Gamma )\). Henceforth, we simply write I for \(I_\Gamma \). For \(a \in \Gamma \) we define \({{\,\textrm{alph}\,}}(a) = \zeta \) if \(a \in \Gamma _\zeta \). For \(u= a_1\cdots a_k \in \Gamma ^*\) we define \({{\,\textrm{alph}\,}}(u) = \{{{\,\textrm{alph}\,}}(a_1), \dots , {{\,\textrm{alph}\,}}(a_k)\}\).

The following lemma characterizes the shape of a prefix, suffix or factor of a power in the above trace monoid M.

Lemma 6

Let \(p \in M\) be connected and \(k \in \mathbb {N}\). Then we have:

-

1.

If \(p^k =_M uw\) for traces \(u,w \in M(\Gamma , I)\), then there exist \(s < \sigma \), \(\ell ,m \in \mathbb {N}\) and factorizations \(p = u_i w_i\) for \(i \in [1,s]\) such that

-

\(k = \ell +s+m\),

-

\(u_i \ne 1 \ne w_i\) for all \(i \in [1,s]\) and \((w_i,u_j) \in I\) for \(i < j\),

-

\(u =_M p^{\ell }u_1\cdots u_s\) and \(w =_M w_1\cdots w_s p^m\).

-

-

2.

Given a factor v of \(p^k\) at least one of the following is true.

-

\(v = u_1\cdots u_a v_1 \cdots v_b w_1 \cdots w_c\) where \(a, b, c \in \mathbb {N}\), \(a + b + c \le 2\sigma - 2\), \(u_i\) is a proper suffix of p for \(i \in [1,a]\), \(v_i\) is a proper factor of p for \(i \in [1,b]\) and \(w_i\) is a proper prefix of p for \(i \in [1,c]\).

-

\(v = u_1\cdots u_a p^b w_1 \cdots w_c\) where \(a, b, c \in \mathbb {N}\), \(a, c < \sigma \), \(u_i\) is a proper suffix of p for \(i \in [1,a]\) and \(w_i\) is a proper prefix of p for \(i \in [1,c]\).

-

Figure 1 illustrates case 1 of Lemma 6.

Fig. 1: A factorization of \(p^k\) as in case 1 of Lemma 6

Proof

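Let us start with the first statement. We apply Levi’s Lemma to the identity \(p^k =_M uw\) and obtain a diagram with k columns (one for each factor p) and two rows (for u and w), that is, traces \(u_i, w_i \in M\) for \(i \in [1,k]\) such that \(p =_M u_i w_i\), \(u =_M u_1 \cdots u_k\), \(w =_M w_1 \cdots w_k\), and \((w_i, u_j) \in I\) for \(i < j\).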

We have \((w_i, u_{i+1}) \in I\) and hence \({{\,\textrm{alph}\,}}(w_i) \cap {{\,\textrm{alph}\,}}(u_{i+1}) = \emptyset \) for all \(i \in [1,k-1]\). Since \({{\,\textrm{alph}\,}}(p) = {{\,\textrm{alph}\,}}(u_j) \cup {{\,\textrm{alph}\,}}(w_j)\) for all \(j \in [1,k]\), this implies \({{\,\textrm{alph}\,}}(u_{i+1}) \subseteq {{\,\textrm{alph}\,}}(u_i)\). Now assume that \(i \in [1,k-1]\) is such that \(u_i \ne 1 \ne w_i\). We have \((u_{i+1}, w_i) \in I\). Since we cannot have \((u_i, w_i) \in I\) (p is connected), we cannot have \({{\,\textrm{alph}\,}}(u_i) \subseteq {{\,\textrm{alph}\,}}(u_{i+1})\). Therefore, \({{\,\textrm{alph}\,}}(u_{i+1}) \subsetneq {{\,\textrm{alph}\,}}(u_{i})\) whenever \(u_i \ne 1 \ne w_i\). It follows that there are \(\ell ,m \ge 0\) and \(s < \sigma \) such that \(k = \ell +s+m\) and

-

\(u_i = p\), \(w_i = 1\) for \(i \in [1,\ell ]\),

-

\(u_i \ne 1 \ne w_i\), \(u_i w_i = p\) for \(i \in [\ell +1,\ell +s]\), and

-

\(u_i = 1\), \(w_i = p\) for \(i \in [\ell +s+1,k]\).

By renaming \(u_{\ell +i}\) and \(w_{\ell +i}\) into \(u_i\) and \(w_i\), respectively, for \(i \in [1,s]\) we obtain factorizations \(u =_M p^\ell u_1 \cdots u_s\) and \(w =_M w_1 \cdots w_s p^m\) for some \(s < \sigma \) and traces \(u_i, w_i \in M\setminus \{1\}\) with \(p =_M u_i w_i\). This yields statement 1.

To derive statement 2, consider the factorization \(p^k =_M u (vw)\). Applying the final conclusion of the previous paragraph, we obtain factorizations \(u =_M p^x u_1 \cdots u_s\) and \(vw =_M x_1 \cdots x_s p^\ell \) where \(s < \sigma \), the \(u_i\) are proper prefixes of p, the \(x_i\) are proper suffixes of p and \(k = x + s + \ell \).

We then consider two cases: if \(\ell = 0\), then \(vw =_M x_1 \cdots x_s\). Applying Levi’s Lemma to this factorization yields the following diagram:

Hence, \(v =_M v_1 v_2 \cdots v_s\), where every \(v_i\) is a prefix of the proper suffix \(x_i\) of p. Therefore, \(v_i\) is a proper factor of p.

Now assume that \(\ell > 0\). Applying Levi’s Lemma to \(vw =_M x_1 \cdots x_s p^\ell \) yields a diagram of the following form:

To the factorizations \(p =_M v_{s+i} w_{s+i}\) (\(i \in [1,\ell ]\)) we apply the arguments used for the proof of statements 1 and 2. There are \(y,z \ge 0\) and \(t < \sigma \) such that \(\ell = y+t+z\) and

-

\(v_{s+i} = p\), \(w_{s+i} = 1\) for \(i \in [1,y]\),

-

\(v_{s+i} \ne 1 \ne w_{s+i}\), \(v_{s+i} w_{s+i} =_M p\) for \(i \in [y+1,y+t]\), and

-

\(v_{s+i} = 1\), \(w_{s+i} = p\) for \(i \in [y+t+1,\ell ]\).

We obtain \(v =_M v_1 \cdots v_s p^y v_{s+y+1} \cdots v_{s+y+t}\) where every \(v_{s+y+i}\) (\(i \in [1,t]\)) is a proper prefix of p. If \(y > 0\) then \((v_{s+1}, w_1 \cdots w_s) \in I\) implies \(w_1 \cdots w_s = 1\). Hence, every \(v_i\) (\(i \in [1,s]\)) is a proper suffix of p (by a symmetric argument, we could also write v as a concatenation of \(s < \sigma \) many proper suffixes of p followed by \(t < \sigma \) many proper factors of p).

Finally, assume that \(y=0\). We get \(v =_M v_1 \cdots v_s v_{s+y+1} \cdots v_{s+y+t}\) with every \(v_i\) (\(i \in [1,s]\)) a proper factor of p and every \(v_{s+y+i}\) (\(i \in [1,t]\)) a proper prefix of p. \(\square \)

For a trace \(u\in M = M(\Gamma ,I)\) and \(\zeta \in \mathcal {L}\), we write \(\left| u\right| _\zeta = \left| u\right| _{\Gamma _\zeta } = \sum _{a\in \Gamma _\zeta } \left| u\right| _a\). Note that, while the sum might be infinite, only finitely many summands are non-zero.

Lemma 7

Let \(r,s,t,u\in M\) with \(rs =_M tu\) and, for all \(\zeta \in \mathcal {L}\), \(\left| s\right| _\zeta \ge \left| u\right| _\zeta \) or, equivalently, \(\left| r\right| _\zeta \le \left| t\right| _\zeta \). Then, as elements of M, u is a suffix of s and r is a prefix of t. In particular, if for all \(\zeta \in \mathcal {L}\) we have \(\left| s\right| _\zeta = \left| u\right| _\zeta \), then \(s =_M u\) and \(r =_M t\).

Proof

By Levi’s Lemma, there are \(p,q,x,y \in M\) with \((x,y) \in I\) and \(r=px\), \(t = py\), \(s =yq\), and \(u = xq\). Because of the condition \(\left| s\right| _\zeta \ge \left| u\right| _\zeta \) for all \(\zeta \in \mathcal {L}\), x must be the empty trace.

The second part of the lemma follows by using the first part for the two inequalities \(\left| s\right| _\zeta \ge \left| u\right| _\zeta \) and \(\left| u\right| _\zeta \ge \left| s\right| _\zeta \). \(\square \)

Lemma 8

Let \(p^\sigma u=_Mvp^\sigma \) (where \(\sigma = |\mathcal {L}|\) as above) for some primitive and connected trace p and let \(u \in M\) be a prefix of \(p^k\) for some \(k\in \mathbb {N}\). Then we have \(u=v=p^\ell \) for some \(\ell \in [0,k]\).

Proof

If u is the empty prefix, we are done. Hence, from now on, we can assume that u is non-empty. First consider the case that p is a prefix of u. Then, \(p^{\sigma +1}\) is a prefix of \(vp^\sigma \). Hence, there is a trace q with \(vp^\sigma =_M pq\), where \(p^\sigma \) is a factor of q. Then Lemma 7 implies that p is a prefix of v.

If \(v =_M pv'\) and \(u =_M p u'\), we obtain \(p^{\sigma +1} u' =_M p v' p^{\sigma }\). Cancelling p yields \(p^\sigma u' =_M v' p^\sigma \). Since \(u'\) is a prefix of \(p^{k-1}\) we can replace u and v by \(u'\) and \(v'\), respectively. Therefore, we can assume that p is not a prefix of u. Since u is a prefix of some \(p^k\), Lemma 6 implies that u is already a prefix of \(p^\sigma \).

Let us next show that \(u=v\). To do so, we write \(p^\sigma =_M uw\). Then we have

\[u\,w\,u =_M p^\sigma u =_M v\, p^\sigma =_M v\,u\,w.\]

Since \(\left| wu\right| _a = \left| uw \right| _a\) for all \(a \in \Gamma \), Lemma 7 implies \(u=v\).

Now, we have \(p^\sigma u=_Mup^\sigma \). Since p is connected, [17, Proposition 3.1] implies that there are \(i,j \in \mathbb {N}\) with \(p^{\sigma \cdot i} =_M u^j\). Then, by [17, Theorem 1.5] it follows that there are \(t \in M\) and \(\ell ,m \in \mathbb {N}\) with \(p=_Mt^m\) and \(u=_Mt^\ell \). As p is primitive, we have \(m = 1\) and \(t=p\) and hence \(u =_M p^\ell \). Since u is a prefix of \(p^k\), we have \(\ell \le k\). \(\square \)

To prove the next lemma, we want to apply Lemma 2. To do so, we use the following projections suitable for our use case. Let

where \(\zeta \) is isolated if there is no \(\xi \ne \zeta \) with \((\zeta , \xi ) \in D\) (and \(D= \mathcal {L}\times \mathcal {L}\setminus I\)). Notice that even though \(\Gamma \) might be infinite, \(\mathcal {A}\) is finite in any case (because \(\mathcal {L}\) is finite). Let us write \(\mathcal {A}=\{A_1, \ldots , A_k\}\) and \(\pi _i\) for the projection \(M(\Gamma ,I) \rightarrow A_i^*\).

Lemma 9

Let \(u,v,p,q \in M\) and \(k\in \mathbb {N}\) with \(uqv =_M p^k\) and \(\left| p \right| _\zeta = \left| q \right| _\zeta \) for all \(\zeta \in \mathcal {L}\). Then p and q are conjugate in M.

Proof

First, we are going to show that the transposition qvu of uqv is equal to \(q^k\) in M. Consider the projections \(\pi _i\) onto \(A_i\) from above. By the assumption \(\left| p \right| _\zeta = \left| q \right| _\zeta \) for all \(\zeta \in \mathcal {L}\), it follows that \(\left| \pi _i(p)\right| = \left| \pi _i(q)\right| \). As \(\pi _i(p^k)\) has a period \(\left| \pi _i(p)\right| \), so has its cyclic permutation \(\pi _i(qvu)\). As its first \(\left| \pi _i(p)\right| \) letters are exactly \(\pi _i(q)\), it follows that \(\pi _i(qvu) = \pi _i(q^k)\). Since this holds for all i, it follows by Lemma 2 that \(qvu =_M q^k\).

Now, observe that \(q^k =_M qvu\) and \(p^k =_M uqv\) are conjugate in M. Hence, it remains to apply Lemma 5 to conclude that p and q are conjugate: we write \(p=\tilde{p}^i\) and \(q=\tilde{q}^j\) for primitive traces \(\tilde{p}, \tilde{q}\). Then Lemma 5 tells us that \(i=j\) and \(\tilde{p}\) and \(\tilde{q}\) are conjugate. Hence, also p and q are conjugate. \(\square \)

2.6 Groups

If G is a group, then \(u, v \in G\) are conjugate if and only if there is a \(g \in G\) such that \(u =_G g^{-1}vg\) (note that this agrees with the above definition for monoids).

2.6.1 Free Groups

Let X be a set and \(\overline{X} = \{\overline{a }~\vert ~a \in X\}\) be a disjoint copy of X. We extend the mapping \(a \mapsto \overline{a}\) to an involution without fixed points on \(\Sigma = X \cup \overline{X}\) by \(\overline{\overline{a}} = a\) and finally to an involution on \(\Sigma ^*\) by \(\overline{a_1 a_2 \cdots a_n} = \overline{a_n} \cdots \overline{a_2} \;\overline{a_1}\). The only fixed point of the latter involution is the empty word 1. The string rewriting system

\[S_{\textrm{free}} = \{ a\overline{a} \rightarrow 1 \mid a \in \Sigma \}\]

is strongly confluent and terminating, meaning that for every word \(w \in \Sigma ^*\) there exists a unique word \(\hat{w} \in {{\,\textrm{IRR}\,}}(S_{\textrm{free}})\) with \(w \overset{*}{\underset{S_{\textrm{free}}}{\Longrightarrow }} \hat{w}\). Words from \({{\,\textrm{IRR}\,}}(S_{\textrm{free}})\) are called freely reduced. The system \(S_{\textrm{free}}\) defines the free group \(F(X) = \Sigma ^*/ S_{\textrm{free}}\) with basis X. Let \(\eta :\Sigma ^* \rightarrow F(X)\) denote the canonical monoid homomorphism. Then we have \(\eta (w)^{-1} = \eta (\overline{w})\) for all words \(w \in \Sigma ^*\). If \(|X|=2\), then we write \(F_2\) for F(X). It is known that for every countable set X, \(F_2\) contains an isomorphic copy of F(X).
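The free reduction described above can be sketched in a few lines of Python. This is only an illustration under an assumed encoding, not part of the formal development: lower-case letters stand for elements of X, the corresponding upper-case letters for their barred copies, and the function names are hypothetical.

```python
def inverse_letter(a: str) -> str:
    """Formal inverse of a letter: 'a' <-> 'A' (upper case = barred copy)."""
    return a.swapcase()


def inverse_word(word: str) -> str:
    """The involution on words: reverse the word and invert every letter."""
    return "".join(inverse_letter(a) for a in reversed(word))


def freely_reduce(word: str) -> str:
    """Compute the freely reduced word by cancelling adjacent inverse pairs.

    A stack suffices because the rewriting system is confluent and
    terminating, so any order of cancellations yields the same normal form.
    """
    stack = []
    for a in word:
        if stack and stack[-1] == inverse_letter(a):
            stack.pop()          # cancel a factor of the form b * inverse(b)
        else:
            stack.append(a)
    return "".join(stack)
```

For instance, `freely_reduce(w + inverse_word(w))` returns the empty word for every input `w`, which mirrors the identity \(\eta (w)^{-1} = \eta (\overline{w})\).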

2.6.2 Finitely Generated Groups and the Word Problem

A group G is called finitely generated (f.g.) if there exists a finite set X and a surjective group homomorphism \(h :F(X) \rightarrow G\). In this situation, the set \(\Sigma = X \cup \overline{X}\) is called a finite (symmetric) generating set for G. Usually, we write \(X^{-1}\) instead of \(\overline{X}\) and \(a^{-1}\) instead of \(\overline{a}\) for \(a \in \Sigma \). Thus, for an integer \(z < 0\) and \(w \in \Sigma ^*\) we write \(w^z\) for \((\overline{w})^{-z}\).

In many cases we can think of \(\Sigma \) as a subset of G, but, in general, we can also have more than one letter for the same group element. The group identity of G is denoted by 1 as well (this fits our notation 1 for the empty word, which is the identity of F(X)).

For words \(u,v \in \Sigma ^*\) we usually say that \(u = v\) in G or \(u =_G v\) in case \(h(\eta (u)) = h(\eta (v))\) and we do not write \(\eta \) nor h from now on. The word problem for the finitely generated group G, \(\text {WP}(G)\) for short, is defined as follows:

- \(\textsf {Input}\): a word \(w \in \Sigma ^*\).

- \(\textsf {Output}\): Does \(w=_G 1\) hold?

2.6.3 The Power Word Problem

A power word (over \(\Sigma \)) is a tuple \((u_1,x_1,u_2,x_2,\ldots ,u_n,x_n)\) where \(u_1, \dots , u_n \in \Sigma ^*\) are words over the group generators and \(x_1, \dots , x_n\in \mathbb {Z}\) are integers that are given in binary notation. Such a power word represents the word \(u_1^{x_1} u_2^{x_2}\cdots u_n^{x_n}\). Quite often, we will identify the power word \((u_1,x_1,u_2,x_2,\ldots ,u_n,x_n)\) with the word \(u_1^{x_1} u_2^{x_2}\cdots u_n^{x_n}\). Moreover, if \(x_i=1\), then we usually omit the exponent 1 in a power word. The power word problem for the finitely generated group G, \({{\,\textrm{PowWP}\,}}(G)\) for short, is defined as follows:

- \(\textsf {Input}\): a power word \((u_1,x_1,u_2,x_2,\ldots ,u_n,x_n)\).

- \(\textsf {Output}\): Does \(u_1^{x_1} u_2^{x_2}\cdots u_n^{x_n}=_G 1\) hold?

Due to the binary encoded exponents, a power word can be seen as a succinct description of an ordinary word. Hence, a priori, the power word problem for a group G could be computationally more difficult than the word problem. An example where this happens (under standard assumptions from complexity theory) is the wreath product \(S_5 \wr \mathbb {Z}\) (where \(S_5\) is the symmetric group on 5 elements). The word problem for this group can be easily solved in logspace, whereas the power word problem for \(S_5 \wr \mathbb {Z}\) is \(\textsf{coNP} \)-complete [43].
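To make the succinctness concrete, the following Python sketch evaluates a power word in a finite permutation group without expanding it, using repeated squaring. The choice of permutation groups, the representation of a power word as a list of pairs \((u_i, x_i)\), and all function names are assumptions made for illustration only; the sketch says nothing about the complexity bounds discussed in the text.

```python
from typing import Dict, List, Tuple

Perm = Tuple[int, ...]  # a permutation of {0, ..., n-1} as a tuple of images


def compose(p: Perm, q: Perm) -> Perm:
    """Product p*q, read left to right: first apply p, then q."""
    return tuple(q[i] for i in p)


def inverse(p: Perm) -> Perm:
    inv = [0] * len(p)
    for i, j in enumerate(p):
        inv[j] = i
    return tuple(inv)


def power(p: Perm, x: int) -> Perm:
    """Compute p^x by repeated squaring; x may be negative and very large."""
    if x < 0:
        p, x = inverse(p), -x
    result = tuple(range(len(p)))
    while x:
        if x & 1:
            result = compose(result, p)
        p = compose(p, p)
        x >>= 1
    return result


def is_identity(power_word: List[Tuple[str, int]],
                gens: Dict[str, Perm], n: int) -> bool:
    """Decide whether u_1^{x_1} ... u_k^{x_k} is the identity permutation."""
    identity = tuple(range(n))
    result = identity
    for u, x in power_word:
        value = identity
        for a in u:                      # evaluate the ordinary word u
            value = compose(value, gens[a])
        result = compose(result, power(value, x))
    return result == identity
```

With generators `s = (1, 0, 2)` and `r = (1, 2, 0)` of \(S_3\), the call `is_identity([("r", 3 * 10**30)], {"s": s, "r": r}, 3)` takes time proportional to the bit length of the exponent, whereas the expanded word would have \(3 \cdot 10^{30}\) letters.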

Let \(\mathcal {C}\) be a countable class of groups, where every group has a finite description. We also assume that the description of \(G \in \mathcal {C}\) contains a generating set for G. We write \({{\,\textrm{UPowWP}\,}}(\mathcal {C})\) for the uniform power word problem:

- \(\textsf {Input}\): a group \(G \in \mathcal {C}\) and a power word \((u_1,x_1,u_2,x_2,\ldots ,u_n,x_n)\) over the generating set of G.

- \(\textsf {Output}\): Does \(u_1^{x_1} u_2^{x_2}\cdots u_n^{x_n}=_G 1\) hold?

2.6.4 Right-angled Artin Groups

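Right-angled Artin groups are defined similarly to partially commutative monoids. Again we have a symmetric and irreflexive commutation relation \(I \subseteq X \times X\). The right-angled Artin group (RAAG) \(G(X,I)\) is the group generated by X subject to the relations \(ab = ba\) for \((a,b) \in I\), i.e., \(G(X,I) = F(X)/\{ab = ba \mid (a,b) \in I\}\). Note that we have \(M(X,I) \subseteq G(X,I)\).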

We can view G(X, I) also as follows: let \(\Sigma = X \cup \overline{X}\) where \(\overline{X}\) is a disjoint copy of X and \(\overline{\overline{a}} = a\) for \(a \in \Sigma \) (like for free groups). Extend I to a symmetric relation on \(\Sigma \) by requiring that \((a, b) \in I\) if and only if \((\overline{a}, b) \in I\) for \(a,b \in \Sigma \). Then G(X, I) is the quotient of \(M(\Sigma ,I)\) defined by the relations \(a\overline{a}=1\) for \(a \in \Sigma \). A trace \(w \in M(\Sigma ,I)\) is called reduced if it does not contain a factor \(a\overline{a}\) for \(a \in \Sigma \). For every trace \(u \in M(\Sigma ,I)\) there is a unique reduced trace v (the reduced normal form of u) with \(u = v\) in G(X, I). Like for free groups, it can be computed using the confluent and terminating trace rewriting system \(\{ a \overline{a} \rightarrow 1 \mid a \in \Sigma \}\).
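The reduced normal form can be computed letter by letter, as in the following Python sketch. The encoding is an assumption for illustration: a letter is a pair `(x, e)` with generator `x` and sign `e` in {1, -1}, the independence relation is given as a set of two-element frozensets of generators, and the output is one word representing the reduced trace. The function name is hypothetical.

```python
from typing import FrozenSet, List, Set, Tuple

Letter = Tuple[str, int]  # (generator, +1) or (generator, -1)


def reduce_raag(word: List[Letter],
                indep: Set[FrozenSet[str]]) -> List[Letter]:
    """Return a word representing the reduced trace of `word` in G(X, I).

    Invariant: `out` always represents a reduced trace. A new letter can
    cancel only against its inverse, and only if every letter in between
    commutes with it.
    """
    out: List[Letter] = []
    for x, e in word:
        i = len(out) - 1
        cancelled = False
        while i >= 0:
            y, f = out[i]
            if y == x:
                if f == -e:          # found the inverse: cancel the pair
                    del out[i]
                    cancelled = True
                break                # a letter never commutes past itself
            if frozenset((x, y)) not in indep:
                break                # blocked by a dependent letter
            i -= 1
        if not cancelled:
            out.append((x, e))
    return out
```

If a and b commute, the word \(a\,b\,\overline{a}\) reduces to b, whereas \(a\,c\,\overline{a}\) stays as it is when a and c do not commute.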

2.6.5 Graph Products

Let \(\left( G_\zeta \right) _{\zeta \in \mathcal {L}}\) be a family of so-called base groups and \(I \subseteq \mathcal {L}\times \mathcal {L}\) be an irreflexive and symmetric relation (the independence relation). As before, we assume that \(\mathcal {L}\) is always finite and we write \(\sigma = \left| \mathcal {L}\right| \). The graph product \( {{\,\textrm{GP}\,}}(\mathcal {L}, I, \left( G_\zeta \right) _{\zeta \in \mathcal {L}})\) is defined as the free product of the \(G_\zeta \) modulo the relations expressing that elements from \(G_\zeta \) and \(G_\xi \) commute whenever \((\zeta ,\xi ) \in I\). Below, we define this group by a group presentation.

Let \(\Gamma _\zeta = G_\zeta \setminus \{ 1 \}\) be the set of non-trivial elements of the group \(G_\zeta \) for \(\zeta \in \mathcal {L}\). We assume w.l.o.g. that the sets \(\Gamma _\zeta \) are pairwise disjoint. We then define \(\Gamma \) and \(I_{\Gamma }\) as in Section 2.5: \(\Gamma = \bigcup _{\zeta \in \mathcal {L}} \Gamma _\zeta \) (note that typically, \(\Gamma \) will be infinite) and \(I_\Gamma = \{ (a, b) \in \Gamma \times \Gamma \mid ({{\,\textrm{alph}\,}}(a), {{\,\textrm{alph}\,}}(b)) \in I\}\). As in Section 2.5 we write I instead of \(I_\Gamma \). For \(a,b \in G_\zeta \) we write [ab] for the element of \(G_\zeta \) obtained by multiplying ab in \(G_{\zeta }\) (whereas ab denotes a two-letter word in \(\Gamma ^*\)). Here, we identify \(1\in G_\zeta \) with the empty word 1. The relation I is extended to \(\Gamma ^*\) by \(I = \{ (u,v) \in \Gamma ^* \times \Gamma ^* \mid {{\,\textrm{alph}\,}}(u) \times {{\,\textrm{alph}\,}}(v) \subseteq I \}\) (where \({{\,\textrm{alph}\,}}(u) \subseteq \mathcal {L}\) is defined as in Section 2.5). With these definitions we have

Example 10

If \(I = \emptyset \), then \( {{\,\textrm{GP}\,}}(\mathcal {L}, I, \left( G_\zeta \right) _{\zeta \in \mathcal {L}})\) is simply the free product \(*_{\zeta \in \mathcal {L}} \, G_\zeta \).

Example 11

If all the base groups are the infinite cyclic group (i. e., for each \(\zeta \in \mathcal {L}\) we have \(G_\zeta = \mathbb {Z}\)), then the graph product \( {{\,\textrm{GP}\,}}(\mathcal {L}, I, \left( G_\zeta \right) _{\zeta \in \mathcal {L}})\) is the RAAG \(G(\mathcal {L}, I)\).

Let \(G = {{\,\textrm{GP}\,}}(\mathcal {L}, I, \left( G_\zeta \right) _{\zeta \in \mathcal {L}})\) be a graph product and \(M = M(\Gamma , I)\) the corresponding trace monoid (see Section 2.4). Notice that M satisfies the setting of Section 2.5 – so these results and definitions apply to the case of graph products. We can represent elements of G by elements of M. More precisely, there is a canonical surjective homomorphism \(h :M \rightarrow G\). A reduced representative of a group element \(g \in G\) is a trace w of minimal length such that \(h(w) = g\). We also say that w is reduced. Equivalently, \(w \in M\) is reduced if there is no two-letter factor ab of w such that \({{\,\textrm{alph}\,}}(a) = {{\,\textrm{alph}\,}}(b)\). A trace \(w \in M\) is called cyclically reduced if all transpositions of w are reduced. Equivalently, w is cyclically reduced if it is reduced and it cannot be written in the form axb with \(a,b \in \Gamma _\zeta \) for some \(x \in M\). Note that this definition agrees with [33], whereas in [19] a slightly different definition is used. We call a trace \(w \in M\) composite if \(\left| {{\,\textrm{alph}\,}}(w)\right| \ge 2\). Notice that a trace w, where every connected component is composite, is cyclically reduced if and only if ww is reduced (then, every \(w^k\) with \(k \ge 2\) is reduced). A word \(w \in \Gamma ^*\) is called reduced/cyclically reduced/composite if the trace represented by w is reduced/cyclically reduced/composite.

Remark 12

Note that a word \(w \in \Gamma ^*\) is cyclically reduced if and only if every cyclic permutation of the word w is reduced as a trace (be aware of the subtle difference between a cyclic permutation of a word w and a transposition of the trace represented by w): If the trace represented by w is cyclically reduced, then clearly every cyclic permutation of w must be reduced. On the other hand, assume that \(w =_M a w' b\) with \(a,b \in \Gamma _\zeta \). Then we can write the word w as \(w = x a y b z\) such that \((a, xz) \in I\). Then ybzxa is a cyclic permutation of w that is not reduced.

On the free monoid \(\Gamma ^*\) we can define an involution \((\cdot )^{-1}\) by \((a_1 a_2 \cdots a_n)^{-1} = a_n^{-1} \cdots a_2^{-1} a_1^{-1}\), where \(a_i^{-1}\) is the inverse of \(a_i\) in the group \(G_{{{\,\textrm{alph}\,}}(a_i)}\). Note that \(u =_M v\) implies \(u^{-1} =_M v^{-1}\). Therefore, we obtain a well-defined involution \((\cdot )^{-1}\) on M. Moreover, \(u^{-1}\) indeed represents the inverse of u in the group G.

The counterpart of the rewriting system \(S_{\textrm{free}}\) for graph products is the trace rewriting system

\[T = \{ ab \rightarrow [ab] \mid \zeta \in \mathcal {L},\ a,b \in \Gamma _\zeta \}.\]

Note that \(G = M / T\) and that \({{\,\textrm{IRR}\,}}(T)\) is the set of reduced traces. Moreover, T is terminating and confluent; the latter is shown in [36, Lemma 6.1]. The following lemma can be found in [28, Lemma 24].
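As an illustration of how reduced traces can be computed, the following Python sketch reduces words in a graph product whose base groups are cyclic. The restriction to cyclic base groups, the encoding of a letter as a pair `(vertex, element)`, the convention that order 0 stands for \(\mathbb {Z}\), and the function name are all assumptions made for this example; the text itself allows arbitrary base groups.

```python
from typing import Dict, FrozenSet, List, Set, Tuple

Letter = Tuple[str, int]  # (vertex zeta, non-trivial element of G_zeta)


def reduce_graph_product(word: List[Letter],
                         indep: Set[FrozenSet[str]],
                         orders: Dict[str, int]) -> List[Letter]:
    """Return a word representing the reduced trace of `word`.

    G_zeta is Z/orders[zeta] (or Z if orders[zeta] == 0), written additively.
    Two letters from the same base group are merged whenever all letters
    between them commute with that base group.
    """
    def norm(z: str, g: int) -> int:
        return g % orders[z] if orders[z] else g

    out: List[Letter] = []
    for z, g in word:
        g = norm(z, g)
        if g == 0:
            continue                     # the identity is the empty word
        i = len(out) - 1
        merged = False
        while i >= 0:
            y, h = out[i]
            if y == z:
                prod = norm(z, h + g)    # the element [ab] of G_zeta
                if prod == 0:
                    del out[i]
                else:
                    out[i] = (z, prod)
                merged = True
                break
            if frozenset((y, z)) not in indep:
                break                    # blocked by a dependent letter
            i -= 1
        if not merged:
            out.append((z, g))
    return out
```

Unlike in a RAAG, merging two letters need not delete them: in \(\mathbb {Z}/3\), the letters 1 and 1 merge into the single letter 2.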

Lemma 13

Let \(u, v \in \Gamma ^*\). If \(u =_M v\), then also \( u =_G v\). Moreover, if u and v are reduced, then \(u =_M v \) if and only if \(u =_G v\).

The following commutative diagram summarizes the mappings between the sets introduced in this section (\(\hookrightarrow \hspace{-8pt}\rightarrow \) indicates a bijection):

The embedding \(M(\Gamma ,I)\hookrightarrow G(\Gamma ,I)\) is induced by the embedding \(M(\Gamma ,I)\hookrightarrow M(\Gamma \cup \overline{\Gamma },I)\) composed with the projection \(M(\Gamma \cup \overline{\Gamma },I) \twoheadrightarrow G(\Gamma ,I)\) from Section 2.6.4.

Note that, in the trace monoid \(M(\Gamma \cup \overline{\Gamma },I)\) for every symbol \(a \in \Gamma \) there is a formal inverse \(\overline{a}\) such that \((\overline{a},b) \in I\) if and only if \((a,b) \in I\). This formal inverse \(\overline{a}\) is different from the inverse of a in base group \(G_{{{\,\textrm{alph}\,}}(a)}\), but the surjection \(G(\Gamma ,I) \twoheadrightarrow {{\,\textrm{GP}\,}}(\mathcal {L}, I, (G_\zeta )_{\zeta \in \mathcal {L}})\) maps the formal inverse \(\overline{a}\) to the inverse of a in base group \(G_{{{\,\textrm{alph}\,}}(a)}\). A trace \(u \in M(\Gamma \cup \overline{\Gamma },I)\) is reduced with respect to the RAAG \(G(\Gamma ,I)\) if it does not contain a factor \(a\overline{a}\) or \(\overline{a}a\) with \(a \in \Gamma \). In particular, every trace from \(M(\Gamma ,I)\) is reduced with respect to \(G(\Gamma ,I)\), even if it is non-reduced in our sense (i.e., with respect to the graph product \({{\,\textrm{GP}\,}}(\mathcal {L}, I, (G_\zeta )_{\zeta \in \mathcal {L}})\)).

An I-clique is a trace \(a_1 a_2 \cdots a_k \in M\) such that \(a_i \in \Gamma \) and \((a_i,a_j) \in I\) for all \(i \ne j\). Note that \(|v| \le \sigma \) for every I-clique v. The following lemma is a generalization of a statement from [15] (equation (21) in the proof of Lemma 22), where only the case \(q=1\) is considered.

Lemma 14

Let \(p,q,r,s \in M\) such that \(pq, qr, s \in {{\,\textrm{IRR}\,}}(T)\) and \(p\,q\,r \overset{*}{\underset{T}{\Longrightarrow }} s\). Then there exist factorizations

\[p =_M p'\, t\, u, \qquad r =_M u^{-1} v\, r', \qquad s =_M p' q\, w\, r'\]

with the following properties:

-

t, v, w are I-cliques with \(tv \overset{*}{\underset{T}{\Longrightarrow }} w\),

-

\({{\,\textrm{alph}\,}}(t) = {{\,\textrm{alph}\,}}(v) = {{\,\textrm{alph}\,}}(w)\), and

-

\((q,tu) \in I\) (hence also \((q,v), (q,w) \in I\)).

Proof

We prove the lemma by induction over the length of \(T\)-derivations (recall that T is terminating). The case that \(p\,q\,r \in {{\,\textrm{IRR}\,}}(T)\) is clear (take \(t = u = v = w = 1\)). Now assume that \(p\,q\,r\) is not reduced. Since \(pq, qr \in {{\,\textrm{IRR}\,}}(T)\), the trace \(p\,q\,r\) must contain a factor ab with \({{\,\textrm{alph}\,}}(a) = {{\,\textrm{alph}\,}}(b)\), where a is a maximal letter of p, b is a minimal letter of r and \((a,q), (b,q) \in I\). Let us write \(p =_M \tilde{p} a\) and \(r =_M b \tilde{r}\).

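We distinguish two cases. If \([ab] = 1\), i.e., \(b = a^{-1}\), then

\[p\,q\,r =_M \tilde{p}\, a\, q\, a^{-1} \tilde{r} =_M \tilde{p}\, q\, a\, a^{-1} \tilde{r} \underset{T}{\Longrightarrow } \tilde{p}\, q\, \tilde{r} \overset{*}{\underset{T}{\Longrightarrow }} s.\]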

Since \((a,q)\in I\), we must have \(\tilde{p} q, q\tilde{r} \in {{\,\textrm{IRR}\,}}(T)\). Hence, by induction we obtain factorizations

\[\tilde{p} =_M p'\, t\, x, \qquad \tilde{r} =_M x^{-1} v\, r', \qquad s =_M p' q\, w\, r'\]

with the following properties:

-

t, v, w are I-cliques with \(tv \overset{*}{\underset{T}{\Longrightarrow }} w\),

-

\({{\,\textrm{alph}\,}}(t) = {{\,\textrm{alph}\,}}(v) = {{\,\textrm{alph}\,}}(w)\), and

-

\((q,tx) \in I\).

If we set \(u = xa\), we obtain exactly the situation from the lemma.

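Now assume that \([ab] = c \ne 1\). We obtain

\[p\,q\,r =_M \tilde{p}\, a\, q\, b\, \tilde{r} =_M \tilde{p}\, a\, b\, q\, \tilde{r} \underset{T}{\Longrightarrow } \tilde{p}\, c\, q\, \tilde{r} \overset{*}{\underset{T}{\Longrightarrow }} s.\]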

Note that \((c,q) \in I\). Since \(\tilde{p}\, a\,q, \, b\,q\,\tilde{r} \in {{\,\textrm{IRR}\,}}(T)\), we also have \(\tilde{p}\,c\,q, c\,q\,\tilde{r} \in {{\,\textrm{IRR}\,}}(T)\). Hence, by induction we obtain factorizations

\[\tilde{p} =_M p'\, t'\, u, \qquad \tilde{r} =_M u^{-1} v'\, r', \qquad s =_M p'\, c\, q\, w'\, r'\]

with the following properties:

-

\(t',v',w'\) are I-cliques with \(t'v' \overset{*}{\underset{T}{\Longrightarrow }} w'\),

-

\({{\,\textrm{alph}\,}}(t') = {{\,\textrm{alph}\,}}(v') = {{\,\textrm{alph}\,}}(w')\), and

-

\((cq,t'u) \in I\).

We define \(t = t'a\), \(v = v'b\), \(w = w'c\). These are I-cliques (since \((c,t') \in I\)) that satisfy the conditions from the lemma. Moreover, \((c,u) \in I\) implies \((a,u) \in I\) and hence \(p =_M \tilde{p}\,a =_M p' t' u \, a =_M p' t' a \, u =_M p' t \, u\). Similarly, we get \(r =_M u^{-1} v\, r'\) and \(s =_M p' q\, w\, r'\) (using \((c,q) \in I\)). \(\square \)

Since \(\Gamma \) might be an infinite alphabet, for inputs of algorithms, we need to encode elements of \(\Gamma \) over a finite alphabet. For \(\zeta \in \mathcal {L}\) let \(\Sigma _\zeta \) be a finite generating set for \(G_\zeta \) such that \(\Sigma _\zeta \cap \Sigma _\xi = \emptyset \) for \(\zeta \ne \xi \). Then \(\Sigma = \bigcup _{\zeta \in \mathcal {L}} \Sigma _\zeta \) is a generating set for G. Every element of \(\Gamma _\zeta \) can be represented as a word from \(\Sigma _\zeta ^*\). However, in general, representatives are not unique. Deciding whether two words \(w,v \in \Sigma _\zeta ^*\) represent the same element of \(\Gamma _\zeta \) is the word problem for \(G_\zeta \). We give more details how to represent power words in Section 5.1.1.

Let \(\mathcal {C}\) be a countable class of finitely generated groups with finite descriptions. One might for instance take a subclass of finitely (or recursively) presented groups. Then a graph product \({{\,\textrm{GP}\,}}(\mathcal {L}, I, (G_\zeta )_{\zeta \in \mathcal {L}})\) with \(G_\zeta \in \mathcal {C}\) for all \(\zeta \) has a finite description as well: such a group is given by the finite graph \((\mathcal {L}, I)\) and a list of the finite descriptions of the groups \(G_\zeta \in \mathcal {C}\) for \(\zeta \in \mathcal {L}\). We denote with \({{\,\textrm{GP}\,}}(\mathcal {C})\) the class of all such graph products.

2.7 Complexity

We assume that the reader is familiar with the complexity classes P and \(\textsf{NP}\); see e.g. [5] for details. Let \(\mathcal {C}\) be any complexity class and \(K\subseteq \Delta ^*\), \(L \subseteq \Sigma ^*\) languages. Then L is \(\mathcal {C}\)-many-one reducible to K (\(L\le _{\textrm{m}}^{\mathcal {C}} K\)) if there exists a \(\mathcal {C}\)-computable function \(f:\Sigma ^* \rightarrow \Delta ^*\) with \(x\in L \) if and only if \(f(x) \in K\).

2.7.1 Circuit Complexity

We use circuit complexity for classes below deterministic logspace (\(\textsf{L}\) for short). Instead of defining these classes directly, we introduce the slightly more general notion of \(\textsf{AC}^{0}\)-Turing reducibility. A language \(L \subseteq \{0,1\}^*\) is \(\textsf{AC}^{0}\)-Turing-reducible to \(K \subseteq \{0,1\}^*\) if there is a family of constant-depth, polynomial-size Boolean circuits with oracle gates for K deciding L. More precisely, we can define the class \(\textsf{AC}^{0} (K)\) of languages that are \(\textsf{AC}^{0}\)-Turing-reducible to \(K \subseteq \{0,1\}^*\): a language \(L \subseteq \{0,1\}^*\) belongs to \(\textsf{AC}^{0} (K)\) if there exists a family \((C_n)_{n \ge 0}\) of Boolean circuits with the following properties:

-

\(C_n\) has n distinguished input gates \(x_1, \ldots , x_n\) and a distinguished output gate o.

-

\(C_n\) accepts exactly the words from \(L \cap \{0,1\}^n\), i.e., if the input gate \(x_i\) receives the input \(a_i \in \{0,1\}\) for all i, then the output gate o evaluates to 1 if and only if \(a_1 a_2 \cdots a_n \in L\).

-

Every circuit \(C_n\) is built up from input gates, not-gates, and-gates, or-gates, and oracle gates for K (which output 1 if and only if their input is in K). The incoming wires for an oracle gate for K have to be ordered since the language K is not necessarily closed under permutations of symbols.

-

All gates may have unbounded fan-in, i. e., there is no bound on the number of incoming wires for a gate.

-

There is a polynomial p(n) such that \(C_n\) has at most p(n) many gates and wires.

-

There is a constant d such that every \(C_n\) has depth at most d (the depth is the length of a longest path from an input gate \(x_i\) to the output gate o).

This is in fact the definition of non-uniform \(\textsf{AC}^{0} (K)\). Here “non-uniform” means that the mapping \(n \mapsto C_n\) is not restricted in any way. In particular, it can be non-computable. For algorithmic purposes one usually adds some uniformity requirement to the above definition. The most “uniform” version of \(\textsf{AC}^{0} (K)\) is \(\textsf{DLOGTIME}\)-uniform \(\textsf{AC}^{0} (K)\). For this, one encodes the gates of each circuit \(C_n\) by bit strings of length \(\mathcal {O}(\log n)\). Then the circuit family \((C_n)_{n \ge 0}\) is called \(\textsf{DLOGTIME}\)-uniform if (i) there exists a deterministic Turing machine that computes for a given gate \(u \in \{0,1\}^*\) of \(C_n\) (\(|u| \in \mathcal {O}(\log n)\)) in time \(\mathcal {O}(\log n)\) the type of gate u, where the types are \(x_1, \ldots , x_n\), not, and, or, oracle gate, and (ii) there exists a deterministic Turing machine that decides for two given gates \(u,v \in \{0,1\}^*\) of \(C_n\) (\(|u|, |v| \in \mathcal {O}(\log n)\)) and a binary encoded integer i with \(\mathcal {O}(\log n)\) many bits in time \(\mathcal {O}(\log n)\) whether u is the i-th input gate for v. In the following, we write \(\textsf{uAC}^0 (K)\) for \(\textsf{DLOGTIME}\)-uniform \(\textsf{AC}^{0} (K)\). For more details on these definitions we refer to [60]. If the language L (or K) in the above definition of \(\textsf{uAC}^0 (K)\) is defined over a non-binary alphabet \(\Sigma \), then one first has to fix a binary encoding of \(\Sigma \) as words in \(\{0,1\}^\ell \) for some large enough \(\ell \in \mathbb {N}\).

If \(\mathcal {C} = \{K_1, \ldots , K_n\}\) is a finite class of languages, then \(\textsf{AC}^{0} (\mathcal {C})\) is the same as \(\textsf{AC}^{0} ( \{ (w,i) \mid i \in [1,n], w \in K_i\} )\). If \(\mathcal {C}\) is an infinite complexity class, then \(\textsf{uAC}^0 [\mathcal {C}]\) is the union of all classes \(\textsf{uAC}^0 (K)\) for \(K \in \mathcal {C}\). Note that \(\textsf{uAC}^0 [\mathcal {C}](K)\) is the same as \(\bigcup _{L \in \mathcal {C}} \textsf{uAC}^0 (K,L)\).

The class \(\textsf{uNC}^{1}\) is defined as the class of languages accepted by \(\textsf{DLOGTIME}\)-uniform families of Boolean circuits having bounded fan-in, polynomial size, and logarithmic depth. As a consequence of Barrington’s theorem [6], we have \(\textsf{uNC}^{1} = \textsf{uAC}^0 (\text {WP}(A_5))\), where \(A_5\) is the alternating group over 5 elements [60, Corollary 4.54]. Moreover, the word problem for any finite group G is in \(\textsf{uNC}^{1} \). If G is finite non-solvable, its word problem is \(\textsf{uNC}^{1} \)-complete – even under \(\textsf{uAC}^0 \)-many-one reductions. Robinson proved that the word problem for the free group \(F_2\) is \(\textsf{uNC}^{1}\)-hard [56], i.e., \(\textsf{uNC}^{1} \subseteq \textsf{uAC}^0 (\text {WP}(F_2))\).

The class \(\textsf{uTC}^0 \) is defined as \(\textsf{uAC}^0 ({\textsc {Majority}})\) where Majority is the language of all bit strings containing more 1s than 0s.

Important problems that are complete (under \(\textsf{uAC}^0\)-Turing reductions) for \(\textsf{uTC}^0\) are:

-

the languages \(\{ w \in \{0,1\}^* \mid |w|_0 \le |w|_1 \}\) and \(\{ w \in \{0,1\}^* \mid |w|_0 = |w|_1 \}\), where \(|w|_a\) denotes the number of occurrences of a in w, see e.g. [60],

-

the computation (of a certain bit) of the binary representation of the product of two or any (unbounded) number of binary encoded integers [29],

-

the computation (of a certain bit) of the binary representation of the integer quotient of two binary encoded integers [29],

-

the word problem for every infinite finitely generated solvable linear group [35],

-

the conjugacy problem for the Baumslag-Solitar group \(\textsf{BS}(1,2)\) [16].

2.7.2 Counting Complexity Classes

Counting complexity classes are built on the idea of counting the number of accepting and rejecting computation paths of a Turing machine. For a non-deterministic Turing machine M, let \({\text {accept}}_M\) (resp., \({\text {reject}}_M\)) be the function that assigns to an input x for M the number of accepting (resp., rejecting) computation paths on input x. We define the function \({\text {gap}}_M :\Sigma ^* \rightarrow \mathbb {Z}\) by \({\text {gap}}_M(x) = {\text {accept}}_M(x) - {\text {reject}}_M(x)\). The class of functions \(\textsf{GapL} \) and the class of languages \(\textsf{C}_=\textsf{L} \) are defined as follows:

We write \(\textsf{GapL} ^K\) and \(\textsf{C}_=\textsf{L} ^{K}\) to denote the corresponding classes where the Turing machine M is equipped with an oracle for the language K. We have the following relationships of \(\textsf{C}_=\textsf{L} \) with other complexity classes; see e. g., [1]:

3 Groups with an Easy Power Word Problem

In this section we start with two easy examples of groups where the power word problem can be solved efficiently.

Theorem 15

If G is a finitely generated nilpotent group, then \({{\,\textrm{PowWP}\,}}(G)\) is in \(\textsf{uTC}^0\).

Proof

In [52], the so-called word problem with binary exponents was shown to be in \(\textsf{uTC}^0\). Here the input is a power word \(u_1^{x_1} \cdots u_n^{x_n}\) but all the \(u_i\) are required to be one of the standard generators of the group G. For arbitrary power words, we can apply the same techniques as in [52]: we compute Mal’cev normal forms of all \(u_i\) using [52, Theorem 5], then we use the power polynomials from [52, Lemma 2] to compute Mal’cev normal forms with binary exponents of all \(u_i^{x_i}\). Finally, we compute the Mal’cev normal form of \(u_1^{x_1} \cdots u_n^{x_n}\) again using [52, Theorem 5]. \(\square \)

Theorem 15 has been generalized in [19], where it is shown that the power word problem for a wreath product \(G \wr \mathbb {Z}\) with G finitely generated nilpotent belongs to \(\textsf{uTC}^0\). Other classes of groups where the power word problem belongs to \(\textsf{uTC} ^0\) are iterated wreath products of the form \(\mathbb {Z}^r \wr (\mathbb {Z}^r \wr (\mathbb {Z}^r \cdots ))\), free solvable groups [19] and the solvable Baumslag-Solitar groups \(\textsf{BS}(1,q)\) [45].

The Grigorchuk group (defined in [26] and also known as the first Grigorchuk group) is a finitely generated subgroup of the automorphism group of an infinite binary rooted tree. It is a torsion group (every element has order \(2^k\) for some k) and it was the first example of a group of intermediate growth.

Theorem 16

The power word problem for the Grigorchuk group is \(\textsf{uAC}^0\)-many-one-reducible to its word problem (under suitable assumptions on the input encoding). In particular, the power word problem for the Grigorchuk group is in L.

Proof

Let G denote the Grigorchuk group. By [7, Theorem 6.6], every element of G that can be represented by a word of length m over a finite set of generators has order at most \(Cm^{3/2}\) for some constant C. W. l. o. g. \(C = 2^\ell \) for some \(\ell \in \mathbb {N}\). On input of a power word \(u_1^{x_1} \cdots u_n^{x_n}\) with all words \(u_i\) of length at most m, we can compute the smallest k with \(2^k \ge m\) in \(\textsf{uAC}^0\). We have \(2^k \le 2m\). Since \(m \le 2^k\), an element of length at most m has order at most \(Cm^{3/2} \le 2^{\ell } \cdot 2^{3k/2} \le 2^{2k+\ell }\). Since the order of every element of G is a power of two, this means that \(g^{2^{2k+\ell }} = 1\) for all \(g \in G\) of length at most m. Thus, we can reduce all exponents modulo \(2^{2k+\ell }\) (i. e., we drop all but the \(2k+\ell \) least significant bits). Now all exponents are at most \(2^{2k+\ell } \le 4Cm^2\) and the power word can be written as an ordinary word (to do this in \(\textsf{uAC}^0\), we need a neutral letter to pad the output to a fixed word length). Note that this can be done by a uniform circuit family.

The second statement in the theorem follows from the first statement and the fact that the word problem for the Grigorchuk group is in \(\textsf {L}\) (see e. g., [49, 54]). \(\square \)
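The arithmetic of the reduction in the proof can be sketched as follows. This is only an illustration of the exponent truncation and the expansion into an ordinary word, not a \(\textsf{uAC}^0\) circuit. The function names `reduce_power_word` and `expand`, the representation of words as Python strings, and the parameter `ell` (standing for \(\ell \) with \(C = 2^\ell \)) are assumptions of this sketch; the concrete value of \(\ell \) has to be taken from the bound in [7, Theorem 6.6].

```python
def reduce_power_word(power_word, ell):
    """Reduce all exponents modulo 2**(2k + ell).

    power_word: list of (word, exponent) pairs, word a string over the generators.
    ell: assumed constant with C = 2**ell in the order bound C * m**(3/2).
    Returns the reduced power word together with the modulus used.
    """
    m = max((len(u) for u, _ in power_word), default=1)
    k = 0
    while 2 ** k < m:  # smallest k with 2**k >= m
        k += 1
    modulus = 2 ** (2 * k + ell)
    # Python's % returns a value in [0, modulus) also for negative exponents.
    reduced = [(u, x % modulus) for u, x in power_word]
    return reduced, modulus


def expand(power_word):
    """Write a power word with small non-negative exponents as an ordinary word."""
    return "".join(u * x for u, x in power_word)
```

After the reduction, every exponent is below \(2^{2k+\ell } \le 4Cm^2\), so the expanded word has length polynomial in the input size and can be passed to any algorithm for the ordinary word problem.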

The first statement of Theorem 16 only holds if the generating set contains a neutral letter. Otherwise, the reduction is in \(\textsf{uTC}^0\).

Recall from the introduction that the compressed word problem for the Grigorchuk group is \(\textsf{PSPACE}\)-complete [8] and hence provably harder than the power word problem (since \(\textsf{L}\) is a proper subset of \(\textsf{PSPACE}\)).

4 Power Word Problems in Finite Extensions

It is easy to see that the power word problem of a finite group belongs to \(\textsf{uNC}^{1}\). Indeed, we can prove the following more general result (choosing H as the trivial group yields the result for finite groups).

Theorem 17

Let G be finitely generated and let \(H\le G\) have finite index. Then \({{\,\textrm{PowWP}\,}}(G)\) is \(\textsf{uNC}^{1}\)-many-one-reducible to \({{\,\textrm{PowWP}\,}}(H)\).

Proof

Since \(H \le G\) is of finite index, there is a normal subgroup \(N \le G\) of finite index with \(N\le H\) (e. g., \(N= \bigcap _{g\in G} gHg^{-1}\)). As \(N \le H\), \({{\,\textrm{PowWP}\,}}(N)\) is reducible via a homomorphism (i. e., in particular in \(\textsf{uTC}^0\)) to \({{\,\textrm{PowWP}\,}}(H)\). Thus, we can assume that from the beginning H is normal and that \(Q=G/H\) is a finite quotient group. Notice that H is finitely generated as G is so; see e.g. [57, 1.6.11]. Let \(R\subseteq G\) denote a set of representatives of Q with \(1 \in R\). If we choose a finite generating set \(\Sigma \) for H, then \(\Sigma \cup (R \setminus \{1\})\) is a finite generating set for G.

Let \(u = u_1^{x_1} \cdots u_n^{x_n}\) denote the input power word. As a first step, for every exponent \(x_i\) we compute numbers \(y_i,z_i \in \mathbb {Z}\) with \(x_i = y_i\left| Q \right| + z_i\) and \(0 \le z_i < \left| Q \right| \) (i. e., we compute the division with remainder by \(\left| Q \right| \)). This is possible in \(\textsf{uNC}^{1}\) [29]. Note that \(u_i^{\left| Q \right| }\) is trivial in the quotient \(Q = G/H\) and, therefore, represents an element of H. Using the conjugate collection process from [56, Theorem 5.2] we can compute in \(\textsf{uNC}^{1} \) a word \(h_i \in \Sigma ^*\) such that \(u_i^{\left| Q\right| } =_G h_i\).

Let us give some more details on this process: first recall that \(\left| Q\right| \) is a constant. Hence, we can write \(u_i^{\left| Q\right| } \) as a word \(c_1 s_1 \cdots c_ks_k\) with \(c_j \in \Sigma \cup \{1\} \) and \(s_j \in R\). Now, let \(t_j \in R\) denote the representative of \(s_1 \cdots s_{j-1}\) in R (meaning that \(t_j H = s_1 \cdots s_{j-1}H\); in particular, \(t_1 = 1\) and \(t_2 = s_1\)) and let \(q_j \in \Sigma ^*\) be such that \(q_j =_G t_j s_j t_{j+1}^{-1}\) (note that, indeed, \(q_j\) is contained in H). Then we have

\[ t_j c_j s_j =_G (t_j c_j t_j^{-1}) \, q_j \, t_{j+1} \]

and it follows that

\[ c_1 s_1 \cdots c_k s_k =_G (t_1 c_1 t_1^{-1}) \, q_1 \cdots (t_k c_k t_k^{-1}) \, q_k \, t_{k+1}. \]

As \(u_i^{\left| Q\right| } \in H\), we have \(t_{k+1} = 1\). We can compute \(t_j\) for all j in \(\textsf{uNC}^{1}\) by solving several instances of the word problem for the finite group Q. After that we can compute \(q_j\) by a table look-up (there are only \(\left| R\right| ^3\) many possibilities for \(t_j\), \(s_j\), and \(t_{j+1}\)). Now it remains to replace each \(t_j c_j t_j^{-1}\) by a word in \(\Sigma ^*\). As there are only finitely many possibilities for \(c_j\) and \(t_j\), this can be done even in \(\textsf{uNC}^{0}\).

Hence, we have obtained a word \(h_i \in \Sigma ^*\) such that \(u_i^{\left| Q\right| } =_G h_i\).
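The collection process can be made concrete on a small example. The following sketch assumes the infinite dihedral group \(G = \langle t, s \mid s^2 = 1, sts = t^{-1} \rangle \) with the normal subgroup \(H = \langle t \rangle \) of index 2, so \(R = \{1, s\}\) and \(\Sigma = \{t, t^{-1}\}\); this choice of group, the letter `T` for \(t^{-1}\), and the function names are assumptions of the example and do not appear in [56]. In this particular group every \(q_j\) is trivial, so only the conjugation of the letters \(c_j\) by the current representative \(t_j\) remains.

```python
def mul(g, h):
    """Product in the infinite dihedral group; (n, e) stands for t^n s^e."""
    (n1, e1), (n2, e2) = g, h
    return (n1 + (-n2 if e1 else n2), e1 ^ e2)


LETTER = {"t": (1, 0), "T": (-1, 0), "s": (0, 1)}


def evaluate(word):
    """Evaluate a word over the letters t, T (= t^-1), s to a pair (n, e)."""
    g = (0, 0)
    for ch in word:
        g = mul(g, LETTER[ch])
    return g


def collect(word):
    """Return (h, rep) with word = h * s^rep in G and h a word over {t, T}.

    rep tracks the representative of the coset of the prefix read so far
    (0 for the representative 1, 1 for the representative s).
    """
    rep = 0
    out = []
    for ch in word:
        if ch == "s":
            rep ^= 1  # next representative; the element q_j is trivial here
        else:
            # conjugation by s inverts t, conjugation by 1 does nothing
            out.append(ch.swapcase() if rep else ch)
    return "".join(out), rep
```

For a word representing an element of H, such as `"stst"` (the square of `"st"`), the final representative is trivial and the first component is the desired word over \(\Sigma \).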

As a next step, we replace in the input word every \(u_i^{x_i}\) by \(h_i^{y_i} u_i^{z_i}\) where we write \(u_i^{z_i}\) as a word without exponents (recall that \(x_i = y_i\left| Q\right| + z_i\) with \(0 \le z_i < \left| Q\right| \)). We have obtained a word where all factors with exponents represent elements of H. Finally, we again proceed like Robinson [56] for the ordinary word problem treating words with exponents as single letters (this is possible because they are in H).
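The replacement of \(u_i^{x_i}\) by \(h_i^{y_i} u_i^{z_i}\) rests on the identity \(u^{x} = (u^{\left| Q\right| })^{y} u^{z}\) for \(x = y\left| Q\right| + z\). The sketch below checks this identity in the infinite dihedral group with its index-2 subgroup of translations; the choice of group, the pair representation \((n, e)\) for \(t^n s^e\), and all function names are assumptions made for illustration only.

```python
INDEX = 2  # |Q| for the subgroup H = <t> of the infinite dihedral group


def mul(g, h):
    """Product in the infinite dihedral group; (n, e) stands for t^n s^e."""
    (n1, e1), (n2, e2) = g, h
    return (n1 + (-n2 if e1 else n2), e1 ^ e2)


def inverse(g):
    n, e = g
    return (n, 1) if e else (-n, 0)  # reflections are their own inverses


def power(g, x):
    """g**x for an arbitrary integer x by repeated squaring."""
    if x < 0:
        g, x = inverse(g), -x
    result = (0, 0)
    while x:
        if x & 1:
            result = mul(result, g)
        g = mul(g, g)
        x >>= 1
    return result


def split(u, x):
    """Return (h, y, z) with h = u**INDEX in H, x = y*INDEX + z, 0 <= z < INDEX."""
    y, z = divmod(x, INDEX)  # divmod yields 0 <= z < INDEX also for x < 0
    return power(u, INDEX), y, z
```

After the split, the only factor that still carries a large exponent is \(h^{y}\) with \(h \in H\), while \(u^{z}\) can be written out as a word of bounded length.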

To give some more details for the last step (which works quite similarly to the conjugate collection process described above), let us denote the result of the previous step as \(g_0 h_1^{y_1} g_1 \cdots h_n^{y_n} g_n\) with \(g_i \in (\Sigma \cup R \setminus \{1\})^*\) and \( h_i \in \Sigma ^*\). By [56, Theorem 5.2] we can rewrite each \(g_i\) in \(\textsf{uNC}^{1}\) as \(g_i =_G \tilde{h}_i r_i\) with \(r_i \in R\) and \(\tilde{h}_i \in \Sigma ^*\). Once again, we follow [56] and write \(\tilde{h}_0 r_0 h_1^{y_1} \tilde{h}_1 r_1 \cdots h_n^{y_n} \tilde{h}_n r_n\) as

\[ \tilde{h}_0 w_0 (a_1 h_1^{y_1} \tilde{h}_1 a_1^{-1}) w_1 (a_2 h_2^{y_2} \tilde{h}_2 a_2^{-1}) w_2 \cdots (a_n h_n^{y_n} \tilde{h}_n a_n^{-1}) w_n a_{n+1}, \]

where \(a_i\) is the representative of \(r_0 \cdots r_{i-1}\) in R (\(a_0 = 1\)) and \(w_i = a_i r_i a_{i+1}^{-1}\). The element \((a_i h_i^{y_i} \tilde{h}_ia_i^{-1})\) belongs to H since H is normal in G. It is obtained from \(h_i^{y_i} \tilde{h}_i\) by conjugation with \(a_i\), i.e., by a homomorphism from a fixed finite set of homomorphisms. Thus, a power word \(P_i\) over the alphabet \(\Sigma \) with \(P_i =_H (a_i h_i^{y_i} \tilde{h}_ia_i^{-1})\) can be computed in \(\textsf{uTC}^0 \). Also all \(w_i\) belong to H, since \(a_i r_i\) and \(a_{i+1}\) belong to the same coset of H. Moreover, every \(w_i\) comes from a fixed finite set (namely \(R \cdot R \cdot R^{-1}\)) and, thus, can be rewritten to a word \(w_i' \in \Sigma ^*\). Now it remains to verify whether \(a_{n+1}= 1\) (solving the word problem for Q, which is in \(\textsf{uNC} ^1\)). If this is not the case, we output any non-identity word in H, otherwise we output the power word \(P = \tilde{h}_0 w_0' P_1 w_1' P_2 w_2' \cdots P_n w_n'\). As \(a_{n+1}= 1\), we have \(P =_G u\). \(\square \)

5 Power Word Problems in Graph Products

The main results of this section are transfer theorems for the complexity of the power word problem in graph products. We will prove such a transfer theorem for the non-uniform setting (where the graph product is fixed) as well as the uniform setting (where the graph product is part of the input). Before that, we consider a special case, the so-called simple power word problem for graph products, in Section 5.1. In Section 5.2 we prove some further combinatorial results on traces. Finally, in Section 5.3 we prove the transfer theorems for graph products.

5.1 The Simple Power Word Problem for Graph Products

In this section we consider a restricted version of the power word problem for graph products. Later, we will use this restricted version in our algorithms for the unrestricted power word problem.

Let \(G = {{\,\textrm{GP}\,}}(\mathcal {L}, I, (G_\zeta )_{\zeta \in \mathcal {L}})\) be a graph product and define \(\Gamma _\zeta , \Sigma _\zeta , \Gamma , \Sigma \) as in Section 2.6.5. A simple power word is a word \(w = w_1^{x_1} \cdots w_n^{x_n}\), where \(w_1, \dots , w_n \in \Gamma \) and \(x_1, \dots , x_n \in \mathbb {Z}\) are binary encoded integers. Each \(w_i\) is encoded as a word over some finite alphabet \(\Sigma _\zeta \). Note that this is more restrictive than a power word: we only allow powers of elements from a single base group. The simple power word problem \({{\,\textrm{SPowWP}\,}}(G)\) is to decide whether \(w =_G 1\), where w is a simple power word. We also consider a uniform version of this problem. With \({{\,\textrm{USPowWP}\,}}({{\,\textrm{GP}\,}}(\mathcal {C}))\) we denote the uniform simple power word problem for graph products from the class \({{\,\textrm{GP}\,}}(\mathcal {C})\) (see the last paragraph in Section 2.6.5).

Proposition 18

For the (uniform) simple power word problem the following holds.

- Let \(G = {{\,\textrm{GP}\,}}(\mathcal {L}, I, (G_\zeta )_{\zeta \in \mathcal {L}})\) be a fixed graph product of f.g. groups. Then \({{\,\textrm{SPowWP}\,}}(G) \in \textsf{uAC} ^0\bigl (\{\text {WP}(F_2)\} \cup \{ {{\,\textrm{PowWP}\,}}(G_\zeta ) \mid \zeta \in \mathcal {L}\}\bigr )\).

- Let \(\mathcal {C}\) be a non-trivial class of f.g. groups. Then \({{\,\textrm{USPowWP}\,}}({{\,\textrm{GP}\,}}(\mathcal {C}))\) is in \(\textsf{C}_=\textsf{L} ^{{{\,\textrm{UPowWP}\,}}(\mathcal {C})}\).

These results are obtained by using the corresponding algorithm for the (uniform) word problem [33, Theorem 5.6.5, Theorem 5.6.14] and replacing the oracles for the word problems of the base groups with oracles for the power word problems in the base groups. The proofs for the non-uniform and uniform case are quite different. Indeed, in the non-uniform case, we can work by induction over the size of the (in-)dependence graph, while for the uniform case we rely on an embedding into some linear space of infinite dimension. Therefore, we split the proofs into two subsections: in Section 5.1.2 we work on the non-uniform case and later, in Section 5.1.3, we develop an algorithm for the uniform case.

5.1.1 Input Encoding

Let us give some details on how to encode the input for the (simple) power word problem in graph products. There are certainly other ways to represent the input for our algorithms without changing the complexity; but whenever the encoding is important, we assume that it is done as described in this section. We will use blocks of equal size to encode the different parts of the input. This makes it possible to do parts of the computation in \(\textsf{uAC} ^0\). We assume that there is a letter \(1 \in \Sigma \) representing the group identity.

The input of the power word problem in a graph product is \(p_1^{x_1}\cdots p_n^{x_n}\) where \(p_i = a_{i,1}\cdots a_{i,m_i} \in \Sigma ^*\) (note that each letter of \(\Gamma \) can be written as a word over \(\Sigma \)). We ensure \(m_i \le n\) for each i, by padding the input word with \(1^1\), increasing the length n. Then, to ensure that each \(p_i\) has length exactly n, we pad with the identity element. Now we can write \(p_i = a_{i,1}\cdots a_{i,n}\) with \(a_{i,j}\in \Sigma \).

We encode each letter \(a \in \Sigma \) as a tuple \((\zeta , a)\) where \(\zeta = {{\,\textrm{alph}\,}}(a)\). In the non-uniform case, there is a constant k, such that k bits are sufficient to encode any element of \(\mathcal {L}\) and any letter of any \(\Sigma _\zeta \) for any \(\zeta \in \mathcal {L}\). In the uniform case we encode the elements of \(\mathcal {L}\) as well as the elements of each \(\Sigma _\zeta \) using n bits. The encoding of a word \(p_i\) is illustrated by the following figure.

Encoding a word \(p_i\) requires 2nk bits in the non-uniform case and \(2n^2\) bits in the uniform case. For the simple power word problem we impose the restriction \({{\,\textrm{alph}\,}}(a_{i,j}) = {{\,\textrm{alph}\,}}(a_{i,j'})\) for all \(i,j ,j' \in \{1, \dots , n\}\), as mixed powers are not allowed.

We combine the above encoding for the words \(p_i\) with a binary encoding for the exponents \(x_i\) to obtain the encoding of a power word. Each exponent is encoded using n bits. Note that we can do this because, if an exponent is smaller, we can pad it with zeroes and, if an exponent is larger, we can choose a larger n and pad the input word with the identity element 1. This leads us to the following encoding of a power word, which in the non-uniform case uses \((2k+1)n^2\) bits.

In the uniform case, this encoding requires \((2n+1)n^2\) bits. Furthermore, we also need to encode the descriptions of the base groups and the independence graph. By padding the input appropriately, we may assume that there are n base groups and that each can be encoded using n bits. The independence graph can be given as adjacency matrix, using \(n^2\) bits.
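The bit count for the non-uniform case can be checked with a small sketch of the padding scheme. Several details below are assumptions made for illustration and are not fixed by the text: the order in which the blocks are concatenated (each word followed by its exponent), the code `(0, 0)` for the neutral letter, the restriction to non-negative exponents, and the function name `encode_power_word`.

```python
IDENTITY = (0, 0)  # assumed code (zeta, a) of the neutral letter


def encode_power_word(factors, k):
    """Encode a power word as a bit string made of blocks of equal size.

    factors: list of (letters, exponent); letters is a list of pairs (zeta, a)
    of integers in range(2**k), exponent is a non-negative integer.
    Returns the bit string together with the common block parameter n.
    """
    n = max([len(factors), 1]
            + [len(p) for p, _ in factors]
            + [x.bit_length() for _, x in factors])
    # Pad with factors 1^1 until there are exactly n factors.
    factors = factors + [([IDENTITY], 1)] * (n - len(factors))
    blocks = []
    for letters, x in factors:
        # Pad every word with the neutral letter to length exactly n.
        letters = letters + [IDENTITY] * (n - len(letters))
        for zeta, a in letters:
            blocks.append(format(zeta, f"0{k}b") + format(a, f"0{k}b"))
        # Pad every exponent with leading zeroes to exactly n bits.
        blocks.append(format(x, f"0{n}b"))
    return "".join(blocks), n
```

Each of the n factors contributes \(2nk\) bits for its word and n bits for its exponent, which gives the total of \((2k+1)n^2\) bits stated above.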

5.1.2 The Non-uniform Case

Before solving the simple power word problem in G we prove several lemmata to help us achieve this goal. Lemma 19 below is due to Kausch [33]. For this lemma, we first have to introduce some notation and the notion of a semidirect product: Take two groups H and N with a left action of H on N (a mapping \((h, g) \mapsto h \circ g\) for \(h \in H\), \(g \in N\) such that \(1 \circ g = g\), \((h_1 h_2) \circ g = h_1 \circ (h_2 \circ g)\) and for each \(h \in H\) the map \(g \mapsto h \circ g\) is an automorphism of N). The corresponding semidirect product \(N \rtimes H\) is a group with underlying set \(N \times H\) and the multiplication is defined by \((n_1, h_1) (n_2, h_2) = (n_1 (h_1 \circ n_2), h_1 h_2)\).
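As a minimal sketch of this definition, the multiplication of a semidirect product can be built from the two group operations and the action. The helper name `semidirect_mul` and the example \(\mathbb {Z} \rtimes \mathbb {Z}/2\), where the non-trivial element of \(\mathbb {Z}/2\) acts on \(\mathbb {Z}\) by negation, are assumptions chosen for illustration.

```python
def semidirect_mul(mul_n, mul_h, act):
    """Return the multiplication of N x| H on pairs (n, h).

    mul_n, mul_h: the multiplications of N and H.
    act(h, n): the left action of h on n, an automorphism of N for each h.
    """
    def mul(x, y):
        (n1, h1), (n2, h2) = x, y
        return (mul_n(n1, act(h1, n2)), mul_h(h1, h2))
    return mul


# Example: the integers extended by Z/2, which acts by negation.
mul = semidirect_mul(
    lambda a, b: a + b,            # N = (Z, +)
    lambda a, b: (a + b) % 2,      # H = Z/2
    lambda h, n: -n if h else n,   # action of H on N
)
```

In this example the pair `(0, 0)` is the identity, and the resulting group is the infinite dihedral group.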

If B is a group and u an arbitrary object, we write \(B_u = \{ (g,u) \mid g \in B \}\) for an isomorphic copy of B with multiplication \((g,u) (g',u) = (gg',u)\). In the following let B be finitely generated. We begin by looking at the free product \(G \simeq *_{k \in \mathbb {N}} B_k\) of countably many copies of B. Kausch [33, Lemma 5.4.5] has shown that the word problem for G can be solved in \(\textsf{uAC} ^0\) with oracle gates for \(\text {WP}(B)\) and \(\text {WP}(F_2)\). We show a similar result for the simple power word problem. Our proof is mostly identical to the one presented in [33], with only a few changes to account for the different encoding of the input. We use the following lemma on the algebraic structure of G.

Lemma 19