Abstract

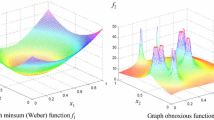

The aim of this work is to locate a semi-obnoxious facility, i.e. to minimize the distances to a given set of customers in order to save transportation costs on the one hand and to avoid undesirable interactions with other facilities within the region by maximizing the distances to the corresponding facilities on the other hand. Hence, the goal is to satisfy economic and environmental issues simultaneously. Due to the contradicting character of these goals, we obtain a non-convex objective function. We assume that distances can be measured by rectilinear distances and exploit the structure of this norm to obtain a very efficient dual pair of algorithms.

Similar content being viewed by others

1 Introduction

This paper deals with the problem of locating a semi-obnoxious facility such as an industrial plant, where the goal is to minimize travel distances to customers and suppliers and to maximize distances to nature reserves and residential areas. First attempts to solve location problems with undesirable facilities appeared in the 1970’s (Church and Garfinkel 1978; Dasarathy and White 1980; Goldman and Dearing 1975). Since then, researchers have focussed on many different variants of the problem, like different spaces (e.g. networks, discrete settings, \({\mathbb {R}}^2\) or \({\mathbb {R}}^n\)), different distance functions (e.g. Euclidean distance, Manhatten norm, maximum norm, polyhedral gauges), different objective functions (e.g. bi-objective models, dc formulations) and many more. For surveys and summaries on location problems with undesirable facilities the reader is referred for instance to Cappanera (1999), Carrizosa and Plastria (1999), Eiselt and Laporte (1995), Plastria (1996), Wagner (2015).

The case of Manhattan distances in the Euclidean space \({\mathbb {R}}^2\) using a single-objective model is considered for instance in Drezner and Wesolowsky (1991), Nickel and Dudenhöffer (1997). Compared to them, this paper provides an alternative approach (in \({\mathbb {R}}^n\)) which allows to obtain a dual pair of algorithms and a variant of the primal one that all mainly consist of a sorting process followed by a very efficient procedure to evaluate the objective at all candidate coordinates.

In Wagner et al. (2016) the problem is considered for the general case of mixed gauge distances. A dual pair of algorithms to find exact solutions is developed, based on the following discretization result: Both, the primal and the dual problem, provide grids w.r.t. attraction and w.r.t. repulsion, such that the primal grid points w.r.t. attraction provide a finite set of candidates for optimal primal solutions and the dual grid points w.r.t. repulsion provide a finite set of candidates for optimal dual solutions. In case of mixed gauge distances, those grids may be very complex and for determining its grid points it is suggested to apply a generalized version of Benson’s algorithm (Benson 1998; Löhne and Weißing 2015, 2016b).

In Löhne and Wagner (2017) a more general setting is considered. The goal is to minimize the difference of two convex functions where at least one of them is polyhedral convex, i.e. its epigraph is a polyhedral convex set. A dual pair of algorithms is presented, in which the vertices of the epigraphs are determined by solving a polyhedral projection problem, e.g. with a vlp solver (Löhne and Weißing 2016a). The projections of these vertices onto \({\mathbb {R}}^n\) provide finite sets of candidates for optimal solutions.

It turns out that the finite sets of candidates for optimal solutions in Löhne and Wagner (2017) and in Wagner et al. (2016) can efficiently be found by using for instance the implementation Bensolve (Löhne and Weißing 2015).

In case of Manhattan distances the grids have an axes-parallel structure and hence there is no further need for special solvers for multi-objective linear programs or polyhedral projection problems. We exploit the structure of the Manhattan norm in order to obtain more efficient variants of the algorithms presented in Löhne and Wagner (2017) and Wagner et al. (2016).

This paper is organized as follows: In Sect. 2 we introduce the mathematical formulation of the considered optimization problem and review the main results and algorithms presented in Löhne and Wagner (2017) and Wagner et al. (2016) which this paper is based on. In Sect. 3 we derive a method for determining the finite set of grid points, which are candidates for optimal solutions. Furthermore, in Sect. 4, we derive recursive methods for calculating the primal and dual objective values with help of the special structure given by the norm. The resulting algorithms are presented in Sect. 5. In Sect. 6 we provide computational results and compare different solving procedures. We close with a conclusion in Sect. 7.

2 Problem formulation and preliminary results

In this section we provide relevant terms, properties and problem formulations. For more detailed information the reader is referred to Löhne and Wagner (2017) and Wagner et al. (2016).

Let \(x,a\in {\mathbb {R}}^n\). Then the Manhattan distance between x and a is given by \(d_1(x,a)=\sum _{i=1}^n\left| x_i-a_i\right| \) and the corresponding unit ball is given by \(B=\left\{ x\in {\mathbb {R}}^n|\;\sum _{i=1}^n\left| x_i\right| \right\} =1\).

The optimization problem under consideration is a dc location problem (difference of convex functions) that can be formulated as

with functions \(g,h:\;{\mathbb {R}}^n\rightarrow {\mathbb {R}}_+\) defined as

where the parameters \({\overline{a}}^1,\ldots ,{\overline{a}}^{{\overline{M}}}\in {\mathbb {R}}^n\), \({\overline{M}}\ge 1\), denote the attracting points with weights \({\overline{w}}_1,\ldots ,{\overline{w}}_{{\overline{M}}}>0\), and \({\underline{a}}^1,\ldots ,{\underline{a}}^{{\underline{M}}}\in {\mathbb {R}}^n\), \({\underline{M}}\ge 1\), denote the repulsive points with weights \({\underline{w}}_1,\ldots ,{\underline{w}}_{{\underline{M}}}>0\). Based on Wagner et al. (2016) the Toland-Singer dual problem (Singer 1979; Toland 1978) results as

where the conjugate functions

of g and h, respectively, are obtained with help of basic calculus rules for conjugate functions, see e.g. Rockafellar (1997). For the dual pair of optimization problems (P) and (D) it holds (e.g. Singer 2006):

A direct consequence of Theorem 4.2 in Wagner et al. (2016) is the following:

Corollary 1

(Finiteness Criterion) A finite solution of problem (P) exists and is attained if and only if \(\sum _{m=1}^{{\underline{M}}}{\underline{w}}_m\le \sum _{m=1}^{{\overline{M}}}{\overline{w}}_m\).

According to Wagner et al. (2016) the following relations hold: For all \(y\in {{\,\mathrm{dom}\,}}g^*\) there exists a tuple \(\left( {\overline{y}}^1,\ldots ,{\overline{y}}^{{\overline{M}}}\right) \in {\overline{w}}_1{\overline{B}}_1^*\times \ldots \times {\overline{w}}_{{\overline{M}}}{\overline{B}}_{{\overline{M}}}^*\), such that \(\displaystyle y={\overline{y}}^1+\ldots +{\overline{y}}^{{\overline{M}}}\) and \(\displaystyle \bigcap _{m=1,\ldots ,{\overline{M}}}\left[ {\overline{a}}^m+N_{{\overline{w}}_m{\overline{B}}_m^*}({\overline{y}}^m)\right] \ne \emptyset \). Whenever this intersection in non-empty, it coincides with the subdifferential \(\partial g^*(y)\) and the corresponding tuple \(\left( {\overline{y}}^1,\ldots ,{\overline{y}}^{{\overline{M}}}\right) \) directly provides the objective value \(g^*(y)\), i.e.

Moreover,

Note that \(B^*\) defines the dual unit ball, which in case of Manhattan distances is \(B^*=[-1,1]^n\). The extreme points of these subdifferentials in (3) and (4) define the primal and dual grid points w.r.t. attraction. Analogously, the subdifferentials of h and \(h^*\), define primal and dual grids w.r.t. repulsion. These grids provide finite sets of candidates for optimal solutions, as the following result states.

Theorem 1

(Discretization Result, (Wagner et al. 2016, Theorem 4.11)) Let \(\mathcal {{\overline{I}}}\) denote the set of primal grid points w.r.t. attraction, \(\mathcal {{\underline{I}}}_D\) the set of dual grid points w.r.t. repulsion, and \({\mathcal {X}}\) and \({\mathcal {Y}}\) the sets of minimizers of (P) and (D), respectively. Then, \(\mathcal {{\overline{I}}}\cap {\mathcal {X}}\ne \emptyset \) and \(\mathcal {{\underline{I}}}_D\cap {\mathcal {Y}}\ne \emptyset \).

Due to the special structure of the Manhattan norm we can simplify the algorithms in Löhne and Wagner (2017) and Wagner et al. (2016), which mainly consist of determining all grid points and verifying their optimality. Solving the original non-convex problem (P) is reduced to a sorting process followed by a very efficient procedure to evaluate the objective at all candidate coordinates. No special solvers as used in Löhne and Wagner (2017) and Wagner et al. (2016) are necessary.

The following proposition can be applied to determine primal optimal solutions, when dual optimal points are known and vice verse.

Proposition 1

(Wagner et al. 2016, Remark 3.4) Let \({\mathcal {X}}\) be the set of minimizers of \(g-h\) and \({\mathcal {Y}}\) be the set of minimizers of \(h^*-g^*\). Then

Proposition 2

(Necessary Optimality Conditions, (Horst and Thoai 1999; Tuy 1998)) Let \(g,h: \;{\mathbb {R}}^n\rightarrow {\mathbb {R}}\cup {+\infty }\) be proper, convex and closed functions. If \({\hat{x}}\in {{\,\mathrm{dom}\,}}g\cap {{\,\mathrm{dom}\,}}h \) is a global minimizer of \(g-h\) on \({\mathbb {R}}^n\) then \(\partial h(x)\subseteq \partial g(x)\). Vice verse, if \({\hat{y}}\in {{\,\mathrm{dom}\,}}g^*\cap {{\,\mathrm{dom}\,}}h^* \) is a global minimizer of \(h^*-g^*\) on \({\mathbb {R}}^n\) then \(\partial g^*(x)\subseteq \partial h^*(x)\).

3 Determination of grid points

In this section we present methods for determining the sets of primal and dual grid points. These grid points are determined in the primal and the dual algorithm for solving the optimization problems (P) and (D).

First of all we reorder and consolidate the coordinates of the existing facilities. For \(i=1,\ldots ,n\) we denote by \({\overline{M}}_i\) the number of different values of the i-th coordinates \({\overline{a}}_i^1,\ldots ,{\overline{a}}_i^{{\overline{M}}}\) of all attracting facilities, sort them in ascending order and consolidate the weights of equal coordinates, such that

Analogously, we define

3.1 Determining primal grid points

Primal grid points w.r.t. attraction are given by the subdifferentials of \(g^*\), which have a rectangular axes-parallel shape in case of Manhattan distances. To see that, we consider the n components of the sets separately: Let \(({\overline{y}}^1,\ldots ,{\overline{y}}^{{\overline{M}}})\) be such that \(\bigcap _{m=1}^{{\overline{M}}}\left[ {\overline{a}}^m+N_{{{[-{\overline{w}}_m,{\overline{w}}_m]^n}}}({\overline{y}}^m)\right] \ne \emptyset \). Then, by (3),

Since for \(i=1,\ldots ,n\) and \(m=1,\ldots ,{\overline{M}}_i\) it holds

we directly obtain the extreme points of the subdifferentials and hence the sets \(\mathcal {{\overline{I}}}\) and \(\mathcal {{\underline{I}}}\) of primal grid points w.r.t. attraction and w.r.t. repulsion, respectively, as

3.2 Determination of dual grid points

Dual grid points w.r.t. attraction are given by the subdifferentials of g, which also have a rectangular axes-parallel shape in case of Manhattan distances. To see that, we again consider the n components of the sets separately. By (4) we have

where for \(i=1,2,\ldots ,n\) and \(m=1,\ldots ,{\overline{M}}_i\) it holds

Hence, we directly obtain the extreme points of the subdifferentials of g and thus the sets \(\mathcal {{\overline{I}}}_D\) and \(\mathcal {{\underline{I}}}_D\) of dual grid points w.r.t. attraction and w.r.t. repulsion, respectively, as

where for \(i=1,\ldots ,n\) the coordinates \({\overline{y}}_i^k\) can be determined recursively by

or explicitly by

Analogously, for \(i=1,\ldots ,n\), the coordinates \({\underline{y}}_i^k\) can be determined by

or

Obviously, in case of Manhattan distances the primal problem (P) and the dual problem (D) provide axes-parallel grid structures.

4 Determining objective values

For a more efficient implementation we derive recursive representations for determining the objective values of grid coordinates. In the primal algorithm this will substitute the obvious explicit determination and in the dual case it will even replace to solve a linear program for each grid candidate.

4.1 Determination of primal objective values

To check all primal grid points w.r.t. attraction for optimality we need to determine the differences \(g(x)-h(x)\), for all \(x\in \mathcal {{\overline{I}}}\). Instead of the function g and h we may consider subfunctions \(g_1,\ldots , g_n, h_1,\ldots ,h_n:\;{\mathbb {R}}\rightarrow {\mathbb {R}}_+\) such that

Since the increase of \(g_i\) from a grid coordinate \({\overline{\alpha }}_i^k\) to \({\overline{\alpha }}_i^{k+1}\) can be determined by

we obtain by (14)

and analogously by (16)

Since a grid coordinate \({\overline{\alpha }}_i^m\) w.r.t. attraction does not necessarily need to be a grid coordinate w.r.t. repulsion, we use the piecewise linearity of the subfunctions \(h_i\) to determine the values \(h_i({\overline{\alpha }}_i^m)\). We obtain for \(k=1,\ldots ,{\overline{M}}_i\)

While determining all objective values by applying (18) and (19) has quadratic computational costs, the recursive variant in (20) – (22), involving (14) and (16), has linear costs only. The results of this subsection are applied in Algorithm 1.

4.2 Determination of dual objective values

To check all dual grid points w.r.t. repulsion for optimality we need to determine the differences \(h^*(y)-g^*(y)\), for all \(y\in \mathcal {{\underline{I}}}_D\). In order to avoid solving linear programs as given in (1) and (2), we derive a method for calculating \(g^*(y)\) and \(h^*(y)\) with help of the special structure given by the norm.

Instead of the function \(g^*\) we may consider subfunctions \(g_1^*,\ldots , g_n^*\) which all together add up to \(g^*\), such that

where for \(i=1,\ldots ,n\) the functions \(g_i^*:\;{\mathbb {R}}\rightarrow {\mathbb {R}}\) are defined as

By (3) it follows that

Thus, by (11), for the dual grid components \({\overline{y}}_i^k\) w.r.t. attraction as defined in (14) and (15), we obtain for \(i=1,\ldots ,n\) the values

or explicitly

Analogously, for the dual grid components \({\underline{y}}_i^k\) w.r.t. repulsion as defined in (16) and (17), we obtain for \(i=1,\ldots ,n\) the values

or explicitly

Obviously, the functions \(g_i^*\) and \(h_i^*\) are piecewise linear.

Since a dual grid coordinate \({\underline{y}}_i^k\) w.r.t. repulsion does not necessarily need to be a grid point w.r.t. attraction, we use the piecewise linearity of the subfunctions \(g_i^*\) to determine the value \(g_i^*({\underline{y}}_i^k)\). Assume that a finite solution does exist, i.e. \(\sum _{m=1}^{{\underline{M}}}{\underline{w}}_m\le \sum _{m=1}^{{\overline{M}}}{\overline{w}}_m\). Then \({\overline{y}}_i^0\,\le \,{\underline{y}}_i^k\,\le \,{\overline{y}}_i^{{{\overline{M}}}_i}\) holds for \(i=1,\ldots ,n\). In particular, there exists \(j\in \left\{ 1,\ldots ,{{\overline{M}}}_i\right\} \) such that \({\overline{y}}_i^{j-1}\,\le \,{\underline{y}}_i^k\,\le \,{\overline{y}}_i^j.\) Since \(g_i^*\) is linear in \([{\overline{y}}_i^{j-1},{\overline{y}}_i^j]\) we have

While determining all objective values by applying (23) and the analogous program for \(h_i^*(y_i)\) involves \(2\cdot {\underline{M}}_i\) linear programs for \(i=1,\ldots ,n\), the recursive variant using (24), (25) and (26) has linear costs only.

Moreover, by (11), we obtain the assignment

which is applied in Algorithm 2 to easily deduce primal optimal solutions from dual ones, see Proposition 1. We obtain an analogous assignment between primal and dual elements w.r.t. repulsion.

The results of this subsection are applied in Algorithm 2.

4.3 Alternative variant

In Nickel and Dudenhöffer (1997) the authors provide an algorithm that has a similar solving strategy as Algorithm 1: Based on the piecewise linearity of the objective function (facilities are not distinguished between attractive and repulsive ones), their algorithm checks each grid point for local optimality and evaluates all local minimal points to find a global minimum of the objective. Since not all grid points are evaluated, an explicit determination is applied. The following serves to provide a combination of Algorithm 1 and the algorithm in Nickel and Dudenhöffer (1997).

Let us reformulate Problem (P) as follows:

where \(M:={\overline{M}}+{\underline{M}}\) and

As in (5)–(10) we reorder and consolidate the coordinates of the existing facilities. For \(i=1,\ldots ,n\) we denote by \(M_i\) the number of different values of the i-th coordinates of all facilities \(a_i^1,\ldots ,a_i^{M}\), sort them in ascending order and consolidate the weights of equal coordinates, such that

The following variables correspond to the derivatives of f from the left of all grid points, see Nickel and Dudenhöffer (1997),

Instead of checking for local minimality, and if so, evaluating explicitely, we determine all objective values recursively as we have done in Sect. 4.1 and obtain

The combined results of this subsection are applied in Algorithm 3.

5 Primal and dual algorithm

Based on the derived results, we can formulate the simplified algorithms for locating a semi-desirable facility in the special case of Manhattan distances.

Assume that the finiteness criterion is satisfied, i.e. \(\sum _{m=1}^{{\overline{M}}}{\overline{w}}_m\ge \sum _{m=1}^{{\underline{M}}}{\underline{w}}_m\), see Corollary 1. Then, the algorithms can be formulated as follows:

Algorithm 1

(Primal Algorithm)

Algorithm 2

(Dual Algorithm)

Remark 1

In the primal algorithm, we need to evaluate all grid coordinates w.r.t. attraction. Depending on the input data, we do not necessarily need to determine all grid coordinates w.r.t. repulsion. In fact, due to (22), we need only the coordinates

Thus, instead of pre-calculating all dual grid coordinates, we only determine those, that are really necessary and include this into Step (ii). Analogously, in the dual algorithm, we only need to determine the primal grid points w.r.t. attraction

and include this into Step (iii).

Remark 2

Whether or not the complete interval between two adjacent optimal grid coordinates \({\overline{\alpha }}_i^k\) and \({\overline{\alpha }}_i^{k+1}\) belongs to the set of optimal points can be decided by applying Proposition 2 and the piecewise linearity of the functions g and h. Due to this result, the interval is optimal, whenever there exists \(q\in \left\{ 1,\ldots ,{\underline{M}}_i\right\} \) such that \([{\overline{\alpha }}_i^k,\;{\overline{\alpha }}_i^{k+1}]\subseteq [{\underline{\alpha }}_i^q,\;{\underline{\alpha }}_i^{q+1}]\). This property may also be used for implementing the algorithms in order to reduce the number of grid coordinates to be checked.

Algorithm 3

(Variant of the Primal Algorithm)

6 Computational results

We solve several randomly generated instances of Problem (P) with up to 2.000.000 facilities, where different ratios of the numbers \({\overline{M}}\) and \({\underline{M}}\) of attracting and repulsive facilities, respectively, are considered. Tables 1, 2 and 3 state the computational results of Algorithms 1, 2 and 3, respectively. We furthermore compare our results with the algorithm presented in Nickel and Dudenhöffer (1997), see Tables 4 and 5. All tables are based on the same generated input data. The locations of all facilities are sampled from the continuous uniform distribution over the interval \((-0.5, 0.5)\). The number of digits is limited to \(\lceil {lg ({\overline{M}}+{\underline{M}})\rceil }\) to make repeating entries possible but not dominant. The weights of all facilities are uniformly generated values over the interval (0, 1). The generated weights \({\underline{w}}_1,\ldots ,{\underline{w}}_{{\underline{M}}}\) are scaled such that the sum over all weights equals 1.0.

All algorithms were implemented in MATLAB R2017a. All examples were run on a computer with Intel® Core™ i5–6300U CPU with 2.40GHz.

The computational effort of the Algorithms 1, 2, 3 and the algorithm in Nickel and Dudenhöffer (1997) consists of two main parts: first, sorting coordinates and consolidating weights, and second, determining objective values. The derived recursive structure for evaluating all objectives in Algorithms 1, 2 and 3 induces linear computational costs \({\mathcal {O}}(M)\). Depending on the input data each of the three algorithms might perform slightly better than the other two. For instance, generating data as described above provides a small advantage of Algorithm 3.

Conversely, the algorithm in Nickel and Dudenhöffer (1997) applies an explicit determination of the objective values at all local minima. Thus, the computational performance of this algorithm also depends on the number of local minima. The resulting running times and the corresponding amounts of local minimal points are illustrated in Tables 4 and 5.

Although the asymptotical computational complexity of the entire algorithms is driven by the \({\mathcal {O}}(M\log M)\) sorting part, the computational experiments show that the second part of evaluating all candidates (either all grid coordinates in Algorithms 1, 2 and 3 or all local minima as in Nickel and Dudenhöffer (1997)) can become a crucial part of the running time.

The algorithms in Löhne and Wagner (2017) and Wagner et al. (2016) find all grid points, whose number is up to \({\overline{M}}^n\) or \({\underline{M}}^n\) in the primal and the dual case, respectively. Compared to this, the Algorithms 1 and 2 determine all appearing grid coordinates whose number is at most \({\overline{M}}\cdot n\) or \({\underline{M}}\cdot n\), respectively. Thus, the number of candidates to be checked for optimality is much smaller. Additionally, for each candidate the determination of the objective value has less computational effort due to the used method (recursive determination vs. explicit determination or linear programs). Furthermore, the effort to find the candidate set by solving projection problems as described in Löhne and Wagner (2017) and Wagner et al. (2016) increases significantly when the dimension n increases. Compared to this, the separation of the problem into n subproblems is much more efficient, such that the computational effort increases linearly with the dimension n.

7 Conclusion

It turns out that exploiting the special structure of Manhattan distances instead of applying methods for mixed gauge distances, as in Wagner et al. (2016), or even more general dc structures, as in Löhne and Wagner (2017), leads to an improvement of the computational effort in the sense that the given non-convex optimization problem (P) can be solved by using a primal or a dual algorithm, both of which consist of a sorting process followed by a very efficient procedure to evaluate the objective at all candidate coordinates. No special solvers for polyhedral projection problems, vector linear programs, linear or convex problems are needed. Such a significant simplification of the solving procedures makes it worth to handle this special case of Manhattan distances separately.

References

Benson H (1998) An outer approximation algorithm for generating all efficient extreme points in the outcome set of a multiple objective linear programming problem. J Global Optim 13:1–24

Cappanera P (1999) A survey on obnoxious facility location problems. Technical report. University of Pisa, Pisa

Carrizosa E, Plastria F (1999) Location of semi-obnoxious facilities. Stud Locat Anal 12:1–27

Church RL, Garfinkel RS (1978) Locating an obnoxious facility on a network. Transp Sci 12(2):107–118

Dasarathy B, White LJ (1980) A maxmin location problem. Oper Res 28(6):1385–1401

Drezner Z, Wesolowsky GO (1991) The Weber problem on the plane with some negative weights. INFOR 29(2):87–99

Eiselt HA, Laporte G (1995) Objectives in location problems. In: Drezner Z (ed) Facility location, a survey of applications and methods. Springer series in operations research. Springer, Berlin

Goldman AJ, Dearing PM (1975) Concepts of optimal location for partially noxious facilities. Bull Oper Res Soc Am 23(1):B-31

Horst R, Thoai NV (1999) DC programming: overview. J Optim Theory Appl 103(1):1–43

Löhne A, Wagner A (2017) Solving dc programs with a polyhedral component utilizing a multiple objective linear programming solver. J Global Optim 69(2):369–385

Löhne A, Weißing B: Bensolve-VLP solver, version 2.0.1. www.bensolve.org

Löhne A, Weißing B (2016) Equivalence between polyhedral projection, multiple objective linear programming and vector linear programming. Math Methods Oper Res 84(2):411–426

Löhne A, Weißing B (2016) The vector linear program solver Bensolve: notes on theoretical background. Eur J Oper Res 260(3):807–813

Nickel S, Dudenhöffer EM (1997) Weber’s problem with attraction and repulsion under polyhedral gauges. J Global Optim 11(4):409–432

Plastria F (1996) Optimal location of undesirable facilities: a selective overview. Belg J Oper Res Stat Comput Sci 36(2–3):109–127

Rockafellar RT (1997) Convex analysis. Princeton landmarks in mathematics. Princeton University Press, Princeton (Reprint of the 1970 original, Princeton Paperbacks)

Singer I (1979) A Fenchel–Rockafellar type duality theorem for maximization. Bull Aust Math Soc 20(2):193–198

Singer I (2006) Duality for nonconvex approximation and optimization. CMS books in mathematics/Ouvrages de Mathématiques de la SMC, vol 24. Springer, New York

Toland JF (1978) Duality in nonconvex optimization. J Math Anal Appl 66(2):399–415

Tuy H (1998) Convex analysis and global optimization, nonconvex optimization and its applications, vol 22. Kluwer Academic Publishers, Dordrecht

Wagner A (2015) A new duality-based approach for the problem of locating a semi-obnoxious facility. Ph.D. thesis, Martin-Luther-University, Halle-Saale, Germany

Wagner A, Martinez-Legaz JE, Tammer C (2016) Locating a semi-obnoxious facility: a Toland–Singer duality based approach. J Convex Anal 23(4):1073

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Wagner, A. Locating a semi-obnoxious facility in the special case of Manhattan distances. Math Meth Oper Res 90, 255–270 (2019). https://doi.org/10.1007/s00186-019-00671-z

Received:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00186-019-00671-z