Abstract

We construct long-term prediction intervals for time-aggregated future values of univariate economic time series. We propose computational adjustments of the existing methods to improve coverage probability under a small sample constraint. A pseudo-out-of-sample evaluation shows that our methods perform at least as well as selected alternative methods based on model-implied Bayesian approaches and bootstrapping. Our most successful method yields prediction intervals for eight macroeconomic indicators over a horizon spanning several decades.

Similar content being viewed by others

1 Introduction

Long-term predictions of economic time series are published yearly by the US Congressional Budget Office (CBO) in its Long-term Budget and Economic Outlook.Footnote 1 In this report, the CBO predicts US federal spending and revenue growth in the coming decades under the assumption of stable tax and spending policies. However, structural changes occur over the long run (taking the turbulent period after the Great Moderation as an example), and not only as a result of changes in legislation. The CBO stated in its January 2000 Budget and Economic Outlook that the baseline projections allow for an average recession within the next 10 years (2000–2010). Today, we know that the 2008 recession was more severe than the predicted average recession. Moreover, in its 2011 reportFootnote 2 the US Financial Crisis Inquiry Commission concluded that the crisis would have been avoidable if timely preventive measures had been introduced. We do not link the absence of these measures with the CBO’s projections from 18 years ago, but we do believe that accurate long-term economic predictions can trigger right and timely decisions. Economic predictions for several decades ahead are crucial for strategic decisions made by trust funds, pension management and insurance companies, portfolio management of specific derivatives (Kitsul and Wright 2013) and assets (see Bansal et al. 2016). Several facts hamper the long-term prediction of economic time series: small sample size because most post-WWII economic indicators are reported on monthly/quarterly bases, (anti-) persistenceFootnote 3 (see Diebold and Rudebusch 1989; Baillie 1996; Diebold and Linder 1996, who also give PIs), heteroscedasticity and structural change (Cheng et al. 2016), the latter of which is inevitable in the long run (Stock and Watson 2005).

Sometimes, decision makers call for predictions of boundaries [L, U] covering the future value of interest with a certain probability. Unlike point forecasts, prediction intervals (PIs) can capture the uncertainty surrounding predictions. As a proxy for this uncertainty, one can look at the widths of different PIs. Most software packages offer PIs as part of their standard output. PIs from exponential smoothing, for instance, are readily available without any strict assumptions, but then as the forecast horizon grows, these PIs often become too wide to be informative (see Chatfield 1993, for more background). By contrast, PIs implied by arma–garch models often turn out to be too narrow because they ignore distributional, parameter and model uncertainty (see Pastor and Stambaugh 2012). Pascual et al. (2004, 2006) therefore compute predictive densities using bootstrapping without the usual distributional assumptions while incorporating parameter uncertainty. Using Bayesian methods, one can account for both model and parameter uncertainty, but the pre-assigned coverage of PIs is attained only on average relative to the specified prior. Müller and Watson (2016) construct bayes PIs for temporal averages of economic series’ growth rates over a horizon of 10–75 years. Using the so-called least favorable distribution solves the problem above with the pre-assigned coverage and makes their PIs more conservative. Zhou et al. (2010) provide theoretically valid long-term PIs for the same type of target as Müller and Watson (2016), i.e., the temporal aggregate of series over a long horizon. As opposed to Müller and Watson (2016), Zhou et al. (2010) do not require any model fitting (at least in our univariate setup) and thus provide a very simple alternative. While both papers allow for the presence of a long-memory component in the data generating process (DGP), Zhou et al. (2010) did not apply their methods to economic time series nor has either of the papers compared themselves with any benchmark. These facts pave the way for the following empirical research:

First, since Zhou et al. (2010) evaluate their PIs using only simulated data, we find it necessary to verify their results using real data.

Second, the methods of Zhou et al. (2010), although theoretically valid, do not to account for some characteristics of economic time series. Therefore, we propose computational adjustments of the PIs of Zhou et al. (2010) that lead to better predictive performance for small samples and long horizons. Our adjustments employ a stationary bootstrap (Politis and Romano 1994) and kernel quantile estimators (Sheather and Marron 1990).

Third, since neither Zhou et al. (2010) nor Müller and Watson (2016) compare their PIs to any benchmark, we take over this responsibility and conduct an extensive pseudo-out-of-sample (POOS) comparison. We augment the comparison with PIs implied by arfima–garch models computed as one of the following: (i) forecasts for time-aggregated series or (ii) time-aggregated forecasts of disaggregated series. To compute (i) and (ii) we use both analytic formulas and bootstrap path simulations (Pascual et al. 2006).

The main results of our paper may be summarized as follows:

First, our simulation study reveals that the PIs of Zhou et al. (2010) fail to achieve their nominal coverage rate under a growing horizon as a result of rapidly shrinking width. Particularly under long-memory DGP, the coverage rate reaches only half of the nominal level.

Second, using the proposed computational adjustments, we achieved an improvement in the coverage rate of 20pp, which may, however, still be below the nominal level.

Third, based on real data (S&P 500 returns and US 3-month TB interest rates), the adjusted PIs of Zhou et al. (2010) provide a valid competitor for Müller and Watson (2016). Particularly in case of asset returns, the PIs of Müller and Watson (2016) provide higher coverage but less precision (larger width), while for assets’ volatility, the roles are switched. In both cases, adjusted Zhou et al. (2010) PIs outperform the bootstrap PIs of Pascual et al. (2006).

Fourth, with the adjusted method of Zhou et al. (2010), we construct long-term prediction intervals for selected US macroeconomic time series including GDP growth, total factor productivity, inflation, population, and others. These PIs provide an alternative for PIs given by Müller and Watson (2016) in Table 5 on pages 1731–1732 in the referenced paper.

Our article is organized as follows: In Sect. 2 we review the methods discussed above with a focus on their scope and implementation. We further describe our computational adjustments of both methods of Zhou et al. (2010) and justify them using simulations. Section 3 summarizes the empirical comparison of all previously discussed methods. Section 4 provides PIs for eight macro-indicators over the horizon of up to seven decades from now. Section 5 contains concluding remarks. Plots and details concerning implementation and underlying theory are available in “Appendix.”

2 Methods and simulations

In this section, we first briefly discuss the three selected approaches for computing of PIs followed by their merits and demerits. Then we propose computational adjustments of the methods proposed by Zhou et al. (2010). Next, our simulations show that these adjustments improve the coverage when the horizon m is large compared to the sample size T, for example when \(m= T/2\). In the following, assume that we observe \(y_1,\ldots ,y_T\) and we want to provide a PI for the temporal average \((y_{T+1}+\cdots +y_{T+m})/m\). For the rest of the paper, we use the following notation (and analogous for the process of innovations \(e_t\))

2.1 Methods for computing prediction intervals of temporal averages

2.1.1 Bootstrap prediction intervals by Pascual et al. (2004, 2006)

For a specific description of their approach, let us assume a weakly stationary arma(1,1)–garch(1,1) process of the form

In order to obtain PIs for \(y_{T+m}\), one typically uses the estimated MSE predictors of \(y_{T+m}\) and \(\sigma _{T+m}\) given the past observations

The resulting analytic (anlt) PIs have the form

where \(\hat{\varPsi }_0=1\) and \(\hat{\varPsi }_j\), \(j=1,\ldots ,m-1\) are the estimates of coefficients from the causal representation of \(y_t\). \(Q^N\) denotes normal quantile. Besides the fact that these PIs ignore the parameter uncertainty, they would be inappropriate for heavy-tailed processes or when the innovations distribution is asymmetric. In order to deal with these issues, Pascual et al. (2004) introduce a re-sampling strategy using the estimated innovations in order to simulate \(y_{t}, t=T+1,\ldots ,T+m\), and then compute the conditional distribution of \(y_{T+m}\) directly, avoiding strict distributional assumptions on the innovations. Their approach does not need a backward representation and thus captures garch-type processes. They also show the validity of their PIs for arima processes. However, we are not aware of any extension of these results for arfima. Computationally, it is simple to obtain both the analytic and bootstrap PIs implied by arfima models, since all necessary ingredients are readily available in rugarch R-package (see Ghalanos 2017) which efficiently implements the bootstrap PIs of Pascual et al. (2004, 2006). While the re-sampling can also incorporate parameter uncertainty, Pascual et al. (2006) show that for the garch models, coverage of PIs is similar whether one accounts for parameter uncertainty or not. The superiority of bootstrap PIs over the analytic PIs (2.5) prevails especially when the innovations distribution is asymmetric.

In order to obtain PIs for \(\bar{y}_{+1:m}\) with arfima–garch-type models, we can either (i) use averages of the in-sample observations \(\bar{y}_{t(m)}\), as defined above, or (ii) average the forecasts of \(y_t\), over \(t=T+1,\ldots ,T+m\). In both cases, we fit arfima(p, d, q)–garch(P, Q) models to the data with the rugarch R-package. As already mentioned, the full re-sampling scheme takes into account the parameter uncertainty, however, for minor improvement of performance and high cost concerning computation time. Therefore, we use a partial re-sampling scheme which accounts for the uncertainty due to the unknown distribution of innovations. The fractional parameter \(d\in \left[ 0,0.5\right) \) is, depending on the series, either fixed to 0 (only for stock returns, see Sect. 3) or estimated by maximum likelihood (ML). The arma orders are restricted to \(p,q\in \left\{ 1,\ldots ,4\right\} \) and are selected by aic. The garch orders are restricted to \((P,Q)\in \left\{ (0,0), (1,1)\right\} \). The details of our implementation follow:

Fitting arfima–garch to averaged in-sample observations (avg-series):

-

1.

Compute the series of overlapping rolling averages \(\bar{y}_{t(m)}=m^{-1}\sum _{i=1}^{m} y_{t-i+1}\), for \(t=m,\ldots ,T\).

-

2.

Fit the selected arfima–garch model to the series of \(\bar{y}_{t(m)}\).

-

3.

Compute

- (anlt)

m-step-ahead MSE forecasts \(\hat{\bar{y}}_{T,+1:m}\) and \(\hat{\bar{\sigma }}^2_{T,+1:m}\) analogously to (2.3) by substituting the observations \(y_t\) by rolling averages \(\bar{y}_{t(m)}\). Then, PIs are given by (2.5).Footnote 4

- (boot)

residuals \(\hat{e}_t, t=1,\ldots ,T\), and generate \(b=1,\ldots ,B\) future paths \(\hat{\bar{y}}^b_{T(m),t}, t=T+1,\ldots ,T+m\), recursively using (2.5) and the parameter estimates from the original sample. Obtain the PIs by inverting the empirical distribution of \(\hat{\bar{y}}^b_{T(m),T+m}, b=1,\ldots ,B\).

- (anlt)

Fitting arfima–garch to original series and averaging forecasts (avg-forecasts):

-

1.

Fit the selected arfima–garch model to the series \(y_t\).

-

2.

Compute

- (anlt)

\(\hat{\bar{y}}_{T,+1:m}=m^{-1}\sum _{i=1}^m\hat{y}_{T,T+i}\), with \(\hat{y}_{T,T+i}\) the i-step-ahead analytic forecast from (2.3). The scaling factor in PI \([L,U]=\hat{\bar{y}}_{T,+1:m}+[ Q_t(\alpha /2), Q_t(1-\alpha /2)]\hat{\text {as}}_{T,+1:m}\) is derived in “Appendix C.”

- (boot)

residuals \(\hat{e}_t, t=1,\ldots ,T\) and generate \(b=1,\ldots ,B\) future paths \(\hat{y}^b_{T,t}, t=T+1,\ldots ,T+m\), recursively using (2.5) and the parameter estimates from the original sample. Compute the temporal averages \(\hat{\bar{y}}^b_{T,+1:m}\), as estimators of \(\bar{y}^b_{T,+1:m}\), \(b=1,\ldots ,B\). We obtain the PIs by inverting the empirical distribution of \(\hat{\bar{y}}^b_{T,+1:m}\), \(b=1,\ldots ,B\).

- (anlt)

2.1.2 Robust bayes prediction intervals by Müller and Watson (2016)

With both the sample size and horizon growing proportionally, Müller and Watson (2016) provide asymptotically valid long-term PIs under a rich class of models for series with long memory under a unified spectral representation. In order to capture a larger scope of long-run dynamics in economic time series beyond those described by arfima models, Müller and Watson (2016) consider two additional models, namely (i) the local-level model

and (ii) the local to unity ar(1) model defined by:

The former captures varying “local means” arising, e.g., from stochastic breaks, while the latter is useful for modeling highly persistent series. In (i) the role of the persistent component is determined by the parameter b, while in (ii) it is driven by c. The arfima models with fractional integration parameter d complete the triple of models in Müller and Watson (2016) who design a unified spectral representation of their long-run dynamics using the parametrization \(\vartheta =(b,c,d)\).

A natural way of how to incorporate the uncertainty about \(\vartheta \), which is crucial for the asymptotic predictive distribution of \(\bar{y}_{+1:m}\), is to assume a prior for \(\vartheta \). A practical drawback of such an approach is that the pre-assigned coverage holds only on average relative to the prior. Hence, Müller and Watson (2016) further robustify their bayes PIs in order to attain “frequentist coverage,” i.e., coverage that holds over the whole parameter space.

The main idea behind their approach is to extract the long-run information from selected low-frequency projections of \(y_t,t=1,\ldots ,T\). Assume that the set of predictors for \(\bar{y}_{+1:m}\) consists of q low-frequency cosine transformations \(X=(X_1,\ldots ,X_q)^{^{{\mathsf {T}}}}\) of \(y_t\). Then the asymptotic conditional density of \(\bar{y}_{+1:m}\) is a function of the covariance matrix of \((X_1,\ldots ,X_q,\bar{y}_{+1:m})\) denoted as \(\varSigma \), which in turn can be expressed as a function of properly scaled spectra \(S(m/T,q,\vartheta )\). When the number of frequencies q is kept small, the high-frequency noise is filtered out, thus providing more robustness. For fixed \(\vartheta =(0,0,0)\) the conditional distribution of \(\bar{y}_{+1:m}\) turns out to be Student’s t with q degrees of freedom and the PIs take the form

These (naive) PIs implied by fixed \(\vartheta =(0,0,0)\) can be enhanced by imposing a uniform prior on \(\vartheta \), giving equal weight to all combinations of parameters \(-0.4\le d\le 1\), \(b,c\ge 0\), and using standard Bayesian procedure to obtain posterior predictive density, which is no longer a simple t-distribution but rather a mixture of different t-distributions. We denote the implied PIs as (bayes) PIs. Finally, the (robust) PIs additionally guarantee the correct coverage uniformly across the parameter space \(\varTheta \) and simultaneously have optimal (mean) width. We conclude by giving our implementation steps for the (bayes) PIs, leaving the additional steps necessary for computing the (robust) PIs to our “Appendix B.”

Bayes PIs (bayes):

-

1.

Set q small and compute the cosine transformations \(X=(X_1, ,X_q)\) of the target series \(y_t\). Standardize them as \(Z=(Z_1,\cdots ,,Z_q)^{^{{\mathsf {T}}}} =X/\sqrt{X^{^{{\mathsf {T}}}}X}\).

-

2.

For a chosen grid of parameter values \(\vartheta =(b,c,d)\) satisfying \(-0.4\le d\le 1\); \(b,c\ge 0\) compute the matrices \(\varSigma (m/T,q,\vartheta )\) following formulas (9) and (20) from Müller and Watson (2016) and using, e.g., a numerical integration algorithm. (Details are given in “Appendix” of the original paper.)

-

3.

Choose a prior for \(\vartheta =(b,c,d)\) and compute the posterior covariance matrix \(\varSigma =\bigl ({\begin{matrix}\varSigma _{ZZ} &{} \varSigma _{Z\bar{e}}\\ \varSigma _{Z\bar{e}} &{} \varSigma _{\bar{e}\bar{e}}\end{matrix}}\bigr )\).

-

4.

Obtain the covariance matrix of the residuals as \(\varSigma _{UU}=\varSigma _{\bar{e}\bar{e}}-\varSigma _{Z\bar{e}}^{'}(\varSigma _{ZZ}^{-1})\varSigma _{Z\bar{e}}\).

-

5.

Compute the quantiles \(Q_q^{\text {tmix}}(\alpha /2),Q_q^{\text {tmix}}(1-\alpha /2)\) of the conditional (mixture-t) distribution of \(\bar{y}_{+1:m}\) using, e.g., sequential bisection approximation. (Details are given in “Appendix” of the original paper.)

-

6.

The PIs are given by \([L,U]=\bar{y}+[Q_q^{\text {tmix}}(\alpha /2),Q_q^{\text {tmix}}(1-\alpha /2)]\sqrt{X^{^{{\mathsf {T}}}}X}\).

2.1.3 Prediction intervals by Zhou et al. (2010)

For presentational clarity of their approach, assume

where \(e_t\) is a mean-zero stationary process and \(\mu \) is the unknown deterministic mean. The PI for \(y_t\) process will be constructed via that of the \(\hat{e}_t=y_t-\hat{\mu }\) process by adding the \(\hat{\mu }=\bar{y}\) to both components of the intervals. It is common practice and can also be proved to have the correct coverage using standard arguments. We first provide a summary of the two methods proposed in Zhou et al. (2010) and then we discuss their consistency.

CLT method (clt): If the process \(e_t\) shows short-range dependence and light-tailed behavior, then in the light of a quenched CLT, Zhou et al. (2010) propose the following PI for \(\bar{e}_{+1:m}\)

$$\begin{aligned}{}[L,U]=[Q^N(\alpha /2),Q^N(1-\alpha /2)]\frac{\sigma }{\sqrt{m}}, \end{aligned}$$(2.10)where \(\sigma \) is the long-run standard deviation (sd) of \(e_t\). However, since \(\sigma \) is unknown, it must be estimated. One popular choice is the lag window estimator

$$\begin{aligned} \hat{\sigma }^2=\sum _{k=-k_T}^{k_T}\hat{\gamma }_k=\sum _{k=-k_T}^{k_T}\frac{1}{T}\sum _{t=1}^{T-|k|}(\hat{e}_t-\bar{\hat{e}})(\hat{e}_{t+k}-\bar{\hat{e}}). \end{aligned}$$(2.11)The PI for \(\bar{y}_{+1:m}\) with nominal coverage \(100(1-\alpha )\%\) is given by

$$\begin{aligned}{}[L,U]=\bar{y}+[Q^N(\alpha /2),Q^N(1-\alpha /2)]\frac{\hat{\sigma }}{\sqrt{m}}. \end{aligned}$$(2.12)

Quantile method (qtl): If we allow for heavy tails and long memory, the PI for \(\bar{y}_{+1:m}\) with nominal coverage \(100(1-\alpha )\%\) can be obtained by

where \(\hat{Q}(\cdot )\) is the respective empirical quantile of \(\bar{\hat{e}}_{t(m)}, t=m,\ldots , T\).

The clt method is applicable only for processes with light-tailed behavior and short-range dependence. Let \(S_t=\sum _{1 \le j \le t}e_j\). Under stationarity, the problem of predicting \(\bar{e}_{+1:m}=(S_{T+m}-S_{T})/m\) after observing \(e_1,\ldots ,e_T\) can be analogically thought of as predicting \(S_m/\sqrt{m}\) after observing \(\ldots ,e_{-1},e_0\). Let \(\mathcal {F}_0\) be the \(\sigma \)-field generated by \(\ldots ,e_{-1},e_0\). Assume \({\mathbb {E}}(|e_t|^p)<\infty \) for some \(p>2\). Wu and Woodroofe (2004) proved that, if for some \(q>5/2\),

then we have the a.s. convergence

where \(\varDelta \) denotes the Levy distance, \(m \rightarrow \infty \), and \(\sigma ^2= \lim _{m \rightarrow \infty }\Vert S_m\Vert ^2/m\) is the long-run variance. Verifying (2.14) is elementary for many well-known time series models. We postpone the discussion on the verification of such a result for a linear and nonlinear process for the interested reader to “Appendix D.”

The qtl method is based on the intuitive fact that for the horizon m growing to \(\infty \) and in the light of weak dependence,

if m / T is not too small. Thus, it suffices to estimate the quantiles of \(\bar{e}_{T(m)}=(e_{T+1}+\cdots +e_{T+m})/m\) using, e.g., empirical quantiles of \(\bar{e}_{t(m)}, t=m,\ldots ,T\). The power of this method lies in its applicability to heavy-tailed error process. Zhou et al. (2010) provided a consistency result for this method for the subclass of linear processes (see Theorem 2 in our “Appendix” section D).

2.1.4 Practical comparison of the previously discussed methods

Pros and cons of Pascual et al. (2004, 2006) When comparing the bootstrap PIs to the analytic PIs, the former provide the advantage of including the uncertainty due to the unknown distribution of the residuals and unknown parameters into the uncertainty about the target. In cases when the distribution of the residuals is asymmetric and doubts about the proximity of the estimated model to the true DGP exist, the bootstrap approach dominates the analytic. Furthermore, regarding the implementation, the analytic PIs are more difficult to obtain since we deal with a nonstandard target. By contrast, the bootstrap PIs are readily available in the R-package rugarch. Hence, their estimation is cheap. Concerning the two ways of fitting the models to data, i.e., (i) using the series of rolling temporal averages or (ii) using the original series and averaging the forecasts, there are pros and cons for each approach regarding the implementation and effective use of our relatively small sample. The literature (e.g., page 302 in Lütkepohl 2006; Marcellino 1999) does not provide any conclusion about the superiority of (i) over (ii), or vice versa. Therefore, we include both (i) and (ii) in the POOS comparison in Sect. 3.

Pros and cons of Müller and Watson (2016) Their methods represent the state of the art, being robust against stylized peculiarities of economic time series. Their Bayesian approach accounts for both model and parameter uncertainty, but the focus is only on those parameters ruling the persistence, which is in contrast to the previously discussed bootstrap approach where the focus is on the short-term dynamics. To date, no package implementation has been available, which makes the approach less attractive to practitioners. Moreover, the PIs depend on several forecaster-made choices, such as the number of frequencies q to keep, the grid of values for parameters, the choice of prior. Even with these inputs fixed, the computation takes longer due to multiple advanced numerical approximations required for the (bayes) PIs and further optimization to attain the “frequentist coverage.” PIs for fixed parameters \(q=12\) and \(0.075 \le m /T\le 1.5\) used in their paper (and also in the current paper) are available faster thanks to some pre-computed inputs available from the replication files.Footnote 5

Pros and cons of Zhou et al. (2010) Their methods provide a simple alternative to the previously discussed ones. As to their scope of applicability, the clt method does not require any specific rate of how fast the horizon can grow compared to the sample size. However, the predictive performance heavily depends on the estimator of the long-term volatility \(\sigma \). Furthermore, for some processes with heavy-tailed innovations or long-range dependence, the notion of the long-run variance \(\sigma ^2\) does not exist, and thus this method is not applicable. The attractive feature of the qtl PIs is the simplicity and more general applicability than the clt. Their computation requires almost no optimization (at least in our univariate case) and is straightforward. Pascual et al. (2004, 2006) and Müller and Watson (2016) assume that the DGP of \(y_t\) is (possibly long-memory and heteroscedastic version of) an arma process. Zhou et al. (2010) do not a priori assume any parametrization for the dynamics of \(y_t\), but argue that both qtl and clt PIs are valid for arma processes, whereas only the former should be used for processes with a long memory. The simulations of Zhou et al. (2010) confirm their claims. However, as we demonstrate next, the qtl PIs under-perform when T is small and \(m/T\approx 1/2\). Therefore, we propose some computational adjustment and provide a simulation-based justification of their superiority over the original clt and qtl.

2.2 Zhou et al. (2010) under small sample: adjustment and simulations

2.2.1 Computational adjustments

The simulation setup in Zhou et al. (2010), page 1440, assumes \(T=6000\) and horizon \(m=168\). By contrast, in an economic forecasting setup, one typically has only a few hundred of observations, while our horizon m stays approximately the same. Here we show how one can easily modify the computation of clt and qtl PIs in order to enhance their performance. In particular, for qtl, we use a stationary bootstrap (Politis and Romano 1994) with optimal window width as proposed by Politis and White (2004) and Patton et al. (2009) to obtain a set of replicated series. Next, kernel quantile estimators (see Silverman 1986; Sheather and Marron 1990) are used instead of sample quantiles. In order to improve the clt method, we employ a different estimator (cf. 2.18) of \(\sigma \) than (2.11) and account for the estimation uncertainty. These three modifications are then shown to improve the empirical coverage using simulations.

Stationary bootstrap The procedure starts with the decomposition of the original sample into blocks by choosing the starting point i and the length of block \(L_i\) from a uniform and geometric distribution, respectively, that are independent of the data. For every starting point and length, we re-sample from the blocks of the original series. The resulting blocks are then concatenated. As proposed by Politis and Romano (1994) in their seminal paper, this way of re-sampling retains the weak stationarity and is less sensitive to the choice of block size than moving block bootstrap (Künsch 1989). It also retains the dependence structure asymptotically since every block contains consecutive elements of the original series. The two re-sampling schemes differ in the way how they deal with the end-effects. Under mixing conditions, the consistency of stationary bootstrap for the centered and normalized mean process has been studied in the literature. Gonçalves and de Jong (2003) show that under some mild moment conditions, for some suitable \(c_T \rightarrow 0\),

holds where \(\bar{y}^*\) and \({\mathbb {P}}^*\) refer to the re-sampled mean and the probability measure induced by the bootstrap. We conjecture that, along the same line of proof shown by Zhou et al. (2010), it is easy to show consistency results for the bootstrapped versions of the rolling averages of m consecutive realizations. This is immediate for linear processes. For nonlinear processes, one can use the functional dependence measure introduced by Wu (2005) and obtain analogous results. To keep our focus on empirical evaluations, we leave the proof of the consistency for our future work. Interested readers can also look at the arguments by Sun and Lahiri (2006) for moving block bootstrap and the corresponding changes as suggested in Lahiri (2013) to get an idea how to show quantile consistency result. For time series forecasting and quantile regression, the stationary bootstrap has been used by White (2000) and Han et al. (2016) among others.

Kernel quantile estimation The efficiency of kernel quantile estimators over the usual sample quantiles has been proved in Falk (1984) and was extended to several variants by Sheather and Marron (1990). As proposed in the latter, the improvement in MSE is a constant order of \(\int uK(u) K^{-1}(u)\mathrm{d}u\) for the used symmetric kernel K. The theorems mentioned in Sect. 2 are easily extendable to these kernel quantile estimators. We conjecture that one can use the Bahadur-type representations for the kernel quantile estimators as shown in Falk (1985) and obtain similar results of consistency for at least linear processes. We used the popular Epanechnikov kernel \(K(x)=0.75(1-x^2)_{+}\) for our computations because of its efficiency in terms of mean integrated square error.

Estimation of\(\sigma \)and degrees of freedom As mentioned above, Zhou et al. (2010) used clt as in (2.12) with normal quantiles. For many applications in economics and finance, the normal distribution fails to describe the possibly heavy-tailed behavior. Therefore, we propose to substitute the normal with the Student t-distribution, given the fact that \(\sigma \) has to be estimated. Accounting for the estimation uncertainty indeed gives a Student t-distribution of \(\bar{e}_{+1:m}\) in the limit. The question remains: How many degrees of freedom (df) we should use. Rather than some arbitrary choices such as 5, as used by default in many software packages, or ML-estimated df which would be very noisy given the small sample, we link them to the estimator of \(\sigma \). This would not be trivial for the lag window estimator (2.11). Instead, we use the subsampling block estimator (see eq. 2, page 142 in Dehling et al. 2013)

with the block length l and number of blocks \(\kappa =\lceil T/l\rceil \). Then the adjusted clt\(p=100(1-\alpha )\%\) PI for \(\bar{y}_{+1:m}\) is given by

where \(Q_{\kappa -1}^t(\cdot )\) denotes a quantile of Student’s t distribution with \(\kappa -1\) degrees of freedom. Note that, since we used non-overlapping blocks, under short-range dependence, these blocks behave almost independently and thus \(\tilde{\sigma }^2\) with proper normalization constant behaves similarly to a \(\chi ^2\) distribution with \(\kappa -1\) degrees of freedom. Below, we give the implementation steps for the adjusted qtl (kernel-boot):

- 1.

Replicate series \(e_t\), B times obtaining \(e_t^b, t=1,\ldots ,T\), \(b=1,\ldots ,B\).

- 2.

Compute \((\bar{e}_{t(m)}^b)=m^{-1} \sum _{i=1}^m e^b_{t-i+1}\), \(t=m,\ldots T\) from every replicated series.

- 3.

Estimate the \(\alpha /2\)th and \((1-\alpha /2)\)th quantiles \(\hat{Q}(\alpha /2)\) and \(\hat{Q}(1-\alpha /2)\) using the Epanechnikov kernel density estimator from \(\bar{e}_{T(m)}^b\), \(b=1,\ldots ,B\).

- 4.

The PI for \(\bar{y}_{+1:m}\) is \([L,U]=\bar{y}+[\hat{Q}(\alpha /2),\hat{Q}(1-\alpha /2)]\).

Similarly, the implementation of the adjusted clt method (clt-tdist):

- 1.

Estimate the long-run standard deviation from \(e_t\), \(t=1,\ldots ,T\), using the subsampling estimator (2.18) with block length as proposed by Carlstein (1986).

- 2.

The PI is given by \([L,U]=\bar{y}+[Q_{\kappa -1}^t(\alpha /2),Q_{\kappa -1}^t(1-\alpha /2)]\tilde{\sigma }/\sqrt{m}\).

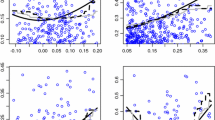

2.2.2 Simulations

An extensive out-of-sample forecasting evaluation based on independent samples is possible only with artificial data. Our simulation setup is designed to assess the performance of the original methods of Zhou et al. (2010) as described in Sect. 2.1.3 and the computational modifications described in 2.2.1. The simulation results provide evidence for the usefulness of these modifications in an artificial setup based on possibly long-memory arma-like processes. This setup would provide an advantage for approaches described in 2.1.1 and 2.1.2, should we challenge them. We leave this task for the next section and real data.

We adopt the following four scenarios for the \(e_t\) process from Zhou et al. (2010):

- (i)

\(e_t=0.6e_{t-1}+\sigma \epsilon _t\), for i.i.d mixture-normal \(\epsilon _t\sim 0.5 N(0,1)+0.5 N(0,1.25)\),

- (ii)

\(e_t=\sigma \sum _{j=0}^\infty (j+1)^{-0.8}\epsilon _{t-j}\), with noise as in (i),

- (iii)

\(e_t=0.6e_{t-1}+\sigma \epsilon _t\), for stable \(\epsilon _t\) with heavy tail index 1.5 and scale 1,

- (iv)

\(e_t=\sigma \sum _{j=0}^\infty (j+1)^{-0.8}\epsilon _{t-j}\), with noise as in (iii),

which correspond to (i) light tail and short memory, (ii) light tail and long memory, (iii) heavy tail and short memory, and (iv) heavy tail and long memory DGPs. For each scenario, we generate pseudo-data of length \(T+m\), using the first T observations for estimation and the last m for evaluation. The experiment is repeated \(N_{\text {trials}}=10~000\) times for each scenario.

The choice of parametersFootnote 6\(T=260,m=20, 30,40,60,90,130\) and \(\sigma =1.31\) mimics our setup for the real-data experiment in the next section. Following Müller and Watson (2016), we run our simulation for the nominal coverage probabilities \(p=1-\alpha =90\%\) (see Table 1A), resp. \(=67\%\) (see Table 1B), and compute the empirical coverage probability

where \(\mathbb {I}\) for the ith trial is 1 when the future mean for the ith trial \(\bar{e}_{i,+1:m}\) is covered by the \([L,U]_i\) and 0 otherwise. Furthermore, we report the relative median width

where \(\hat{Q}(\cdot )\) denotes the corresponding quantile of the empirical distribution of \(\bar{e}_{+1:m}\), estimated from \(\bar{e}_{i,+1:m}, i=1,\ldots ,N_\text {trials}\).

We focus on the evaluation under the longest horizon \(m=130\).

Scenario (i) When \(m=130\) the original qtl covers the future realizations in only around \(48\%\) of cases, while the nominal coverage is \(90\%\). Employing the kernel quantile adjustment on qtl increases this number by 4 percent points (pp), and when combined with the adjustment based on bootstrapping it yields an additional 26pp on top. Intuitively, using Student’s t quantiles (instead of normal) leads to a higher coverage probability for the clt. As expected, the two methods perform similarly well in this particular scenario.

Scenario (ii) Long memory of the DGP has a strongly negative impact on both methods. The combined kernel-bootstrap adjustment increases the coverage of qtl by 20pp, which is, however, still very low. The same holds for the performance of clt under t-quantile adjustment.

Scenario (iii) Heavy-tailed noise has also a negative impact on the original clt (coverage probability falls by 13pp compared to the light-tailed case), whereas qtl, as expected, is more robust (falls by 4–6pp). The kernel-bootstrap adjustment increases coverage probability by 27pp for the qtl, whereas the clt- tdist yields only negligible improvement compared to the original clt.

Scenario (iv) The combined effect of (ii) and (iii) cuts the coverage probabilities further down—below \(45\%\). The proposed adjustments increase the coverage probabilities by up to 10pp.

Overall, for the short and medium horizons, i.e., \(m=20,\ldots ,60\), we corroborate the conclusion from Zhou et al. (2010) that the (original) clt loses against the (original) qtl. However, both original methods exhibit rapid decay in their coverage probabilities as the forecasting horizon grows. For instance, in the scenario (iv) the gap between horizon \(m=20\) and \(m=130\) for the qtl is 47pp. Concerning the width of the PIs, we can see that both adjusted and original methods underestimate the dispersion and the gap between the width of PIs and the width of the empirical inter-quantile range increases with the horizon. However, our computational adjustments improve the original methods consistently over all scenarios. The improvement is most remarkable for the combined adjustment (kernel-boot).

3 Forecast comparison with long financial time series

This section summarizes a real-data POOS forecasting comparison for:

- (zxw)

adjusted PIs by Zhou et al. (2010),

- (mw)

robust bayes PIs by Müller and Watson (2016),

- (prr)

bootstrap PIs by Pascual et al. (2004, 2006) augmented by their analytical counterpart.

Data and setup for POOS exercise The data on univariate time series \(y_t\) are sampled at equidistant times \(t=1,\ldots ,T\). We forecast the average of m future values \(\bar{y}_{+1:m}=m^{-1}\sum _{t=1}^m y_{T+t}\). We design our POOS comparison using the following three time series (plots of the series are given in “Appendix A”),

- (spret)

S&P 500 value weighted daily returns including dividends available from January 2, 1926, till December 31, 2014, with a total of 23, 535 observations,

- (spret2)

squared daily returns, with the same period and

- (tb3m)

nominal interest rates for 3-month US Treasury Bills available from April 1, 1954, till August 13, 2015, with a total of 15,396 observations.

The sample size for post-WWII quarterly macroeconomic time series is \(4\times 68=272\) observations. We mimic the macroeconomic forecasting setup in that we use a rolling sample estimation with sample size \(T=260\) days (i.e., 1 year of daily data), and forecasting horizon \(m =20,30,40,60,90\) and 130 days. The rolling samples are overlapping in the last (resp. first) \(T-m\) observations, so that, e.g., for \(m=130\), the consecutive samples share half the observations. Hence, for the returns time series and for \(m=130\) (resp. \(m=20\)), we get \(N_{\text {trials}}=178\) (resp. 1163) non-overlapping in-or-out POOS trials. All models are selected and parameters estimated anew at each forecast origin.

The simulation results in Sect. 2.2.2 have shown that zxw PIs have decent coverage for short-memory \(e_t\sim I(0)\), but lose the coverage rapidly if the process has long memory. As a remedy, we apply an appropriate transformation before we use re-sampling and perform the reversed transformation immediately before the estimation of the kernel quantiles (see “Appendix B”). The re-sampling scheme also benefits from the prior transformation, since the stationary bootstrap is suitable for weakly dependent series. For zxw, we assume spret\(\sim I(0)\), spret2\(\sim I(d)\) with \(d=0.5\) (see Andersen et al. 2003) and tb3m\(\sim I(1)\), and we replace \(e_t\) by respective differences \(de_t=(1-L)^de_t\) (with L as lag operator). Concerning the bootstrap/analytic PIs for arfima(p,d,q)–garch(P,Q), we report only the best empirical coverage probability \(\hat{p}\) and corresponding relative width \(\hat{w}\) among two choices of \((P,Q)\in \left\{ (0,0), (1,1)\right\} \).

POOS results Similarly as in Sect. 2.2.2, we evaluate the coverage probability (2.20) and relative median width (2.21), for nominal coverage probabilities \(90\%\) (see Table 2A) and \(67\%\) (see Table 2B). Overall, the results show tight competition between mw and zxw. Better coverage probability is generally compensated by a larger width, hence less precision. Only for tb3m, zxw performs better in both aspects. The prr PIs show mixed performance, and it is difficult to draw any general conclusion whether one should prefer averaging of series (series) or averaging the forecasts (4cast) and whether to use analytic formulas (anlt) rather than bootstrapping (boot) to obtain PIs. We keep our focus on the coverage yield for the longer horizons.

spret Based on the simulation results for short-memory and heavy-tailed series, we expect that both methods of zxw should give decent coverage probability close to the nominal level. The real-data performance is better than suggested using artificial data, with an average drop of 9 resp. 12pp for kernel-boot resp. clt-tdist below the nominal level. On the other hand, mw exceed the nominal coverage even with the naive method. The difference in coverage probability between robust and kernel-boot reaches 15pp. The zxw provide advantage regarding the width, as the robust has twice the width of kernel-boot for \(m=130\). The prr gives decent coverage only for short horizon. For medium and long horizons, both the coverage and the width of prr exhibit a rapid decay. Averaging series dominates over averaging forecasts by 10pp. To our surprise, the bootstrap PIs do not outperform the analytic PIs. Regarding the width, the mw are by 40pp more conservative than the empirical inter-quantile range of the out-of-sample mean-returns, whereas zxw resp. prr are 23–30pp resp. 31–43pp below the inter-quantile range width.

spret2 Realized volatility is known for the persistence and heavy tails. The mw give slightly lower coverage probabilities than zxw compensated by a relatively smaller width, thus better precision. With the growing horizon all prr methods suffer a drop in coverage, at least 40pp below the nominal level, accompanied by the largest reduction of width among all methods. The bootstrap PIs dominate over analytic, and the competition between 4cast and series is tight. Concerning the relative width, when compared to the previous case of returns, all methods provide very narrow PIs. We believe that the seemingly shrinking width of PIs is caused by a larger dispersion of the entire spret2 [entering the denominator of (2.21)] compared to the dispersion of each local average [the nominator in (2.21)]. Note that for spret2 the denominator in (2.21) does not provide adequate scale and the discrepancy will become even worse for more persistent tb3m.

tb3m Interest rates exhibit strong persistence, and for enhanced performance of zxw, we again apply differencing, but with \(d=1\). Note that the naive PIs have coverage probabilities as low as \(25\%\). The coverage probabilities of all methods are lower than for the last two series, but zxw performs better than mw for all horizons. Moreover, zxw gives better results in terms of width. The coverage probability for prr falls far below the nominal level as the horizon grows. Here, series dominates 4cast, and bootstrap PIs are inferior to the analytic PIs, even though with only half the nominal coverage.

Except spret, clt-tdist gives slightly higher coverage probabilities than kernel-boot corresponding to smaller precision. Eventually, we prefer the kernel-boot method and use it in the following section for computing PIs for eight economic time series and S&P 500 returns.

4 Prediction intervals for economic series’ growth rates and S&P 500 returns

Müller and Watson (2016), in Table 5 on pages 1731–1732, gave their long-run PIs for eight quarterly post-WWII US economic time series and quarterly returns. Since our kernel-boot method performed well in the last real-data POOS comparison, we employ this method in order to obtain alternative PIs for these series. We report these PIs in Tables 3 and 4. Müller and Watson (2016) compare their PIs to those published by CBO. They conclude that some similarities between their PIs for series such as GDP are due to a combination of (i) CBO’s ignorance for parameter uncertainty and (ii) CBO’s ignorance of possible anti-persistence of GDP during Great Moderation. Since the effects of (i) and (ii) on the PIs width are the opposite, they eventually seem to cancel out.

The eight economic time series are real per capita GDP, real per capita consumption expenditures, total factor productivity, labor productivity, population, inflation (PCEFootnote 7), inflation (CPIFootnote 8) and Japanese inflation (CPI)—all transformed into log-differences. (Plots are given in “Appendix A.”) The data are available from 1Q-1947 till 4Q-2014, and we forecast them over next \(m= 10,25\) and 50 years. For a subset of these series, we report results based on longer (yearly) sample starting in 1Q-1920, and we add the horizon \(m=75\) years for these yearly series.

For per capita real GDP, per capita consumption and productivity, we use differencing with \(d=0.5\) for the kernel-boot PIs. Thus, these intervals are wider than in Müller and Watson (2016), especially those for GDP. This case is similar to the case of realized volatility in the previous section. Wide PIs are often considered as a failure of the forecasting method or model. On the other hand, they can also reflect the higher uncertainty about the series future. The width of PIs for GDP is not surprising given that similar as CBO, we do not account for the possible anti-persistence during the Great Moderation. With the longer yearly sample, our PIs get even wider, as a result of higher volatility in the early twentieth century. Interestingly, the growth in Labor production seems to be higher in general than reported by Müller and Watson (2016).

Consumption, population and inflation are well known as quite persistent. Therefore, we would expect that similarly as in case of interest rates, kernel-boot could give better coverage and possibly narrower PIs than robust. The uncertainty is similarly large according to both our kernel-boot and robust, but the location is generally shifted downward, especially for inflation, where the shift is about \(-2 \text {pp}\) compared to Müller and Watson (2016).

Finally, for the quarterly returns, we might expect kernel-boot to give less conservative thus narrow estimates, and we see this happening with discrepancy growing with the forecasting horizons. It is clear that robust is very conservative in uncertainty about positive returns, where the difference from kernel-boot reached \(11 \text {pp}\). Employing the longer yearly time series makes the difference fall to \(3 \text {pp}\). On the other hand, \(3 \text {pp}\) is a lot from an investors perspective.

5 Discussion

We have constructed prediction intervals for univariate economic time series. Our forecasting comparison shows that even the simple methods of Zhou et al. (2010) provide a valid alternative for sophisticated prediction intervals designed specifically for the economic framework by Müller and Watson (2016). However, based on our simulation results, we emphasize that both the methods and the series need to be suitably adjusted, especially under the small sample constraint, which, on the other hand, is quite common in practice. Based on the comparison results, we eventually provided alternative long-run prediction intervals for eight US economic indicators.

Forecasting average growth of economic series over the coming decades is a very ambitious task, and naturally, there are doubts about its usefulness in practice. The test of Breitung and Knüppel (2018), whether a forecast is informative, is based on the prediction error variance. They conclude that economic forecasts beyond a horizon of several quarters become uninformative. At first sight, such a claim seems to be an argument against following the research of Müller and Watson (2016) and Zhou et al. (2010). However, there are some differences in the assumptions and targets which have to be carefully analyzed before we make such statements. The assumption of a long-memory component is crucial, and it is hard to verify and distinguish it from a possible structural break. In our paper, we did not tackle the issue of whether long-term predictions are informative or not. We instead probed into the existing methods and provided new empirical comparison results.

Throughout this paper, we focused on PIs estimated from historical data on the predicted series. A multivariate or high-dimensional extension would, of course, be attractive. It is widely recognized that big data contain additional forecasting power. Unfortunately, in the economic literature, the boom of forecasting with many predictors (e.g., Stock and Watson 2012; Elliott et al. 2013; Kim and Swanson 2014) is mainly focused on short horizons and point-forecasting (for an exception see Bai and Ng 2006). This is not a coincidence. Many economic time series exhibit persistence (of varying degrees), and this is their essential property in the long run. These long-term effects, combined over many series, are difficult to understand, partially due to their dependence on unknown nuisance parameters (see Elliott et al. 2015). The role of cointegration in long-run forecasting is investigated by Christoffersen and Diebold (1998).

We do not use some methods such as quantile (auto-) regression (Koenker 2005) in the current study, and the out-of-sample forecasting comparison could be enhanced by statistical tests (see Clements and Taylor 2003; Gneiting and Raftery 2007, for example).

An extension (including the theory) of Zhou et al. (2010) into a high-dimensional regression framework using the LASSO estimator is currently being developed. Even more challenging is a case of multivariate target series and subsequent construction of simultaneous prediction intervals which can have interesting implications for market trading strategies.

Notes

Available from https://www.cbo.gov/publication/52480.

Available from https://www.govinfo.gov/app/details/GPO-FCIC.

Anti-persistence can be observed as well, often as a result of (over-) differencing.

Instead of the normal quantiles, we rather utilize Student’s t where df is estimated by ML.

The replication files for these methods are available in MATLAB from M. Watson’s homepage.

The value of \(\sigma \) was obtained from an ar(1) model fitted to the full data set of S&P 500 returns.

Personal consumption expenditure deflator.

Consumer price index.

References

Andersen TG, Bollerslev T, Diebold FX, Labys P (2003) Modeling and forecasting realized volatility. Econometrica 71(2):579–625

Bai J, Ng S (2006) Confidence intervals for diffusion index forecasts and inference for factor-augmented regressions. Econometrica 74:1133–1150

Baillie RT (1996) Long memory processes and fractional integration in econometrics. J Econ 73:5–59

Bansal R, Kiku D, Yaron A (2016) Risks for the long run: estimation with time aggregation. J Monet Econ 82:52–69

Box GE, Jenkins GM, Reinsel GC, Ljung GM (2015) Time series analysis: forecasting and control. Wiley, London

Breitung J, Knüppel M (2018) How far can we forecast? Statistical tests of the predictive content. Deutsche Bundesbank Discussion Paper No. 07/2018

Carlstein E (1986) The use of subseries values for estimating the variance of a general statistic from a stationary sequence. Ann Stat 14:1171–1179

Chatfield C (1993) Calculating interval forecasts. J Bus Econ Stat 11(2):121–135

Cheng X, Liao Z, Schorfheide F (2016) Shrinkage estimation of high-dimensional factor models with structural instabilities. Rev Econ Stud 83(4):1511–1543

Christoffersen PF, Diebold FX (1998) Cointegration and long-horizon forecasting. J Bus Econ Stat 16:450–458

Clements MP, Taylor N (2003) Evaluating interval forecasts of high-frequency financial data. Appl Econ 18:445–456

Dehling H, Fried R, Shapirov O, Vogel D, Wornowizki M (2013) Estimation of the variance of partial sums of dependent processes. Stat Probab Lett 83(1):141–147

Diebold FX, Linder P (1996) Fractional integration and interval prediction. Econ Lett 50:305–313

Diebold FX, Rudebusch GD (1989) Long memory and persistence in aggregate output. J Monet Econ 24:189–209

Elliott G, Gargano A, Timmermann A (2013) Complete subset regressions. J Econ 177(2):357–373

Elliott G, Mller UK, Watson MW (2015) Nearly optimal tests when a nuisance parameter is present under the null hypothesis. Econometrica 83(2):771–811

Falk M (1984) Relative deficiency of kernel type estimators of quantiles. Ann Stat 12(1):261–268

Falk M (1985) Asymptotic normality of the kernel quantile estimator. Ann Stat 13(1):428–433

Ghalanos A (2017) rugarch: Univariate GARCH models. R package version 1.3-8

Gneiting T, Raftery AE (2007) Strictly proper scoring rules, prediction, and estimation. J Am Stat Assoc 102(477):359–378

Gonçalves S, de Jong R (2003) Consistency of the stationary bootstrap under weak moment conditions. Econ Lett 81(2):273–278

Han H, Linton O, Oka T, Whang Y-J (2016) The cross-quantilogram: measuring quantile dependence and testing directional predictability between time series. J Econ 193(1):251–270

Kim H, Swanson N (2014) Forecasting financial and macroeconomic variables using data reduction methods: New empirical evidence. J Econ 178:352–367

Kitsul Y, Wright J (2013) The economics of options-implied inflation probability density functions. J Financ Econ 110:696–711

Koenker R (2005) Quantile regression. Cambridge University Press, Cambridge

Künsch HR (1989) The jackknife and the bootstrap for general stationary observations. Ann Stat 17(3):1217–1241

Lahiri SN (2013) Resampling methods for dependent data. Springer, Berlin

Lütkepohl H (2006) Forecasting with varma models. In: Granger G, Granger WJ, Timmermann AG (eds) Handbook of economic forecasting, vol 1. Elsevier B.V., North Holland, pp 287–325

Marcellino M (1999) Some consequences of temporal aggregation in empirical analysis. J Bus Econ Stat 17(1):129–136

Müller U, Watson M (2016) Measuring uncertainty about long-run predictions. Rev Econ Stud 83(4):1711–1740

Pascual L, Romo J, Ruiz E (2004) Bootstrap predictive inference for arima processes. J Time Ser Anal 25(4):449–465

Pascual L, Romo J, Ruiz E (2006) Bootstrap prediction for returns and volatilities in garch models. Comput Stat Data Anal 50(9):2293–2312

Pastor L, Stambaugh RF (2012) Are stocks really less volatile in the long run. J Finance 67(2):431–478

Patton A, Politis DN, White H (2009) Correction to automatic block-length selection for the dependent bootstrap. Econ Rev 28(4):372–375

Politis DN, Romano JP (1994) The stationary bootstrap. J Am Stat Assoc 89:1303–1313

Politis DN, White H (2004) Automatic block-length selection for the dependent bootstrap. Econ Rev 23(1):53–70

Sheather SJ, Marron JS (1990) Kernel quantile estimators. J Am Stat Assoc 85:410–416

Silverman B (1986) Density estimation for statistics and data analysis. Chapman & Hall/CRC, London

Stock J, Watson M (2012) Generalised shrinkage methods for forecasting using many predictors. J Bus Econ Stat 30(4):482–493

Stock JH, Watson MW (2005) Understanding changes in international business cycle dynamics. J Eur Econ Assoc 3:968–1006

Sun S, Lahiri SN (2006) Bootstrapping the sample quantile of a weakly dependent sequence. Sankhyā Indian J Stat 68:130–166

White H (2000) A reality check for data snooping. Econometrica 68(5):1097–1126

Wu WB (2005) Nonlinear system theory: another look at dependence. Proc Natl Acad Sci USA 102(40):14150–14154 (electronic)

Wu WB, Woodroofe M (2004) Martingale approximations for sums of stationary processes. Ann Probab 32(2):1674–1690

Zhang T, Wu WB (2015) Time-varying nonlinear regression models: nonparametric estimation and model selection. Ann Stat 43(2):741–768

Zhou Z, Xu Z, Wu WB (2010) Long-term prediction intervals of time series. IEEE Trans Inform Theory 56(3):1436–1446

Acknowledgements

Open access funding provided by University of Vienna. We would like to thank Jörg Breitung, Francis Diebold, Alexander Kment, Lubos Pastor, Irina Pimenova, Justin Veenstra, Mark Watson as well as the participants of the conference: Big Data in Predictive Dynamic Econometric Modeling held at the University of Pennsylvania and the 1st Vienna Workshop on Economic Forecasting 2018 held at the Institute for Advanced Studies for helpful discussion and for answering our questions. Special thanks go to Eric Nesbitt and the two anonymous referees. We also acknowledge the computational resources provided by the Vienna Scientific Cluster. Note that the opinions expressed in the paper are those of the authors and do not necessarily reflect the opinions of the Institute for Financial Policy.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Funding

The research was partly supported by grant NSF/DMS 1405410. M. Chudý received financial support from J.W. Fulbright Commission for Educational Exchange in the Slovak Republic, The Ministry of Education, Science, Research and Sport of the Slovak Republic and the Stevanovich Center for Financial Mathematics.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A: Figures of time series used in Sects. 3 and 4

Appendix B: Additional steps for implementation of zxw and mw

All macro-series in Sect. 4 are transformed to log-differences. This does not preclude long-memory dynamics or even a unit root. Note that if \(y_t\) is I(1) and has deterministic trend component rather than a constant level, the location of the PI would have to be shifted to \(\frac{m+1}{2} \overline{\varDelta y}\) instead of \(\bar{y}\).

kernel-boot:

- 1.

Compute the mean adjusted series \(e_t=y_t-\bar{y}\), \(t=1,\ldots ,T\).

- 2.

Fix \(d=0.5\) or \(d=1\) and compute the difference series \(de_t=(1-L)^d\), \(t=2,\ldots ,T\), where L denotes lag operator.

- 3.

Replicate \(de_t, t=2,\ldots ,T\)B times getting \(de_t^b, t=2,\ldots ,T\), \(b=1,\ldots ,B\).

- 4.

Compute the series of overlapping rolling means \(\bar{de}_{t(m)}^b=m^{-1} \sum _{i=1}^m de^b_{t-i+1}\), \(t=m,\ldots T\) from every replicated series.

- 5.

Estimate quantiles \(\hat{Q}(\alpha /2)\) and \(\hat{Q}(1-\alpha /2)\) from \(\bar{e}^b_{T(m)}\), \(b=1,\ldots ,B\) with \(T=260\) obtained as \(\bar{e}^b_{T(m)} = m^{-1}\sum _{i=1}^m(1-L)^{-d}de^b_{T-i+1}\).

- 6.

The PI is given by \([L,U]=\bar{y}+[Q_{\kappa -1}^t(\alpha /2),Q_{\kappa -1}^t(1-\alpha /2)]\sigma /\sqrt{m}\).

clt-tdist:

- 1.

Compute the mean adjusted series \(e_t=y_t-\bar{y}\), \(t=1,\ldots ,T\).

- 2.

Fix \(d=0.5\) or \(d=1\) and compute the difference series \(de_t=(1-L)^d\), \(t=2,\ldots ,T\), where L denotes lag operator.

- 3.

Estimate the long-run standard deviation \(\tilde{\sigma }\) of \(de_t\), \(t=2,\ldots ,T\).

- 4.

Compute the long-run standard deviation of \(e_t\): for \(d=1\), \(\sigma _e(\tilde{\sigma })=\tilde{\sigma }\sqrt{(m+1)/2}\) and forFootnote 9\(d=0.5\), \(\sigma _e(\tilde{\sigma })=\tilde{\sigma }m^{-1}\sqrt{\sum _{i=1}^m(\sum _{j=0}^{m-i}(-1)^j {{-0.5}\atopwithdelims (){j}})^2}\).

- 5.

The PI is given by \(\bar{y}+[Q_{\kappa -1}^t(\alpha /2),Q_{\kappa -1}^t(1-\alpha /2)]\sigma _e\).

robust after steps 1–4.

- 5.1

Compute weights for specific choice of q and m / T and the prior from step 3.

- 5.2

Numerically approximate s. c. least favorable distribution (LFD) of \(\theta \) for specific choice of q and m / T (see “Appendix” of Müller and Watson (2016)).

- 5.3

Using the weights and the LFD solve the minimization problem (14) on page 1721 in Müller and Watson (2016) to get quantiles which give uniform coverage and minimize the expected PIs width.

- 6.

The same as in bayes with the robust quantiles.

Appendix C: Derivation of an error standard deviation for time-aggregated forecast

The formula for computing prediction error sd is \(\hat{\text {as}}_{T,+1:m}=\frac{1}{m} \sqrt{\sum _{i=1}^m(\hat{\sigma }_{T,T+i}\sum _{j=0}^{m-i}\hat{\varPsi }_j)^2}\), where \(\hat{\varPsi }_0=1\) and \(\hat{\varPsi }_j\),\(j=2,\ldots ,m\) are the estimates of coefficients from the causal representation of \(y_t\). The \(\hat{\sigma }_{T,T+i}\) is the garch forecast for innovations deviation. For simplicity, we show the derivation for the case of constant innovation variance.

Assume that \(y_t\) has causal representation:

where \(\epsilon _t\sim (0,\sigma ^2)\) is the innovation process with constant second moment. Standing at time T the ith-step-ahead prediction error can be expressed as

The average prediction error over horizons \(i=1,\ldots ,m\) is therefore given by

with \(\varPsi _0=1\). Now, this can be rewritten as

where \(c_0=\varPsi _0=1\). Since innovations are uncorrelated, we can compute the variance of average prediction error over the horizons \(i=1,\ldots ,m\) as

Appendix D: Discussion on CLT and QTL methods

For the interested reader here we provide some discussion on the justification of the two original methods from Zhou et al. (2010) and how one can verify them in linear and possibly nonlinear processes. First, we discuss a result for the CLT method. Assume the process \(e_t\) admits the following linear form

where \(\epsilon _t\) are mean-zero, independent and identically distributed (i.i.d.) random variables with finite second moment. For this structural form, we can evaluate (2.14). We assume a particular decay rate of \(a_i\) and state the following theorem.

Theorem 1

Assume the process \(e_t\) admits representation (5.1) where \(a_i\) satisfies

where larger \(\chi \) and A mean fast decay rate of dependence. Further assume, \(A>5/2\) if \(1<\chi <3/2\). Then the sufficient condition (2.14) implies that convergence (2.15) to the asymptotic normal distribution holds.

Proof

where \(b_i=a_i+\cdots +a_m\). Note that \(\sum _{i=1}^m b_i^2\) assumes the following value depending on \(\chi >3/2\) or not. Thus, (2.14) holds since by elementary calculations,

\(\square \)

Note that Theorem 1 concerns only linear processes. This class covers a large class of time series processes already. However, we do not necessarily require linearity of the error process \((e_t)\). One can equivalently use the functional dependence measure introduced in Wu (2005) to state an equivalent result for stationary possibly nonlinear error processes of the form

where \(\epsilon _i\) are i.i.d. random variables. For this process assuming \(p \ge 2\) moments one can define the functional dependence measure

where \(\epsilon ^*_{(\cdot )}\) is an i.i.d. copy of \(\epsilon _{(\cdot )}\) process. For the specific case of \(e_t\) assuming a linear form as specified in (5.1), we have \(\delta _{j,p}=a_j\). This lays down a straightforward way in how our results for the linear process can be easily extended to nonlinear processes.

Next, for the sake of completeness, we borrow a result from Zhou et al. (2010) that discusses the quantile consistency for the QTL method. Recall that we will exhibit as promised that this method allows for the situation where the i.i.d. innovations \(\epsilon _t\) in the decomposition (5.1) can have both light tails, i.e., \({\mathbb {E}}(|\epsilon _t|^2)<\infty \), and heavy tails, i.e., \(\alpha <2\) where \(\alpha =\sup _{r>0} \{r:{\mathbb {E}}(|\epsilon _t|^r)<\infty \}\).

We will impose the following conditions on the coefficients for short- or long-range dependence and also assume boundedness of the density of \(\epsilon _t\) in the following sense:

where s. v. f. is a function g(x) such that \(\lim _{x \rightarrow \infty } g(tx)/g(x)=1 \) for any t. The condition (SRD) is a classic short-range-dependent condition (see Box et al. 2015, for more discussion). (LRD) refers to the long memory of the time series, and it is satisfied by a large class of models such as arfima. (DEN) is also a mild condition since by inversion theorem, all symmetric stable distributions fall under this condition. We borrow the following result from Zhou et al. (2010) for linear process. It is worth noting that one can extend this to nonlinear processes as well by defining the coupling-based dependence on predictive density of \(e_t\) as done in Zhang and Wu (2015), but we postpone that discussion for a future paper.

Quantile consistency results for the quantile method For a fixed \(0<q<1\), let \(\hat{Q}(q)\) and \(\tilde{Q}(q)\) denote the qth sample quantile and actual quantile of \((m~\bar{e}_{t(m)}/H_m);\)\(t=m,\ldots ,T\) where, using (5.5),

We have the following different rates of convergence of quantiles based on the nature of tail or dependence:

Theorem 2

(Zhou et al. (2010) Th 1:4)[Quantile consistency result]

Light-tailed (SRD) Suppose (DEN) and (SRD) hold and \({\mathbb {E}}(\epsilon _j^2)<\infty \). If \(m^3/T \rightarrow 0\), then for any fixed \(0<q<1\),

$$\begin{aligned} |\hat{Q}(q)-\tilde{Q}(q)|=O_{{\mathbb {P}}}(m/\sqrt{T}). \end{aligned}$$(5.7)Light-tailed (LRD) Suppose (LRD) and (DEN) hold with \(\gamma \) and \(l(\cdot )\) in (5.5). If \(m^{5/2-\gamma }T^{1/2-\gamma }l^2(T) \rightarrow 0\), then for any fixed \(0<q<1\),

$$\begin{aligned} |\hat{Q}(q)-\tilde{Q}(q)|=O_{{\mathbb {P}}}(mT^{1/2-\gamma }|l(T)|). \end{aligned}$$(5.8)Heavy-tailed (SRD) Suppose (DEN) and (SRD) hold and \({\mathbb {E}}(|\epsilon _j|^{\alpha })<\infty \) for some \(1<\alpha <2\). If \(m=O(T^{k})\) for some \(k<(\alpha -1)/(\alpha +1)\), then for any fixed \(0<q<1\),

$$\begin{aligned} |\hat{Q}(q)-\tilde{Q}(q)|=O_{{\mathbb {P}}}(mT^{\nu })\text { for all }\nu >1/\alpha -1. \end{aligned}$$(5.9)Heavy-tailed (LRD) Suppose (LRD) holds with \(\gamma \) and \(l(\cdot )\) in (5.5). If \(m=O(T^{k})\) for some \(k<(\alpha \gamma -1)/(2\alpha +1-\alpha \gamma )\), then for any fixed \(0<q<1\),

$$\begin{aligned} |\hat{Q}(q)-\tilde{Q}(q)|=O_{{\mathbb {P}}}(mT^{\nu })\text { for all }\nu >1/\alpha -\gamma . \end{aligned}$$(5.10)

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Chudý, M., Karmakar, S. & Wu, W.B. Long-term prediction intervals of economic time series. Empir Econ 58, 191–222 (2020). https://doi.org/10.1007/s00181-019-01689-2

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00181-019-01689-2