Abstract

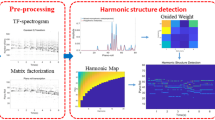

Pitch, or fundamental frequency (\(f_0\)), estimation is a fundamental problem that has been studied extensively for its potential applications in speech processing and clinical settings. Existing \(f_0\) estimation methods degrade in performance when applied to real-time audio signals with varying \(f_0\) modulations and in low-SNR environments. In this work, an \(f_0\) estimation method that combines signal processing and deep learning approaches is developed. Specifically, we train a convolutional neural network to map a periodicity-rich input representation to pitch classes, such that the number of pitch classes is drastically reduced compared to existing deep learning approaches. The accurate \(f_0\) is then estimated from the nominal pitch class using signal processing approaches. Experimental results on large-scale datasets show that the proposed method generalizes to unseen modulations of speech and to noisy signals (with various types of noise). The proposed hybrid model also requires significantly fewer learnable parameters than other methods. Furthermore, the evaluation measures show that the proposed method performs significantly better than state-of-the-art signal processing and deep learning approaches.
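The coarse-to-fine idea described in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the class edges in `EDGES`, the helper names `class_to_band` and `refine_f0`, and the choice of an autocorrelation peak search with parabolic interpolation as the refinement step are all assumptions made for illustration. The pitch class, which the paper obtains from a convolutional neural network, is simply supplied by hand here.

```python
import numpy as np

# Hypothetical coarse pitch-class edges in Hz (one octave per class).
# The paper's actual class layout is not given in the abstract.
EDGES = np.array([50.0, 100.0, 200.0, 400.0, 800.0])


def class_to_band(class_idx, edges=EDGES):
    """Return the (low, high) frequency band in Hz of a coarse pitch class."""
    return float(edges[class_idx]), float(edges[class_idx + 1])


def refine_f0(frame, sr, f_lo, f_hi):
    """Estimate f0 inside [f_lo, f_hi] from the autocorrelation peak.

    This stands in for the signal processing refinement stage; the
    method used in the paper may differ.
    """
    frame = np.asarray(frame, dtype=float)
    frame = frame - frame.mean()
    n = len(frame)
    # Autocorrelation at non-negative lags, normalized by the overlap length.
    acf = np.correlate(frame, frame, mode="full")[n - 1:]
    acf = acf / np.arange(n, 0, -1)
    # Restrict the search to lags that correspond to the class band.
    lag_min = max(int(np.floor(sr / f_hi)), 1)
    lag_max = min(int(np.ceil(sr / f_lo)), n - 2)
    k = lag_min + int(np.argmax(acf[lag_min:lag_max + 1]))
    # Parabolic interpolation around the peak for sub-sample lag resolution.
    a, b, c = acf[k - 1], acf[k], acf[k + 1]
    denom = a - 2.0 * b + c
    shift = 0.5 * (a - c) / denom if denom != 0 else 0.0
    return sr / (k + shift)


if __name__ == "__main__":
    sr = 16000
    t = np.arange(1024) / sr
    frame = np.sin(2 * np.pi * 220.0 * t)  # synthetic 220 Hz tone
    f_lo, f_hi = class_to_band(2)          # class 2 covers 200-400 Hz
    print(refine_f0(frame, sr, f_lo, f_hi))
```

Because each hypothetical class spans at most one octave, the lag search window cannot contain both a period and its double, which is one way a coarse classifier can reduce octave errors in the refinement stage.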

Cite this article

Rengaswamy, P., Reddy, M.G., Rao, K.S. et al. \(hf_0\): A Hybrid Pitch Extraction Method for Multimodal Voice. Circuits Syst Signal Process 40, 262–275 (2021). https://doi.org/10.1007/s00034-020-01468-w
