Abstract

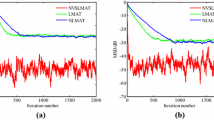

Within the maximum a posteriori estimation framework, this study proposes the nonparametric probabilistic least mean square (NPLMS) adaptive filter for estimating an unknown parameter vector from noisy data. The NPLMS links the parameter space and the signal space by combining prior knowledge of the probability distribution of the process with the evidence present in the signal. Because the prior distribution is estimated by kernel density estimation, the NPLMS is robust against both Gaussian and non-Gaussian noise. To this end, some of the intermediate estimates are buffered and then used to estimate the prior distribution. Unlike bias-compensated algorithms, the NPLMS does not need an estimate of the input noise variance. A theoretical analysis of the NPLMS is provided. In addition, a variable step-size version of the NPLMS is presented to reduce the steady-state error. Simulation results in system identification and prediction show that the NPLMS performs acceptably in noisy stationary and non-stationary environments compared with the bias-compensated and normalized LMS algorithms.


Notes

Subscripts are used to refer to time indices of vector variables and parentheses to refer to the time indices of scalar variables.

References

J. Arenas-Garcia, A.R. Figueiras-Vidal, A.H. Sayed, Mean-square performance of a convex combination of two adaptive filters. IEEE Trans. Signal Process. 54(3), 1078–1090 (2006). https://doi.org/10.1109/TSP.2005.863126

B.C. Arnold, p-norm bounds on the expectation of the maximum of a possibly dependent sample. J. Multivar. Anal. 17(3), 316–332 (1985)

B. Babadi, N. Kalouptsidis, V. Tarokh, SPARLS: the sparse RLS algorithm. IEEE Trans. Signal Process. 58(8), 4013–4025 (2010). https://doi.org/10.1109/TSP.2010.2048103

J. Benesty, H. Rey, L.R. Vega, S. Tressens, A nonparametric VSS NLMS algorithm. IEEE Signal Process. Lett. 13(10), 581–584 (2006)

N.J. Bershad, J.C.M. Bermudez, J.Y. Tourneret, Stochastic analysis of the LMS algorithm for system identification with subspace inputs. IEEE Trans. Signal Process. 56(3), 1018–1027 (2008). https://doi.org/10.1109/TSP.2007.908967

J.V. Candy, Bayesian Signal Processing: Classical, Modern, and Particle Filtering Methods, vol. 54 (Wiley, Hoboken, 2016)

K. Crammer, O. Dekel, J. Keshet, S. Shalev-Shwartz, Y. Singer, Online passive-aggressive algorithms. J. Mach. Learn. Res. 7(Mar), 551–585 (2006)

R.C. De Lamare, R. Sampaio-Neto, Adaptive reduced-rank equalization algorithms based on alternating optimization design techniques for MIMO systems. IEEE Trans. Veh. Technol. 60(6), 2482–2494 (2011)

J. Fernandez-Bes, V. Elvira, S. Van Vaerenbergh, A probabilistic least-mean-squares filter. In: 2015 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 2199–2203. IEEE (2015)

J. Gao, H. Sultan, J. Hu, W.W. Tung, Denoising nonlinear time series by adaptive filtering and wavelet shrinkage: a comparison. IEEE Signal Process. Lett. 17(3), 237–240 (2010)

A. Gilloire, M. Vetterli, Adaptive filtering in subbands with critical sampling: analysis, experiments, and application to acoustic echo cancellation. IEEE Trans. Signal Process. 40(8), 1862–1875 (1992)

S. Haykin, A.H. Sayed, J.R. Zeidler, P. Yee, P.C. Wei, Adaptive tracking of linear time-variant systems by extended RLS algorithms. IEEE Trans. Signal Process. 45(5), 1118–1128 (1997)

S.S. Haykin, Adaptive Filter Theory (Pearson Education India, London, 2008)

C. Huemmer, R. Maas, W. Kellermann, The NLMS algorithm with time-variant optimum stepsize derived from a Bayesian network perspective. IEEE Signal Process. Lett. 22(11), 1874–1878 (2015)

A. Ilin, T. Raiko, Practical approaches to principal component analysis in the presence of missing values. J. Mach. Learn. Res. 11(Jul), 1957–2000 (2010)

S.M. Jung, P. Park, Normalised least-mean-square algorithm for adaptive filtering of impulsive measurement noises and noisy inputs. Electron. Lett. 49(20), 1270–1272 (2013)

S.M. Jung, P. Park, Stabilization of a bias-compensated normalized least-mean-square algorithm for noisy inputs. IEEE Trans. Signal Process. 65(11), 2949–2961 (2017)

J. Liu, X. Yu, H. Li, A nonparametric variable step-size NLMS algorithm for transversal filters. Appl. Math. Comput. 217(17), 7365–7371 (2011)

W. Liu, J.C. Principe, S. Haykin, Kernel Adaptive Filtering: A Comprehensive Introduction, vol. 57 (Wiley, Hoboken, 2011)

I.M. Park, S. Seth, S. Van Vaerenbergh, Probabilistic kernel least mean squares algorithms. In: 2014 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 8272–8276. IEEE (2014)

A.H. Sayed, Adaptive Filters (Wiley, Hoboken, 2008)

A.H. Sayed, T. Kailath, A state-space approach to adaptive RLS filtering. IEEE Signal Process. Mag. 11(3), 18–60 (1994)

S. Van Vaerenbergh, J. Fernandez-Bes, V. Elvira, On the relationship between online Gaussian process regression and kernel least mean squares algorithms. In: 2016 IEEE 26th International Workshop on Machine Learning for Signal Processing (MLSP), pp. 1–6. IEEE (2016)

S. Van Vaerenbergh, M. Lázaro-Gredilla, I. Santamaría, Kernel recursive least-squares tracker for time-varying regression. IEEE Trans. Neural Netw. Learn. Syst. 23(8), 1313–1326 (2012)

S.V. Vaseghi, Advanced Digital Signal Processing and Noise Reduction (Wiley, Hoboken, 2008)

P. Wen, J. Zhang, A novel variable step-size normalized subband adaptive filter based on mixed error cost function. Signal Process. 138, 48–52 (2017)

H. Zhao, Z. Zheng, Bias-compensated affine-projection-like algorithms with noisy input. Electron. Lett. 52(9), 712–714 (2016)

J. Zhao, X. Liao, S. Wang, K.T. Chi, Kernel least mean square with single feedback. IEEE Signal Process. Lett. 22(7), 953–957 (2015)

Z. Zheng, H. Zhao, Bias-compensated normalized subband adaptive filter algorithm. IEEE Signal Process. Lett. 23(6), 809–813 (2016)


Appendix


1.1 A

Considering Eqs. (1) and (3), one can rewrite (20) as

where \(R_{n} =\varvec{x}_{n}^\mathrm{T} \varvec{x}_{n} \) is the correlation matrix. Subtracting both sides of (51) from \(w_{o} \), we have

Defining \(\tilde{\varvec{w}}_{n} =w_{o} -\varvec{w}_{n} \), (52) can be rewritten as

where \(\tilde{\varvec{w}}_{i} =w_{o} -\varvec{w}_{i} \). Since \(\sum _{i=1}^{K}\mu _{i,\varvec{w}_{n} } =1\), (53) reduces to

On the other hand, it is clear that \(\left\| \varvec{w}_{n} -\varvec{w}_{i} \right\| =\left\| w_{o} -w_{o} +\varvec{w}_{n} -\varvec{w}_{i} \right\| =\left\| \tilde{\varvec{w}}_{i} -\tilde{\varvec{w}}_{n} \right\| \). Using the reverse triangle inequality, we have
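In its standard form, the reverse triangle inequality bounds the distance between two vectors from below by the difference of their norms, while the ordinary triangle inequality bounds it from above. Written for the two weight-error vectors used here (a restatement of the textbook inequalities, given as a reading aid):

\[
\Big| \left\| \tilde{\varvec{w}}_{i} \right\| -\left\| \tilde{\varvec{w}}_{n} \right\| \Big| \le \left\| \tilde{\varvec{w}}_{i} -\tilde{\varvec{w}}_{n} \right\| \le \left\| \tilde{\varvec{w}}_{i} \right\| +\left\| \tilde{\varvec{w}}_{n} \right\| .
\]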

Therefore,

and

The summation over (57) is

Since \(a_1 \le a_3\) and \(a_2 \le a_4\), one can write

Subtracting the first inequality of (59) from the second, and vice versa, leads to the conclusion that \(a_1a_4=a_2a_3\). In other words, \(\frac{a_1}{a_3}=\frac{a_2}{a_4}\). Therefore, considering (57), (58), and inequalities (59), \(\mu _{i,\varvec{w}_{i} } \) can be rewritten as

Therefore, one can write (54) as

Remark 2

If we assume \(\tilde{\varvec{w}}_{i} \simeq \tilde{\varvec{w}}_{n} \), then \(\tilde{\varvec{w}}_{n+1} \) reduces to \(\tilde{\varvec{w}}_{n+1} =\left( I-\alpha R_{n} \right) \tilde{\varvec{w}}_{n} -\alpha \varvec{x}_{n}^\mathrm{T} \varvec{v}(n)\).
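The recursion in Remark 2 has the same form as the weight-error recursion of the plain LMS filter. The following is a minimal numerical sketch of that correspondence, not the NPLMS itself. It assumes the linear data model \(d(n)=\varvec{x}_{n} w_{o} +\varvec{v}(n)\) with \(\varvec{x}_{n}\) a row vector; the filter length, step size, noise level, and iteration count are hypothetical values chosen only for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

M = 4          # filter length (hypothetical)
alpha = 0.05   # step size, the alpha of Remark 2 (hypothetical value)
n_iter = 200   # number of iterations (hypothetical)

w_o = rng.standard_normal(M)   # unknown parameter vector w_o
w = np.zeros(M)                # LMS estimate w_n
w_tilde = w_o - w              # weight error: w_tilde_n = w_o - w_n

for n in range(n_iter):
    x = rng.standard_normal(M)        # regressor x_n, treated as a row vector
    v = 0.1 * rng.standard_normal()   # measurement noise v(n)
    d = x @ w_o + v                   # assumed data model: d(n) = x_n w_o + v(n)

    # Plain LMS update applied to the weights.
    e = d - x @ w
    w = w + alpha * x * e

    # Error recursion of Remark 2, with R_n = x_n^T x_n.
    R = np.outer(x, x)
    w_tilde = (np.eye(M) - alpha * R) @ w_tilde - alpha * x * v

# Both routes should give the same weight error up to round-off.
max_gap = np.max(np.abs((w_o - w) - w_tilde))
print(max_gap)
```

Under these assumptions the directly computed error \(w_{o} -\varvec{w}_{n}\) and the recursively propagated \(\tilde{\varvec{w}}_{n}\) agree to floating-point precision, which is one way to read the remark: when the buffered estimates are close to the current one, the update behaves like an LMS step.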

1.2 B

Lemma 1

[2]: Assume \(x_{i} ,i=1,\ldots ,m\) are possibly dependent, identically distributed random variables with zero mean and finite \(p\)th absolute moment, assumed without loss of generality to equal 1. Then

Considering (60), one can write

Using the linear (first-order) Maclaurin approximations of \(\exp \left( -\frac{1}{2} \left\| \tilde{\varvec{w}}_{i} \right\| ^{2} \right) \) and \(\exp \left( \left\| \tilde{\varvec{w}}_{j} \right\| \left\| \tilde{\varvec{w}}_{n} \right\| \right) \), one can approximate \(\mu _{i,\tilde{\varvec{w}}_{i} } \) as
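For reference, the linear truncation meant here is the first-order Maclaurin expansion \(e^{z} \approx 1+z\), valid for small \(|z|\). Applied to the two exponentials named above, it gives:

\[
\exp \left( -\tfrac{1}{2} \left\| \tilde{\varvec{w}}_{i} \right\| ^{2} \right) \approx 1-\tfrac{1}{2} \left\| \tilde{\varvec{w}}_{i} \right\| ^{2} ,\qquad \exp \left( \left\| \tilde{\varvec{w}}_{j} \right\| \left\| \tilde{\varvec{w}}_{n} \right\| \right) \approx 1+\left\| \tilde{\varvec{w}}_{j} \right\| \left\| \tilde{\varvec{w}}_{n} \right\| .
\]

Both truncations are accurate only when the weight-error norms are small, that is, close to convergence.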

Using Lemma 1,

therefore (63) changes to

Taking the expectation of both sides of (61), and using (66) together with \({\mathbb E}\left( \varvec{x}_{n}^\mathrm{T} \varvec{v}(n)\right) =0\), which holds because the samples \(\varvec{x}_{n}^\mathrm{T} \) and the noise \(\varvec{v}(n)\) are independent (with the noise assumed zero-mean), one has

If it is assumed that \({\mathbb E}\left( \tilde{\varvec{w}}_{i} \right) =\beta _{i} {{\mathbb E}}\left( \tilde{\varvec{w}}_{n} \right) \), then (67) can be rewritten as

Without loss of generality, it is assumed that


Cite this article

Ashkezari-Toussi, S., Sadoghi-Yazdi, H. Incorporating Nonparametric Knowledge to the Least Mean Square Adaptive Filter. Circuits Syst Signal Process 38, 2114–2137 (2019). https://doi.org/10.1007/s00034-018-0954-x
