Abstract

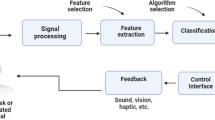

This paper focuses on the software design of a multimodal driving simulator that detects the driver’s focus of attention and detects and predicts the driver’s fatigue state. Both are captured and interpreted from video data (e.g., facial expression, head movement, eye tracking). The input multimodal interface relies on passive modalities only (an attentive user interface), whereas the output multimodal user interface includes several active output modalities for presenting alert messages: graphics and text on a mini-screen and on the windshield, sounds, speech, and vibration (vibration wheel). Active input modalities are added in the meta-User Interface to let the user dynamically select the output modalities. The driving simulator serves as a case study of a software architecture based on multimodal signal processing and multimodal interaction components, considering two software platforms, OpenInterface and ICARE.

Cite this article

Benoit, A., Bonnaud, L., Caplier, A. et al. Multimodal signal processing and interaction for a driving simulator: Component-based architecture. J Multimodal User Interfaces 1, 49–58 (2007). https://doi.org/10.1007/BF02884432
